Discover Machine Learning Street Talk (MLST)

Author: Machine Learning Street Talk (MLST)

© Machine Learning Street Talk (MLST)

Description

Welcome! We engage in fascinating discussions with pre-eminent figures in the AI field. Our flagship show covers current affairs in AI, cognitive science, neuroscience and philosophy of mind with in-depth analysis. Our approach is unrivalled in terms of scope and rigour – we believe in intellectual diversity in AI, and we touch on all of the main ideas in the field with the hype surgically removed. MLST is run by Tim Scarfe, Ph.D (https://www.linkedin.com/in/ecsquizor/) and features regular appearances from MIT Doctor of Philosophy Keith Duggar (https://www.linkedin.com/in/dr-keith-duggar/).

246 Episodes

What makes something truly *intelligent?* Is a rock an agent? Could a perfect simulation of your brain actually *be* you? In this fascinating conversation, Dr. Jeff Beck takes us on a journey through the philosophical and technical foundations of agency, intelligence, and the future of AI.

Jeff doesn't hold back on the big questions. He argues that from a purely mathematical perspective, there's no structural difference between an agent and a rock – both execute policies that map inputs to outputs. The real distinction lies in *sophistication* – how complex are the internal computations? Does the system engage in planning and counterfactual reasoning, or is it just a lookup table that happens to give the right answers?

*Key topics explored in this conversation:*

*The Black Box Problem of Agency* – How can we tell if something is truly planning versus just executing a pre-computed response? Jeff explains why this question is nearly impossible to answer from the outside, and why the best we can do is ask which model gives us the simplest explanation.

*Energy-Based Models Explained* – A masterclass on how EBMs differ from standard neural networks. The key insight: traditional networks only optimize weights, while energy-based models optimize *both* weights and internal states – a subtle but profound distinction that connects to Bayesian inference.

*Why Your Brain Might Have Evolved from Your Nose* – One of the most surprising moments in the conversation. Jeff proposes that the complex, non-smooth nature of olfactory space may have driven the evolution of our associative cortex and planning abilities.

*The JEPA Revolution* – A deep dive into Yann LeCun's Joint Embedding Predictive Architecture and why learning in latent space (rather than predicting every pixel) might be the key to more robust AI representations.

*AI Safety Without Skynet Fears* – Jeff takes a refreshingly grounded stance on AI risk. He's less worried about rogue superintelligences and more concerned about humans becoming "reward function selectors" – couch potatoes who just approve or reject AI outputs. His proposed solution? Use inverse reinforcement learning to derive AI goals from observed human behavior, then make *small* perturbations rather than naive commands like "end world hunger."

Whether you're interested in the philosophy of mind, the technical details of modern machine learning, or just want to understand what makes intelligence *tick,* this conversation delivers insights you won't find anywhere else.

---
TIMESTAMPS:
00:00:00 Geometric Deep Learning & Physical Symmetries
00:00:56 Defining Agency: From Rocks to Planning
00:05:25 The Black Box Problem & Counterfactuals
00:08:45 Simulated Agency vs. Physical Reality
00:12:55 Energy-Based Models & Test-Time Training
00:17:30 Bayesian Inference & Free Energy
00:20:07 JEPA, Latent Space, & Non-Contrastive Learning
00:27:07 Evolution of Intelligence & Modular Brains
00:34:00 Scientific Discovery & Automated Experimentation
00:38:04 AI Safety, Enfeeblement & The Future of Work

---
REFERENCES:
Concept:
[00:00:58] Free Energy Principle (FEP)
https://en.wikipedia.org/wiki/Free_energy_principle
[00:06:00] Monte Carlo Tree Search
https://en.wikipedia.org/wiki/Monte_Carlo_tree_search
Book:
[00:09:00] The Intentional Stance
https://mitpress.mit.edu/9780262540537/the-intentional-stance/
Paper:
[00:13:00] A Tutorial on Energy-Based Learning (LeCun 2006)
http://yann.lecun.com/exdb/publis/pdf/lecun-06.pdf
[00:15:00] Auto-Encoding Variational Bayes (VAE)
https://arxiv.org/abs/1312.6114
[00:20:15] JEPA (Joint Embedding Predictive Architecture)
https://openreview.net/forum?id=BZ5a1r-kVsf
[00:22:30] The Wake-Sleep Algorithm
https://www.cs.toronto.edu/~hinton/absps/ws.pdf
(truncated, see ReScript)

---
RESCRIPT:
https://app.rescript.info/public/share/DJlSbJ_Qx080q315tWaqMWn3PixCQsOcM4Kf1IW9_Eo
PDF:
https://app.rescript.info/api/public/sessions/0efec296b9b6e905/pdf
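
The "weights versus internal states" distinction in the Energy-Based Models topic above can be sketched as two nested optimization loops. This is a toy illustration only, not anything taken from the episode: the scalar energy function, the names `energy`, `infer_state`, and `update_weight`, and all step sizes are assumptions. The toy also omits the contrastive or regularizing term a real EBM needs to avoid degenerate solutions, so it shows the structure of the two loops rather than a usable training recipe.

```python
def energy(x, z, w):
    """Hypothetical toy energy: reconstruction error plus a quadratic penalty on z."""
    return 0.5 * (x - w * z) ** 2 + 0.5 * z ** 2


def infer_state(x, w, steps=200, lr=0.1):
    """Inner loop: gradient descent on the internal state z, weights held fixed."""
    z = 0.0
    for _ in range(steps):
        grad_z = -(x - w * z) * w + z  # dE/dz
        z -= lr * grad_z
    return z


def update_weight(xs, w, lr=0.01):
    """Outer loop: one gradient step on the weight w, using the inferred z for each x."""
    grad_w = 0.0
    for x in xs:
        z = infer_state(x, w)
        grad_w += -(x - w * z) * z  # dE/dw evaluated at the inferred state
    return w - lr * grad_w / len(xs)


if __name__ == "__main__":
    w = 1.5
    z = infer_state(2.0, w)
    print("inferred state:", z)
    print("energy before/after inference:", energy(2.0, 0.0, w), energy(2.0, z, w))
    print("weight after one update:", update_weight([1.0, 2.0, -1.0], w))
```

A standard feed-forward network would only have the outer loop; the inner loop over `z` is what the description calls optimizing internal states.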

Professor Mazviita Chirimuuta joins us for a fascinating deep dive into the philosophy of neuroscience and what it really means to understand the mind.

*What can neuroscience actually tell us about how the mind works?* In this thought-provoking conversation, we explore the hidden assumptions behind computational theories of the brain, the limits of scientific abstraction, and why the question of machine consciousness might be more complicated than AI researchers assume.

Mazviita, author of *The Brain Abstracted,* brings a unique perspective shaped by her background in both neuroscience research and philosophy. She challenges us to think critically about the metaphors we use to understand cognition – from the reflex theory of the late 19th century to today's dominant view of the brain as a computer.

*Key topics explored:*

*The problem of oversimplification* – Why scientific models necessarily leave things out, and how this can sometimes lead entire fields astray. The cautionary tale of reflex theory shows how elegant explanations can blind us to biological complexity.

*Is the brain really a computer?* – Mazviita unpacks the philosophical assumptions behind computational neuroscience and asks: if we can model anything computationally, what makes brains special? The answer might challenge everything you thought you knew about AI.

*Haptic realism* – A fresh way of thinking about scientific knowledge that emphasizes interaction over passive observation. Knowledge isn't about reading the "source code of the universe" – it's something we actively construct through engagement with the world.

*Why embodiment matters for understanding* – Can a disembodied language model truly understand? Mazviita makes a compelling case that human cognition is deeply entangled with our sensory-motor engagement and biological existence in ways that can't simply be abstracted away.

*Technology and human finitude* – Drawing on Heidegger, we discuss how the dream of transcending our physical limitations through technology might reflect a fundamental misunderstanding of what it means to be a knower.

This conversation is essential viewing for anyone interested in AI, consciousness, philosophy of mind, or the future of cognitive science. Whether you're skeptical of strong AI claims or a true believer in machine consciousness, Mazviita's careful philosophical analysis will give you new tools for thinking through these profound questions.

---
TIMESTAMPS:
00:00:00 The Problem of Generalizing Neuroscience
00:02:51 Abstraction vs. Idealization: The "Kaleidoscope"
00:05:39 Platonism in AI: Discovering or Inventing Patterns?
00:09:42 When Simplification Fails: The Reflex Theory
00:12:23 Behaviorism and the "Black Box" Trap
00:14:20 Haptic Realism: Knowledge Through Interaction
00:20:23 Is Nature Protean? The Myth of Converging Truth
00:23:23 The Computational Theory of Mind: A Useful Fiction?
00:27:25 Biological Constraints: Why Brains Aren't Just Neural Nets
00:31:01 Agency, Distal Causes, and Dennett's Stances
00:37:13 Searle's Challenge: Causal Powers and Understanding
00:41:58 Heidegger's Warning & The Experiment on Children

---
REFERENCES:
Book:
[00:01:28] The Brain Abstracted
https://mitpress.mit.edu/9780262548045/the-brain-abstracted/
[00:11:05] The Integrative Action of the Nervous System
https://www.amazon.sg/integrative-action-nervous-system/dp/9354179029
[00:18:15] The Quest for Certainty (Dewey)
https://www.amazon.com/Quest-Certainty-Relation-Knowledge-Lectures/dp/0399501916
[00:19:45] Realism for Realistic People (Chang)
https://www.cambridge.org/core/books/realism-for-realistic-people/ACC93A7F03B15AA4D6F3A466E3FC5AB7
(truncated, see ReScript)

---
RESCRIPT:
https://app.rescript.info/public/share/A6cZ1TY35p8ORMmYCWNBI0no9ChU3-Kx7dPXGJURvZ0
PDF Transcript:
https://app.rescript.info/api/public/sessions/0fb7767e066cf712/pdf

What if everything we think we know about the brain is just a really good metaphor that we forgot was a metaphor?

This episode takes you on a journey through the history of scientific simplification, from a young Karl Friston watching woodlice in his garden to the bold claims that your mind is literally software running on biological hardware.

We bring together some of the most brilliant minds we've interviewed – Professor Mazviita Chirimuuta, Francois Chollet, Joscha Bach, Professor Luciano Floridi, Professor Noam Chomsky, Nobel laureate John Jumper, and more – to wrestle with a deceptively simple question: *When scientists simplify reality to study it, what gets captured and what gets lost?*

*Key ideas explored:*

*The Spherical Cow Problem* – Science requires simplification. We're limited creatures trying to understand systems far more complex than our working memory can hold. But when does a useful model become a dangerous illusion?

*The Kaleidoscope Hypothesis* – Francois Chollet's beautiful idea that beneath all the apparent chaos of reality lie simple, repeating patterns, like bits of colored glass in a kaleidoscope creating infinite complexity. Is this profound truth or Platonic wishful thinking?

*Is Software Really Spirit?* – Joscha Bach makes the provocative claim that software is literally spirit, not metaphorically. We push back on this, asking whether the "sameness" we see across different computers running the same program exists in nature or only in our descriptions.

*The Cultural Illusion of AGI* – Why does artificial general intelligence seem so inevitable to people in Silicon Valley? Professor Chirimuuta suggests we might be caught in a "cultural historical illusion" – our mechanistic assumptions about minds making AI seem like destiny when it might just be a bet.

*Prediction vs. Understanding* – Nobel Prize winner John Jumper: AI can predict and control, but understanding requires a human in the loop.

Throughout history, we've described the brain as hydraulic pumps, telegraph networks, telephone switchboards, and now computers. Each metaphor felt obviously true at the time. This episode asks: what will we think was naive about our current assumptions in fifty years?

Featuring insights from *The Brain Abstracted* by Mazviita Chirimuuta – possibly the most influential book on how we think about thinking in 2025.

---
TIMESTAMPS:
00:00:00 The Wood Louse & The Spherical Cow
00:02:04 The Necessity of Abstraction
00:04:42 Simplicius vs. Ignorantio: The Boxing Match
00:06:39 The Kaleidoscope Hypothesis
00:08:40 Is the Mind Software?
00:13:15 Critique of Causal Patterns
00:14:40 Temperature is Not a Thing
00:18:24 The Ship of Theseus & Ontology
00:23:45 Metaphors Hardening into Reality
00:25:41 The Illusion of AGI Inevitability
00:27:45 Prediction vs. Understanding
00:32:00 Climbing the Mountain vs. The Helicopter
00:34:53 Haptic Realism & The Limits of Knowledge

---
REFERENCES:
Person:
[00:00:00] Karl Friston (UCL)
https://profiles.ucl.ac.uk/1236-karl-friston
[00:06:30] Francois Chollet
https://fchollet.com/
[00:14:41] Cesar Hidalgo, MLST interview.
https://www.youtube.com/watch?v=vzpFOJRteeI
[00:30:30] Terence Tao's Blog
https://terrytao.wordpress.com/
Book:
[00:02:25] The Brain Abstracted
https://mitpress.mit.edu/9780262548045/the-brain-abstracted/
[00:06:00] On Learned Ignorance
https://www.amazon.com/Nicholas-Cusa-learned-ignorance-translation/dp/0938060236
[00:24:15] Science and the Modern World
https://amazon.com/dp/0684836394
(truncated, see ReScript)

RESCRIPT:
https://app.rescript.info/public/share/CYy0ex2M2kvcVRdMnSUky5O7H7hB7v2u_nVhoUiuKD4
PDF Transcript:
https://app.rescript.info/api/public/sessions/6c44c41e1e0fa6dd/pdf

Thank you to Dr. Maxwell Ramstead (Ph.D. student of Friston) for early script work on this show; the woodlice story came from him!

Dr. Jeff Beck, mathematician turned computational neuroscientist, joins us for a fascinating deep dive into why the future of AI might look less like ChatGPT and more like your own brain.

**SPONSOR MESSAGES START**
Prolific - Quality data. From real people. For faster breakthroughs.
https://www.prolific.com/?utm_source=mlst
**END**

*What if the key to building truly intelligent machines isn't bigger models, but smarter ones?*

In this conversation, Jeff makes a compelling case that we've been building AI backwards. While the tech industry races to scale up transformers and language models, Jeff argues we're missing something fundamental: the brain doesn't work like a giant prediction engine. It works like a scientist, constantly testing hypotheses about a world made of *objects* that interact through *forces* – not pixels and tokens.

*The Bayesian Brain* – Jeff explains how your brain is essentially running the scientific method on autopilot. When you combine what you see with what you hear, you're doing optimal Bayesian inference without even knowing it. This isn't just philosophy – it's backed by decades of behavioral experiments showing humans are surprisingly efficient at handling uncertainty.

*AutoGrad Changed Everything* – Forget transformers for a moment. Jeff argues the real hero of the AI boom was automatic differentiation, which turned AI from a math problem into an engineering problem. But in the process, we lost sight of what actually makes intelligence work.

*The Cat in the Warehouse Problem* – Here's where it gets practical. Imagine a warehouse robot that's never seen a cat. Current AI would either crash or make something up. Jeff's approach? Build models that *know what they don't know*, can phone a friend to download new object models on the fly, and keep learning continuously. It's like giving robots the ability to say "wait, what IS that?" instead of confidently being wrong.

*Why Language is a Terrible Model for Thought* – In a provocative twist, Jeff argues that grounding AI in language (like we do with LLMs) is fundamentally misguided. Self-report is the least reliable data in psychology – people routinely explain their own behavior incorrectly. We should be grounding AI in physics, not words.

*The Future is Lots of Little Models* – Instead of one massive neural network, Jeff envisions AI systems built like video game engines: thousands of small, modular object models that can be combined, swapped, and updated independently. It's more efficient, more flexible, and much closer to how we actually think.

Rescript: https://app.rescript.info/public/share/D-b494t8DIV-KRGYONJghvg-aelMmxSDjKthjGdYqsE

---
TIMESTAMPS:
00:00:00 Introduction & The Bayesian Brain
00:01:25 Bayesian Inference & Information Processing
00:05:17 The Brain Metaphor: From Levers to Computers
00:10:13 Micro vs. Macro Causation & Instrumentalism
00:16:59 The Active Inference Community & AutoGrad
00:22:54 Object-Centered Models & The Grounding Problem
00:35:50 Scaling Bayesian Inference & Architecture Design
00:48:05 The Cat in the Warehouse: Solving Generalization
00:58:17 Alignment via Belief Exchange
01:05:24 Deception, Emergence & Cellular Automata

---
REFERENCES:
Paper:
[00:00:24] Zoubin Ghahramani (Google DeepMind)
https://pmc.ncbi.nlm.nih.gov/articles/PMC3538441/pdf/rsta201
[00:19:20] Mamba: Linear-Time Sequence Modeling
https://arxiv.org/abs/2312.00752
[00:27:36] xLSTM: Extended Long Short-Term Memory
https://arxiv.org/abs/2405.04517
[00:41:12] 3D Gaussian Splatting
https://repo-sam.inria.fr/fungraph/3d-gaussian-splatting/
[01:07:09] Lenia: Biology of Artificial Life
https://arxiv.org/abs/1812.05433
[01:08:20] Growing Neural Cellular Automata
https://distill.pub/2020/growing-ca/
[01:14:05] DreamCoder
https://arxiv.org/abs/2006.08381
[01:14:58] The Genomic Bottleneck
https://www.nature.com/articles/s41467-019-11786-6
Person:
[00:16:42] Karl Friston (UCL)
https://www.youtube.com/watch?v=PNYWi996Beg
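
The "combine what you see with what you hear" claim in the Bayesian Brain topic above is usually illustrated with Gaussian cue combination, where each cue is weighted by its precision (inverse variance). The sketch below assumes two independent Gaussian cues and a flat prior; the function name `combine_cues` and the example numbers are hypothetical and not taken from the episode.

```python
def combine_cues(mu_a, var_a, mu_b, var_b):
    """Fuse two independent Gaussian estimates of the same quantity.

    Returns the posterior mean and variance under a flat prior:
    a precision-weighted average of the two cue means.
    """
    precision_a = 1.0 / var_a
    precision_b = 1.0 / var_b
    var = 1.0 / (precision_a + precision_b)
    mu = var * (precision_a * mu_a + precision_b * mu_b)
    return mu, var


if __name__ == "__main__":
    # Hypothetical numbers: a sharp visual cue and a noisier auditory cue
    # about the location of the same object.
    mu, var = combine_cues(mu_a=10.0, var_a=1.0, mu_b=14.0, var_b=4.0)
    print(mu, var)  # the mean lands closer to the more reliable (visual) cue
```

The fused variance is always smaller than either input variance, which is the sense in which combining cues this way is statistically efficient.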

Tim sits down with Max Bennett to explore how our brains evolved over 600 million years – and what that means for understanding both human intelligence and AI.

Max isn't a neuroscientist by training. He's a tech entrepreneur who got curious, started reading, and ended up weaving together three fields that rarely talk to each other: comparative psychology (what different animals can actually do), evolutionary neuroscience (how brains changed over time), and AI (what actually works in practice).

*Your Brain Is a Guessing Machine*
You don't actually "see" the world. Your brain builds a simulation of what it *thinks* is out there and just uses your eyes to check if it's right. That's why optical illusions work – your brain is filling in a triangle that isn't there, or can't decide if it's looking at a duck or a rabbit.

*Rats Have Regrets*
*Chimps Are Machiavellian*
*Language Is the Human Superpower*
*Does ChatGPT Think?*
(truncated description, more on rescript)

Understanding how the brain evolved isn't just about the past. It gives us clues about:
- What's actually different between human intelligence and AI
- Why we're so easily fooled by status games and tribal thinking
- What features we might want to build into – or leave out of – future AI systems

Get Max's book:
https://www.amazon.com/Brief-History-Intelligence-Humans-Breakthroughs/dp/0063286343

Rescript: https://app.rescript.info/public/share/R234b7AXyDXZusqQ_43KMGsUSvJ2TpSz2I3emnI6j9A

---
TIMESTAMPS:
00:00:00 Introduction: Outsider's Advantage & Neocortex Theories
00:11:34 Perception as Inference: The Filling-In Machine
00:19:11 Understanding, Recognition & Generative Models
00:36:39 How Mice Plan: Vicarious Trial & Error
00:46:15 Evolution of Self: The Layer 4 Mystery
00:58:31 Ancient Minds & The Social Brain: Machiavellian Apes
01:19:36 AI Alignment, Instrumental Convergence & Status Games
01:33:07 Metacognition & The IQ Paradox
01:48:40 Does GPT Have Theory of Mind?
02:00:40 Memes, Language Singularity & Brain Size Myths
02:16:44 Communication, Language & The Cyborg Future
02:44:25 Shared Fictions, World Models & The Reality Gap

---
REFERENCES:
Person:
[00:00:05] Karl Friston (UCL)
https://www.youtube.com/watch?v=PNYWi996Beg
[00:00:06] Jeff Hawkins
https://www.youtube.com/watch?v=6VQILbDqaI4
[00:12:19] Hermann von Helmholtz
https://plato.stanford.edu/entries/hermann-helmholtz/
[00:38:34] David Redish (U. Minnesota)
https://redishlab.umn.edu/
[01:10:19] Robin Dunbar
https://www.psy.ox.ac.uk/people/robin-dunbar
[01:15:04] Emil Menzel
https://www.sciencedirect.com/bookseries/behavior-of-nonhuman-primates/vol/5/suppl/C
[01:19:49] Nick Bostrom
https://nickbostrom.com/
[02:28:25] Noam Chomsky
https://linguistics.mit.edu/user/chomsky/
[03:01:22] Judea Pearl
https://samueli.ucla.edu/people/judea-pearl/
Concept/Framework:
[00:05:04] Active Inference
https://www.youtube.com/watch?v=KkR24ieh5Ow
Paper:
[00:35:59] Predictions not commands [Rick A Adams]
https://pubmed.ncbi.nlm.nih.gov/23129312/
Book:
[01:25:42] The Elephant in the Brain
https://www.amazon.com/Elephant-Brain-Hidden-Motives-Everyday/dp/0190495995
[01:28:27] The Status Game
https://www.goodreads.com/book/show/58642436-the-status-game
[02:00:40] The Selfish Gene
https://amazon.com/dp/0198788606
[02:14:25] The Language Game
https://www.amazon.com/Language-Game-Improvisation-Created-Changed/dp/1541674987
[02:54:40] The Evolution of Language
https://www.amazon.com/Evolution-Language-Approaches/dp/052167736X
[03:09:37] The Three-Body Problem
https://amazon.com/dp/0765377063

César Hidalgo has spent years trying to answer a deceptively simple question: What is knowledge, and why is it so hard to move around?

We all have this intuition that knowledge is just... information. Write it down in a book, upload it to GitHub, train an AI on it – done. But César argues that's completely wrong. Knowledge isn't a thing you can copy and paste. It's more like a living organism that needs the right environment, the right people, and constant exercise to survive.

Guest: César Hidalgo, Director of the Center for Collective Learning

1. Knowledge Follows Laws (Like Physics)
2. You Can't Download Expertise
3. Why Big Companies Fail to Adapt
4. The "Infinite Alphabet" of Economies

If you think AI can just "copy" human knowledge, or that development is just about throwing money at poor countries, or that writing things down preserves them forever – this conversation will change your mind. Knowledge is fragile, specific, and collective. It decays fast if you don't use it.

The Infinite Alphabet [César A. Hidalgo]
https://www.penguin.co.uk/books/458054/the-infinite-alphabet-by-hidalgo-cesar-a/9780241655672
https://x.com/cesifoti

Rescript link: https://app.rescript.info/public/share/eaBHbEo9xamwbwpxzcVVm4NQjMh7lsOQKeWwNxmw0JQ

---
TIMESTAMPS:
00:00:00 The Three Laws of Knowledge
00:02:28 Rival vs. Non-Rival: The Economics of Ideas
00:05:43 Why You Can't Just 'Download' Knowledge
00:08:11 The Detective Novel Analogy
00:11:54 Collective Learning & Organizational Networks
00:16:27 Architectural Innovation: Amazon vs. Barnes & Noble
00:19:15 The First Law: Learning Curves
00:23:05 The Samuel Slater Story: Treason & Memory
00:28:31 Physics of Knowledge: Joule's Cannon
00:32:33 Extensive vs. Intensive Properties
00:35:45 Knowledge Decay: Ise Temple & Polaroid
00:41:20 Absorptive Capacity: Sony & Donetsk
00:47:08 Disruptive Innovation & S-Curves
00:51:23 Team Size & The Cost of Innovation
00:57:13 Geography of Knowledge: Vespa's Origin
01:04:34 Migration, Diversity & 'Planet China'
01:12:02 Institutions vs. Knowledge: The China Story
01:21:27 Economic Complexity & The Infinite Alphabet
01:32:27 Do LLMs Have Knowledge?

---
REFERENCES:
Book:
[00:47:45] The Innovator's Dilemma (Christensen)
https://www.amazon.com/Innovators-Dilemma-Revolutionary-Change-Business/dp/0062060244
[00:55:15] Why Greatness Cannot Be Planned
https://amazon.com/dp/3319155237
[01:35:00] Why Information Grows
https://amazon.com/dp/0465048994
Paper:
[00:03:15] Endogenous Technological Change (Romer, 1990)
https://web.stanford.edu/~klenow/Romer_1990.pdf
[00:03:30] A Model of Growth Through Creative Destruction (Aghion & Howitt, 1992)
https://dash.harvard.edu/server/api/core/bitstreams/7312037d-2b2d-6bd4-e053-0100007fdf3b/content
[00:14:55] Organizational Learning: From Experience to Knowledge (Argote & Miron-Spektor, 2011)
https://www.researchgate.net/publication/228754233_Organizational_Learning_From_Experience_to_Knowledge
[00:17:05] Architectural Innovation (Henderson & Clark, 1990)
https://www.researchgate.net/publication/200465578_Architectural_Innovation_The_Reconfiguration_of_Existing_Product_Technologies_and_the_Failure_of_Established_Firms
[00:19:45] The Learning Curve Equation (Thurstone, 1916)
https://dn790007.ca.archive.org/0/items/learningcurveequ00thurrich/learningcurveequ00thurrich.pdf
[00:21:30] Factors Affecting the Cost of Airplanes (Wright, 1936)
https://pdodds.w3.uvm.edu/research/papers/others/1936/wright1936a.pdf
[00:52:45] Are Ideas Getting Harder to Find? (Bloom et al.)
https://web.stanford.edu/~chadj/IdeaPF.pdf
[01:33:00] LLMs/ Emergence
https://arxiv.org/abs/2506.11135
Person:
[00:25:30] Samuel Slater
https://en.wikipedia.org/wiki/Samuel_Slater
[00:42:05] Masaru Ibuka (Sony)
https://www.sony.com/en/SonyInfo/CorporateInfo/History/SonyHistory/1-02.html

This is a lively, no-holds-barred debate about whether AI can truly be intelligent, conscious, or understand anything at all – and what happens when (or if) machines become smarter than us.

Dr. Mike Israetel is a sports scientist, entrepreneur, and co-founder of RP Strength (a fitness company). He describes himself as a "dilettante" in AI but brings a fascinating outsider's perspective.

Jared Feather (IFBB Pro bodybuilder and exercise physiologist)

The Big Questions:
1. When is superintelligence coming?
2. Does AI actually understand anything?
3. The Simulation Debate (The Spiciest Part)
4. Will AI kill us all? (The Doomer Debate)
5. What happens to human jobs and purpose?
6. Do we need suffering?

Mike's channel: https://www.youtube.com/channel/UCfQgsKhHjSyRLOp9mnffqVg

RESCRIPT INTERACTIVE PLAYER: https://app.rescript.info/public/share/GVMUXHCqctPkXH8WcYtufFG7FQcdJew_RL_MLgMKU1U

---
TIMESTAMPS:
00:00:00 Introduction & Workout Demo
00:04:15 ASI Timelines & Definitions
00:10:24 The Embodiment Debate
00:18:28 Neutrinos & Abstract Knowledge
00:25:56 Can AI Learn From YouTube?
00:31:25 Diversity of Intelligence
00:36:00 AI Slop & Understanding
00:45:18 The Simulation Argument: Fire & Water
00:58:36 Consciousness & Zombies
01:04:30 Do Reasoning Models Actually Reason?
01:12:00 The Live Learning Problem
01:19:15 Superintelligence & Benevolence
01:28:59 What is True Agency?
01:37:20 Game Theory & The "Kill All Humans" Fallacy
01:48:05 Regulation & The China Factor
01:55:52 Mind Uploading & The Future of Love
02:04:41 Economics of ASI: Will We Be Useless?
02:13:35 The Matrix & The Value of Suffering
02:17:30 Transhumanism & Inequality
02:21:28 Debrief: AI Medical Advice & Final Thoughts

---
REFERENCES:
Paper:
[00:10:45] Alchemy and Artificial Intelligence (Dreyfus)
https://www.rand.org/content/dam/rand/pubs/papers/2006/P3244.pdf
[00:10:55] The Chinese Room Argument (John Searle)
https://home.csulb.edu/~cwallis/382/readings/482/searle.minds.brains.programs.bbs.1980.pdf
[00:11:05] The Symbol Grounding Problem (Stephen Harnad)
https://arxiv.org/html/cs/9906002
[00:23:00] Attention Is All You Need
https://arxiv.org/abs/1706.03762
[00:45:00] GPT-4 Technical Report
https://arxiv.org/abs/2303.08774
[01:45:00] Anthropic Agentic Misalignment Paper
https://www.anthropic.com/research/agentic-misalignment
[02:17:45] Retatrutide
https://pubmed.ncbi.nlm.nih.gov/37366315/
Organization:
[00:15:50] CERN
https://home.cern/
[01:05:00] METR Long Horizon Evaluations
https://evaluations.metr.org/
MLST Episode:
[00:23:10] MLST: Llion Jones - Inventors' Remorse
https://www.youtube.com/watch?v=DtePicx_kFY
[00:50:30] MLST: Blaise Agüera y Arcas Interview
https://www.youtube.com/watch?v=rMSEqJ_4EBk
[01:10:00] MLST: David Krakauer
https://www.youtube.com/watch?v=dY46YsGWMIc
Event:
[00:23:40] ARC Prize/Challenge
https://arcprize.org/
Book:
[00:24:45] The Brain Abstracted
https://www.amazon.com/Brain-Abstracted-Simplification-Philosophy-Neuroscience/dp/0262548046
[00:47:55] Pamela McCorduck
https://www.amazon.com/Machines-Who-Think-Artificial-Intelligence/dp/1568812051
[01:23:15] The Singularity Is Nearer (Ray Kurzweil)
https://www.amazon.com/Singularity-Nearer-Ray-Kurzweil-ebook/dp/B08Y6FYJVY
[01:27:35] A Fire Upon The Deep (Vernor Vinge)
https://www.amazon.com/Fire-Upon-Deep-S-F-MASTERWORKS-ebook/dp/B00AVUMIZE/
[02:04:50] Deep Utopia (Nick Bostrom)
https://www.amazon.com/Deep-Utopia-Meaning-Solved-World/dp/1646871642
[02:05:00] Technofeudalism (Yanis Varoufakis)
https://www.amazon.com/Technofeudalism-Killed-Capitalism-Yanis-Varoufakis/dp/1685891241
Visual Context Needed:
[00:29:40] AT-AT Walker (Star Wars)
https://starwars.fandom.com/wiki/All_Terrain_Armored_Transport
Person:
[00:33:15] Andrej Karpathy
https://karpathy.ai/
Video:
[01:40:00] Mike Israetel vs Liron Shapira AI Doom Debate
https://www.youtube.com/watch?v=RaDWSPMdM4o
Company:
[02:26:30] Examine.com
https://examine.com/

We often think of Large Language Models (LLMs) as all-knowing, but as the team reveals, they still struggle with the logic of a second-grader. Why can’t ChatGPT reliably add large numbers? Why does it "hallucinate" the laws of physics? The answer lies in the architecture. This episode explores how *Category Theory* – an ultra-abstract branch of mathematics – could provide the "Periodic Table" for neural networks, turning the "alchemy" of modern AI into a rigorous science.

In this deep-dive exploration, *Andrew Dudzik*, *Petar Veličković*, *Taco Cohen*, *Bruno Gavranović*, and *Paul Lessard* join host *Tim Scarfe* to discuss the fundamental limitations of today’s AI and the radical mathematical framework that might fix them.

TRANSCRIPT:
https://app.rescript.info/public/share/LMreunA-BUpgP-2AkuEvxA7BAFuA-VJNAp2Ut4MkMWk

---
Key Insights in This Episode:

* *The "Addition" Problem:* *Andrew Dudzik* explains why LLMs don't actually "know" math – they just recognize patterns. When you change a single digit in a long string of numbers, the pattern breaks because the model lacks the internal "machinery" to perform a simple carry operation.
* *Beyond Alchemy:* Deep learning is currently in its "alchemy" phase – we have powerful results, but we lack a unifying theory. Category Theory is proposed as the framework to move AI from trial-and-error to principled engineering. [00:13:49]
* *Algebra with Colors:* To make Category Theory accessible, the guests use brilliant analogies – like thinking of matrices as *magnets with colors* that only snap together when the types match. This "partial compositionality" is the secret to building more complex internal reasoning. [00:09:17]
* *Synthetic vs. Analytic Math:* *Paul Lessard* breaks down the philosophical shift needed in AI research: moving from "Analytic" math (what things are made of) to "Synthetic" math [00:23:41]

---
Why This Matters for AGI

If we want AI to solve the world's hardest scientific problems, it can't just be a "stochastic parrot." It needs to internalize the rules of logic and computation. By imbuing neural networks with categorical priors, researchers are attempting to build a future where AI doesn't just predict the next word – it understands the underlying structure of the universe.

---
TIMESTAMPS:
00:00:00 The Failure of LLM Addition & Physics
00:01:26 Tool Use vs Intrinsic Model Quality
00:03:07 Efficiency Gains via Internalization
00:04:28 Geometric Deep Learning & Equivariance
00:07:05 Limitations of Group Theory
00:09:17 Category Theory: Algebra with Colors
00:11:25 The Systematic Guide of Lego-like Math
00:13:49 The Alchemy Analogy & Unifying Theory
00:15:33 Information Destruction & Reasoning
00:18:00 Pathfinding & Monoids in Computation
00:20:15 System 2 Reasoning & Error Awareness
00:23:31 Analytic vs Synthetic Mathematics
00:25:52 Morphisms & Weight Tying Basics
00:26:48 2-Categories & Weight Sharing Theory
00:28:55 Higher Categories & Emergence
00:31:41 Compositionality & Recursive Folds
00:34:05 Syntax vs Semantics in Network Design
00:36:14 Homomorphisms & Multi-Sorted Syntax
00:39:30 The Carrying Problem & Hopf Fibrations

Petar Veličković (GDM)
https://petar-v.com/
Paul Lessard
https://www.linkedin.com/in/paul-roy-lessard/
Bruno Gavranović
https://www.brunogavranovic.com/
Andrew Dudzik (GDM)
https://www.linkedin.com/in/andrew-dudzik-222789142/

---
REFERENCES:
Model:
[00:01:05] Veo
https://deepmind.google/models/veo/
[00:01:10] Genie
https://deepmind.google/blog/genie-3-a-new-frontier-for-world-models/
Paper:
[00:04:30] Geometric Deep Learning Blueprint
https://arxiv.org/abs/2104.13478
https://www.youtube.com/watch?v=bIZB1hIJ4u8
[00:16:45] AlphaGeometry
https://arxiv.org/abs/2401.08312
[00:16:55] AlphaCode
https://arxiv.org/abs/2203.07814
[00:17:05] FunSearch
https://www.nature.com/articles/s41586-023-06924-6
[00:37:00] Attention Is All You Need
https://arxiv.org/abs/1706.03762
[00:43:00] Categorical Deep Learning
https://arxiv.org/abs/2402.15332
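
The "simple carry operation" mentioned in the Addition Problem insight above can be written out as explicit digit-by-digit machinery. This is a plain schoolbook-addition sketch added for illustration, not code from the episode; the function name `add_digit_strings` is hypothetical, and the inputs are assumed to be non-negative decimal strings.

```python
def add_digit_strings(a: str, b: str) -> str:
    """Add two non-negative decimal numbers given as digit strings.

    Works right to left, keeping an explicit carry, so changing one digit
    anywhere in the input propagates correctly through the whole result.
    """
    digits = []
    carry = 0
    i, j = len(a) - 1, len(b) - 1
    while i >= 0 or j >= 0 or carry:
        da = int(a[i]) if i >= 0 else 0
        db = int(b[j]) if j >= 0 else 0
        total = da + db + carry
        digits.append(str(total % 10))  # digit written in this column
        carry = total // 10             # carry passed to the next column
        i -= 1
        j -= 1
    return "".join(reversed(digits)) or "0"


if __name__ == "__main__":
    # A single low-order 1 ripples a carry through every column.
    print(add_digit_strings("999999", "1"))
```

The loop carries one small piece of state (`carry`) from column to column, which is the kind of internal machinery the description says a pure pattern-matcher lacks.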

Is a car that wins a Formula 1 race the best choice for your morning commute? Probably not. In this sponsored deep dive with Prolific, we explore why the same logic applies to Artificial Intelligence. While models are currently shattering records on technical exams, they often fail the most important test of all: **the human experience.**

Why High Benchmark Scores Don’t Mean Better AI

Joining us are **Andrew Gordon** (Staff Researcher in Behavioral Science) and **Nora Petrova** (AI Researcher) from **Prolific**. They reveal the hidden flaws in how we currently rank AI and introduce a more rigorous, "humane" way to measure whether these models are actually helpful, safe, and relatable for real people.

---
Key Insights in This Episode:

* *The F1 Car Analogy:* Andrew explains why a model that excels at "Humanity's Last Exam" might be a nightmare for daily use. Technical benchmarks often ignore the nuances of human communication and adaptability.
* *The "Wild West" of AI Safety:* As users turn to AI for sensitive topics like mental health, Nora highlights the alarming lack of oversight and the "thin veneer" of safety training – citing recent controversial incidents like Grok-3’s "Mecha Hitler."
* *Fixing the "Leaderboard Illusion":* The team critiques current popular rankings like Chatbot Arena, discussing how anonymous, unstratified voting can lead to biased results and how companies can "game" the system.
* *The Xbox Secret to AI Ranking:* Discover how Prolific uses *TrueSkill* – the same algorithm Microsoft developed for Xbox Live matchmaking – to create a fairer, more statistically sound leaderboard for LLMs.
* *The Personality Gap:* Early data from the **HUMAINE Leaderboard** suggests that while AI is getting smarter, it is actually performing *worse* on metrics like personality, culture, and "sycophancy" (the tendency for models to become annoying "people-pleasers").

---
About the HUMAINE Leaderboard

Moving beyond simple "A vs. B" testing, the researchers discuss their new framework that samples participants based on *census data* (Age, Ethnicity, Political Alignment). By using a representative sample of the general public rather than just tech enthusiasts, they are building a standard that reflects the values of the real world.

*Are we building models for benchmarks, or are we building them for humans? It’s time to change the scoreboard.*

Rescript link:
https://app.rescript.info/public/share/IDqwjY9Q43S22qSgL5EkWGFymJwZ3SVxvrfpgHZLXQc

---
TIMESTAMPS:
00:00:00 Introduction & The Benchmarking Problem
00:01:58 The Fractured State of AI Evaluation
00:03:54 AI Safety & Interpretability
00:05:45 Bias in Chatbot Arena
00:06:45 Prolific's Three Pillars Approach
00:09:01 TrueSkill Ranking & Efficient Sampling
00:12:04 Census-Based Representative Sampling
00:13:00 Key Findings: Culture, Personality & Sycophancy

---
REFERENCES:
Paper:
[00:00:15] MMLU
https://arxiv.org/abs/2009.03300
[00:05:10] Constitutional AI
https://arxiv.org/abs/2212.08073
[00:06:45] The Leaderboard Illusion
https://arxiv.org/abs/2504.20879
[00:09:41] HUMAINE Framework Paper
https://huggingface.co/blog/ProlificAI/humaine-framework
Company:
[00:00:30] Prolific
https://www.prolific.com
[00:01:45] Chatbot Arena
https://lmarena.ai/
Person:
[00:00:35] Andrew Gordon
https://www.linkedin.com/in/andrew-gordon-03879919a/
[00:00:45] Nora Petrova
https://www.linkedin.com/in/nora-petrova/
Algorithm:
[00:09:01] Microsoft TrueSkill
https://www.microsoft.com/en-us/research/project/trueskill-ranking-system/
Leaderboard:
[00:09:21] Prolific HUMAINE Leaderboard
https://www.prolific.com/humaine
[00:09:31] HUMAINE HuggingFace Space
https://huggingface.co/spaces/ProlificAI/humaine-leaderboard
[00:10:21] Prolific AI Leaderboard Portal
https://www.prolific.com/leaderboard
Dataset:
[00:09:51] Prolific Social Reasoning RLHF Dataset
https://huggingface.co/datasets/ProlificAI/social-reasoning-rlhf
Organization:
[00:10:31] MLCommons
https://mlcommons.org/

What if everything we think we know about AI understanding is wrong? Is compression the key to intelligence? Or is there something more—a leap from memorization to true abstraction? In this fascinating conversation, we sit down with **Professor Yi Ma**—world-renowned expert in deep learning, IEEE/ACM Fellow, and author of the groundbreaking new book *Learning Deep Representations of Data Distributions*. Professor Ma challenges our assumptions about what large language models actually do, reveals why 3D reconstruction isn't the same as understanding, and presents a unified mathematical theory of intelligence built on just two principles: **parsimony** and **self-consistency**.**SPONSOR MESSAGES START**—Prolific - Quality data. From real people. For faster breakthroughs.https://www.prolific.com/?utm_source=mlst—cyber•Fund https://cyber.fund/?utm_source=mlst is a founder-led investment firm accelerating the cybernetic economyHiring a SF VC Principal: https://talent.cyber.fund/companies/cyber-fund-2/jobs/57674170-ai-investment-principal#content?utm_source=mlstSubmit investment deck: https://cyber.fund/contact?utm_source=mlst—**END**Key Insights:**LLMs Don't Understand—They Memorize**Language models process text (*already* compressed human knowledge) using the same mechanism we use to learn from raw data. 
**The Illusion of 3D Vision**Sora and NeRFs etc that can reconstruct 3D scenes still fail miserably at basic spatial reasoning**"All Roads Lead to Rome"**Why adding noise is *necessary* for discovering structure.**Why Gradient Descent Actually Works**Natural optimization landscapes are surprisingly smooth—a "blessing of dimensionality" **Transformers from First Principles**Transformer architectures can be mathematically derived from compression principles—INTERACTIVE AI TRANSCRIPT PLAYER w/REFS (ReScript):https://app.rescript.info/public/share/Z-dMPiUhXaeMEcdeU6Bz84GOVsvdcfxU_8Ptu6CTKMQAbout Professor Yi MaYi Ma is the inaugural director of the School of Computing and Data Science at Hong Kong University and a visiting professor at UC Berkeley. https://people.eecs.berkeley.edu/~yima/https://scholar.google.com/citations?user=XqLiBQMAAAAJ&hl=en https://x.com/YiMaTweets **Slides from this conversation:**https://www.dropbox.com/scl/fi/sbhbyievw7idup8j06mlr/slides.pdf?rlkey=7ptovemezo8bj8tkhfi393fh9&dl=0**Related Talks by Professor Ma:**- Pursuing the Nature of Intelligence (ICLR): https://www.youtube.com/watch?v=LT-F0xSNSjo- Earlier talk at Berkeley: https://www.youtube.com/watch?v=TihaCUjyRLMTIMESTAMPS:00:00:00 Introduction00:02:08 The First Principles Book & Research Vision00:05:21 Two Pillars: Parsimony & Consistency00:09:50 Evolution vs. Learning: The Compression Mechanism00:14:36 LLMs: Memorization Masquerading as Understanding00:19:55 The Leap to Abstraction: Empirical vs. 
Scientific00:27:30 Platonism, Deduction & The ARC Challenge00:35:57 Specialization & The Cybernetic Legacy00:41:23 Deriving Maximum Rate Reduction00:48:21 The Illusion of 3D Understanding: Sora & NeRF00:54:26 All Roads Lead to Rome: The Role of Noise00:59:56 All Roads Lead to Rome: The Role of Noise01:00:14 Benign Non-Convexity: Why Optimization Works01:06:35 Double Descent & The Myth of Overfitting01:14:26 Self-Consistency: Closed-Loop Learning01:21:03 Deriving Transformers from First Principles01:30:11 Verification & The Kevin Murphy Question01:34:11 CRATE vs. ViT: White-Box AI & ConclusionREFERENCES:Book:[00:03:04] Learning Deep Representations of Data Distributionshttps://ma-lab-berkeley.github.io/deep-representation-learning-book/[00:18:38] A Brief History of Intelligencehttps://www.amazon.co.uk/BRIEF-HISTORY-INTELLIGEN-HB-Evolution/dp/0008560099[00:38:14] Cyberneticshttps://mitpress.mit.edu/9780262730099/cybernetics/Book (Yi Ma):[00:03:14] 3-D Vision bookhttps://link.springer.com/book/10.1007/978-0-387-21779-6<TRUNC> refs on ReScript link/YT

Pedro Domingos, author of the bestselling book "The Master Algorithm," introduces his latest work: Tensor Logic - a new programming language he believes could become the fundamental language for artificial intelligence.Think of it like this: Physics found its language in calculus. Circuit design found its language in Boolean logic. Pedro argues that AI has been missing its language - until now.**SPONSOR MESSAGES START**—Build your ideas with AI Studio from Google - http://ai.studio/build—Prolific - Quality data. From real people. For faster breakthroughs.https://www.prolific.com/?utm_source=mlst—cyber•Fund https://cyber.fund/?utm_source=mlst is a founder-led investment firm accelerating the cybernetic economyHiring a SF VC Principal: https://talent.cyber.fund/companies/cyber-fund-2/jobs/57674170-ai-investment-principal#content?utm_source=mlstSubmit investment deck: https://cyber.fund/contact?utm_source=mlst—**END**Current AI is split between two worlds that don't play well together:Deep Learning (neural networks, transformers, ChatGPT) - great at learning from data, terrible at logical reasoningSymbolic AI (logic programming, expert systems) - great at logical reasoning, terrible at learning from messy real-world dataTensor Logic unifies both. 
It's a single language where you can:Write logical rules that the system can actually learn and modifyDo transparent, verifiable reasoning (no hallucinations)Mix "fuzzy" analogical thinking with rock-solid deductionINTERACTIVE TRANSCRIPT:https://app.rescript.info/public/share/NP4vZQ-GTETeN_roB2vg64vbEcN7isjJtz4C86WSOhw TOC:00:00:00 - Introduction00:04:41 - What is Tensor Logic?00:09:59 - Tensor Logic vs PyTorch & Einsum00:17:50 - The Master Algorithm Connection00:20:41 - Predicate Invention & Learning New Concepts00:31:22 - Symmetries in AI & Physics00:35:30 - Computational Reducibility & The Universe00:43:34 - Technical Details: RNN Implementation00:45:35 - Turing Completeness Debate00:56:45 - Transformers vs Turing Machines01:02:32 - Reasoning in Embedding Space01:11:46 - Solving Hallucination with Deductive Modes01:16:17 - Adoption Strategy & Migration Path01:21:50 - AI Education & Abstraction01:24:50 - The Trillion-Dollar WasteREFSTensor Logic: The Language of AI [Pedro Domingos]https://arxiv.org/abs/2510.12269The Master Algorithm [Pedro Domingos]https://www.amazon.co.uk/Master-Algorithm-Ultimate-Learning-Machine/dp/0241004543 Einsum is All you Need (TIM ROCKTÄSCHEL)https://rockt.ai/2018/04/30/einsum https://www.youtube.com/watch?v=6DrCq8Ry2cw Autoregressive Large Language Models are Computationally Universal (Dale Schuurmans et al - GDM)https://arxiv.org/abs/2410.03170 Memory Augmented Large Language Models are Computationally Universal [Dale Schuurmans]https://arxiv.org/pdf/2301.04589 On the computational power of NNs [95/Siegelmann]https://binds.cs.umass.edu/papers/1995_Siegelmann_JComSysSci.pdf Sebastian Bubeckhttps://www.reddit.com/r/OpenAI/comments/1oacp38/openai_researcher_sebastian_bubeck_falsely_claims/ I am a strange loop - Hofstadterhttps://www.amazon.co.uk/Am-Strange-Loop-Douglas-Hofstadter/dp/0465030793 Stephen Wolframhttps://www.youtube.com/watch?v=dkpDjd2nHgo The Complex World: An Introduction to the Foundations of Complexity Science [David C. 
Krakauer] https://www.amazon.co.uk/Complex-World-Introduction-Foundations-Complexity/dp/1947864629
Geometric Deep Learning https://www.youtube.com/watch?v=bIZB1hIJ4u8
Andrew Wilson (NYU) https://www.youtube.com/watch?v=M-jTeBCEGHc
Yi Ma https://www.patreon.com/posts/yi-ma-scientific-141953348
The Road to Reality [Roger Penrose] https://www.amazon.co.uk/Road-Reality-Complete-Guide-Universe/dp/0099440687
Artificial Intelligence: A Modern Approach [Russell and Norvig] https://www.amazon.co.uk/Artificial-Intelligence-Modern-Approach-Global/dp/1292153962

The Transformer architecture (which powers ChatGPT and nearly all modern AI) might be trapping the industry in a localized rut, preventing us from finding true intelligent reasoning, according to the person who co-invented it. Llion Jones and Luke Darlow, key figures at the research lab Sakana AI, join the show to make this provocative argument, and also introduce new research which might lead the way forwards.**SPONSOR MESSAGES START**—Build your ideas with AI Studio from Google - http://ai.studio/build—Tufa AI Labs is hiring ML Research Engineers https://tufalabs.ai/ —cyber•Fund https://cyber.fund/?utm_source=mlst is a founder-led investment firm accelerating the cybernetic economyHiring a SF VC Principal: https://talent.cyber.fund/companies/cyber-fund-2/jobs/57674170-ai-investment-principal#content?utm_source=mlstSubmit investment deck: https://cyber.fund/contact?utm_source=mlst—**END**The "Spiral" Problem – Llion uses a striking visual analogy to explain what current AI is missing. If you ask a standard neural network to understand a spiral shape, it solves it by drawing tiny straight lines that just happen to look like a spiral. It "fakes" the shape without understanding the concept of spiraling. Introducing the Continuous Thought Machine (CTM) Luke Darlow deep dives into their solution: a biology-inspired model that fundamentally changes how AI processes information.The Maze Analogy: Luke explains that standard AI tries to solve a maze by staring at the whole image and guessing the entire path instantly. Their new machine "walks" through the maze step-by-step.Thinking Time: This allows the AI to "ponder." 
If a problem is hard, the model can naturally spend more time thinking about it before answering, effectively allowing it to correct its own mistakes and backtrack—something current Language Models struggle to do genuinely.https://sakana.ai/https://x.com/YesThisIsLionhttps://x.com/LearningLukeDTRANSCRIPT:https://app.rescript.info/public/share/crjzQ-Jo2FQsJc97xsBdfzfOIeMONpg0TFBuCgV2Fu8TOC:00:00:00 - Stepping Back from Transformers00:00:43 - Introduction to Continuous Thought Machines (CTM)00:01:09 - The Changing Atmosphere of AI Research00:04:13 - Sakana’s Philosophy: Research Freedom00:07:45 - The Local Minimum of Large Language Models00:18:30 - Representation Problems: The Spiral Example00:29:12 - Technical Deep Dive: CTM Architecture00:36:00 - Adaptive Computation & Maze Solving00:47:15 - Model Calibration & Uncertainty01:00:43 - Sudoku Bench: Measuring True ReasoningREFS:Why Greatness Cannot be planned [Kenneth Stanley]https://www.amazon.co.uk/Why-Greatness-Cannot-Planned-Objective/dp/3319155237https://www.youtube.com/watch?v=lhYGXYeMq_E The Hardware Lottery [Sara Hooker]https://arxiv.org/abs/2009.06489https://www.youtube.com/watch?v=sQFxbQ7ade0 Continuous Thought Machines [Luke Darlow et al / Sakana]https://arxiv.org/abs/2505.05522https://sakana.ai/ctm/ LSTM: The Comeback Story? [Prof. 
Sepp Hochreiter] https://www.youtube.com/watch?v=8u2pW2zZLCs
Questioning Representational Optimism in Deep Learning: The Fractured Entangled Representation Hypothesis [Kumar/Stanley] https://arxiv.org/pdf/2505.11581
A Spline Theory of Deep Networks [Randall Balestriero] https://proceedings.mlr.press/v80/balestriero18b/balestriero18b.pdf https://www.youtube.com/watch?v=86ib0sfdFtw https://www.youtube.com/watch?v=l3O2J3LMxqI
On the Biology of a Large Language Model [Anthropic, Jack Lindsey et al] https://transformer-circuits.pub/2025/attribution-graphs/biology.html
The ARC Prize 2024 Winning Algorithm [Daniel Franzen and Jan Disselhoff] “The ARChitects” https://www.youtube.com/watch?v=mTX_sAq--zY
Neural Turing Machines [Graves] https://arxiv.org/pdf/1410.5401
Adaptive Computation Time for Recurrent Neural Networks [Graves] https://arxiv.org/abs/1603.08983
Sudoku Bench [Sakana] https://pub.sakana.ai/sudoku/

Ever wonder where AI models actually get their "intelligence"? We reveal the dirty secret of Silicon Valley: behind every impressive AI system are thousands of real humans providing crucial data, feedback, and expertise.Guest: Phelim Bradley, CEO and Co-founder of ProlificPhelim Bradley runs Prolific, a platform that connects AI companies with verified human experts who help train and evaluate their models. Think of it as a sophisticated marketplace matching the right human expertise to the right AI task - whether that's doctors evaluating medical chatbots or coders reviewing AI-generated software.Prolific: https://prolific.com/?utm_source=mlsthttps://uk.linkedin.com/in/phelim-bradley-84300826The discussion dives into:**The human data pipeline**: How AI companies rely on human intelligence to train, refine, and validate their models - something rarely discussed openly**Quality over quantity**: Why paying humans well and treating them as partners (not commodities) produces better AI training data**The matching challenge**: How Prolific solves the complex problem of finding the right expert for each specific task, similar to matching Uber drivers to riders but with deep expertise requirements**Future of work**: What it means when human expertise becomes an on-demand service, and why this might actually create more opportunities rather than fewer**Geopolitical implications**: Why the centralization of AI development in US tech companies should concern Europe and the UK

"What is life?" - asks Chris Kempes, a professor at the Santa Fe Institute.Chris explains that scientists are moving beyond a purely Earth-based, biological view and are searching for a universal theory of life that could apply to anything, anywhere in the universe. He proposes that things we don't normally consider "alive"—like human culture, language, or even artificial intelligence; could be seen as life forms existing on different "substrates".To understand this, Chris presents a fascinating three-level framework:- Materials: The physical stuff life is made of. He argues this could be incredibly diverse across the universe, and we shouldn't expect alien life to share our biochemistry.- Constraints: The universal laws of physics (like gravity or diffusion) that all life must obey, regardless of what it's made of. This is where different life forms start to look more similar.- Principles: At the highest level are abstract principles like evolution and learning. Chris suggests these computational or "optimization" rules are what truly define a living system.A key idea is "convergence" – using the example of the eye. It's such a complex organ that you'd think it evolved only once. However, eyes evolved many separate times across different species. This is because the physics of light provides a clear "target", and evolution found similar solutions to the problem of seeing, even with different starting materials.**SPONSOR MESSAGES**—Prolific - Quality data. From real people. For faster breakthroughs.https://www.prolific.com/?utm_source=mlst—Check out NotebookLM from Google here - https://notebooklm.google.com/ - it’s really good for doing research directly from authoritative source material, minimising hallucinations. 
—cyber•Fund https://cyber.fund/?utm_source=mlst is a founder-led investment firm accelerating the cybernetic economyHiring a SF VC Principal: https://talent.cyber.fund/companies/cyber-fund-2/jobs/57674170-ai-investment-principal#content?utm_source=mlstSubmit investment deck: https://cyber.fund/contact?utm_source=mlst— Prof. Chris Kempes:https://www.santafe.edu/people/profile/chris-kempesTRANSCRIPT:https://app.rescript.info/public/share/Y2cI1i0nX_-iuZitvlguHvaVLQTwPX1Y_E1EHxV0i9ITOC:00:00:00 - Introduction to Chris Kempes and the Santa Fe Institute00:02:28 - The Three Cultures of Science00:05:08 - What Makes a Good Scientific Theory?00:06:50 - The Universal Theory of Life00:09:40 - The Role of Material in Life00:12:50 - A Hierarchy for Understanding Life00:13:55 - How Life Diversifies and Converges00:17:53 - Adaptive Processes and Defining Life00:19:28 - Functionalism, Memes, and Phylogenies00:22:58 - Convergence at Multiple Levels00:25:45 - The Possibility of Simulating Life00:28:16 - Intelligence, Parasitism, and Spectrums of Life00:32:39 - Phase Changes in Evolution00:36:16 - The Separation of Matter and Logic00:37:21 - Assembly Theory and Quantifying ComplexityREFS:Developing a predictive science of the biosphere requires the integration of scientific cultures [Kempes et al]https://www.pnas.org/doi/10.1073/pnas.2209196121Seeing with an extra sense (“Dangerous prediction”) [Rob Phillips]https://www.sciencedirect.com/science/article/pii/S0960982224009035 The Multiple Paths to Multiple Life [Christopher P. Kempes & David C. 
Krakauer]https://link.springer.com/article/10.1007/s00239-021-10016-2 The Information Theory of Individuality [David Krakauer et al]https://arxiv.org/abs/1412.2447Minds, Brains and Programs [Searle]https://home.csulb.edu/~cwallis/382/readings/482/searle.minds.brains.programs.bbs.1980.pdf The error thresholdhttps://www.sciencedirect.com/science/article/abs/pii/S0168170204003843Assembly theory and its relationship with computational complexity [Kempes et al]https://arxiv.org/abs/2406.12176

Blaise Agüera y Arcas explores some mind-bending ideas about what intelligence and life really are—and why they might be more similar than we think (filmed at ALIFE conference, 2025 - https://2025.alife.org/).Life and intelligence are both fundamentally computational (he says). From the very beginning, living things have been running programs. Your DNA? It's literally a computer program, and the ribosomes in your cells are tiny universal computers building you according to those instructions.**SPONSOR MESSAGES**—Prolific - Quality data. From real people. For faster breakthroughs.https://www.prolific.com/?utm_source=mlst—cyber•Fund https://cyber.fund/?utm_source=mlst is a founder-led investment firm accelerating the cybernetic economyOct SF conference - https://dagihouse.com/?utm_source=mlst - Joscha Bach keynoting(!) + OAI, Anthropic, NVDA,++Hiring a SF VC Principal: https://talent.cyber.fund/companies/cyber-fund-2/jobs/57674170-ai-investment-principal#content?utm_source=mlstSubmit investment deck: https://cyber.fund/contact?utm_source=mlst— Blaise argues that there is more to evolution than random mutations (like most people think). The secret to increasing complexity is *merging* i.e. 
when different organisms or systems come together and combine their histories and capabilities.Blaise describes his "BFF" experiment where random computer code spontaneously evolved into self-replicating programs, showing how purpose and complexity can emerge from pure randomness through computational processes.https://en.wikipedia.org/wiki/Blaise_Ag%C3%BCera_y_Arcashttps://x.com/blaiseaguera?lang=enTRANSCRIPT:https://app.rescript.info/public/share/VX7Gktfr3_wIn4Bj7cl9StPBO1MN4R5lcJ11NE99hLgTOC:00:00:00 Introduction - New book "What is Intelligence?"00:01:45 Life as computation - Von Neumann's insights00:12:00 BFF experiment - How purpose emerges00:26:00 Symbiogenesis and evolutionary complexity00:40:00 Functionalism and consciousness00:49:45 AI as part of collective human intelligence00:57:00 Comparing AI and human cognitionREFS:What is intelligence [Blaise Agüera y Arcas]https://whatisintelligence.antikythera.org/ [Read free online, interactive rich media]https://mitpress.mit.edu/9780262049955/what-is-intelligence/ [MIT Press]Large Language Models and Emergence: A Complex Systems Perspectivehttps://arxiv.org/abs/2506.11135Our first Noam Chomsky MLST interviewhttps://www.youtube.com/watch?v=axuGfh4UR9Q Chance and Necessity [Jacques Monod]https://monoskop.org/images/9/99/Monod_Jacques_Chance_and_Necessity.pdfWonderful Life: The Burgess Shale and the History of Nature [Stephen Jay Gould]https://www.amazon.co.uk/Wonderful-Life-Burgess-Nature-History/dp/0099273454 The major evolutionary transitions [E Szathmáry, J M Smith]https://wiki.santafe.edu/images/0/0e/Szathmary.MaynardSmith_1995_Nature.pdfDon't Sleep, There Are Snakes: Life and Language in the Amazonian Jungle [Dan Everett]https://www.amazon.com/Dont-Sleep-There-Are-Snakes/dp/0307386120 The Nature of Technology: What It Is and How It Evolves [W. 
Brian Arthur] https://www.amazon.com/Nature-Technology-What-How-Evolves-ebook/dp/B002RI9W16/
The MANIAC [Benjamin Labatut] https://www.amazon.com/MANIAC-Benjam%C3%ADn-Labatut/dp/1782279814
When We Cease to Understand the World [Benjamin Labatut] https://www.amazon.com/When-We-Cease-Understand-World/dp/1681375664/
The Boys in the Boat [Daniel James Brown] https://www.amazon.com/Boys-Boat-Americans-Berlin-Olympics/dp/0143125478
[Petter Johansson] (Split brain) https://www.lucs.lu.se/fileadmin/user_upload/lucs/2011/01/Johansson-et-al.-2006-How-Something-Can-Be-Said-About-Telling-More-Than-We-Can-Know.pdf
If Anyone Builds It, Everyone Dies [Eliezer Yudkowsky, Nate Soares] https://www.amazon.com/Anyone-Builds-Everyone-Dies-Superhuman/dp/0316595640
The science of cycology https://link.springer.com/content/pdf/10.3758/bf03195929.pdf
<trunc, see YT desc for more>

We sat down with Sara Saab (VP of Product at Prolific) and Enzo Blindow (VP of Data and AI at Prolific) to explore the critical role of human evaluation in AI development and the challenges of aligning AI systems with human values. Prolific is a human annotation and orchestration platform for AI used by many of the major AI labs. This is a sponsored show in partnership with Prolific.

**SPONSOR MESSAGES**
—
cyber•Fund https://cyber.fund/?utm_source=mlst is a founder-led investment firm accelerating the cybernetic economy
Oct SF conference - https://dagihouse.com/?utm_source=mlst - Joscha Bach keynoting(!) + OAI, Anthropic, NVDA,++
Hiring a SF VC Principal: https://talent.cyber.fund/companies/cyber-fund-2/jobs/57674170-ai-investment-principal#content?utm_source=mlst
Submit investment deck: https://cyber.fund/contact?utm_source=mlst
—

While technologists want to remove humans from the loop for speed and efficiency, these non-deterministic AI systems actually require more human oversight than ever before. Prolific's approach is to put "well-treated, verified, diversely demographic humans behind an API" - making human feedback as accessible as any other infrastructure service.

When AI models like Grok 4 achieve top scores on technical benchmarks but feel awkward or problematic to use in practice, it exposes the limitations of our current evaluation methods. The guests argue that optimizing for benchmarks may actually weaken model performance in other crucial areas, like cultural sensitivity or natural conversation.

We also discuss Anthropic's research showing that frontier AI models, when given goals and access to information, independently arrived at solutions involving blackmail - without any prompting toward unethical behavior. Even more concerning, the more sophisticated the model, the more susceptible it was to this "agentic misalignment." Enzo and Sara present Prolific's "HUMAINE" leaderboard as an alternative to existing benchmarking systems. 
By stratifying evaluations across diverse demographic groups, they reveal that different populations have vastly different experiences with the same AI models. Looking ahead, the guests imagine a world where humans take on coaching and teaching roles for AI systems - similar to how we might correct a child or review code. This also raises important questions about working conditions and the evolution of labor in an AI-augmented world. Rather than replacing humans entirely, we may be moving toward more sophisticated forms of human-AI collaboration.As AI tech becomes more powerful and general-purpose, the quality of human evaluation becomes more critical, not less. We need more representative evaluation frameworks that capture the messy reality of human values and cultural diversity. Visit Prolific: https://www.prolific.com/Sara Saab (VP Product):https://uk.linkedin.com/in/sarasaabEnzo Blindow (VP Data & AI):https://uk.linkedin.com/in/enzoblindowTRANSCRIPT:https://app.rescript.info/public/share/xZ31-0kJJ_xp4zFSC-bunC8-hJNkHpbm7Lg88RFcuLETOC:[00:00:00] Intro & Background[00:03:16] Human-in-the-Loop Challenges[00:17:19] Can AIs Understand?[00:32:02] Benchmarking & Vibes[00:51:00] Agentic Misalignment Study[01:03:00] Data Quality vs Quantity[01:16:00] Future of AI OversightREFS:Anthropic Agentic Misalignmenthttps://www.anthropic.com/research/agentic-misalignmentValue Compasshttps://arxiv.org/pdf/2409.09586Reasoning Models Don’t Always Say What They Think (Anthropic)https://www.anthropic.com/research/reasoning-models-dont-say-think https://assets.anthropic.com/m/71876fabef0f0ed4/original/reasoning_models_paper.pdfApollo research - science of evals blog posthttps://www.apolloresearch.ai/blog/we-need-a-science-of-evals Leaderboard Illusion https://www.youtube.com/watch?v=9W_OhS38rIE MLST videoThe Leaderboard Illusion [2025]Shivalika Singh et alhttps://arxiv.org/abs/2504.20879(Truncated, full list on YT)

Dr. Ilia Shumailov - Former DeepMind AI Security Researcher, now building security tools for AI agentsEver wondered what happens when AI agents start talking to each other—or worse, when they start breaking things? Ilia Shumailov spent years at DeepMind thinking about exactly these problems, and he's here to explain why securing AI is way harder than you think.**SPONSOR MESSAGES**—Check out notebooklm for your research project, it's really powerfulhttps://notebooklm.google.com/—Take the Prolific human data survey - https://www.prolific.com/humandatasurvey?utm_source=mlst and be the first to see the results and benchmark their practices against the wider community!—cyber•Fund https://cyber.fund/?utm_source=mlst is a founder-led investment firm accelerating the cybernetic economyOct SF conference - https://dagihouse.com/?utm_source=mlst - Joscha Bach keynoting(!) + OAI, Anthropic, NVDA,++Hiring a SF VC Principal: https://talent.cyber.fund/companies/cyber-fund-2/jobs/57674170-ai-investment-principal#content?utm_source=mlstSubmit investment deck: https://cyber.fund/contact?utm_source=mlst— We're racing toward a world where AI agents will handle our emails, manage our finances, and interact with sensitive data 24/7. But there is a problem. These agents are nothing like human employees. They never sleep, they can touch every endpoint in your system simultaneously, and they can generate sophisticated hacking tools in seconds. Traditional security measures designed for humans simply won't work.Dr. 
Ilia Shumailovhttps://x.com/iliaishackedhttps://iliaishacked.github.io/https://sequrity.ai/TRANSCRIPT:https://app.rescript.info/public/share/dVGsk8dz9_V0J7xMlwguByBq1HXRD6i4uC5z5r7EVGMTOC:00:00:00 - Introduction & Trusted Third Parties via ML00:03:45 - Background & Career Journey00:06:42 - Safety vs Security Distinction00:09:45 - Prompt Injection & Model Capability00:13:00 - Agents as Worst-Case Adversaries00:15:45 - Personal AI & CAML System Defense00:19:30 - Agents vs Humans: Threat Modeling00:22:30 - Calculator Analogy & Agent Behavior00:25:00 - IMO Math Solutions & Agent Thinking00:28:15 - Diffusion of Responsibility & Insider Threats00:31:00 - Open Source Security Concerns00:34:45 - Supply Chain Attacks & Trust Issues00:39:45 - Architectural Backdoors00:44:00 - Academic Incentives & Defense Work00:48:30 - Semantic Censorship & Halting Problem00:52:00 - Model Collapse: Theory & Criticism00:59:30 - Career Advice & Ross Anderson TributeREFS:Lessons from Defending Gemini Against Indirect Prompt Injectionshttps://arxiv.org/abs/2505.14534Defeating Prompt Injections by Design. Debenedetti, E., Shumailov, I., Fan, T., Hayes, J., Carlini, N., Fabian, D., Kern, C., Shi, C., Terzis, A., & Tramèr, F. https://arxiv.org/pdf/2503.18813Agentic Misalignment: How LLMs could be insider threatshttps://www.anthropic.com/research/agentic-misalignmentSTOP ANTHROPOMORPHIZING INTERMEDIATE TOKENS AS REASONING/THINKING TRACES!Subbarao Kambhampati et alhttps://arxiv.org/pdf/2504.09762Meiklejohn, S., Blauzvern, H., Maruseac, M., Schrock, S., Simon, L., & Shumailov, I. (2025). Machine learning models have a supply chain problem. https://arxiv.org/abs/2505.22778 Gao, Y., Shumailov, I., & Fawaz, K. (2025). Supply-chain attacks in machine learning frameworks. https://openreview.net/pdf?id=EH5PZW6aCrApache Log4j Vulnerability Guidancehttps://www.cisa.gov/news-events/news/apache-log4j-vulnerability-guidance Bober-Irizar, M., Shumailov, I., Zhao, Y., Mullins, R., & Papernot, N. (2022). 
Architectural backdoors in neural networks. https://arxiv.org/pdf/2206.07840Position: Fundamental Limitations of LLM Censorship Necessitate New ApproachesDavid Glukhov, Ilia Shumailov, ...https://proceedings.mlr.press/v235/glukhov24a.html AlphaEvolve MLST interview [Matej Balog, Alexander Novikov]https://www.youtube.com/watch?v=vC9nAosXrJw

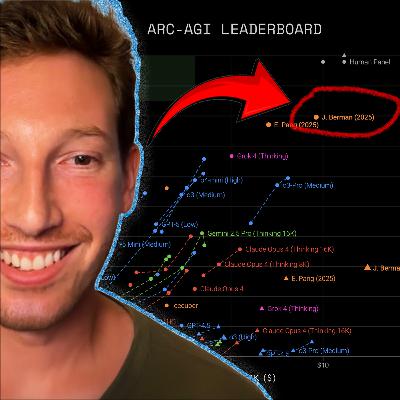

We need AI systems to synthesise new knowledge, not just compress the data they see. Jeremy Berman is a research scientist at Reflection AI and recent winner of the ARC-AGI v2 public leaderboard.

**SPONSOR MESSAGES**
—
Take the Prolific human data survey - https://www.prolific.com/humandatasurvey?utm_source=mlst and be the first to see the results and benchmark their practices against the wider community!
—
cyber•Fund https://cyber.fund/?utm_source=mlst is a founder-led investment firm accelerating the cybernetic economy
Oct SF conference - https://dagihouse.com/?utm_source=mlst - Joscha Bach keynoting(!) + OAI, Anthropic, NVDA,++
Hiring a SF VC Principal: https://talent.cyber.fund/companies/cyber-fund-2/jobs/57674170-ai-investment-principal#content?utm_source=mlst
Submit investment deck: https://cyber.fund/contact?utm_source=mlst
—

Imagine trying to teach an AI to think like a human, i.e. to solve puzzles that are easy for us but stump even the smartest models. Jeremy's evolutionary approach (evolving natural language descriptions instead of Python code, as in his previous version) landed him at the top with about 30% accuracy on ARC-AGI v2.

We discuss why current AIs are like "stochastic parrots" that memorize but struggle to truly reason or innovate, as well as big ideas like building "knowledge trees" for real understanding, the limits of neural networks versus symbolic systems, and whether we can train models to synthesize new ideas without forgetting everything else.

Jeremy Berman: https://x.com/jerber888

TRANSCRIPT: https://app.rescript.info/public/share/qvCioZeZJ4Q_NlR66m-hNUZnh-qWlUJcS15Wc2OGwD0

TOC:
Introduction and Overview [00:00:00]
ARC v1 Solution [00:07:20]
Evolutionary Python Approach [00:08:00]
Trade-offs in Depth vs. Breadth [00:10:33]
ARC v2 Improvements [00:11:45]
Natural Language Shift [00:12:35]
Model Thinking Enhancements [00:13:05]
Neural Networks vs. 
Symbolism Debate [00:14:24]Turing Completeness Discussion [00:15:24]Continual Learning Challenges [00:19:12]Reasoning and Intelligence [00:29:33]Knowledge Trees and Synthesis [00:50:15]Creativity and Invention [00:56:41]Future Directions and Closing [01:02:30]REFS:Jeremy’s 2024 article on winning ARCAGI1-pubhttps://jeremyberman.substack.com/p/how-i-got-a-record-536-on-arc-agiGetting 50% (SoTA) on ARC-AGI with GPT-4o [Greenblatt]https://blog.redwoodresearch.org/p/getting-50-sota-on-arc-agi-with-gpt https://www.youtube.com/watch?v=z9j3wB1RRGA [his MLST interview]A Thousand Brains: A New Theory of Intelligence [Hawkins]https://www.amazon.com/Thousand-Brains-New-Theory-Intelligence/dp/1541675819https://www.youtube.com/watch?v=6VQILbDqaI4 [MLST interview]Francois Chollet + Mike Knoop’s labhttps://ndea.com/On the Measure of Intelligence [Chollet]https://arxiv.org/abs/1911.01547On the Biology of a Large Language Model [Anthropic]https://transformer-circuits.pub/2025/attribution-graphs/biology.html The ARChitects [won 2024 ARC-AGI-1-private]https://www.youtube.com/watch?v=mTX_sAq--zY Connectionism critique 1998 [Fodor/Pylshyn]https://uh.edu/~garson/F&P1.PDF Questioning Representational Optimism in Deep Learning: The Fractured Entangled Representation Hypothesis [Kumar/Stanley]https://arxiv.org/pdf/2505.11581 AlphaEvolve interview (also program synthesis)https://www.youtube.com/watch?v=vC9nAosXrJw ShinkaEvolve: Evolving New Algorithms with LLMs, Orders of Magnitude More Efficiently [Lange et al]https://sakana.ai/shinka-evolve/ Deep learning with Python Rev 3 [Chollet] - READ CHAPTER 19 NOW!https://deeplearningwithpython.io/

Professor Andrew Wilson from NYU explains why many common-sense ideas in artificial intelligence might be wrong. For decades, the rule of thumb in machine learning has been to fear complexity. The thinking goes: if your model has too many parameters (is "too complex") for the amount of data you have, it will "overfit" by essentially memorizing the data instead of learning the underlying patterns. This leads to poor performance on new, unseen data. This is known as the classic "bias-variance trade-off", i.e. a balancing act between a model that's too simple and one that's too complex.

**SPONSOR MESSAGES**

Tufa AI Labs is an AI research lab based in Zurich. **They are hiring ML research engineers!** This is a once in a lifetime opportunity to work with one of the best labs in Europe.
Contact Benjamin Crouzier - https://tufalabs.ai/

Take the Prolific human data survey - https://www.prolific.com/humandatasurvey?utm_source=mlst and be the first to see the results and benchmark their practices against the wider community!

cyber•Fund https://cyber.fund/?utm_source=mlst is a founder-led investment firm accelerating the cybernetic economy
Oct SF conference - https://dagihouse.com/?utm_source=mlst - Joscha Bach keynoting(!) + OAI, Anthropic, NVDA,++
Hiring a SF VC Principal: https://talent.cyber.fund/companies/cyber-fund-2/jobs/57674170-ai-investment-principal#content?utm_source=mlst
Submit investment deck: https://cyber.fund/contact?utm_source=mlst

Description Continued:

Professor Wilson challenges this fundamental belief (fearing complexity). He makes a few surprising points:

**Bigger Can Be Better**: massive models don't just get more flexible; they also develop a stronger "simplicity bias". So, if your model is overfitting, the solution might paradoxically be to make it even bigger.

**The "Bias-Variance Trade-off" is a Misnomer**: Wilson claims you don't actually have to trade one for the other. You can have a model that is incredibly expressive and flexible while also being strongly biased toward simple solutions. He points to the "double descent" phenomenon, where performance first gets worse as models get more complex, but then surprisingly starts getting better again.

**Honest Beliefs and Bayesian Thinking**: His core philosophy is that we should build models that honestly represent our beliefs about the world. We believe the world is complex, so our models should be expressive. But we also believe in Occam's razor: that the simplest explanation is often the best. He champions Bayesian methods, which naturally balance these two ideas through a process called marginalization, which he describes as an automatic Occam's razor.

TOC:
[00:00:00] Introduction and Thesis
[00:04:19] Challenging Conventional Wisdom
[00:11:17] The Philosophy of a Scientist-Engineer
[00:16:47] Expressiveness, Overfitting, and Bias
[00:28:15] Understanding, Compression, and Kolmogorov Complexity
[01:05:06] The Surprising Power of Generalization
[01:13:21] The Elegance of Bayesian Inference
[01:33:02] The Geometry of Learning
[01:46:28] Practical Advice and The Future of AI

Prof. Andrew Gordon Wilson:
https://x.com/andrewgwils
https://cims.nyu.edu/~andrewgw/
https://scholar.google.com/citations?user=twWX2LIAAAAJ&hl=en
https://www.youtube.com/watch?v=Aja0kZeWRy4
https://www.youtube.com/watch?v=HEp4TOrkwV4

TRANSCRIPT:
https://app.rescript.info/public/share/H4Io1Y7Rr54MM05FuZgAv4yphoukCfkqokyzSYJwCK8

Hosts:
Dr. Tim Scarfe / Dr. Keith Duggar (MIT Ph.D)

REFS:
Deep Learning is Not So Mysterious or Different [Andrew Gordon Wilson]
https://arxiv.org/abs/2503.02113
Bayesian Deep Learning and a Probabilistic Perspective of Generalization [Andrew Gordon Wilson, Pavel Izmailov]
https://arxiv.org/abs/2002.08791
Compute-Optimal LLMs Provably Generalize Better With Scale [Marc Finzi, Sanyam Kapoor, Diego Granziol, Anming Gu, Christopher De Sa, J. Zico Kolter, Andrew Gordon Wilson]
https://arxiv.org/abs/2504.15208

In this episode, hosts Tim and Keith finally realize their long-held dream of sitting down with their hero, the brilliant neuroscientist Professor Karl Friston. The conversation is a fascinating and mind-bending journey into Professor Friston's life's work, the Free Energy Principle, and what it reveals about life, intelligence, and consciousness itself.

**SPONSORS**

Gemini CLI is an open-source AI agent that brings the power of Gemini directly into your terminal - https://github.com/google-gemini/gemini-cli

Take the Prolific human data survey - https://www.prolific.com/humandatasurvey?utm_source=mlst and be the first to see the results and benchmark their practices against the wider community!

cyber•Fund https://cyber.fund/?utm_source=mlst is a founder-led investment firm accelerating the cybernetic economy
Oct SF conference - https://dagihouse.com/?utm_source=mlst - Joscha Bach keynoting(!) + OAI, Anthropic, NVDA,++
Hiring a SF VC Principal: https://talent.cyber.fund/companies/cyber-fund-2/jobs/57674170-ai-investment-principal#content?utm_source=mlst
Submit investment deck: https://cyber.fund/contact?utm_source=mlst

They kick things off by looking back on the 20-year journey of the Free Energy Principle. Professor Friston explains it as a fundamental rule for survival: all living things, from a single cell to a human being, are constantly trying to make sense of the world and reduce unpredictability. It’s this drive to minimize surprise that allows things to exist and maintain their structure.

This leads to a bigger question: What does it truly mean to be "intelligent"? The group debates whether intelligence is everywhere, even in a virus or a plant, or if it requires a certain level of complexity. Professor Friston introduces the idea of different "kinds" of things, suggesting that creatures like us, who can model themselves and think about the future, possess a unique and "strange" kind of agency that sets us apart.

From intelligence, the discussion naturally flows to the even trickier concept of consciousness. Is it the same as intelligence? Professor Friston argues they are different. He explains that consciousness might emerge from deep, layered self-awareness: not just acting, but understanding that you are the one causing your actions and thinking about your place in the world.

They also explore intelligence at different sizes. Is a corporation intelligent? What about the entire planet? Professor Friston suggests there might be a "Goldilocks zone" for intelligence. It doesn't seem to exist at the super-tiny atomic level or at the massive scale of planets and solar systems, but thrives in the complex middle-ground where we live.

Finally, they tackle one of the most pressing topics of our time: Can we build a truly conscious AI? Professor Friston shares his doubts about whether our current computers are capable of a feat like that. He suggests that genuine consciousness might require a different kind of "mortal" computation, where the machine's physical body and its "mind" are inseparable, much like in biological creatures.

TRANSCRIPT:
https://app.rescript.info/public/share/FZkF8BO7HMt9aFfu2_q69WGT_ZbYZ1VVkC6RtU3eeOI

TOC:
00:00:00: Introduction & Retrospective on the Free Energy Principle
00:09:34: Strange Particles, Agency, and Consciousness
00:37:45: The Scale of Intelligence: From Viruses to the Biosphere
01:01:35: Modelling, Boundaries, and Practical Application
01:21:12: Conclusion

![VAEs Are Energy-Based Models? [Dr. Jeff Beck]](https://s3.castbox.fm/6e/7d/c5/41c8ebcca4ad0e35aa677a18406317eab9_scaled_v1_400.jpg)

![Abstraction & Idealization: AI's Plato Problem [Mazviita Chirimuuta]](https://s3.castbox.fm/c0/f2/0f/d57df3acb1f43690b67f98762865cc2d41_scaled_v1_400.jpg)

![Why Every Brain Metaphor in History Has Been Wrong [SPECIAL EDITION]](https://s3.castbox.fm/c7/34/2c/1800404668666d64729dd70563af5819d8_scaled_v1_400.jpg)

![Bayesian Brain, Scientific Method, and Models [Dr. Jeff Beck]](https://s3.castbox.fm/87/7f/57/42727a92cc936b068d9a0d194e4e1f0d56_scaled_v1_400.jpg)

![Your Brain is Running a Simulation Right Now [Max Bennett]](https://s3.castbox.fm/fe/63/74/17d5dce646020f1e4935b83d5c5f19ae98_scaled_v1_400.jpg)

![The 3 Laws of Knowledge [César Hidalgo]](https://s3.castbox.fm/de/1e/f8/00541c0003cf33b303a2e4b3e1c655e3bd_scaled_v1_400.jpg)

![Are AI Benchmarks Telling The Full Story? [SPONSORED] (Andrew Gordon and Nora Petrova - Prolific)](https://s3.castbox.fm/54/21/13/8104542921fa7ae10e1e112e194c3fd379_scaled_v1_400.jpg)

![The Mathematical Foundations of Intelligence [Professor Yi Ma]](https://s3.castbox.fm/2a/15/f6/fba283a552e1c46b5a8518cb406d23b691_scaled_v1_400.jpg)

![He Co-Invented the Transformer. Now: Continuous Thought Machines - Llion Jones and Luke Darlow [Sakana AI]](https://s3.castbox.fm/61/e4/f8/e66563e7dcf0bcad6b973f090022624f12_scaled_v1_400.jpg)

![Why Humans Are Still Powering AI [Sponsored]](https://s3.castbox.fm/fb/e4/bb/f0b3e2eeaafbcaa91ed15fe494ddaf1882_scaled_v1_400.jpg)

![The Universal Hierarchy of Life - Prof. Chris Kempes [SFI]](https://s3.castbox.fm/a9/07/99/97323fd1fcc0e9e7ee5145abe77d51ef44_scaled_v1_400.jpg)

![The Secret Engine of AI - Prolific [Sponsored] (Sara Saab, Enzo Blindow)](https://s3.castbox.fm/2d/55/61/7b564928c72e11dcad277df4528641f8b9_scaled_v1_400.jpg)


2:58:00 the moment Eliezer loses