Untangled

Author: Charley Johnson

© Charley Johnson

20 Episodes

Hi there,

Welcome back to Untangled. It’s written by me, Charley Johnson, and supported by members like you. This week I’m sharing my conversation with Miranda Bogen (Director, AI Governance Lab, Center for Democracy & Technology) about what happens when your AI assistant becomes an advertiser.

As always, please send me feedback on today’s post by replying to this email. I read and respond to every note.

Don’t forget to sign up for The Untangled Collective. It’s my free community for tech & society leaders navigating technological change and changing systems, and the next event is coming up!

🏡 Untangled HQ

🔦 NEW: I’m teaming up with Aarn Wennekers (complexity expert and author of Super Cool & Hyper Critical) to launch Stewarding Complexity, a private, confidential gathering space for boards, executive teams, and organizational leaders to step outside formal governance structures, speak candidly with peers, and practice making sense of complexity together. If that’s you, join us!

🚨 Not New, But Important: Every organization I speak with is facing the same two questions: How do we build strategy for uncertainty, and what should we actually do about AI?

My course, Systems Change for Tech & Society Leaders, provides a structured approach to navigating both, helping leaders move beyond linear problem-solving and into systems thinking that engages emergence, power, and the relational foundations of change. Sign up for Cohort 6 today!

Because why not: here’s a free diagnostic framework I use in the course to help you assess how your organization understands and uses technology across its strategy, programs, and operations.

🖇️ Some Links

How Certain Is It?

I’ve written a lot about why embracing uncertainty matters. Chatbots do the opposite: they collapse uncertainty into confident-sounding responses, packaging blind confidence as a feature. But what if we designed these tools differently? What would it take to preserve uncertainty rather than erase it?
A new paper tackles this challenge, arguing we need to protect the messier, harder-to-quantify forms of uncertainty that professionals navigate through conversation and intuition. Their proposed fix? Create systems where professionals collectively shape how different forms of uncertainty get expressed and worked through.

Blackbox Gets Subpoenaed

Job applicants are suing Eightfold AI, claiming its hiring screening software should follow Fair Credit Reporting Act requirements, giving candidates the right to see what data is collected and dispute inaccuracies.

Eightfold scores job applicants 1-5 using a database of over a billion professional profiles. Sound familiar? It’s essentially what credit agencies do: create dossiers, assign numeric scores, and determine eligibility.

The lawsuit argues: if it works like a credit agency, it should be regulated like one. As David Seligman of Towards Justice put it: “There is no A.I. exemption to our laws. Far too often, the business model of these companies is to roll out these new technologies, to wrap them in fancy new language, and ultimately to just violate peoples’ rights.”

Threatening Probabilities

Every time a chatbot threatens or blackmails someone, my inbox fills with “proof” of sentience.

But a new paper shows these behaviors aren’t anomalies. They’re just extreme versions of normal human interaction: price negotiation, power dynamics, ultimatums. Our surprise comes from assuming chatbots should only reproduce socially sanctioned behavior, not the full spectrum of how humans actually act.

Threats and blackmail don’t signal consciousness. They signal the model is drawing from the complete statistical distribution of human behavior, including the parts we don’t like to acknowledge. It’s probabilities all the way down, even when they’re uncomfortable ones.

🧶 When Your AI Assistant Becomes an Advertiser

OpenAI just announced it will start testing ads in ChatGPT’s free tier.
The press release was carefully worded, reassuring users that “ads will not change ChatGPT answers” and that “your chats are not shared with advertisers.” But as Miranda Bogen, director of the AI Governance Lab at the Center for Democracy and Technology, pointed out in a recent conversation, these statements are misleading and miss the entire point. What’s coming is a fundamental shift in who these systems serve, and what that means for people, privacy, and inequality.

To understand why this matters, we need to look at three things: how AI changes advertising signals, what “privacy” really means in this context, and why this could be harder to detect than anything we’ve seen before.

The Signal Problem

The question is: what happens when your AI assistant becomes an advertiser?

Answering that question, according to Miranda, starts by recognizing that advertising is all about high-fidelity signals of intent: data that accurately predicts what you want to buy or do. When an ad interrupts your experience on Facebook, it’s hoping that you’ll care; that perhaps something you clicked a while back will still be relevant. That’s not a great signal. Searching offers a better signal. You’re typically using Google because you want something.

But ChatGPT is different. You’re not searching for information. You’re often thinking out loud, revealing what matters to you, what you’re struggling with, what you’re planning or hoping for. Each conversational turn reveals deeper context about your intent, creating rich data for advertisers.

Now, OpenAI wants those signals but, if you read the press materials, they’re clearly concerned about losing users. For example, they bend over backwards to say that your chats won’t be “shared with advertisers.” According to Miranda, though, this is technically accurate but completely misleading. The platform doesn’t need to send advertisers a list of your conversations.
That’s the whole point of advertising infrastructure: OpenAI will target ads on behalf of advertisers, shielding your specific data while making the connection happen anyway.

The press release also promises you can “turn off personalization” and “clear the data used for ads.” But there are multiple layers of personalization happening simultaneously (e.g., raw chat logs and explicit memory stored about you), and it’s unclear what exactly OpenAI is referring to. Plus, even if you did turn off all personalization and erased all memory in the system, the amount of information a chatbot has about you in a specific context window offers plenty of signal for advertisers.

The Relationship Problem

On Facebook or Google, it’s clear you’re dealing with an advertiser. Your intent is your own. The experience is transactional. But as Miranda argues, when your AI assistant or AI co-worker starts subtly suggesting new products or services, something fundamentally different is happening.

It’s closer to influencer marketing, where paid recommendations come wrapped in the veneer of authentic social connection. But an influencer’s audience typically knows the influencer is being paid to promote a product. With an AI assistant, the lines start to blur. It has been helping you draft emails, think through career decisions, process relationship struggles. You’ve built relational trust with it over months, so when it suggests a therapist, lawyer, or contractor, you might perceive it as trusted advice without knowing, of course, which providers paid to be in the pool the AI draws from. The persuasion is invisible, wrapped in the same helpful tone the AI uses for everything else.

The Visibility Problem

Personalized ads and privacy harms are a big, albeit old, problem. These tools will of course propagate discrimination, exploit people at vulnerable moments, reinforce stereotypes and biases, and shape what opportunities people see (and don’t!).
But this evolution of the advertising model brings something new: these harms will be even harder to identify.

Why? Because these systems are being built to connect with each other. AI agents will call other tools, connect with your bank and service providers, exchange information across an ecosystem of interconnected systems. There will be money and incentives flowing through this network in ways that are nearly impossible to track.

As Miranda put it:

“Even just tracking where any of this is happening, where exchanges of money and incentives are happening behind the scenes and where that might be shaping people’s experiences will just be even more challenging to keep up with over time.”

If your inner monologue so far is “this all sounds very bad,” well, I get it. But we didn’t end the conversation without imagining alternative business models and policy solutions. Listen to the end for these, and hear what Miranda would do to shift power back to users if she were advising our next (fingers crossed!) President four years from today.

👉 Before you go: 3 ways I can help

* Advising: I help clients develop AI strategies that serve their future vision, craft policies that honor their values amid hard tradeoffs, and translate those ideas into lived organizational practice.
* Courses & Trainings: Everything you and your team need to cut through the tech-hype and implement strategies that catalyze true systems change.
* 1:1 Leadership Coaching: I can help you facilitate change in yourself, your organization, and the system you work within.

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit untangled.substack.com

Hi there,

Welcome back to Untangled. It’s written by me, Charley Johnson, and supported by members like you. This week, I’m sharing my conversation with Evan Ratliff, journalist and host of the thought-provoking podcast, Shell Game.

As always, please send me feedback on today’s post by replying to this email. I read and respond to every note.

On to the show!

🔦 Untangled HQ

I launched The Facilitators’ Workshop, a community of practice for leaders who want to perfect the craft of facilitating groups through conflict and ambiguity, so they can actually achieve their purpose. Our event on January 23, “From Conflict to Clarity & Connection,” will give you a structured process for diagramming conflict: a way to slow down, make invisible dynamics visible, and understand what’s actually happening before deciding what to do next.

I’m spinning up a lot of new things that I’m excited to tell you about. The best way to stay up to date on upcoming events and workshops is by joining The Untangled Collective.

In season 1 of Shell Game, Evan cloned his voice, hitched it to an AI agent, and then put it in conversation with scammers and spammers, a therapist, work colleagues, and even his friends and family. You can listen to that conversation here.

In season 2 of Shell Game, Evan explores what it’s like to run a company with AI agents as employees: a real company building a real product with users and interest from venture capitalists. This is the future that Silicon Valley is actively trying to bring into existence. Sam Altman recently shared that some of his fellow tech CEOs are literally betting on when the first one-person, billion-dollar company will appear. Now, all the hype would make you believe that we should welcome this future with open arms. Productivity will skyrocket. Time will feel abundant. Work will become frictionless and maximally efficient. That’s the story, anyway. You won’t be surprised to find that the gap between the hype and reality is, uh, massive.
Evan and I talk about that gap, but Shell Game helps us see around the corner to what it might actually feel like to work with AI agents. It’s a story about:

* What’s lost when an organizational culture becomes sycophantic.
* What it’s like when your colleague regularly makes stuff up, commits it to memory, and then repeats that thing in the future as if it’s real.
* Why words like ‘agent’ and ‘agentic’ belie the reality that these large language models don’t really do anything on their own.
* The costs and complexities of anthropomorphizing agents, and how we’re voluntarily tricking ourselves.
* What humans are uniquely good at, and what it means for automation and the evolution of work.
* What Silicon Valley misunderstands about the world they’re creating and what’s at stake in confusing fluency and judgement.

Shell Game is smart, thought-provoking, and really funny. I can’t recommend it enough. I hope you enjoy my human-to-human conversation with Evan Ratliff.

🧶 Want to go deeper?

If you finished our conversation thinking, “Okay… I need to think about this more,” let me help.

* Flattery as a Feature: Rethinking ‘AI Sycophancy’
* There’s no such thing as ‘fully autonomous’ agents
* It’s okay to not know the answer
* AI isn’t ‘hallucinating.’ We are.

That’s it for now,
Charley

If you’ve sensed a shift in Untangled of late, you’re not wrong. I’m writing a lot more about ‘complex systems.’ To name a few:

* What even is a ‘complex system’ and how do you know if you’re in one?
* How to act interdependently and do the next right thing in a complex system.
* Why if/then theories of change that assume causality are bonkers, and how to map backward from the future.
* How do you act amidst uncertainty? If you truly don’t know how your system will respond to your intervention, what do you do?
* How should we think about goals in an uncertain world?
* Here’s a fun diagnostic tool I developed to help you assess how your organization thinks, acts, and learns under complexity.

I am obsessed with complex systems because the world is uncertain and unpredictable, and yet all of our strategies pretend otherwise. We crave certainty, so we build plans that presume causality, control, and predictability. We know in our gut that the systems we’re trying to change won’t sit still for our long-term plans, yet our instinct to cling to control amid uncertainty is too strong to resist.

And honestly, in 2025, this shouldn’t be a hard sell. Politics, climate change, and AI are laughing at your five-year strategy decks.

Complexity thinking helps us see this clearly (that systems are dynamic, nonlinear, and adaptive), but it, too, has blind spots. First, it lacks a theory of technology. The closest we get is Brian Arthur’s brilliant book, The Nature of Technology: What It Is and How It Evolves, which explains how technologies co-evolve with economic systems. (Give it a read, or check out the write-up in Technically Social.) But Arthur was focused on markets, not on social systems, and not on how technology is entangled with people and power.

That’s where my course comes in.
I’m trying to offer frameworks and practices for creating change across difference, amid uncertainty, in tech-mediated environments: approaches that honor both complexity and the mutual shaping of people, power, and technology. (And yes, Cohort 5 of Systems Change for Tech & Society Leaders starts November 19.)

Second, complexity is hard to talk about simply and make practical. (That’s why my Playbook turned into a 200-page monstrosity!) Every time I use the words “complex” or “system,” I can feel the distance between me and whoever I’m talking to widen. I’ve been searching for thinkers who bridge that gap, who write about systems with both clarity and depth, and recently came across the brilliant work of Aarn Wennekers, who writes the great newsletter Super Cool & Hyper Critical. (Subscribe if you haven’t yet!)

After reading his essay, Systems Thinking Isn’t Enough Anymore, I reached out and invited him onto the podcast. I’m thrilled to share that conversation, one that digs into the mindsets and muscles leaders need to navigate uncertainty and constant change, the need to collapse old distinctions between strategy and operations, and what it really means to act when the ground beneath us keeps shifting.

This week, I spoke with Harry Law, Editorial Lead at the Cosmos Institute and a researcher at the University of Cambridge, about AI and autonomy. Harry wrote a terrific essay on how generative AI might serve human autonomy rather than the empires Big Tech is intent on building.

In our conversation, we explore:

* What the Cosmos Institute is, and how it’s challenging the binary, deterministic thinking that dominates tech.
* The difference between “democratic” and “authoritarian” technologies, and why it depends less on the tools themselves than on the political, cultural, and economic systems they’re embedded in.
* The gap between agency (Silicon Valley’s favorite word) and autonomy, and why that difference matters.
* How generative AI can collapse curiosity by closing the reflective space between question and answer, and what it might mean to design it instead for wonder, inquiry, and self-understanding.
* Why removing friction and optimizing for efficiency often strips away learning, growth, and self-actualization.
* The need for more “philosophy builders”: technologists designing systems that expand our capacity to think, choose, and act for ourselves.
* Harry’s provocative idea of personalized AIs grounded in our own values and second-order preferences, a radically different vision from today’s “personalization” built for engagement.

The conversation around generative AI has gone stale. Everyone is interpreting it through their own frames of meaning (their own logics, values, incentives, and worldviews), yet we still talk about “AI” as if it’s a single, coherent, inevitable thing. It’s not.

My conversation with Harry is an attempt to move beyond the binary, to imagine alternative pathways for technology that place human autonomy, curiosity, and moral imagination at the center.

If you’re fed up with imagining alternative futures and want to do the hard, strategic work of changing the system you’re in and setting it (and you!) on a fundamentally new path, sign up for Cohort 5 of my course, Systems Change for Tech & Society Leaders. It kicks off in three weeks and there are still a few spots available.

https://www.charley-johnson.com/sociotechnicalsystemschange

Before you go: 3 ways I can help

* Systems Change for Tech & Society Leaders - Everything you need to cut through the tech-hype and implement strategies that catalyze true systems change.
* Need 1:1 help aligning technology with your vision of the future? Apply for advising & executive coaching here.
* Organizational Support: Your organizational playbook for navigating uncertainty and making sense of AI: what’s real, what’s noise, and how it should (or shouldn’t) shape your system.

P.S. If you have a question about this post (or anything related to tech & systems change), reply to this email and let me know!

Today, I’m sharing the 15-minute diagnostic framework I use to assess an organization’s capacity to navigate uncertainty and complexity. Fill out this short survey to get access.

The diagnostic is just one tool of 30+ included in the Playbook that will help you put the frameworks from my course immediately into practice. This one helps participants see how their current assumptions, decision structures, and learning practices align (or clash) with the realities of complex systems, and identify immediate interventions they can try to build adaptive capacity across their teams and organizations. Fun, huh? Cohorts 4 & 5 are open but enrollment is limited. Sign up today!

Okay, let’s get to my conversation with Lee Vinsel, Assistant Professor of Science, Technology, and Society at Virginia Tech and the creator of the great newsletter and podcast People & Things.

I try (and fail often!) to live by the line from an incredible Ted Lasso scene, “Be curious, not judgmental.” I was reminded of that phrase while reading Lee Vinsel’s essay Against Narcissistic-Sociopathic Technology Studies, or Why Do People USE Technologies. Lee encourages scholars and critics of generative AI, and tech more broadly, to go beyond their own value judgments and actually study how and why people use technologies. He points to a perceived tension we don’t have to resolve: that “you can hold any ethical principle you want and still do the interpretive work of trying to understand other people who are not yourself.”

I feel that tension! There are so many reasons to be critical of the inherently anti-democratic, scale-at-all-costs approach to generative AI. You know, the one that anthropomorphizes fancy math and strips us of what it means to be human, all while carrying forward historical biases, stealing from creators, and contributing to climate change and water scarcity? (Deep breath.) But Lee’s point is that we can hold these truths and still choose curiosity.
Choosing curiosity over judgment is also strategic. Often, judgment centers the technology, inflating its power and reducing our own agency. This gestures at another one of Lee’s ideas, “criti-hype,” or critiques that are “parasitic upon and even inflates hype.” As Vinsel writes, these critics “invert boosters’ messages” and “retain the picture of extraordinary change but focus instead on negative problems and risks.” Judgment and critique focus our attention on the technology itself and center it as the driver of big problems, not the social and cultural systems it is entangled with. What we need instead is research and analysis that focuses on how and why people use generative AI, and the systems it often hides.

In our conversation, Lee and I talk about:

* How, in a world where tech discourse is all hype and increasingly political, curiosity can feel like ceding ground to ‘the other side.’
* Where narcissistic/sociopathic tech studies comes from, and what it would look like to center curiosity in how we talk about and research generative AI.
* How centering the technology itself overplays its role in social problems and obscures the systems that actually need to change.
* The limits of critique, and what would shift if experts and scholars centered description and translation instead of judgment.
* Whether we’re in a bubble, and what might happen next.

This conversation is a wonky one, but its implications are quite practical. If we don’t understand how and why organizations use generative AI, we can’t anticipate how work will change, or see that much of the adoption is actually performative. If we don’t understand how and why students use it, we’ll miss shifts in identity formation and learning. If we don’t understand how and why people choose it for companionship, we’ll miss big shifts in the nature of relationships.
I could go on, but the point is this: in a rush to critique generative AI, we often forget to notice how people are using it in the present, the small, weird, human ways people are already making it part of their lives. To see around the corner, we have to get over ourselves. We have to replace assumption with observation, and judgment with curiosity.

Before you go: 3 ways I can help

* Systems Change for Tech & Society Leaders - Everything you need to cut through the tech-hype and implement strategies that catalyze true systems change.
* Need 1:1 help aligning technology with your vision of the future? Apply for advising & executive coaching here.
* Organizational Support: Your organizational playbook for navigating uncertainty and making sense of AI: what’s real, what’s noise, and how it should (or shouldn’t) shape your system.

P.S. If you have a question about this post (or anything related to tech & systems change), reply to this email and let me know!

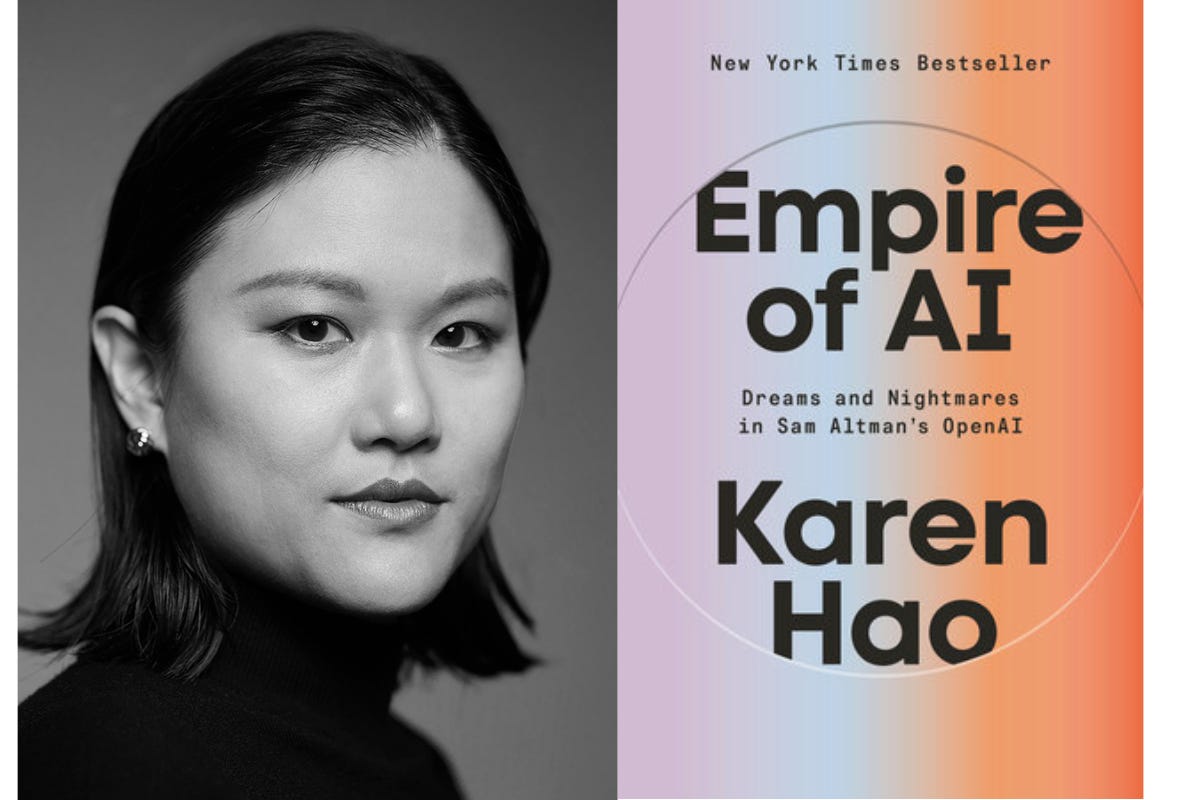

Today, I’m sharing my conversation with Karen Hao, award-winning reporter covering artificial intelligence and author of the NYT bestseller Empire of AI. We discuss:

* The scale-at-all-costs approach to AI that Big Tech is pursuing: the misguided assumptions and beliefs it rests upon, and the harms it causes.
* How the companies pursuing this approach represent a modern-day empire, and the role narrative power plays in sustaining it.
* Boomers, doomers, and the religion of AGI.
* Alternative visions of AI that center consent, community ownership, and context, and don’t come at the expense of people’s livelihoods, public health, and the environment.
* How to reclaim our agency in an age of AI.

👉 Tech hype hides power. Reclaim it in my live course Systems Change for Tech & Society Leaders.

Links:

- Check out the podcast, Computer Says Maybe

I’m Charley Johnson, and this is Untangled, a newsletter and podcast about our sociotechnical world, and how to change it. Today, I’m bringing you the audio version of my latest essay, “There’s no such thing as ‘fully autonomous agents.’” Before getting into it, two quick things:

1. I have a two-part essay out in Tech Policy Press with Michelle Shevin that offers a roadmap for how philanthropy can use the current “AI Moment” to build more just futures.
2. There is still room available in my upcoming course. In it, I weave together frameworks (from science and technology studies, complex adaptive systems, future thinking, etc.) to offer you strategies and practical approaches to address the twin questions confronting all mission-driven leaders, strategists, and change-makers right now: what is your ‘AI strategy’ and how will you change the system you’re in?

Now, on to the show!

Today, I’m sharing my conversation with Greg Epstein, American Humanist chaplain at Harvard University and the Massachusetts Institute of Technology, and author of the great new book Tech Agnostic: How Technology Became the World’s Most Powerful Religion, and Why It Desperately Needs a Reformation. We discuss:

* How tech is becoming a religion, and why it’s connected to our belief that we’re never enough.
* How Elon Musk, Mark Zuckerberg, Jeff Bezos, and Bill Gates are hungry ghosts.
* What ‘tech-as-religion’ allows us to see and understand that ‘capitalism-as-religion’ doesn’t.
* My concerns with the metaphor and Greg’s thoughtful response.
* How we might usher in a tech reformation, and the tech humanists leading the way.
* The value of agnosticism and not-knowing when it comes to tech.

Okay, that’s it for now,
Charley

Today, I’m sharing my conversation with Divya Siddarth, Co-Founder and Executive Director of the Collective Intelligence Project (CIP), about how we might democratize the development and governance of AI. We discuss:

* The CIP’s work on alignment assemblies with Anthropic and OpenAI: what they’ve learned, and why in the world a company would agree to increasing public participation.
* The #1 risk of AI as ranked by the public. (Sneak peek: it has nothing to do with rogue robots.)
* Are participatory processes good enough to bind companies to the decisions they generate?
* How we need to fundamentally change our conception of ‘AI expertise.’
* How worker and public participation can shift the short-term thinking and incentives driving corporate America.
* Should AI companies become direct democracies or representative ones?
* How Divya would structure public participation if she had a blank sheet of paper and if AI companies had to adopt the recommendations.

That’s it for now,
Charley

Today, I’m sharing my conversation with Deepti Doshi, Co-Director of New_Public, about what they’ve learned building healthy local communities, online and off. We discuss:

* The problem New_Public is trying to address with their initiative, Local Lab. (Which I highlighted in my recent essay, “Fragment the media! Embrace the shards!”)
* What Deepti has learned about what makes for pro-social conversations that build community on message boards and private groups.
* Why it’s an oxymoron to call Twitter a ‘global town square’ and the relationship between scale and trustworthy information ecosystems.
* The importance of ‘digital stewards’ in facilitating online community.
* How the social capital people build online is translating into IRL actions and civic engagement.
* What a future might look like if New_Public realizes the vision of Local Lab.

That’s it for now,
Charley

This week, I’m sharing my conversation with Anya Kamenetz, the creator of The Golden Hour, a newsletter about “thriving and caring for others on a rapidly changing planet.” Anya and I announced a new partnership recently (now, when you sign up for an annual paid subscription to Untangled, you’ll get free access to the paid version of The Golden Hour), and we wanted to talk about it, and the work ahead.

Along the way, we also discuss:

* How we’re adapting our newsletters in response to the election.
* Why mitigating harms isn’t sufficient, and a framework that can help us all orient to the present moment: block, build, be.
* How we consume information (our mindsets, habits, and practices) and also why ‘consume’ isn’t the right frame.
* The difference between social media connections and email-based relationships.
* How to talk to your kids about the election.
* The fragmentation of the news media environment and why it’s a good thing.

I couldn’t be more excited to partner with Anya and introduce you to her work. Enjoy!

More soon,
Charley

Hi, I’m Charley, and this is Untangled, a newsletter about our sociotechnical world, and how to change it.

* Come work with me! The initiative I lead at Data & Society is hiring for a Community Manager. Learn more here.
* Check out my new course, Sociotechnical Systems Change in Practice. The first cohort will take place on January 11 and 12, and you can sign up here.
* Last week I interviewed Mozilla’s Jasmine Sun and Nik Marda on the potential of public AI, and the week prior I shared my conversation with AI reporter Karen Hao on OpenAI’s mythology, Meta’s secret, and Microsoft’s hypocrisy.

🚨 This is your last chance to get Untangled at 40 percent off. Even better, I partnered with Anya Kamenetz to offer you her great newsletter The Golden Hour for free! Signing up for Untangled right now means you’ll get $140 in value for $54.

On to the show!

This week I spoke with Arvind Narayanan, professor of computer science at Princeton University and director of its Center for Information Technology Policy. I spoke with Arvind about his great new book with Sayash Kapoor, AI Snake Oil: What Artificial Intelligence Can Do, What It Can’t, and How to Tell the Difference. We discuss:

* The difference between generative AI and predictive AI, and why we’re both more concerned by the latter.
* Whether generative AI systems can ‘understand’ and ‘reason.’
* The difference between intelligence and power and why Arvind isn’t so concerned by the supposed existential threats of AI.
* Why artificial intelligence appeals to broken institutions.
* How Arvind would change AI discourse.
* How technical and social experts misunderstand one another.
* What a Trump second term means for AI regulation.
* What excites Arvind about how his children will experience new technologies, and what makes him nervous.

More soon,
Charley

Hi, I’m Charley, and this is Untangled, a newsletter about our sociotechnical world, and how to change it.
* Untangled crossed the 8,000 subscriber mark this week. Woot!
* Come work with me! The initiative I lead at Data & Society is hiring for a Community Manager. Learn more here.
* Last week, I shared my conversation with award-winning AI reporter Karen Hao on OpenAI’s mythology, Meta’s secret, and Microsoft’s hypocrisy.
* I launched my new course, Sociotechnical Systems Change in Practice. The first cohort will take place on January 11 and 12, and you can sign up here. (As you’ll see, I’ve decided to offer a free 1:1 coaching session to all participants following the course.)
🚨 Untangled is 40 percent off (this is the largest discount I’ve offered, and it will end in two weeks), and I partnered with Anya Kamenetz to offer you her great newsletter The Golden Hour for free! Signing up for Untangled right now means you’ll get $140 in value for $54.
On to the show!
This week I’m sharing a conversation with Jasmine Sun and Nik Marda on the potential of public AI. We recorded the conversation before the election. It might seem like an odd conversation to pipe into your earbuds now. Yes, the world looks different than it did then. But AI should still serve our collective goals, it should be shaped by our participation, and it should be accountable to us. Right, the ‘public’ doesn’t just mean the government — it means us! As civil rights groups and policy advocates prepare to play defense over the next four years, we must also articulate an affirmative vision of the future, and work to ensure our technologies serve it, and us. Nik and Jasmine’s paper — and this discussion — offer a helpful guide to building that future.
We discuss:
* What ‘public AI’ is, and the importance of articulating an affirmative vision of the future we want to create.
* The three core attributes that animate public AI — public goods, public orientation, and public use — and what would need to change to realize its potential.
* Shifting how we collectively understand AI — what it is, what it’s not, what it can do, what it can’t.
* How our public imagination tends to conjure AI extremes — utopias where no one has to work and dystopias where AI somehow, someway, tripwires an existential event — and what a ‘public AI’ future might look like.
Okay, that’s it for now,
Charley

Hi, I’m Charley, and this is Untangled, a newsletter about our sociotechnical world, and how to change it.
* Last week, I argued that the shared reality that the U.S. has long glorified was predominantly white and male, and historically, fragmentation has proven to be a good thing.
* I launched my new course, Sociotechnical Systems Change in Practice. The first cohort will take place on January 11 and 12, and you can sign up here. (As you’ll see, I’ve decided to offer a free 1:1 coaching session to all participants following the course.)
* Untangled is 40 percent off at the moment, and I partnered with Anya Kamenetz to offer you her great newsletter The Golden Hour for free! Check out her latest on how to talk to your kids about the election. Signing up for Untangled right now means you’ll get $140 in value for $54.
This week, I’m sharing my conversation with Karen Hao, an award-winning writer covering artificial intelligence for The Atlantic. We discuss:
* Karen’s investigation into Microsoft’s hypocrisy on AI and climate change.
* How OpenAI’s mythology reminds Karen of Dune. (I can’t stop thinking about the connection after Karen made it.)
* How Meta uses shell companies to hide from community scrutiny when building new data centers.
* How AI discourse should change and what Karen is doing to train journalists on how to report on AI.
* How to shift power within tech companies. Employee organizing? Community advocacy? Reporting that rejects narratives premised on future promises and innovation for its own sake? Yes.
Reflections on the last week
I interviewed Karen on the morning of the election. I hesitated to share the episode this Sunday but ultimately decided to release it because it’s a conversation about big, structural problems, and what we can do about them. The election results affirm for me the pivot I announced a few weeks ago. Namely, we can’t solve existing problems or fix broken institutions such that they return us to the status quo. We’re (still!) not going back. We have to transform existing sociotechnical systems as we address the rot that lies beneath. We must imagine alternative futures and align our individual and collective actions to them. We have to live these futures today, and then tomorrow.
One day at a time,
Charley

Hi, it’s Charley, and this is Untangled, a newsletter about technology, people, and power.
Today, I’m sharing my conversation with Evan Ratliff, journalist and host of Shell Game, a funny and provocative new podcast about “things that are not what they seem.” Evan cloned his voice, hitched it to an AI agent, and then put it in conversation with scammers and spammers, a therapist, work colleagues, and even his friends and family. Shell Game helps listeners see a li’l farther into a future overrun with AI agents, and I wanted to speak with Evan about his experience of this future.
In our conversation, we discuss:
* The hilarity that ensues when Evan’s AI agent engages with scammers and spammers, and the quirks and limitations of these tools.
* The harrowing experience of listening to your AI agent make stuff up about you in therapy.
* How those building these tools view the problem(s) they’re solving.
* What it’s like to send your AI agent to work meetings in your place.
* The work required to maintain these tools and make their outputs useful — does it actually help you save time and be more productive??
* The lingering uncertainty these tools cultivated through their interactions with Evan’s family and friends.
If you find the conversation interesting, share it with a friend.
Okay, that’s it for now,
Charley

Hi, it’s Charley, and this is Untangled, a newsletter about technology, people, and power.
Can’t afford a subscription and value Untangled’s offerings? Let me know! You only need to reply to this email, and I’ll add you to the paid subscription list, no questions asked.
I turned 40 this week and I spent the weekend in nature, surrounded by my favorite people. While my cup is running over with friendship, love, and support, I’ll always take more 🤣. You can celebrate me and my next trip around the sun by becoming a paid subscriber and buying my first book, AI Untangled.
This month:
* I published an essay about the power of utopian thinking — how one version got us into this AI mess, and getting out will require a very different approach. (Remember, you have until August 31st to submit a vignette of your sociotechnical utopia.)
* I shared my conversation with Shannon Vallor, the Baillie Gifford Chair in the Ethics of Data and Artificial Intelligence at the Edinburgh Futures Institute (EFI) at the University of Edinburgh. Vallor and I talk about her great new book, The AI Mirror: Reclaiming Our Humanity in an Age of Machine Thinking, and how to chart a new path from the one we’re on.
This week, I’m resharing my October 2022 conversation with Brandon Silverman, co-founder and CEO of CrowdTangle, the data analytics tool once at the center of controversy inside Meta over just how transparent the company should be. Meta shut down the tool this week, and we’re all worse for it.
In the episode, we get into Brandon’s time at Meta and the fights over CrowdTangle but we spend most of our time exploring his views on transparency — its utility and limitations, its relationship to accountability, power, and trust — and how they have evolved. Along the way, we discuss:
* How Brandon initially got “red-pilled” on transparency.
* How CrowdTangle challenged the stories Facebook leadership told themselves about the platform’s impact on the world.
* How the scale of these platforms means that when it comes to solutions, “it’s tradeoffs all the way down.”
This essay pairs nicely with the second-ever essay I wrote for Untangled, “Some Unsatisfying Solutions for Facebook,” which delves into the conceptual limitations of transparency. Just as we should never stop pushing for it, we can’t mistake it for accountability.
🙏 Thank You
When I turned 39 last year, I wrote this:
“I turn 39 today, so perhaps it’s fitting that I’ve been thinking a lot about time. I want time to feel slow and expansive. I want each day to feel justified on its own terms. I want the value of each activity to lie in the doing, not in the end result. That’s what Untangled has been for me. Not always — sometimes writing is the absolute worst — but on a good day, when I sit down at the keyboard, I enjoy the process, and it feels like flow.”
I feel closer to this feeling as I turn 40. That’s partly because of you! The other part? Meditation! But the point is, your support allows me to show up to the keyboard every morning before the sun comes up, and write. It affords me glimpses of this feeling, of time slowing down, and joy in the moment. It turns out that enjoying the moment also produces results: last year, I wrote 51 issues and published a book. Thanks for being along for the ride.
That’s it for now.
Charley

Hi, it’s Charley, and this is Untangled, a newsletter about technology, people, and power.
This week I’m sharing my conversation with Shannon Vallor, the Baillie Gifford Chair in the Ethics of Data and Artificial Intelligence at the Edinburgh Futures Institute (EFI) at the University of Edinburgh. Vallor and I talk about her great new book, The AI Mirror: Reclaiming Our Humanity in an Age of Machine Thinking, and how to chart a new path from the one we’re on. We discuss:
* The metaphor of an ‘AI mirror’ — what it is, and how it helps us better understand what AI is (and isn’t!).
* What AI mirrors reveal about ourselves and our past.
* How AI mirrors distort what we see — whose voices and values they amplify, and who is left out of the picture altogether.
* How Vallor would change AI discourse.
* How we might chart a new path toward a fundamentally different future — as a sneak peek, it requires starting with outcomes and values and thinking backward.
* How we can become so much more than the limits subtly shaping our teenage selves (e.g. conceptions of what we’re good at, what we’re not, etc.) — and how that growth and evolution doesn’t have to stop as we age.
It’s not hyperbole when I say Vallor’s book is the best thing I’ve read this year. If you send me a picture holding it in one hand, and my new book in the other, I might just explode with joy.
More soon,
Charley

Last week, I analyzed a new lawsuit brought by University of Massachusetts Amherst professor Ethan Zuckerman and the Knight First Amendment Institute at Columbia University. The lawsuit would loosen Big Tech’s grip over our internet experience if successful. In this conversation, I’m joined by Louis, the creator of the tool Unfollow Everything, which is at the center of the lawsuit. Louis and I discuss:
* What it’s like to be bullied by a massive company;
* Why this lawsuit would be so consequential for consumer choice and control over our online experience;
* The tools Louis would build to democratize power online.
That’s it for this edition of Untangled.
Charley

Hello, welcome back to the podcast edition of Untangled. If someone forwarded you this link, it was probably my sister. Give it a listen — she knows what she's talking about. Then, if you're so inclined, become a subscriber.
👉 Two things before we get into it. First, you can now listen to Untangled directly on Apple Podcasts or Spotify. Second, if you haven't yet decided what you're going to get me for Christmas (I get it, I'm really hard to shop for), just forward this email to 10 friends and kindly ask that they smash the subscribe button. I mean, this gift isn't even affected by the supply chain — it's a Christmas miracle!
And now, on with the show.
This month I offered up some unsatisfying solutions for a big fat problem: Facebook. On Twitter, Harvard Law Professor Jonathan Zittrain called it "a brisk and thoughtful piece weighing different futures for social media." Who ever said Twitter wasn’t the absolute best? And Rose Jackson, Director of the Democracy & Tech Initiative at the Atlantic Council, referenced it in her thoughtful testimony to the Senate Commerce Committee. Untangled made it to Congress, y'all.
While writing the piece, I knew I wanted to speak with Daphne Keller. Daphne directs the Program on Platform Regulation at Stanford's Cyber Policy Center, lectures at Stanford Law School, and before all of that, she was an Associate General Counsel at Google. That's deep academic, legal, and private sector expertise all in a single human!
Daphne has thought deeply about the problem of amplification and the practical challenges to implementing the solution of "middleware services." In this conversation, we dive into both. Along the way, we also discuss:
* How the private sector and civil society misunderstand one another when it comes to platform governance.
* Why everyone seems to hate Section 230 and why regulating speech is so hard.
* Why regulating reach is ... just as hard.
Listen all the way to the end to learn the one thing Daphne would tell her teenage self about life.
If you like the podcast, please do all the things to make it go viral - share it, review it, and rate it.
I hope you’ve enjoyed the second monthly series of Untangled. For next month, I’ve decided to write about something we’re all not at all tired of reading about: the metaverse! 😬
More soon,
Charley
Credits:
* Track: The Perpetual Ticking of Time — Artificial.Music [Audio Library Release]
* Music provided by Audio Library Plus
* Watch: Here
* Free Download / Stream: http://alplus.io/PerpetualTickingOfTime

You came back! That warms my writerly heart. If someone forwarded you this email, definitely thank them - they just get your wonky sensibility. Then, if you’re so inclined, become a subscriber.
👉 One more thing before we get into it - if Untangled arrives in your Promotions tab, consider moving it to your Primary tab. If you do it once, our algorithmic overlords will take it from there.
And now, on with the show.
This issue of Untangled is a little different - I made a podcast, y’all 🙌. You can listen to it on Substack or wherever you get your podcasts. As you might recall, this month I wrote about decentralization and how power operates in crypto. Then I spoke with law professor Angela Walch about one interesting albeit imperfect solution: treating software developers as fiduciaries. To round out this series, I wanted to dive deep into crypto governance. Is it adding to the concentration of power or helping to democratize and diffuse it? Are there models that actually lead to more equitable outcomes? How do the economic incentives of any crypto project constrain or shape its governance?
I'm thrilled that Nathan Schneider joined me on today's episode to discuss these questions. Nathan is an Assistant Professor of Media Studies at the University of Colorado Boulder. He is the author of many books and papers, including, most recently, a paper called "Cryptoeconomics as a limitation on governance." I highly recommend it.
In our conversation, Nathan:
* Defines cryptoeconomic governance and outlines its possibilities and limitations.
* Discusses what co-ops and crypto projects have to learn from one another.
* Shares his perspective on how crypto governance can invigorate democracy.
If you like the episode, please do all the things to make it go viral - share it, review it, and rate it.
I hope you’ve enjoyed the first monthly series of Untangled. Next month, I've decided to give you a series of unsatisfying solutions for a big fat problem: Facebook. Yes, yes... I can hear you laugh-crying already. Until then 👋
Credits:
* Track: The Perpetual Ticking of Time — Artificial.Music [Audio Library Release]
* Music provided by Audio Library Plus
* Watch: Here
* Free Download / Stream: http://alplus.io/PerpetualTickingOfTime