The IT/OT Insider Podcast - Pioneers & Pathfinders

Author: David Ariens and Willem van Lammeren

© Willem van Lammeren / David Ariens

Description

How can we really digitalize our Industry? Join us as we navigate through the innovations and challenges shaping the future of manufacturing and critical infrastructure. From insightful interviews with industry leaders to deep dives into transformative technologies, this podcast is your guide to understanding the digital revolution at the heart of the physical world. We talk about IT/OT Convergence and focus on People & Culture, not on the Buzzwords. To support the transformation, we discover which Technologies (AI! Cloud! IIoT!) can enable this transition.

itotinsider.substack.com

41 Episodes

Some guests make you pause halfway through the recording and think, “Okay… this one’s going to need a second listen.”

That was the case with Dan Jeavons, president of Applied Computing, formerly VP of Computational Science and Digital Innovation at Shell, and one of the people who has quite literally been shaping how data, AI, and physics come together in industry.

From ERP Reports to Foundation Models

He began, like so many, somewhere between spreadsheets and SAP.

“The biggest value of having an integrated system is the fact that you have an integrated data layer,” he recalls. “I didn’t like the systems much, but the data was really interesting.”

That curiosity led him from analytics experiments in R and MATLAB to building Shell’s first Advanced Analytics Center of Excellence, which, as he jokes, “was neither advanced nor excellent… but we got better quickly.”

Thirteen years later, he was leading teams across AI, data science, and advanced physics modeling, and wrestling with a problem that every industrial data leader knows too well:

“You either rely on physics and trade off flexibility, or you rely on statistics and trade off explainability.”

What AI Looks Like From the Plant Floor

Dan has worked across the energy value chain, from offshore wells to refineries, and says something that surprises many:

“From a data perspective, it all looks very similar.”

Distributed control systems, process historians… “whether you’re on a platform in the North Sea or in a petrochemicals plant, the data architecture doesn’t really change,” he says.

And that’s what makes the AI opportunity so big. If every facility generates data in roughly the same way, then algorithms can be adapted and scaled, not rebuilt from scratch each time.

Why IT/OT Convergence Still Hasn’t Happened

At one point, we asked the question: Has IT/OT convergence really happened?

Dan didn’t hesitate:

“No. We’re only scratching the surface.”

He describes today’s operations as “a DCS at the heart of the operation, surrounded by siloed engineering processes (reliability, maintenance, safety), each with their own tool, using a fraction of the data.”

Adding AI layers on top of that, he argues, is helpful but incomplete:

“We’ve added a layer of intelligence on top of existing systems. But it hasn’t changed the work process yet.”

True convergence, he says, will come when AI doesn’t just analyze the work but redefines it.

The Real Meaning of “Digital Twin”

Few topics create more buzz (or confusion) than digital twins. Dan gives one of the clearest definitions we’ve heard:

“A true digital twin must do three things: represent the physical world, be interrogable in real time, and run simulations that explain why and what next.”

That’s a high bar…

“The technology exists,” he says. “We just haven’t stitched it together yet.”

Change Management: The Hardest Part

Dan’s third “impossible problem” isn’t technical; it’s human.

“These facilities are extremely risky. They’ve run safely for 40 years. So when you say, ‘Let’s change everything,’ it’s a hard sell.”

He lays out the classic resistance:

* It works, don’t touch it.
* We can’t risk downtime.
* We’re here to deliver return on capital, not to experiment.

And yet, as he points out:

“Even with the way we run things today, we still have reliability problems, we still have safety exposure, and we’re losing expertise fast.”

His conclusion is blunt:

“Someone is going to figure this out, and when they do, they’ll be 50% more efficient. If you’re not on that train when it happens… good luck.”

Rethinking the Cloud Debate

When the topic of cloud reliability came up (AWS outages, anyone?), Dan didn’t dodge.

“The idea that you’re safe because you’re air-gapped is a fallacy,” he said flatly. “Most OT environments are already virtualized, effectively private clouds. The question isn’t if you’re exposed, it’s how well you manage it.”

The challenge, he says, isn’t cyberthreats; it’s change management in the cloud era.

“Continuous deployment doesn’t work in operations. We need cloud architectures that respect industrial change control, and OT vendors who step up to modern security standards.”

From Use Cases to Foundation Models

Dan’s view of AI’s future is clear: we’re moving from narrow, use-case-specific algorithms to general-purpose foundation models that can reason across disciplines.

“Before 2023, companies built algorithms for individual problems: corrosion, valves, compressors. Now, the next generation of models will handle all of them because they understand physics, language, and time series together.”

He tells the story of Sam Tukra, his former colleague (now Applied Computing’s co-founder and Chief AI Officer alongside Callum Adamson), who figured out how to make those three domains “talk” to each other.

“He built an agentic system that cross-validated physics, language, and time series. I was equal parts proud, frustrated, and amazed. Suddenly, you realize: this is it.”

The result is Orbital, their platform that blends these layers: a system that can predict, explain, and reason across disciplines, from reliability to safety to economics.

Looking Ahead

Dan calls this convergence of physics and AI an “inflection point for industry.” He’s convinced that in the next decade, the companies that embrace it will operate differently, not because AI tells them what to do, but because it changes how they work.

So that means we need to plan another podcast a year or so from now ;)

Thanks for listening!

Stay Tuned for More!

🚀 Join the ITOT.Academy

Subscribe to our podcast and blog to stay updated on the latest trends in Industrial Data, AI, and IT/OT convergence.

🚀 See you in the next episode!

YouTube: https://www.youtube.com/@TheITOTInsider

Disclaimer: The views and opinions expressed in this interview are those of the interviewee and do not necessarily reflect the official policy or position of The IT/OT Insider. This content is provided for informational purposes only and should not be seen as an endorsement by The IT/OT Insider of any products, services, or strategies discussed. We encourage our readers and listeners to consider the information presented and make their own informed decisions.

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit itotinsider.substack.com

Today’s guest has lived what most companies are still figuring out: how to turn fragmented systems, manual Excel work, and well-intended “shadow IT” into a coherent Industrial DataOps strategy that actually delivers value.

In this episode of the IT/OT Insider Podcast, we sat down with Rija Rakotoarisoa, Group IT Operations & Industry 4.0 Lead at Poclain Hydraulics. Poclain is an independent French group operating internationally that designs, manufactures and sells hydrostatic and electrohydraulic transmissions (motors, pumps, valves and systems) for off-road and mobile machines, and it is one of the global leaders in hydrostatic transmissions.

If you’ve ever found yourself trying to bridge IT and OT while juggling standardization, culture change, and budget cuts… you’ll feel very at home in Rija’s story.

From Developer to Industry 4.0 Leader

Rija started his career firmly on the IT side: a master’s in computer science, developer turned IT manager, working in a plant where his job was to keep systems running and people connected. Then came the shift.

“After five or six years, I felt like I had seen everything. I wanted to do something more than pure IT, something that had a direct impact on the business.”

So he went back to school, this time for a master’s in finance. Not because he loved accounting, but because it was his way to “remove the geek tag.”

“If you wanted to have more impact, you had to speak the business language.”

That change paid off. Rija became both IT and finance manager at one of the company’s plants and learned firsthand what happens when you put technology in service of the business. He used automation to help teams understand their own costs, improve efficiency, and cut the manual data entry that was eating up hours every day.

Lessons from Good and Bad Projects

In his later roles, including a global Industry 4.0 function, Rija saw dozens of digital projects across multiple plants. Some were brilliant, others not so much.

“A bad example is when a company rolls out something top-down. They say, ‘This is the strategy, you must implement it,’ without asking about the real problems at the plant. It takes time, money, and in the end, nobody uses it.”

Sound familiar?

The good examples, he says, start from the other direction: from real operational pain points.

“When you address the real problem in manufacturing - something that changes the day-to-day of the operational team - then they support you, they use it, and they apply it every day.”

It sounds simple, but as he adds, “it’s not.” It takes change management, communication, and people inside each plant who carry the message and help build local momentum.

Starting from a Digital Greenfield

When Rija joined Poclain Hydraulics about six years ago, it was, as he puts it, “a digital greenfield.” The company had strong IT foundations (infrastructure, networks, ERP), but no consistent support for manufacturing systems yet.

“There were many IT/OT projects managed only by operational people. They cared about the end result, but not the implications in terms of IT constraints. In the end, you have a big nightmare.”

In other words: well-intentioned local initiatives, zero standardization. The kind of environment where every plant has its own version of the truth.

So where do you start when the elephant is that big?

“We started with the most painful issue: the end-of-line quality control system. Each plant had its own version. We moved from local executable applications to a web-based, centralized one.”

Then came work instructions, and so on. It was a classic “bite-by-bite” transformation.

How COVID Changed the Game

Like many others, Poclain had big plans for a global MES rollout. And then COVID hit. Budgets froze, priorities shifted, and suddenly the grand plan was off the table.

“We had to rethink everything. How can we do more with less? How can we use what we already have?”

What followed was a shift from “big system thinking” to a more agile, best-of-breed approach.

“I always say it’s not a happy event for everyone, but I thank COVID-19,” he laughs. “It forced us to be creative.”

That creativity led to the Data Hub project: a pragmatic approach to connecting existing systems, automating data collection, and building live dashboards that operators could actually use.

Building a DataOps Mindset

The guiding principle was simple: make data useful, make it live, and make it easy for non-IT users.

“I didn’t want my team to be the bottleneck. The system should be usable by non-IT people.”

That requirement drove their vendor evaluation, which eventually led to selecting Litmus.io as their main Data Hub platform.

“Since 2021, we’ve been implementing Litmus as our main data hub. Step by step, we break the silos and build on it.”

But technology was only one part of the story. The harder part was governance and culture.

“It took a lot of time to explain to top management that the Data Hub is just an enabler. It’s not magic. You need something meaningful for the people at the plants on top of it.”

Standardization Without Killing Flexibility

Today, Poclain’s model combines global consistency with local agility.

“We master the data model centrally and duplicate it for each site. Plants can adapt the templates locally by defining their equipment and their mappings, but the core remains the same.”

The result? Faster rollouts, cleaner data, and dashboards that update automatically without anyone touching Excel.

Rija’s model shows that digital transformation doesn’t have to mean disruption, just the right balance between structure and freedom, one data point at a time.

Interested in knowing more about Litmus? A few months ago we published our 5 Step Playbook for a Painless DataOps Rollout. And have you already listened to our Industrial DataOps podcast with John Younes?
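The "central core, local mappings" model Rija describes in the Poclain episode above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class names, signal names, and tag addresses are hypothetical and are not taken from Poclain's or Litmus's actual configuration. The idea shown is that the core template is immutable and shared, while each site supplies its own equipment list and tag mappings, which are validated against the core.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CoreTemplate:
    """Centrally mastered part of the data model (hypothetical fields)."""
    name: str
    version: str
    signals: tuple[str, ...]  # signal names every site must provide


@dataclass
class SiteModel:
    """Per-site copy: same core template, locally defined equipment."""
    site: str
    template: CoreTemplate
    # equipment name -> {core signal name -> local tag address}
    equipment: dict[str, dict[str, str]] = field(default_factory=dict)

    def add_equipment(self, name: str, mapping: dict[str, str]) -> None:
        """Register local equipment; the mapping must cover the core exactly."""
        missing = set(self.template.signals) - set(mapping)
        extra = set(mapping) - set(self.template.signals)
        if missing or extra:
            raise ValueError(
                f"{name}: missing={sorted(missing)} extra={sorted(extra)}"
            )
        self.equipment[name] = dict(mapping)


# Hypothetical core template for an end-of-line test bench.
core = CoreTemplate(
    name="end_of_line_test",
    version="1.0",
    signals=("pressure_bar", "flow_l_min", "test_result"),
)

# One site adapts the template by declaring its own equipment and tags.
site_a = SiteModel(site="site_a", template=core)
site_a.add_equipment(
    "bench_01",
    {
        "pressure_bar": "PLC1.DB10.PRESS",
        "flow_l_min": "PLC1.DB10.FLOW",
        "test_result": "PLC1.DB10.RESULT",
    },
)
```

Because the template is frozen and shared, a dashboard built against the core signal names works for every site, while each plant stays free to point those names at whatever local tags it has.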

📣 A quick reminder before we start: Our next ITOT.Academy kicks off on January 23, and our early bird offer is still available. Do you want to join our third group and learn how to bridge IT and OT? There is no better time than now! 👉 Check the curriculum on ITOT.Academy or in this previous article.

When Anton Melander joined Northvolt in 2018, the company had around 100 employees and zero factories. The goal? Build Europe’s first large-scale lithium-ion battery production from scratch, and do it fast.

“When I joined, there was nothing. I joined the digitalization department, which at the time was me and three others,” Anton told us. “We knew making batteries was fast, really fast, and with tight tolerances. Even microns of misalignment could lead to short circuits. So we wanted to be as data-driven as possible when scaling production.”

From that tiny team, Northvolt’s digitalization function grew to over 140 people, while the company itself ballooned to 5,000 employees. It’s a rare story (and one that ended too soon, after Northvolt’s bankruptcy earlier this year). But in those years, Anton learned what it truly means to build an OT organization from nothing, and why many of those lessons are now shaping his next chapter as a startup founder.

Greenfield Decisions

Northvolt’s early advantage was also its biggest challenge: a completely blank slate.

“We had to figure out what to build and what to buy,” Anton said. “Off-the-shelf software meant long requirement lists, consultants, and change orders. Building in-house gave us flexibility, but it also meant taking full ownership.”

At the time, OPC UA was only beginning to gain traction. Northvolt pushed its equipment suppliers to provide compliant servers and built its own IoT platform to handle the data. “We provisioned over a thousand gateways,” Anton recalled. “Just setting that up was a project in itself.”

That internal platform became the backbone of production and the foundation of what they later called North Cloud, an internal MES connecting operations, quality, and material flow. “We built a lot ourselves,” Anton said, “but we still leveraged AWS for the cloud, and bought things like ERP and PLM. It was a mix, but a deliberate one.”

From Projects to Products

As production ramped up, the company’s digital organization had to evolve.

“In the beginning, we were completely project-based,” Anton explained. “But as production started, we realized that technology is one thing, and it’s only useful if it actually helps people do their job. So we moved from a project-oriented way of working into a product-oriented one.”

That shift (which many IT/OT teams wrestle with) required a mindset change. “Everyone wants their feature,” he said. “The backlog keeps growing forever. But not everything that sounds important actually moves the needle. You need to tie initiatives to measurable results: quality, throughput, yield.”

He laughs looking back. “Sometimes senior stakeholders would say, ‘This is the most important thing,’ and you had to tell them: ‘It’s not going to increase throughput or quality.’ That’s the hard part.”

His other big lesson? Master data. “You can’t calculate OEE if you don’t know the ideal cycle time. You can’t calculate downtime if you don’t know the planned uptime. It’s easy to draw perfect architecture diagrams, it’s harder to make them work in practice.”

What Comes After Northvolt

Anton left Northvolt in 2023, before its final collapse, but the experience left him with two realizations: first, that building your own tech stack is both empowering and costly, and second, that most manufacturers will never have that luxury.

“More than 90 percent of manufacturing companies have fewer than 200 employees,” he said. “They can’t hire 140 people to build their own MES or IoT platform. And yet, they still need data.”

That insight became the starting point for his new company, Ronja, which focuses on helping small and mid-sized manufacturers make sense of the data they already have.

“We’ve spoken to more than 300 manufacturers since we started,” Anton told us. “Almost all of them say the same thing: they have lots of data, but they’re not using it. The problem isn’t collecting data, it’s getting value out of it.”

Ronja’s approach isn’t to replace systems, but to sit on top of what exists, making data accessible to non-technical users. “In most factories,” he said, “data lives in Excel, in emails, in historians. We help people connect it, visualize it, and analyze it faster, without waiting for a two-year MES rollout that eats the entire budget.”

Closing Thoughts

Northvolt’s story is one of ambition and hard-earned lessons: a company that built everything from scratch, scaled fast, and still couldn’t outrun industrial reality. But its alumni, like Anton, carry those lessons forward.

His takeaway applies to anyone working on digital transformation, from startups to global enterprises:

“The closer you are to the shopfloor, the more unique every factory becomes. You can’t standardize everything, but you can make it easier to understand, to learn, and to improve.”

And that, ultimately, is what industrial digitalization has always been about.
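Anton's point about master data in the Northvolt episode above is easy to see once the standard OEE formula is written down: availability needs the planned production time, and performance needs the ideal cycle time. The sketch below uses the common definition OEE = availability × performance × quality; the function name, field names, and numbers are illustrative assumptions, not Northvolt's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MasterData:
    """Values that must be maintained as master data (illustrative)."""
    ideal_cycle_time_s: float         # best-case seconds per unit
    planned_production_time_s: float  # scheduled run time for the period


def oee(master: MasterData, downtime_s: float,
        total_count: int, good_count: int) -> dict[str, float]:
    """Compute OEE and its three factors for one period."""
    planned = master.planned_production_time_s
    run_time = planned - downtime_s
    if planned <= 0 or run_time <= 0 or total_count <= 0:
        raise ValueError("planned time, run time and total count must be > 0")

    availability = run_time / planned                                     # needs planned time
    performance = master.ideal_cycle_time_s * total_count / run_time      # needs ideal cycle time
    quality = good_count / total_count
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


# Example shift: 8 h planned, 1 h of downtime, 12 s ideal cycle time.
shift = MasterData(ideal_cycle_time_s=12.0, planned_production_time_s=8 * 3600)
result = oee(shift, downtime_s=3600, total_count=1890, good_count=1701)
print({name: round(value, 3) for name, value in result.items()})
```

Without the two `MasterData` fields, only the quality factor can be computed from counters alone, which is exactly why missing master data blocks an OEE rollout.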

In our earlier articles, we laid the groundwork for Industrial AI by breaking down the difference between classic AI, generative AI, and agentic AI. But frameworks alone don’t tell the full story. How do these ideas play out when you’re inside a real industrial company, tasked with building teams, getting budget, and making data actually deliver value?

For that perspective, we sat down with Nathalie Rigouts, who until recently headed data and analytics at Borealis and is now Head of Business Applications Data and AI at Umicore. Nathalie brings a refreshing, pragmatic voice: someone who moved from finance into IT, and who knows first-hand the reality of building data capabilities in industry.

From Finance to Data & AI

Nathalie didn’t start in IT. Her background is in finance, where every month she wrestled with massive spreadsheets just to get accurate actuals. That pain, she recalls, was the start of her data journey:

“Every month again, I was struggling with getting the correct actuals. And then of course, you have to make your forecast.”

From implementing a financial planning tool, to establishing BI at Borealis, to eventually leading data and analytics, her path shows how close the link is between business need and IT capability. And she’s clear about the lesson: it’s not about technology for its own sake.

“It’s not about implementing Microsoft Copilot. You’re not going to gain any sustainable advantage there. But if you can have a deep understanding of the processes in your company, and where data-driven solutions can help, that’s when you start to create value.”

Start Small, Sell the Success

One of the recurring themes in Nathalie’s story is pragmatism. At Borealis, the team started in 2016 with literally one data scientist and a laptop. “Python notebooks on a laptop, and we started.”

The key, she says, is to find enthusiastic allies and solve problems that matter. And once you do, don’t stay modest: market the success internally.

“We often forget to sell our success. I would go everywhere and talk about small things we did. And that’s how you gain support for the next steps.”

From that first laptop, the team grew, but only because each step came with visible, tangible wins that created pull from the business.

Use Cases That Matter

So what are typical use cases in manufacturing? Nathalie sees three common ones:

* Predictive maintenance: “If equipment fails often, anomaly detection and predictive maintenance are obvious first steps. But it’s not an easy nut to crack. Often, you don’t have enough failures to feed a model.”
* Quality control with computer vision: mainstream, but effective. With enough annotated pictures, good vs bad quality can be classified quickly. The catch? Data quality.
* Logistics optimization: untangling shipping routes and optimizing delivery to customers with AI-based optimization models.

These are concrete, valuable problems, and they also highlight the role of data governance. As she recalls with a smile:

“We had beautifully annotated data, but all in Finnish. That’s when you realize governance is not optional.”

GenAI: Efficiency or Attractiveness?

When it comes to Generative AI, Nathalie is cautious. The business case is not always straightforward:

“I tried to make the case for Microsoft Copilot. At €30 per user, that’s not small. Does it reduce workforce? No. At best, people spend more time on value-added activities. But what does that bring to the bottom line? Hard to say.”

Yet she also sees why companies can’t ignore it.

“Companies have to invest in it because it will determine their attractiveness as an employer. New graduates take these tools for granted. If you don’t offer them, you won’t attract talent.”

She distinguishes between two levels: workplace efficiency (nice, but hard to quantify) and domain-specific models trained on your own IP. The latter, she believes, is where the real value lies, for example in pharma, where LLMs trained on internal knowledge can speed up R&D. “That’s when AI becomes a true digital co-worker.”

Governance, Change, and Legislation

On governance, Nathalie doesn’t mince words:

“It’s always the people, the processes, and the tools. The main component around which all of them center is the value case.”

Her advice: don’t let your solutions depend on a single enthusiast, and don’t leave an escape hatch back to the old way of working. Change management is part of the job.

And on legislation, she takes a positive view:

“It’s an opportunity. It forces us to think about awareness, ethics, governance, documentation, monitoring. All things that make sense. Yes, it’s work, but it helps you get budget and build maturity.”

Closing Thoughts

What we loved about Nathalie’s perspective is how grounded it is, whether the topic is predictive maintenance, quality monitoring, or navigating the GenAI hype. No buzzwords, no silver bullets: just the reality of building teams, solving problems, and learning along the way.

Her closing reminder:

“Keep it simple, be pragmatic. We built beautiful solutions with just scripting business rules. The business was happy, and nobody needed a fancy machine learning model.”

When we talk about industrial connectivity, two names always come up: OPC UA and MQTT. They’re often mentioned in the same breath, as if they’re competitors. But as Kudzai Manditereza reminded us in our conversation, that’s a bit of a misconception. These protocols solve different problems, and understanding their history helps explain why they’re both so important today.

OPC UA: From Printer Drivers to Industrial Standards

The story of OPC goes back to the 1990s. At the time, every automation vendor shipped their own drivers, making integration a nightmare. The OPC Foundation stepped in to create a standardized interface, inspired, of all things, by Microsoft’s printer driver model. Just as Windows could talk to any printer through a standard interface, OPC offered a way for SCADA systems and historians to talk to PLCs without custom drivers.

The first generation, known as OPC Classic (DA/HDA), was Windows-only and limited in scope. It solved the immediate problem but couldn’t handle the growing complexity of industrial data. Enter OPC UA (Unified Architecture): cross-platform, internet-capable, and built with powerful information modeling.

This is where OPC really shines. As Kudzai put it:

“The shop floor is full of objects: pumps, compressors, machines. OPC UA lets you model those objects, not just pass around raw tags.”

That means a machine builder can ship a unit with a pre-built OPC UA information model, ready for plug-and-play integration.

The OPC Foundation even created companion specifications for different industries, so a compressor in Germany “speaks” the same OPC language as a compressor in the US. No more reinventing interfaces for every project.

MQTT: Born in the Oil Fields, Adopted by the Internet

If OPC UA came from printer drivers, MQTT came from oil pipelines (well… actually from the even older pub-sub newsgroups back when the internet was still something really special).

In 1999, Andy Stanford-Clark of IBM and Arlen Nipper developed MQTT to monitor pipelines over unreliable, low-bandwidth satellite links. The key innovation was the publish/subscribe model: instead of clients constantly polling servers for updates, devices publish data to a central broker, and anyone interested can subscribe.

This lightweight, bandwidth-efficient design made MQTT perfect for remote monitoring. But it didn’t stay confined to industry. In fact, one of its biggest early adopters was Facebook, which used MQTT in its Messenger platform. By the 2010s, MQTT had made its way back to industry, now riding the wave of IIoT and event-driven architectures.

As Kudzai explained:

“MQTT doesn’t tell you how to model your data. It’s a transport protocol. But its hierarchical topic structure maps naturally to concepts like the Unified Namespace (UNS).”

Think of it like a Google Drive folder structure: data is organized into topics, and anyone can subscribe to the parts they care about.

OPC UA vs MQTT: Different Tools, Different Jobs

So should you pick OPC UA or MQTT? The answer is: both, but for different layers.

* OPC UA excels close to the machines (Levels 0–2 in the Purdue Model). It provides a rich, standardized way to model and expose machine data. Perfect for SCADA, DCS, and local control.
* MQTT shines at higher levels (L3/DMZ and above). It’s ideal for integrating thousands of devices into enterprise systems, feeding data lakes, or enabling event-driven architectures. And of course also for IIoT devices spread around the world!

As Kudzai put it:

“You’ll never control a pump with MQTT. But if you want to share events across your enterprise (machine status, recipes, quality data, …), MQTT is a great fit.”

And that’s an important distinction. OPC UA is about structured access to objects. MQTT is about lightweight distribution of events. They don’t replace each other; they complement each other.

Closing Thoughts

Industrial connectivity isn’t about choosing one protocol over the other. It’s about using the right tool for the job. OPC UA and MQTT are part of the same toolbox, and when used together, they unlock scalable, reusable, event-driven architectures that finally let IT and OT speak the same language.

As Kudzai summed it up:

“The ability to reuse data is a huge factor. Once you stop thinking point-to-point and start thinking platform, that’s when scale happens.”

… And we couldn’t agree more!

Also, take a look at what HiveMQ has to offer.
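To make the "folder structure" analogy from the OPC UA and MQTT episode above concrete, here is a minimal sketch of how MQTT topic filters select parts of a hierarchical namespace. It reimplements the standard wildcard rules in plain Python for illustration only (`+` matches exactly one level, `#` matches the remaining levels and must come last); a real broker does this for you, and the special handling of topics starting with `$` is left out. The topic names follow a hypothetical enterprise/site/line/asset layout and are not taken from the episode.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic filter matches a concrete topic name."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")

    for index, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: valid only as the last level; it matches
            # the parent level itself and everything below it.
            return index == len(filter_levels) - 1
        if index >= len(topic_levels):
            return False  # filter is deeper than the topic
        if level != "+" and level != topic_levels[index]:
            return False  # literal level differs ("+" accepts any one level)
    # No "#" seen: the depths must be identical.
    return len(filter_levels) == len(topic_levels)


# Hypothetical UNS-style topics: enterprise/site/line/asset/signal
published = [
    "acme/plant1/line2/pump7/status",
    "acme/plant1/line2/pump7/flow",
    "acme/plant2/line1/press3/status",
]

# Everything from plant1, regardless of depth.
plant1 = [t for t in published if topic_matches("acme/plant1/#", t)]

# Only the status signal of every asset on every line of every site.
all_status = [t for t in published if topic_matches("acme/+/+/+/status", t)]
```

A consumer such as a data lake loader might subscribe with `acme/#`, while a line dashboard subscribes only to its own branch, which is how one published event can be reused by many consumers without point-to-point links.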

When Zev Arnold joined us on the podcast, he brought with him the kind of energy and clarity you rarely get from someone working at the intersection of industry, data, and transformation. As a principal director at Accenture’s Industry X, Zev has spent years working with oil & gas, utilities, mining, and life sciences companies, not just helping them digitize, but helping them make that digitization mean something operationally. “I help engineers and operators use data to improve the way they work,” he said, and that theme stayed with us throughout the conversation.
Context is King (But only if the right person owns it)
One of the most powerful insights from Zev is his perspective on data contextualization.
He tells the story of a compressor engineer who wanted to track starts instead of doing maintenance on a fixed schedule. “Some compressors had start-stop tags, some had rotational speed. The structure of the data needed to support the engineer’s thinking, not the other way around.” That’s when Zev realized: contextualization only works when it’s driven by the user, not imposed by someone else. “Give that hierarchy to the compressor engineer and say, this is yours. Own it.”
In Zev’s model, self-service is the enabler. If engineers and operators can build their own analytics without writing Python or waiting for a dev team, that’s when transformation becomes real.
Platforms that Empower, Not Obstruct
Zev is quick to point out where industrial transformation often stumbles: platforms that weren’t built to scale use cases easily. “We had a platform that worked great for one use case. But every new use case required us to rebuild everything again.”
“You want to catch that $50K event before it becomes an environmental incident. The person who understands the problem best is the engineer. We need to give them the tools to act.”
The bigger picture? Zev sees a future where operators train and maintain AI systems, even simple expert systems that alert you when a tank overflows. That’s where AI becomes more than a buzzword and actually enters the DNA of industrial work.
People, AI, and the Future of Work
Zev introduces a compelling framing: people-to-people, people-to-AI, and AI-to-AI. That’s the triangle of future industrial collaboration, a model he borrowed from Paul Daugherty’s book Human + Machine.
In this framing, AI isn’t replacing people. Instead, AI becomes part of their toolbox. “Even simple AI, like monitoring sump tank levels, needs someone to train and maintain it,” he says. That job doesn’t belong in a remote digital transformation office. It belongs on the floor, with the engineer who knows the equipment and the impact.
We just need to catch the real value
Is all this really worth it? Zev answers with an emphatic yes. And he points to a hard number: EFORd (Equivalent Forced Outage Rate demand), the forced outage rate in U.S. power generation. This metric is used to assess the reliability of thermal power generating units: it measures the probability that a unit is unavailable due to forced outages or deratings when there is demand for power. In other words, it indicates how often a generator is unable to produce power when it's needed. The EFORd rate in the US averages 7.5% (a theoretical value of 0 would mean that there are no unplanned outages). If we could close that gap with better decisions, the industry could unlock over $100 billion in value.
“And that’s just one industry,” Zev adds. “The ripple effects could be societal: better data centers, climate impact, lower energy bills, even job growth.”
Final Thoughts
From the subtle distinctions between manufacturing types to the very real, tangible impact of good data and AI done right, we touched on it all in this podcast. Whether it’s process or discrete, the message is clear: stop treating transformation like a side project. Get the right tools into the right hands and let people do what they do best.
As Zev put it, “On my worst days, I wonder, is this data really valuable? But 15 years in, I know it is. We just have to use it right.”
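The compressor story above (some units expose a start-stop tag, others only a rotational speed) can be sketched as two small functions that both produce the same thing the engineer cares about: a start count. This is an illustrative sketch under stated assumptions, not the approach used in the project Zev describes. The function names, the sample format (evenly ordered readings in a list), and the 100 rpm "running" threshold are hypothetical.

```python
from typing import Sequence


def count_starts_from_run_flag(running: Sequence[bool]) -> int:
    """Count stopped-to-running transitions in a series of run-flag samples.

    If the first sample is already True, it is not counted as a start,
    because the transition happened before the data began.
    """
    starts = 0
    previous = running[0] if running else False
    for current in running[1:]:
        if current and not previous:
            starts += 1
        previous = current
    return starts


def count_starts_from_speed(speed_rpm: Sequence[float],
                            running_above_rpm: float = 100.0) -> int:
    """Derive a run flag from rotational speed, then count starts.

    The threshold is an assumed value; a real signal that hovers near it
    would need hysteresis or debouncing to avoid double counting.
    """
    run_flags = [speed > running_above_rpm for speed in speed_rpm]
    return count_starts_from_run_flag(run_flags)


if __name__ == "__main__":
    # Compressor A exposes a start-stop tag, compressor B only a speed tag.
    tag_based = count_starts_from_run_flag([False, True, True, False, True])
    speed_based = count_starts_from_speed([0, 50, 900, 1500, 20, 0, 1200])
    print(tag_based, speed_based)
```

Whichever raw tag a compressor happens to have, the engineer sees one consistent "starts" value, which is the sense in which the data structure follows the engineer's thinking.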

📣 Have you already considered joining our ITOT.Academy? We tell stories. We focus on concepts, not tools. On frameworks, not features.
👉 Check out our podcast to learn more!
If you’re still thinking of OT cybersecurity as “just” another IT checklist item, it’s time to rethink the whole game. In this episode of the IT/OT Insider podcast, David is joined by Danielle Jablanski (cybersecurity strategist, OT advocate, and all-around force in the industrial cyber world) for a grounded conversation on what cybersecurity in industrial systems really means, why it’s not a product or checklist, and how to approach it without getting lost in the buzzwords.
Danielle brings not only deep knowledge but also practical field insight from her time at CISA, Nozomi Networks, and now STV.
What is OT Cybersecurity Anyway?
OT (Operational Technology) isn’t just ICS (Industrial Control Systems) anymore. “OT now represents a broad set of technologies that covers process automation, instrumentation and field devices, cyber-physical operations, and industrial control systems,” Danielle explains.
From water utilities and power grids to baggage claim systems and digital parking meters, these systems form the backbone of our critical infrastructure. And unlike IT systems, the primary concern isn’t just data breaches; it’s real-world, physical consequences.
“Segmentation is King”
Danielle is clear: “For the last five or six years, I've always said segmentation is king. I still think it's paramount.” But that doesn’t mean it’s easy or one-size-fits-all.
The problem? Too many organizations buy visibility tools but neglect the basics like firewall rules or sound architecture. As Danielle notes, “You can't do any type of root cause analysis if you're not incorporating your entire operation into your purview.”
Her takeaway: start with effects-based thinking. “Focus on the effect of something rather than the means.”
By the way, did you know our very first post on this blog was about the Purdue model?
No More Choose-Your-Own-Adventure Security
Danielle challenges a common trap: jumping into cybersecurity with no strategy. “There’s this leap to: I want a pen test, I want incident response, I want this, this, this. But are people even ready for a 150-page pen test that tells you everything you might want to fix over the next 10 years?”
Instead, she advocates for needs assessments, crown jewel analysis, and understanding fault tolerance. She says, “You need to understand what is impossible, what is not plausible… you can't do that without really getting to root cause analysis.”
The Good, the Bad, and the Pointless Deliverables
When asked about good versus bad deliverables, Danielle doesn’t hold back: “A red flag? People rush to procure tools.” In contrast, green flags are often simple: “What forensic capacity do you have? What logs are you keeping? What’s your retention policy?”
And watch out for this one: “Our integrator is responsible for cybersecurity.” That’s a red flag unless you’ve built a mechanism to test and verify that assumption.
Starting a Career in OT Security
For anyone curious about stepping into the field, Danielle’s advice is encouraging and honest. “You can take any interested person and train them based on their interest and their aptitude.” She recommends free online resources like learn.automationcommunity.com and Grady Hillhouse’s Engineering in Plain Sight. Her bottom line? “Do whatever you're interested in and do it as much as your resources allow for.”
Why It Matters
Throughout the conversation, Danielle keeps it grounded: OT cybersecurity isn’t about buying the latest tool or chasing the latest threat report. It’s about resilience, design, human capacity, and real-world impact. “All the tools in the world are not going to help you if you haven’t built the scaffolding.”
Or, to put it more bluntly: this isn’t a choose-your-own-adventure. It’s about picking a strategy and sticking to it.
Let us know what you thought of this episode, and if you want more cyber content, get in touch. As we promised during the episode, this topic is too important and we haven’t covered OT cybersecurity enough, so we’ll be launching a full cybersecurity series later this year.
Extra Resources
* Find Danielle on LinkedIn: https://www.linkedin.com/in/daniellejjablanski/
* Free learning: learn.automationcommunity.com
* Grady Hillhouse’s book: Engineering in Plain Sight
* Copenhagen Industrial Cybersecurity Event: https://insightevents.dk/isc-cph/
* A visual summary of Danielle’s talk at SANS: https://www.sans.org/blog/a-visual-summary-of-sans-ics-summit-2023/

In this episode of the IT/OT Insider Podcast, we welcome someone who doesn’t come from cloud platforms, data infrastructure, or connectivity layers. Instead, he brings something equally vital: operational wisdom.
Raf Swinnen has spent his career inside factories. From Procter & Gamble to Kellogg's, and later Danone, Raf worked at the intersection of operations and transformation, guiding teams through continuous improvement and later, digital initiatives.
What makes his perspective especially valuable? It’s grounded in Lean thinking. Not as a buzzword, but as a real discipline. One that requires a sharp understanding of processes, a respect for people on the floor, and a strong filter for what actually adds value.
From Line Leader to Digital Change Agent
Raf didn’t start in digital. He started on the floor: managing lines, people, safety, and performance. That experience shaped how he sees digital transformation today: as something that should support operations, not get in the way of them.
At Danone, he led digital initiatives at the Rotselaar site (Belgium). The job wasn’t to implement more dashboards. It was to help teams use data to drive better decisions, without losing sight of the fundamentals.
“Tech to the Back”: What Digital Should Learn from Lean
One of the most powerful takeaways from this episode is Raf’s principle of “Tech to the back.”
“Digital solutions should not be front and center. People and processes should be. Tech should follow.”
This is a strong antidote to the over-designed, solution-first approaches that often flood the industrial space. According to Raf, the biggest risk in digital projects isn’t the technology; it’s losing the problem along the way.
The Three C’s: Clarity, Consistency, and Coherence
As part of his work with leadership teams, Raf often introduces what he calls the 3 C’s:
* Clarity – Where are we going, and why?
* Consistency – Are we reinforcing the same messages and systems?
* Coherence – Do our tools, apps, and data work together?
These are not slogans; they are essential behaviors for any transformation to stick. They also align closely with how we designed the ITOT.Academy, where cross-role learning and shared frameworks are front and center.
One of Raf’s biggest contributions came through how he structured teams. In a newly created role as Digital Program Manager, he pulled in both IT and OT voices and even shifted reporting lines to foster true collaboration.
He didn’t look for tech wizards. He looked for people with enthusiasm. People who wanted to make a difference. These became his digital ambassadors, key voices from every shift, every team.
“When the night shift speaks up, you listen. They see the edge cases nobody else does.”
Case Examples: Real Change Starts Small
Raf shared stories from his time at Danone, Kellogg’s, and P&G, where transformation didn’t come from big declarations, but from small, disciplined steps.
At one plant, it was about helping teams make better use of their shift handovers.
At another, it meant cleaning up data before launching another round of training.
At Danone, the challenge was scaling good ideas without flattening local ownership.
“Digital without context is noise. The real challenge is creating relevance at the point of use.”
Digital with Discipline
Raf’s story is a reminder that digital transformation doesn’t start with technology. It starts with understanding the process, listening to the people who run it, and designing with clarity and purpose. Whether it's Lean principles, cultural alignment, or simply asking better questions, his approach keeps the focus where it matters: on solving real problems in practical ways.
In a time when industrial tech is advancing fast and buzzwords multiply by the day, it’s refreshing to hear someone say: let’s not forget why we’re doing this in the first place.
If you’re working in digital, operations, or somewhere in between, this episode is a pause-and-reflect moment.
And maybe also a nudge: to push tech to the back, and put people and purpose out front.

📣 Quick note before we dive into all things open source: In our last episode, we announced the launch of the ITOT.Academy: a live online learning experience for professionals navigating the complex world of IT/OT collaboration. Our early bird seats are filling up fast. If you’re serious about gaining practical skills (not just theory), now’s the time to secure your spot. Don’t wait too long: the first cohorts start on August 29 and September 5 (each cohort consists of six 2-hour sessions and you receive all recordings).
👉 Full training program and registration via ITOT.Academy
In this episode of the IT/OT Insider Podcast, we sit down with Alexander Krüger, co-founder and CEO of United Manufacturing Hub (UMH), to talk about something that’s both old and revolutionary in the industrial world: open source software.
This isn’t about hobby projects or side experiments. It’s about why open source is playing an increasingly important role in how factories move data, scale operations, and reduce vendor lock-in. Alexander brings both a technical and business perspective and shares what happens when a mechanical engineer dives deep into the world of cloud-native data infrastructure.
Not all Open Source is created equal
Most industrial companies still equate reliability with paying a vendor and signing a service-level agreement. But Alexander challenges that mindset. His team originally built UMH because they were frustrated with how hard it was to try, test, and scale traditional industrial software.
“We just wanted to get data from A to B in a factory, but realized that problem isn’t really solved yet. So we made it open source.”
Alexander is quick to point out that choosing open source doesn’t automatically mean less risk, but it does mean different trade-offs. Key factors include:
* Licensing clarity
* Community health (Is it maintained? Is it active?)
* Governance (Who controls the roadmap? What happens if they change direction?)
He even brings up the infamous example of vendors repackaging tools like Node-RED under different names, then charging for them without giving proper credit (or worse, shipping outdated versions).
“If you’re already bundling open source into your software, why not be honest about it?”
What about reliability?
If you’re an OT leader, you might still worry: who do I call at 2 a.m. when something breaks?
Alexander’s answer: you should be asking that question about any software, open or proprietary. Because often, what fails isn’t the software itself; it’s the integrations someone built in a rush, or the one engineer who knew how things worked and then left the company.
With open source, there’s at least transparency, control, and the ability to maintain continuity. You’re not locked out of your own systems.
The Human Side: The rise of the hybrid engineer
One of the most interesting parts of the conversation was about who will make this all work. Alexander sees a new kind of engineer emerging: someone with a background in OT, but who enjoys learning IT concepts, tinkering with Docker, and embracing DevOps practices.
“We’re looking for people who used to live in TIA Portal but now run state-of-the-art home automation in their free time.”
This isn’t about turning everyone into a software developer. But it is about building a culture where people are open to learning from both sides and using modern ways of working and new tools to solve old problems.

Discover the program and claim your seat here: https://itot.academy
🎙️ In this special episode of the IT/OT Insider Podcast, David and Willem officially announce the launch of the ITOT.Academy!
After years of conversations with IT/OT professionals, consultants, and technology vendors, one thing became clear: there’s a huge need for practical, vendor-neutral education to help people work together across IT and OT boundaries.
The ITOT.Academy is designed to fill that gap.
What you’ll learn in this episode:
* Why we created the Academy
* Who it's for: OT teams, IT teams, consultants, vendors
* The structure of the program: short, live, interactive sessions
* Why it's not about convergence but collaboration
* When the first groups will start
* How to sign up and join the first cohorts
🚀 Learn more and sign up at https://itot.academy
🎧 Subscribe for more honest conversations on bridging IT and OT.
Chapters
00:00 Introduction to ITOT Academy
01:38 Feedback from Subscribers
03:47 Target Audience for Training
07:24 Training Format and Structure
11:19 Core Concepts of the Training
13:32 Interactive Sessions and Wrap-Up
14:37 Launch Details and Closing Remarks

It is episode 31 and we’re finally tackling a topic that somehow hadn’t made the spotlight yet: IoT. And we couldn’t have asked for two better guests to help us dive into it: Olivier Bloch and Ryan Kershaw.
This is not your usual shiny, buzzword-heavy conversation about the Internet of Things. Olivier and Ryan bring decades of hands-on experience from both sides of the IT/OT divide: Olivier from embedded systems, developer tooling, and cloud platforms, Ryan from the shop floor, instrumentation, and operational systems. Together, they’re building bridges where others see walls.
IoT 101
Olivier kicks things off with a useful reset:
"IoT is anything that has compute and isn’t a traditional computer. But more importantly, it’s the layer that lets these devices contribute to a bigger system: by sharing data, receiving commands, and acting in context."
Olivier has seen IoT evolve from standalone embedded devices to edge-connected machines, then cloud-managed fleets, and now towards context-aware, autonomous systems that require real-time decision-making.
Ryan, meanwhile, brings us back to basics:
"When I started, a pH sensor gave you one number. Now, it gives you twelve: pH, temperature, calibration life, glass resistance... The challenge isn’t getting the data. It’s knowing what to do with it."
Infrastructure Convergence: The Myth of the One-Size-Fits-All Platform
We asked the obvious question: after all these years, why hasn’t “one platform to rule them all” emerged for IoT?
Olivier’s take is straightforward:
"All the LEGO bricks are out there. The hard part is assembling them for your specific need. Most platforms try to do too much or don’t understand the OT context."
You can connect anything these days. The real question is: should you? Start small, solve a problem, and build trust from there.
Why Firewalls are no longer enough
Another highlight: their views on security and zero trust in industrial environments.
Olivier and Ryan both agree: the old-school "big fat firewall" between IT and OT isn’t enough.
"You’re not just defending a perimeter anymore. You need to assume compromise and secure each device, user, and transaction individually."
So what is Zero Trust, exactly? It’s a cybersecurity model that assumes no device, user, or system should be automatically trusted, whether it’s inside or outside the network perimeter. Instead of relying on a single barrier like a firewall, Zero Trust requires continuous verification of every request, with fine-grained access control, identity validation, and least-privilege permissions. It’s a mindset shift: never trust, always verify.
They also emphasize that zero trust doesn’t mean "connect everything." Sometimes the best security strategy is to not connect at all, or to use non-intrusive sensors instead of modifying legacy equipment.
Brownfield vs. Greenfield: Two different journeys
When it comes to industrial IoT, where you start has everything to do with what you can do.
Greenfield projects, like new plants or production lines, offer a clean slate. You can design the network architecture from the ground up, choose modern protocols like MQTT, and enforce consistent naming and data modeling across all assets. This kind of environment makes it much easier to build a scalable, reliable IoT system with fewer compromises.
Brownfield environments are more common and significantly more complex. These sites are full of legacy PLCs, outdated SCADA systems, and equipment that was never meant to connect to the internet. The challenge is not just technical. It's also cultural, operational, and deeply embedded in the way people work.
"In brownfield, you can’t rip and replace. You have to layer on carefully, respecting what works while slowly introducing what’s new," said Ryan.
Olivier added that in either case, the mistake is the same: moving too fast without thinking ahead.
"The mistake people make in brownfield is to start too scrappy. It’s tempting to just hack something together. But you’ll regret it later when you need to scale or secure it."
Their advice is simple: even if you're solving one problem, design like you will solve five. That means using structured data models, modular components, and interfaces that can evolve.
Final Thoughts
This episode was a first deep dive into real-world IoT: not just the buzzwords, but the architecture, trade-offs, and decision-making behind building modern industrial systems.
From embedded beginnings to UNS ambitions, Thing-Zero is showing that the future of IoT isn’t about more tech. It’s about making better choices, backed by cross-disciplinary teams who understand both shop floor realities and enterprise demands.
To learn more, visit thing-zero.com and check out Olivier’s YouTube channel “The IoT Show” for insightful and developer-focused content.
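The Zero Trust description in this episode (verify every request, least privilege, no implicit trust based on network location) can be illustrated with a deny-by-default authorization check. This is a minimal sketch, not a real policy engine or any vendor's API: the `Request` type, the `ALLOWED` rule set, and the identities and resource names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    identity: str        # who (or which device) is asking
    authenticated: bool  # result of identity validation for this request
    action: str          # e.g. "read" or "write"
    resource: str        # what is being accessed


# Least privilege: only explicitly listed combinations are permitted.
# These entries are illustrative assumptions.
ALLOWED = {
    ("historian-service", "read", "line3/filler/temperature"),
    ("maintenance-tablet", "read", "line3/filler/alarms"),
}


def authorize(request: Request, network_zone: str = "unknown") -> bool:
    """Decide a single request; called for every request, not once per session.

    network_zone is accepted but deliberately ignored: being "inside" the
    OT network grants nothing on its own.
    """
    if not request.authenticated:
        return False  # never trust an unverified identity
    rule = (request.identity, request.action, request.resource)
    return rule in ALLOWED  # anything not listed is denied by default


if __name__ == "__main__":
    read_ok = Request("historian-service", True, "read", "line3/filler/temperature")
    write_attempt = Request("historian-service", True, "write", "line3/filler/temperature")
    print(authorize(read_ok, network_zone="ot-network"))
    print(authorize(write_attempt, network_zone="ot-network"))
```

The design choice worth noticing is what is absent: there is no branch that says "allow because the caller is behind the firewall", which is the contrast with the perimeter-only model described above.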

Today, we have the pleasure of speaking with Nikki Gonzales, Director of Business Development at Weintek USA, co-founder of the Automation Ladies podcast, and co-organizer of OT SCADA CON, a conference focused on the gritty, real-world challenges of industrial automation.
Unlike many of our guests who often come from cloud-first, data-driven digitalization backgrounds, Nikki brings a refreshing and much-needed OT floor-level perspective. Her world is HMI screens, SCADA systems, manufacturers, machine builders, and the hard truths about where industry transformation actually stands today.
What’s an HMI and Why Does It Matter?
In Nikki’s words, an HMI is:
"The bridge between the operator, the machine, and the greater plant network."
It’s often misunderstood as just a touchscreen replacement for buttons, but Nikki highlights that a modern HMI can do much more:
* Act as a gateway between isolated machines and plant-level networks.
* Enable remote access, alarm management, and contextual data sharing.
* Help standardize connectivity in mixed-vendor environments.
The HMI is often the first step in connecting legacy equipment to broader digital initiatives.
Industry 3.0 vs. Industry 4.0: Ground Reality Check
While the industry buzzes with Industry 4.0 (and 5.0 🙃) concepts, Nikki’s view from the field is sobering:
"Most small manufacturers are still living in Industry 3.0, or earlier. They have mixed equipment, proprietary protocols, and minimal digitalization."
For the small manufacturers Nikki works with, transformation isn't about launching huge digital projects. It’s about taking incremental steps:
* Upgrading a handful of sensors.
* Introducing remote monitoring.
* Standardizing alarm management.
* Gradually building operational visibility.
"Transformation for small companies isn’t about fancy AI. It’s about survival: staying competitive, keeping workers, and staying in business."
With labor shortages, supply chain pressures, and rising cybersecurity threats, smaller manufacturers must adapt, but they have to do it in a way that is affordable, modular, and low-risk.
UNS, SCADA, and the State of Connectivity
Nikki also touched on how concepts like UNS (Unified Namespace) are being discussed:
"Everyone talks about UNS and cloud-first strategies. But in reality, most plants still have islands of automation. They have to bridge old PLCs, proprietary protocols, and aging SCADA systems first."
While UNS represents a desirable goal (a real-time, unified data model accessible across the enterprise), many manufacturers are years or even decades away from making that a reality without significant groundwork first.
In this world, HMI upgrades, standardized communication protocols (like MQTT), and targeted SCADA modernization become the critical building blocks.
The Human Challenge: Culture and Workforce
Beyond the technology, Nikki highlighted the human side of transformation:
* Younger generations aren't attracted to repetitive, low-tech manufacturing jobs.
* Manual, isolated processes make hiring and retention even harder.
* Manufacturers must rethink how technology supports not just efficiency, but employee satisfaction.
The future of manufacturing depends not just on smarter machines, but on designing operations that attract and empower the next generation of workers.
Organizing a Conference from Scratch: OT SCADA CON
Before wrapping up, we asked Nikki about organizing OT SCADA CON.
"You need a little naivety, a lot of persistence, and the right partners. We jumped first, then figured out how to build the plane on the way down."
OT SCADA CON is designed by practitioners for practitioners: short technical sessions, no vendor pitches, no buzzword bingo. Just real, practical advice for the engineers, integrators, and plant technicians who make industrial operations work.
Final Thoughts
In a world obsessed with the future, Nikki reminds us:
You can't build Industry 4.0 without first fixing Industry 3.0.
And fixing it starts with respecting the complexity, valuing the small steps, and supporting the people on the ground who keep manufacturing running.
If you want to learn more about Nikki’s work, visit automationladies.io and check out OT SCADA CON, taking place July 23–25, 2025.

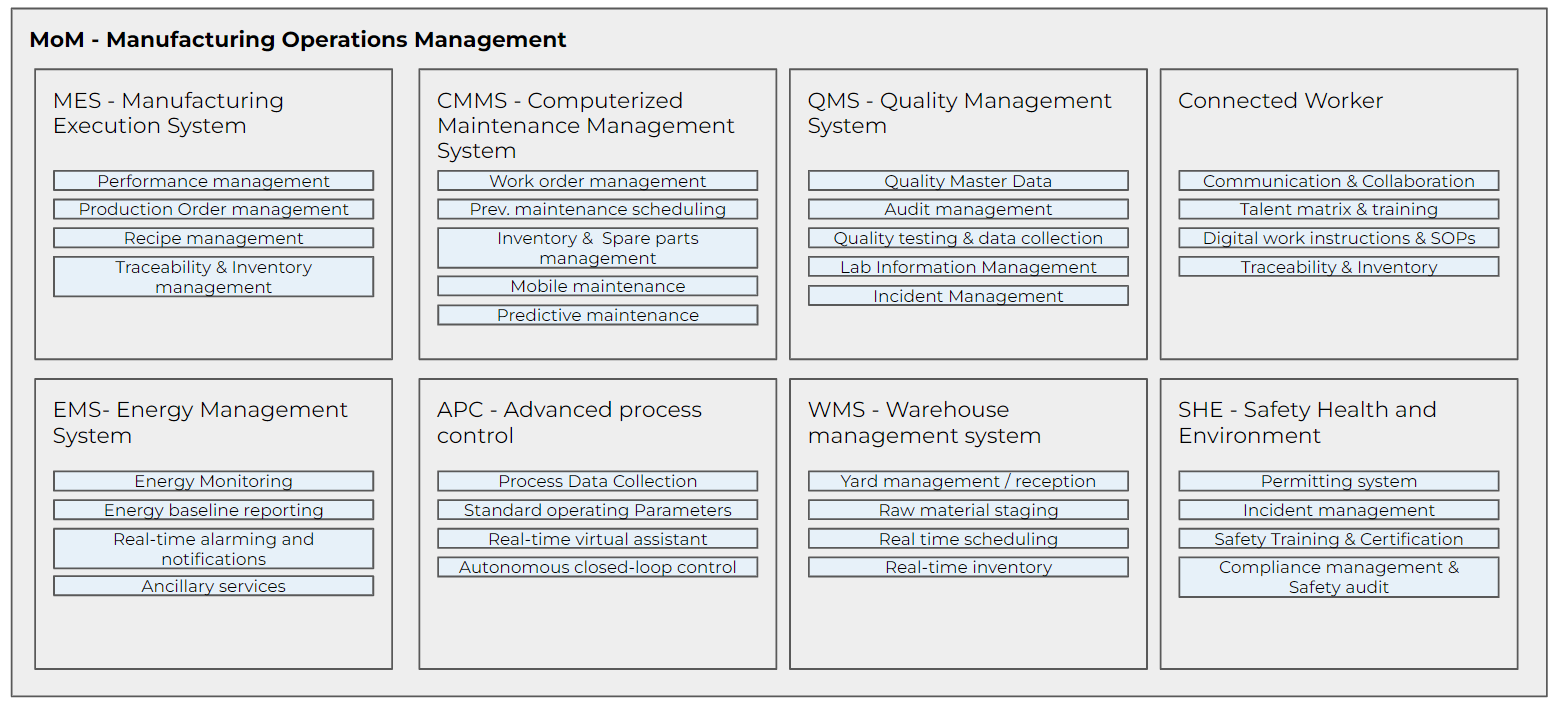

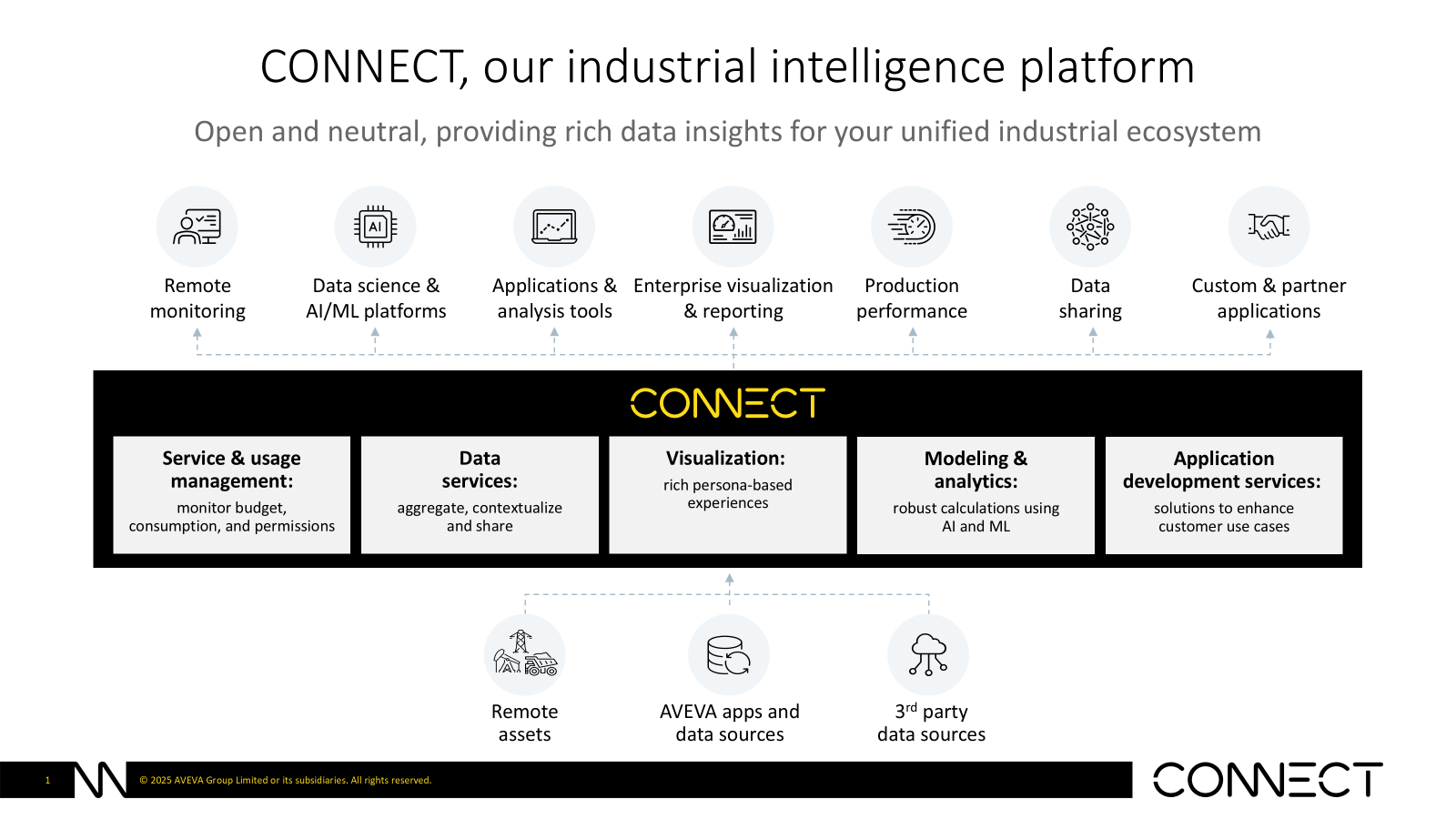

Welcome to another episode of the IT/OT Insider Podcast. Today, we’re diving into the world of Manufacturing Execution Systems (MES) and Manufacturing Operations Management (MOM) with Matt Barber, VP & GM MES at Infor. With over 15 years of experience, Matt has helped companies worldwide implement MES solutions, and he’s now on a mission to educate the world about MES through his website, MESMatters.com .MES is a topic that sparks a lot of debate, confusion, and, in many cases, hesitation. Where does it fit in a manufacturing tech stack? How does it relate to ERP, Planning Systems, Quality Systems, or industrial data platforms? And what’s the real difference between MES and MOM?These are exactly the questions we’re tackling today.Thanks for reading The IT/OT Insider! Subscribe for free to receive new posts and support our work.MES vs. MOM: What’s the Difference?Matt opens the discussion by addressing one of the misconceptions in the industry-what actually defines an MES, and how it differs from MOM."An MES is a specific type of application that focuses on production-related activities-starting and stopping production orders, tracking downtime, recording scrap, and calculating OEE. That’s the core of MES."But MOM is broader. It extends beyond production into quality management, inventory tracking, and maintenance. MOM isn’t a single application but rather a framework that connects multiple operational functions.Many MES vendors include some MOM capabilities, but few solutions cover all aspects of production, quality, inventory, and maintenance in one system. 
That’s why companies need to carefully evaluate what they need when selecting a solution.How Do Companies Start with MES?Not every company wakes up one day and decides, “We need MES.” The journey often starts with a single pain point-a need for OEE tracking, real-time visibility, or better quality control.Matt outlines two main approaches:* Step-by-step approach* Companies start with a single use case, such as tracking downtime and production efficiency.* Once they see value, they expand into areas like quality control, inventory tracking, or maintenance scheduling.* This approach minimizes risk and allows for quick wins.* Enterprise-wide standardization* Larger companies often take a broader approach, aiming to standardize MES across all sites.* The goal is to ensure consistent processes, better data integration, and a unified system for all operators.* While it requires more planning and investment, it creates a cohesive manufacturing strategy.Both approaches are valid, but Matt emphasizes that even if companies start small, they should have a long-term vision of how MES will fit into their broader Industry 4.0 strategy.The Role of OEE in MESOEE (Overall Equipment Effectiveness) is one of the most common starting points for MES discussions. It measures how much good production output a company achieves compared to its theoretical maximum.The three key factors:* Availability – How much time machines were available for production.* Performance – How efficiently the machines ran during that time.* Quality – How much of the output met quality standards."You don’t necessarily need an MES to track OEE. Some companies do it in spreadsheets or standalone IoT platforms. 
But if you want real-time OEE tracking that integrates with production orders, material usage, and quality data, MES is the natural solution."

People and Process: The Hardest Part of MES Implementation

One of the biggest challenges in MES projects isn’t the technology; it’s people and process change.

Matt shares a common issue:

"Operators often have their own way of doing things. They know how to work around inefficiencies. But when an MES system is introduced, it enforces a standardized way of working, and that’s where resistance can come in."

To make MES adoption successful, companies must:

* Get leadership buy-in – A clear vision from the top ensures the project gets the necessary resources and support.
* Engage operators early – Including shop floor workers in the process design increases adoption and usability.
* Define clear roles – Having global MES champions and local site super-users ensures both standardization and flexibility.

"You can have the best MES system in the world, but if no one uses it, it’s worthless."

How the MES Market is Changing

MES has been around for decades, but the industry is evolving rapidly.
Matt highlights three major trends:

* The rise of configurable MES
  * Historically, MES projects required custom coding and long implementation times.
  * Now, companies like Infor are offering out-of-the-box (OTB), configurable MES platforms that can be set up in days instead of months.
  * Vendors of configurable OTB applications can also offer quick prototyping of manufacturing processes, so customers benefit from agility and quick value realisation.
* The split between cloud-based MES and on-premise solutions
  * Many legacy MES systems were designed to run on-premise with deep integrations to shop floor equipment.
  * However, cloud-based MES is growing, especially in multi-site enterprises that need centralized management and analytics.
  * Matt recognises the importance of cloud-based applications, but highlights that there will always be at least a small on-premise part of the architecture for connecting to machines and other shop floor equipment.
* MES vs. the rise of “build-it-yourself” platforms
  * Some smaller manufacturers opt for the “do-it-yourself” approach, creating their own MES-Light applications by layering in various technologies and software platforms.
  * This trend is more common in smaller manufacturers that need flexibility and are comfortable developing their own industrial applications.
  * However, for enterprise-wide standardization, an OTB configurable MES platform provides the best scalability and consistency, and the most advanced platforms allow end-users to configure it themselves through master data, reports, and dashboards.

MES and Industrial Data Platforms

A big topic in manufacturing today is the role of data platforms. Should MES be the central hub for all manufacturing data, or should it feed into an enterprise-wide data lake?

Matt explains the shift:

"Historically, MES data was stored inside MES and maybe shared with ERP.
But now, with the rise of AI and advanced analytics, manufacturers want all their industrial data in one place, accessible for enterprise-wide insights."

This has led to two key changes:

* MES systems are increasingly required to push data into (industrial) data platforms.
* Companies are focusing on data contextualization, ensuring that production data, quality data, and maintenance data are all aligned for deeper analysis.

"MES is still critical, but it’s no longer just an execution layer; it’s a key source of contextualized data for AI and machine learning."

Where to Start with MES

For companies considering MES, Matt offers some practical advice:

* Understand your industry needs – Different MES solutions are better suited for different industries (food & beverage, automotive, pharma, etc.).
* Start with a clear business case – Whether it’s reducing downtime, improving quality, or optimizing material usage, have a clear goal.
* Choose between out-of-the-box and build-your-own – Large enterprises may benefit from standardized MES, while smaller companies might prefer DIY industrial platforms.
* Don’t ignore change management – Successful MES projects require strong collaboration between IT, OT, and shop floor operators.

"It’s hard. But it’s worth it."

Final Thoughts

MES is evolving faster than ever, blending traditional execution functions with modern cloud analytics. Whether companies take a step-by-step or enterprise-wide approach, MES remains a critical piece of the smart manufacturing puzzle.

For more MES insights, check out mesmatters.com or Matt’s LinkedIn page, and don’t forget to subscribe to IT/OT Insider for the latest discussions on bridging IT and OT.

Stay Tuned for More!

Subscribe to our podcast and blog to stay updated on the latest trends in Industrial Data, AI, and IT/OT convergence.

🚀 See you in the next episode!

YouTube: https://www.youtube.com/@TheITOTInsider

This is a public episode. 
If you would like to discuss this with other subscribers or get access to bonus episodes, visit itotinsider.substack.com
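A quick worked example of the OEE definition from the MES episode above: by the usual convention, OEE is the product of availability, performance, and quality. The sketch below is only an illustration under stated assumptions. The `ShiftData` fields, the `oee` helper, and the sample numbers are hypothetical and do not come from Matt or from any MES product.

```python
from dataclasses import dataclass


@dataclass
class ShiftData:
    """Hypothetical shift summary; field names are illustrative only."""
    planned_minutes: float      # scheduled production time
    downtime_minutes: float     # stops recorded during the shift
    ideal_cycle_seconds: float  # theoretical time to produce one unit
    total_units: int            # everything produced, good or scrap
    good_units: int             # units that met quality standards


def oee(shift: ShiftData) -> dict:
    """Return availability, performance, quality and their product (OEE)."""
    run_minutes = shift.planned_minutes - shift.downtime_minutes
    if shift.planned_minutes <= 0 or run_minutes <= 0 or shift.total_units <= 0:
        raise ValueError("need positive planned time, run time and output")

    # Availability: share of planned time the machine actually ran.
    availability = run_minutes / shift.planned_minutes
    # Performance: ideal time for the produced units vs. actual run time.
    performance = (shift.ideal_cycle_seconds * shift.total_units) / (run_minutes * 60)
    # Quality: share of produced units that were good.
    quality = shift.good_units / shift.total_units

    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


if __name__ == "__main__":
    # Made-up shift: 8 hours planned, 1 hour down, 30 s ideal cycle.
    result = oee(ShiftData(480, 60, 30, 700, 665))
    for name, value in result.items():
        print(f"{name}: {value:.1%}")
```

A spreadsheet can do the same arithmetic, which is the point Matt makes; what an MES adds is tying these numbers to production orders, material usage, and quality records in real time.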

In this episode of the IT/OT Insider Podcast, we’re taking a short detour from our usual deep dives into industrial topics to explore something broader but equally vital: how enterprises evolve.

We’re joined by Stephen Fishman and Matt McLarty, authors of the book Unbundling the Enterprise, published by IT Revolution. Stephen is North America Field CTO at Boomi, and Matt is the company’s Global CTO. But more importantly for this conversation, they’re long-time collaborators with a shared passion for modularity, APIs, and systems thinking.

We’ll talk about the power of preparation over prediction, about how modular systems and composable strategies can future-proof organizations, and, perhaps most surprisingly, how happy accidents (yes, “OOOPs”) can unlock unexpected success.

From Creative Writing to Enterprise Architecture

Stephen and Matt first connected over a decade ago, when Stephen was leading app development at Cox Automotive and Matt was heading up the API Academy at CA Technologies. Their collaboration grew from a shared curiosity: why were APIs making some companies wildly successful, and why did that success often seem... unplanned?

They didn’t want to write yet another how-to book on APIs. Instead, they wanted to tell the bigger story: why companies that invested in modularity were able to respond faster, seize opportunities more easily, and unlock new business models.

“We wanted to bridge the gap between architects and the business. Help tech teams articulate why they want to build things in a modular way, and help business folks understand the financial value behind those decisions.” – Stephen Fishman

OOOPs: The Power of Happy Accidents

One of the big themes in their book is what the authors call OOOPs: not a typo, but an acronym.

“Google Maps is the classic story,” Stephen explains. 
“People started scraping the APIs and using them in ways Google never planned, until they turned it into a massive business. That was a happy accident. And it happened again and again.”

So they gave those happy accidents a structure: Optionality, Opportunism, and Optimization.

* Optionality: Modular systems open the door to future opportunities you can’t yet predict.
* Opportunism: You need ways to identify where to unbundle or where to apply APIs first.
* Optimization: You continuously measure and refine based on real usage and feedback.

This framework makes the case that modularity isn’t just a technical preference; it’s a business strategy.

S-Curves, Options, and Becoming the House

Another concept that runs through the book is the S-curve of growth: the idea that all successful innovations follow a familiar pattern of slow start, rapid rise, plateau, and eventual decline.

Most companies ride that first curve too long, betting too heavily on what worked yesterday. The challenge is recognizing when you’ve peaked and investing in what comes next.

“Most people don’t know where they are on the S-curve,” says Stephen. “They think they’re still climbing, but they’re really on the plateau.”

That’s where optionality comes in again: the ability to explore multiple futures at low cost, hedging your bets without breaking the bank. They borrow the idea of “convex tinkering”: placing lots of small, low-cost bets with the potential for high upside.

“Casinos don’t gamble,” Stephen says. “They set the rules. They optimize for asymmetric value. That’s what this book is trying to teach organizations: how to become the house.”

Unbundling is Not Just for Big Tech

You might think this is a book for Google, Amazon, or SaaS unicorns, but the lessons apply to every enterprise. 
Even in manufacturing.

“The automotive world has always understood modularity,” Stephen says. “Platforms existed in car design before they existed in tech. When you separate chassis from body and engine, you gain flexibility and efficiency.”

And the same applies in IT and OT:

* Building platforms of reusable APIs and services
* Designing products and processes with change in mind
* Investing in capabilities close to revenue, not just internal shared services

Even internal IT teams benefit from this mindset. Once a solution is decontextualized and reusable, it can scale across departments and generate asymmetric value internally, without needing to sell to the outside world.

All Organization Designs Suck (and That’s Okay)

A memorable quote in the book comes from an interview with David Rice (SVP Product and Engineering at Cox Automotive):

“All organization designs suck.”

It’s a reminder that there’s no perfect org chart, no flawless model. Instead, success comes from designing your systems, your teams, and your investments with awareness of their limits, and building flexibility around them.

“APIs aren’t a silver bullet. Neither is GenAI. But if you design your systems, teams, and investments around modularity and resilience, you’re better prepared for whatever future emerges.”

We highly recommend the book Team Topologies as further reading on this topic.

Final Thoughts

Unbundling the Enterprise is not a technical manual. It’s a mindset. A playbook for organizations that want to survive disruption, scale intelligently, and embrace change without betting everything on a single future.

The ideas in this book are especially relevant for those working on digital transformation in complex industries. It’s not always about moving fast; it’s about moving smart, building for change, and staying ready.

You can find the book on IT Revolution or wherever great tech books are sold. And be sure to check out their companion article on OOOPs on the IT Revolution blog.

Until next time, stay modular! 
🙂

Want More Conversations Like This?

Subscribe to our podcast and blog to stay updated on the latest trends in Industrial Data, AI, and IT/OT convergence.

🚀 See you in the next episode!

YouTube: https://www.youtube.com/@TheITOTInsider

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit itotinsider.substack.com