Technoskeptic Podcast

Authors: Mo Lotman, Art Keller, Elizabeth Brunner

© Technoskeptic, Inc.

Description

The Technoskeptic examines the use and misuse of technology and technology's long-term effects on culture and society.

technoskeptic.substack.com

16 Episodes

Art Keller speaks with Holly Elmore, the Executive Director of the AI safety non-profit PauseAI. We cover a lot of ground, from the disturbing AI scheming in the recent Apollo Research report to the much more dubious and reprehensible scheming of AI *luminaries* such as Sam Altman and Yann LeCun. We also cover the politics of AI: how AI companies publicly beg for regulation while privately treating any attempt at even mild regulation as an affront, and the strange beliefs that infest Silicon Valley like nowhere else on earth! If you’d like to hear more about PauseAI’s mission, you can visit the PauseAI Discord. You can follow their work on Twitter @pauseaius. We also touch on how, while the public overwhelmingly supports work on AI safety, AI safety organizations are battling to get the word out against Silicon Valley venture capitalists with bankrolls in the hundreds of billions of dollars. If you’d like to help level that playing field, you can donate to PauseAI. Get full access to The Technoskeptic Magazine at technoskeptic.substack.com/subscribe

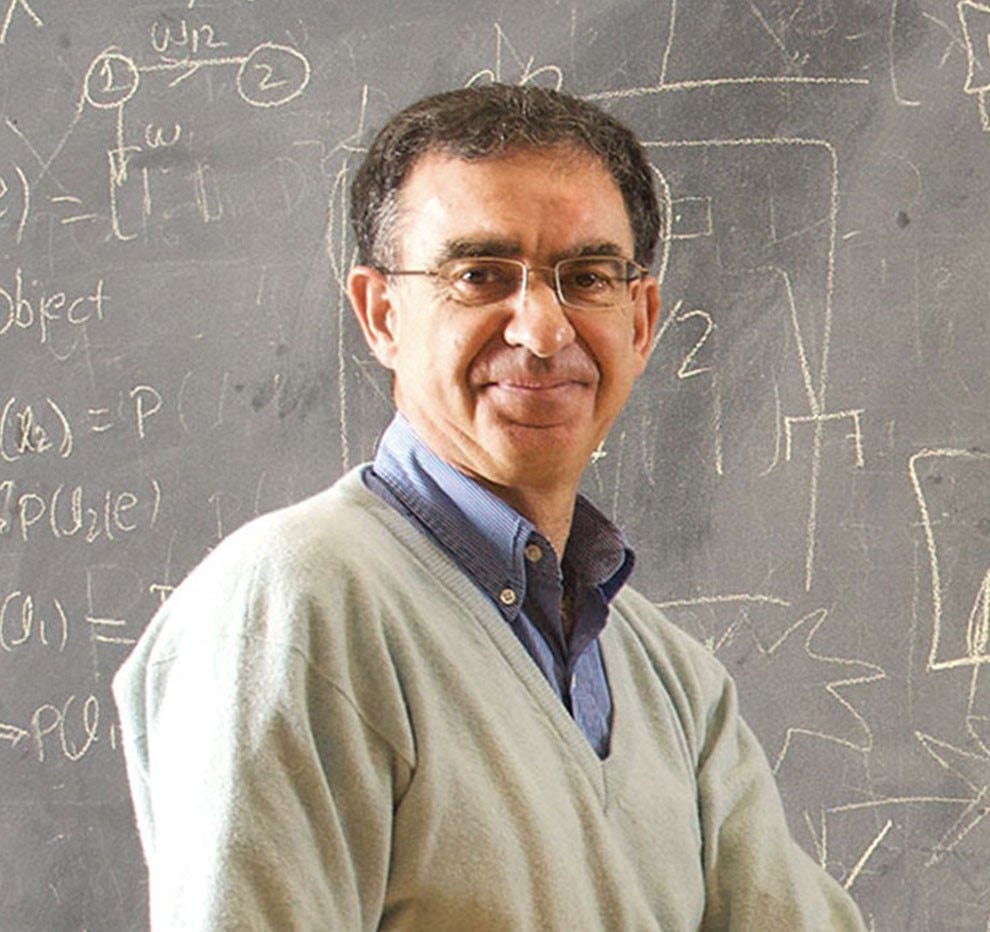

Today we speak with Dr. Roman Yampolskiy. Dr. Yampolskiy is a tenured Professor in the Department of Computer Engineering and Computer Science at the Speed School of Engineering at the University of Louisville, where he is the founder and current director of the Cyber Security Lab. Dr. Yampolskiy is an expert in AI safety and the author of many books, including “AI: Unexplainable, Unpredictable, Uncontrollable.” We cover a lot of ground. Is the analogy Meta AI’s Yann LeCun uses for how to build safe AI valid? How can AI optimists go on a podcast, admit that Large Language Model AI is so insecure as to be “exploitable by default,” and yet remain enthusiastic boosters of it? Is Gary Marcus right that the performance of LLM AI is topping out? We also touch on the tunnel vision in AI companies around AI risk. What is the role of Silicon Valley venture capitalists with a religious vision of AI and billions of dollars to push it, versus the beleaguered community pushing for AI safety work that goes past lip service? There was a delay of some months between recording and releasing this episode, which is a long time in the world of AI. Some references may be slightly dated; e.g., when we talked about AI as a learning aid at the beginning of the podcast, the study finding that students who use AI do worse on tests had not yet been released.

Tim Kasser spent over two decades studying the relationship between materialism and well-being and penned the highly cited book The High Price of Materialism. Mo Lotman interviewed Kasser while he was still a professor at Knox College; Kasser has since retired and been named Professor Emeritus. Professor Kasser and Mo discuss what types of values coincide with healthy relationships to ourselves and the environment. It is impossible to hear this podcast without thinking, “Do I really want to tether myself to a ‘delivery system’ that injects unhealthy values into every area of life?” Kasser also asks a crucial question about what is, and what is very deliberately not, covered by the advertising-fueled media.

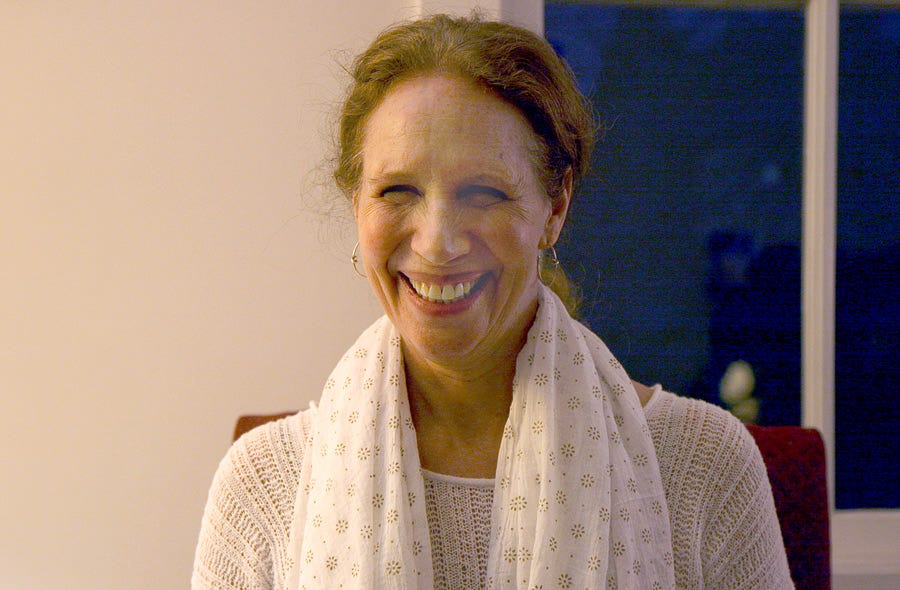

Catherine Steiner-Adair is a clinical and developmental psychologist whose empathic 2013 book The Big Disconnect was one of the first big warning signs about tech’s effect on growing children. Mo Lotman speaks with Steiner-Adair about the invisible costs of tech: how it can impair the brain development of children and may also hinder bonding between child and parent. Many concerned parents look at their kids and think, “What is tech doing to them?” Steiner-Adair thinks it’s also worth asking, “What is tech doing to our family?”

Mo Lotman interviewed Tomaso Poggio, Director of MIT’s Center for Brains, Minds and Machines. Poggio explained the basics of how AI models work, why we’re still a long way from the dystopian fears of robot overlords, and why the threat to jobs is nonetheless real. (Editor’s Note: This podcast was originally recorded at an interesting moment in AI development: after machine learning and neural networks had started to make impressive gains, but before the appearance of Large Language Models like ChatGPT. Without giving away any interview spoilers, it is fascinating in hindsight to hear which of Professor Poggio’s predictions were undermined by the unanticipated explosion of LLM AI.)

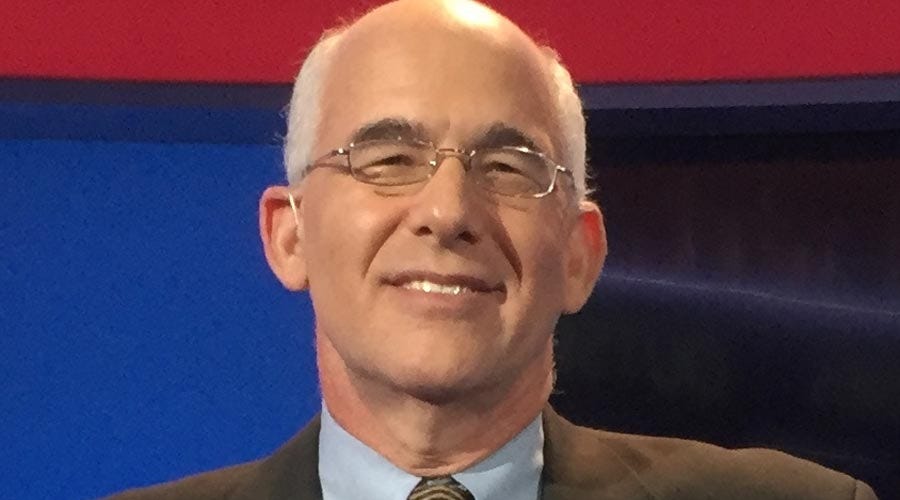

In mid-2017, Mo Lotman spoke to journalist and media critic Bill Powers. Powers wrote the bestselling book Hamlet’s BlackBerry, about stepping away from tech. He was one of the first to speak publicly and loudly about the need to get away from “screens” and adopt “screen Sabbaths.” When he spoke to Mo Lotman, Powers was at MIT’s Media Lab trying to improve the quality of social media. Powers is now at the Max Planck Institute for Human Development in Berlin. (Editor’s Note: Listening to this a few times while preparing to re-release it in 2024, it was hard to ignore that Powers’ 2017 optimism about the ability to move social media in a positive direction did not pan out. He noted that working at the MIT Media Lab humanized the technologists, i.e., the people who ran Twitter, for him. Once he got to know them, he realized they saw the problems their tools had created. But did they, really? Seven years later, the only changes at Twitter (now X) and Facebook (now Meta) are purely cosmetic. Yes, there have been some changes to content moderation algorithms, but those algorithms are still optimized to deliver toxic yet addictive sludge. Very probably, social media can’t become less disruptive to society and mental health as long as its business model is to harvest attention to sell ads and collect user info to sell to data brokers.)

Danielle Allen is James Bryant Conant University Professor at Harvard University and Director of the Allen Lab for Democracy Renovation at the Kennedy School's Ash Center for Democratic Governance and Innovation.* She spoke with Mo Lotman about eudaimonia, aka “the good life.” They explore the emphasis in American education on STEM (science, technology, engineering, math) and the social costs of not teaching social studies and history. Regardless of your politics, Allen’s diagnosis that we have inadequate education in civics and history is something most Americans will agree with as we head into 2024’s turbulent election season. *At the time this interview was recorded, Allen was the director of the Safra Center for Ethics at Harvard. She is now assembling research for the Democracy Renovation project for Our Common Purpose, a commission for the American Academy of Arts & Sciences.

Mo Lotman speaks with psychiatrist, professor, and author David Greenfield, founder of the Center for Internet and Technology Addiction. Dr. Greenfield was one of the first medical professionals to recognize and study the addictive qualities of the Internet. Twenty-five years later, his societal diagnosis has gone from outlier to mainstream. He explains what we know, what it means, and what can be done. This podcast was originally recorded pre-COVID, but the topics covered dovetail nicely with Dr. Anna Lembke’s work on how technology hacks our dopamine reward system. Her book, Dopamine Nation, is a great follow-on to this interview with Dr. Greenfield. Many of you will have seen Lembke in the documentary “The Social Dilemma” discussing the addictive nature of social media.

We were delighted to speak to Will Reusch, a high school teacher with 15 years of experience ranging from under-resourced inner-city schools to elite private academies. We discuss using social media intentionally rather than by default (i.e., to anxiety-producing excess), where tech helps and hurts in the classroom, and Will’s podcast Cylinder Radio. Will covers controversial topics with an emphasis on dialogue and civility and NOT on dunking in the “culture war.” Will also relates from personal experience how to (gently) get back at your bully when he makes you do his homework! Reusch’s guide to using social media in a way that adds value rather than anger and anxiety is “The Social Solution.” He has also worked with Heterodox Academy, co-founded by Jonathan Haidt to promote viewpoint diversity in academia, on developing materials for high school students. When he’s not teaching high school and hosting Cylinder Radio, Will is also building a scalable homeschooling curriculum.

Narayan Liebenson, a guiding teacher at the Cambridge Insight Meditation Center in Cambridge, Massachusetts, speaks to Mo Lotman about the benefits of mindfulness and attention. We’ve suspected for years that technology fragments attention to the detriment of our peace of mind and mental health. But is the mindfulness championed so thoughtfully by Liebenson mere mystical woo-woo, or a serious remedy? The neuroscience is in, and it points to a serious remedy: meditation quiets the posterior cingulate cortex, where many of our distracted thoughts, ruminations, and cravings live.

Tim Wu is the Julius Silver Professor of Law, Science and Technology at Columbia University and a renowned scholar of communications networks who coined the phrase “net neutrality.” He has written extensively on technology and its impact on society and has served both inside and outside of government and academia. Mo Lotman and Wu discuss Wu’s 2016 book The Attention Merchants: The Epic Scramble to Get Inside Our Heads. The Attention Merchants is a history of advertising, and it’s not pretty. Mo and Tim discuss our declining private spheres, the current state of the Internet, and the effects of what Wu calls The Cycle, in which new communications technologies inevitably move from open to closed. Wu also proved prescient in this interview, describing the deterioration of the advertising model for online content and how subscription content was a ray of hope (which the success of Substack has since validated). They also discuss Wu’s 2010 book, The Master Switch. When this interview first ran, Wu was still writing The Curse of Bigness: Antitrust in the New Gilded Age, released in 2018. In 2021, Wu was appointed Special Assistant to the President for Technology and Competition Policy.

We recently ran a lengthy print interview with Katharine Birbalsingh, Headmistress of Michaela Community School, about her school’s Digital Detox program and parents’ struggles in implementing it: Choosing Smart Kids over Smartphones. This podcast is the conversation we recorded with her as part of updating our 2019 interview. Some of the material in this podcast appeared in the “Smart Kids” article, but we also covered some spicy topics that we didn’t have space for in the article, including some speculation on why her successful school draws so much flak.

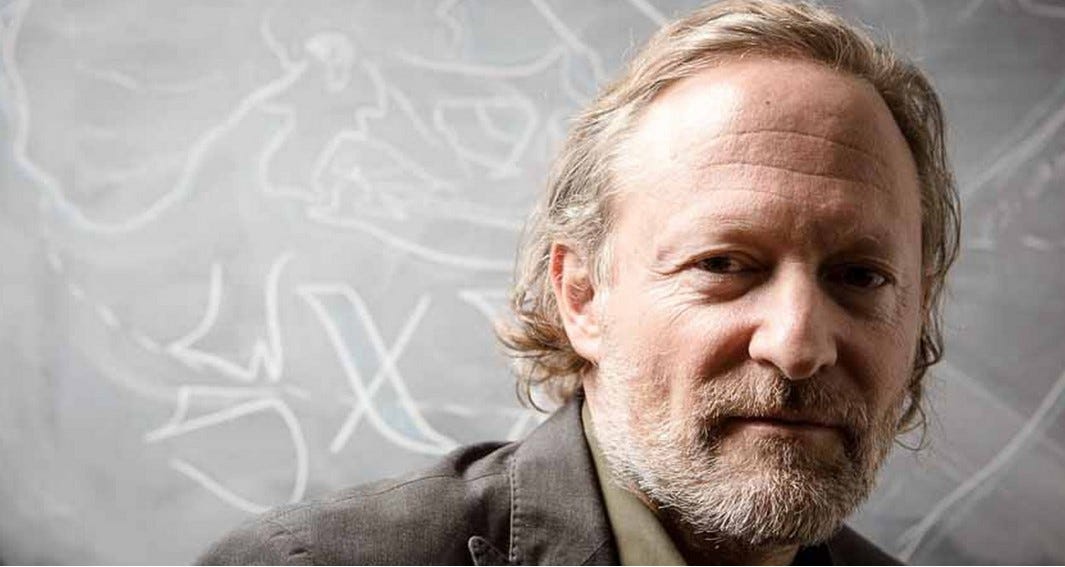

David Krakauer is President and Professor of Complex Systems at the Santa Fe Institute, a private, not-for-profit, independent research and education center. Krakauer focuses on the evolutionary history of information-processing mechanisms in biology and culture. He spoke with The Technoskeptic about complementary vs. competitive cognitive artifacts, that is, how technologies extend or suppress our capabilities. The study of human stupidity is (no kidding) one of David Krakauer’s specialties. One emergent question: will reliance on AI make us dumber and less creative? This podcast was recorded pre-COVID, but the conversation has only become more relevant in the last year. Consider recent reporting that college students are using GPT-4 to do their homework. Large Language Models (LLMs) like ChatGPT can whip up a college essay or even a whole book by synthesizing huge swaths of the internet. But what does that kind of use do for real learning and the creation of crucial long-term memory? Can LLMs make novel discoveries in medicine, physics, or chemistry? (Note: so far, they can’t.) We’ve come to depend on scientific advances to improve our standard of living. Krakauer airs his concerns about AI’s impact on real scientific discovery as we sprint into the AI age, whether we’re ready for it or not.

Mo Lotman interviews mathematician Cathy O’Neil. O’Neil worked in the private sector helping build the algorithmic systems that automatically judge and score us. Deeply troubled by what she saw, she went on to write the 2016 bestseller Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy. She shares her insider’s look at how algorithms are gaming our world, with the worst consequences for those who can least afford them. Nobody escapes the grasp of algorithms in modern life. As our recent article on Yelp reported, small business owners who have fallen afoul of Yelp’s manipulative algorithmic ranking have lost vast sums of money or seen their businesses destroyed. If you live in the wrong neighborhood, algorithms may give you a worse credit rating, lengthen your prison sentence if you are convicted of a crime, or even show you entirely different web pages when you shop online. O’Neil’s insights on algorithms are important in their own right, but algorithms are also the base layer of the AI that will be given more and more control of our society. One thing algorithms and AI have in common is the GIGO (Garbage In, Garbage Out) problem: if you put in bad data, you get bad decisions. Another is that both are frequently “black boxes,” which is to say that even the people who create algorithms and AI often cannot explain the decisions they generate. O’Neil originally founded O’Neil Risk Consulting & Algorithmic Auditing to make algorithms fairer and more transparent, but has since expanded its work to include assessing AI risks. (For further reading, here’s a 20-second primer from the CMS Wire article AI vs. Algorithms: What’s the Difference? “An algorithm is a set of instructions — a preset, rigid, coded recipe that gets executed when it encounters a trigger.
AI on the other hand — which is an extremely broad term covering a myriad of AI specializations and subsets — is a group of algorithms that can modify its algorithms and create new algorithms in response to learned inputs and data as opposed to relying solely on the inputs it was designed to recognize as triggers. This ability to change, adapt and grow based on new data, is described as ‘intelligence.’ ”)

At The Technoskeptic Magazine, we often find that technologists provide the most salient critiques of technology’s effects on society. In this episode, Mo Lotman speaks with Kentaro Toyama, computer scientist, Professor of Community Information at the University of Michigan, and author of the book “Geek Heresy: Rescuing Social Change from the Cult of Technology.” After spending five years in India attempting to deploy technology to aid international development, Toyama came back disillusioned. He talks with The Technoskeptic about his experience there and how his years of teaching led him to understand that technology often exacerbates, rather than levels, underlying disparities. Toyama also delves into the rather… odd trend among Silicon Valley elites of keeping their children far away from the same technology they frenetically urge everybody else’s children to adopt. With a bit of a detour into the wisdom of Dr. Seuss, the discussion wends its way into philosophical territory, examining how technology can make it harder to maintain the heart, mind, and will to be good people. This podcast originally aired in late 2016.

Cal Newport, Deep Work, Productivity and Focus