
Data Engineering Weekly


Author: Ananth Packkildurai


© Ananth Packkildurai


21 Episodes


Semantic layers have been with us for decades, sometimes buried inside BI tools, sometimes living in analysts’ heads. But as data complexity grows and AI pushes its way into the stack, the conversation is shifting. In a recent conversation with David Jayatillake, a long-time data leader with experience at Cube, Delphi Labs, and multiple startups, we explored how semantic layers move from BI lock-in to invisible, AI-driven infrastructure, and why that matters for the future of metrics and knowledge management.

What Exactly Is a Semantic Layer?

Every company already has a semantic layer. Sometimes it’s software; sometimes it’s in people’s heads. When an analyst translates a stakeholder’s question into SQL, they’re acting as a human semantic layer. A software semantic layer encodes this process so SQL is generated consistently and automatically.

David’s definition is sharp: a semantic layer is a knowledge graph plus a compiler. The knowledge graph stores entities, metrics, and relationships; the compiler translates requests into SQL.

From BI Tools to Independent Layers

BI tools were the first place semantic layers showed up: Business Objects, SSAS, Looker, and Power BI. This works fine for smaller orgs, but quickly creates vendor lock-in for enterprises juggling multiple BI tools and warehouses.

Independent semantic layers emerged to solve this. By abstracting the logic outside BI, companies can ensure consistency across Tableau, Power BI, Excel, and even embedded analytics in customer-facing products. Tools like Cube and dbt metrics aim to play that role.

Why Are They Hard to Maintain?

The theory is elegant: define once, use everywhere. But two big issues keep surfacing:

* Constant change. Business definitions evolve. A revenue formula that works today may be obsolete tomorrow.
* Standardization. Each vendor proposes its own standard: dbt metrics, LookML, Malloy.
History tells us one “universal” standard usually spawns another to unify the rest.

Performance complicates things further: BI vendors optimize their compilers differently, making interoperability tricky.

Culture and Team Ownership

A semantic layer is useless without cultural buy-in. Product teams must emit clean events and define success metrics. Without that input, the semantic layer starves.

Ownership varies: sometimes product engineering owns it end-to-end with embedded data engineers; other times, central data teams or hybrid models step in. What matters is aligning metrics with product outcomes.

Data Models vs. Semantic Layers

Data modeling techniques (Kimball-style dimensional modeling, Data Vault) make data neat and joinable. But models alone don’t enforce consistent definitions. Without a semantic layer, organizations drift into “multiple versions of the truth.”

Beyond Metrics: Metric Trees

Semantic layers can also encode metric trees: hierarchies explaining why a metric changed. Example: revenue = ACV × deals. If revenue drops, metric trees help trace whether ACV or deal count is responsible. This goes beyond simple dimension slicing and powers real root cause analysis.

Where AI Changes the Game

Maintaining semantic layers has always been their weak point. AI changes that:

* Dynamic extensions: AI can generate new metrics on demand.
* Governance by design: Instead of hallucinating answers, AI can admit “I don’t know” or propose a new definition.
* Invisible semantics: Users query in natural language, and AI maintains and optimizes the semantic layer behind the scenes.

Executives demanding “AI access to data” are accelerating this shift. Text-to-SQL alone fails without semantic context. With a semantic layer, AI can deliver governed, consistent answers instantly.

Standardization Might Not Matter

Will the industry settle on a single semantic standard? Maybe not, and that’s okay. Standards like Model Context Protocol (MCP) allow AI to translate across formats.
SQL remains the execution layer, while semantics bridge business logic. Cube, dbt, Malloy, or Databricks metric views can all coexist if AI smooths the edges.

When Do You Need One?

Two clear signals:

* Inconsistency: Teams struggle to agree on fundamental metrics such as revenue or churn.
* Speed: Stakeholders wait weeks for analyst queries that could be answered in seconds with semantic + AI.

If either pain point resonates, it’s time to consider a semantic layer.

Looking Ahead

David sees three big shifts coming soon:

* Iceberg as a universal storage format: true multi-engine querying across DuckDB, Databricks, and others.
* Invisible semantics: baked into tools, maintained by AI, no more “selling” semantic layers.
* AI-native access: semantic layers become the primary interface between humans, AI, and data.

Final Thoughts

Semantic layers aren’t new; they’ve quietly powered BI tools and lived in analysts’ heads for years. What’s new is the urgency: executives want AI to answer questions instantly, and that requires a consistent semantic foundation. As David Jayatillake reminds us, the journey is from BI lock-in to invisible semantics: semantic layers that are dynamic, governed, and maintained by AI. The question is no longer if your organization needs one, but when you’ll make the shift, and whether your semantic layer will keep pace with the AI-driven future of data.

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit www.dataengineeringweekly.com
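
Two ideas from the semantic-layer episode above lend themselves to a small illustration: the "knowledge graph plus a compiler" definition, and the metric-tree example (revenue = ACV × deals). The sketch below is a toy, not how Cube, dbt, or any other tool works. The `Metric` registry, the `orders` table, the `amount` column, and the function names are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """One node of a (hypothetical) knowledge graph: a named metric definition."""
    name: str
    expression: str  # SQL aggregate expression
    table: str       # table the expression is evaluated against


# Hypothetical registry; a real semantic layer would also model entities and joins.
METRICS = {"revenue": Metric("revenue", "SUM(amount)", "orders")}


def compile_query(metric_name, group_by=()):
    """The 'compiler' half: turn a metric request into a SQL string."""
    metric = METRICS[metric_name]
    dims = ", ".join(group_by)
    select = f"{dims}, " if dims else ""
    sql = f"SELECT {select}{metric.expression} AS {metric.name} FROM {metric.table}"
    if dims:
        sql += f" GROUP BY {dims}"
    return sql


@dataclass(frozen=True)
class Snapshot:
    """Inputs of the metric tree revenue = ACV x deals at one point in time."""
    acv: float
    deals: int

    @property
    def revenue(self):
        return self.acv * self.deals


def attribute_change(before, after):
    """Split the revenue change into an ACV effect, a deal-count effect,
    and an interaction term; the three parts sum to the total change."""
    d_acv = after.acv - before.acv
    d_deals = after.deals - before.deals
    return {
        "acv": d_acv * before.deals,
        "deals": before.acv * d_deals,
        "interaction": d_acv * d_deals,
    }
```

With this sketch, `compile_query("revenue", ["region"])` yields `SELECT region, SUM(amount) AS revenue FROM orders GROUP BY region`. If ACV stays at 10,000 while deals fall from 50 to 40, `attribute_change` assigns the whole 100,000 drop to deal count, which is the kind of root-cause answer a metric tree is meant to give.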

Data Engineering Weekly recently hosted Jacopo Tagliabue, CTO of Bauplan, for an insightful podcast exploring innovative solutions in data engineering. Jacopo shared valuable perspectives drawn from his entrepreneurial journey, his experience building multiple companies, and his deep understanding of data engineering challenges. This extensive conversation spanned the complexities of data engineering and showcased Bauplan’s unique approach to tackling industry pain points.

Entrepreneurial Journey and Problem Identification

Jacopo opened the discussion by highlighting the personal and professional experiences that led him to create Bauplan. Previously, he built a company specializing in Natural Language Processing (NLP) at a time when NLP was still maturing as a technology. After selling this initial venture, Jacopo immersed himself deeply in data engineering, navigating through complex infrastructures involving Apache Spark, Airflow, and Snowflake.

He recounted the profound frustration of managing complicated and monolithic data stacks that, despite their capabilities, came with significant operational overhead. Transitioning from Apache Spark to Snowflake provided some relief, yet it introduced new limitations, particularly concerning Python integration and vendor lock-in. Recognizing the industry-wide need for simplicity, Bauplan was conceptualized to offer engineers a straightforward and efficient alternative.

Bauplan’s Core Abstraction: Functions vs. Traditional ETL

At Bauplan’s core is the decision to use functions as the foundational building block. Jacopo explained how traditional ETL methodologies typically demand extensive management of infrastructure and impose high cognitive overhead on engineers. By contrast, functions offer a much simpler, modular approach.
They enable data engineers to focus purely on business logic without worrying about complex orchestration or infrastructure.

The Bauplan approach distinctly separates responsibilities: engineers handle code and business logic, while Bauplan’s platform takes charge of data management, caching, versioning, and infrastructure provisioning. Jacopo emphasized that this separation significantly enhances productivity and allows engineers to operate efficiently, creating modular and easily maintainable pipelines.

Data Versioning, Immutability, and Reproducibility

Jacopo firmly underscored the importance of immutability and reproducibility in data pipelines. He explained that data engineering has historically struggled to reproduce pipeline states precisely, which is especially critical during debugging and auditing. Bauplan directly addresses these challenges by automatically creating immutable snapshots of both the code and data state for every job executed. Each pipeline execution receives a unique job ID, guaranteeing precise reproducibility of the pipeline’s state at any given moment.

This method enables engineers to debug issues easily without impacting the production environment, thereby streamlining maintenance tasks and enhancing reliability. Jacopo highlighted this capability as central to achieving robust data governance and operational clarity.

Branching, Collaboration, and Conflict Resolution

Bauplan integrates Git-like branching capabilities tailored explicitly for data engineering workflows. Jacopo detailed how this capability allows engineers to experiment, collaborate, and innovate safely in isolated environments. Branching provides an environment where engineers can iterate without fear of disrupting ongoing operations or production pipelines.

Jacopo explained that Bauplan handles conflicts conservatively. If two engineers attempt to modify the same table or data concurrently, Bauplan requires explicit rebasing.
While this strict conflict-resolution policy may appear cautious, it maintains data integrity and prevents unexpected race conditions. Bauplan ensures that each branch is appropriately isolated, promoting clean, structured collaboration.

Apache Arrow and Efficient Data Shuffling

Efficiency in data shuffling, especially between pipeline functions, was another critical topic. Jacopo praised Apache Arrow’s role as the backbone of Bauplan’s data interchange strategy. Apache Arrow's zero-copy transfer capability significantly boosts data movement speed, removing traditional bottlenecks associated with serialization and data transfers.

Jacopo illustrated how Bauplan leverages Apache Arrow to facilitate rapid data exchanges between functions, dramatically outperforming traditional systems like Airflow. By eliminating the need for intermediate serialization, Bauplan achieves significant performance improvements and streamlines data processing, enabling rapid, efficient pipelines.

Vertical Scaling and System Performance

Finally, the conversation shifted to vertical scaling strategies employed by Bauplan. Unlike horizontally distributed systems like Apache Spark, Bauplan strategically focuses on vertical scaling, which simplifies infrastructure management and optimizes resource utilization. Jacopo explained that modern cloud infrastructures now offer large compute instances capable of handling substantial data volumes efficiently, negating the complexity typically associated with horizontally distributed systems.

He clarified Bauplan’s current operational range as optimally suited for pipeline data volumes typically between 10 GB and 100 GB. This range covers a vast majority of standard enterprise use cases, making Bauplan a highly suitable and effective solution for most organizations.
Jacopo stressed that although certain specialized scenarios still require distributed computing platforms, the majority of pipelines benefit immensely from Bauplan’s simplified, vertically scaled approach.

In summary, Jacopo Tagliabue offered a compelling vision of Bauplan’s mission to simplify and enhance data engineering through functional abstractions, immutable data versioning, and efficient, vertically scaled operations. Bauplan presents an innovative solution designed explicitly around the real-world challenges data engineers face, promising significant improvements in reliability, performance, and productivity in managing modern data pipelines.
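
The combination of functions as building blocks and a unique job ID tied to the code and data state, as described in this episode, can be illustrated in plain Python. This is a sketch of the general idea only and does not use or reproduce Bauplan's actual API. The function names, the in-memory row format, and the hashing scheme are assumptions invented for this example.

```python
import hashlib
import json
from typing import Callable

Rows = list[dict]


def clean_orders(rows: Rows) -> Rows:
    """Business logic only: keep rows with a positive amount."""
    return [r for r in rows if r.get("amount", 0) > 0]


def total_by_customer(rows: Rows) -> Rows:
    """Business logic only: sum amounts per customer."""
    totals: dict[str, float] = {}
    for r in rows:
        totals[r["customer"]] = totals.get(r["customer"], 0) + r["amount"]
    return [{"customer": c, "total": t} for c, t in sorted(totals.items())]


def job_id(steps: list[Callable[[Rows], Rows]], rows: Rows) -> str:
    """Hypothetical job ID: a hash over the steps' bytecode and the input data.

    Bytecode is a crude stand-in for 'the code state'; a real platform would
    snapshot source, dependencies, and table versions instead.
    """
    digest = hashlib.sha256()
    for step in steps:
        digest.update(step.__code__.co_code)
    digest.update(json.dumps(rows, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()[:12]


def run_pipeline(steps: list[Callable[[Rows], Rows]], rows: Rows):
    """Run the functions in order and return (job ID, output rows)."""
    jid = job_id(steps, rows)
    out = rows
    for step in steps:
        out = step(out)
    return jid, out
```

Running the same steps on the same input produces the same job ID, and changing either the data or the functions produces a different one. That is the property that makes a past run identifiable and reproducible in this toy setting.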

In our latest episode of Data Engineering Weekly, co-hosted by Aswin, we explored the practical realities of AI deployment and data readiness with our distinguished guest, Avinash Narasimha, AI Solutions Leader at Koch Industries. This discussion shed significant light on the maturity, challenges, and potential that generative AI and data preparedness present in contemporary enterprises.

Introducing Our Guest: Avinash Narasimha

Avinash Narasimha is a seasoned professional with over two decades of experience in data analytics, machine learning, and artificial intelligence. His focus at Koch Industries involves deploying and scaling various AI solutions, with particular emphasis on operational AI and generative AI. His insights stem from firsthand experience in developing robust AI frameworks that are actively deployed in real-world applications.

Generative AI in Production: Reality vs. Hype

One key question often encountered in the industry revolves around the maturity of generative AI in actual business scenarios. Addressing this concern directly, Avinash confirmed that generative AI has indeed crossed the pilot threshold and is actively deployed in several production scenarios at Koch Industries. Highlighting their early adoption strategy, Avinash explained that they have been on this journey for over two years, emphasizing an established continuous feedback loop as a critical component in maintaining effective generative AI operations.

Production Readiness and Deployment

Deployment strategies for AI, particularly for generative models and agents, have undergone significant evolution. Avinash described the systematic approach based on his experience:

* Beginning with rigorous experimentation
* Transitioning smoothly into scalable production environments
* Incorporating robust monitoring and feedback mechanisms

The result is a successful deployment of multiple generative AI solutions, each carefully managed and continuously improved through iterative processes.

The Centrality of Data Readiness

During our conversation, we explored the significance of data readiness, a pivotal factor that influences the success of AI deployment. Avinash described data readiness as a fundamental component that significantly impacts the timeline and effectiveness of integrating AI into production systems.

He emphasized the following:

- Data Quality: Consistent and high-quality data is crucial. Poor data quality frequently acts as a bottleneck, restricting the performance and reliability of AI models.
- Data Infrastructure: A robust data infrastructure is necessary to support the volume, velocity, and variety of data required by sophisticated AI models.
- Integration and Accessibility: The ease of integrating and accessing data within the organization significantly accelerates AI adoption and effectiveness.

Challenges in Data Readiness

Avinash openly discussed challenges that many enterprises face concerning data readiness, including fragmented data ecosystems, legacy systems, and inadequate data governance.
He acknowledged that while the journey toward optimal data readiness can be arduous, organizations that systematically address these challenges see substantial improvements in their AI outcomes.

Strategies for Overcoming Data Challenges

Avinash also offered actionable insights into overcoming common data-related obstacles:

- Building Strong Data Governance: A robust governance framework ensures that data remains accurate, secure, and available when needed, directly enhancing AI effectiveness.
- Leveraging Cloud Capabilities: He noted recent developments in cloud-based infrastructure as significant enablers, providing scalable and sophisticated tools for data management and model deployment.
- Iterative Improvement: Regular feedback loops and iterative refinement of data processes help gradually enhance data readiness and AI performance.

Future Outlook: Trends and Expectations

Looking ahead, Avinash predicted increased adoption of advanced generative AI tools and emphasized ongoing improvements in model interpretability and accountability. He expects enterprises will increasingly prioritize explainable AI, balancing performance with transparency to maintain trust among stakeholders.

Moreover, Avinash highlighted the anticipated evolution of data infrastructure to become more flexible and adaptive, catering specifically to the unique demands of generative AI applications.
He believes this evolution will significantly streamline the adoption of AI across industries.

Key Takeaways

- Generative AI is Ready for Production: Organizations, particularly those that have been proactive in their adoption, have successfully integrated generative AI into production, highlighting its maturity beyond experimental stages.
- Data Readiness is Crucial: Effective AI deployment is heavily dependent on the quality, accessibility, and governance of data within organizations.
- Continuous Improvement: Iterative feedback and continuous improvements in data readiness and AI deployment strategies significantly enhance performance and outcomes.

Closing Thoughts

Our discussion with Avinash Narasimha provided practical insights into the real-world implementation of generative AI and the critical role of data readiness. His experience at Koch Industries illustrates not only the feasibility but also the immense potential generative AI holds for enterprises willing to address data challenges and deploy AI thoughtfully and systematically.

Stay tuned for more insightful discussions on Data Engineering Weekly.

All rights reserved, ProtoGrowth Inc., India. I have provided links for informational purposes and do not suggest endorsement. All views expressed in this newsletter are my own and do not represent current, former, or future employers’ opinions.

The modern data stack constantly evolves, with new technologies promising to solve age-old problems like scalability, cost, and data silos. Apache Iceberg, an open table format, has recently generated significant buzz. But is it truly revolutionary, or is it destined to repeat the pitfalls of past solutions like Hadoop?

In a recent episode of the Data Engineering Weekly podcast, we delved into this question with Daniel Palma, Head of Marketing at Estuary and a seasoned data engineer with over a decade of experience. Danny authored a thought-provoking article comparing Iceberg to Hadoop, not on a purely technical level, but in terms of their hype cycles, implementation challenges, and the surrounding ecosystems. This blog post expands on that insightful conversation, offering a critical look at Iceberg's potential and the hurdles organizations face when adopting it.

Hadoop: A Brief History Lesson

For those unfamiliar with Hadoop's trajectory, it's crucial to understand the context. In the mid-2000s, Hadoop emerged as a groundbreaking solution for processing massive datasets. It promised to address key pain points:

* Scaling: Handling ever-increasing data volumes.
* Cost: Reducing storage and processing expenses.
* Speed: Accelerating data insights.
* Data Silos: Breaking down barriers between data sources.

Hadoop achieved this through distributed processing and storage, using a framework called MapReduce and the Hadoop Distributed File System (HDFS). However, while the promise was alluring, the reality proved complex. Many organizations struggled with Hadoop's operational overhead, leading to high failure rates (Gartner famously estimated that 80% of Hadoop projects failed). The complexity stemmed from managing distributed clusters, tuning configurations, and dealing with issues like the "small file problem."

Iceberg: The Modern Contender

Apache Iceberg enters the scene as a modern table format designed for massive analytic datasets.
Like Hadoop, it aims to tackle scalability, cost, speed, and data silos. However, Iceberg focuses specifically on the table format layer, offering features like:

* Schema Evolution: Adapting to changing data structures without rewriting tables.
* Time Travel: Querying data as it existed at a specific time.
* ACID Transactions: Ensuring data consistency and reliability.
* Partition Evolution: Changing data partitioning without breaking existing queries.

Iceberg's design addresses Hadoop's shortcomings, particularly data consistency and schema evolution. But, as Danny emphasizes, an open table format alone isn't enough.

The Ecosystem Challenge: Beyond the Table Format

Iceberg, by itself, is not a complete solution. It requires a surrounding ecosystem to function effectively. This ecosystem includes:

* Catalogs: Services that manage metadata about Iceberg tables (e.g., table schemas, partitions, and file locations).
* Compute Engines: Tools that query and process data stored in Iceberg tables (e.g., Trino, Spark, Snowflake, DuckDB).
* Maintenance Processes: Operations that optimize Iceberg tables, such as compacting small files and managing metadata.

The ecosystem is where the comparison to Hadoop becomes particularly relevant. Hadoop also had a vast ecosystem (Hive, Pig, HBase, etc.), and managing this ecosystem was a significant source of complexity. Iceberg faces a similar challenge.

Operational Complexity: The Elephant in the Room

Danny highlights operational complexity as a major hurdle for Iceberg adoption. While Iceberg itself simplifies some aspects of data management, the surrounding ecosystem introduces new challenges:

* Small File Problem (Revisited): Like Hadoop, Iceberg can suffer from small file problems. Data ingestion tools often create numerous small files, which can degrade performance during query execution. Iceberg addresses this through table maintenance, specifically compaction (merging small files into larger ones).
However, many data ingestion tools don't natively support compaction, requiring manual intervention or dedicated Spark clusters.
* Metadata Overhead: Iceberg relies heavily on metadata to track table changes and enable features like time travel. If not handled correctly, managing this metadata can become a bottleneck. Organizations need automated processes for metadata cleanup and compaction.
* Catalog Wars: The catalog choice is critical, and the market is fragmented. Major data warehouse providers (Snowflake, Databricks) have released their own flavors of REST catalogs, leading to compatibility issues and potential vendor lock-in. The dream of a truly interoperable catalog layer, where you can seamlessly switch between providers, remains elusive.
* Infrastructure Management: Setting up and maintaining an Iceberg-based data lakehouse requires expertise in infrastructure-as-code, monitoring, observability, and data governance. The maintenance demands a level of operational maturity that many organizations lack.

Key Considerations for Iceberg Adoption

If your organization is considering Iceberg, Danny stresses the importance of careful planning and evaluation:

* Define Your Use Case: Clearly articulate your specific needs. Are you prioritizing performance, cost, or both? What are your data governance and security requirements? Your answers will influence your choices for storage, computing, and cataloging.
* Evaluate Compatibility: Ensure your existing infrastructure and tools (query engines, data ingestion pipelines) are compatible with Iceberg and your chosen catalog.
* Consider Cloud Vendor Lock-in: Be mindful of potential lock-in, especially with catalogs. While Iceberg is open, cloud providers offer tightly coupled implementations specific to their ecosystems.
* Build vs. Buy: Decide whether you have the resources to build and maintain your Iceberg infrastructure or if a managed service is better.
Many organizations prefer to outsource table maintenance and catalog management to avoid operational overhead.
* Talent and Expertise: Do you have the in-house expertise to manage Spark clusters (for compaction), configure query engines, and manage metadata? If not, consider partnering with consultants or investing in training.
* Start the Data Governance Process: Don't wait until the last minute to build the data governance framework. You must create the framework and processes before jumping into adoption.

The Catalog Conundrum: Beyond Structured Data

The role of the catalog is evolving. Initially, catalogs focused on managing metadata for structured data in Iceberg tables. However, the vision is expanding to encompass unstructured data (images, videos, audio) and AI models. This "catalog of catalogs" or "uber catalog" approach aims to provide a unified interface for accessing all data types.

The benefits of a unified catalog are clear: simplified data access, consistent semantics, and easier integration across different systems. However, building such a catalog is complex, and the industry is still grappling with the best approach.

S3 Tables: A New Player?

Amazon's recent announcement of S3 Tables raised eyebrows. These tables combine object storage with a table format, offering a highly managed solution. However, they are currently limited in terms of interoperability. They don't support external catalogs, making it difficult to integrate them into existing Iceberg-based data stacks. The jury is still out on whether S3 Tables will become a significant player in the open table format landscape.

Query Engine Considerations

Choosing the right query engine is crucial for performance and cost optimization. While some engines like Snowflake boast excellent performance with Iceberg tables (with minimal overhead compared to native tables), others may lag.
Factors to consider include:

* Performance: Benchmark different engines with your specific workloads.
* Cost: Evaluate the cost of running queries on different engines.
* Scalability: Ensure the engine can handle your anticipated data volumes and query complexity.
* Compatibility: Verify compatibility with your chosen catalog and storage layer.
* Use Case: Different engines excel at different tasks. Trino is popular for ad-hoc queries, while DuckDB is gaining traction for smaller-scale analytics.

Is Iceberg Worth the Pain?

The ultimate question is whether the benefits of Iceberg outweigh the complexities. For many organizations, especially those with limited engineering resources, fully managed solutions like Snowflake or Redshift might be a more practical starting point. These platforms handle the operational overhead, allowing teams to focus on data analysis rather than infrastructure management.

However, Iceberg can be a compelling option for organizations with specific requirements (e.g., strict data residency rules, a need for a completely open-source stack, or a desire to avoid vendor lock-in). The key is to approach adoption strategically, with a clear understanding of the challenges and a plan to address them.

The Future of Table Formats: Consolidation and Abstraction

Danny predicts consolidation in the table format space. Managed service providers will likely bundle table maintenance and catalog management with their Iceberg offerings, simplifying the developer experience. The next step will be managing the compute layer, providing a fully end-to-end data lakehouse solution.

Initiatives like Apache XTable aim to provide a standardized interface on top of different table formats (Iceberg, Hudi, Delta Lake). However, whether such abstraction layers will gain widespread adoption remains to be seen.
Some argue that standardizing on a single table format is a simpler approach.

Iceberg's Role in Event-Driven Architectures and Machine Learning

Beyond traditional analytics, Iceberg has the potential to contribute significantly to event-driven architectures and machine learning. Its features, such as time travel, ACID transactions, and data versioning, make it a suitable backend for streaming systems and change data capture (CDC) pipelines.

Unsolved Challenges

Several challe

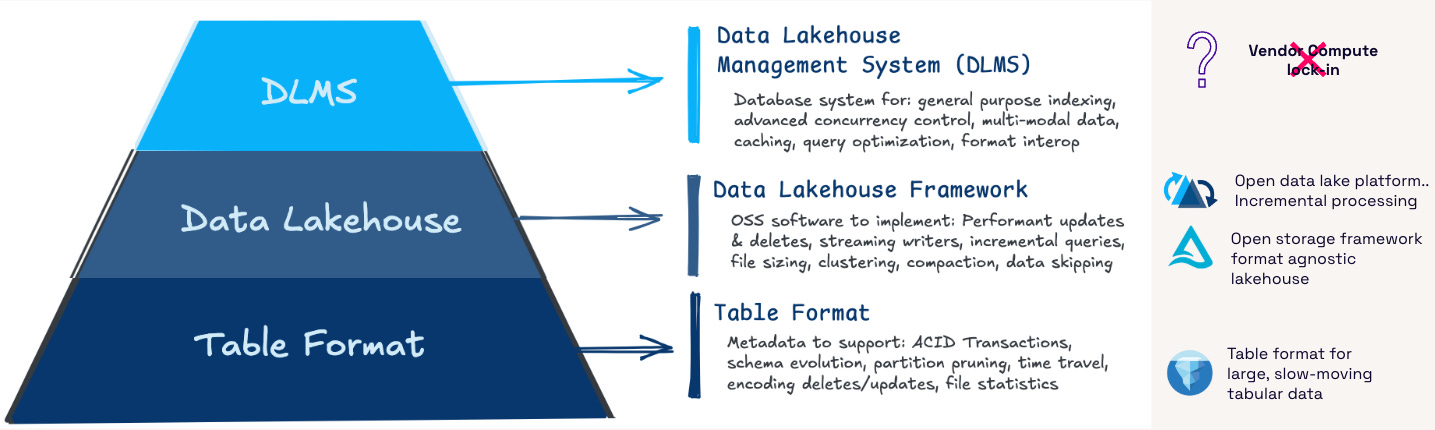

Lakehouse architecture represents a major evolution in data engineering. It combines data lakes' flexibility with data warehouses' structured reliability, providing a unified platform for diverse data workloads ranging from traditional business intelligence to advanced analytics and machine learning. Roy Hassan, a product leader at Upsolver (now part of Qlik), offers a comprehensive reality check on Lakehouse implementations, shedding light on their maturity, challenges, and future directions.

Defining Lakehouse Architecture

A Lakehouse is not a specific product, tool, or service but an architectural framework. This distinction is critical because it allows organizations to tailor implementations to their needs and technological environments. For instance, Databricks users inherently adopt a Lakehouse approach by storing data in object storage, managing it with the Delta Lake format, and analyzing it directly on the data lake.

Assessing the Maturity of Lakehouse Implementations

The adoption and maturity of Lakehouse implementations vary across cloud platforms and ecosystems:

• Databricks: Many organizations have built mature Lakehouse implementations using Databricks, leveraging its robust capabilities to handle diverse workloads.
• Amazon Web Services (AWS): While AWS provides services like Athena, Glue, Redshift, and EMR to access and process data in object storage, many users still rely on traditional data lakes built on Parquet files. However, a growing number are adopting Lakehouse architectures with open table formats such as Iceberg, which has gained traction within the AWS ecosystem.
• Azure Fabric: Built on the Delta Lake format, Azure Fabric offers a vertically integrated Lakehouse experience, seamlessly combining storage, cataloging, and computing resources.
• Snowflake: Organizations increasingly use Snowflake in a Lakehouse-oriented manner, storing data in S3 and managing it with Iceberg.
While new workloads favor Iceberg, most existing data remains within Snowflake’s internal storage.
• Google BigQuery: The Lakehouse ecosystem in Google Cloud is still evolving. Many users prefer to keep their workloads within BigQuery due to its simplicity and integrated storage.

Despite these differences in maturity, the industry-wide adoption of Lakehouse architectures continues to expand, and their implementation is becoming increasingly sophisticated.

Navigating Open Table Formats: Iceberg, Delta Lake, and Hudi

Discussions about open table formats often spark debate, but each format offers unique strengths and is backed by a dedicated engineering community:

• Iceberg and Delta Lake share many similarities, with ongoing discussions about potential standardization.
• Hudi specializes in streaming use cases and optimizing real-time data ingestion and processing. [Listen to The Future of Data Lakehouses: A Fireside Chat with Vinoth Chandar - Founder CEO Onehouse & PMC Chair of Apache Hudi]

Most modern query engines support Delta Lake and Iceberg, reinforcing their prominence in the Lakehouse ecosystem. While Hudi and Paimon have smaller adoption, broader query engine support for all major formats is expected over time.

Examining Apache XTable’s Role

Apache XTable aims to improve interoperability between different table formats. While the concept is practical, its long-term relevance remains uncertain. As the industry consolidates around fewer preferred formats, converting between them may introduce unnecessary complexity, latency, and potential points of failure, especially at scale.

Challenges and Criticisms of Lakehouse Architecture

One common criticism of Lakehouse architecture is that it offers a lower level of abstraction than traditional databases. Developers often need to understand the underlying file system, whereas databases provide a more seamless experience by abstracting storage management.
The challenge is to balance Lakehouse's flexibility and traditional databases' ease of use.

Best Practices for Lakehouse Adoption

A successful Lakehouse implementation starts with a well-defined strategy that aligns with business objectives. Organizations should:

• Establish a clear vision and end goals.
• Design a scalable and efficient architecture from the outset.
• Select the right open table format based on workload requirements.

The Significance of Shared Storage

Shared storage is a foundational principle of Lakehouse architecture. Organizations can analyze data using multiple tools and platforms by storing it in a single location and transforming it once. This approach reduces costs, simplifies data management, and enhances agility by allowing teams to choose the most suitable tool for each task.

Catalogs: Essential Components of a Lakehouse

Catalogs are crucial in Lakehouse implementations as metadata repositories describing data assets. These catalogs fall into two categories:

• Technical catalogs, which focus on data management and organization.
• Business catalogs, which provide a business-friendly view of the data landscape.

A growing trend in the industry is the convergence of technical and business catalogs to offer a unified view of data across the organization. Innovations like the Iceberg REST catalog specification have advanced catalog management by enabling a decoupled and standardized approach.

The Future of Catalogs: AI and Machine Learning Integration

In the coming years, AI and machine learning will drive the evolution of data catalogs. Automated data discovery, governance, and optimization will become more prevalent, allowing organizations to unlock new AI-powered insights and streamline data management processes.

The Changing Role of Data Engineers in the AI Era

The rise of AI is transforming the role of data engineers. Traditional responsibilities like building data pipelines are shifting towards platform engineering and enabling AI-driven data capabilities.
Moving forward, data engineers will focus on:• Designing and maintaining AI-ready data infrastructure.• Developing tools that empower software engineers to leverage data more effectively.Final ThoughtsLakehouse architecture is rapidly evolving, with growing adoption across cloud ecosystems and advancements in open table formats, cataloging, and AI integration. While challenges remain—particularly around abstraction and complexity—the benefits of flexibility, cost efficiency, and scalability make it a compelling approach for modern data workloads.Organizations investing in a Lakehouse strategy should prioritize best practices, stay informed about emerging trends, and build architectures that support current and future data needs.All rights reserved ProtoGrowth Inc, India. I have provided links for informational purposes and do not suggest endorsement. All views expressed in this newsletter are my own and do not represent current, former, or future employers’ opinions. This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit www.dataengineeringweekly.com

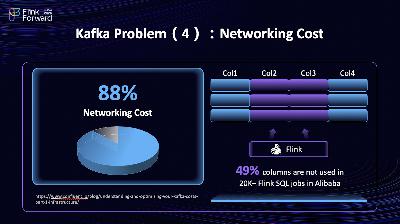

Fluss is a compelling new project in the realm of real-time data processing. I spoke with Jark Wu, who leads the Fluss and Flink SQL team at Alibaba Cloud, to understand its origins and potential. Jark is a key figure in the Apache Flink community, known for his work in building Flink SQL from the ground up and creating Flink CDC and Fluss.

You can read the Q&A version of the conversation here, and don’t forget to listen to the podcast.

What is Fluss and its use cases?

Fluss is a streaming storage specifically designed for real-time analytics. It addresses many of Kafka's challenges in analytical infrastructure. The combination of Kafka and Flink is not a perfect fit for real-time analytics; the integration of Kafka and Lakehouse is very shallow. Fluss is an analytical Kafka that builds on top of Lakehouse and integrates seamlessly with Flink to reduce costs, achieve better performance, and unlock new use cases for real-time analytics.

How do you compare Fluss with Apache Kafka?

Fluss and Kafka differ fundamentally in design principles. Kafka is designed for streaming events, but Fluss is designed for streaming analytics.

Architecture Difference

The first difference is the Data Model. Kafka is designed to be a black box to collect all kinds of data, so Kafka doesn't have built-in schema and schema enforcement; this is the biggest problem when integrating with schematized systems like Lakehouse. In contrast, Fluss adopts a Lakehouse-native design with structured tables, explicit schemas, and support for all kinds of data types; it directly mirrors the Lakehouse paradigm. Instead of Kafka's topics, Fluss organizes data into database tables with partitions and buckets. This Lakehouse-first approach eliminates the friction of using Lakehouse as a deep storage for Fluss.

The second difference is the Storage Model.
Fluss introduces Apache Arrow as its columnar log storage model for efficient analytical queries, whereas Kafka persists data as unstructured, row-oriented logs for efficient sequential scans. Analytics requires strong data-skipping ability in storage, so sequential scanning is not common; column pruning and filter pushdown are basic functionalities of analytical storage. Among the 20,000 Flink SQL jobs at Alibaba, only 49% of columns of Kafka data are read on average.

The third difference is Data Mutability. Fluss natively supports real-time updates (e.g., row-level modifications) through LSM tree mechanisms and provides read-your-writes consistency with millisecond latency and high throughput. Kafka primarily handles append-only streams, and a Kafka compacted topic provides only weak update semantics: compaction keeps at least one value per key, not only the latest.

The fourth difference is the Lakehouse Architecture. Fluss embraces the Lakehouse Architecture. Fluss uses Lakehouse as a tiered storage, and data is converted and tiered into data lakes periodically; Fluss only retains a small portion of recent data. So you only need to store one copy of data for your streaming and Lakehouse. But the true power of this architecture is that it provides a union view of streaming and Lakehouse data: whether it is a Kafka client or a query engine on Lakehouse, each can access the streaming data and the Lakehouse data together as a single table. It brings powerful analytics to streaming data users.

On the other hand, it provides second-level data insights for Lakehouse users. Most importantly, you only need to store one copy of data for your streaming and Lakehouse, which reduces costs.
In contrast, Kafka's tiered storage only stores Kafka log segments in remote storage; it is only a storage cost optimization for Kafka and has nothing to do with Lakehouse.

The Lakehouse storage, optimized for storing long-term data with minute-level latencies, serves as the historical data layer for the streaming storage. Conversely, the streaming storage, optimized for storing short-term data with millisecond-level latencies, serves as the real-time data layer for the Lakehouse storage. The data is shared and is exposed as a single table. A streaming query on the table first reads the Lakehouse storage as historical data for efficient catch-up reads and then seamlessly transitions to the streaming storage for real-time data, ensuring no duplicate data is read. For batch queries on the table, streaming storage supplements real-time data for Lakehouse storage, enabling second-level freshness for Lakehouse analytics. This capability, termed Union Read, allows both layers to work in tandem for highly efficient and accurate data access.

Confluent Tableflow can bridge Kafka and Iceberg data, but that is just a data movement that data integration tools like Fivetran or Airbyte can also achieve. Tableflow is a Lambda Architecture that uses two separate systems (streaming and batch), leading to challenges like data inconsistency, dual storage costs, and complex governance. On the other hand, Fluss is a Kappa Architecture; it stores one copy of data and presents it as a stream or a table, depending on the use case. Benefits:

* Cost and Time Efficiency: no need to move data between systems.
* Data Consistency: reduces the occurrence of similar-yet-different datasets, leading to fewer data pipelines and simpler data management.
* Analytics on Stream
* Freshness on Lakehouse

When to use Kafka vs. Fluss

Kafka is a general-purpose distributed event streaming platform optimized for high-throughput messaging and event sourcing.
It excels in event-driven architectures and data pipelines. Fluss is tailored for real-time analytics. It works with stream processing engines like Flink and Lakehouse formats like Iceberg and Paimon.

How do you compare Fluss with OLAP Engines like Apache Pinot?

Architecture: Pinot is an OLAP database that supports storing offline and real-time data and supports low-latency analytical queries. In contrast, Fluss is storage for real-time streaming data and doesn't provide OLAP abilities; it relies on external query engines to process and analyze data, such as Flink and StarRocks/Spark/Trino (on the roadmap). Therefore, Pinot has additional query servers for OLAP serving, and Fluss has fewer components.

Pinot is a monolithic architecture that provides complete capabilities from storage to computation. Fluss is used in a composable architecture that can plug multiple engines into different scenarios. The rise of Iceberg and Lakehouse has proven the power of composable architecture. Users use Parquet as the file format and Iceberg as the table format, Fluss on top of Iceberg as the real-time data layer, Flink for stream processing, and StarRocks/Trino for OLAP queries. In this architecture, Fluss can augment the existing Lakehouse with millisecond-level fresh data insights.

API: Fluss exposes an RPC protocol, as Kafka does, with an SDK library, while query engines like Flink provide the SQL API. Pinot provides SQL for OLAP queries and BI tool integrations.

Streaming reads and writes: Fluss provides comprehensive streaming reads and writes like Kafka, but Pinot doesn't natively support them. Pinot connects to external streaming systems to ingest data using a pull-based mechanism and doesn't support a push-based mechanism.

When to use Fluss vs Apache Pinot?

If you want to build streaming analytics pipelines, use Fluss (usually together with Flink). If you want to build OLAP systems for low-latency complex queries, use Pinot.
If you want to augment your Lakehouse with streaming data, use Fluss.

How is Fluss integrated with Apache Flink?

Fluss focuses on storing streaming data and does not offer stream processing capabilities. On the other hand, Flink is the de facto standard for stream processing. Fluss aims to be the best storage for Flink and real-time analytics. The vision behind the integration is to provide users with a seamless streaming warehouse or streaming database experience. This requires seamless integration and in-depth optimization from storage to computation. For instance, Fluss already supports all of Flink's connector interfaces, including catalog, source, sink, lookup, and pushdown interfaces.

In contrast, Kafka can only implement the source and sink interfaces. Our team is the community's core contributor to Flink SQL; we have the most committers and PMC members. We are committed to advancing the deep integration and optimization of Flink SQL and Fluss.

Can you elaborate on Fluss's internal architecture?

A Fluss cluster consists of two main processes: the CoordinatorServer and the TabletServer. The CoordinatorServer is the central control and management component. It maintains metadata, manages tablet allocation, lists nodes, and handles permissions. The TabletServer stores data and provides I/O services directly to users. The Fluss architecture is similar to the Kafka broker and uses the same durability and leader-based replication mechanism.

Consistency: A table creation request goes to the CoordinatorServer, which creates the metadata and assigns replicas to TabletServers (three replicas by default), one of which is the leader. The replica leader writes the incoming logs, and replica followers fetch logs from the replica leader.
Once all replicas have replicated the log, the write is acknowledged as successful.

Fault Tolerance: If a TabletServer fails, the CoordinatorServer assigns a new leader from the replica list, and that replica starts accepting new read/write requests. Once the failed TabletServer comes back, it catches up with the logs from the new leader.

Scalability: Fluss can scale up linearly by adding TabletServers.

How did Fluss implement the columnar storage?

Let’s start with why we need columnar storage for streaming data. Fluss is designed for real-time analytics. In analytical queries, it's common that only a portion of the columns are read, and a filter condition can prune a significant amount of data. This applies to streaming analytics
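
The point above about reading only a portion of the columns can be made concrete with a small sketch. This is not Fluss's implementation or its Arrow-based format; plain Python lists stand in for column vectors, and the table, the column names, and the "values touched" counter are all hypothetical, chosen only to show why a columnar layout reads less data for a query that needs two of four columns.

```python
# Hypothetical sample data: three records with four columns each.
ROWS = [
    {"order_id": 1, "region": "EU", "amount": 30, "note": "a" * 100},
    {"order_id": 2, "region": "US", "amount": 50, "note": "b" * 100},
    {"order_id": 3, "region": "EU", "amount": 20, "note": "c" * 100},
]


def to_columnar(rows):
    """Pivot row-oriented records into one list per column."""
    return {name: [row[name] for row in rows] for name in rows[0]}


def sum_amount_row_oriented(rows, region):
    """Row layout: each full record is loaded, including unused columns."""
    values_touched = 0
    total = 0
    for row in rows:
        values_touched += len(row)  # all four fields come along with the record
        if row["region"] == region:
            total += row["amount"]
    return total, values_touched


def sum_amount_columnar(columns, region):
    """Columnar layout: only the two referenced columns are read."""
    regions = columns["region"]
    amounts = columns["amount"]
    values_touched = len(regions) + len(amounts)  # other columns are skipped
    total = sum(a for r, a in zip(regions, amounts) if r == region)
    return total, values_touched


COLUMNS = to_columnar(ROWS)
row_result = sum_amount_row_oriented(ROWS, "EU")
col_result = sum_amount_columnar(COLUMNS, "EU")
print("row-oriented:", row_result)
print("columnar:", col_result)
```

Both functions return the same total, but the columnar version touches half as many values in this toy table. With wide tables and selective filters the gap grows, which is the motivation the interview gives for column pruning and filter pushdown in streaming storage.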

Exploring the Evolution of Lakehouse Technology: A Conversation with Vinoth Chandar, Onehouse CEO

In this episode, Ananth, author of Data Engineering Weekly, and Vinoth Chandar, CEO of Onehouse, discuss the latest developments in the Lakehouse technology space, particularly focusing on Apache Hudi, Iceberg, and Delta Lake. They discuss the intricacies of building high-scale data ecosystems, the impact of table format standardization, and technical advances in incremental processing and indexing. The conversation delves into the role of open source in shaping the future of data engineering and addresses community questions about integrating various databases and improving operational efficiency.

00:00 Introduction and New Year Greetings
01:19 Introduction to Apache Hudi and Its Impact
02:22 Challenges and Innovations in Data Engineering
04:16 Technical Deep Dive: Hudi's Evolution and Features
05:57 Comparing Hudi with Other Data Formats
13:22 Hudi 1.0: New Features and Enhancements
20:37 Industry Perception and the Future of Data Formats
24:29 Technical Differentiators and Project Longevity
26:05 Open Standards and Vendor Games
26:41 Standardization and Data Platforms
28:43 Competition and Collaboration in Data Formats
33:38 Future of Open Source and Data Community
36:14 Technical Questions from the Audience
47:26 Closing Remarks and Future Outlook

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit www.dataengineeringweekly.com

Agents of Change: Navigating 2025 with AI and Data Innovation

In this episode of DEW, the hosts and guests discuss their predictions for 2025, focusing on the rise and impact of agentic AI. The conversation covers three main categories:

1. The role of agentic AI
2. The future workforce dynamic involving humans and AI agents
3. Innovations in data platforms heading into 2025

Highlights include insights from Ashwin and our special guest, Rajesh, on building robust agent systems, strategies for data engineers and AI engineers to remain relevant, data quality and observability, and the evolving landscape of Lakehouse architectures.

The discussion also covers the challenges of integrating multi-agent systems and the economic implications of AI sovereignty and data privacy.

00:00 Introduction and Predictions for 2025
01:49 Exploring Agentic AI
04:44 The Evolution of AI Models
16:36 Enterprise Data and AI Integration
25:06 Managing AI Agents
36:37 Opportunities in AI and Agent Development
38:02 The Evolving Role of AI and Data Engineers
38:31 Managing AI Agents and Data Pipelines
39:05 The Future of Data Scientists in AI
40:03 Multi-Agent Systems and Interoperability
44:09 Economic Viability of Multi-Agent Systems
47:06 Data Platforms and Lakehouse Implementations
53:14 Data Quality, Observability, and Governance
01:02:20 The Rise of Multi-Cloud and Multi-Engine Systems
01:06:21 Final Thoughts and Future Outlook

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit www.dataengineeringweekly.com

Welcome to another insightful edition of Data Engineering Weekly. As we approach the end of 2023, it's an opportune time to reflect on the key trends and developments that have shaped the field of data engineering this year. In this article, we'll summarize the crucial points from a recent podcast featuring Ananth and Ashwin, two prominent voices in the data engineering community.

Understanding the Maturity Model in Data Engineering

A significant part of our discussion revolved around the maturity model in data engineering. Organizations must recognize their current position in the data maturity spectrum to make informed decisions about adopting new technologies. This approach ensures that adopting new tools and practices aligns with the organization's readiness and specific needs.

The Rising Impact of AI and Large Language Models

2023 witnessed a substantial impact of AI and large language models in data engineering. These technologies are increasingly automating processes like ETL, improving data quality management, and evolving the landscape of data tools. Integrating AI into data workflows is not just a trend but a paradigm shift, making data processes more efficient and intelligent.

Lake House Architectures: The New Frontier

Lakehouse architectures have been at the forefront of data engineering discussions this year. The key focus has been interoperability among different data lake formats and the seamless integration of structured and unstructured data. This evolution marks a significant step towards more flexible and powerful data management systems.

The Modern Data Stack: A Critical Evaluation

The modern data stack (MDS) has been a hot topic, with debates around its sustainability and effectiveness. While MDS has driven hyper-specialization in product categories, challenges in integration and overlapping tool categories have raised questions about its long-term viability. The future of MDS remains a subject of keen interest as we move into 2024.

Embracing Cost Optimization

Cost optimization has emerged as a priority in data engineering projects. With the shift to cloud services, managing costs effectively while maintaining performance has become a critical concern. This trend underscores the need for efficient architectures that balance performance with cost-effectiveness.

Streaming Architectures and the Rise of Apache Flink

Streaming architectures have gained significant traction, with Apache Flink leading the way. Its growing adoption highlights the industry's shift towards real-time data processing and analytics. The support and innovation around Apache Flink suggest a continued focus on streaming architectures in the coming year.

Looking Ahead to 2024

As we look towards 2024, there's a sense of excitement about the potential changes in fundamental layers like S3 Express and the broader impact of large language models. The anticipation is for more intelligent data platforms that effectively combine AI capabilities with human expertise, driving innovation and efficiency in data engineering.

In conclusion, 2023 has been a year of significant developments and shifts in data engineering. As we move into 2024, we will likely focus on refining these trends and exploring new frontiers in AI, lake house architectures, and streaming technologies. Stay tuned for more updates and insights in the next editions of Data Engineering Weekly. Happy holidays, and here's to a groundbreaking 2024 in data engineering!

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit www.dataengineeringweekly.com

Welcome to another episode of Data Engineering Weekly. Aswin and I select 3 to 4 articles from each edition of Data Engineering Weekly and discuss them from the author’s and our perspectives.

On DEW #133, we selected the following articles:

lakeFS: How to Implement Write-Audit-Publish (WAP)

I wrote extensively about the WAP pattern in my latest article, An Engineering Guide to Data Quality - A Data Contract Perspective. Super excited to see a complete guide on implementing the WAP pattern in Iceberg, Hudi, and of course, with lakeFS.

https://lakefs.io/blog/how-to-implement-write-audit-publish/

Jatin Solanki: Vector Database - Concepts and examples

Staying with vector search, a new class of vector databases is emerging in the market to improve the semantic search experience. The author writes an excellent introduction to vector databases and their applications.

https://blog.devgenius.io/vector-database-concepts-and-examples-f73d7e683d3e

Policy Genius: Data Warehouse Testing Strategies for Better Data Quality

Data Testing and Data Observability are widely discussed topics in Data Engineering Weekly. However, both techniques test only once the transformation task is completed. Can we test SQL business logic during the development phase itself? Perhaps unit test the pipeline?

The author writes an exciting article about adopting unit testing in the data pipeline by producing sample tables during development. We will see more tools around unit test frameworks for data pipelines soon. I don’t think testing data quality on all the PRs against the production database is a cost-effective solution. We can do better than that, tbh.

https://medium.com/policygenius-stories/data-warehouse-testing-strategies-for-better-data-quality-d5514f6a0dc9

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit www.dataengineeringweekly.com
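
The idea raised in the data warehouse testing item above, unit testing SQL business logic against small sample tables during development, can be sketched as follows. This is a minimal illustration and not the approach from the linked article: it uses Python's built-in sqlite3 as a stand-in for a warehouse, and the `orders` table, the revenue query, and the sample rows are all hypothetical.

```python
import sqlite3

# Hypothetical business logic under test: revenue per customer, paid orders only.
REVENUE_SQL = """
SELECT customer_id, SUM(amount) AS revenue
FROM orders
WHERE status = 'paid'
GROUP BY customer_id
ORDER BY customer_id
"""


def run_on_sample(sql, sample_rows):
    """Run a query against an in-memory table seeded with sample rows."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE orders (customer_id INTEGER, amount INTEGER, status TEXT)"
        )
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", sample_rows)
        return conn.execute(sql).fetchall()
    finally:
        conn.close()


# A tiny sample table that exercises the edge case we care about:
# refunded orders must not count towards revenue.
SAMPLE_ORDERS = [
    (1, 10, "paid"),
    (1, 5, "refunded"),
    (2, 7, "paid"),
    (2, 3, "paid"),
]


def test_revenue_ignores_unpaid_orders():
    result = run_on_sample(REVENUE_SQL, SAMPLE_ORDERS)
    assert result == [(1, 10), (2, 10)]


test_revenue_ignores_unpaid_orders()
print("revenue query passed on sample data")
```

Because the sample table lives in memory, a check like this can run on every pull request without touching the production database. SQL dialect differences between SQLite and a real warehouse are a known limitation of this stand-in.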

Welcome to another episode of Data Engineering Weekly. Aswin and I select 3 to 4 articles from each edition of Data Engineering Weekly and discuss them from the author’s and our perspectives.

On DEW #132, we selected the following articles:

Cowboy Ventures: The New Generative AI Infra Stack

Generative AI has taken the tech industry by storm. In Q1 2023, a whopping $1.7B was invested into gen AI startups. Cowboy Ventures unbundles the various categories of the Generative AI infra stack here.

https://medium.com/cowboy-ventures/the-new-infra-stack-for-generative-ai-9db8f294dc3f

Coinbase: Databricks cost management at Coinbase

Effective cost management in data engineering is crucial as it maximizes the value gained from data insights while minimizing expenses. It ensures sustainable and scalable data operations, fostering a balanced business growth path in the data-driven era. Coinbase writes a case study about cost management for Databricks and how they use the open-source Overwatch tool to manage Databricks costs.

https://www.coinbase.com/blog/databricks-cost-management-at-coinbase

Walmart: Exploring an Entity Resolution Framework Across Various Use Cases

Entity resolution, a crucial process that identifies and links records representing the same entity across various data sources, is indispensable for generating powerful insights about relationships and identities. This process, often leveraging fuzzy matching techniques, not only enhances data quality but also facilitates nuanced decision-making by effectively managing relationships and tracking potential matches among data records. Walmart writes about the pros and cons of approaching fuzzy matching with rule-based and ML-based matching.

https://medium.com/walmartglobaltech/exploring-an-entity-resolution-framework-across-various-use-cases-cb172632e4ae

Matt Palmer: What's the hype behind DuckDB?

So, DuckDB: is it hype, or does it have the real potential to bring architectural changes to the data warehouse? The author explains how DuckDB works and the potential impact of DuckDB in Data Engineering.

https://mattpalmer.io/posts/whats-the-hype-duckdb/

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit www.dataengineeringweekly.com

Welcome to another episode of Data Engineering Weekly. Aswin and I select 3 to 4 articles from each edition of Data Engineering Weekly and discuss them from the author’s and our perspectives.

On DEW #131, we selected the following articles:

Ramon Marrero: DBT Model Contracts - Importance and Pitfalls

dbt introduced model contracts with the 1.5 release. There were a few critiques of the dbt model contract implementation, such as The False Promise of dbt Contracts. I found the argument made in The False Promise of dbt Contracts surprising, especially the comment below.

"As a model owner, if I change the columns or types in the SQL, it's usually intentional."

My immediate reaction was, hmm, not really.

However, as with any initial system iteration, the dbt model contract implementation has pros and cons. I’m sure it will evolve as the adoption increases. The author did an amazing job writing a balanced view of dbt model contracts.

https://medium.com/geekculture/dbt-model-contracts-importance-and-pitfalls-20b113358ad7

Instacart: How Instacart Ads Modularized Data Pipelines With Lakehouse Architecture and Spark

Instacart writes about its journey of building its ads measurement platform. A couple of things stand out for me in the blog.

* The Event store is moving from S3/Parquet storage to DeltaLake storage, a sign of LakeHouse format adoption across the board.
* Instacart's adoption of the Databricks ecosystem along with Snowflake.
* The move to rewrite SQL into a composable Spark SQL pipeline for better readability and testing.

https://tech.instacart.com/how-instacart-ads-modularized-data-pipelines-with-lakehouse-architecture-and-spark-e9863e28488d

Timo Dechau: The extensive guide for Server-Side Tracking

The blog is an excellent overview of server-side event tracking. The author highlights how event tracking is always closer to the UI flow than to the business flow, and everything that can go wrong with frontend event tracking. A must-read article if you’re passionate about event tracking like me.

Credit Saison: Using Jira to Automate Updations and Additions of Glue Tables

This Schema change could’ve been a JIRA ticket!!!

I found the article an excellent example of workflow automation on top of the familiar ticketing system, JIRA. The blog narrates the challenges with Glue Crawler and how selectively applying database change management through JIRA helped overcome the technical debt of running a custom crawler for 6+ hours.

https://medium.com/credit-saison-india/using-jira-to-automate-updations-and-additions-of-glue-tables-58d39adf9940

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit www.dataengineeringweekly.com

Welcome to another episode of Data Engineering Weekly. Aswin and I select 3 to 4 articles from each edition of Data Engineering Weekly and discuss them from the author’s and our perspectives.

On DEW #129, we selected the following articles:

DoorDash identifies Five big areas for using Generative AI

Generative AI took the industry by storm, and every company is trying to figure out what it means to them. DoorDash writes about its discovery of Generative AI and its application to boost its business.

* The assistance of customers in completing tasks
* Better tailored and interactive discovery [Recommendation]
* Generation of personalized content and merchandising
* Extraction of structured information
* Enhancement of employee productivity

https://doordash.engineering/2023/04/26/doordash-identifies-five-big-areas-for-using-generative-ai/

Mikkel Dengsøe: Europe data salary benchmark 2023

Fascinating findings on Europe’s data salaries across various countries. The key findings are:

* Germany-based roles pay lower.
* London and Dublin-based roles have the highest compensation. The Dublin sample is skewed to more senior roles, with 55% of reported salaries being senior, which is more indicative of the sample than of jobs in Dublin paying higher than in London.
* Jobs at the 75th percentile in Amsterdam, London, and Dublin pay nearly 50% more than those in Berlin.

https://medium.com/@mikldd/europe-data-salary-benchmark-2023-b68cea57923d

Trivago: Implementing Data Validation with Great Expectations in Hybrid Environments

The article by Trivago discusses the integration of data validation with Great Expectations. It presents a well-balanced case study that emphasizes the significance of data validation and the necessity for sophisticated statistical validation methods.

https://tech.trivago.com/post/2023-04-25-implementing-data-validation-with-great-expectations-in-hybrid-environments.html

Expedia: How Expedia Reviews Engineering Is Using Event Streams as a Source Of Truth

“Events as a source of truth” is a simple but powerful idea: persist the state of a business entity as a sequence of state-changing events. How do you build such a system? Expedia writes about the review stream system to demonstrate how it adopted the event-first approach.

https://medium.com/expedia-group-tech/how-expedia-reviews-engineering-is-using-event-streams-as-a-source-of-truth-d3df616cccd8

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit www.dataengineeringweekly.com

Welcome to another episode of Data Engineering Weekly. Aswin and I select 3 to 4 articles from each edition of Data Engineering Weekly and discuss them from the author’s and our perspectives.

On DEW #124, we selected the following articles:

dbt: State of Analytics Engineering

dbt publishes the state of analytical [data???🤔] engineering. If you follow Data Engineering Weekly, we actively talk about data contracts and how data is a collaboration problem, not just an ETL problem. The state of analytical engineering survey validates it, as two of the top 5 concerns are data ownership and collaboration between the data producer and consumer. Here are the top 5 key learnings from the report.

* 46% of respondents plan to invest more in data quality and observability this year, the most popular area for future investment.
* Lack of coordination between data producers and data consumers is perceived by all respondents to be this year’s top threat to the ecosystem.
* Data and analytics engineers are most likely to believe they have clear goals and are most likely to agree their work is valued.
* 71% of respondents rated data team productivity and agility positively, while data ownership ranked as a top concern for most.
* Analytics leaders are most concerned with stakeholder needs. 42% say their top concern is “Data isn’t where business users need it.”

https://www.getdbt.com/state-of-analytics-engineering-2023/

Rittman Analytics: ChatGPT, Large Language Models and the Future of dbt and Analytics Consulting

Very fascinating to read about the potential impact of LLMs on the future of dbt and analytical consulting. The author predicts we are at the beginning of the industrial revolution of computing.

"Future iterations of generative AI, public services such as ChatGPT, and domain-specific versions of these underlying models will make IT and computing to date look like the spinning jenny that was the start of the industrial revolution."

🤺🤺🤺🤺🤺🤺🤺🤺🤺 May the best LLM win!! 🤺🤺🤺🤺🤺🤺

https://www.rittmananalytics.com/blog/2023/3/26/chatgpt-large-language-models-and-the-future-of-dbt-and-analytics-consulting

LinkedIn: Unified Streaming And Batch Pipelines At LinkedIn: Reducing Processing time by 94% with Apache Beam

One of the curses of adopting Lambda Architecture is the need to rewrite business logic in both streaming and batch pipelines. Spark attempts to solve this by creating a unified RDD model for streaming and batch; Flink introduces the table format to bridge the gap in batch processing. LinkedIn writes about its experience adopting Apache Beam’s approach, where Apache Beam follows a unified pipeline abstraction that can run in any target data processing runtime such as Samza, Spark, and Flink.

https://engineering.linkedin.com/blog/2023/unified-streaming-and-batch-pipelines-at-linkedin--reducing-proc

Wix: How Wix manages Schemas for Kafka (and gRPC) used by 2000 microservices

Wix writes about managing schemas for 2000 (😬) microservices by standardizing schema structure with protobuf and the Kafka schema registry. Some exciting reads include patterns like an internal Wix Docs approach and integration of documentation publishing as part of the CI/CD pipelines.

https://medium.com/wix-engineering/how-wix-manages-schemas-for-kafka-and-grpc-used-by-2000-microservices-2117416ea17b

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit www.dataengineeringweekly.com

Welcome to another episode of Data Engineering Weekly Radio. Ananth and Aswin discuss a blog from BuzzFeed that shares lessons learned from building products powered by generative AI. The blog highlights how generative AI can be integrated into a company's work culture and workflow to enhance creativity rather than replace jobs. BuzzFeed gave its employees intuitive access to APIs and integrated the technology into Slack for better collaboration.

Some of the lessons learned from BuzzFeed's experience include:

* Getting the technology into the hands of creative employees to amplify their creativity.
* Effective prompts are a result of close collaboration between writers and engineers.
* Moderation is essential and requires building guardrails into the prompts.
* Demystifying the technical concepts behind the technology can lead to better applications and tools.
* Educating users about the limitations and benefits of generative AI.
* The economics of using generative AI can be challenging, especially for hands-on business models.

The conversation also touches upon the non-deterministic nature of generative AI systems, the importance of prompt engineering, and the potential challenges in integrating generative AI into data engineering workflows. As the technology progresses, we expect the economics of generative AI to become more favorable for businesses.

https://tech.buzzfeed.com/lessons-learned-building-products-powered-by-generative-ai-7f6c23bff376

Moving on, we discuss the importance of on-call culture in data engineering teams. We emphasize the significance of data pipelines and their impact on businesses. With a focus on communication, ownership, and documentation, we highlight how data engineers should prioritize and address issues in data systems.

We also discuss the importance of on-call rotation, runbooks, and tools like PagerDuty and Airflow to streamline alerts and responses. Additionally, we mention the value of an on-call handoff process, where the outgoing engineer summarizes the alerts and incidents from their on-call period, so the team can improve its systems and better understand common issues.

Overall, this conversation stresses the need for a learning culture within data engineering teams, focusing on building robust systems, improving team culture, and increasing productivity.

https://towardsdatascience.com/how-to-build-an-on-call-culture-in-a-data-engineering-team-7856fac0c99

Finally, Ananth and Aswin discuss an article about adopting dimensional data modeling in hyper-growth companies. We appreciate the learning culture and emphasize balancing speed, maturity, scale, and stability.

We highlight how dimensional modeling was initially essential because computing was limited and storage was expensive. As storage became cheaper and computing more accessible, dimensional modeling was often overlooked, leading to data junkyards. In the current landscape, it's important to maintain business-aware, domain-driven data marts and to acknowledge that dimensional modeling still has a role.

The conversation also touches upon the challenges of tracking slowly changing dimensions and the responsibility of data architects, data engineers, and analytics engineers in identifying and implementing such dimensions. We discuss the need for a fine balance between design thinking and experimentation and stress the importance of finding the right mix of correctness and agility for each company.

https://medium.com/whatnot-engineering/same-data-sturdier-frame-layering-in-dimensional-data-modeling-at-whatnot-5e6a548ee713
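To make the slowly changing dimension challenge from the dimensional modeling discussion concrete, here is a minimal Python sketch of a Type 2 update, where a change closes the current row and appends a new version instead of overwriting it. The `DimRow` schema, the `tier` attribute, and the `apply_scd2` helper are hypothetical names chosen for illustration; they are not taken from the Whatnot article.

```python
from dataclasses import dataclass, replace
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class DimRow:
    """One version of a customer dimension record (hypothetical schema)."""
    customer_id: int
    tier: str
    valid_from: date
    valid_to: Optional[date] = None  # None marks the current version


def apply_scd2(history: List[DimRow], customer_id: int,
               new_tier: str, as_of: date) -> List[DimRow]:
    """Return a new history with the change recorded as a Type 2 update."""
    # Find the currently open version for this customer, if any.
    current = next(
        (r for r in history
         if r.customer_id == customer_id and r.valid_to is None),
        None,
    )
    if current is not None and current.tier == new_tier:
        return list(history)  # attribute unchanged, nothing to record
    # Close the open version (if there is one) and append the new version.
    updated = [replace(r, valid_to=as_of) if r is current else r
               for r in history]
    updated.append(DimRow(customer_id, new_tier, valid_from=as_of))
    return updated


history = [DimRow(1, "free", date(2023, 1, 1))]
history = apply_scd2(history, 1, "pro", date(2023, 4, 1))
```

After the call, `history` holds two rows for customer 1: the closed "free" version and the open "pro" version, so a query as of any date can pick the version that was valid then. In a warehouse this would usually be a SQL merge; the in-memory list only illustrates the bookkeeping.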

DBT Reimagined by Pedram Navid

"The challenge with Jinja templating is twofold. First, it is resolved at runtime, so you have to build the project and run it to find out whether you got it right. Second, Jinja templates add cognitive load: developers have to know both how the Jinja template works and how the SQL works, which makes models harder to read and understand."

In this conversation with Aswin, we discuss the article "DBT Reimagined" by Pedram Navid. We talk about the strengths and weaknesses of DBT and what we would like to see in a future version of the tool.

Aswin agrees with Pedram Navid that a DSL would be better than a templated language for DBT. He also points out that the Jinja templating system can be difficult to read and understand.

I agree with both Aswin and Pedram Navid. A DSL would be a great way to improve DBT, making the tool both more powerful and easier to use.

I'm also interested in native programming language support for DBT. It would allow developers to write their own custom functions and operators, giving them even more flexibility in using the tool.

The conversation shifts to the advantages of a DSL over templated code, and we discuss other tools like SQLMesh, Malloy, and an internal tool by Criteo. I believe that more experimentation with SQL is needed.

Overall, the article "DBT Reimagined" is a valuable contribution to the discussion about the future of data transformation tools. It raises important questions about the strengths and weaknesses of DBT and offers some interesting ideas for how to improve it.

Change Data Capture at Brex by Jun Zhao

https://medium.com/brexeng/change-data-capture-at-brex-c71263616dd7

Aswin provided a great definition of CDC, explaining it as a mechanism to listen to database replication logs and capture, stream, and reproduce data in real time 🕒. He shared his first encounter with CDC back in 2013, working on a Proof of Concept (POC) for a bank 🏦.

Aswin explains that CDC is a way to capture changes made to data in a database. This can be useful for a variety of reasons, such as:

* Auditing: CDC can be used to track changes made to data, which can be useful for auditing purposes.
* Compliance: CDC can be used to ensure that data complies with regulations.
* Data replication: CDC can replicate data from one database to another.
* Data integration: CDC can be used to integrate data from multiple sources.

Aswin also discusses some of the challenges of using CDC, such as:

* Complexity: CDC can be a complex process to implement.
* Cost: CDC can be a costly process to implement.
* Performance: CDC can impact the performance of the database.

To summarize the conversation about change data capture (CDC):

* CDC is a way to capture changes made to data in a database.
* CDC can be used for various purposes, such as auditing, compliance, data replication, and integration.
* CDC can be implemented using a variety of tools, such as Debezium.
* Some of the challenges of CDC include latency, cost, and performance.
* CDC can't carry business context, which can be expensive to recreate.
* Overall, CDC is a valuable tool for data engineers.

On Data Products and How to describe them by Max Illis

https://medium.com/@maxillis/on-data-products-and-how-to-describe-them-76ae1b7abda4

The library example is close to heart for Aswin since his father started his career as a librarian! 📖👨💻 Aswin highlights Max's broad definition of data products, including data sets, tables, views, APIs, and machine learning models. Ananth agrees that BI dashboards can also be data products. 📊🔍

We emphasize the importance of exposing tribal knowledge and democratizing the data product world. Max's journey from skeptic to believer in data products is very admirable. 🌟📝

We dive into data products' structural and behavioral properties and Max's detailed description of build-time and runtime properties. We also appreciate the idea of reference queries to facilitate data consumption. 🧩🚀

In conclusion, Max's blog post is one of the best write-ups on data products around! Big thanks to Max for sharing his thoughts! 🙌

Hey folks, have you heard about the Data Council conference in Austin? The three-day event was jam-packed with exciting discussions and innovative ideas on data engineering and infrastructure, data science and algorithms, MLOps, generative AI, streaming infrastructure, analytics, and data culture and community.

"People are so nice in the data community. Meeting them and brainstorming across so many ideas and thought processes is amazing. It was an amazing experience; the conference is mostly like a jam of different thought processes, ideas, and entrepreneurship."

The keynote by Shirshanka from Acryl Data talked about how data catalogs are becoming the control center for pipelines, a game-changer for the industry.

I also had a chance to attend a session on Malloy, a new way of thinking about SQL queries. It was experimental but had some cool ideas on abstracting complicated SQL queries.

ChatGPT will change the game in terms of data engineering jobs and productivity. ChatGPT, for example, has improved my productivity by 60%. And generative AI is becoming so advanced that it can produce dynamic SQL code in just a few lines.

But of course, with all this innovation and change, there are still questions about the future. Will Snowflake and Databricks outsource data governance experience to other companies? Will the modern data stack become more mature and consolidated? These are the big questions we need to ask as we move forward in the world of data.

Another highlight was the talk by Uber on migrating their Ubermetric system from Elasticsearch to Apache Pinot, which, by the way, is an incredibly flexible and powerful system. We also chatted about Pinot's semi-structured storage support, which is important in modern data engineering.

Now, let's talk about something (non)controversial: the idea that big data is dead. DuckDB brought up three intriguing points to back up this claim:

* Not every company has big data.
* Instances with large amounts of memory are becoming a commodity.
* Even companies that do have big data mostly run incremental processing, which can be small enough to fit on a single machine.

Abhi Sivasailam presented a thought-provoking approach to metric standardization. He introduced the concept of "metric trees": connecting high-level metrics to other metrics and building semantics around them. The best part? You can create a whole tree structure that shows the impact of one metric on another. Imagine the possibilities! You could simulate your business performance by tweaking the metric tree, which is mind-blowing!

Another amazing talk was about cross-company data exchange, where Pardis discussed various ways companies share data, like APIs, file uploads, or even Snowflake sharing. But the real question is: How do we deal with revenue sharing, data governance, and preventing sensitive data leaks? Pardis's startup, General Folders, is tackling this issue, becoming the "Dropbox" of data exchange. How cool is that?

To wrap it up, three key learnings from the conference were:

* The intriguing idea that "big data is dead" and how it impacts data infrastructure architecture.
* Data Catalog as a control plane for the modern data stack: is it a dream or reality?
* The growing importance of data contracts and the fascinating idea of metric trees.

Overall, the Data Council conference was an incredible experience, and I can't wait to see what they have in store for us next year.
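The metric tree idea from Abhi Sivasailam's talk can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Metric` class, the example metrics (`visitors`, `conversion_rate`, `orders`, `average_order_value`, `revenue`), and their formulas are hypothetical and do not come from the talk.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Metric:
    """A node in a metric tree: a leaf input or a metric derived from children."""
    name: str
    value: float = 0.0                       # used only by leaf metrics
    inputs: List["Metric"] = field(default_factory=list)
    formula: Optional[Callable[..., float]] = None

    def evaluate(self) -> float:
        # Leaves return their own value; derived metrics combine their inputs.
        if not self.inputs or self.formula is None:
            return self.value
        return self.formula(*(m.evaluate() for m in self.inputs))


# Hypothetical tree: revenue = orders * average_order_value,
#                    orders  = visitors * conversion_rate
visitors = Metric("visitors", value=2000.0)
conversion_rate = Metric("conversion_rate", value=0.125)
average_order_value = Metric("average_order_value", value=40.0)
orders = Metric("orders", inputs=[visitors, conversion_rate],
                formula=lambda v, c: v * c)
revenue = Metric("revenue", inputs=[orders, average_order_value],
                 formula=lambda o, a: o * a)

baseline = revenue.evaluate()

# Simulate the business by tweaking one leaf and re-evaluating the root.
conversion_rate.value = 0.25
simulated = revenue.evaluate()
```

Because every derived metric is computed from its children, changing a single leaf such as `conversion_rate` propagates up to `revenue`, which is the "simulate your business by tweaking the tree" idea in miniature.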