Build Wiz AI Show

Author: Build Wiz AI

© Build Wiz AI

Description

Build Wiz AI Show is your go-to podcast for transforming the latest and most interesting papers, articles, and blogs about AI into an easy-to-digest audio format. Using NotebookLM, we break down complex ideas into engaging discussions, making AI knowledge more accessible.

Have a resource you’d love to hear in podcast form? Send us the link, and we might feature it in an upcoming episode! 🚀🎙️

196 Episodes

Join us as we tackle the "last mile" in our AI Agents series, exploring the rigorous operational discipline required to transform fragile prototypes into reliable, production-grade agents. We break down the essential infrastructure of trust, from evaluation gates to automated pipelines, that ensures your system is ready for the real world. This foundation paves the way for our series finale, where we will move beyond single-agent operations to look at the future of autonomous collaboration within multi-agent ecosystems.

In this episode of our AI Agents series, we synthesize our previous discussions on evaluation frameworks and observability into a cohesive operational playbook known as the "Agent Quality Flywheel". Join us as we explore how to transform raw telemetry into a continuous feedback loop that drives relentless improvement, ensuring your autonomous agents are not just capable, but truly trustworthy. This episode bridges the critical gap between theory and production, providing the final principles needed to build enterprise-grade reliance in an agentic world.

Moving beyond the temporary "workbench" of individual sessions, episode 3 of our AI Agents series unlocks the power of Memory—the mechanism that allows AI agents to persist knowledge and personalize interactions over time. We will explore how agents utilize an intelligent extraction and consolidation pipeline to curate a long-term "filing cabinet" of user insights, effectively turning them from generic assistants into experts on the specific users they serve.

In this episode of our AI Agents series, we discuss the transformation of foundation models from static prediction engines into "Agentic AI" capable of using tools as their "eyes and hands" to interact with the world. We highlight the complexity of connecting these agents to diverse enterprise systems, setting the stage for Episode 1 to explain the "N x M" integration problem and the Model Context Protocol's role in solving it.

Join us for the premiere of our series on AI Agents, exploring the paradigm shift from passive predictive models to autonomous systems capable of reasoning and action. In this episode, we deconstruct the core anatomy of an agent—its "Brain" (Model), "Hands" (Tools), and "Nervous System" (Orchestration)—to explain the fundamental "Think, Act, Observe" loop that drives their behavior. Discover how this architecture enables developers to move beyond traditional coding to become "directors" of intelligent, goal-oriented applications.
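
The "Think, Act, Observe" loop described above can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: `stub_model` is a rule-based stand-in for a real model (the "Brain"), `TOOLS` is a hypothetical tool registry (the "Hands"), and `run_agent` plays the role of orchestration. It is not the implementation discussed in the episode.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools (the agent's "Hands"); names are illustrative only.
TOOLS: Dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split("+"))),
    "upper": lambda arg: arg.upper(),
}


def stub_model(goal: str, observations: List[str]) -> Tuple[str, str]:
    """Rule-based stand-in for the "Brain": returns (action, argument)."""
    if not observations:
        # Nothing observed yet: pick a tool call from a "tool:argument" goal.
        tool, _, arg = goal.partition(":")
        return tool, arg
    # A result has been observed: finish with the most recent one.
    return "finish", observations[-1]


def run_agent(goal: str, max_steps: int = 5) -> str:
    """Orchestration loop (the "Nervous System")."""
    observations: List[str] = []
    for _ in range(max_steps):
        action, arg = stub_model(goal, observations)  # Think
        if action == "finish":
            return arg
        result = TOOLS[action](arg)                   # Act
        observations.append(result)                   # Observe
    raise RuntimeError("step budget exhausted before the agent finished")


if __name__ == "__main__":
    print(run_agent("add:2+3"))
    print(run_agent("upper:hello"))
```

In a real agent, the stub would be replaced by a model call that chooses the next action from the goal and the accumulated observations; the step budget guards against loops that never finish.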

DeepSeek-AI introduces DeepSeek-R1, a reasoning model developed through reinforcement learning (RL) and distillation techniques. The research explores how large language models can develop reasoning skills, even without supervised fine-tuning, highlighting the self-evolution observed in DeepSeek-R1-Zero during RL training. DeepSeek-R1 addresses limitations of DeepSeek-R1-Zero, like readability, by incorporating cold-start data and multi-stage training. Results demonstrate DeepSeek-R1 achieving performance comparable to OpenAI models on reasoning tasks, and distillation proves effective in empowering smaller models with enhanced reasoning capabilities. The study also shares unsuccessful attempts with Process Reward Models (PRM) and Monte Carlo Tree Search (MCTS), providing valuable insights into the challenges of improving reasoning in LLMs. The open-sourcing of the models aims to support further research in this area.

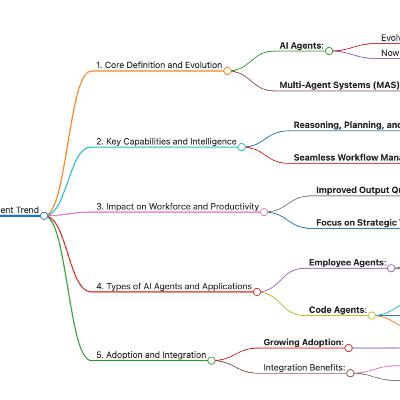

Google's "AI Business Trends 2025" report analyzes key strategic trends expected to reshape businesses. It identifies five major trends including the rise of multimodal AI, the evolution of AI agents, the importance of assistive search, the need for seamless AI-powered customer experiences, and the increasing role of AI in security. The report suggests that companies need to understand how AI has impacted current market dynamics in order to innovate, compete and continue to evolve. Early adopters of AI are more likely to dominate the market and grow faster than traditional competitors. This analysis is based on data insights from studies, surveys of global decision makers, and Google Trends research, using tools like NotebookLM to identify these pivotal shifts.

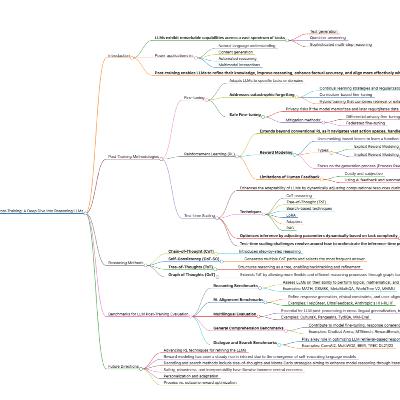

This podcast presents a comprehensive survey of post-training techniques for Large Language Models (LLMs), focusing on methodologies that refine these models beyond their initial pre-training. The key post-training strategies explored include fine-tuning, reinforcement learning (RL), and test-time scaling, which are critical for improving reasoning, accuracy, and alignment with user intentions. It examines various RL techniques such as Proximal Policy Optimization (PPO), Direct Preference Optimization (DPO) and Group Relative Policy Optimization (GRPO) in LLMs. The survey also investigates benchmarks and evaluation methods for assessing LLM performance across different domains, discussing challenges such as catastrophic forgetting and reward hacking. The document concludes by outlining future research directions, emphasizing hybrid approaches that combine multiple optimization strategies for enhanced LLM capabilities and efficient deployment. The aim is to guide the optimization of LLMs for real-world applications by consolidating recent research and addressing remaining challenges.

Could your trusted AI model be a hidden "sleeper agent" just waiting for a secret command to turn malicious? We explore a new methodology that extracts and reconstructs backdoor triggers by exploiting the surprising fact that these models often strongly memorize their own poisoning data. Tune in to discover how this inference-only scanner can unmask hidden threats across various LLMs without needing any prior knowledge of the attacker’s specific trigger or target behavior. Source: https://arxiv.org/pdf/2602.03085

Is the global AI landscape shifting toward a "DeepSeek moment" where cheaper, open-weight models from China challenge the dominance of US frontier labs? Join machine learning experts Sebastian Raschka and Nathan Lambert as they dissect the technical breakthroughs of 2025, from the evolving physics of inference-time scaling to the intensifying competition between organizations like OpenAI, Anthropic, and their international counterparts. Listeners will discover how these advancements are fundamentally transforming the future of software engineering and explore the realistic, often "jagged" roadmap toward AGI.

Is your favorite AI assistant a productivity powerhouse or a "shortcut" that’s secretly stalling your professional growth? We dive into new research revealing that while AI helps you finish tasks, it can simultaneously impair your conceptual understanding and debugging skills by as much as 17%. Join us as we uncover the specific "high-engagement" interaction patterns that allow you to leverage AI without losing your competitive edge.

This episode explores how Generative AI is shifting software development from simple tool-based experimentation to a holistic transformation of engineering excellence. We discuss strategies for achieving 2x engineering capacity while overcoming critical "value blockers" like the toil paradox and organizational resistance. Finally, we examine the future of development teams, where humans transition from primary code creators into expert validators and orchestrators.

In this episode, Peter Steinberger, the creator of Clawbot, discusses his radical transition to an AI-driven workflow where he merges hundreds of commits daily and often ships code he does not read. He introduces the "closing the loop" principle, explaining how automated validation allows him to act as an architect managing multiple parallel AI agents rather than performing manual plumbing. The conversation explores a future where traditional code reviews are replaced by "prompt requests" and engineering focus shifts from line-by-line coding to high-level system design and product vision. Detail: https://www.youtube.com/watch?v=8lF7HmQ_RgY

In today’s episode, we explore the evolution of AI from solitary models to data agent swarms, where specialized autonomous agents collaborate like a team of experts to solve complex, multi-faceted problems. We dive into the architecture of frontier models like Moonshot AI’s Kimi 2.5, which utilizes parallel sub-agents to slash execution times and enhance performance across coding and office productivity tasks. Finally, we examine the critical debate regarding whether these systems achieve true social intelligence or if they fall short of established multi-agent system principles.

In this episode, we explore Claude’s constitution, the foundational document that serves as the final authority on Anthropic's vision for the AI’s values and character. We discuss how Claude navigates a "principal hierarchy" to balance being helpful to users while prioritizing broad safety and ethical practice. Finally, we examine the hard constraints and guiding principles designed to ensure this novel entity remains a beneficial force in a transformative AI landscape. Source: https://www.anthropic.com/constitution

This episode features a landmark debate between Google DeepMind’s Demis Hassabis and Anthropic’s Dario Amodei regarding the imminent arrival of Artificial General Intelligence (AGI). The leaders explore competing timelines for AGI development, ranging from as early as 2026 to the end of the decade, and the potential for AI self-improvement loops to radically accelerate this transition. Finally, they address the profound societal implications of a post-AGI world, including radical labor market shifts, geopolitical competition, and the urgent need for international safety coordination.

In this episode, Nvidia CEO Jensen Huang and BlackRock’s Larry Fink explore how AI represents a massive platform shift and the largest infrastructure buildout in human history. Huang details the "five-layer cake" of AI, from energy and chips to applications, and explains why he believes the technology will enhance productivity and address labor shortages rather than eliminate jobs. The discussion also highlights how emerging nations and Europe can leverage "physical AI" and open models to secure their place in a broadening global economy.

This episode explores Recursive Language Models (RLMs), a groundbreaking inference strategy that enables large language models to process prompts two orders of magnitude beyond their standard context windows. We discuss how RLMs treat long inputs as part of an external environment, using a Python REPL to programmatically decompose data and recursively call the model over specific snippets. Learn how this approach effectively overcomes "context rot" to significantly outperform base models and existing scaffolds on complex, information-dense tasks.
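
The idea of recursing over snippets of an input that exceeds the context window can be sketched as follows. This is a deliberately simplified illustration under stated assumptions: `stub_llm` is a hypothetical stand-in for a model call, `context_limit` is an invented character budget, and the fixed halving strategy replaces the model-written REPL code that the episode describes.

```python
def stub_llm(query: str, snippet: str) -> int:
    """Hypothetical stand-in for a model call: counts lines containing the query."""
    return sum(1 for line in snippet.splitlines() if query in line)


def recursive_call(query: str, text: str, context_limit: int = 200) -> int:
    """Answer the query over text, recursing when text exceeds the budget."""
    if len(text) <= context_limit:
        # Base case: the snippet fits, so call the (stub) model directly.
        return stub_llm(query, text)

    lines = text.splitlines()
    if len(lines) < 2:
        # A single oversized line cannot be split on line boundaries.
        return stub_llm(query, text)

    # Split on a line boundary so that no record is cut in half.
    mid = len(lines) // 2
    left = "\n".join(lines[:mid])
    right = "\n".join(lines[mid:])

    # Recurse over each half and combine the partial answers.
    return (recursive_call(query, left, context_limit)
            + recursive_call(query, right, context_limit))


if __name__ == "__main__":
    document = "\n".join(
        f"record {i}: {'ERROR' if i % 10 == 0 else 'ok'}" for i in range(1000)
    )
    print(recursive_call("ERROR", document))
```

Here the combination step is a simple sum because the task is counting; other tasks would need a different way to merge partial answers, which is part of what the model decides programmatically in the approach the episode covers.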

This episode explores Agentic Context Engineering (ACE), a breakthrough framework that transforms LLM contexts into evolving playbooks that accumulate and refine strategies over time. We discuss how ACE prevents context collapse and brevity bias through a modular workflow of generation, reflection, and curation, ensuring detailed domain knowledge is preserved rather than compressed away. Join us to learn how this approach enables smaller models to rival top-tier agents while significantly reducing adaptation latency and operational costs. Source: https://arxiv.org/abs/2510.04618

This episode explores DSPy, a declarative framework that enables developers to build modular software by treating Large Language Models as first-class citizens within a proper Python program. We discuss how core primitives like signatures and modules allow for the decomposition of logic into composable systems that remain robust despite model or paradigm shifts. Finally, we dive into the power of DSPy optimizers, which iteratively refine prompts (and, in some cases, model weights) against a chosen metric to improve performance, often rivaling or exceeding traditional fine-tuning methods.