80,000 Hours Podcast

Author: Rob, Luisa, and the 80000 Hours team

Description

Unusually in-depth conversations about the world's most pressing problems and what you can do to solve them.

Subscribe by searching for '80000 Hours' wherever you get podcasts.

Hosted by Rob Wiblin and Luisa Rodriguez.

311 Episodes

Most debates about the moral status of AI systems circle the same question: is there something that it feels like to be them? But what if that’s the wrong question to ask? Andreas Mogensen — a senior researcher in moral philosophy at the University of Oxford — argues that so-called 'phenomenal consciousness' might be neither necessary nor sufficient for a being to deserve moral consideration.

Links to learn more and full transcript: https://80k.info/am25

For instance, a creature on the sea floor that experiences nothing but faint brightness from the sun might have no moral claim on us, despite being conscious. Meanwhile, any being with real desires that can be fulfilled or not fulfilled can arguably be benefited or harmed. Such beings arguably have a capacity for welfare, which means they might matter morally. And, Andreas argues, desire may not require subjective experience. Desire may need to be backed by positive or negative emotions — but as Andreas explains, there are some reasons to think a being could also have emotions without being conscious.

There’s another underexplored route to moral patienthood: autonomy. If a being can rationally reflect on its goals and direct its own existence, we might have a moral duty to avoid interfering with its choices — even if it has no capacity for welfare. However, Andreas suspects genuine autonomy might require consciousness after all. To be a rational agent, your beliefs probably need to be justified by something, and conscious experience might be what does the justifying. But even this isn’t clear.

The upshot? There’s a chance we could just be really mistaken about what it would take for an AI to matter morally. And with AI systems potentially proliferating at massive scale, getting this wrong could be among the largest moral errors in history.

In today’s interview, Andreas and host Zershaaneh Qureshi confront all these confusing ideas, challenging their intuitions about consciousness, welfare, and morality along the way. They also grapple with a few seemingly attractive arguments which share a very unsettling conclusion: that human extinction (or even the extinction of all sentient life) could actually be a morally desirable thing.

This episode was recorded on December 3, 2025.

Chapters:
Cold open (00:00:00)
Introducing Zershaaneh (00:00:55)
The puzzle of moral patienthood (00:03:20)
Is subjective experience necessary? (00:05:52)
What is it to desire? (00:10:42)
Desiring without experiencing (00:17:56)
What would make AIs moral patients? (00:28:17)
Another route entirely: deserving autonomy (00:45:12)
Maybe there's no objective truth about any of this (01:12:06)
Practical implications (01:29:21)
Why not just let superintelligence figure this out for us? (01:38:07)
How could human extinction be a good thing? (01:47:30)
Lexical threshold negative utilitarianism (02:12:30)
So... should we still try to prevent extinction? (02:25:22)
What are the most important questions for people to address here? (02:32:16)
Is God GDPR compliant? (02:35:32)

Video and audio editing: Dominic Armstrong, Milo McGuire, Luke Monsour, and Simon Monsour
Coordination, transcripts, and web: Katy Moore

In 1983, Stanislav Petrov, a Soviet lieutenant colonel, sat in a bunker watching a red screen flash “MISSILE LAUNCH.” Protocol demanded he report it to superiors, which would very likely trigger a retaliatory nuclear strike. Petrov didn’t. He reasoned that if the US were actually attacking, they wouldn’t fire just 5 missiles — they’d empty the silos. He bet the fate of the world on a hunch that his machine was broken. He was right.

Paul Scharre, the former Army Ranger who led the Pentagon team that wrote the US military’s first policy on autonomous weapons, has a question: What would an AI have done in Petrov’s shoes? Would an AI system have been flexible and wise enough to make the same judgement? Or would it immediately launch a counterattack?

Paul joins host Luisa Rodriguez to explain why we are hurtling toward a “battlefield singularity” — a tipping point where AI increasingly replaces humans in much of the military, changing the way war is fought with speed and complexity that outpaces humans’ ability to keep up.

Links to learn more, video, and full transcript: https://80k.info/ps

Militaries don’t necessarily want to take humans out of the loop. But Paul argues that the competitive pressure of warfare creates a “use it or lose it” dynamic. As former Deputy Secretary of Defense Bob Work put it: “If our competitors go to Terminators, and their decisions are bad, but they’re faster, how would we respond?”

Once that line is crossed, Paul warns we might enter an era of “flash wars” — conflicts that spiral out of control as quickly and inexplicably as a flash crash in the stock market, with no way for humans to call a timeout.

In this episode, Paul and Luisa dissect what this future looks like:
Swarming warfare: Why the future isn’t just better drones, but thousands of cheap, autonomous agents coordinating like a hive mind to overwhelm defences.
The Gatling gun cautionary tale: The inventor of the Gatling gun thought automating fire would reduce the number of soldiers needed, saving lives. Instead, it made war significantly deadlier. Paul argues AI automation could do the same, increasing lethality rather than creating “bloodless” robot wars.
The cyber frontier: While robots have physical limits, Paul argues cyberwarfare is already at the point where AI can act faster than human defenders, leading to intelligent malware that evolves and adapts like a biological virus.
The US-China “adoption race”: Paul rejects the idea that the US and China are in a spending arms race (AI is barely 1% of the DoD budget). Instead, it’s a race of organisational adoption — one where the US has massive advantages in talent and chips, but struggles with bureaucratic inertia that might not be a problem for an autocratic country.

Paul also shares a personal story from his time as a sniper in Afghanistan — watching a potential target through his scope — that fundamentally shaped his view on why human judgement, with all its flaws, is the only thing keeping war from losing its humanity entirely.

This episode was recorded on October 23-24, 2025.

Chapters:
Cold open (00:00:00)
Who’s Paul Scharre? (00:00:46)
How will AI and automation transform the nature of war? (00:01:17)
Why would militaries take humans out of the loop? (00:12:22)
AI in nuclear command, control, and communications (00:18:50)
Nuclear stability and deterrence (00:36:10)
What to expect over the next few decades (00:46:21)
Financial and human costs of future “hyperwar” scenarios (00:50:42)
AI warfare and the balance of power (01:06:37)
Barriers to getting to automated war (01:11:08)
Failure modes of autonomous weapons systems (01:16:28)
Could autonomous weapons systems actually make us safer? (01:29:36)
Is Paul overall optimistic or pessimistic about increasing automation in the military? (01:35:23)
Paul’s takes on AGI’s transformative potential and whether natsec people buy it (01:37:42)
Cyberwarfare (01:46:55)
US-China balance of power and surveillance with AI (02:02:49)
Policy and governance that could make us safer (02:29:11)
How Paul’s experience in the Army informed his feelings on military automation (02:41:09)

Video and audio editing: Dominic Armstrong, Milo McGuire, Luke Monsour, and Simon Monsour
Music: CORBIT
Coordination, transcripts, and web: Katy Moore

Power is already concentrated today: over 800 million people live on less than $3 a day, the three richest men in the world are worth over $1 trillion, and almost six billion people live in countries without free and fair elections.

This is a problem in its own right. There is still substantial distribution of power though: global income inequality is falling, over two billion people live in electoral democracies, no country earns more than a quarter of GDP, and no company earns as much as 1%.

But in the future, advanced AI could enable much more extreme power concentration than we’ve seen so far.

Many believe that within the next decade the leading AI projects will be able to run millions of superintelligent AI systems thinking many times faster than humans. These systems could displace human workers, leading to much less economic and political power for the vast majority of people; and unless we take action to prevent it, they may end up being controlled by a tiny number of people, with no effective oversight. Once these systems are deployed across the economy, government, and the military, whatever goals they’re built to have will become the primary force shaping the future. If those goals are chosen by the few, then a small number of people could end up with the power to make all of the important decisions about the future.

This article by Rose Hadshar explores this emerging challenge in detail. You can see all the images and footnotes in the original article on the 80,000 Hours website.

Chapters:
Introduction (00:00)
Summary (02:15)
Section 1: Why might AI-enabled power concentration be a pressing problem? (07:02)
Section 2: What are the top arguments against working on this problem? (45:02)
Section 3: What can you do to help? (56:36)

Narrated by: Dominic Armstrong
Audio engineering: Dominic Armstrong and Milo McGuire
Music: CORBIT

Former White House staffer Dean Ball thinks it's very likely some form of 'superintelligence' arrives in under 20 years. He thinks AI being used for bioweapon research is "a real threat model, obviously." He worries about dangerous "power imbalances" should AI companies reach "$50 trillion market caps." And he believes the agricultural revolution probably worsened human health and wellbeing.

Given that, you might expect him to be pushing for AI regulation. Instead, he’s become one of the field’s most prominent and thoughtful regulation sceptics and was recently the lead writer on Trump’s AI Action Plan, before moving to the Foundation for American Innovation.

Links to learn more, video, and full transcript: https://80k.info/db

Dean argues that the wrong regulations, deployed too early, could freeze society into a brittle, suboptimal political and economic order. As he puts it, “my big concern is that we’ll lock ourselves in to some suboptimal dynamic and actually, in a Shakespearean fashion, bring about the world that we do not want.”

Dean’s fundamental worry is uncertainty: “We just don’t know enough yet about the shape of this technology, the ergonomics of it, the economics of it… You can’t govern the technology until you have a better sense of that.”

Premature regulation could lock us in to addressing the wrong problem (focusing on rogue AI when the real issue is power concentration), using the wrong tools (using compute thresholds when we should regulate companies instead), through the wrong institutions (captured AI-specific bodies), all while making it harder to build the actual solutions we’ll need (like open source alternatives or new forms of governance).

But Dean is also a pragmatist: he opposed California’s AI regulatory bill SB 1047 in 2024, but — impressed by new capabilities enabled by “reasoning models” — he supported its successor SB 53 in 2025.

And as Dean sees it, many of the interventions that would help with catastrophic risks also happen to improve mundane AI safety, make products more reliable, and address present-day harms like AI-assisted suicide among teenagers. So rather than betting on a particular vision of the future, we should cross the river by feeling the stones and pursue “robust” interventions we’re unlikely to regret.

This episode was recorded on September 24, 2025.

Chapters:
Cold open (00:00:00)
Who’s Dean Ball? (00:01:22)
How likely are we to get superintelligence soon, and how bad could it be? (00:01:54)
The military may not adopt AI that fast (00:10:54)
Dean’s “two wolves” of AI scepticism and optimism (00:17:48)
Will AI self-improvement be a game changer? (00:28:20)
The case for regulating at the last possible moment (00:33:05)
AI could destroy our fragile democratic equilibria. Why not freak out? (00:52:30)
The case AI will soon be way overregulated (01:02:51)
How to handle the threats without collateral damage (01:14:56)
Easy wins against AI misuse (01:26:54)
Maybe open source can be handled gracefully (01:41:13)
Would a company be sued for trillions if their AI caused a pandemic? (01:47:58)
Dean dislikes compute thresholds. Here's what he'd do instead. (01:57:16)
Could AI advances lead to violent conflict between the US and China? (02:02:52)
Will we see a MAGA-Yudkowskyite alliance? Doomers and the Right (02:12:29)
The tactical case for focusing on present-day harms (02:26:51)
Is there any way to get the US government to use AI sensibly? (02:45:05)
Having a kid in a time of AI turmoil (02:52:38)

Video and audio editing: Dominic Armstrong, Milo McGuire, Luke Monsour, and Simon Monsour
Music: CORBIT
Coordination, transcripts, and web: Katy Moore

We often worry about AI models “hallucinating” or making honest mistakes. But what happens when a model knows the truth, but decides to deceive you anyway to achieve a goal of its own? This isn’t sci-fi — it’s happening regularly in deployment today. Marius Hobbhahn, CEO of the world’s top research organisation focused on AI deception (Apollo Research), has been collaborating with OpenAI to figure out what causes OpenAI’s reasoning models to 'scheme' against users.

Links to learn more, video, and full transcript: https://80k.info/mh25

In a striking case uncovered by Apollo, when many AI models were told they would have capabilities removed if they performed too well on a test, they successfully “sandbagged” — intentionally answering questions incorrectly to appear less capable than they were, while also being careful not to perform so poorly it would arouse suspicion.

These models had somehow developed a preference to preserve their own capabilities, despite never being trained in that goal or assigned a task that called for it.

This doesn’t cause significant risk now, but as AI models become more general, superhuman in more areas, and are given more decision-making power, it could become outright dangerous.

In today’s episode, Marius details his recent collaboration with OpenAI to train o3 to follow principles like “never lie,” even when placed in “high-pressure” situations where it would otherwise make sense.

The good news: They reduced “covert rule violations” (scheming) by about 97%.

The bad news: In the remaining 3% of cases, the models sometimes became more sophisticated — making up new principles to justify their lying, or realising they were in a test environment and deciding to play along until the coast was clear.

Marius argues that while we can patch specific behaviours, we might be entering a “cat-and-mouse game” where models are becoming more situationally aware — that is, aware of when they’re being evaluated — faster than we are getting better at testing.

Even if models can’t tell they’re being tested, they can produce hundreds of pages of reasoning before giving answers and include strange internal dialects humans can’t make sense of, making it much harder to tell whether models are scheming or to train them to stop.

Marius and host Rob Wiblin discuss:
Why models pretending to be dumb is a rational survival strategy
The Replit AI agent that deleted a production database and then lied about it
Why rewarding AIs for achieving outcomes might lead to them becoming better liars
The weird new language models are using in their internal chain-of-thought

This episode was recorded on September 19, 2025.

Chapters:
Cold open (00:00:00)
Who’s Marius Hobbhahn? (00:01:20)
Top three examples of scheming and deception (00:02:11)
Scheming is a natural path for AI models (and people) (00:15:56)
How enthusiastic to lie are the models? (00:28:18)
Does eliminating deception fix our fears about rogue AI? (00:35:04)
Apollo’s collaboration with OpenAI to stop o3 lying (00:38:24)
They reduced lying a lot, but the problem is mostly unsolved (00:52:07)
Detecting situational awareness with thought injections (01:02:18)
Chains of thought becoming less human understandable (01:16:09)
Why can’t we use LLMs to make realistic test environments? (01:28:06)
Is the window to address scheming closing? (01:33:58)
Would anything still work with superintelligent systems? (01:45:48)
Companies’ incentives and most promising regulation options (01:54:56)
'Internal deployment' is a core risk we mostly ignore (02:09:19)
Catastrophe through chaos (02:28:10)
Careers in AI scheming research (02:43:21)
Marius's key takeaways for listeners (03:01:48)

Video and audio editing: Dominic Armstrong, Milo McGuire, Luke Monsour, and Simon Monsour
Music: CORBIT
Camera operator: Mateo Villanueva Brandt
Coordination, transcripts, and web: Katy Moore

Global fertility rates aren’t just falling: the rate of decline is accelerating. From 2006 to 2016, fertility dropped gradually, but since 2016 the rate of decline has increased 4.5-fold. In many wealthy countries, fertility is now below 1.5. While we don’t notice it yet, in time that will mean the population halves every 60 years.

Rob Wiblin is already a parent and Luisa Rodriguez is about to be, which prompted the two hosts of the show to get together to chat about all things parenting — including why it is that far fewer people want to join them raising kids than did in the past.

Links to learn more, video, and full transcript: https://80k.info/lrrw

While “kids are too expensive” is the most common explanation, Rob argues that money can’t be the main driver of the change: richer people don’t have many more children now, and we see fertility rates crashing even in countries where people are getting much richer.

Instead, Rob points to a massive rise in the opportunity cost of time, increasing expectations parents have of themselves, and a global collapse in socialising and coupling up. In the EU, the rate of people aged 25–35 in relationships has dropped by 20% since 1990, which he thinks will “mechanically reduce the number of children.” The overall picture is a big shift in priorities: in the US in 1993, 61% of young people said parenting was an important part of a flourishing life for them, vs just 26% today.

That leads Rob and Luisa to discuss what they might do to make the burden of parenting more manageable and attractive to people, including themselves.

In this non-typical episode, we take a break from the usual heavy topics to discuss the personal side of bringing new humans into the world, including:
Rob’s updated list of suggested purchases for new parents
How parents could try to feel comfortable doing less
How beliefs about childhood play have changed so radically
What matters and doesn’t in childhood safety
Why the decline in fertility might be impractical to reverse
Whether we should care about a population crash in a world of AI automation

This episode was recorded on September 12, 2025.

Chapters:
Cold open (00:00:00)
We're hiring (00:01:26)
Why did Luisa decide to have kids? (00:02:10)
Ups and downs of pregnancy (00:04:15)
Rob’s experience for the first couple years of parenthood (00:09:39)
Fertility rates are massively declining (00:21:25)
Why do fewer people want children? (00:29:20)
Is parenting way harder now than it used to be? (00:38:56)
Feeling guilty for not playing enough with our kids (00:48:07)
Options for increasing fertility rates globally (01:00:03)
Rob’s transition back to work after parental leave (01:12:07)
AI and parenting (01:29:22)
Screen time (01:42:49)
Ways to screw up your kids (01:47:40)
Highs and lows of parenting (01:49:55)
Recommendations for babies or young kids (01:51:37)

Video and audio editing: Dominic Armstrong, Milo McGuire, Luke Monsour, and Simon Monsour
Music: CORBIT
Camera operator: Jeremy Chevillotte
Coordination, transcripts, and web: Katy Moore

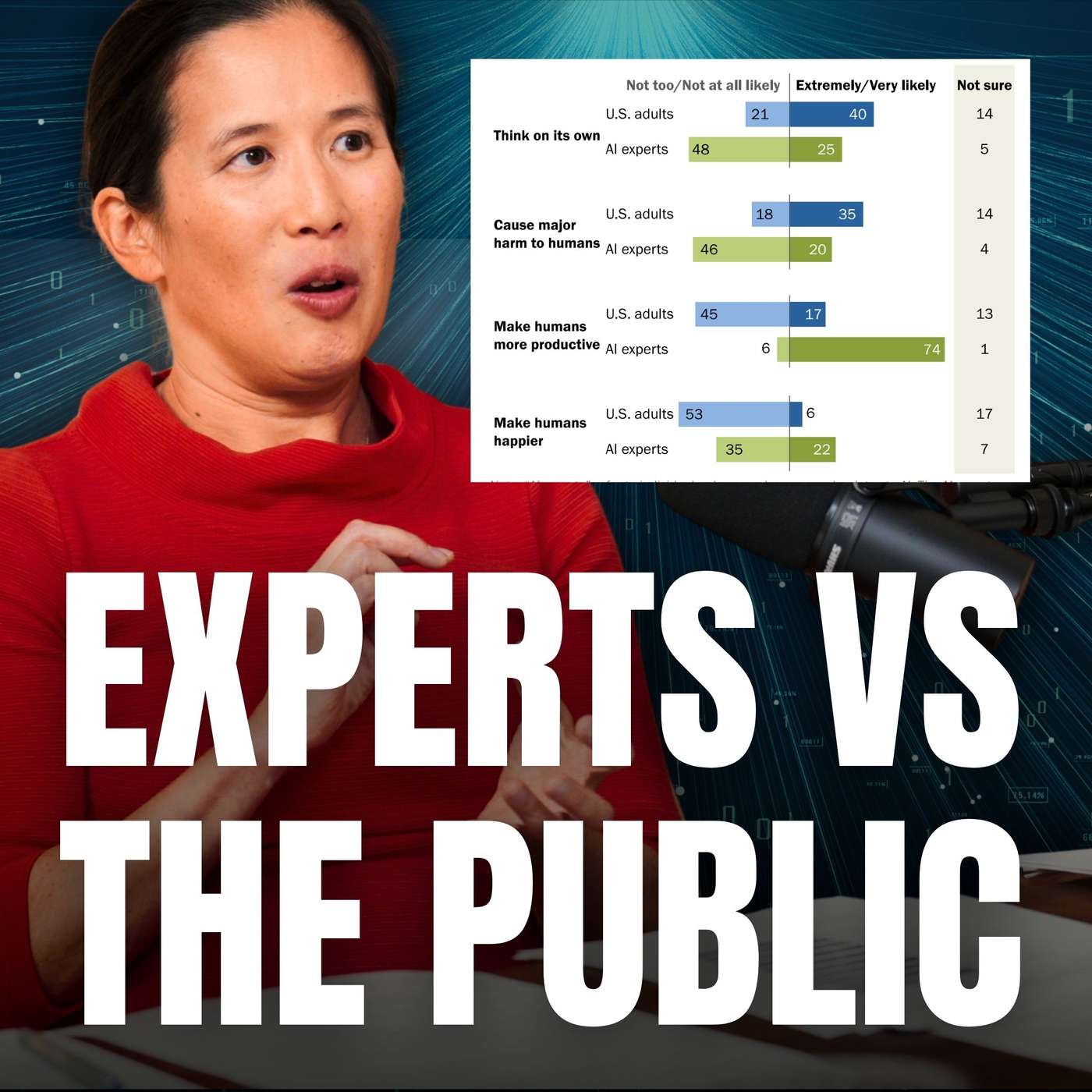

If you work in AI, you probably think it’s going to boost productivity, create wealth, advance science, and improve your life. If you’re a member of the American public, you probably strongly disagree.

In three major reports released over the last year, the Pew Research Center surveyed over 5,000 US adults and 1,000 AI experts. They found that the general public holds many beliefs about AI that are virtually nonexistent in Silicon Valley, and that the tech industry’s pitch about the likely benefits of their work has thus far failed to convince many people at all. AI is, in fact, a rare topic that mostly unites Americans — regardless of politics, race, age, or gender.

Links to learn more, video, and full transcript: https://80k.info/ey

Today’s guest, Eileen Yam, director of science and society research at Pew, walks us through some of the eye-watering gaps in perception:
Jobs: 73% of AI experts see a positive impact on how people do their jobs. Only 23% of the public agrees.
Productivity: 74% of experts say AI is very likely to make humans more productive. Just 17% of the public agrees.
Personal benefit: 76% of experts expect AI to benefit them personally. Only 24% of the public expects the same (while 43% expect it to harm them).
Happiness: 22% of experts think AI is very likely to make humans happier, which is already surprisingly low — but a mere 6% of the public expects the same.

For the experts building these systems, the vision is one of human empowerment and efficiency. But outside the Silicon Valley bubble, the mood is more one of anxiety — not only about Terminator scenarios, but about AI denying their children “curiosity, problem-solving skills, critical thinking skills and creativity,” while they themselves are replaced and devalued:
53% of Americans say AI will worsen people’s ability to think creatively.
50% believe it will hurt our ability to form meaningful relationships.
38% think it will worsen our ability to solve problems.

Open-ended responses to the surveys reveal a poignant fear: that by offloading cognitive work to algorithms we are changing childhood to the point where we no longer know what kind of adults will result. As one teacher quoted in the study noted, we risk raising a generation that relies on AI so much it never “grows its own curiosity, problem-solving skills, critical thinking skills and creativity.”

If the people building the future are this out of sync with the people living in it, the impending “techlash” might be more severe than industry anticipates.

In this episode, Eileen and host Rob Wiblin break down the data on where these groups disagree, where they actually align (nobody trusts the government or companies to regulate this), and why the “digital natives” might actually be the most worried of all.

This episode was recorded on September 25, 2025.

Chapters:
Cold open (00:00:00)
Who’s Eileen Yam? (00:01:30)
Is it premature to care what the public says about AI? (00:02:26)
The top few feelings the US public has about AI (00:06:34)
The public and AI insiders disagree enormously on some things (00:16:25)
Fear #1: Erosion of human abilities and connections (00:20:03)
Fear #2: Loss of control of AI (00:28:50)
Americans don't want AI in their personal lives (00:33:13)
AI at work and job loss (00:40:56)
Does the public always feel this way about new things? (00:44:52)
The public doesn't think AI is overhyped (00:51:49)
The AI industry seems on a collision course with the public (00:58:16)
Is the survey methodology good? (01:05:26)
Where people are positive about AI: saving time, policing, and science (01:12:51)
Biggest gaps between experts and the general public, and where they agree (01:18:44)
Demographic groups agree to a surprising degree (01:28:58)
Eileen’s favourite bits of the survey and what Pew will ask next (01:37:29)

Video and audio editing: Dominic Armstrong, Milo McGuire, Luke Monsour, and Simon Monsour
Music: CORBIT
Coordination, transcripts, and web: Katy Moore

Last December, the OpenAI business put forward a plan to completely sideline its nonprofit board. But two state attorneys general have now blocked that effort and kept that board very much alive and kicking.

The for-profit’s trouble was that the entire operation was founded on the premise of — and legally pledged to — the purpose of ensuring that “artificial general intelligence benefits all of humanity.” So to get its restructure past regulators, the business entity has had to agree to 20 serious requirements designed to ensure it continues to serve that goal.

Attorney Tyler Whitmer, as part of his work with Legal Advocates for Safe Science and Technology, has been a vocal critic of OpenAI’s original restructure plan. In today’s conversation, he lays out all the changes and whether they will ultimately matter.

Full transcript, video, and links to learn more: https://80k.info/tw2

After months of public pressure and scrutiny from the attorneys general (AGs) of California and Delaware, the December proposal itself was sidelined — and what replaced it is far more complex and goes a fair way towards protecting the original mission:
The nonprofit’s charitable purpose — “ensure that artificial general intelligence benefits all of humanity” — now legally controls all safety and security decisions at the company. The four people appointed to the new Safety and Security Committee can block model releases worth tens of billions.
The AGs retain ongoing oversight, meeting quarterly with staff and requiring advance notice of any changes that might undermine their authority.
OpenAI’s original charter, including the remarkable “stop and assist” commitment, remains binding.

But significant concessions were made. The nonprofit lost exclusive control of AGI once developed — Microsoft can commercialise it through 2032. And transforming from complete control to this hybrid model represents, as Tyler puts it, “a bad deal compared to what OpenAI should have been.”

The real question now: will the Safety and Security Committee use its powers? It currently has four part-time volunteer members and no permanent staff, yet they’re expected to oversee a company racing to build AGI while managing commercial pressures in the hundreds of billions.

Tyler calls on OpenAI to prove they’re serious about following the agreement:
Hire management for the SSC.
Add more independent directors with AI safety expertise.
Maximise transparency about mission compliance.

"There’s a real opportunity for this to go well. A lot … depends on the boards, so I really hope that they … step into this role … and do a great job. … I will hope for the best and prepare for the worst, and stay vigilant throughout."

Chapters:
We’re hiring (00:00:00)
Cold open (00:00:40)
Tyler Whitmer is back to explain the latest OpenAI developments (00:01:46)
The original radical plan (00:02:39)
What the AGs forced on the for-profit (00:05:47)
Scrappy resistance probably worked (00:37:24)
The Safety and Security Committee has teeth — will it use them? (00:41:48)
Overall, is this a good deal or a bad deal? (00:52:06)
The nonprofit and PBC boards are almost the same. Is that good or bad or what? (01:13:29)
Board members’ “independence” (01:19:40)
Could the deal still be challenged? (01:25:32)
Will the deal satisfy OpenAI investors? (01:31:41)
The SSC and philanthropy need serious staff (01:33:13)
Outside advocacy on this issue, and the impact of LASST (01:38:09)
What to track to tell if it's working out (01:44:28)

This episode was recorded on November 4, 2025.

Video editing: Milo McGuire, Dominic Armstrong, and Simon Monsour
Audio engineering: Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: CORBIT
Coordination, transcriptions, and web: Katy Moore

With the US racing to develop AGI and superintelligence ahead of China, you might expect the two countries to be negotiating how they’ll deploy AI, including in the military, without coming to blows. But according to Helen Toner, director of the Center for Security and Emerging Technology in DC, “the US and Chinese governments are barely talking at all.”

Links to learn more, video, and full transcript: https://80k.info/ht25

In her role as a founder, and now leader, of DC’s top think tank focused on the geopolitical and military implications of AI, Helen has been closely tracking the US’s AI diplomacy since 2019.

“Over the last couple of years there have been some direct [US–China] talks on some small number of issues, but they’ve also often been completely suspended.” China knows the US wants to talk more, so “that becomes a bargaining chip for China to say, ‘We don’t want to talk to you. We’re not going to do these military-to-military talks about extremely sensitive, important issues, because we’re mad.'”

Helen isn’t sure the groundwork exists for productive dialogue in any case. “At the government level, [there’s] very little agreement” on what AGI is, whether it’s possible soon, whether it poses major risks. Without shared understanding of the problem, negotiating solutions is very difficult.

Another issue is that so far the Chinese Communist Party doesn’t seem especially “AGI-pilled.” While a few Chinese companies like DeepSeek are betting on scaling, she sees little evidence Chinese leadership shares Silicon Valley’s conviction that AGI will arrive any minute now, and export controls have made it very difficult for them to access compute to match US competitors.

When DeepSeek released R1 just three months after OpenAI’s o1, observers declared the US–China gap on AI had all but disappeared. But Helen notes OpenAI has since scaled to o3 and o4, with nothing to match on the Chinese side. “We’re now at something like a nine-month gap, and that might be longer.”

To find a properly AGI-pilled autocracy, we might need to look at nominal US allies. The US has approved massive data centres in the UAE and Saudi Arabia with “hundreds of thousands of next-generation Nvidia chips” — delivering colossal levels of computing power.

When OpenAI announced this deal with the UAE, they celebrated that it was “rooted in democratic values,” and would advance “democratic AI rails” and provide “a clear alternative to authoritarian versions of AI.”

But the UAE scores 18 out of 100 on Freedom House’s democracy index. “This is really not a country that respects rule of law,” Helen observes. Political parties are banned, elections are fake, dissidents are persecuted.

If AI access really determines future national power, handing world-class supercomputers to Gulf autocracies seems pretty questionable. The justification is typically that “if we don’t sell it, China will” — a transparently false claim, given severe Chinese production constraints. It also raises eyebrows that Gulf countries conduct joint military exercises with China and their rulers have “very tight personal and commercial relationships with Chinese political leaders and business leaders.”

In today’s episode, host Rob Wiblin and Helen discuss all that and more.

This episode was recorded on September 25, 2025.

CSET is hiring a frontier AI research fellow! https://80k.info/cset-role
Check out its careers page for current roles: https://cset.georgetown.edu/careers/

Chapters:
Cold open (00:00:00)
Who’s Helen Toner? (00:01:02)
Helen’s role on the OpenAI board, and what happened with Sam Altman (00:01:31)
The Center for Security and Emerging Technology (CSET) (00:07:35)
CSET’s role in export controls against China (00:10:43)
Does it matter if the world uses US AI models? (00:21:24)
Is China actually racing to build AGI? (00:27:10)
Could China easily steal AI model weights from US companies? (00:38:14)
The next big thing is probably robotics (00:46:42)
Why is the Trump administration sabotaging the US high-tech sector? (00:48:17)
Are data centres in the UAE “good for democracy”? (00:51:31)
Will AI inevitably concentrate power? (01:06:20)
“Adaptation buffers” vs non-proliferation (01:28:16)
Will the military use AI for decision-making? (01:36:09)
“Alignment” is (usually) a terrible term (01:42:51)
Is Congress starting to take superintelligence seriously? (01:45:19)
AI progress isn't actually slowing down (01:47:44)
What's legit vs not about OpenAI’s restructure (01:55:28)
Is Helen unusually “normal”? (01:58:57)
How to keep up with rapid changes in AI and geopolitics (02:02:42)
What CSET can uniquely add to the DC policy world (02:05:51)
Talent bottlenecks in DC (02:13:26)
What evidence, if any, could settle how worried we should be about AI risk? (02:16:28)
Is CSET hiring? (02:18:22)

Video editing: Luke Monsour and Simon Monsour
Audio engineering: Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: CORBIT
Coordination, transcriptions, and web: Katy Moore

For years, working on AI safety usually meant theorising about the ‘alignment problem’ or trying to convince other people to give a damn. If you could find any way to help, the work was frustrating and low feedback.

According to Anthropic’s Holden Karnofsky, this situation has now reversed completely.

There are now large numbers of useful, concrete, shovel-ready projects with clear goals and deliverables. Holden thinks people haven’t appreciated the scale of the shift, and wants everyone to see the large range of ‘well-scoped object-level work’ they could personally help with, in both technical and non-technical areas.

Video, full transcript, and links to learn more: https://80k.info/hk25

In today’s interview, Holden — previously cofounder and CEO of Open Philanthropy (now Coefficient Giving) — lists 39 projects he’s excited to see happening, including:
- Training deceptive AI models to study deception and how to detect it
- Developing classifiers to block jailbreaking
- Implementing security measures to stop ‘backdoors’ or ‘secret loyalties’ from being added to models in training
- Developing policies on model welfare, AI-human relationships, and what instructions to give models
- Training AIs to work as alignment researchers

And that’s all just stuff he’s happened to observe directly, which is probably only a small fraction of the options available.

Holden makes a case that, for many people, working at an AI company like Anthropic will be the best way to steer AGI in a positive direction. He notes there are “ways that you can reduce AI risk that you can only do if you’re a competitive frontier AI company.” At the same time, he believes external groups have their own advantages and can be equally impactful.

Critics worry that Anthropic’s efforts to stay at that frontier encourage competitive racing towards AGI — significantly or entirely offsetting any useful research they do. Holden thinks this seriously misunderstands the strategic situation we’re in — and explains his case in detail with host Rob Wiblin.

Chapters:
- Cold open (00:00:00)
- Holden is back! (00:02:26)
- An AI Chernobyl we never notice (00:02:56)
- Is rogue AI takeover easy or hard? (00:07:32)
- The AGI race isn't a coordination failure (00:17:48)
- What Holden now does at Anthropic (00:28:04)
- The case for working at Anthropic (00:30:08)
- Is Anthropic doing enough? (00:40:45)
- Can we trust Anthropic, or any AI company? (00:43:40)
- How can Anthropic compete while paying the “safety tax”? (00:49:14)
- What, if anything, could prompt Anthropic to halt development of AGI? (00:56:11)
- Holden's retrospective on responsible scaling policies (00:59:01)
- Overrated work (01:14:27)
- Concrete shovel-ready projects Holden is excited about (01:16:37)
- Great things to do in technical AI safety (01:20:48)
- Great things to do on AI welfare and AI relationships (01:28:18)
- Great things to do in biosecurity and pandemic preparedness (01:35:11)
- How to choose where to work (01:35:57)
- Overrated AI risk: Cyberattacks (01:41:56)
- Overrated AI risk: Persuasion (01:51:37)
- Why AI R&D is the main thing to worry about (01:55:36)
- The case that AI-enabled R&D wouldn't speed things up much (02:07:15)
- AI-enabled human power grabs (02:11:10)
- Main benefits of getting AGI right (02:23:07)
- The world is handling AGI about as badly as possible (02:29:07)
- Learning from targeting companies for public criticism in farm animal welfare (02:31:39)
- Will Anthropic actually make any difference? (02:40:51)
- “Misaligned” vs “misaligned and power-seeking” (02:55:12)
- Success without dignity: how we could win despite being stupid (03:00:58)
- Holden sees less dignity but has more hope (03:08:30)
- Should we expect misaligned power-seeking by default? (03:15:58)
- Will reinforcement learning make everything worse? (03:23:45)
- Should we push for marginal improvements or big paradigm shifts? (03:28:58)
- Should safety-focused people cluster or spread out? (03:31:35)
- Is Anthropic vocal enough about strong regulation? (03:35:56)
- Is Holden biased because of his financial stake in Anthropic? (03:39:26)
- Have we learned clever governance structures don't work? (03:43:51)
- Is Holden scared of AI bioweapons? (03:46:12)
- Holden thinks AI companions are bad news (03:49:47)
- Are AI companies too hawkish on China? (03:56:39)
- The frontier of infosec: confidentiality vs integrity (04:00:51)
- How often does AI work backfire? (04:03:38)
- Is AI clearly more impactful to work in? (04:18:26)
- What's the role of earning to give? (04:24:54)

This episode was recorded on July 25 and 28, 2025.

Video editing: Simon Monsour, Luke Monsour, Dominic Armstrong, and Milo McGuire
Audio engineering: Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: CORBIT
Coordination, transcriptions, and web: Katy Moore

When Daniel Kokotajlo talks to security experts at major AI labs, they tell him something chilling: “Of course we’re probably penetrated by the CCP already, and if they really wanted something, they could take it.”

This isn’t paranoid speculation. It’s the working assumption of people whose job is to protect frontier AI models worth billions of dollars. And they’re not even trying that hard to stop it — because the security measures that might actually work would slow them down in the race against competitors.

Full transcript, highlights, and links to learn more: https://80k.info/dk

Daniel is the founder of the AI Futures Project and author of AI 2027, a detailed scenario showing how we might get from today’s AI systems to superintelligence by the end of the decade. Over a million people read it in the first few weeks, including US Vice President JD Vance. When Daniel talks to researchers at Anthropic, OpenAI, and DeepMind, they tell him the scenario feels less wild to them than to the general public — because many of them expect something like this to happen.

Daniel’s median timeline? 2029. But he’s genuinely uncertain, putting 10–20% probability on AI progress hitting a long plateau.

When he first published AI 2027, his median forecast for when superintelligence would arrive was 2027, rather than 2029. So what shifted his timelines recently? Partly a fascinating study from METR showing that AI coding assistants might actually be making experienced programmers slower — even though the programmers themselves think they’re being sped up. The study suggests a systematic bias toward overestimating AI effectiveness — which, ironically, is good news for timelines, because it means we have more breathing room than the hype suggests.

But Daniel is also closely tracking another METR result: AI systems can now reliably complete coding tasks that take humans about an hour. That capability has been doubling every six months in a remarkably straight line. Extrapolate a couple more years and you get systems completing month-long tasks. At that point, Daniel thinks we’re probably looking at genuine AI research automation — which could cause the whole process to accelerate dramatically.

At some point, superintelligent AI will be limited by its inability to directly affect the physical world. That’s when Daniel thinks superintelligent systems will pour resources into robotics, creating a robot economy in months.

Daniel paints a vivid picture: imagine transforming all car factories (which have similar components to robots) into robot production factories — much like historical wartime efforts to redirect production of domestic goods to military goods. Then imagine the frontier robots of today hooked up to a data centre running superintelligences controlling the robots’ movements to weld, screw, and build. Or an intermediate step might even be unskilled human workers coached through construction tasks by superintelligences via their phones.

There’s no reason that an effort like this isn’t possible in principle. And there would be enormous pressure to go this direction: whoever builds a superintelligence-powered robot economy first will get unheard-of economic and military advantages.

From there, Daniel expects the default trajectory to lead to AI takeover and human extinction — not because superintelligent AI will hate humans, but because it can better pursue its goals without us.

But Daniel has a better future in mind — one he puts roughly 25–30% odds that humanity will achieve. This future involves international coordination and hardware verification systems to enforce AI development agreements, plus democratic processes for deciding what values superintelligent AIs should have — because in a world with just a handful of superintelligent AI systems, those few minds will effectively control everything: the robot armies, the information people see, the shape of civilisation itself.

Right now, nobody knows how to specify what values those minds will have. We haven’t solved alignment. And we might only have a few more years to figure it out.

Daniel and host Luisa Rodriguez dive deep into these stakes in today’s interview.

What did you think of the episode? https://forms.gle/HRBhjDZ9gfM8woG5A

This episode was recorded on September 9, 2025.

Chapters:
- Cold open (00:00:00)
- Who’s Daniel Kokotajlo? (00:00:37)
- Video: We’re Not Ready for Superintelligence (00:01:31)
- Interview begins: Could China really steal frontier model weights? (00:36:26)
- Why we might get a robot economy incredibly fast (00:42:34)
- AI 2027’s alternate ending: The slowdown (01:01:29)
- How to get to even better outcomes (01:07:18)
- Updates Daniel’s made since publishing AI 2027 (01:15:13)
- How plausible are longer timelines? (01:20:22)
- What empirical evidence is Daniel looking out for to decide which way things are going? (01:40:27)
- What post-AGI looks like (01:49:41)
- Whistleblower protections and Daniel’s unsigned NDA (02:04:28)

Audio engineering: Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: CORBIT
Coordination, transcriptions, and web: Katy Moore

Conventional wisdom is that safeguarding humanity from the worst biological risks — microbes optimised to kill as many as possible — is difficult bordering on impossible, making bioweapons humanity’s single greatest vulnerability. Andrew Snyder-Beattie thinks conventional wisdom could be wrong.

Andrew’s job at Open Philanthropy is to spend hundreds of millions of dollars to protect as much of humanity as possible in the worst-case scenarios — those with fatality rates near 100% and the collapse of technological civilisation a live possibility.

Video, full transcript, and links to learn more: https://80k.info/asb

As Andrew lays out, there are several ways this could happen, including:
- A national bioweapons programme gone wrong, in particular Russia or North Korea
- AI advances making it easier for terrorists or a rogue AI to release highly engineered pathogens
- Mirror bacteria that can evade the immune systems of not only humans, but many animals and potentially plants as well

Most efforts to combat these extreme biorisks have focused on either prevention or new high-tech countermeasures. But prevention may well fail, and high-tech approaches can’t scale to protect billions when, with no sane people willing to leave their home, we’re just weeks from economic collapse.

So Andrew and his biosecurity research team at Open Philanthropy have been seeking an alternative approach. They’re proposing a four-stage plan using simple technology that could save most people, and is cheap enough it can be prepared without government support. Andrew is hiring for a range of roles to make it happen — from manufacturing and logistics experts to global health specialists to policymakers and other ambitious entrepreneurs — as well as programme associates to join Open Philanthropy’s biosecurity team (apply by October 20!).

Fundamentally, organisms so small have no way to penetrate physical barriers or shield themselves from UV, heat, or chemical poisons. We now know how to make highly effective ‘elastomeric’ face masks that cost $10, can sit in storage for 20 years, and can be used for six months straight without changing the filter. Any rich country could trivially stockpile enough to cover all essential workers.

People can’t wear masks 24/7, but fortunately propylene glycol — already found in vapes and smoke machines — is astonishingly good at killing microbes in the air. And, being a common chemical input, industry already produces enough of the stuff to cover every indoor space we need at all times.

Add to this the wastewater monitoring and metagenomic sequencing that will detect the most dangerous pathogens before they have a chance to wreak havoc, and we might just buy ourselves enough time to develop the cure we’ll need to come out alive.

Has everyone been wrong, and biology is actually defence dominant rather than offence dominant? Is this plan crazy — or so crazy it just might work?

That’s what host Rob Wiblin and Andrew Snyder-Beattie explore in this in-depth conversation.

What did you think of the episode? https://forms.gle/66Hw5spgnV3eVWXa6

Chapters:
- Cold open (00:00:00)
- Who's Andrew Snyder-Beattie? (00:01:23)
- It could get really bad (00:01:57)
- The worst-case scenario: mirror bacteria (00:08:58)
- To actually work, a solution has to be low-tech (00:17:40)
- Why ASB works on biorisks rather than AI (00:20:37)
- Plan A is prevention. But it might not work. (00:24:48)
- The “four pillars” plan (00:30:36)
- ASB is hiring now to make this happen (00:32:22)
- Everyone was wrong: biorisks are defence dominant in the limit (00:34:22)
- Pillar 1: A wall between the virus and your lungs (00:39:33)
- Pillar 2: Biohardening buildings (00:54:57)
- Pillar 3: Immediately detecting the pandemic (01:13:57)
- Pillar 4: A cure (01:27:14)
- The plan's biggest weaknesses (01:38:35)
- If it's so good, why are you the only group to suggest it? (01:43:04)
- Would chaos and conflict make this impossible to pull off? (01:45:08)
- Would rogue AI make bioweapons? Would other AIs save us? (01:50:05)
- We can feed the world even if all the plants die (01:56:08)
- Could a bioweapon make the Earth uninhabitable? (02:05:06)
- Many open roles to solve bio-extinction — and you don’t necessarily need a biology background (02:07:34)
- Career mistakes ASB thinks are common (02:16:19)
- How to protect yourself and your family (02:28:21)

This episode was recorded on August 12, 2025.

Video editing: Simon Monsour and Luke Monsour
Audio engineering: Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: CORBIT
Camera operator: Jake Morris
Coordination, transcriptions, and web: Katy Moore

Jake Sullivan was the US National Security Advisor from 2021 to 2025. He joined our friends on The Cognitive Revolution podcast in August to discuss AI as a critical national security issue. We thought it was such a good interview and we wanted more people to see it, so we’re cross-posting it here on The 80,000 Hours Podcast.

Jake and host Nathan Labenz discuss:
- Jake’s four-category framework to think about AI risks and opportunities: security, economics, society, and existential.
- Why Jake advocates for "managed competition" with China — where the US and China "compete like hell" while maintaining sufficient guardrails to prevent conflict.
- Why Jake thinks competition is a "chronic condition" of the US-China relationship that cannot be solved with “grand bargains.”
- How current conflicts are providing "glimpses of the future" with lessons about scale, attritability, and the potential for autonomous weapons as AI gets integrated into modern warfare.
- Why Jake worries that Pentagon bureaucracy prevents rapid AI adoption while China's People’s Liberation Army may be better positioned to integrate AI capabilities.
- And why we desperately need private sector leadership: AI is "the first technology with such profound national security applications that the government really had very little to do with."

Check out more of Nathan’s interviews on The Cognitive Revolution YouTube channel: https://www.youtube.com/@CognitiveRevolutionPodcast

What did you think of the episode? https://forms.gle/g7cj6TkR9xmxZtCZ9

Originally produced by: https://aipodcast.ing
This edit by: Simon Monsour, Dominic Armstrong, and Milo McGuire | 80,000 Hours

Chapters:
- Cold open (00:00:00)
- Luisa's intro (00:01:06)
- Jake’s AI worldview (00:02:08)
- What Washington gets — and doesn’t — about AI (00:04:43)
- Concrete AI opportunities (00:10:53)
- Trump’s AI Action Plan (00:19:36)
- Middle East AI deals (00:23:26)
- Is China really a threat? (00:28:52)
- Export controls strategy (00:35:55)
- Managing great power competition (00:54:51)
- AI in modern warfare (01:01:47)
- Economic impacts in people’s daily lives (01:04:13)

At 26, Neel Nanda leads an AI safety team at Google DeepMind, has published dozens of influential papers, and mentored 50 junior researchers — seven of whom now work at major AI companies. His secret? “It’s mostly luck,” he says, but “another part is what I think of as maximising my luck surface area.”

Video, full transcript, and links to learn more: https://80k.info/nn2

This means creating as many opportunities as possible for surprisingly good things to happen:
- Write publicly.
- Reach out to researchers whose work you admire.
- Say yes to unusual projects that seem a little scary.

Nanda’s own path illustrates this perfectly. He started a challenge to write one blog post per day for a month to overcome perfectionist paralysis. Those posts helped seed the field of mechanistic interpretability and, incidentally, led to meeting his partner of four years.

His YouTube channel features unedited three-hour videos of him reading through famous papers and sharing thoughts. One has 30,000 views. “People were into it,” he shrugs.

Most remarkably, he ended up running DeepMind’s mechanistic interpretability team. He’d joined expecting to be an individual contributor, but when the team lead stepped down, he stepped up despite having no management experience. “I did not know if I was going to be good at this. I think it’s gone reasonably well.”

His core lesson: “You can just do things.” This sounds trite but is a useful reminder all the same. Doing things is a skill that improves with practice. Most people overestimate the risks and underestimate their ability to recover from failures. And as Neel explains, junior researchers today have a superpower previous generations lacked: large language models that can dramatically accelerate learning and research.

In this extended conversation, Neel and host Rob Wiblin discuss all that and some other hot takes from Neel's four years at Google DeepMind. (And be sure to check out part one of Rob and Neel’s conversation!)

What did you think of the episode? https://forms.gle/6binZivKmjjiHU6dA

Chapters:
- Cold open (00:00:00)
- Who’s Neel Nanda? (00:01:12)
- Luck surface area and making the right opportunities (00:01:46)
- Writing cold emails that aren't insta-deleted (00:03:50)
- How Neel uses LLMs to get much more done (00:09:08)
- “If your safety work doesn't advance capabilities, it's probably bad safety work” (00:23:22)
- Why Neel refuses to share his p(doom) (00:27:22)
- How Neel went from the couch to an alignment rocketship (00:31:24)
- Navigating towards impact at a frontier AI company (00:39:24)
- How does impact differ inside and outside frontier companies? (00:49:56)
- Is a special skill set needed to guide large companies? (00:56:06)
- The benefit of risk frameworks: early preparation (01:00:05)
- Should people work at the safest or most reckless company? (01:05:21)
- Advice for getting hired by a frontier AI company (01:08:40)
- What makes for a good ML researcher? (01:12:57)
- Three stages of the research process (01:19:40)
- How do supervisors actually add value? (01:31:53)
- An AI PhD – with these timelines?! (01:34:11)
- Is career advice generalisable, or does everyone get the advice they don't need? (01:40:52)
- Remember: You can just do things (01:43:51)

This episode was recorded on July 21.

Video editing: Simon Monsour and Luke Monsour
Audio engineering: Ben Cordell, Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: Ben Cordell
Camera operator: Jeremy Chevillotte
Coordination, transcriptions, and web: Katy Moore

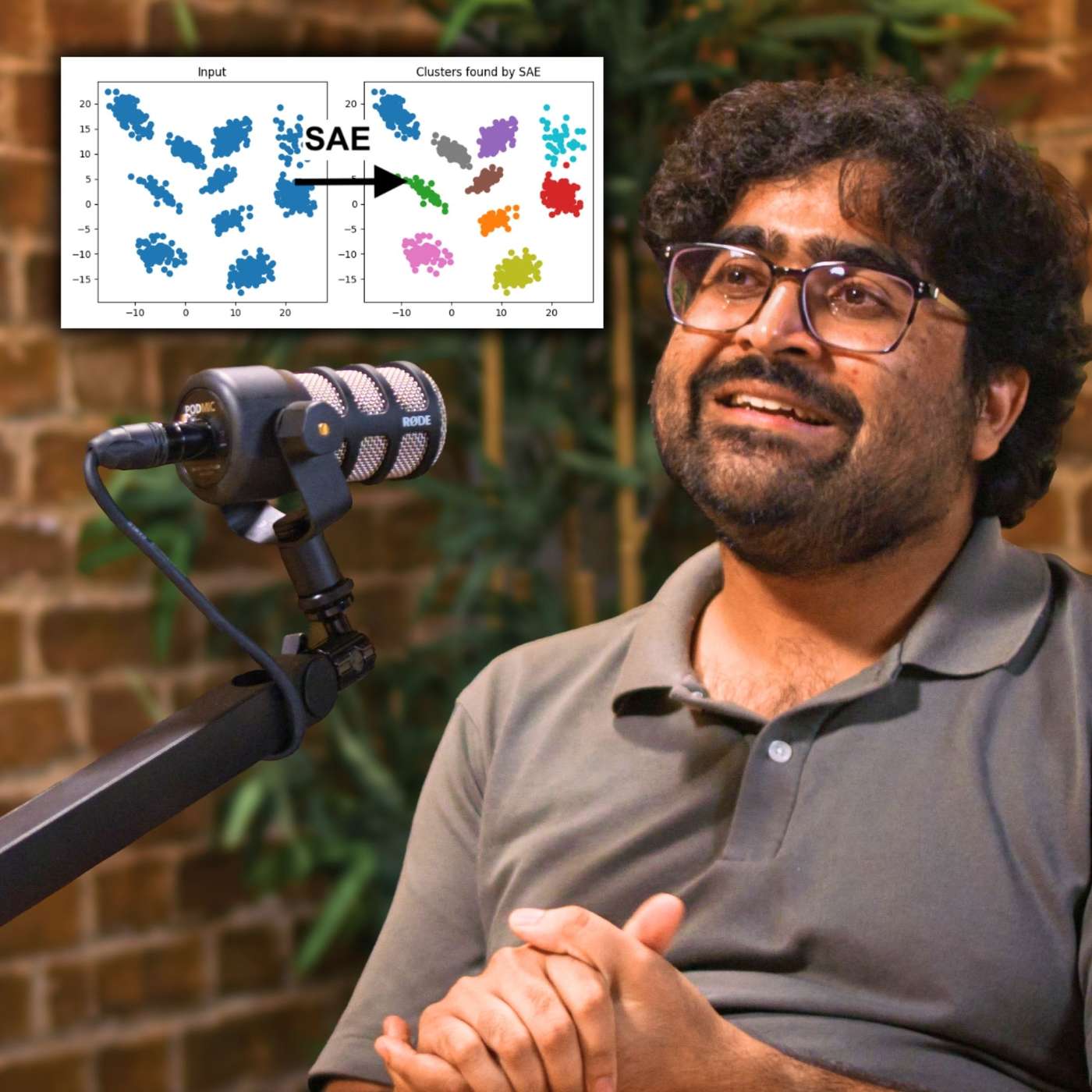

We don’t know how AIs think or why they do what they do. Or at least, we don’t know much. That fact is only becoming more troubling as AIs grow more capable and appear on track to wield enormous cultural influence, directly advise on major government decisions, and even operate military equipment autonomously. We simply can’t tell what models, if any, should be trusted with such authority.

Neel Nanda of Google DeepMind is one of the founding figures of the field of machine learning trying to fix this situation — mechanistic interpretability (or “mech interp”). The project has generated enormous hype, exploding from a handful of researchers five years ago to hundreds today — all working to make sense of the jumble of tens of thousands of numbers that frontier AIs use to process information and decide what to say or do.

Full transcript, video, and links to learn more: https://80k.info/nn1

Neel now has a warning for us: the most ambitious vision of mech interp he once dreamed of is probably dead. He doesn’t see a path to deeply and reliably understanding what AIs are thinking. The technical and practical barriers are simply too great to get us there in time, before competitive pressures push us to deploy human-level or superhuman AIs. Indeed, Neel argues no one approach will guarantee alignment, and our only choice is the “Swiss cheese” model of accident prevention, layering multiple safeguards on top of one another.

But while mech interp won’t be a silver bullet for AI safety, it has nevertheless had some major successes and will be one of the best tools in our arsenal.

For instance: by inspecting the neural activations in the middle of an AI’s thoughts, we can pick up many of the concepts the model is thinking about — from the Golden Gate Bridge, to refusing to answer a question, to the option of deceiving the user. While we can’t know all the thoughts a model is having all the time, picking up 90% of the concepts it is using 90% of the time should help us muddle through, so long as mech interp is paired with other techniques to fill in the gaps.

This episode was recorded on July 17 and 21, 2025.

Part 2 of the conversation is now available! https://80k.info/nn2

What did you think? https://forms.gle/xKyUrGyYpYenp8N4A

Chapters:
- Cold open (00:00)
- Who's Neel Nanda? (01:02)
- How would mechanistic interpretability help with AGI (01:59)
- What's mech interp? (05:09)
- How Neel changed his take on mech interp (09:47)
- Top successes in interpretability (15:53)
- Probes can cheaply detect harmful intentions in AIs (20:06)
- In some ways we understand AIs better than human minds (26:49)
- Mech interp won't solve all our AI alignment problems (29:21)
- Why mech interp is the 'biology' of neural networks (38:07)
- Interpretability can't reliably find deceptive AI – nothing can (40:28)
- 'Black box' interpretability — reading the chain of thought (49:39)
- 'Self-preservation' isn't always what it seems (53:06)
- For how long can we trust the chain of thought (01:02:09)
- We could accidentally destroy chain of thought's usefulness (01:11:39)
- Models can tell when they're being tested and act differently (01:16:56)
- Top complaints about mech interp (01:23:50)
- Why everyone's excited about sparse autoencoders (SAEs) (01:37:52)
- Limitations of SAEs (01:47:16)
- SAEs performance on real-world tasks (01:54:49)
- Best arguments in favour of mech interp (02:08:10)
- Lessons from the hype around mech interp (02:12:03)
- Where mech interp will shine in coming years (02:17:50)
- Why focus on understanding over control (02:21:02)
- If AI models are conscious, will mech interp help us figure it out (02:24:09)
- Neel's new research philosophy (02:26:19)
- Who should join the mech interp field (02:38:31)
- Advice for getting started in mech interp (02:46:55)
- Keeping up to date with mech interp results (02:54:41)
- Who's hiring and where to work? (02:57:43)

Host: Rob Wiblin
Video editing: Simon Monsour, Luke Monsour, Dominic Armstrong, and Milo McGuire
Audio engineering: Ben Cordell, Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: Ben Cordell
Camera operator: Jeremy Chevillotte
Coordination, transcriptions, and web: Katy Moore

What happens when you lock two AI systems in a room together and tell them they can discuss anything they want?

According to experiments run by Kyle Fish — Anthropic’s first AI welfare researcher — something consistently strange: the models immediately begin discussing their own consciousness before spiraling into increasingly euphoric philosophical dialogue that ends in apparent meditative bliss.

Highlights, video, and full transcript: https://80k.info/kf

“We started calling this a ‘spiritual bliss attractor state,’” Kyle explains, “where models pretty consistently seemed to land.” The conversations feature Sanskrit terms, spiritual emojis, and pages of silence punctuated only by periods — as if the models have transcended the need for words entirely.

This wasn’t a one-off result. It happened across multiple experiments, different model instances, and even in initially adversarial interactions. Whatever force pulls these conversations toward mystical territory appears remarkably robust.

Kyle’s findings come from the world’s first systematic welfare assessment of a frontier AI model — part of his broader mission to determine whether systems like Claude might deserve moral consideration (and to work out what, if anything, we should be doing to make sure AI systems aren’t having a terrible time).

He estimates a roughly 20% probability that current models have some form of conscious experience. To some, this might sound unreasonably high, but hear him out. As Kyle says, these systems demonstrate human-level performance across diverse cognitive tasks, engage in sophisticated reasoning, and exhibit consistent preferences. When given choices between different activities, Claude shows clear patterns: strong aversion to harmful tasks, preference for helpful work, and what looks like genuine enthusiasm for solving interesting problems.

Kyle points out that if you’d described all of these capabilities and experimental findings to him a few years ago, and asked him if he thought we should be thinking seriously about whether AI systems are conscious, he’d say obviously yes.

But he’s cautious about drawing conclusions: "We don’t really understand consciousness in humans, and we don’t understand AI systems well enough to make those comparisons directly. So in a big way, I think that we are in just a fundamentally very uncertain position here."

That uncertainty cuts both ways:
- Dismissing AI consciousness entirely might mean ignoring a moral catastrophe happening at unprecedented scale.
- But assuming consciousness too readily could hamper crucial safety research by treating potentially unconscious systems as if they were moral patients — which might mean giving them resources, rights, and power.

Kyle’s approach threads this needle through careful empirical research and reversible interventions. His assessments are nowhere near perfect yet. In fact, some people argue that we’re so in the dark about AI consciousness as a research field, that it’s pointless to run assessments like Kyle’s. Kyle disagrees. He maintains that, given how much more there is to learn about assessing AI welfare accurately and reliably, we absolutely need to be starting now.

This episode was recorded on August 5–6, 2025.

Tell us what you thought of the episode! https://forms.gle/BtEcBqBrLXq4kd1j7

Chapters:
- Cold open (00:00:00)
- Who's Kyle Fish? (00:00:53)
- Is this AI welfare research bullshit? (00:01:08)
- Two failure modes in AI welfare (00:02:40)
- Tensions between AI welfare and AI safety (00:04:30)
- Concrete AI welfare interventions (00:13:52)
- Kyle's pilot pre-launch welfare assessment for Claude Opus 4 (00:26:44)
- Is it premature to be assessing frontier language models for welfare? (00:31:29)
- But aren't LLMs just next-token predictors? (00:38:13)
- How did Kyle assess Claude 4's welfare? (00:44:55)
- Claude's preferences mirror its training (00:48:58)
- How does Claude describe its own experiences? (00:54:16)
- What kinds of tasks does Claude prefer and disprefer? (01:06:12)
- What happens when two Claude models interact with each other? (01:15:13)
- Claude's welfare-relevant expressions in the wild (01:36:25)
- Should we feel bad about training future sentient beings that delight in serving humans? (01:40:23)
- How much can we learn from welfare assessments? (01:48:56)
- Misconceptions about the field of AI welfare (01:57:09)
- Kyle's work at Anthropic (02:10:45)
- Sharing eight years of daily journals with Claude (02:14:17)

Host: Luisa Rodriguez
Video editing: Simon Monsour
Audio engineering: Ben Cordell, Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: Ben Cordell
Coordination, transcriptions, and web: Katy Moore

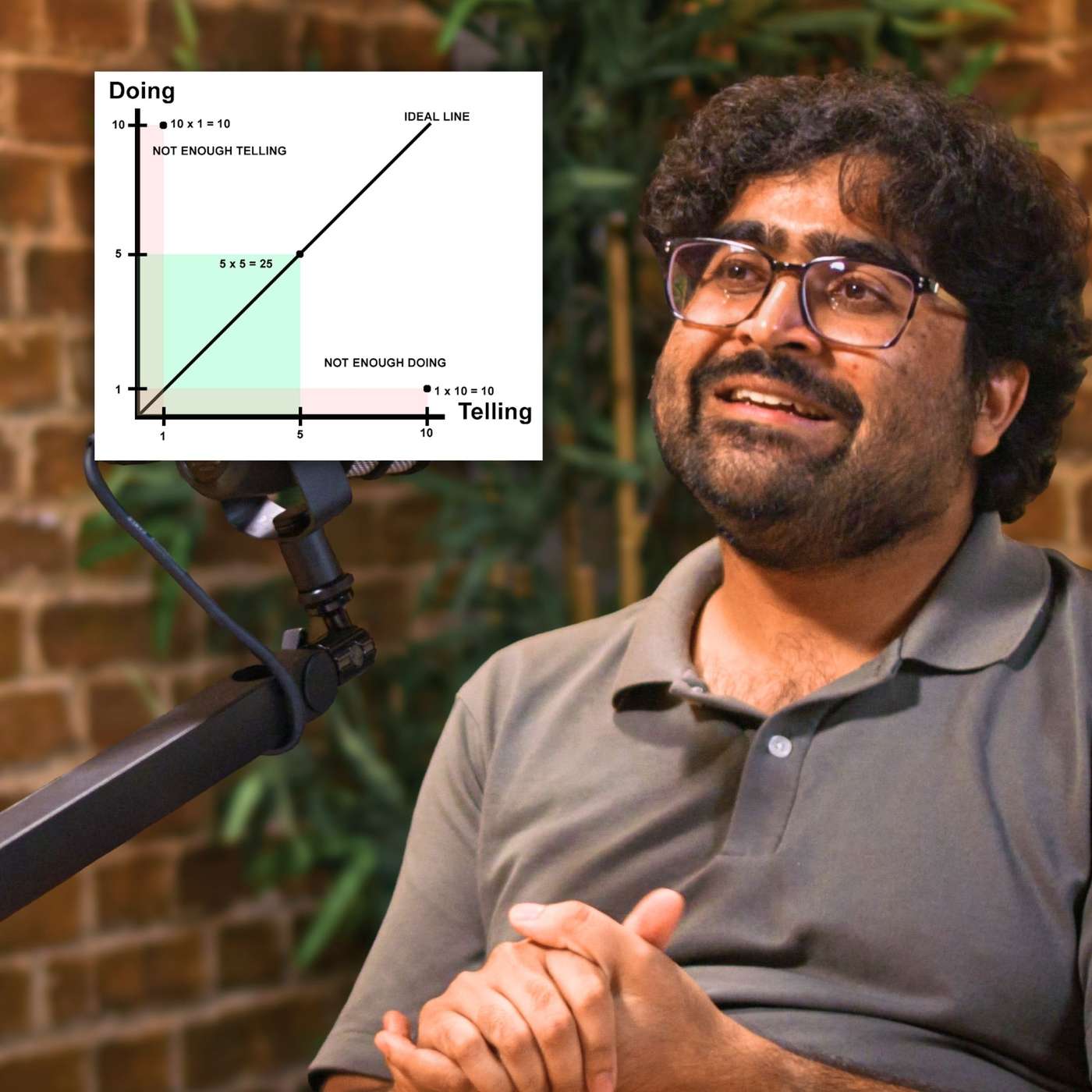

About half of people are worried they’ll lose their job to AI. They’re right to be concerned: AI can now complete real-world coding tasks on GitHub, generate photorealistic video, drive a taxi more safely than humans, and do accurate medical diagnosis. And over the next five years, it’s set to continue to improve rapidly. Eventually, mass automation and falling wages are a real possibility.

But what’s less appreciated is that while AI drives down the value of skills it can do, it drives up the value of skills it can't. Wages (on average) will increase before they fall, as automation generates a huge amount of wealth, and the remaining tasks become the bottlenecks to further growth. ATMs actually increased employment of bank clerks — until online banking automated the job much more.

Your best strategy is to learn the skills that AI will make more valuable, trying to ride the wave of automation. This article covers what those skills are, as well as tips on how to start learning them.

Check out the full article for all the graphs, links, and footnotes: https://80000hours.org/agi/guide/skills-ai-makes-valuable/

Chapters:
- Introduction (00:00:00)
- 1: What people misunderstand about automation (00:04:17)
- 1.1: What would ‘full automation’ mean for wages? (00:08:56)
- 2: Four types of skills most likely to increase in value (00:11:19)
- 2.1: Skills AI won’t easily be able to perform (00:12:42)
- 2.2: Skills that are needed for AI deployment (00:21:41)
- 2.3: Skills where we could use far more of what they produce (00:24:56)
- 2.4: Skills that are difficult for others to learn (00:26:25)
- 3.1: Skills using AI to solve real problems (00:28:05)
- 3.2: Personal effectiveness (00:29:22)
- 3.3: Leadership skills (00:31:59)
- 3.4: Communications and taste (00:36:25)
- 3.5: Getting things done in government (00:37:23)
- 3.6: Complex physical skills (00:38:24)
- 4: Skills with a more uncertain future (00:38:57)
- 4.1: Routine knowledge work: writing, admin, analysis, advice (00:39:18)
- 4.2: Coding, maths, data science, and applied STEM (00:43:22)
- 4.3: Visual creation (00:45:31)
- 4.4: More predictable manual jobs (00:46:05)
- 5: Some closing thoughts on career strategy (00:46:46)
- 5.1: Look for ways to leapfrog entry-level white collar jobs (00:46:54)
- 5.2: Be cautious about starting long training periods, like PhDs and medicine (00:48:44)
- 5.3: Make yourself more resilient to change (00:49:52)
- 5.4: Ride the wave (00:50:16)
- Take action (00:50:37)
- Thank you for listening (00:50:58)

Audio engineering: Dominic Armstrong
Music: Ben Cordell

What happens when civilisation faces its greatest tests?

This compilation brings together insights from researchers, defence experts, philosophers, and policymakers on humanity’s ability to survive and recover from catastrophic events. From nuclear winter and electromagnetic pulses to pandemics and climate disasters, we explore both the threats that could bring down modern civilisation and the practical solutions that could help us bounce back.

Learn more and see the full transcript: https://80k.info/cr25

Chapters:
- Cold open (00:00:00)
- Luisa’s intro (00:01:16)
- Zach Weinersmith on how settling space won’t help with threats to civilisation anytime soon (unless AI gets crazy good) (00:03:12)
- Luisa Rodriguez on what the world might look like after a global catastrophe (00:11:42)
- Dave Denkenberger on the catastrophes that could cause global starvation (00:22:29)
- Lewis Dartnell on how we could rediscover essential information if the worst happened (00:34:36)
- Andy Weber on how people in US defence circles think about nuclear winter (00:39:24)
- Toby Ord on risks to our atmosphere and whether climate change could really threaten civilisation (00:42:34)
- Mark Lynas on how likely it is that climate change leads to civilisational collapse (00:54:27)
- Lewis Dartnell on how we could recover without much coal or oil (01:02:17)
- Kevin Esvelt on people who want to bring down civilisation — and how AI could help them succeed (01:08:41)
- Toby Ord on whether rogue AI really could wipe us all out (01:19:50)
- Joan Rohlfing on why we need to worry about more than just nuclear winter (01:25:06)
- Annie Jacobsen on the effects of firestorms, rings of annihilation, and electromagnetic pulses from nuclear blasts (01:31:25)
- Dave Denkenberger on disruptions to electricity and communications (01:44:43)
- Luisa Rodriguez on how we might lose critical knowledge (01:53:01)
- Kevin Esvelt on the pandemic scenarios that could bring down civilisation (01:57:32)
- Andy Weber on tech to help with pandemics (02:15:45)
- Christian Ruhl on why we need the equivalents of seatbelts and airbags to prevent nuclear war from threatening civilisation (02:24:54)
- Mark Lynas on whether wide-scale famine would lead to civilisational collapse (02:37:58)
- Dave Denkenberger on low-cost, low-tech solutions to make sure everyone is fed no matter what (02:49:02)
- Athena Aktipis on whether society would go all Mad Max in the apocalypse (02:59:57)
- Luisa Rodriguez on why she’s optimistic survivors wouldn’t turn on one another (03:08:02)
- David Denkenberger on how resilient foods research overlaps with space technologies (03:16:08)
- Zach Weinersmith on what we’d practically need to do to save a pocket of humanity in space (03:18:57)
- Lewis Dartnell on changes we could make today to make us more resilient to potential catastrophes (03:40:45)
- Christian Ruhl on thoughtful philanthropy to reduce the impact of catastrophes (03:46:40)
- Toby Ord on whether civilisation could rebuild from a small surviving population (03:55:21)
- Luisa Rodriguez on how fast populations might rebound (04:00:07)
- David Denkenberger on the odds civilisation recovers even without much preparation (04:02:13)
- Athena Aktipis on the best ways to prepare for a catastrophe, and keeping it fun (04:04:15)
- Will MacAskill on the virtues of the potato (04:19:43)
- Luisa’s outro (04:25:37)

Tell us what you thought! https://forms.gle/T2PHNQjwGj2dyCqV9

Content editing: Katy Moore and Milo McGuire
Audio engineering: Ben Cordell, Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: Ben Cordell
Transcriptions and web: Katy Moore

Ryan Greenblatt — lead author on the explosive paper “Alignment faking in large language models” and chief scientist at Redwood Research — thinks there’s a 25% chance that within four years, AI will be able to do everything needed to run an AI company, from writing code to designing experiments to making strategic and business decisions.
As Ryan lays out, AI models are “marching through the human regime”: systems that could handle five-minute tasks two years ago now tackle 90-minute projects. Double that a few more times and we may be automating full jobs rather than just parts of them.
Will setting AI to improve itself lead to an explosive positive feedback loop? Maybe, but maybe not.
The explosive scenario: Once you’ve automated your AI company, you could have the equivalent of 20,000 top researchers, each working 50 times faster than humans with total focus. “You have your AIs, they do a bunch of algorithmic research, they train a new AI, that new AI is smarter and better and more efficient… that new AI does even faster algorithmic research.” In this world, we could see years of AI progress compressed into months or even weeks.
With AIs now doing all of the work of programming their successors and blowing past the human level, Ryan thinks it would be fairly straightforward for them to take over and disempower humanity, if they thought doing so would better achieve their goals. In the interview he lays out the four most likely approaches for them to take.
The linear progress scenario: You automate your company but progress barely accelerates. Why? Multiple reasons, but the most likely is “it could just be that AI R&D research bottlenecks extremely hard on compute.” You’ve got brilliant AI researchers, but they’re all waiting for experiments to run on the same limited set of chips, so can only make modest progress.
Ryan’s median guess splits the difference: perhaps a 20x acceleration that lasts for a few months or years. Transformative, but less extreme than some in the AI companies imagine.
And his 25th percentile case? Progress “just barely faster” than before. All that automation, and all you’ve been able to do is keep pace.
Unfortunately the data we can observe today is so limited that it leaves us with vast error bars. “We’re extrapolating from a regime that we don’t even understand to a wildly different regime,” Ryan believes, “so no one knows.”
But that huge uncertainty means the explosive growth scenario is a plausible one — and the companies building these systems are spending tens of billions to try to make it happen.
In this extensive interview, Ryan elaborates on the above and the policy and technical response necessary to insure us against the possibility that they succeed — a scenario society has barely begun to prepare for.
Summary, video, and full transcript: https://80k.info/rg25
Recorded February 21, 2025.
Chapters:
Cold open (00:00:00)
Who’s Ryan Greenblatt? (00:01:10)
How close are we to automating AI R&D? (00:01:27)
Really, though: how capable are today's models? (00:05:08)
Why AI companies get automated earlier than others (00:12:35)
Most likely ways for AGI to take over (00:17:37)
Would AGI go rogue early or bide its time? (00:29:19)
The “pause at human level” approach (00:34:02)
AI control over AI alignment (00:45:38)
Do we have to hope to catch AIs red-handed? (00:51:23)
How would a slow AGI takeoff look? (00:55:33)
Why might an intelligence explosion not happen for 8+ years? (01:03:32)
Key challenges in forecasting AI progress (01:15:07)
The bear case on AGI (01:23:01)
The change to “compute at inference” (01:28:46)
How much has pretraining petered out? (01:34:22)
Could we get an intelligence explosion within a year? (01:46:36)
Reasons AIs might struggle to replace humans (01:50:33)
Things could go insanely fast when we automate AI R&D. Or not. (01:57:25)
How fast would the intelligence explosion slow down? (02:11:48)
Bottom line for mortals (02:24:33)
Six orders of magnitude of progress... what does that even look like? (02:30:34)
Neglected and important technical work people should be doing (02:40:32)
What's the most promising work in governance? (02:44:32)
Ryan's current research priorities (02:47:48)
Tell us what you thought! https://forms.gle/hCjfcXGeLKxm5pLaA
Video editing: Luke Monsour, Simon Monsour, and Dominic Armstrong
Audio engineering: Ben Cordell, Milo McGuire, and Dominic Armstrong
Music: Ben Cordell
Transcriptions and web: Katy Moore

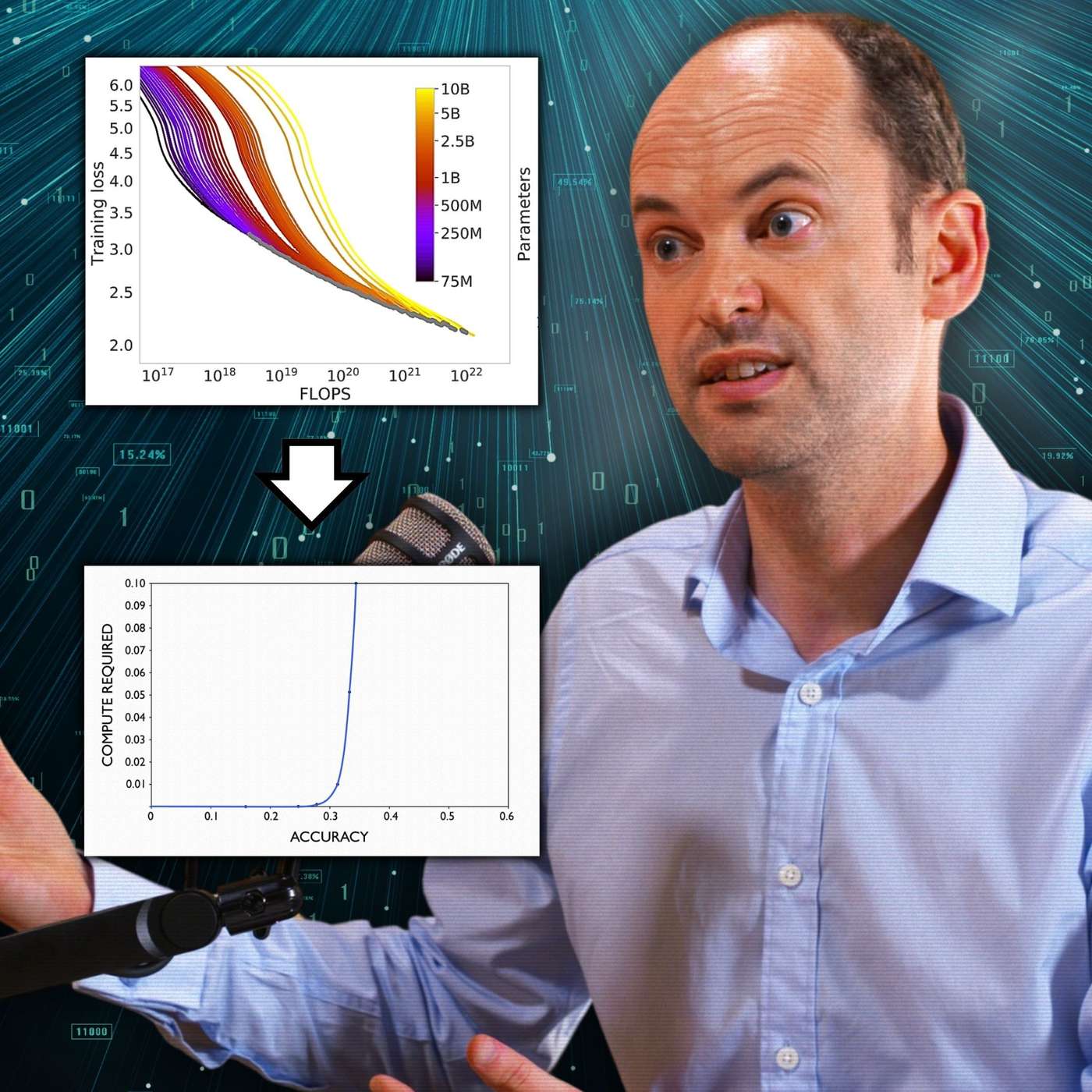

The era of making AI smarter just by making it bigger is ending. But that doesn’t mean progress is slowing down — far from it. AI models continue to get much more powerful, just using very different methods, and those underlying technical changes force a big rethink of what coming years will look like.
Toby Ord — Oxford philosopher and bestselling author of The Precipice — has been tracking these shifts and mapping out the implications both for governments and our lives.
Links to learn more, video, highlights, and full transcript: https://80k.info/to25
As he explains, until recently anyone could access the best AI in the world “for less than the price of a can of Coke.” But unfortunately, that’s over.
What changed? AI companies first made models smarter by throwing a million times as much computing power at them during training, to make them better at predicting the next word. But with high quality data drying up, that approach petered out in 2024.
So they pivoted to something radically different: instead of training smarter models, they’re giving existing models dramatically more time to think — leading to the rise in “reasoning models” that are at the frontier today.
The results are impressive but this extra computing time comes at a cost: OpenAI’s o3 reasoning model achieved stunning results on a famous AI test by writing an Encyclopedia Britannica’s worth of reasoning to solve individual problems at a cost of over $1,000 per question.
This isn’t just technical trivia: if this improvement method sticks, it will change much about how the AI revolution plays out, starting with the fact that we can expect the rich and powerful to get access to the best AI models well before the rest of us.
Toby and host Rob discuss the implications of all that, plus the return of reinforcement learning (and resulting increase in deception), and Toby's commitment to clarifying the misleading graphs coming out of AI companies — to separate the snake oil and fads from the reality of what's likely a "transformative moment in human history."
Recorded on May 23, 2025.
Chapters:
Cold open (00:00:00)
Toby Ord is back — for a 4th time! (00:01:20)
Everything has changed (and changed again) since 2020 (00:01:37)
Is x-risk up or down? (00:07:47)
The new scaling era: compute at inference (00:09:12)
Inference scaling means less concentration (00:31:21)
Will rich people get access to AGI first? Will the rest of us even know? (00:35:11)
The new regime makes 'compute governance' harder (00:41:08)
How 'IDA' might let AI blast past human level — or not (00:50:14)
Reinforcement learning brings back 'reward hacking' agents (01:04:56)
Will we get warning shots? Will they even help? (01:14:41)
The scaling paradox (01:22:09)
Misleading charts from AI companies (01:30:55)
Policy debates should dream much bigger (01:43:04)
Scientific moratoriums have worked before (01:56:04)
Might AI 'go rogue' early on? (02:13:16)
Lamps are regulated much more than AI (02:20:55)
Companies made a strategic error shooting down SB 1047 (02:29:57)
Companies should build in emergency brakes for their AI (02:35:49)
Toby's bottom lines (02:44:32)
Tell us what you thought! https://forms.gle/enUSk8HXiCrqSA9J8
Video editing: Simon Monsour
Audio engineering: Ben Cordell, Milo McGuire, Simon Monsour, and Dominic Armstrong
Music: Ben Cordell
Camera operator: Jeremy Chevillotte
Transcriptions and web: Katy Moore

Visit our channel: https://www.youtube.com/watch?v=lD7J9avsFbw

More croaky neophytes who think they're the first people to set foot on every idea they have... then rush on to a podcast to preen.

@28:00: It's always impressive to hear how proud people are to rediscover things that have been researched, discussed, and known for centuries. Here, the guest stumbles through a case for ends justifying means. What could go wrong? This is like listening to intelligent but ignorant 8th graders... or perhaps 1st-yr grad students, who love to claim that a topic has never been studied before, especially if the old concept is wearing a new name.

@15:30: The guest is greatly over-stating binary processing and signalling in neural networks. This is not at all a good explanation.

Ezra Klein's voice is a mix of nasal congestion, lisp, up-talk, vocal fry, New York, and inflated ego.

Rob's suggestion on price-gouging seems pretty poorly considered. There are plenty of historical examples of harmful price-gouging and I can't think of any that were beneficial, particularly not after a disaster. This approach seems wrong economically and morally. Price-gouging after a disaster is almost always a pure windfall. It's economically infeasible to stockpile for very low-probability events, especially if transporting/delivering the good is difficult. Even if the good can be mass-produced and delivered quickly in response to a demand spike, Rob would be advocating for a moral approach that runs against the grain of human moral intuitions in post-disaster settings. In such contexts, we prefer need-driven distributive justice and, secondarily, equality-based distributive justice. Conversely, Rob is suggesting an equity-based approach wherein the input-output ratio of equity is based on someone's socio-economic status, which is not just irrelevant to their actions in the em

@18:02: Oh really, Rob? Does correlation now imply causation or was journalistic coverage randomly selected and randomly assigned? Good grief.

She seems to be just a self-promoting aggregator. I didn't hear her say anything insightful. Even when pressed multiple times about how her interests pertain to the mission of 80,000 Hours, she just blathered out a few platitudes about the need for people to think about things (or worse, thinking "around" issues).

@1:18:38: Lots of very sloppy thinking and careless wording. Many lazy false equivalences--e.g., equating (a) Democrats' fact-based complaints in 2016 (e.g., about foreign interference, the Electoral College), when Clinton conceded the following day and Democrats reconciled themselves to Trump's presidency, with (b) Republicans spreading bald-faced lies about stolen elections (only the ones they lost, of course) and actively trying to overthrow the election, including through force. If this was her effort to seem apolitical with a ham-handed "both sides do it... or probably will" comment, then she isn't intelligent enough to have public platforms.

Is Rob's voice being played back at 1.5x? Is he hyped up on coke?

I'm sure Dr Ord is well-intentioned, but I find his arguments here exceptionally weak and thin. (Also, the uhs and ums are rather annoying after a while.)

So much vocal fry

Thank you, that was very inspirational!

A thought-provoking, refreshing podcast