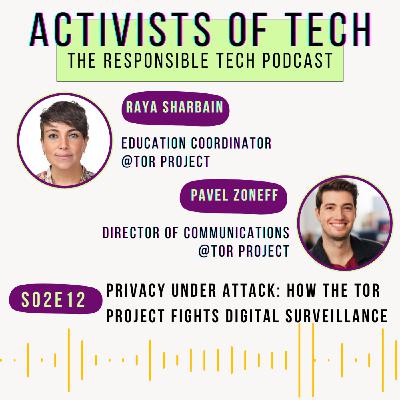

We’re all very used to being surveilled by now, especially through surveillance capitalism, or the commodification of our personal data: our age, location, mental state, shopping habits, and tax bracket are collected through various apps and websites and sold to thousands of third parties. On top of that, governments surveil their citizens, and not only in authoritarian states such as Russia. It also happens in the United States, where activists are watched by authorities during and after lawful protests. Once you’re aware of how pervasive tech-enabled surveillance is, it feels like living in a dystopia. Who needs to read Orwell’s 1984 when you can just look into civil society’s reports on mass surveillance or read the news?

What we need are anti-surveillance alternatives, such as a way to browse the web that, unlike Google, does not track you or any of your personal data, let alone sell it to whoever is willing to pay, and that gets around censorship and government firewalls and empowers users to access the open web. The good news is that it exists, and it’s called Tor. The Tor Browser is a web browser that protects users' privacy and anonymity by hiding their IP addresses and browsing activity: it sends web traffic through a series of routers, called nodes, to anonymize it. The traffic is wrapped in three layers of encryption as it passes through the Tor network, a design known as "onion routing" that dates back to the 90s. The goal was to use the internet with as much privacy as possible, relying on a decentralized network. Today, the Tor Browser has become the world's strongest tool for privacy and freedom online.
I had the pleasure of welcoming not one, but two guests today from the Tor Project. Raya Sharbain is an Education Coordinator with the Tor Project, where she facilitates training for journalists and human rights defenders on Tor and Tails, the anonymous operating system, and also develops and updates educational curricula on the Tor ecosystem, focusing on its use in circumventing network censorship and surveillance. Raya is also a part-time Research Fellow with the Citizen Lab, focusing on targeted surveillance. Pavel Zoneff joined the Tor Project in 2023, with over a decade of experience working for some of the world’s leading tech brands. As Director of Strategic Communications, he supports the organization’s global outreach and advocacy efforts to champion unrestricted access to the open web and encrypted technologies.