Build Wiz AI Show

Author: Build Wiz AI

© Build Wiz AI

Description

Build Wiz AI Show is your go-to podcast for transforming the latest and most interesting papers, articles, and blogs about AI into an easy-to-digest audio format. Using NotebookLM, we break down complex ideas into engaging discussions, making AI knowledge more accessible.

Have a resource you’d love to hear in podcast form? Send us the link, and we might feature it in an upcoming episode! 🚀🎙️

179 Episodes

Join us as we tackle the "last mile" of our AI Agents series, exploring the rigorous operational discipline required to transform fragile prototypes into reliable, production-grade agents. We break down the essential infrastructure of trust, from evaluation gates to automated pipelines, that ensures your system is ready for the real world. This foundation paves the way for our series finale, where we move beyond single-agent operations to look at the future of autonomous collaboration within multi-agent ecosystems.

In this episode of our AI Agents series, we synthesize our previous discussions on evaluation frameworks and observability into a cohesive operational playbook known as the "Agent Quality Flywheel". Join us as we explore how to transform raw telemetry into a continuous feedback loop that drives relentless improvement, ensuring your autonomous agents are not just capable, but truly trustworthy. This episode bridges the critical gap between theory and production, providing the final principles needed to build enterprise-grade reliance in an agentic world.

Moving beyond the temporary "workbench" of individual sessions, episode 3 of our AI Agents series unlocks the power of Memory—the mechanism that allows AI agents to persist knowledge and personalize interactions over time. We will explore how agents utilize an intelligent extraction and consolidation pipeline to curate a long-term "filing cabinet" of user insights, effectively turning them from generic assistants into experts on the specific users they serve.

In this episode of our AI Agents series, we discuss the transformation of foundation models from static prediction engines into "Agentic AI" capable of using tools as their "eyes and hands" to interact with the world. We highlight the complexity of connecting these agents to diverse enterprise systems, setting the stage for Episode 1 to explain the "N x M" integration problem and the Model Context Protocol's role in solving it.

Join us for the premiere of our series on AI Agents, exploring the paradigm shift from passive predictive models to autonomous systems capable of reasoning and action. In this episode, we deconstruct the core anatomy of an agent—its "Brain" (Model), "Hands" (Tools), and "Nervous System" (Orchestration)—to explain the fundamental "Think, Act, Observe" loop that drives their behavior. Discover how this architecture enables developers to move beyond traditional coding to become "directors" of intelligent, goal-oriented applications.

DeepSeek-AI introduces DeepSeek-R1, a reasoning model developed through reinforcement learning (RL) and distillation techniques. The research explores how large language models can develop reasoning skills, even without supervised fine-tuning, highlighting the self-evolution observed in DeepSeek-R1-Zero during RL training. DeepSeek-R1 addresses limitations of DeepSeek-R1-Zero, like readability, by incorporating cold-start data and multi-stage training. Results demonstrate DeepSeek-R1 achieving performance comparable to OpenAI models on reasoning tasks, and distillation proves effective in empowering smaller models with enhanced reasoning capabilities. The study also shares unsuccessful attempts with Process Reward Models (PRM) and Monte Carlo Tree Search (MCTS), providing valuable insights into the challenges of improving reasoning in LLMs. The open-sourcing of the models aims to support further research in this area.

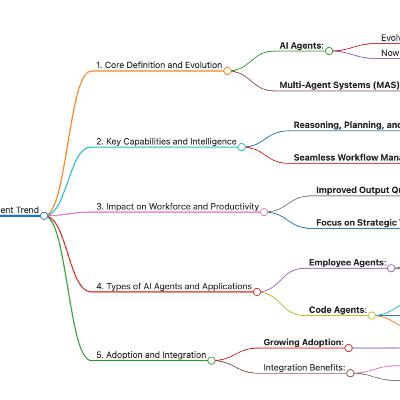

Google's "AI Business Trends 2025" report analyzes key strategic trends expected to reshape businesses. It identifies five major trends including the rise of multimodal AI, the evolution of AI agents, the importance of assistive search, the need for seamless AI-powered customer experiences, and the increasing role of AI in security. The report suggests that companies need to understand how AI has impacted current market dynamics in order to innovate, compete and continue to evolve. Early adopters of AI are more likely to dominate the market and grow faster than traditional competitors. This analysis is based on data insights from studies, surveys of global decision makers, and Google Trends research, using tools like NotebookLM to identify these pivotal shifts.

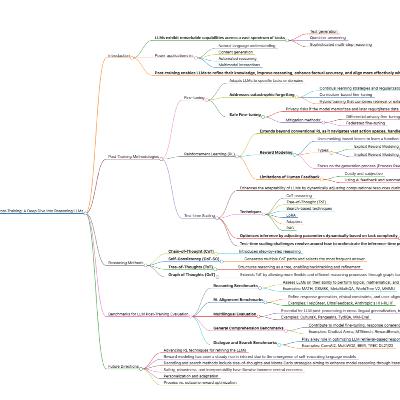

This podcast presents a comprehensive survey of post-training techniques for Large Language Models (LLMs), focusing on methodologies that refine these models beyond their initial pre-training. The key post-training strategies explored include fine-tuning, reinforcement learning (RL), and test-time scaling, which are critical for improving reasoning, accuracy, and alignment with user intentions. It examines various RL techniques such as Proximal Policy Optimization (PPO), Direct Preference Optimization (DPO) and Group Relative Policy Optimization (GRPO) in LLMs. The survey also investigates benchmarks and evaluation methods for assessing LLM performance across different domains, discussing challenges such as catastrophic forgetting and reward hacking. The document concludes by outlining future research directions, emphasizing hybrid approaches that combine multiple optimization strategies for enhanced LLM capabilities and efficient deployment. The aim is to guide the optimization of LLMs for real-world applications by consolidating recent research and addressing remaining challenges.

In this episode, drawing on insights from the sources, METR researcher Joel Becker explores the widening gap between AI’s exponential progress on benchmarks and its actual impact on real-world productivity. We examine a surprising study where expert developers were slowed down by 19% when using AI, challenging the assumption that benchmark success translates directly into immediate economic gains. The discussion investigates the "puzzle" of why low AI reliability and the complexity of high-context environments continue to hinder performance in the field compared to synthetic tests. Source: https://www.youtube.com/watch?v=RhfqQKe22ZA&list=TLGGeQVQrQpc6NgyODEyMjAyNQ

This episode explores a unified framework for adapting agentic AI systems, detailing how foundation models are specialized to plan, reason, and master external tools for complex tasks. We analyze the four adaptation paradigms (A1, A2, T1, and T2), revealing how modular tool optimization can be up to 70 times more data-efficient than traditional monolithic agent retraining. Discover the shift toward symbiotic ecosystems, where stable reasoning cores work in harmony with agile, evolving tools to drive breakthroughs in software development and scientific research. Source: https://www.arxiv.org/pdf/2512.16301

In this episode, we explore Agent-R1, a modular framework designed to transform Large Language Models from static text generators into autonomous agents capable of active environmental interaction. We dive into how extending the Markov Decision Process (MDP) framework enables these agents to master multi-turn dialogues, utilize external tools, and benefit from dense process rewards. Finally, we discuss how end-to-end reinforcement learning is setting new performance benchmarks in complex tasks like multi-hop reasoning by refining how models learn from their own actions.

In this episode, Andrew Ng and Laurence Moroney discuss why now is the "golden age" for building a career in AI, highlighting how the complexity of tasks AI can perform is doubling as rapidly as every seven months. The conversation explores the shifting landscape of "vibe coding" and the evolving ratio between engineers and product managers, emphasizing that the new bottleneck in software development is deciding what to build rather than the coding itself. Finally, the experts offer practical advice on navigating a competitive job market by focusing on business value, managing technical debt, and preparing for the rise of "small AI" and self-hosted models. Source: https://www.youtube.com/watch?v=AuZoDsNmG_s

This episode explores the critical July 2025 maturation point, where the initial generative AI hype gives way to the practical challenges of securing ROI and closing the "Data Readiness Gap". We break down the rise of Agentic AI and the strategic architectures essential for moving beyond disjointed pilot programs to translate initiatives into measurable financial value. Finally, we examine how leaders can navigate a divided global regulatory landscape to empower their workforce and unlock true "superagency".

This episode explores the rapid shift in enterprise AI adoption, highlighting how production-level agent deployment has surged as organizations move past initial experimental phases. Drawing on a study of thousands of use cases, we analyze the ROI of AI, distinguishing between common time savings and the high-impact, transformational benefits found in automation and risk reduction. We also examine why systematic, cross-organizational strategies are increasingly helping leaders realize significant returns on their investments within a one-to-three-year window.

The new Gemini Interactions API unifies modern LLM requirements, moving past older stateless APIs to fully embrace agents and complex workflows. This major update simplifies multimodality and structured outputs, while offering optional server-side history to persist conversation memory. We explore using the API to call the Gemini research agent and leverage background execution for long-running inference loops.

Explore the emerging "year of agentic AI" through the first large-scale field study of Perplexity’s Comet browser, which analyzes millions of user interactions to understand how autonomous agents are reshaping daily workflows. We discuss key findings revealing that early adoption is driven by educated, knowledge-intensive professionals who primarily use agents for productivity and learning tasks. Tune in to learn how these "sticky" habits are evolving from simple queries to complex, cognitive actions that bridge the gap between information and execution.

Monetizing AI is uniquely challenging, driven by rapid cost changes and intense customer demand for demonstrable ROI. We discuss essential frameworks, including selecting the right value metric—from resource-based tokens to outcome-based pricing—to ensure alignment between price and perceived value. Learn why continual experimentation is vital and how data-informed tools like Orb Simulations can help AI builders avoid "pricing on vibes".

The era of production AI agents is here. Drawing from the "2026 State of AI Agents Report," we examine how enterprises are shifting from basic task automation to complex, cross-functional systems that already deliver measurable ROI for 80% of organizations. Tune in to explore the top high-impact use cases, including coding, data analysis, and internal process automation, and the critical challenges leaders must overcome to scale these intelligent systems strategically in 2026.

Join us as Anthropic experts reveal why they stopped building agents and started building specialized "skills" instead, arguing that while general agents are brilliant, they often lack the domain expertise needed for consistent, real-world work. Skills are simply organized folders packaging procedural knowledge and scripts as tools to provide that missing expertise and important context. This scalable design is fostering a quickly growing ecosystem, enabling general agents like Claude to acquire new capabilities and organizational best practices instantly.

Dr. Werner Vogels delivered his final AWS re:Invent keynote in 2025, announcing that he is stepping away from the keynote stage to make room for new speakers. He assured developers that those who continue to evolve will not become obsolete, even as AI transforms their tasks. Vogels introduced a five-part framework for the modern "Renaissance Developer," highlighting curiosity, systems thinking, and polymathic knowledge. He stressed the need for precise communication given the ambiguity of natural-language interaction with generative AI. Guest Clare Liguori supported this by detailing spec-driven development (SDD) in the Kiro IDE as a way to manage natural language prompts. Vogels concluded by emphasizing that developers retain sole responsibility for software quality, which requires stronger verification mechanisms such as rigorous code reviews for AI-generated work.