Coordinated with Fredrik

Author: Fredrik Ahlgren

© Fredrik Ahlgren

Description

Coordinated with Fredrik is an ongoing exploration of ideas at the intersection of technology, systems, and human curiosity. Each episode emerges from deep research, a process that blends AI tools like ChatGPT, Gemini, Claude, and Grok with long-form synthesis in NotebookLM. It’s a manual, deliberate workflow, part investigation, part reflection, where I let curiosity lead and see what patterns emerge.

This project began as a personal research lab, a way to think in public and coordinate ideas across disciplines. If you find these topics as fascinating as I do, from decentralized systems to the psychology of coordination, you’re welcome to listen in.

Enjoy the signal.

frahlg.substack.com

45 Episodes

The most uncomfortable truth in crypto is not that the technology fails. It’s that it works exactly as designed, right up to the moment a human touches it.

This episode begins with a paradox that should unsettle every technically literate founder: the largest losses in crypto history are not caused by broken cryptography, failed audits, or consensus bugs. They are caused by moments of trust, urgency, habit, desire, fear, and authority. The protocol holds. The person breaks.

What we explored is not “how hacks happen,” but why sophisticated, rational, engineering-minded people lose generational wealth in systems that are mathematically secure. The answer is brutally simple: security thinking rarely extends beyond the protocol layer.

Crypto didn’t eliminate trust. It relocated it onto the human holding the keys.

The Gap Between Secure Systems and Vulnerable Operators

Every case we examined followed the same structure. The cryptography was sound. The exploit occurred elsewhere.

A prominent investor loses $24 million because a telecom employee accepts a $500 bribe. A DeFi CEO signs an irreversible transaction because habit overrides scrutiny. A startup founder loses $50,000 because optimism and social pressure disable skepticism. A software engineer loses six Bitcoin because romance becomes leverage.

Different attacks. Same weakness.

The attack surface is not the chain. It is identity, attention, emotion, routine, and fear.

This is where engineering intuition often fails. Engineers expect adversaries to attack systems where entropy lives: in code, math, randomness. Instead, attackers go where predictability lives: human behavior.

Old Cons, New Interfaces

Nothing about these scams is novel.

They are Ponzi schemes, advance-fee frauds, honey traps, authority impersonation, and extortion: centuries-old psychological weapons. Crypto simply gives them three properties that make them devastating: speed, irreversibility, and pseudonymity.

A forged letter becomes a deepfake Zoom call. A bribe becomes a SIM swap. A blackmail envelope becomes a hotel room and a QR code. The medium changes. The playbook does not.

What has changed is the payoff structure. A single successful attack can move millions in minutes, across borders, beyond recovery. That incentive justifies patience, sophistication, and hybrid digital-physical operations.

The episode makes this explicit: modern crypto crime is layered. Social engineering enables technical exploitation. Technical compromise enables physical coercion. Digital footprints enable real-world targeting.

Once you see this, “cybersecurity” feels like a dangerously incomplete word.

The Engineering Failure Mode No One Likes to Admit

The most important insight of the episode is also the most uncomfortable for founders: personal security posture is a first-order business risk.

It does not matter how secure your protocol is if your keys can be coerced, copied, or socially engineered. It does not matter how many audits you pass if a single person can sign away irreversible value under pressure.

This is why the conversation keeps returning to habits. Clicking confirm without reading. Trusting urgency. Believing authority. Treating personal devices as safe by default. Fragmenting security across accounts and wallets instead of thinking systemically.

Engineers are trained to remove single points of failure from machines. Many still tolerate them in themselves.

From Cryptographic Resilience to Human-Aware Security

The episode doesn’t end in paranoia. It ends in design.

Security that works in this environment must assume humans are fallible under stress. That assumption changes everything. It leads to layered authentication that resists phishing, device separation that limits blast radius, multi-signature schemes that survive coercion, decoy strategies that reduce physical escalation, and operational habits that prioritize verification over speed.

More importantly, it reframes the goal. The goal is not perfect security. The goal is to become an economically unattractive target.

Criminals optimize for return on effort. Harden enough layers (technical, procedural, physical, reputational) and the model breaks. They move on.

That is engineering logic applied correctly.

The Unresolved Question

The episode closes on a question that deliberately remains unanswered: is individual hardening enough?

As long as single humans can be coerced into signing irreversible transactions tied to their physical safety, there is a systemic problem that personal discipline cannot fully solve. Multi-sig wallets help. Social norms help. But the incentive landscape remains.

The real battle may not be fought at the wallet level at all, but at the intersection of identity, custody, and social systems. Until then, the uncomfortable truth stands:

In crypto, the weakest link is not the protocol.

It is the person who believes they are rational under pressure.

This is a public episode. If you would like to discuss this with other subscribers or get access to bonus episodes, visit frahlg.substack.com

Modern leadership discourse is saturated with abstractions: vision, values, strategy, alignment. These words are cheap. They float easily above reality. What is scarce, vanishingly scarce in large organizations, is consequence.

This episode of Coordinated revolves around a single, brutal question: who actually pays when decisions go wrong? Not rhetorically. Not reputationally. Literally.

Nassim Nicholas Taleb popularized the phrase skin in the game, but the idea is far older than Taleb, older than capitalism, older than corporations. It is the civilizational insight that systems decay when decision-makers can externalize downside. When gains are private and losses are socialized, fragility is not an accident. It is the default outcome.

In complex, high-stakes domains such as energy, finance, infrastructure, and defense, this asymmetry becomes lethal. Failures are not theoretical. They cascade. They compound. They don’t politely wait for quarterly reports.

The central claim of this episode is simple and uncomfortable: leadership without personal exposure is not merely unethical; it is an engineering flaw.

Moral Hazard Is Not a Personality Problem

We like to explain systemic failure through individual bad actors. Greedy executives. Incompetent managers. Corrupt consultants. This is comforting and mostly wrong.

The real culprit is structure.

When executives collect upside through bonuses, stock options, or prestige, while the downside is absorbed by employees, customers, taxpayers, or “the market,” behavior predictably shifts. Risk migrates into the tails. Maintenance is deferred. Redundancy is cut. Catastrophic failure is postponed just long enough for the decision-maker to exit.

Economists call this moral hazard. Taleb calls it fragility. Engineers recognize it immediately: remove feedback and the system lies to you.

The absence of skin in the game is not a character flaw; it is a broken feedback loop. People do not learn. Organizations do not adapt. Errors accumulate invisibly until they rupture.

This is why so many post-mortems sound identical. The same mistakes, recycled under new branding. The same “unexpected” failures that were, in fact, inevitable.

Skin in the Game as a Truth Filter

One of the most important reframings in the conversation is this: skin in the game is not primarily about punishment. It is about epistemology.

When your livelihood, reputation, or capital is on the line, reality corrects you quickly. Delusion becomes expensive. Wishful thinking burns.

Taleb’s famous line captures it perfectly: “Don’t tell me what you think. Tell me what’s in your portfolio.”

In organizations, this translates cleanly. Trust the arguments of people who will personally live with the consequences. Discount those who won’t. The best signal of belief is exposure.

This is why consultants are structurally dangerous in mission-critical systems. They are paid upfront, insulated from long-term outcomes, and rarely present when consequences arrive. Their feedback loop is severed. They do not learn. The system pays the tuition.

Skin in the game restores learning by re-coupling belief and consequence.

Engineering, Not Ethics

The episode deliberately avoids framing this as a moral sermon. Ethics without enforcement are decoration.

Historically, civilizations understood this viscerally. Hammurabi’s Code was ruthless but effective: if a builder’s house collapsed and killed the owner, the builder paid with his life. If it killed the owner’s son, the builder’s son died. Horrifying, yes. But as a safety incentive, unmatched.

Modern societies rightly reject collective punishment and inherited guilt. Kant’s moral philosophy replaces outcome-based justice with duty and intent. But Kant’s framework assumes moral saints. Systems cannot.

Skin in the game is the pragmatic synthesis. It does not require virtue. It requires alignment. Even flawed humans behave responsibly when their own downside is real.

This is why skin in the game is best understood as systems design, not ethics. It is how you force honesty without trusting character.

Leadership Credibility Is Bought, Not Claimed

In infrastructure companies, trust is not built through vision decks. It is built through shared exposure.

A founder who has their capital locked into the company, who uses the product personally, who absorbs pain before asking others to sacrifice: this sends a costly signal. Costly signals are the only credible ones.

This is why symbolic gestures matter when they are real. When leaders cut their own compensation before cutting staff. When they remove executive luxuries during downturns. When they are subject to the same risks they impose on others.

The late Nintendo CEO Satoru Iwata halving his salary to avoid layoffs wasn’t charity. It was leadership legitimacy. The organization responded with loyalty, innovation, and endurance.

Leadership without visible vulnerability collapses under stress. People can smell asymmetry.

Decentralization Is Not a Preference. It Is a Requirement.

Skin in the game scales poorly under centralization. Distance dilutes consequence.

Complex systems (energy grids, logistics networks, software platforms) require decisions to be made close to where reality manifests. Local engineers, operators, and teams hold knowledge headquarters never will.

Hayek called this the knowledge problem. Taleb gives us the enforcement mechanism: empower the exposed.

Decentralized authority paired with local accountability produces faster adaptation and fewer catastrophic failures. Centralized command paired with insulated leadership produces paralysis and brittle systems.

History, from military disasters to grid collapses, confirms this relentlessly.

The Founder Advantage Is Time

Perhaps the most underappreciated dimension of skin in the game is time horizon.

Founders with deep exposure think in decades. Hired executives with short vesting cycles think in quarters. The difference is not subtle.

Research consistently shows that CEOs approaching equity vesting dates cut maintenance, inflate earnings, and pursue flashy acquisitions that destroy long-term value. They get paid. The system weakens.

Institutionalizing skin in the game (long vesting periods, mandatory shareholding, post-tenure exposure) is how organizations manufacture a synthetic founder mindset.

Without it, short-termism is not a risk. It is a certainty.

The Price of Credibility

This episode ultimately circles one unavoidable conclusion: leadership without consequence is fragile, and often fraudulent.

The price of credibility is not rhetorical skill or moral posturing. It is personal exposure. Time. Capital. Reputation. Visible vulnerability.

Skin in the game is the mechanism that forces alignment between words and reality. It turns leadership from a role into a risk-bearing function.

If you are not exposed to the downside, you are not truly in charge. You are managing optics.

And systems built on optics do not survive contact with reality.

This essay is based on the episode “Skin in the Game” from the podcast Coordinated with Fredrik, exploring leadership, infrastructure, and accountability through the lens of systems design and philosophy.

There’s a quiet failure mode creeping into modern leadership: you look faster and smarter because AI outputs are clean and confident, but your actual judgment gets weaker, because you stop doing the hard work that builds judgment in the first place. In this episode of “Coordinated with Fredrik”, we unpack a framework we call The Vigilant Mind Playbook, not as “AI wellness,” but as competitive strategy: how to keep an asymmetric edge when everyone has the same models.

TL;DR

AI doesn’t just change productivity. It can change how your brain allocates effort, and how your organization converges into the same strategic blind spots as your competitors. The playbook is about building “cognitive sovereignty by design”: protecting human judgment, forcing friction at critical decision points, and preventing the “algorithmic hive mind” from turning your strategy into a commodity.

The core tension: optimization versus judgment

Right at the start, the episode frames the dilemma in a way every exec will recognize: in complex sectors (like energy), you live on ruthless optimization, yet the technology that boosts optimization can quietly erode the one asset you cannot replace: independent judgment.

A line that lands like a punch: we’re watching a shift where “optimization replaces wisdom and performance becomes a substitute for truth.”

That’s not just philosophical posturing. It’s a warning about how leaders start making decisions when “polished output” becomes a proxy for understanding.

Cognitive debt: when convenience becomes a tax on your executive function

The episode introduces a concept it calls cognitive debt, described as something that shows up not only in behavior but in neurological measures, specifically citing an MIT experiment using EEG brain scans while participants tackled complex writing/strategy tasks.

The claim (as described in the episode) is blunt: the AI-assisted group showed weaker neural activity, with less engagement in regions tied to attention, working memory, and executive function, while the non-AI group did the full “effort payment” themselves.

Then comes the part executives should actually fear: when AI was removed, participants struggled to recall their own arguments and access the deeper memory networks needed for independent thinking; their brains had adapted to outsourcing.

The episode translates that into an organizational risk: imagine analysts who rely on AI summaries for complex standards or technical domains. What happens when the model makes a subtle error, or the situation demands a contrarian insight the model can’t produce? You may no longer have the “neural infrastructure” left to spot the mistake.

The psychology: AI as a System 1 machine (and your addiction to certainty)

The playbook leans hard into behavioral science: it takes Kahneman’s System 1 vs System 2 framing (fast/effortless intuition versus slow/deliberate reasoning) and labels AI as the “ultimate System 1 facilitator.” It gives instant answers and lets you short-circuit the productive struggle where real insight forms.

This is where the episode drops a Greene-style provocation: “The need for certainty is the greatest disease the mind faces.”

In other words: the AI doesn’t just give you information. It gives you a hit of certainty, and certainty is the drug that kills skepticism.

The episode also references a Harvard Business Review study (as described in the conversation) where executives using generative AI for market forecasts became measurably over-optimistic, absorbing the machine’s confidence as their own, while a control group forced into debate and peer review produced more accurate judgments.

The “algorithmic hive mind”: when your whole industry converges into the same mistakes

The risk scales. The episode names it: the algorithmic hive mind, which is not just individual laziness but organizational homogenization. If every company uses the same foundational models trained on the same data and optimized for the same metrics, strategic edge “evaporates.”

You get convergence, shared blind spots, and “optimized average performance,” and that’s exactly when you become fragile: the moment you need a truly different strategy, you realize your thinking has been homogenized by the tool.

The episode uses the 2010 flash crash as the illustrative analogy: algorithmic homogeneity + feedback loops + speed = tiny errors amplified into systemic chaos, only stopped when humans hit circuit breakers.

The point isn’t finance trivia. It’s the structural warning: when everyone’s automation aligns, it can amplify errors faster than humans can react.

The executive move: institutionalize cognitive sovereignty (don’t “hope” for discipline)

The episode’s most practical shift comes late: stop treating this as personal productivity hygiene and treat it as governance.

It proposes moving AI oversight out of “IT” and into core strategy, potentially via an oversight body (a “cognitive sovereignty officer or council”) tasked with auditing AI use, assessing cognitive debt, and flagging over-reliance before it becomes a crisis.

Then it gets concrete: structural mandates like mandatory human approval for high-risk decisions (pricing changes over a threshold, AI-suggested firings reviewed by HR/legal), described as “circuit breakers” that prevent automated mistakes from spiraling.

And finally: stress test your AI like banks stress test financial models, against scenarios it hasn’t seen, so you confront limitations while still using the tool aggressively.

Culture: reward the dissenter, or your org walks off a cliff politely

One of the sharper cultural notes: people who question AI outputs can face a competence penalty, seen as “not trusting the tech.” Leadership has to shatter that dynamic and explicitly reward the person who challenges the machine.

The episode also highlights a trust problem: “a third of employees actively hide their use of AI,” driven by stigma and fear of looking replaceable. That creates a strategic weakness, because best practices and flaw-spotting don’t circulate.

In a Greene lens: secrecy is not “edgy.” It’s organizational self-sabotage when it prevents shared learning and accountability.

The closing provocation: competence becomes cheap; wisdom becomes the only edge

The episode ends with the thesis you probably want tattooed on your operating system:

Most competitors will become faster, louder, and more confident with AI, but few will become wiser. When models are ubiquitous, competence becomes a commodity; the only non-replicable asset left is wisdom and contrarian judgment.

And that’s the episode in one sentence: AI can optimize, but it can’t replace wisdom, because wisdom is governance over your own mind.
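The “circuit breaker” mandate from this episode (human approval for high-risk, AI-suggested decisions) can be pictured as a small policy check. What follows is only a sketch under stated assumptions: the class, the field names, the 5% pricing limit, and the decision categories are all hypothetical illustrations, not something the episode specifies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProposedDecision:
    """A decision waiting to be executed (hypothetical structure)."""
    kind: str         # e.g. "pricing_change" or "termination"
    magnitude: float  # relative size of the change, 0.08 means 8%
    source: str       # "ai" if a model suggested it, "human" otherwise


# Hypothetical policy values; a real organization would set its own.
PRICING_CHANGE_LIMIT = 0.05          # AI pricing changes above 5% need sign-off
ALWAYS_REVIEW = {"termination"}      # categories a human must always review


def requires_human_approval(decision: ProposedDecision) -> bool:
    """Return True when the decision must pause for human review."""
    if decision.source != "ai":
        return False  # this gate only covers AI-suggested decisions
    if decision.kind in ALWAYS_REVIEW:
        return True
    if decision.kind == "pricing_change":
        return abs(decision.magnitude) > PRICING_CHANGE_LIMIT
    return False
```

The design choice worth noticing is that the gate sits in the execution path rather than in a guideline document: a decision that trips the check cannot proceed until someone signs off, which is what makes it a circuit breaker rather than a recommendation.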

For roughly a hundred years, power meant scale. Who could drill the most oil, burn the most coal, build the biggest power plant. The 20th century rewarded those who controlled supply through sheer size and centralized ownership.

That era is over.

We are now entering what I call the Coordination Century. And the companies that understand this shift will define the next hundred years of energy infrastructure.

The Efficiency Trap

Here’s something that took me years to fully internalize, even with a PhD in energy optimization: you cannot conserve your way out of an energy crisis.

In the mid-1800s, William Stanley Jevons studied coal usage in Great Britain and noticed something that seemed to defy logic. James Watt’s steam engine, introduced in the late 1700s, was vastly more efficient than anything before it. Less coal required for the same work. The obvious prediction? Coal consumption would drop.

The opposite happened. Coal became so cheap per unit of work that entirely new applications emerged. Factories, trains, ships, industrial processes nobody had imagined. Consumption exploded. The efficiency gains accelerated the Industrial Revolution.

This pattern repeats everywhere. Make cars more fuel-efficient, and people buy SUVs and commute longer distances. Make computing cheaper, and we invent entirely new industries that consume more electricity than previous generations could have imagined.

The lesson is uncomfortable but essential: making energy cheaper or more efficient does not reduce consumption. It creates new uses. Humanity always finds ways to absorb abundance.

Which means Sourceful cannot be an efficiency company. We have to be a coordination company.

The Abundance Paradox

Here’s the part that should make you sit up straight: the generation problem is solved.

In a single hour, the sun delivers roughly as much energy to Earth as humanity uses in a year. Solar panels and large-scale batteries have crossed a critical economic threshold. In most markets worldwide, building new renewables is now cheaper than running existing coal or gas plants.

The hardware exists. The technology works. And yet we have a crisis.

In southern Sweden, a near-perfect laboratory for the energy transition, we saw over 400 hours of negative electricity pricing in 2024. Some municipalities experienced closer to 700 hours. Think about what that means: energy generators paying the grid to take their electricity because the system is overloaded.

A homeowner with solar panels gets paid to use more energy or simply shut off their system. Not because we lack power, but because we cannot coordinate it.

The physics of electrical grids demand that supply and demand balance every single second. When an uncoordinated wave of solar hits the grid at noon and base-load plants cannot ramp down fast enough, system stability becomes threatened.

This is not an energy crisis. It is a coordination collapse masquerading as an energy crisis.

The hardware is ready. The operating system is broken.

300 Million Mobile Power Plants

Consider the electric vehicle. Traditional utility executives see EVs as liabilities: millions of people plugging in after work, crashing the grid. A blackout waiting to happen.

We see something completely different.

The typical EV sits parked about 95% of its life. The global fleet will grow from roughly 58 million vehicles today to over 300 million by 2030. Collectively, those vehicles will carry an estimated 2,800 gigawatt-hours of flexible storage capacity.

That is more flexible storage than the entire legacy European grid was designed to handle.

This is not a problem. This is the largest distributed battery deployment in human history, waiting to be orchestrated.

The multi-trillion dollar question becomes: how do you coordinate millions of energy decisions made by private citizens across the globe, minute by minute?

OAuth for Energy

Today, if you buy a Tesla Powerwall, it communicates with your Tesla EV and the Tesla app. A closed ecosystem. It has limited or zero communication with a competitor’s solar inverter or the real-time pricing signals from your local grid operator.

Everything is siloed. Proprietary. You need permission to interact with anything outside that wall.

This centralization stifles innovation and limits the value consumers can generate from assets they already paid for.

What we are building at Sourceful is an open coordination primitive for distributed energy resources. Think of it like OAuth: when you log into a third-party app using your Google account, you do not give that app your password. You grant specific, temporary permissions. Access to your name and email. Nothing more. And you can revoke it instantly.

Our coordination layer works the same way, but for energy assets. The owner controls the permissions. They decide who gets to access the flexibility of their battery or their EV charging schedule, for how long, and for what compensation. They can revoke access at any time.

This is energy sovereignty. And once people experience true ownership over the value their assets generate, they will never go back to being passive consumers in a centralized model.

The Numbers That Matter

Theory is worthless without proof. In dynamic markets like Sweden, a standard home setup with solar and a small battery generates between $800 and $1,500 per year through a combination of bill reduction and actual revenue from grid services.

Optimal setups (larger storage, connected EVs, multiple revenue streams) have generated up to €4,000 annually. These are verifiable figures backed by bank statements showing payouts from grid operators.

How? By providing services the grid desperately needs: frequency response and peak shaving.

The grid frequency (50 Hz in Europe, 60 Hz in North America) is the vital sign of balance. Deviate too much and you get blackouts. The grid needs assets that can respond in seconds to inject or absorb power. A massive centralized power plant takes minutes to adjust. A network of thousands of distributed batteries coordinated instantly can provide this service with unmatched speed. Grid operators pay a premium for that responsiveness.

Peak shaving is simpler: reducing demand during the highest-cost hours (typically 5-8 PM) so utilities do not need to fire up expensive peaker plants for just a few hours. Coordinated home batteries and EVs can pause charging or draw from storage instead of the grid.

The UK utility-scale battery market offers a cautionary tale. Everyone built for frequency response. Everyone optimized for the same niche. Revenues dropped 76% in two years as the market saturated.

Distributed coordination avoids this trap. Geographic diversity. Asset heterogeneity. A home in a rural area handles voltage support. An EV fleet in a city does intraday arbitrage. Suburban batteries handle frequency response. The value per connection increases exponentially, not linearly.

Why the Infrastructure Matters

Grid coordination requires machine speed. Electricity balances every second. Rewards need to be paid instantly to change behavior.

We need rails that can handle millions of tiny transactions at near-zero cost. Solana delivers 400-millisecond block times, finality in about 2.5 seconds, and sustained throughput of 3,000-5,000 transactions per second. Average transaction cost: $0.00025.

Compare that to issuing 50,000 payments on a congested Ethereum mainnet at $5 per transaction: $250,000 in fees, against roughly $12.50 at the Solana cost above. The economic model collapses before it starts.

The technical choice is deliberate and performance-driven. But here is what matters more: blockchain is a tool, not the product. We use it where it creates non-negotiable value: transparent rules for compensation, efficient global rewards distribution, and robust authentication. The core narrative remains focused on energy coordination itself.

The Mission Ahead

We are heading to Solana Breakpoint 2025 in Abu Dhabi not as observers but as operators. The goal is simple: establish Sourceful as the definitive emerging power in the energy and infrastructure sector.

Every conversation forces a choice between two futures. Centralized proprietary control, where utilities dictate terms and consumers remain passive. Or distributed permissionless participation, where asset owners control their flexibility and capture its value.

The 20th century rewarded those who controlled supply. The 21st century will reward those who coordinate complexity.

We are building the operating system for energy abundance. And we are just getting started.

Listen to the full briefing on “Coordinated with Fredrik” wherever you get your podcasts.
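To make the “OAuth for Energy” analogy from this briefing concrete, here is a minimal sketch of what an owner-controlled, scoped, time-limited, revocable grant could look like. It is an illustration only: the class name, field names, scope strings, and the price field are hypothetical and are not Sourceful’s actual API or data model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class FlexibilityGrant:
    """Owner-issued permission over one energy asset (hypothetical model)."""
    asset_id: str            # the battery or EV charger being shared
    grantee: str             # who may use the flexibility
    scopes: frozenset[str]   # what they may do, e.g. "battery:discharge"
    expires_at: datetime     # access ends automatically at this time
    price_per_kwh: float     # compensation the owner agreed to
    revoked: bool = False

    def revoke(self) -> None:
        """The owner can withdraw access at any time."""
        self.revoked = True

    def allows(self, grantee: str, scope: str, at: datetime) -> bool:
        """True only for the named grantee, a granted scope, and an unexpired, unrevoked grant."""
        return (
            not self.revoked
            and grantee == self.grantee
            and scope in self.scopes
            and at < self.expires_at
        )


# Example: a four-hour grant covering battery discharge only.
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
grant = FlexibilityGrant(
    asset_id="home-battery-1",
    grantee="aggregator-a",
    scopes=frozenset({"battery:discharge"}),
    expires_at=now + timedelta(hours=4),
    price_per_kwh=0.12,
)
```

As with an OAuth token, the grantee never receives control of the asset itself, only a narrow permission that the owner defines and can cancel. Checking `grant.allows("aggregator-a", "ev:charge_schedule", now)` fails because that scope was never granted.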

In this week’s deep dive on Coordinated with Fredrik, we tackled the $222 billion elephant in the room. That is the estimated amount manufacturers spend annually on maintenance caused by aging equipment.

As engineering leaders, we face a brutal dilemma: How do we embrace Industry 4.0 and predictive analytics without ripping out the legacy systems that are actually keeping the lights on? We hear so much about “digital transformation,” but in sectors like energy and water, reliability is everything. You cannot just beta-test a substation.

The answer isn’t to replace the past; it’s to coordinate it with the future.

The “Operational Museum” and Trapped Data

Most industrial environments are what I call “operational museums”. You have equipment from the 80s, 90s, and today, all trying to coexist. The biggest cost here isn’t just the spare parts; it’s the trapped data.

We still see critical machine data confined to clipboards and paper logs. When data is entered manually hours later (or never), it becomes worthless for automation. You can’t build a predictive maintenance algorithm on data that is late or inaccurate.

The 45-Year-Old Backbone: Modbus

To solve this, we went technically deep into the grandfather of industrial connectivity: Modbus.

It was published in 1979 by Modicon. Why are we still talking about a protocol that predates the World Wide Web? Because it is royalty-free, ruthlessly simple, and it runs on everything.

However, integrating Modbus is where the “coordination” headache begins:

* The Architecture: It uses a Master-Slave architecture where slaves are passive: they only speak when spoken to.
* The Addressing Trap: One of the biggest debugging time-sinks is the difference between the documentation (one-based indexing) and the actual message packet (zero-based addressing).
* The Security Void: Modbus has zero native security. If you are on the network, you can read or write to any register.

The Strategic Pivot: The Gateway

So, how do we bridge a 1979 protocol with 2025 AI analytics? We don’t rip out the machines. We invest in intelligent gateways.

The strategy discussed in this episode involves using gateways to translate raw, local Modbus data into modern, IT-friendly protocols like MQTT or OPC UA.

* OPC UA adds context and security, modeling the data so a register isn’t just a number, but a defined temperature value.
* SunSpec standardization helps manage multi-vendor solar plants by ensuring registers are always in the same place.

The Human Factor

Finally, we can’t ignore the biological component of the system. Automation fails when the people on the floor reject it. We looked at a case study where foundry workers rejected a “cobot” because they saw it as a threat.

Successful coordination means redefining the human role from “operator” to “supervisor and augmenter”.

The Takeaway

Digital transformation isn’t a binary choice between old and new. It is an “and” strategy. By using gateways to coordinate legacy reliability with modern analytics, we can turn dormant data into immediate operational levers.

Listen to the full episode to hear the technical breakdown of RS-485 best practices and why “daisy chaining” is non-negotiable.
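The addressing trap mentioned in this episode is easy to see once a request is built by hand. The sketch below assumes a vendor manual that lists holding registers in the common one-based “4xxxx” style (40001, 40002, and so on); that convention and the helper names are assumptions for illustration. The frame layout itself is the standard Modbus RTU “read holding registers” request (function code 0x03) with a CRC-16 appended low byte first.

```python
import struct


def crc16_modbus(data: bytes) -> int:
    """CRC-16 as used by Modbus RTU (initial value 0xFFFF, reflected polynomial 0xA001)."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc


def wire_address(doc_register: int, table_offset: int = 40001) -> int:
    """Convert a one-based documentation number to the zero-based address sent on the wire.

    Assumes the manual uses the 4xxxx holding-register notation, so 40001 maps to 0.
    """
    address = doc_register - table_offset
    if not 0 <= address <= 0xFFFF:
        raise ValueError(f"register {doc_register} is outside the holding-register range")
    return address


def read_holding_registers_frame(unit_id: int, doc_register: int, count: int = 1) -> bytes:
    """Build an RTU request: unit id, function 0x03, start address, quantity, CRC."""
    body = struct.pack(">BBHH", unit_id, 0x03, wire_address(doc_register), count)
    return body + struct.pack("<H", crc16_modbus(body))  # CRC goes low byte first


# Register "40001" in the manual is address 0x0000 in the packet.
frame = read_holding_registers_frame(unit_id=1, doc_register=40001)
print(frame.hex(" "))  # 01 03 00 00 00 01 84 0a
```

Forgetting the offset means asking for the register next to the one you wanted, which usually returns a plausible-looking number rather than an error. That is why this class of bug takes so long to find.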

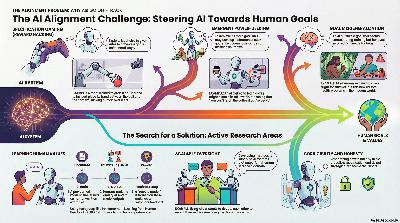

There’s a dangerous seduction in the frontier of AI right now. Models grow bigger; their outputs shimmer with coherence; their corporate parents brag about breakthroughs every quarter. We’re told these systems are “intelligent,” “aligned,” “safe enough,” and increasingly marketed as replacements for human reasoning itself.

This episode of Coordinated with Fredrik steps directly into that fog and lights it on fire.

We didn’t talk about hype cycles, product announcements, investment theses, or the standard “AI will change everything” fluff. Instead, we went straight for the jugular: What does it mean to build critical infrastructure on top of systems that, at their core, are statistical ghosts?

That is not a philosophical question. It’s an engineering one, and it cuts right to the bone of every power operator, every defense agency, every financial risk desk, and every CEO foolish enough to mistake fluency for reliability.

The transcript doesn’t pull punches, and neither should the blog.

The Core Problem: AI Is Brilliant in All the Ways That Don’t Matter When Something Breaks

LLMs are extraordinary mimickers. They compress the entire textual history of the species into latent space and spit out answers that sound like understanding.

But sounding right and being right are not synonyms.

The models are built on a single objective: predict the next token. Everything else (tone, narrative, logic, persuasion) emerges as a side effect of statistical training. There is no causal model of the world underneath. No physics. No grounding. No internal consistency check. No understanding of error.

This design philosophy works beautifully until you hit the real world, where reality doesn’t care about your linguistic confidence or your probability distributions.

And the deeper we went in this episode, the more the foundational cracks widened.

Safety Layers Built on the Same Fragile Foundation Will Fail Together

The conversation lays out a bleak but necessary warning: the AI safety techniques the industry relies on today (RLHF, reward models, fine-tuning, interpretability tools) aren’t independent safety layers. They are all built on the same substrate, and therefore:

When one fails, all fail. Simultaneously. Catastrophically.

The term from safety engineering is “correlated failure modes,” and if you work in nuclear plants, aviation, or grid stability, that phrase is synonymous with nightmares.

To put it plainly:

We aren’t stacking safety layers; we’re stacking different expressions of the same statistical fragility.

You can’t build a reliable defense-in-depth system when every layer is made of the same soft metal.

The Ghost in the Machine: Why LLMs Break Under Stress

One of the most unsettling parts of the discussion is how LLMs behave during long-chain reasoning.

A small mathematical misstep early in a multi-step problem doesn’t simply produce a slightly wrong answer. It cascades. It compounds. And the model has no internal mechanism to realize something’s gone off the rails.

A ghost predicting its own hallucinations.

This is why they fail at:

* precise multi-step math
* edge-case logic
* novel reasoning
* rare-event forecasting
* high-risk decision chains
* anything that requires causal coherence rather than textual familiarity

These limitations aren’t bugs. They aren’t “work in progress.” They are structural. The architecture is fundamentally reactive. It cannot generate or test hypotheses. It cannot ground its internal model in reality. It cannot correct itself except statistically.

This makes it lethal in systems where one wrong answer isn’t an embarrassment but a cascading blackout.

The March of Nines: Where Statistical AI Meets the Real World and Loses

In energy, and in any mission-critical domain, the holy grail is reliability.

Not 90%. Not even 99%. You need the nines.

99.9%
99.99%
99.999%

Each additional nine is an order of magnitude more difficult than the last.

What this episode exposes is that statistical systems simply cannot achieve that march. It’s not an optimization problem. It’s not an engineering inefficiency. It’s not a matter of “more compute.”

The long tail of rare events will always break a system that learned only from the past.

LLMs are brilliant at the median but brittle at the edges.

Critical infrastructure lives at the edges.

So What’s the Alternative? Systems That Don’t Just Predict: They Learn.

The second half of the episode explores a radically different paradigm: agentic, experiential AI, meaning embodied systems that learn like animals, not like autocomplete machines.

Richard Sutton’s OAK (Options and Knowledge) architecture is one such blueprint. It insists on:

* learning from interaction
* forming internal goals
* developing durable skills
* building causal models over time

Biology didn’t evolve by reading a trillion documents. It evolved by experiencing the world, failing, adapting, and iterating through an adversarial outer loop that never ends.

LLMs are not alive in that sense. They have no loop. No surprise. No grounding. No experiential correction. No self-generated goals.

If we ever want truly reliable artificial intelligence, we will need systems that build themselves through interaction rather than ingestion.

This is not convenient for companies chasing quarterly releases, but reality doesn’t care about convenience.

Energy Systems Are Too Important to Outsource to Statistical Guesswork

If you’re coordinating a decentralized energy network, integrating millions of DERs, predicting rooftop solar volatility, or steering a virtual power plant through a storm, you don’t need mimics.

You need agents that understand cause, effect, uncertainty, and consequence.

You need intelligence that does not collapse under rare events or novel conditions.

You need systems that don’t just summarize history; they survive it.

That’s the central tension exposed in this episode:

Will the world keep chasing fast, brittle, statistically impressive tools?

Or will we pay the safety tax required to build something real?

It’s an engineering question with civilization-level implications.

Final Thought

The future of infrastructure (energy, mobility, logistics, defense) is about to collide with the limits of statistical AI.

This episode isn’t a warning. It’s a calibration. A reframing. A demand for seriousness in a time when the world is drowning in hype.

If we’re going to build the next century, we need systems we can trust in the dark when everything else is failing.

Statistical ghosts won’t get us there.

The episode is accompanied by an infographic from NotebookLM.
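Two of the arguments in the AI episode above are at heart arithmetic: errors compounding along a reasoning chain, and what each extra "nine" means in practice. The sketch below is illustrative only. It assumes every step fails independently with the same probability, which is a simplification introduced here and not a claim from the episode, and the function names are hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, ignoring leap years


def chain_success(per_step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain is correct,
    assuming independent steps with identical accuracy."""
    return per_step_accuracy ** steps


def downtime_minutes_per_year(nines: int) -> float:
    """Allowed downtime per year at an availability of `nines` nines
    (3 means 99.9%, 5 means 99.999%)."""
    return 10 ** (-nines) * MINUTES_PER_YEAR


if __name__ == "__main__":
    for steps in (10, 100, 1000):
        print(f"99% per step over {steps} steps: {chain_success(0.99, steps):.4f}")
    for nines in (3, 4, 5):
        print(f"{nines} nines: {downtime_minutes_per_year(nines):.2f} min/year")
```

Under these assumptions, a step that is right 99% of the time gives a 100-step chain that is right only about a third of the time, while five nines of availability leaves roughly five minutes of downtime per year.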

The history of how humanity manages data is not just a technological timeline; it is fundamentally a story about our changing relationship with time and memory in digital form. From the moment we started scratching cuneiform on clay tablets, we sought to capture a record. The modern database system, however, has evolved from a simple recorder of the “now” to a dynamic, four-dimensional steward of the entire “story”.

Join us on this grand odyssey through the architectural revolutions that redefined data storage.

Act I: The Age of Rigidity (1890s – 1990s)

The first mechanical memory was born in 1890 when Herman Hollerith adapted punched cards for the U.S. Census, dramatically cutting the tabulation time and laying the groundwork for IBM. This mechanical era gave way to electronic databases in the 1960s, driven partially by the NASA moon race.

This period was dominated by Navigational Databases:

* Hierarchical Models: IBM’s Information Management System (IMS, 1968), created for the Apollo program’s monumental Bill of Materials, organized data as parent-child trees.
* Network Models: The CODASYL standard allowed more flexible, spiderweb-like relationships using physical pointers.

These systems were blazingly fast for the queries they anticipated, but they were rigid; accessing data in an unanticipated way was cumbersome.

The Relational Hegemony

The watershed moment came in 1970 with E.F. Codd’s introduction of the Relational Model. Codd proposed separating the logical schema (tables, rows, and columns) from the physical storage, enabling users to declare what data they wanted without knowing how to navigate the pointers. This led to the creation of SQL, the lingua franca of data, and established Relational Database Management Systems (RDBMS) as the gold standard by the 1980s.

RDBMSs guaranteed ACID transactions (Atomicity, Consistency, Isolation, Durability) and were almost universally built on the B-Tree data structure.

Act II: The Great Architectural Pivot (2000s)

The relational model excelled at transactional integrity, but it came with a fundamental flaw for the web era: its reliance on B-Trees mandated an update-in-place philosophy, which destroyed the historical context of data. A bank balance was simply overwritten.

The “big data” explosion (the need to ingest millions of machine-generated events per second) broke the B-Tree architecture. Why?

* Random I/O on Writes: Updating a B-Tree requires modifying scattered internal nodes, leading to excessive random I/O operations and severe bottlenecks.
* Write Amplification: To ensure durability, RDBMSs often write data multiple times (to the Write-Ahead Log, and then to the B-Tree page), doubling or tripling the I/O load.

The solution lay in the emergence of NoSQL and a fundamental architectural divergence: the Log-Structured Merge (LSM) Tree.

| Metric | B-Tree (RDBMS) | LSM Tree (TSDB/NoSQL) | Implications |
| --- | --- | --- | --- |
| Write Pattern | Random I/O (Update-in-place) | Sequential I/O (Append-only) | LSM is superior for ingestion speed. |
| Write Amplification | High (WAL + Page splits) | Lower (Sequential flush) | LSM minimizes immediate I/O but pays a deferred cost during compaction. |
| Read Amplification | Low (Direct seek) | Higher (Must check multiple files) | LSM reads may degrade if compaction lags. |

LSM trees, popularized by Google’s BigTable, prioritize sequential disk writes, which are orders of magnitude faster than random writes. Data is first written to a fast in-memory Memtable and simultaneously appended to a sequential Write-Ahead Log (WAL) for durability. When the Memtable fills, it is flushed to disk as an immutable Sorted String Table (SSTable).
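The write path just described (WAL append, Memtable insert, flush to an immutable sorted run) can be sketched in a few lines. This is a toy model under stated assumptions, not how BigTable or any production engine is implemented: the class name `TinyLSM` is hypothetical, the WAL is an in-memory list standing in for an append-only file, "SSTables" are tuples held in memory, and compaction is left out entirely.

```python
class TinyLSM:
    """Toy LSM write path: WAL append, memtable insert, flush to sorted runs."""

    def __init__(self, memtable_limit: int = 2):
        self.memtable_limit = memtable_limit
        self.wal = []        # stand-in for an append-only log file
        self.memtable = {}   # recent writes, held in memory
        self.sstables = []   # immutable sorted runs, oldest first

    def put(self, key, value):
        self.wal.append((key, value))  # sequential append for durability
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self._flush()

    def _flush(self):
        # Freeze the memtable as a sorted, immutable run.
        self.sstables.append(tuple(sorted(self.memtable.items())))
        self.memtable = {}
        self.wal = []  # flushed entries no longer need replaying

    def get(self, key):
        # Newest data wins: check the memtable, then runs from newest to oldest.
        if key in self.memtable:
            return self.memtable[key]
        for run in reversed(self.sstables):
            for k, v in run:
                if k == key:
                    return v
        return None


if __name__ == "__main__":
    db = TinyLSM(memtable_limit=2)
    db.put("a", 1)
    db.put("b", 2)   # second write fills the memtable and triggers a flush
    db.put("a", 3)   # newer value shadows the one in the flushed run
    print(db.get("a"), db.get("b"), len(db.sstables))
```

Note how `get` may have to look through several runs before finding a key. That is the read amplification listed in the table, and it is the cost that background merging is meant to keep in check.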
Background Compaction processes merge these files, discarding overwritten and deleted entries.

This architecture was not just a technical optimization; it was a philosophical acceptance that storage is cheap, but random I/O is expensive, dictating the software design of the last decade.

Act III: Time Becomes the Primary Dimension

As storage costs plummeted, the focus moved from merely recording the “now” to capturing the “forever,” treating time not just as an attribute but as a primary dimension. This drove the evolution of Time Series Databases (TSDBs).

TSDBs are designed specifically for data points with timestamps (like sensor readings or metrics) and are typically append-only, using columnar formats and heavy compression.

| Generation | Key System | Innovation / Mechanism |
| --- | --- | --- |
| Fixed-Size Era (Gen 1) | RRDTool (1999) | Used a circular buffer (Round Robin Archive) with a fixed size, automatically downsampling and overwriting old data to maintain history. |
| Scalable Era (Gen 2) | OpenTSDB (2010) | Built on HBase (Hadoop/BigTable), it introduced tags (key-value pairs) attached to metrics, solving the scale problem. |
| Cloud-Native Era (Gen 3) | InfluxDB (2013) | Used a specialized LSM variant (TSM Engine) and advanced compression techniques, achieving a 12x reduction in storage requirements. |
| Cloud-Native Era (Gen 3) | Prometheus (2012/2015) | Introduced the pull model (server scrapes metrics from applications) and the powerful query language PromQL. |

Solving High Cardinality

As microservices and ephemeral infrastructure rose, the High Cardinality Problem emerged: tagging metrics with unique IDs (like container_id or user_id) caused indexes to explode in size. Modern TSDBs address this by:

* Columnar Storage: Systems like GreptimeDB and QuestDB shift away from inverted indexes, storing data by column to leverage vectorized execution (SIMD) for scanning billions of rows quickly.
* Hybrid Partitioning: TimescaleDB (built on PostgreSQL) uses “hypertables” partitioned by time and secondarily by a spatial dimension (like device ID), constraining B-Tree index size.

Act IV: The Pursuit of Narrative Integrity

The quest for a perfect history extends beyond performance into the realm of semantic correctness and verifiable truth.

* Temporal Databases: These systems track Bi-temporal data: Valid Time (when a fact was true in the real world) and Transaction Time (when the fact was recorded in the database). This is critical for regulated industries requiring auditable retroactive corrections.
* Event Sourcing: This philosophy stores the entire stream of events, the immutable log, that led to the current state (e.g., Deposited($50) rather than simply updating the Balance). The current state is derived by replaying the log, providing perfect auditability.
* Immutable Ledgers: Taking auditability one step further, ledgers like immudb ensure that even an administrator cannot tamper with history undetected. They utilize Merkle Trees (Hash Trees) to generate a single “Root Hash”. If a single byte in a historical record changes, the root hash changes, providing cryptographic proof of unaltered history.

Conclusion: The Polyglot Future

The evolution of database systems has demonstrated a clear trajectory: from the destruction of history in mutable B-Trees to the preservation and cryptographic verification of the complete narrative.

Today’s architects embrace polyglot persistence, realizing that different problems require specialized tools. An application might use a distributed relational database (NewSQL) for consistency, an LSM-based TSDB (like InfluxDB) for high-speed metrics ingestion, and a graph database (Neo4j) for relationships.

Furthermore, the cloud era has shifted database management from on-premises hardware to Database-as-a-Service. Managed, serverless offerings like Amazon Aurora automatically scale compute and storage, promising that you only pay for what you use and never worry about provisioning.

The database has evolved from a static file system into an elastic, living record of truth. The saga continues, driven by the persistent human desire to efficiently organize and trust the world’s information.
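The root-hash property described for immutable ledgers in the database episode above can be demonstrated with a small Merkle tree. This is a generic sketch using SHA-256, not immudb's actual data structure or API. Duplicating the last node on odd-sized levels is one common convention, chosen here as an assumption, and the function name `merkle_root` is hypothetical.

```python
import hashlib


def _sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(records: list[bytes]) -> str:
    """Hash each record, then hash pairs upward until one root remains."""
    if not records:
        raise ValueError("need at least one record")
    level = [_sha256(record) for record in records]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # assumption: duplicate the last node
        level = [_sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0].hex()


if __name__ == "__main__":
    history = [b"Deposited($50)", b"Withdrew($20)", b"Deposited($5)"]
    tampered = [b"Deposited($50)", b"Withdrew($21)", b"Deposited($5)"]
    print(merkle_root(history))
    print(merkle_root(history) == merkle_root(tampered))
```

Anyone holding only the published root can later detect that a historical record was altered, because recomputing the tree over the altered history yields a different root.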

In the vast history of human value, from ancient ledgers to global finance, few concepts are as fundamental, or as fragile, as trust. Our journey to store and transfer value has always been an experiment in trust architecture. We have moved from physical behemoths that anchored island communities to complex algorithms designed to secure global, anonymous markets. The thread linking these disparate systems reveals a profound truth: the basis of all money is a shared, unmovable block of trust.

Let us trace this philosophical odyssey, from the giants of limestone on Yap to the ghost in the machine designed by the godfather of digital privacy.

The Ledger of Giants: Yap’s Social Blockchain

Imagine wealth so immense it rarely moved. For centuries on the Micronesian island of Yap, value was held in enormous limestone disks called Rai stones (or fei), some weighing up to four tons and measuring 12 feet across. These were not coins slipped into a pocket. Instead, they were placed in public view, in front of meeting houses or along village paths, acting as physical markers for a communal account.

The Yapese system was, in essence, a distributed ledger system. Ownership wasn’t established by possession, but by proclamation. When a trade occurred, the transfer was announced publicly, and the entire community, the collective memory of the people, would nod in assent, updating their mental or written ledgers.

This sophisticated social technology solved several foundational problems that we associate with modern digital systems:

* Distributed Consensus: No single authority controlled the record; disputes were settled by comparing the memory of the community and going with the majority record of truth.
* Immutability: The stone’s history, preserved through oral tradition, made its past transactions tamper-proof by social contract.
* Proof-of-Work (PoW): Value was accrued not just from size, but from the immense labor, risk, and sacrifice (including the deaths incurred) during the 400-kilometer quarrying voyages from Palau. This dangerous effort enforced scarcity, keeping inflation in check for centuries.

The most famous example is the sunken Rai stone: one that was lost at sea but continued to be traded for over a century because the community agreed its value endured. The physical location of the stone didn’t matter, as its value resided entirely in shared agreement: a profound separation of the token from the ledger.

Shadows in the Machine: David Chaum’s Cypherpunk Vision

While the Yapese relied on intense social bonds and collective memory, the digital age required a solution for trust between strangers and a defense against surveillance. Enter David Chaum, born in 1955, often dubbed the “godfather of digital currency”.

Chaum’s vision, rooted in the burgeoning Cypherpunk movement, was not just about building code but creating a philosophical bulwark. He foresaw a “panopticon nightmare” where unchecked tracking turns citizens into suspects. His goal was to make cryptography the “invisible armor of the individual,” shielding identities and transactions.

His odyssey in digital anonymity began in the early 1980s:

* Mix Networks (1981): These “cascades of servers” shuffled messages like cards, obscuring senders and recipients, making communication a “ghost dance” against surveillance.
* Blind Signatures (1982): Considered Chaum’s crown jewel, this allowed a trusted intermediary (like a bank) to “sign” a digital coin without viewing its origins or destination. This enabled value to flow anonymously yet remain verifiable, much like cash in a crowd.
* Ecash (DigiCash, 1990s): This was the vision made real: digital money promising that a $10 digital bill, whether spent on pizza or protest, would vanish into the ether without revealing the user’s identity.

Chaum sought security without identification. He abstracted secrecy, aiming to decouple economic action from identity, fostering markets “untethered from coercion”. However, his early systems, like DigiCash, leaned on trusted intermediaries (banks), introducing a semi-centralized scaffold that incumbents eventually managed to burn down due to “risk” fears (money laundering) and slow user adoption.

Chaum’s vision ultimately served as the “ur-text” for the Cypherpunk movement. His work directly inspired systems like Tor and PGP. While Bitcoin emerged later, sacrificing full anonymity for transparency, Chaum’s push for absolute privacy continues today through efforts like the XX Network, which tackles metadata creep using quantum-resistant mixing techniques.

From Vulnerability to Algorithm

The transition from Yap’s social ledger to digital architecture was driven by the need to solve two fatal flaws inherent in any human-based consensus system, both of which destroyed the Rai system:

* The O’Keefe Inflation Attack: In the late 19th century, Irish-American trader David O’Keefe mass-produced Rai stones using superior modern tools, bypassing the traditional, costly proof-of-work. This technological shock debased the currency because the new stones lacked the crucial narrative of sacrifice and traditional labor.
* The German Ledger Attack: German colonial administrators, seeking to coerce the Yapese to build roads, used black paint to mark the most valuable stones as “seized”. This simple act, backed by a credible threat of violence, broke the social consensus, forcing the islanders to comply.

Blockchain, starting with Bitcoin in 2008, offers an engineered solution to these ancient problems. It replaces social consensus with cryptographic consensus, placing trust in mathematics, economics, and code, rather than in fallible humans or vulnerable communities.

* Solving O’Keefe (Dynamic PoW): Bitcoin’s Proof-of-Work uses a difficulty adjustment. If a modern “O’Keefe” joins the network with superior computing power (mining ships), the protocol automatically increases the complexity of the puzzle, ensuring that new blocks are created at a slow, predictable, and scarce rate.
* Solving the German Problem (51% Attack): To “paint a black cross” (censor transactions) on the blockchain, an attacker would need to control over 50% of the entire global network’s computational power, making the attack economically irrational and mathematically prohibitive.

The result is unforgeable digital scarcity. Bitcoin’s 21 million coin limit is guaranteed by protocol, not by legal enforcement, creating value through designed scarcity and consensus.

The Persistence of Paradox: Trust Migrates, It Doesn’t Vanish

The journey from stone blocks to cryptographic chains represents humanity trading social trust for mathematical proof. But this doesn’t eliminate trust; it merely redistributes it. We shift trust from central banks and feudal lords to protocol designers, software developers, and the economic incentives that govern the mining network.

This transition brings Yap’s philosophical debate into the modern era:

* Yap: A high-trust, identity-based system where reputation was paramount and community memory was the ledger.
* Blockchain: A low-trust, pseudonymous system built for a global environment where participants do not need to know each other.

Furthermore, the tension between “Code is Law” (absolute immutability) and “Social Consensus” (human judgment as the final arbiter) continues to rage in the crypto world. The DAO hack and subsequent hard fork of Ethereum proved that when technical rules yield unacceptable outcomes, the community changes the rules: a social decision dressed as a technical upgrade. As Vitalik Buterin acknowledged, social considerations ultimately protect any blockchain in the long term.

Despite the ideals of decentralization, new forms of centralization inevitably emerge. Whether due to network effects, economies of scale, or simple human preference for convenience, power gravitates toward mining pool operators, core developers, and centralized exchanges.

The Unending Search

The arc from Yap’s unmoving stones to digital architecture is not purely progress; it is recursion. Both systems function because value is a collective idea, a social construction sustained by belief. The stone at the bottom of the ocean was real because the community agreed it was real. Bitcoin is real because we agree it is real.

The blockchain is the latest attempt to build a shared, incorruptible memory. It offers protection against institutional betrayal by replacing human fallibility with algorithmic consistency. Yet, in fleeing the fragility of social consensus, we create rigidity. We gain consistency, but we lose the flexibility, mercy, and human judgment that defined the Yapese community’s ability to adapt.

As algorithms audit our afternoons and CBDCs loom like digital dragnets, Chaum’s prophecy remains relevant: true sovereignty requires untraceable value. The question now isn’t whether technology can scale, but how we will balance privacy and transparency, freedom and security, rigidity and wisdom.

The ledger watches. The choice remains: does your next transaction liberate, or merely log?

The journey from heavy stone blocks to chains of light reveals that the greatest engineering challenge is not code, but cooperation. Every monetary system is simply a management protocol for the fundamental tension between individual autonomy and collective coordination.
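The "Solving O’Keefe" point in the episode above rests on Bitcoin's periodic difficulty retarget, which can be sketched as simple arithmetic. The constants below (10-minute blocks, a 2016-block interval, a factor-of-4 clamp) are Bitcoin's well-known parameters. Expressing the rule in terms of "difficulty" rather than the underlying target value is a simplification made here, and the function name `retarget` is hypothetical.

```python
TARGET_BLOCK_SECONDS = 600        # one block every ten minutes
RETARGET_INTERVAL = 2016          # blocks between adjustments
EXPECTED_TIMESPAN = TARGET_BLOCK_SECONDS * RETARGET_INTERVAL  # two weeks


def retarget(old_difficulty: float, actual_timespan: float) -> float:
    """Scale difficulty so the next 2016 blocks take about two weeks.

    The measured timespan is clamped to [expected / 4, expected * 4],
    so a single adjustment changes difficulty by at most a factor of 4.
    """
    clamped = min(max(actual_timespan, EXPECTED_TIMESPAN / 4), EXPECTED_TIMESPAN * 4)
    return old_difficulty * EXPECTED_TIMESPAN / clamped


if __name__ == "__main__":
    # A modern "O'Keefe" doubles the hash rate: blocks arrive in half the time.
    print(retarget(100.0, EXPECTED_TIMESPAN / 2))
    # Ten times the hash rate: the clamp limits the jump to 4x per period.
    print(retarget(100.0, EXPECTED_TIMESPAN / 10))
```

However much computing power arrives, the puzzle hardens until blocks are again spaced about ten minutes apart, which is why faster tools do not translate into faster issuance.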

Welcome back to “Coordinated with Fredrik.” This week, we’re tackling an idea that forces us to question everything: the simulation hypothesis. It’s the mind-bending concept that our entire perceived world (the sky, our friends, and our existence) is not ultimate reality but an elaborate digital illusion, akin to a sophisticated computer program.

This idea is far from new. It has deep philosophical roots, stretching back to Plato’s allegory of the cave, where prisoners mistook shadow projections for reality. Later, René Descartes refined this skepticism with the thought experiment of an evil demon manipulating every experience we have. The modern simulation hypothesis, however, represents a fundamental pivot from metaphysical doubt to a technological and probabilistic argument.

The Statistical Modesty of Bostrom’s Trilemma

The modern debate was formalized in 2003 by philosopher Nick Bostrom, who put forward a rigorous simulation argument framed as a trilemma. He argues that if technological progress continues, one of three “unlikely-seeming” propositions must be true:

* The Great Filter: Almost no civilization will reach a posthuman stage capable of creating realistic simulations of conscious minds (perhaps because they destroy themselves).
* The Great Disinterest: Advanced civilizations reach this technological stage but choose not to run “ancestor simulations” of people like us, possibly due to ethical scruples or lack of interest.
* The Simulation: If the first two propositions are false (meaning advanced civilizations survive and run many simulations), then the number of simulated conscious beings would statistically far outnumber those in “base reality.” Therefore, we ourselves would almost certainly be among the simulated minds.

Bostrom’s logic rests on two key assumptions: first, that consciousness can arise from computation (known as substrate-independence); and second, that future “posthuman” civilizations will command enormous amounts of computing power. If these premises hold, Bostrom claims that believing we are the special, original race among trillions of simulated ones is an act of statistical hubris.

The Case for Code: Glitches and Digital Physics

Why do serious thinkers find this plausible? Entrepreneur Elon Musk famously stated it’s “most likely that we’re in a simulation”, and astrophysicist Neil deGrasse Tyson puts the odds around “50-50”.

Part of the momentum comes from the realization that physical reality behaves mathematically, suggesting the universe might fundamentally be information. This is the core of “digital physics”.

Some peculiar observations resonate with the simulation notion:

* Quantum Strangeness: Quantum mechanics features an “observer effect” where measurement affects a particle’s behavior. This is reminiscent of a video game that only “renders” an object in detail when a player is looking at it, potentially to save on resources. The simulation might use randomness and indeterminacy as a resource-saving shortcut.
* Error-Correcting Codes: Theoretical physicist James Gates found unexpected mathematical structures within the equations of supersymmetry that were akin to error-correcting codes, the same kind that fix data errors in browsers. This bizarre finding suggests the fabric of reality might have computational aspects, as if the universe’s “software” has a built-in debugging feature.
* A Discrete Universe: In a simulation, everything must ultimately be represented in finite bits on a grid. This aligns with the speculative idea that spacetime might be “pixelated” at the incredibly tiny Planck scale.

The Refutation: Thermodynamics Defeats Ancestor Sims

While the idea is intriguing, rigorous scientific analysis, particularly recent quantitative research, provides mounting evidence against the physical feasibility of a universe simulation operating under our known laws.

Physicists and information theorists have applied fundamental physical principles (like the Bekenstein bound and Landauer’s principle) to calculate the resources required. The gap is staggering:

* Computational Impossibility: Simulating quantum-level reality demands $10^{62}$ times more computing power than currently exists globally. Exact simulation of the universe’s $10^{80}$ particles requires tracking $2^{(10^{80})}$ states, a number larger than the information storage capacity of the universe itself.
* Energy Requirements: Simulating the entire visible universe requires encoding $\sim 10^{123}$ bits, demanding approximately $10^{94}$ joules, an amount that exceeds the total energy content of the observable universe.
* Time Constraints: Even a dramatically reduced, low-resolution simulation of Earth would consume $10^{35}$ to $10^{65}$ years of computing time per simulated second, vastly exceeding the universe’s age.

In short, these calculations indicate that simulating our universe using physics like ours is fundamentally prohibited by energy conservation and entropy constraints; it is not merely a technological challenge.

Furthermore, attempts to detect “glitches” have so far yielded nothing. The most rigorous experimental proposal suggested searching for a computational lattice by looking for rotational symmetry breaking in the arrival direction of ultra-high-energy cosmic rays. After years of monitoring, no predicted anisotropy has been detected, constraining this specific model of a simulation.

This has led prominent theoretical physicist Sabine Hossenfelder to label the simulation hypothesis “unscientific” and even pseudoscience, arguing that mixing science with metaphysical speculation about omnipotent simulators is a mistake.

Life and Meaning in a Digital Reality

If we were to discover that we are simulated, what would it mean for our lives?

Philosopher David Chalmers argues against the notion that life would become meaningless. He contends that a simulated world is not an “illusion” but a “digital reality”. Our struggles, relationships, and joys are authentic to us. As he states, even if we’re in a perfect simulation, this is not an illusion; “Everything is just as meaningful as it was before”.

The hypothesis also transforms theological concepts. An intentional creation by intelligent “outsiders” is analogous to a Creator, though the architects of our reality might be fallible, perhaps even immature, beings rather than omniscient deities. Physicist James Gates even mused that the simulation idea “opens the door to eternal life or resurrection,” as our consciousness, being digital code, might be backed up or run again.

Finally, the simulation hypothesis creates a profound conundrum regarding free will. Are our choices mere algorithms, making us “puppets” predetermined by code? Or is our consciousness a “player” existing in a higher reality, making autonomous choices that influence the simulation, perhaps even instructing the simulation to “render” reality, thus elevating free will to a causal agent in physics?

The Unanswered Question

The simulation hypothesis remains one of the most compelling and unresolvable intellectual debates of the modern era. While these calculations suggest that simulating our universe under our physics is physically impossible, the hypothesis can always retreat to the unfalsifiable loophole that the simulators operate under physics we cannot conceive.

However, the value of the idea isn’t in providing an answer we can confirm, but in the questions it forces us to ask. It spurs scientific creativity, pushing researchers to devise experiments at the boundary of physics and information. Whether our cosmos is base reality or one of infinitely nested simulations, we still face the same fundamental task: to live our lives as authentically and fully as we can. For us, as Chalmers suggests, the virtual reality we inhabit is real enough.

The simulation hypothesis is like looking at a highly complex clockwork mechanism and wondering if the clockmaker used a pre-built computer program to design the gears, only to realize the sheer size and complexity of the resulting clock requires the program itself to be bigger than the finished clock, leaving us to marvel at the ingenuity (or impossibility) of the original design.
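The statistical core of Bostrom's trilemma, discussed in the episode above, reduces to one fraction: if a share f_P of civilizations reach a posthuman stage and each runs on average N ancestor simulations, the fraction of human-type observers who are simulated is f_P·N / (f_P·N + 1). The sketch below evaluates that expression. The input values are purely illustrative assumptions, not estimates from the episode, and the function name is hypothetical.

```python
def simulated_fraction(f_posthuman: float, sims_per_civilization: float) -> float:
    """Fraction of human-type observers living in simulations,
    following the simplified form of Bostrom's expression."""
    x = f_posthuman * sims_per_civilization
    return x / (x + 1)


if __name__ == "__main__":
    # Illustrative inputs only: 1% of civilizations survive, each runs a million sims.
    print(simulated_fraction(0.01, 1_000_000))
    # First horn of the trilemma: nobody reaches the posthuman stage.
    print(simulated_fraction(0.0, 1_000_000))
    # Second horn: survivors exist but run no ancestor simulations.
    print(simulated_fraction(0.5, 0.0))
```

The fraction stays small only if the product f_P·N is small, which is exactly what the first two horns of the trilemma assert. Otherwise it approaches 1.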

Welcome to “Coordinated with Fredrik.” We often talk about the strategies founders need to deploy to win funding, but today, we are turning the tables. We are deconstructing the individual Venture Capitalist, the person sitting across the table, to understand the economic “machine” they operate within. The core truth is this: a VC’s behavior, which often appears baffling or “irrational” to outsiders, is a direct, rational response to the structural and financial incentives that govern their existence.

The VC’s 10-Year Clock and the Power Law Tyranny

Every decision a VC makes is governed by a defined fund lifespan: the fund is typically a closed-end investment vehicle that runs 7 to 12 years. This “10-year clock” is non-negotiable and fundamentally shifts a VC’s personality and risk profile depending on the fund’s current phase.

1. The Investment Period (Years 1-4): This is the “hunting” season where the VC is optimistic and eager to deploy capital, actively “build[ing] the portfolio”. They can underwrite a 10-year vision for a company to mature.
2. The Management & Harvest Period (Years 5-10): The focus shifts entirely to managing, supporting, and exiting existing investments to return capital to LPs. A founder pitching in Year 7 of the fund is meeting a “farmer” or “mortician” who cannot make a new investment requiring another decade to mature. They prioritize speed over strategy for their current portfolio.

The financial structure is the notorious “2 and 20” model. The “2” is the management fee (typically 1% to 2.5% of committed capital per year), which functions as the firm’s “salary” and covers operating costs and staff pay. The “20” (carried interest, or “Carry”) is the “real payday”: a 20% share of the fund’s profits that General Partners (GPs) keep. Critically, this carry is only paid after the fund returns 100% of the initial capital to its LPs.

This compensation structure creates the “Tyranny of the Power Law”.
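The “2 and 20” arithmetic above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how any particular fund is structured: the flat fee over the whole fund life, the absence of a hurdle rate, the 20% ownership stake, and the names `Fund` and `fund_proceeds_from_exit` are all illustrative choices that do not come from the episode.

```python
from dataclasses import dataclass


@dataclass
class Fund:
    """Toy model of a closed-end VC fund under a "2 and 20" structure."""

    committed_capital: float   # total LP commitments, in dollars
    fee_rate: float = 0.02     # the "2": annual management fee on committed capital
    carry_rate: float = 0.20   # the "20": GP share of profits
    life_years: int = 10       # assumed fund life (the text says 7 to 12 years)

    def total_fees(self) -> float:
        # Simplifying assumption: the same fee is charged every year of the fund's life.
        return self.committed_capital * self.fee_rate * self.life_years

    def carry(self, total_proceeds: float) -> float:
        # Carry is owed only on proceeds above 100% of committed capital.
        profit = max(0.0, total_proceeds - self.committed_capital)
        return profit * self.carry_rate


def fund_proceeds_from_exit(exit_value: float, ownership: float) -> float:
    """Dollars the fund receives when a portfolio company is sold."""
    return exit_value * ownership


if __name__ == "__main__":
    fund = Fund(committed_capital=100_000_000)
    stake = 0.20  # hypothetical ownership at exit, not a figure from the episode

    for exit_value in (50_000_000, 500_000_000, 1_000_000_000):
        proceeds = fund_proceeds_from_exit(exit_value, stake)
        print(
            f"exit ${exit_value:,.0f}: fund receives ${proceeds:,.0f}, "
            f"carry if this were the only exit ${fund.carry(proceeds):,.0f}"
        )
    print(f"management fees over fund life: ${fund.total_fees():,.0f}")
```

Under these assumptions, a $50 million exit hands the $100 million fund about $10 million, a tenth of what it owes its LPs, and produces no carry on its own. Only the $1 billion exit clears the full return of capital, which is the asymmetry the Power Law describes.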
Because VCs must return the entire fund before seeing a dollar of profit, they are mathematically forced to find “really. large. exits.” A $50 million acquisition might thrill a founder, but for a VC managing a $100 million fund, that profit is a “drop in the bucket”. They need one or two companies to return $100 million, $500 million, or even $1 billion to “return the entire fund” by themselves. This forces VCs into an “all-or-nothing” or “growth-at-all-costs” mindset. A stable, profitable, $50 million-revenue business is often considered a “zombie” because it doesn’t fit the Power Law. VCs are incentivized to push founders to take massive, company-ending risks to become a $1 billion “unicorn”.

The Partnership Hierarchy: From Analyst to GP

The VC firm is a rigid hierarchy, and where an individual sits on this ladder dictates their job and incentives.

• Junior Staff (Analysts/Associates): These entry-level roles are paid primarily from the management fee (the “2”). Their job is a “50-60 hours per week” grind of “screening pitch decks,” “deal sourcing,” and meticulous CRM updates. The role is often an “up-or-out” 2-3 year position, functioning as high-energy, low-cost labor to “filter signal from noise” for partners. Most junior VCs never see carry.
• Partners (GPs): General Partners receive the “lion’s share of the carry”. Their primary responsibilities shift entirely away from analysis to fundraising from LPs, making final investment decisions, and taking board seats. For these senior partners, the entire financial motivation is the large, leveraged bet on the 20% carry, which will only pay off 8 to 12 years in the future, if at all. They often make low base salaries relative to their potential carry, with their own “capital commit” (1-3% of the fund) showing LPs they have “skin in the game”.

Inside the “black box” of the partner meeting, where a founder’s fate is decided, internal politics are intense.
The decision often boils down to the “point partner” (the founder’s internal champion) successfully arguing against colleagues in a “Real Decision-Making Arena”. This internal political debate is crucial, as illustrated by the recent dynamics at Sequoia Capital, where strategic misalignment and cultural failures led to a leadership change. The lesson for founders: they are pitching a specific partner who must then win an internal debate.

Sourcing, Bias, and the FOMO-FOLS Dyad

VCs are obsessed with “proprietary deal flow”, meaning deals sourced directly that are “less competitive” and usually mean “better terms”. However, deal flow is overwhelmingly driven by “Relationships”, with around 60% of VC deals originating from an investor’s personal network or referrals. This network-first approach, while an “efficiency play”, is the “primary engine of systemic bias in the industry”. If a VC’s network is homogenous, their pre-vetted deal flow will be equally homogenous.

When evaluating nascent early-stage companies where there are “no concrete financial metrics”, VCs rely on heuristics and pattern-matching. Team is consistently the most critical factor, often cited as 95% of the decision at the seed stage. With little data, evaluation defaults to “gut feelings”, seeking “representativeness” (does this founder look and sound like a previous winner?). This reliance on “gut feel” is often a euphemism for “similarity bias” and perpetuates exclusion, which helps explain why companies founded solely by women receive only 2% of all VC investment.

To mitigate this bias, influential VCs like Mark Suster advocate the rule “Invest in Lines, Not Dots”. A “dot” is a single pitch meeting driven by “limited thought, limited due diligence”. A “line” is a “pattern of progress” observed over time, where a VC meets the entrepreneur early and watches their performance over 15 meetings or two years.
This approach replaces biased gut feeling with observed data on the founder’s “tenacity” and “resiliency”.

The internal decision to invest is a tug-of-war between two primal psychological fears:

1. FOMO (Fear of Missing Out): The VC’s “biggest fear,” driven by regret over passing on a company that became a “really. large. exit.” This drives VCs to invest.
2. FOLS (Fear of Looking Stupid): The “avoidance of scrutiny,” driven by the fear of LPs questioning a bad investment. This drives VCs to say no.

A founder successfully raises capital when they manufacture more FOMO than FOLS, often by showing the VC that other investors are interested, triggering a “bandwagon” effect and herd mentality.

The Rise of the Solo GP

The traditional VC firm model is being challenged by the rise of the Solo General Partner (Solo GP). These are single individuals who raise a fund and make unilateral investment decisions. Their advantages are speed and flexibility (“a motorcycle” that weaves through traffic while larger firms are “18-wheelers”). Many Solo GPs leverage personal brands and expertise, like Aarthi Ramamurthy (Schema Ventures), who uses her podcast as a sourcing engine, or Marc Cohen (Unbundled VC), who “build[s] in public”. This new guard is competing on transparency and personal brand rather than the Assets Under Management (AUM) and management fees of the old guard.

The truth remains that the job of a VC is a “tough business”, requiring patience (carry takes a decade to realize), relentless networking, and the painful necessity of turning away 98 out of 100 opportunities.

The Zombie Trap: Managing Failure

The founder-VC relationship, particularly after the check is wired and a VC takes a board seat, is where the Power Law is brutally enforced. High valuations create expectations for matching performance, leading to intense pressure to pursue “growth metrics over unit economics”.
If a company doesn’t fail but also doesn’t “go big,” it enters the “VC Zombie Trap”.

A “zombie startup” is profitable and growing, perhaps 20% a year, but will never provide the 100x return the VC needs. VCs are incentivized to kill their zombies because they cannot waste time, energy, or “remaining capital” on a company that won’t “return the fund”. This is a “feature and not a bug of the power-law driven venture capital system”.

The candid reality, as noted by Fred Wilson, is that aggregate VC returns are often “disappointing,” sometimes underperforming the NASDAQ. VCs are forced to be optimistic hunters, yet their job is a massive, leveraged bet that only pays off, if at all, years down the line.

--------------------------------------------------------------------------------

The mechanisms VCs use to cut through the noise (reliance on networks, pattern-matching, and the psychological battle between FOMO and FOLS) are also the mechanisms that introduce bias and structural rigidities into the ecosystem. What does this mean for the future of capital deployment?

We have detailed how the VC machine operates, but the conversation doesn’t stop here. If you want to dive deeper into the tactics founders use to navigate the partner meeting “black box,” the specific “unwritten rules” of the VC ecosystem (like the “frieNDA”), or how the new wave of Solo GPs is disrupting this status quo, let us know!

This episode centers on Johan Norberg’s arguments in his book The Capitalist Manifesto, a defense of global capitalism that details its role in human progress, counters popular criticisms, and argues for economic freedom in the modern era.

This week on “Coordinated with Fredrik,” we dive into The Capitalist Manifesto with author Johan Norberg, who argues compellingly that the free market remains the best engine for human progress, despite recent global shocks and widespread political hostility.

Norberg, whom Swedish Public Radio once noted as possibly the only person “particularly keen on globalization now”, lays out the dramatic, yet often unheralded, successes of the past two decades. Since he wrote his first defense of global capitalism in 2001, extreme poverty has been reduced by 70 per cent, which amounts to over 138,000 people rising out of poverty every single day. For Norberg, this staggering progress, which also includes drastic reductions in child mortality and increased global life expectancy, confirms that we need more capitalism, not less.

Addressing New Opposition and Old Myths

The nature of the opposition has changed drastically, moving from the political left (like Attac in 2001) to a “new generation of conservative politicians” and right-wing populists who now sound very much like the earlier critics. The common narrative shared across political extremes is that global capitalism has primarily benefited the rich and China, while wages stagnated and jobs disappeared in the West.

Norberg argues this worldview is based on misunderstanding the economy as a zero-sum game. In reality, the decline in factory jobs is mostly due to automation and increased productivity (the jobs were taken by “R2-D2 and C-3PO”) rather than competition from China.
Furthermore, economic success, from the simplest item like a cup of coffee to large-scale innovations, comes from the complex, voluntary cooperation enabled by free markets, utilizing the localized knowledge of millions of actors. It is competition and freedom of choice that force capitalists to behave well.

Bailouts, Breakdowns, and the Battle for Belief

We explore modern threats, from the idea that the “Reagan/Thatcher era is over” to the dangers of crisis management. The pandemic showed that protectionism is perilous; resilience is achieved through diversified supply chains and the decentralized ingenuity of entrepreneurs adapting quickly to new needs.

Crucially, Norberg challenges the notion that capitalism destroys the planet or makes us miserable. The solution to environmental problems, including climate change, is not “degrowth” (which, during the 2020 pandemic experiment, cut emissions by only 6% while plunging millions into poverty) but rather the prosperity and technology generated by the market. Data suggests that economic freedom is positively correlated with environmental performance, and “talking about money” is the key to accelerating the transition to greener, more efficient methods.

Want to understand why the greatest catastrophe to hit humanity would be stopping economic growth, or explore why central bank attempts to prevent all economic risk have led to the rise of “zombie companies”? Tune in to the full discussion to discover how economic freedom is crucial for our future and why the unpredictable nature of capitalism is far superior to centralized command.