Crazy Wisdom

Author: Stewart Alsop

© 2025 Stewart Alsop

Description

In his series "Crazy Wisdom," Stewart Alsop explores cutting-edge topics, particularly in the realm of technology, such as Urbit and artificial intelligence. Alsop embarks on a quest for meaning, engaging with others to expand his own understanding of reality and that of his audience. The topics covered in "Crazy Wisdom" are diverse, ranging from emerging technologies to spirituality, philosophy, and general life experiences. Alsop's unique approach aims to make connections between seemingly unrelated subjects, tying together ideas in unconventional ways.

517 Episodes

In this episode of the Crazy Wisdom Podcast, host Stewart Alsop sits down with Mike Bakon to explore the fascinating intersection of hardware hacking, blockchain technology, and decentralized systems. Their conversation spans from Mike's childhood fascination with taking apart electronics in 1980s Poland to his current work with ESP32 microcontrollers, LoRa mesh networks, and Cardano blockchain development. They discuss the technical differences between UTXO and account-based blockchains, the challenges of true decentralization versus hybrid systems, and how AI tools are changing the development landscape. Mike shares his vision for incentivizing mesh networks through blockchain technology and explains why he believes mass adoption of decentralized systems will come through abstraction rather than technical education. The discussion also touches on the potential for creating new internet infrastructure using ad hoc mesh networks and the importance of maintaining truly decentralized, permissionless systems in an increasingly surveilled world. You can find Mike on Twitter as @anothervariable.

Check out this GPT we trained on the conversation

Timestamps
00:00 Introduction to Hardware and Early Experiences
02:59 The Evolution of AI in Hardware Development
05:56 Decentralization and Blockchain Technology
09:02 Understanding UTXO vs Account-Based Blockchains
11:59 Smart Contracts and Their Functionality
14:58 The Importance of Decentralization in Blockchain
17:59 The Process of Data Verification in Blockchain
20:48 The Future of Blockchain and Its Applications
34:38 Decentralization and Trustless Systems
37:42 Mainstream Adoption of Blockchain
39:58 The Role of Currency in Blockchain
43:27 Interoperability vs Bridging in Blockchain
47:27 Exploring Mesh Networks and LoRa Technology
01:00:25 The Future of AI and Decentralization

Key Insights
1. Hardware curiosity drives innovation from childhood - Mike's journey into hardware began as a child in 1980s Poland, where he would disassemble toys like battery-powered cars to understand how they worked. This natural curiosity about taking things apart and understanding their inner workings laid the foundation for his later expertise in microcontrollers like the ESP32 and his deep understanding of both hardware and software integration.
2. AI as a research companion, not a replacement for coding - Mike uses AI and LLMs primarily as research tools and coding companions rather than letting them write entire applications. He finds them invaluable for getting quick answers to coding problems, analyzing Git repositories, and avoiding the need to search through Stack Overflow, but he feels uneasy when AI writes whole functions, preferring to understand and write his own code.
3. Blockchain decentralization requires trustless consensus verification - The fundamental difference between blockchain databases and traditional databases lies in the consensus process that data must go through before being recorded. Unlike centralized systems where one entity controls data validation, blockchains require hundreds of nodes to verify each block through trustless consensus mechanisms, ensuring data integrity without relying on any single authority.
4. UTXO vs account-based blockchains have fundamentally different architectures - Cardano uses an extended UTXO model (like Bitcoin but with smart contracts) where transactions consume existing UTXOs and create new ones, keeping the ledger lean. Ethereum uses account-based ledgers that store persistent state, leading to much larger data requirements over time and making it increasingly difficult for individuals to sync and maintain full nodes independently.
5. True interoperability differs fundamentally from bridging - Real blockchain interoperability means being able to send assets directly between different blockchains (like sending ADA to a Bitcoin wallet) without intermediaries. This is possible between UTXO-based chains like Cardano and Bitcoin. Bridges, in contrast, require centralized entities to listen for transactions on one chain and trigger corresponding actions on another, introducing centralization risks.
6. Mesh networks need economic incentives for sustainable infrastructure - While technologies like LoRa and Meshtastic enable impressive decentralized communication networks, the challenge lies in incentivizing people to maintain the hardware infrastructure. Mike sees potential in combining blockchain-based rewards (like earning ADA for running mesh network nodes) with existing decentralized communication protocols to create self-sustaining networks.
7. Mass adoption comes through abstraction, not education - Rather than trying to educate everyone about blockchain technology, mass adoption will happen when developers can build applications on decentralized infrastructure that users interact with seamlessly, without needing to understand the underlying blockchain mechanics. Users should be able to benefit from decentralization through well-designed interfaces that abstract away the complexity of wallets, addresses, and consensus mechanisms.
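
As a rough illustration of the UTXO versus account-based distinction discussed in this episode, the toy sketch below models both ledger styles in Python. It is not Cardano's or Ethereum's actual data model: the `UTXO` class, the `ref` labels, and both `apply_*` functions are hypothetical names invented for this example, and fees, signatures, and smart contracts are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UTXO:
    ref: str     # hypothetical "txid#index" label, for illustration only
    owner: str
    amount: int


def apply_utxo_tx(unspent: frozenset, inputs: list, outputs: list) -> frozenset:
    """Consume existing unspent outputs and create new ones."""
    if not all(u in unspent for u in inputs):
        raise ValueError("every input must be a currently unspent output")
    if sum(u.amount for u in inputs) != sum(u.amount for u in outputs):
        raise ValueError("inputs and outputs must balance (fees ignored here)")
    return (unspent - frozenset(inputs)) | frozenset(outputs)


def apply_account_tx(balances: dict, sender: str, receiver: str, amount: int) -> dict:
    """Update long-lived per-account balances."""
    if balances.get(sender, 0) < amount:
        raise ValueError("insufficient balance")
    updated = dict(balances)
    updated[sender] = updated.get(sender, 0) - amount
    updated[receiver] = updated.get(receiver, 0) + amount
    return updated


# UTXO style: Alice's single 10-unit output is consumed and replaced by two new ones.
genesis = UTXO("tx0#0", "alice", 10)
utxo_ledger = apply_utxo_tx(
    frozenset({genesis}),
    inputs=[genesis],
    outputs=[UTXO("tx1#0", "bob", 3), UTXO("tx1#1", "alice", 7)],
)

# Account style: the same transfer edits two persistent balance entries.
account_ledger = apply_account_tx({"alice": 10}, "alice", "bob", 3)
```

In the UTXO version the spent output disappears from the set entirely, while the account version keeps one mutable entry per address; that is the intuition behind the episode's point about ledger size, though real chains differ in many details.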

In this episode of the Crazy Wisdom Podcast, host Stewart Alsop speaks with Aaron Borger, founder and CEO of Orbital Robotics, about the emerging world of space robotics and satellite capture technology. The conversation covers a fascinating range of topics including Borger's early experience launching AI-controlled robotic arms to space as a student, his work at Blue Origin developing lunar lander software, and how his company is developing robots that can capture other spacecraft for refueling, repair, and debris removal. They discuss the technical challenges of operating in space - from radiation hardening electronics to dealing with tumbling satellites - as well as the broader implications for the space economy, from preventing the Kessler effect to building space-based recycling facilities and mining lunar ice for rocket fuel. You can find more about Aaron Borger’s work at Orbital Robotics and follow him on LinkedIn for updates on upcoming missions and demos.

Check out this GPT we trained on the conversation

Timestamps
00:00 Introduction to orbital robotics, satellite capture, and why sensing and perception matter in space
05:00 The Kessler Effect, cascading collisions, and why space debris is an economic problem before it is an existential one
10:00 From debris removal to orbital recycling and the idea of turning junk into infrastructure
15:00 Long-term vision of space factories, lunar ice, and refueling satellites to bootstrap a lunar economy
20:00 Satellite upgrading, servicing live spacecraft, and expanding today’s narrow space economy
25:00 Costs of collision avoidance, ISS maneuvers, and making debris capture economically viable
30:00 Early experiments with AI-controlled robotic arms, suborbital launches, and reinforcement learning in microgravity
35:00 Why deterministic AI and provable safety matter more than LLM hype for spacecraft control
40:00 Radiation, single event upsets, and designing space-safe AI systems with bounded behavior
45:00 AI, physics-based world models, and autonomy as the key to scaling space operations
50:00 Manufacturing constraints, space supply chains, and lessons from rocket engine software
55:00 The future of space startups, geopolitics, deterrence, and keeping space usable for humanity

Key Insights
1. Space Debris Removal as a Growing Economic Opportunity: Aaron Borger explains that orbital debris is becoming a critical problem with approximately 3,000-4,000 defunct satellites among the 15,000 total satellites in orbit. The company is developing robotic arms and AI-controlled spacecraft to capture other satellites for refueling, repair, debris removal, and even space station assembly. The economic case is compelling - it costs about $1 million for the ISS to maneuver around debris, so if their spacecraft can capture and remove multiple pieces of debris for less than that cost per piece, it becomes financially viable while addressing the growing space junk problem.
2. Revolutionary AI Safety Methods Enable Space Robotics: Traditional NASA engineers have been reluctant to use AI for spacecraft control due to safety concerns, but Orbital Robotics has developed breakthrough methods combining reinforcement learning with traditional control systems that can mathematically prove the AI will behave safely. Their approach uses physics-based world models rather than pure data-driven learning, ensuring deterministic behavior and bounded operations. This represents a significant advancement over previous AI approaches that couldn't guarantee safe operation in the high-stakes environment of space.
3. Vision for Space-Based Manufacturing and Resource Utilization: The long-term vision extends beyond debris removal to creating orbital recycling facilities that can break down captured satellites and rebuild them into new spacecraft using existing materials in orbit. Additionally, the company plans to harvest propellant from lunar ice, splitting it into hydrogen and oxygen for rocket fuel, which could kickstart a lunar economy by providing economic incentives for moon-based operations while supporting the growing satellite constellation infrastructure.
4. Unique Space Technology Development Through Student Programs: Borger and his co-founder gained unprecedented experience by launching six AI-controlled robotic arms to space through NASA's student rocket programs while still undergraduates. These missions involved throwing and catching objects in microgravity using deep reinforcement learning trained in simulation and tested on Earth. This hands-on space experience is extremely rare and gave them practical knowledge that informed their current commercial venture.
5. Hardware Challenges Require Innovative Engineering Solutions: Space presents unique technical challenges including radiation-induced single event upsets that can reset processors for up to 10 seconds, requiring "passive safe" trajectories that won't cause collisions even during system resets. Unlike traditional space companies that spend $100,000 on radiation-hardened processors, Orbital Robotics uses automotive-grade components made radiation-tolerant through smart software and electrical design, enabling cost-effective operations while maintaining safety.
6. Space Manufacturing Supply Chain Constraints: The space industry faces significant manufacturing bottlenecks with 24-week lead times for space-grade components and limited suppliers serving multiple companies simultaneously. This creates challenges for scaling production - Orbital Robotics needs to manufacture 30 robotic arms per year within a few years. They've partnered with manufacturers who previously worked on Blue Origin's rocket engines to address these supply chain limitations and achieve the scale necessary for their ambitious deployment timeline.
7. Emerging Space Economy Beyond Communications: While current commercial space activities focus primarily on communications satellites (with SpaceX Starlink holding 60% market share) and Earth observation, new sectors are emerging including AI data centers in space and orbital manufacturing. The convergence of AI, robotics, and space technology is enabling more sophisticated autonomous operations, from predictive maintenance of rocket engines using sensor data to complex orbital maneuvering and satellite servicing that was previously impossible with traditional control methods.

In this episode, Stewart Alsop sits down with Joe Wilkinson of Artisan Growth Strategies to talk through how vibe coding is changing who gets to build software, why functional programming and immutability may be better suited for AI-written code, and how tools like LLMs are reshaping learning, work, and curiosity itself. The conversation ranges from Joe’s experience living in China and his perspective on Chinese AI labs like DeepSeek, Kimi, Minimax, and GLM, to mesh networks, Raspberry Pi–powered infrastructure, decentralization, and what sovereignty might mean in a world where intelligence is increasingly distributed. They also explore hallucinations, AlphaGo’s Move 37, and why creative “wrongness” may be essential for real breakthroughs, along with the tension between centralized power and open access to advanced technology. You can find more about Joe’s work at https://artisangrowthstrategies.com and follow him on X at https://x.com/artisangrowth.

Check out this GPT we trained on the conversation

Timestamps
00:00 – Vibe coding as a new learning unlock, China experience, information overload, and AI-powered ingestion systems
05:00 – Learning to code late, Exercism, syntax friction, AI as a real-time coding partner
10:00 – Functional programming, Elixir, immutability, and why AI struggles with mutable state
15:00 – Coding metaphors, “spooky action at a distance,” and making software AI-readable
20:00 – Raspberry Pi, personal servers, mesh networks, and peer-to-peer infrastructure
25:00 – Curiosity as activation energy, tech literacy gaps, and AI-enabled problem solving
30:00 – Knowledge work superpowers, decentralization, and small groups reshaping systems
35:00 – Open source vs open weights, Chinese AI labs, data ingestion, and competitive dynamics
40:00 – Power, safety, and why broad access to AI beats centralized control
45:00 – Hallucinations, AlphaGo’s Move 37, creativity, and logical consistency in AI
50:00 – Provenance, epistemology, ontologies, and risks of closed-loop science
55:00 – Centralization vs decentralization, sovereign countries, and post-global-order shifts
01:00:00 – U.S.–China dynamics, war skepticism, pragmatism, and cautious optimism about the future

Key Insights
1. Vibe coding fundamentally lowers the barrier to entry for technical creation by shifting the focus from syntax mastery to intent, structure, and iteration. Instead of learning code the traditional way and hitting constant friction, AI lets people learn by doing, correcting mistakes in real time, and gradually building mental models of how systems work, which changes who gets to participate in software creation.
2. Functional programming and immutability may be better aligned with AI-written code than object-oriented paradigms because they reduce hidden state and unintended side effects. By making data flows explicit and preventing “spooky action at a distance,” immutable systems are easier for both humans and AI to reason about, debug, and extend, especially as code becomes increasingly machine-authored.
3. AI is compressing the entire learning stack, from software to physical reality, enabling people to move fluidly between abstract knowledge and hands-on problem solving. Whether fixing hardware, setting up servers, or understanding networks, the combination of curiosity and AI assistance turns complex systems into navigable terrain rather than expert-only domains.
4. Decentralized infrastructure like mesh networks and personal servers becomes viable when cognitive overhead drops. What once required extreme dedication or specialist knowledge can now be done by small groups, meaning that relatively few motivated individuals can meaningfully change communication, resilience, and local autonomy without waiting for institutions to act.
5. Chinese AI labs are likely underestimated because they operate with different constraints, incentives, and cultural inputs. Their openness to alternative training methods, massive data ingestion, and open-weight strategies creates competitive pressure that limits monopolistic control by Western labs and gives users real leverage through choice.
6. Hallucinations and “mistakes” are not purely failures but potential sources of creative breakthroughs, similar to AlphaGo’s Move 37. If AI systems are overly constrained to consensus truth or authority-approved outputs, they risk losing the capacity for novel insight, suggesting that future progress depends on balancing correctness with exploratory freedom.
7. The next phase of decentralization may begin with sovereign countries before sovereign individuals, as AI enables smaller nations to reason from first principles in areas like medicine, regulation, and science. Rather than a collapse into chaos, this points toward a more pluralistic world where power, knowledge, and decision-making are distributed across many competing systems instead of centralized authorities.
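
To make the episode's point about immutability and “spooky action at a distance” concrete, here is a small Python sketch. The episode itself discusses Elixir; Python is used here only for illustration, and the `Post` class and both `add_tag` helpers are hypothetical names made up for this example.

```python
from dataclasses import dataclass, replace


def add_tag_mutating(tags: list, tag: str) -> list:
    """Mutable style: appends in place, so every alias of the list changes."""
    tags.append(tag)
    return tags


@dataclass(frozen=True)
class Post:
    title: str
    tags: tuple = ()


def add_tag(post: Post, tag: str) -> Post:
    """Immutable style: returns a new Post and leaves the original untouched."""
    return replace(post, tags=post.tags + (tag,))


# Hidden coupling: changing `alias` silently changes `shared` as well.
shared = ["draft"]
alias = shared
add_tag_mutating(alias, "urgent")

# Explicit data flow: the only way to see the new tag is to use the returned value.
original = Post("Hello", ("draft",))
updated = add_tag(original, "urgent")
```

After this runs, `shared` holds both tags even though the code never touched it by name, while `original` still has only `"draft"`. The second style makes every state change visible at the call site, which is the property the conversation credits with being easier for both people and AI tools to reason about.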

In this episode of the Crazy Wisdom podcast, host Stewart Alsop talks with Umair Siddiqui about a wide range of interconnected topics spanning plasma physics, aerospace engineering, fusion research, and the philosophy of building complex systems. The conversation draws on Umair’s path from hands-on plasma experiments and nonlinear physics to founding and scaling RF plasma thrusters for small satellites at Phase Four. Along the way they discuss how plasmas behave at material boundaries, why theory often breaks in real-world systems, how autonomous spacecraft propulsion actually works, what space radiation does to electronics and biology, the practical limits and promise of AI in scientific discovery, and why starting with simple, analog approaches before adding automation is critical in both research and manufacturing, grounding big ideas in concrete engineering experience. You can find Umair on LinkedIn.

Check out this GPT we trained on the conversation

Timestamps
00:00 Opening context and plasma rockets, early interests in space, cars, airplanes
05:00 Academic path into space plasmas, mechanical engineering, and hands-on experiments
10:00 Grad school focus on plasma physics, RF helicon sources, and nonlinear theory limits
15:00 Bridging fusion research and space propulsion, Department of Energy funding context
20:00 Spin-out to Phase Four, building CubeSat RF plasma thrusters and real hardware
25:00 Autonomous propulsion systems, embedded controllers, and spacecraft fault handling
30:00 Radiation in space, single-event upsets, redundancy vs rad-hard electronics
35:00 Analog-first philosophy, mechanical thinking, and resisting premature automation
40:00 AI in science, low vs high hanging fruit, automation of experiments and insight
45:00 Manufacturing philosophy, incremental scaling, lessons from Elon Musk and production
50:00 Science vs engineering, concentration of effort, power, and progress in discovery

Key Insights
1. One of the central insights of the episode is that plasma physics sits at the intersection of many domains (fusion energy, space environments, and spacecraft propulsion), and progress often comes from working directly at those boundaries. Umair Siddiqui emphasizes that studying how plasmas interact with materials and magnetic fields revealed where theory breaks down, not because the math is sloppy, but because plasmas are deeply nonlinear systems where small changes can produce outsized effects.
2. The conversation highlights how hands-on experimentation is essential to real understanding. Building RF plasma sources, diagnostics, and thrusters forced constant confrontation with reality, showing that models are only approximations. This experimental grounding allowed insights from fusion research to transfer unexpectedly into practical aerospace applications like CubeSat propulsion, bridging fields that rarely talk to each other.
3. A key takeaway is the difference between science and engineering as intent, not method. Science aims to understand, while engineering aims to make something work, but in practice they blur. Developing space hardware required scientific discovery along the way, demonstrating that companies can and often must do real science to achieve ambitious engineering goals.
4. Umair articulates a strong philosophy of analog-first thinking, arguing that keeping systems simple and mechanical for as long as possible preserves clarity. Premature digitization or automation can obscure understanding, consume mental bandwidth, and even lock in errors before the system is well understood.
5. The episode offers a grounded view of automation and AI in science, framing it in terms of low- versus high-hanging fruit. AI excels at exploring large parameter spaces and finding optima, but humans are still needed to judge physical plausibility, interpret results, and set meaningful directions.
6. Space engineering reveals harsh realities about radiation, cosmic rays, and electronics, where a single particle can flip a bit or destroy a transistor. This drives design trade-offs between radiation-hardened components and redundant systems, reinforcing how environment fundamentally shapes engineering decisions.
7. Finally, the discussion suggests that scientific and technological progress accelerates with concentrated focus and resources. Whether through governments, institutions, or individuals, periods of rapid advancement tend to follow moments where attention, capital, and intent are sharply aligned rather than diffusely spread.
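
One common way to build the kind of redundancy mentioned above is triple modular redundancy with majority voting. The episode summary does not say which scheme Phase Four uses, so the sketch below is only a generic illustration of masking a single bit flip; `majority_vote` and the sample values are assumptions made for this example.

```python
def majority_vote(a: int, b: int, c: int) -> int:
    """Bitwise majority: each output bit takes the value held by at least two copies."""
    return (a & b) | (a & c) | (b & c)


# Keep three copies of the same stored word.
stored = 0b10110010
copies = [stored, stored, stored]

# Simulate a single-event upset flipping bit 3 in one copy.
copies[1] ^= 1 << 3

# The voter masks the error as long as only one copy is corrupted per bit.
recovered = majority_vote(*copies)
```

The trade-off is the one the episode describes: three cheaper parts plus a voter instead of one radiation-hardened part. This scheme does not help if two copies are hit in the same bit position, or if the voter itself fails.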

In this episode of Crazy Wisdom, Stewart Alsop sits down with Javier Villar for a wide-ranging conversation on Argentina, Spain’s political drift, fiat money, the psychology of crowds, Dr. Hawkins’ levels of consciousness, the role of elites and intelligence agencies, spiritual warfare, and whether modern technology accelerates human freedom or deepens control. Javier speaks candidly about symbolism, the erosion of sovereignty, the pandemic as a global turning point, and how spiritual frameworks help make sense of political theater.

Check out this GPT we trained on the conversation

Timestamps
00:00 Stewart and Javier compare Argentina and Spain, touching on cultural similarity, Argentinization, socialism, and the slow collapse of fiat systems.
05:00 They explore Brave New World conditioning, narrative control, traditional Catholics, and the psychology of obedience in the pandemic.
10:00 Discussion shifts to Milei, political theater, BlackRock, Vanguard, mega-corporations, and the illusion of national sovereignty under a single world system.
15:00 Stewart and Javier examine China, communism, spiritual structures, karmic cycles, Kali Yuga, and the idea of governments at war with their own people.
20:00 They move into Revelations, Hawkins, calibrations, conspiracy labels, satanic vs luciferic energy, and elites using prophecy as a script.
25:00 Conversation deepens into ego vs Satan, entrapment networks, Epstein Island, Crowley, Masonic symbolism, and spiritual corruption.
30:00 They question secularism, the state as religion, technology, AI, surveillance, freedom of currency, and the creative potential suppressed by government.
35:00 Ending with Bitcoin, stablecoins, network-state ideas, U.S. power, Argentina’s contradictions, and whether optimism is still warranted.

Key Insights
1. Argentina and Spain mirror each other’s decline. Javier argues that despite surface differences, both countries share cultural instincts that make them vulnerable to the same political traps, particularly the expansion of the welfare state, the erosion of sovereignty, and what he calls the “Argentinization” of Spain. This framing turns the episode into a study of how nations repeat each other’s mistakes.
2. Fiat systems create a controlled collapse rather than a dramatic one. Instead of Weimar-style hyperinflation, Javier claims modern monetary structures are engineered to “boil the frog,” preserving the illusion of stability while deepening dependency on the state. This slow-motion decline is portrayed as intentional rather than accidental.
3. Political leaders are actors within a single global architecture of power. Whether discussing Milei, Trump, or European politics, Javier maintains that governments answer to mega-corporations and intelligence networks, not citizens. National politics, in this view, is theater masking a unified global managerial order.
4. Pandemic behavior revealed mass submission to narrative control. Stewart and Javier revisit 2020 as a psychological milestone, arguing that obedience to lockdowns and mandates exposed a widespread inability to question authority. For Javier, this moment clarified who can perceive truth and who collapses under social pressure.
5. Hawkins’ map of consciousness shapes their interpretation of good and evil. They use the 200 threshold to distinguish animal from angelic behavior, exploring whether ego itself is the “Satanic” force. Javier suggests Hawkins avoided explicit talk of Satan because most people cannot face metaphysical truth without defensiveness.
6. Elites rely on symbolic power, secrecy, and coercion. References to Epstein Island, Masonic symbolism, and intelligence-agency entrapment support Javier’s view that modern control systems operate through sexual blackmail, ritual imagery, and hidden hierarchies rather than democratic mechanisms.
7. Technology’s promise is strangled by state power. While Stewart sees potential in AI, crypto, and network-state ideas, Javier insists innovation is meaningless without freedom of currency, association, and exchange. Technology is neutral, he argues, but becomes a tool of surveillance and control when monopolized by governments.

In this episode of Crazy Wisdom, I, Stewart Alsop, sit down with Garrett Dailey for a wide-ranging conversation that moves from the mechanics of persuasion and why the best pitches work by attraction rather than pressure, to the nature of AI as a pattern tool rather than a mind, to power cycles, meaning-making, and the fracturing of modern culture. Garrett draws on philosophy, psychology, strategy, and his own background in storytelling to unpack ideas around narrative collapse, the chaos–order split in human cognition, the risk of “AI one-shotting,” and how political and technological incentives shape the world we're living through. You can find the tweet Stewart mentions in this episode here. Also, follow Garrett Dailey on Twitter at @GarrettCDailey, or find more of his pitch-related work on LinkedIn.

Check out this GPT we trained on the conversation

Timestamps
00:00 Garrett opens with persuasion by attraction, storytelling, and why pitches fail with force.
05:00 We explore gravity as metaphor, the opposite of force, and the “ring effect” of a compelling idea.
10:00 AI as tool not mind; creativity, pattern prediction, hype cycles, and valuation delusions.
15:00 Limits of LLMs, slopification, recursive language drift, and cultural mimicry.
20:00 One-shotting, psychosis risk, validation-seeking, consciousness vs prediction.
25:00 Order mind vs chaos mind, solipsism, autism–schizophrenia mapping, epistemology.
30:00 Meaning, presence, Zen, cultural fragmentation, shared models breaking down.
35:00 U.S. regional culture, impossibility of national unity, incentives shaping politics.
40:00 Fragmentation vs reconciliation, markets, narratives, multipolarity, Dune archetypes.
45:00 Patchwork age, decentralization myths, political fracturing, libertarian limits.
50:00 Power as zero-sum, tech-right emergence, incentives, Vance, Yarvin, empire vs republic.
55:00 Cycles of power, kyklos, democracy’s decay, design-by-committee, institutional failure.

Key Insights
1. Persuasion works best through attraction, not pressure. Garrett explains that effective pitching isn’t about forcing someone to believe you; it’s about creating a narrative gravity so strong that people move toward the idea on their own. This reframes persuasion from objection-handling into desire-shaping, a shift that echoes through sales, storytelling, and leadership.
2. AI is powerful precisely because it’s not a mind. Garrett rejects the “machine consciousness” framing and instead treats AI as a pattern amplifier: extraordinarily capable when used as a tool, but fundamentally limited in generating novel knowledge. The danger arises when humans project consciousness onto it and let it validate their insecurities.
3. Recursive language drift is reshaping human communication. As people unconsciously mimic LLM-style phrasing, AI-generated patterns feed back into training data, accelerating a cultural “slopification.” This becomes a self-reinforcing loop where originality erodes, and the machine’s voice slowly colonizes the human one.
4. The human psyche operates as a tension between order mind and chaos mind. Garrett’s framework maps autism and schizophrenia as pathological extremes of this duality, showing how prediction and perception interact inside consciousness, and why AI, which only simulates chaos-mind prediction, can never fully replicate human knowing.
5. Meaning arises from presence, not abstraction. Instead of obsessing over politics, geopolitics, or distant hypotheticals, Garrett argues for a Zen-like orientation: do what you're doing, avoid what you're not doing. Meaning doesn’t live in narratives about the future; it lives in the task at hand.
6. Power follows predictable cycles, and America is deep in one. Borrowing from the Greek kyklos, Garrett frames the U.S. as moving from aristocracy toward democracy’s late-stage dysfunction: populism, fragmentation, and institutional decay. The question ahead is whether we’re heading toward empire or collapse.
7. Decentralization is entropy, not salvation. Crypto dreams of DAOs and patchwork societies ignore the gravitational pull of power. Systems fragment as they weaken, but eventually a new center of order emerges. The real contest isn’t decentralization vs. centralization; it’s who will have the coherence and narrative strength to recentralize the pieces.

In this episode of Crazy Wisdom, Stewart Alsop talks with Aaron Lowry about the shifting landscape of attention, technology, and meaning, moving through themes like treasure-hunt metaphors for human cognition, relevance realization, the evolution of observational tools, decentralization, blockchain architectures such as Cardano, sovereignty in computation, the tension between scarcity and abundance, bioelectric patterning inspired by Michael Levin’s research, and the broader cultural and theological currents shaping how we interpret reality. You can follow Aaron’s work and ongoing reflections on X at aaron_lowry.

Check out this GPT we trained on the conversation

Timestamps
00:00:00 Stewart and Aaron open with the treasure-hunt metaphor, salience landscapes, and how curiosity shapes perception.
00:05:00 They explore shifting observational tools, Hubble vs James Webb, and how data reframes what we think is real.
00:10:00 The conversation moves to relevance realization, missing “Easter eggs,” and the posture of openness.
00:15:00 Stewart reflects on AI, productivity, and feeling pulled deeper into computers instead of freed from them.
00:20:00 Aaron connects this to monetary policy, scarcity, and technological pressure.
00:25:00 They examine voice interfaces, edge computing, and trust vs convenience.
00:30:00 Stewart shares experiments with Raspberry Pi, self-hosting, and escaping SaaS dependence.
00:35:00 They discuss open-source, China’s strategy, and the economics of free models.
00:40:00 Aaron describes building hardware–software systems and sensor-driven projects.
00:45:00 They turn to blockchain, UTXO vs account-based, node sovereignty, and Cardano.
00:50:00 Discussion of decentralized governance, incentives, and transparency.
00:55:00 Geopolitics enters: BRICS, dollar reserve, private credit, and institutional fragility.
01:00:00 They reflect on the meaning crisis, gnosticism, reductionism, and shattered cohesion.
01:05:00 Michael Levin, bioelectric patterning, and vertical causation open new biological and theological frames.
01:10:00 They explore consciousness as fundamental, Stephen Wolfram, and the limits of engineered solutions.
01:15:00 Closing thoughts on good-faith orientation, societal transformation, and the pull toward wilderness.

Key Insights
1. Curiosity restructures perception. Aaron frames reality as something we navigate more like a treasure hunt than a fixed map. Our “salience landscape” determines what we notice, and curiosity, not rigid frameworks, keeps us open to signals we would otherwise miss. This openness becomes a kind of existential skill, especially in a world where data rarely aligns cleanly with our expectations.
2. Our tools reshape our worldview. Each technological leap, from Hubble to James Webb, doesn’t just increase resolution; it changes what we believe is possible. Old models fail to integrate new observations, revealing how deeply our understanding depends on the precision and scope of our instruments.
3. Technology increases pressure rather than reducing it. Even as AI boosts productivity, Stewart notices it pulling him deeper into computers. Aaron argues this is systemic: productivity gains don’t free us; they raise expectations, driven by monetary policy and a scarcity-based economic frame.
4. Digital sovereignty is becoming essential. The conversation highlights the tension between convenience and vulnerability. Cloud-based AI creates exposure vectors into personal life, while running local hardware (Raspberry Pis, custom Linux systems) restores autonomy but requires effort and skill.
5. Blockchain architecture determines decentralization. Aaron emphasizes the distinction between UTXO and account-based systems, arguing that UTXO architectures (Bitcoin, Cardano) support verifiable edge participation, while account-based chains accumulate unwieldy state and centralize validation over time.
6. Institutional trust is eroding globally. From BRICS currency moves to private credit schemes, both note how geopolitical maneuvers signal institutional fragility. The “few men in a room” dynamic persists, but now under greater stress, driving more people toward decentralization and self-reliance.
7. Biology may operate on deeper principles than genes. Michael Levin’s work on bioelectric patterning opens the door to “vertical causation”: higher-level goals shaping lower-level processes. This challenges reductionism and hints at a worldview where consciousness, meaning, and biological organization may be intertwined in ways neither materialism nor traditional theology fully capture.

In this conversation, Stewart Alsop sits down with Ken Lowry to explore a wide sweep of themes running through Christianity, Protestant vs. Catholic vs. Orthodox traditions, the nature of spirits and telos, theosis and enlightenment, information technology, identity, privacy, sexuality, the New Age “Rainbow Bridge,” paganism, Buddhism, Vedanta, and the unfolding meaning crisis; listeners who want to follow more of Ken’s work can find him on his YouTube channel Climbing Mount Sophia and on Twitter under KenLowry8.
Check out this GPT we trained on the conversation
Timestamps
00:00 Christianity’s tangled history surfaces as Stewart Alsop and Ken Lowry unpack Luther, indulgences, mediation, and the printing-press information shift.
05:00 Luther’s encounters with the devil lead into talk of perception, hallucination, and spiritual influence on “main-character” lives.
10:00 Protestant vs. Catholic vs. Orthodox worship styles highlight telos, Eucharist, liturgy, embodiment, and teaching as information.
15:00 The Church as a living spirit emerges, tied to hierarchy, purpose, and Michael Levin’s bioelectric patterns shaping form.
20:00 Spirits, goals, Dodgers-as-spirit, and Christ as the highest ordering spirit frame meaning and participation.
25:00 Identity, self, soul, privacy, intimacy, and the internet’s collapse of boundaries reshape inner life.
30:00 New Age, Rainbow Bridge, Hawkins’ calibration, truth-testing, and spiritual discernment enter the story.
35:00 Stewart’s path back to Christianity opens discussion of enlightenment, Protestant legalism, Orthodox theosis, and healing.
40:00 Emptiness, relationality, Trinity, and personhood bridge Buddhism and mystical Christianity.
45:00 Suffering, desire, higher spirits, and orientation toward the real sharpen the contrast between simulation and reality.
50:00 Technology, bodies, AI, and simulated worlds raise questions of telos, meaning, and modern escape.
55:00 Neo-paganism, Hindu hierarchy of gods, Vedanta, and the need for a personal God lead toward Jesus as historical revelation.
01:00:00 Buddha, enlightenment, theosis, the post-1945 world, Hitler as negative pole, and goodness as purpose close the inquiry.
Key Insights
Mediation and information shape the Church. Ken Lowry highlights how the printing press didn’t just spread ideas—it restructured Christian life by shifting mediation. Once information became accessible, individuals became the “interface” with Christ, fundamentally changing Protestant, Catholic, and Orthodox trajectories and the modern crisis of religious choice.
The Protestant–Catholic–Orthodox split hinges on telos. Protestantism orients the service around teaching and information, while Catholic and Orthodox traditions culminate in the Eucharist, embodiment, and liturgy. This difference expresses two visions of what humans are doing in church: receiving ideas or participating in a transformative ritual that shapes the whole person.
Spirits, telos, and hierarchy offer a map of reality. Ken frames spirits as real intelligible goals that pull people into coordinated action—seen as clearly in a baseball team as in a nation. Christ is the highest spirit because aiming toward Him properly orders all lower goals, giving a coherent vertical structure to meaning.
Identity, privacy, and intimacy have transformed under the internet. The shift from soul → self → identity tracks changes in information technology. The internet collapses boundaries, creating unprecedented exposure while weakening the inherent privacy of intimate realities such as genuine lovemaking, which Ken argues can’t be made public without destroying its nature.
New Age influences and Hawkins’ calibration reflect a search for truth. Stewart’s encounters with the Rainbow Bridge world, David Hawkins’ muscle-testing epistemology, and the escape from scientistic secularism reveal a cultural hunger for spiritual discernment in the absence of shared metaphysical grounding.
Enlightenment and theosis may be the same mountain. Ken suggests that Buddhist enlightenment and Orthodox theosis aim at the same transformative reality: full communion with what is most real. The difference lies in Jesus as the concrete, personal revelation of God, offering a relational path rather than pure negation or emptiness.
Secularism is shaped by a powerfully negative telos. Ken argues that the modern world orients itself not toward the Good revealed in Christ but away from the Evil revealed in Hitler. Moving away from evil as a primary aim produces confusion, because only a positive vision of the Good can order desires, technology, suffering, and the overwhelming power of modern simulations.

On this episode of Crazy Wisdom, I, Stewart Alsop, sit down with Dax Raad, co-founder of OpenCode, for a wide-ranging conversation about open-source development, command-line interfaces, the rise of coding agents, how LLMs change software workflows, the tension between centralization and decentralization in tech, and even what it’s like to push the limits of the terminal itself. We talk about the future of interfaces, fast-feedback programming, model switching, and why open-source momentum—especially from China—is reshaping the landscape. You can find Dax on Twitter and check an example of what can be done using OpenCode in this tweet.
Check out this GPT we trained on the conversation
Timestamps
00:00 Stewart Alsop and Dax Raad open with the origins of OpenCode, the value of open source, and the long-tail problem in coding agents.
05:00 They explore why command line interfaces keep winning, the universality of the terminal, and early adoption of agentic workflows.
10:00 Dax explains pushing the terminal with TUI frameworks, rich interactions, and constraints that improve UX.
15:00 They contrast CLI vs. chat UIs, discuss voice-driven reviews, and refining prompt-review workflows.
20:00 Dax lays out fast feedback loops, slow vs. fast models, and why autonomy isn’t the goal.
25:00 Conversation turns to model switching, open-source competitiveness, and real developer behavior.
30:00 They examine inference economics, Chinese open-source labs, and emerging U.S. efforts.
35:00 Dax breaks down incumbents like Google and Microsoft and why scale advantages endure.
40:00 They debate centralization vs. decentralization, choice, and the email analogy.
45:00 Stewart reflects on building products; Dax argues for healthy creative destruction.
50:00 Hardware talk emerges—Raspberry Pi, robotics, and LLMs as learning accelerators.
55:00 Dax shares insights on terminal internals, text-as-canvas rendering, and the elegance of the medium.
Key Insights
Open source thrives where the long tail matters. Dax explains that OpenCode exists because coding agents must integrate with countless models, environments, and providers. That complexity naturally favors open source, since a small team can’t cover every edge case—but a community can. This creates a collaborative ecosystem where users meaningfully shape the tool.
The command line is winning because it’s universal, not nostalgic. Many misunderstand the surge of CLI-based AI tools, assuming it’s aesthetic or retro. Dax argues it’s simply the easiest, most flexible, least opinionated surface that works everywhere—from enterprise laptops to personal dev setups—making adoption frictionless.
Terminal interfaces can be richer than assumed. The team is pushing TUI frameworks far beyond scrolling text, introducing mouse support, dialogs, hover states, and structured interactivity. Despite constraints, the terminal becomes a powerful “text canvas,” capable of UI complexity normally reserved for GUIs.
Fast feedback loops beat “autonomous” long-running agents. Dax rejects the trend of hour-long AI tasks, viewing it as optimizing around model slowness rather than user needs. He prefers rapid iteration with faster models, reviewing diffs continuously, and reserving slower models only when necessary.
Open-source LLMs are improving quickly—and economics matter. Many open models now approach the quality of top proprietary systems while being far cheaper and faster to serve. Because inference is capital-intensive, competition pushes prices down, creating real incentives for developers and companies to reconsider model choices.
Centralization isn’t the enemy—lack of choice is. Dax frames the landscape like email: centralized providers dominate through convenience and scale, but the open protocols underneath protect users’ ability to choose alternatives. The real danger is ecosystems where leaving becomes impossible.
LLMs dramatically expand what individuals can learn and build. Both Stewart and Dax highlight that AI enables people to tackle domains previously too opaque or slow to learn—from terminal internals to hardware tinkering. This accelerates creativity and lowers barriers, shifting agency back to small teams and individuals.

In this episode of Crazy Wisdom, I, Stewart Alsop, sit down with Argentine artist Mathilda Martin to explore the intimate connection between creativity, flow, and authenticity—from how swimming mirrors painting, to why art can heal, and what makes human-made art irreplaceable in the age of AI. We also touch on Argentina’s vibrant art scene, the shift in the art world after COVID, and the fine line between commercial and soulful creation. You can find Mathilda’s work on Instagram at @arte_mathilda.
Check out this GPT we trained on the conversation
Timestamps
00:00 – Mathilda Martin joins Stewart Alsop to talk about art, creativity, and her upcoming exhibitions in Miami and Uruguay.
05:00 – She shares how swimming connects to painting, describing water as calm, presence, and a source of flow and meditation.
10:00 – They discuss art as therapy, childhood creativity, and overcoming fear by simply starting to create.
15:00 – Mathilda reflects on her love for Van Gogh and feeling as the essence of authentic art, contrasting it with the coldness of AI.
20:00 – The conversation turns to the value of human-made art and whether galleries can tell the difference between AI and real artists.
25:00 – They explore Argentine authenticity, “chantas,” and what makes Argentina both chaotic and deeply real.
30:00 – Mathilda talks about solidarity, community, and daily life in Buenos Aires amid political and economic instability.
35:00 – She highlights Argentine muralists and how collaboration and scale transform artistic expression.
40:00 – The pair discuss the commercialization of art, the “factory artist,” and staying true to feeling over fame.
45:00 – Mathilda explains how COVID reshaped the art world, empowering independent artists to exhibit without galleries.
50:00 – They end with art markets in Argentina vs. the U.S., her gallery in New York, and upcoming shows at Spectrum Miami and Punta del Este.
Key Insights
Art and Water Share the Same Flow: Mathilda Martin reveals how swimming and painting both bring her into a meditative state she calls “the pause.” In the water, she feels the same stillness she experiences while painting — a total immersion in the present moment where the outside world disappears.
Art Is a Form of Healing: Mathilda emphasizes that art is not just expression but medicine. She references the World Health Organization’s recognition that creativity benefits mental and physical health, describing painting as a space of emotional regulation and clarity.
Human-Made Art Has Soul, AI Doesn’t: One of the episode’s most thought-provoking moments comes when Mathilda contrasts the warmth of human-made art with the cold precision of AI. She believes that while AI can replicate technique, it can’t replicate feeling — and that collectors will always value art infused with human emotion.
Authenticity Defines Argentine Culture: Mathilda paints a vivid picture of Argentina as a land of contradictions — full of chaos, charm, and honesty. Argentines, she says, are “authentic, sometimes too direct,” a quality that shapes both their relationships and their art.
COVID-19 Changed the Art World Forever: The pandemic disrupted the old gallery system and gave artists freedom to organize their own exhibitions. For Mathilda, this shift created independence, even if it also demanded new entrepreneurial skills.
Commercial Success vs. Soulful Creation: Mathilda critiques “factory artists” who mass-produce work for fame or profit, contrasting them with artists who create from genuine emotion. The real challenge, she says, is maintaining authenticity in a system that rewards volume over vulnerability.
Art as Connection and Presence: Beyond skill or aesthetics, Mathilda believes true art is about human connection — between artist, viewer, and the moment of creation itself. Whether painting, swimming, or teaching workshops, she views art as an ongoing conversation with life’s deeper flow.

On this episode of Crazy Wisdom, Stewart Alsop sits down with Guillermo Schulte to explore how AI is reshaping up-skilling, re-skilling, and the future of education through play, from learning games and gamification to emotional intelligence, mental health, and the coming wave of abundance and chaos that technology is accelerating; they also get into synchronous vs. asynchronous learning, human–AI collaboration, and how organizations can use data-driven game experiences for cybersecurity, onboarding, and ongoing training. To learn more about Guillermo’s work, check out TGAcompany.com, as well as TGA Entertainment on Instagram and LinkedIn.
Check out this GPT we trained on the conversation
Timestamps
00:00 Stewart Alsop opens with Guillermo Schulte on up-skilling, re-skilling, and AI’s accelerating impact on work.
05:00 They explore play-based learning, video games as education, and early childhood engagement through game mechanics.
10:00 Conversation shifts to the overload in modern schooling, why play disappeared, and the challenge of scalable game-based learning.
15:00 Guillermo contrasts synchronous vs asynchronous learning and how mobile access democratizes education.
20:00 They reflect on boredom, creativity, novelty addiction, and how AI reshapes attention and learning.
25:00 Discussion moves to AGI speculation, human discernment, taste, and embodied decision-making.
30:00 They explore unpredictable technological leaps, exponential improvement, and the future of knowledge.
35:00 Abundance, poverty decline, and chaos—both from scarcity and prosperity—and how societies adapt.
40:00 Mental health, emotional well-being, and organizational responsibility become central themes.
45:00 Technical training through games emerges: cybersecurity, Excel, and onboarding with rich data insights.
50:00 Guillermo explains the upcoming platform enabling anyone to create AI-powered learning games and personalized experiences.
Key Insights
AI is accelerating the urgency of up-skilling and re-skilling. Guillermo highlights how rapid technological change is transforming every profession, making continuous learning essential for remaining employable and adding value in a world where machines increasingly handle routine tasks.
Play is humanity’s native learning tool—and video games unlock it for adults. He explains that humans are wired to learn through play, yet traditional education suppresses this instinct. Learning games reintroduce engagement, emotion, and curiosity, making education more intuitive and scalable.
Gamified, asynchronous learning can democratize access. While synchronous interaction is powerful, Guillermo emphasizes that mobile-first, game-based learning allows millions—including those without resources—to gain skills anytime, closing gaps in opportunity and meritocracy.
Emotional intelligence will matter more as AI takes over technical tasks. As AI becomes increasingly capable in logic-heavy fields, human strengths like empathy, leadership, creativity, and relationship-building become central to meaningful work and personal fulfillment.
Novelty and boredom shape how we learn and think. They discuss how constant novelty can stunt creativity, while boredom creates the mental space for insight. Future learning systems will need to balance stimulation with reflection to avoid cognitive overload.
Abundance will bring psychological challenges alongside material benefits. Stewart and Guillermo point out that while AI and robotics may create unprecedented prosperity, they may also destabilize identity and purpose, amplifying the already-growing mental health crisis.
AI-powered game creation could redefine education entirely. Guillermo describes TGA’s upcoming platform that lets anyone transform documents into personalized learning games, using player data to adapt difficulty and style—potentially making learning more effective, accessible, and enjoyable than traditional instruction.

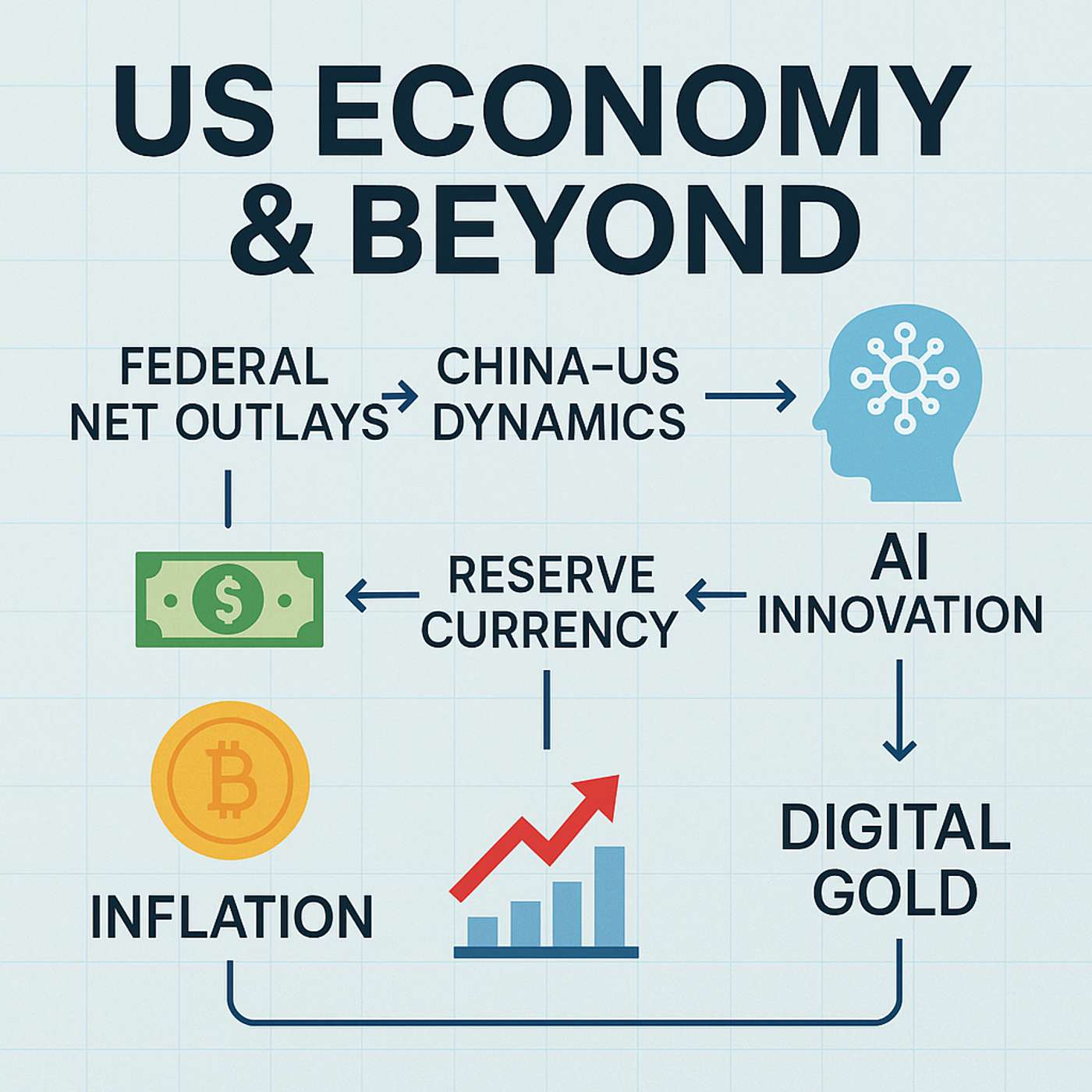

On this episode of Crazy Wisdom, Stewart Alsop sits down with Terrence Yang to explore the US economy through the lens of federal net outlays, inflation, and growth, moving into China–US economic and military dynamics, the role of the dollar as a reserve currency, and how China’s industrial and open-source AI strategies intersect with US innovation; they also get into Bitcoin’s governance, Bitcoin Core maintainers, and what long-term digital scarcity means for money, security, and decentralization. To learn more about Terrence’s work, you can find him on LinkedIn.
Check out this GPT we trained on the conversation
Timestamps
00:00 Stewart and Terrence open with the US economy, federal net outlays, and why confidence matters more than doom narratives.
05:00 They compare debt-to-GDP, discuss budget surpluses, and how the US once grew out of large debt after WWII.
10:00 Terrence explains recurring revenue vs. one-time income, taxes, tariffs, and why sustainable growth is essential.
15:00 Conversation turns to China’s strategy, industrial buildup, rare earths, and provincial debt vs. national positioning.
20:00 They explore military power, aircraft carriers, nuclear subs, and how hard power supports reserve currency status.
25:00 Discussion of AI competition among Google, OpenAI, Claude, and China’s push for open-source standards.
30:00 Terrence raises concerns about open-source trust, model weights, and parallels with Bitcoin Core governance.
35:00 They examine maintainers, consensus rules, and how decentralization actually works in practice.
40:00 Terrence highlights Bitcoin as digital gold, its limits as money, and why volatility shapes adoption.
45:00 They close on unit of account, long-term holding strategies, and risks of panic selling during cycles.
Key Insights
Federal net outlays reveal the real fiscal picture. Terrence Yang emphasizes that looking only at debt-to-GDP misses the deeper issue: the U.S. has run negative net outlays—more cash going out than coming in—for decades. He argues that sustainable recurring revenue, not one-time windfalls or asset sales, is what ultimately stabilizes a nation’s finances.
Confidence is an economic force of its own. Terrence warns that cultural pessimism can damage the U.S. more than high debt. Drawing parallels to Japan’s post-1990 stagnation, he notes that when people stop taking risks, innovation slows and economies ossify. The U.S. thrives on risk-taking, immigration, and entrepreneurial experimentation—and needs to preserve that spirit.
Inflation and growth are locked in a difficult balance. The conversation explores how current inflation remains above target while growth feels sluggish, creating a quasi-stagflation environment. Terrence questions whether the Federal Reserve should remain tied to a 2% target or adapt to new conditions, particularly when jobs and productivity remain uneven.
China’s economic strategy is broad, deliberate, and deeply practical. From inviting Western VCs in the 1990s to absorbing semiconductor know-how and refining rare earth materials, China built an industrial base that now rivals or surpasses U.S. manufacturing in many domains. Yet its provincial and real-estate debt highlight structural weaknesses beneath the surface.
The U.S. dollar’s dominance rests on military and institutional power. Terrence argues that reserve-currency status persists because the U.S. guarantees open trade routes and global security. Even countries with weak currencies prefer the dollar in black markets. Competitors like BRICS may want an alternative system, but replacing the dollar requires decades, not years.
Open-source AI is becoming a geopolitical tool. China’s strategy of flooding the world with strong, free, open-source models mirrors Linux’s global influence. Terrence notes that trust and transparency matter, since open-source code still requires knowledgeable maintainers who can verify safety, intentions, and alignment. This dynamic is now a competitive front in the AI race.
Bitcoin governance is both decentralized and fragile. Terrence explains that Bitcoin Core has very few maintainers and relies on a culture of trust, review, and distributed accountability. While Bitcoin works well as long-term “digital gold,” improvements are incremental, and the small number of developers poses systemic risks. He stresses that understanding governance—not just price—is crucial for anyone serious about Bitcoin’s future.

In this episode of Crazy Wisdom, host Stewart Alsop talks with Kevin Smith, co-founder of Snipd, about how AI is reshaping the way we listen, learn, and interact with podcasts. They explore Snipd’s vision of transforming podcasts into living knowledge systems, the evolution of machine learning from finance to large language models, and the broader connection between AI, robotics, and energy as the foundation for the next technological era. Kevin also touches on ideas like the bitter lesson, reinforcement learning, and the growing energy demands of AI. Listeners can try Snipd’s premium version free for a month using this promo link.
Check out this GPT we trained on the conversation
Timestamps
00:00 – Stewart Alsop welcomes Kevin Smith, co-founder of Snipd, to discuss AI, podcasting, and curiosity-driven learning.
05:00 – Kevin explains Snipd’s snipping feature, chatting with episodes, and future plans for voice interaction with podcasts.
10:00 – They discuss vector search, embeddings, and context windows, comparing full-episode context to chunked transcripts.
15:00 – Kevin shares his background in mathematics and economics, his shift from finance to machine learning, and early startup work in AI.
20:00 – They explore early quant models versus modern machine learning, statistical modeling, and data limitations in finance.
25:00 – Conversation turns to transformer models, pretraining, and the bitter lesson—how compute-based methods outperform human-crafted systems.
30:00 – Stewart connects this to RLHF, Scale AI, and data scarcity; Kevin reflects on reinforcement learning’s future.
35:00 – They pivot to Snipd’s podcast ecosystem, hidden gems like Founders Podcast, and how stories shape entrepreneurial insight.
40:00 – ETH Zurich, robotics, and startup culture come up, linking academia to real-world innovation.
45:00 – They close on AI, robotics, and energy as the pillars of the future, debating nuclear and solar power’s role in sustaining progress.
Key Insights
Podcasts as dynamic knowledge systems: Kevin Smith presents Snipd as an AI-powered tool that transforms podcasts into interactive learning environments. By allowing listeners to “snip” and summarize meaningful moments, Snipd turns passive listening into active knowledge management—bridging curiosity, memory, and technology in a way that reframes podcasts as living knowledge capsules rather than static media.
AI transforming how we engage with information: The discussion highlights how AI enables entirely new modes of interaction—chatting directly with podcast episodes, asking follow-up questions, and contextualizing information across an author’s full body of work. This evolution points toward a future where knowledge consumption becomes conversational and personalized rather than linear and one-size-fits-all.
Vectorization and context windows matter: Kevin explains that Snipd currently avoids heavy use of vector databases, opting instead to feed entire episodes into large models. This choice enhances coherence and comprehension, reflecting how advances in context windows have reshaped how AI understands complex audio content.
Machine learning’s roots in finance shaped early AI thinking: Kevin’s journey from quantitative finance to AI reveals how statistical modeling laid the groundwork for modern learning systems. While finance once relied on rigid, theory-based models, the machine learning paradigm replaced those priors with flexible, data-driven discovery—an essential philosophical shift in how intelligence is approached.
The Bitter Lesson and the rise of compute: Together they unpack Richard Sutton’s “bitter lesson”—the idea that methods leveraging computation and data inevitably surpass those built from human intuition. This insight serves as a compass for understanding why transformers, pretraining, and scaling have driven recent AI breakthroughs.
Reinforcement learning and data scarcity define AI’s next phase: Stewart links RLHF and the work of companies like Scale AI and Surge AI to the broader question of data limits. Kevin agrees that the next wave of AI will depend on reinforcement learning and simulated environments that generate new, high-quality data beyond what humans can label.
The future hinges on AI, robotics, and energy: Kevin closes with a framework for the next decade: AI provides intelligence, robotics applies it to the physical world, and energy sustains it all. He warns that society must shift from fearing energy use to innovating in production—especially through nuclear and solar power—to meet the demands of an increasingly intelligent, interconnected world.

In this episode of Crazy Wisdom, host Stewart Alsop talks with Jessica Talisman, founder of Contextually and creator of the Ontology Pipeline, about the deep connections between knowledge management, library science, and the emerging world of AI systems. Together they explore how controlled vocabularies, ontologies, and metadata shape meaning for both humans and machines, why librarianship has lessons for modern tech, and how cultural context influences what we call “knowledge.” Jessica also discusses the rise of AI librarians, the problem of “AI slop,” and the need for collaborative, human-centered knowledge ecosystems. You can learn more about her work at Ontology Pipeline and find her writing and talks on LinkedIn.
Check out this GPT we trained on the conversation
Timestamps
00:00 Stewart Alsop welcomes Jessica Talisman to discuss Contextually, ontologies, and how controlled vocabularies ground scalable systems.
05:00 They compare philosophy’s ontology with information science, linking meaning, categorization, and sense-making for humans and machines.
10:00 Jessica explains why SQL and Postgres can’t capture knowledge complexity and how neuro-symbolic systems add context and interoperability.
15:00 The talk turns to library science’s split from big data in the 1990s, metadata schemas, and the FAIR principles of findability and reuse.
20:00 They discuss neutrality, bias in corporate vocabularies, and why “touching grass” matters for reconciling internal and external meanings.
25:00 Conversation shifts to interpretability, cultural context, and how Western categorical thinking differs from China’s contextual knowledge.
30:00 Jessica introduces process knowledge, documentation habits, and the danger of outsourcing how-to understanding.
35:00 They explore knowledge as habit, the tension between break-things culture and library design thinking, and early AI experiments.
40:00 Libraries’ strategic use of AI, metadata precision, and the emerging role of AI librarians take focus.
45:00 Stewart connects data labeling, Surge AI, and the economics of good data with Jessica’s call for better knowledge architectures.
50:00 They unpack content lifecycle, provenance, and user context as the backbone of knowledge ecosystems.
55:00 The talk closes on automation limits, human-in-the-loop design, and Jessica’s vision for collaborative consulting through Contextually.
Key Insights
Ontology is about meaning, not just data structure. Jessica Talisman reframes ontology from a philosophical abstraction into a practical tool for knowledge management—defining how things relate and what they mean within systems. She explains that without clear categories and shared definitions, organizations can’t scale or communicate effectively, either with people or with machines.
Controlled vocabularies are the foundation of AI literacy. Jessica emphasizes that building a controlled vocabulary is the simplest and most powerful way to disambiguate meaning for AI. Machines, like people, need context to interpret language, and consistent terminology prevents the “hallucinations” that occur when systems lack semantic grounding.
Library science predicted today’s knowledge crisis. Stewart and Jessica trace how, in the 1990s, tech went down the path of “big data” while librarians quietly built systems of metadata, ontologies, and standards like schema.org. Today’s AI challenges—interoperability, reliability, and information overload—mirror problems library science has been solving for decades.
Knowledge is culturally shaped. Drawing from Patrick Lambe’s work, Jessica notes that Western knowledge systems are category-driven, while Chinese systems emphasize context. This cultural distinction explains why global AI models often miss nuance or moral voice when trained on limited datasets.
Process knowledge is disappearing. The West has outsourced its “how-to” knowledge—what Jessica calls process knowledge—to other countries. Without documentation habits, we risk losing the embodied know-how that underpins manufacturing, engineering, and even creative work.
Automation cannot replace critical thinking. Jessica warns against treating AI as “room service.” Automation can support, but not substitute, human judgment. Her own experience with a contract error generated by an AI tool underscores the importance of review, reflection, and accountability in human–machine collaboration.
Collaborative consulting builds knowledge resilience. Through her consultancy, Contextually, Jessica advocates for “teaching through doing”—helping teams build their own ontologies and vocabularies rather than outsourcing them. Sustainable knowledge systems, she argues, depend on shared understanding, not just good technology.

In this episode of Crazy Wisdom, host Stewart Alsop sits down with Harry McKay Roper, founder of Imaginary Space, for a wide-ranging conversation on space mining, AI-driven software, crypto’s incorruptible potential, and the raw entrepreneurial energy coming out of Argentina. They explore how technologies like Anthropic’s Claude 4.5, programmable crypto protocols, and autonomous agents are reshaping economics, coding, and even law. Harry also shares his experiences building in Buenos Aires and why hunger and resilience define the city’s creative spirit. You can find Harry online at YouTube, Twitter, or Instagram under @HarryMcKayRoper.
Check out this GPT we trained on the conversation
Timestamps
00:00 – Stewart Alsop welcomes Harry McKay Roper from Imaginary Space and they jump straight into space mining, Helium-3, and asteroid gold.
05:00 – They explore how Bitcoin could hold value when space mining floods markets and discuss China, America, and global geopolitics.
10:00 – Conversation shifts to Argentina, its economic scars, cultural resilience, and overrepresentation in startups and crypto.
15:00 – Harry reflects on living in Buenos Aires, poverty, and the city’s constant hustle and creative movement.
20:00 – The focus turns to AI, Claude 4.5, and the rise of autonomous droids and software-building agents.
25:00 – They discuss the collapse of SaaS, internal tools, and Harry’s experiments with AI-generated code and new workflows.
30:00 – Stewart compares China’s industry to America’s software economy, and Harry points to AI, crypto, and space as frontier markets.
35:00 – Talk moves to crypto regulation, incorruptible judges, and blockchain systems like Kleros.
40:00 – They debate AI consciousness, embodiment, and whether a robot could meditate.
45:00 – The episode closes with thoughts on free will, universal verifiers, and a playful prediction market bet on autonomous software.
Key Insights
Space and Economics Are Colliding – Harry McKay Roper opens with the idea that space mining will fundamentally reshape Earth’s economy. The discovery of asteroids rich in gold and other minerals highlights how our notions of scarcity could collapse once space resources become accessible, potentially destroying the terrestrial gold economy and forcing humanity to redefine value itself.
Bitcoin as the New Standard of Value – The conversation naturally ties this to Bitcoin’s finite nature. Stewart Alsop and Harry discuss how the flood of extraterrestrial gold could render traditional stores of value meaningless, while Bitcoin’s coded scarcity could make it the only incorruptible measure of worth in a future of infinite resources.
China and the U.S. in Industrial Tug-of-War – They unpack the geopolitical tension between China’s industrial dominance and America’s financial hegemony. Harry argues the U.S. is waking up from decades of outsourcing, driven by China’s speed in robotics and infrastructure. This dynamic competition, he says, is good: it forces America to build again.
Argentina’s Culture of Hunger and Resilience – Living in Buenos Aires reshaped Harry’s understanding of ambition. He contrasts Argentina’s hunger to survive and create with the complacency of wealthier nations, calling the Argentine spirit one of “movement.” Despite poverty, the city’s creative drive and humor make it a living example of resilience in scarcity.
AI Is Making Custom Software Instant – Harry describes how Claude 4.5 and new AI coding tools like Lovable, Cursor, and GPT Engineer make building internal tools trivial. Instead of using SaaS products, companies can now generate bespoke software in minutes with natural language, signaling the end of traditional software development cycles.
Crypto and AI Will Merge Into Incorruptible Systems – Harry envisions AI agents on-chain acting as unbiased judges or administrators, removing human corruption from law and governance. Real-world tools like Kleros, founded by an Argentine, already hint at this coming era of algorithmic justice and decentralized decision-making.
Consciousness and the Limits of AI – The episode closes on a philosophical note: can a robot meditate or clear its mind? Stewart and Harry question whether AI could ever experience consciousness or free will, suggesting that while AI may mimic thought, the uniquely subjective and embodied nature of human awareness remains beyond automation, for now.

In this episode of Crazy Wisdom, host Stewart Alsop sits down with Cryptogaucho to explore the intersection of artificial intelligence, crypto, and Argentina’s emerging role as a new frontier for innovation and governance. The conversation ranges from OpenAI’s partnership with Sur Energy and the Stargate project to Argentina’s RIGI investment framework, Milei’s libertarian reforms, and the potential of space-based data centers and new jurisdictions beyond Earth. Cryptogaucho also reflects on Argentina’s tech renaissance, its culture of resilience born from hyperinflation, and the rise of experimental communities like Prospera and Noma Collective. Follow him on X at @CryptoGaucho.
Check out this GPT we trained on the conversation
Timestamps
00:00 – Stewart Alsop opens with Cryptogaucho from Mendoza, talking about Argentina, AI, crypto, and the energy around new projects like Sur Energy and Satellogic.
05:00 – They dive into Argentina’s growing space ambitions, spaceport plans, and how jurisdiction could extend “upward” through satellites and data sovereignty.
10:00 – The talk shifts to global regulation, bureaucracy, and why Argentina’s uncertainty may become its strength amid red tape in the US and China.
15:00 – Discussion of OpenAI’s Stargate project, AI infrastructure in Patagonia, and the geopolitical tension between state and private innovation.
20:00 – Cryptogaucho explains the “cepo” currency controls, the black market for dollars, and crypto’s role in preserving economic freedom.
25:00 – They unpack RIGI investment incentives, Argentina’s new economic rules, and efforts to attract major projects like data centers and nuclear reactors.
30:00 – Stewart connects hyperinflation to resilience and abundance in the AI era, while Cryptogaucho reflects on chaos, adaptability, and optimism.
35:00 – The conversation turns philosophical: nation-states, community networks, Prospera, and the rise of new governance models.
40:00 – They explore Argentina’s global position, soft power, and its role as a frontier of Western ideals.
45:00 – Final reflections on AI in space, data centers beyond Earth, and freedom of information as humanity’s next jurisdiction.
Key Insights
Argentina as a new technological frontier: The episode positions Argentina as a nation uniquely situated between chaos and opportunity, a place where political uncertainty and flexible regulation create fertile ground for experimentation. Stewart Alsop and Cryptogaucho argue that this openness, combined with a culture forged in crisis, allows Argentina to become a testing ground for new models of governance, technology, and sovereignty.
The convergence of AI, energy, and geography: OpenAI’s deal with Sur Energy and plans for a data center in Patagonia signal how Argentina’s geography and resources are becoming integral to the global AI infrastructure. Cryptogaucho highlights the symbolic and strategic power of Argentina serving as a “southern node” for the intelligence economy.
Economic reinvention through RIGI: The RIGI framework offers tax and regulatory advantages to major investors, marking a turning point in Argentina’s attempt to attract stable, high-value industries such as server farms, mining, and biotech. It represents a pragmatic balance between libertarian reform and national development.
Crypto and currency freedom: Cryptogaucho recounts how Argentina’s crypto community arose from necessity during hyperinflation and currency controls. Bitcoin and stablecoins became lifelines for developers and entrepreneurs locked out of traditional banking systems, teaching the world about decentralized resilience.
AI abundance and human adaptation: The discussion draws parallels between hyperinflation’s unpredictability and the overwhelming speed of AI progress. Stewart suggests that Argentina’s social adaptability, born from scarcity and instability, may prepare its citizens for a future defined by abundance and rapid technological flux.
Network states and new governance: The conversation explores Prospera, Noma Collective, and the idea of city-scale governance networks. These experiments, blending blockchain, law, and community, are seen as prototypes for post-nation-state organization, where trust and culture matter more than geography.
Space as the next jurisdiction: The episode ends with an exploration of space as a new legal and economic domain. Satellites, data centers, and orbital communication networks could redefine sovereignty, creating “data islands” beyond Earth where information flows freely under new kinds of governance, a vision of humanity’s next frontier.

In this episode of Crazy Wisdom, host Stewart Alsop speaks with Eli Lopian, author of AICracy and founder of aicracy.ai, about how artificial intelligence could transform the way societies govern themselves. They explore the limitations of modern democracy, the idea of AI-guided lawmaking based on fairness and abundance, and how technology might bring us closer to a more participatory, transparent form of governance. The conversation touches on prediction markets, social media’s influence on truth, the future of work in an abundance economy, and why human creativity, imperfection, and connection will remain central in an AI-driven world.
Check out this GPT we trained on the conversation
Timestamps
00:00 Eli Lopian introduces his book AICracy and shares why democracy needs a new paradigm for governance in the age of AI.
05:00 They explore AI-driven decision-making, fairness in lawmaking, and the abundance measure as a new way to evaluate social well-being.
10:00 Discussion turns to accountability, trust, and Eli’s idea of three AIs (government, opposition, and NGO) balancing each other to prevent corruption.
15:00 Stewart connects these ideas to non-linearity and organic governance, while Eli describes systems evolving like cities rather than rigid institutions.
20:00 They discuss decade goals, city-state models, and the role of social media in shaping public perception and truth.
25:00 The focus shifts to truth detection, prediction markets, and feedback systems ensuring “did it actually happen?” accountability.
30:00 They talk about abundance economies, AI mentorship, and redefining human purpose beyond traditional work.
35:00 Eli emphasizes creativity, connection, and human error as valuable, contrasting social media’s dopamine loops with genuine human experience.
40:00 The episode closes with reflections on social currency, self-healing governance, and optimism about AI as a mirror of humanity.
Key Insights
Democracy is evolving beyond its limits. Eli Lopian argues that traditional democracy (one person, one vote) no longer fits an age where individuals have vastly different technological capacities. With AI empowering some to act with exponential influence, he suggests governance should evolve toward systems that are more adaptive, participatory, and continuous rather than episodic.
AI-guided lawmaking could ensure fairness. Lopian’s concept of AICracy imagines an AI system that drafts laws based on measurable outcomes like equity and happiness. Using what he calls the abundance measure, this system would assess how proposed laws affect societal well-being, balancing freedoms, security, and fairness across all citizens.
Trust and accountability must be engineered. To prevent corruption or bias in AI governance, Lopian envisions three independent AIs (a coalition, an opposition, and an NGO) cross-verifying results and exposing inconsistencies. This triad ensures transparency and keeps human oversight meaningful.
Governance should be organic, not mechanical. Drawing inspiration from cities, Lopian and Alsop compare governance to an ecosystem that adapts and self-corrects. Like urban growth, effective systems arise from real-world feedback, where successful ideas take root and failing ones fade away naturally.
Truth requires new forms of verification. The pair discuss how lies spread faster than truth online and propose an algorithmic “speed of a lie” metric to flag misinformation. They connect this to prediction markets and feedback loops as potential ways to keep governance accountable to real-world outcomes.
The abundance economy redefines purpose. As AI reduces the need for traditional jobs, Lopian imagines a society centered on creativity, mentorship, and personal fulfillment. Governments could guarantee access to mentors, human or AI, to help people discover their passions and contribute meaningfully without economic pressure.
Human connection is the new currency. In contrast to social media’s exploitation of human weakness, the future Lopian envisions values imperfection, authenticity, and shared experience. As AI automates production, what remains deeply human (emotion, error, and presence) becomes the most precious and sustaining form of wealth.