Data Science Tech Brief By HackerNoon

Author: HackerNoon

© 2026 HackerNoon

Description

Learn the latest data science updates in the tech world.

169 Episodes

This story was originally published on HackerNoon at: https://hackernoon.com/5-ways-spark-41-moves-data-engineering-from-manual-pipelines-to-intent-driven-design.

Apache Spark 4.1 introduces significant architectural efficiencies designed to simplify Change Data Capture (CDC) and lifecycle management.

Check more stories related to data-science at: https://hackernoon.com/c/data-science.

You can also check exclusive content about #data-engineering, #declarative-programming, #apache-spark, #declarative-pipelines, #data-quality, #change-data-capture, #databricks, #spark-4.1, and more.

This story was written by: @amalik. Learn more about this writer by checking @amalik's about page, and for more stories, please visit hackernoon.com.

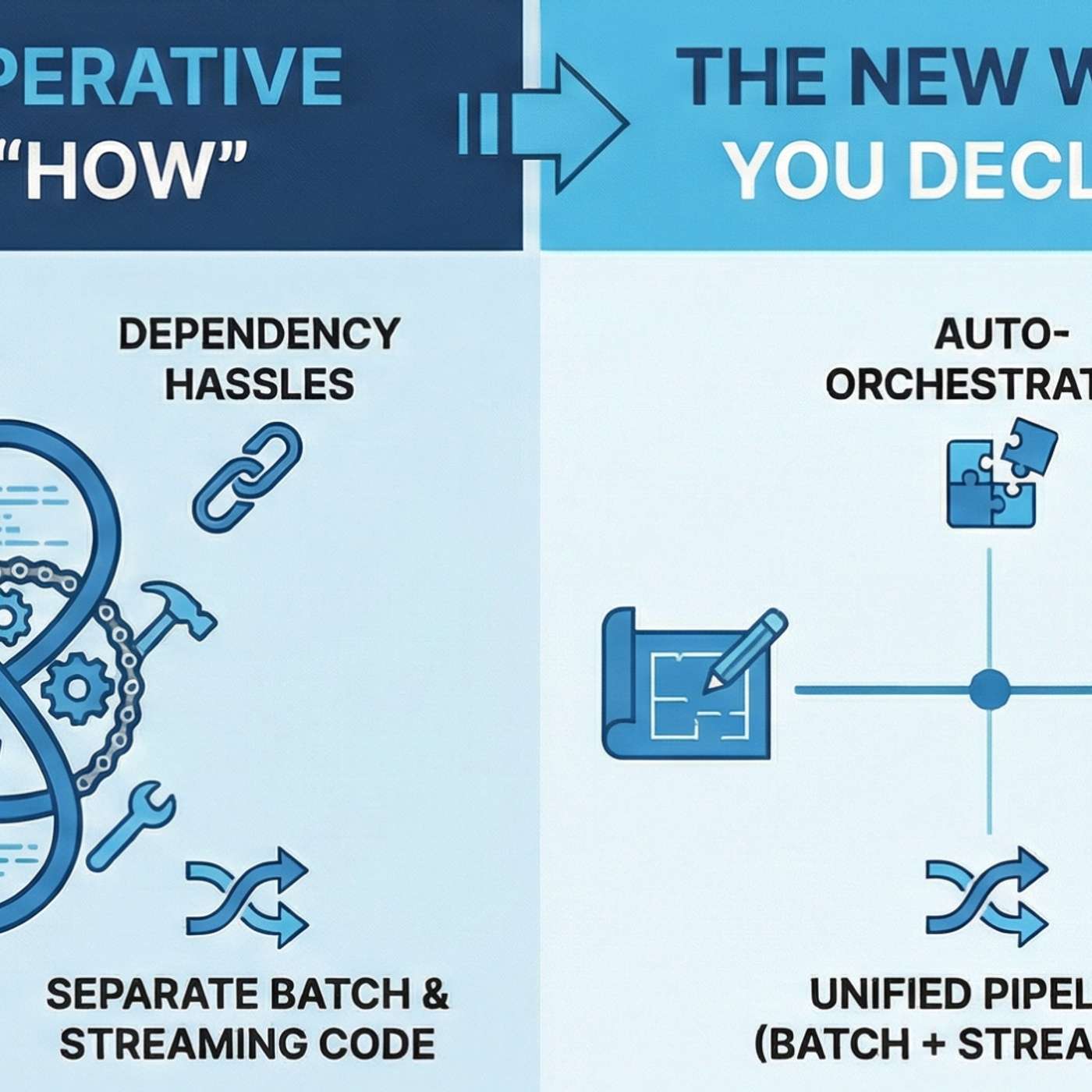

Apache Spark 4.1 moves data engineering away from the role of "orchestration plumber" and toward something far more strategic. We are entering an era of declarative clarity that promises to reduce pipeline development time by up to 90%. Materialized views (MVs) are positioned as the end of "stale data" anxiety.
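The core idea behind declarative pipelines is that you declare what each dataset is and let the engine work out when to build it. The sketch below models that idea in plain Python with a hypothetical `dataset` decorator and `run_pipeline` runner; it does not use Spark's actual pipeline API, it only shows how an engine can infer execution order from declarations (here via the standard library's `graphlib.TopologicalSorter`, Python 3.9+).

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical registry: dataset name -> (names of upstream datasets, function that builds it)
_REGISTRY: Dict[str, Tuple[List[str], Callable[..., list]]] = {}

def dataset(*, depends_on: Sequence[str] = ()):
    """Declare a dataset; the runner, not the author, decides when it gets built."""
    def register(fn: Callable[..., list]) -> Callable[..., list]:
        _REGISTRY[fn.__name__] = (list(depends_on), fn)
        return fn
    return register

def run_pipeline() -> Dict[str, list]:
    """Infer execution order from the declared dependencies, then build every dataset once."""
    graph = {name: set(deps) for name, (deps, _) in _REGISTRY.items()}
    results: Dict[str, list] = {}
    for name in TopologicalSorter(graph).static_order():
        deps, build = _REGISTRY[name]
        results[name] = build(*(results[d] for d in deps))
    return results

# Declarations may appear in any order; only the dependencies drive execution.
@dataset(depends_on=["clean_orders"])
def daily_revenue(clean_orders):
    return [sum(o["amount"] for o in clean_orders)]

@dataset(depends_on=["raw_orders"])
def clean_orders(raw_orders):
    return [o for o in raw_orders if o["amount"] > 0]

@dataset()
def raw_orders():
    return [{"amount": 10}, {"amount": -3}, {"amount": 5}]

print(run_pipeline()["daily_revenue"])  # [15]
```

A real engine layers much more on top of this toy, such as incremental refresh of materialized views and parallel execution of independent branches, but the shift is the same: the author states intent and the engine derives the plan.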

This story was originally published on HackerNoon at: https://hackernoon.com/beyond-prediction-econometric-data-science-for-measuring-true-business-impact.

You can also check exclusive content about #data-science, #analytics, #econometric-data-science, #business-impact, #real-world-constraints, #machine-learning, #business-strategies, #contemporary-econometrics, and more.

This story was written by: @dharmateja. Learn more about this writer by checking @dharmateja's about page.

Econometric methodologies model counterfactual outcomes upfront, so that an analyst can estimate what would have happened without the intervention. This is crucial for determining actual ROI and avoiding misallocation of resources, and econometric data science provides the tools to meet this challenge.
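To make the ROI point concrete: naive ROI credits an intervention with all observed revenue, while a counterfactual view credits only the revenue above what would have happened anyway. A minimal sketch, assuming the counterfactual baseline has already been estimated by some econometric model; the function names and numbers are illustrative, not taken from the article.

```python
def naive_roi(observed_revenue: float, cost: float) -> float:
    """ROI that attributes all observed revenue to the intervention."""
    return (observed_revenue - cost) / cost

def incremental_roi(observed_revenue: float, counterfactual_revenue: float, cost: float) -> float:
    """ROI on the causal lift only: revenue beyond the no-intervention counterfactual."""
    lift = observed_revenue - counterfactual_revenue
    return (lift - cost) / cost

# Illustrative inputs: 120k observed, 100k expected without the campaign, 10k spend.
print(naive_roi(120_000, 10_000))                 # 11.0
print(incremental_roi(120_000, 100_000, 10_000))  # 1.0
```

The gap between 11.0 and 1.0 is exactly the misallocation risk described above: budget decisions based on the naive figure overstate the campaign's return elevenfold.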

This story was originally published on HackerNoon at: https://hackernoon.com/designing-economic-intelligence-econometrics-first-approaches-in-data-science.

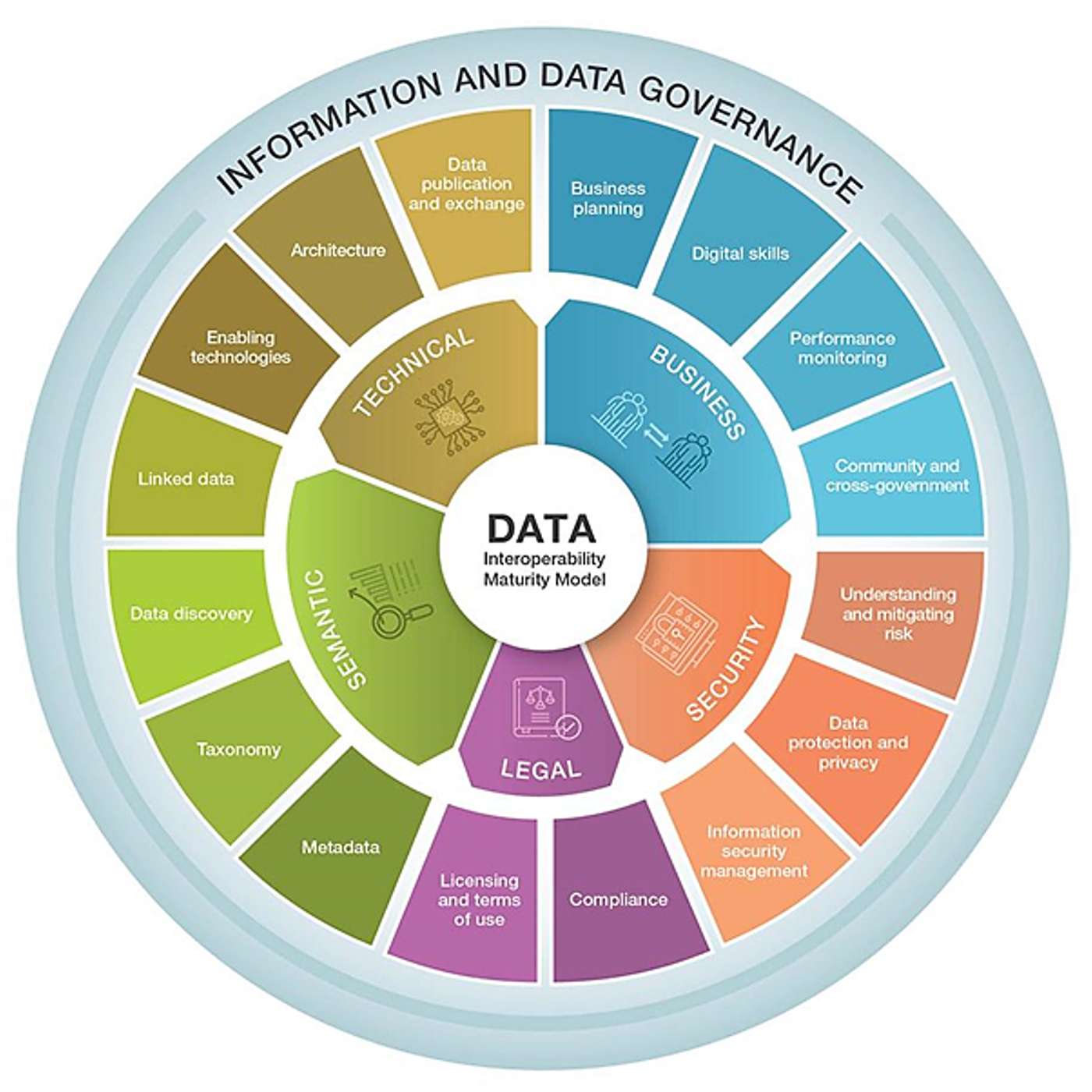

You can also check exclusive content about #data-science, #analytics, #economic-intelligence, #econometrics, #analytics-outputs, #counterfactual-evaluation, #interoperability, #economics, and more.

This story was written by: @dharmateja. Learn more about this writer by checking @dharmateja's about page.

Economic intelligence means embedding a structured way of reasoning into decision systems. Econometrics is a logical springboard for these systems because it treats decisions as interventions in an economic context.

This story was originally published on HackerNoon at: https://hackernoon.com/from-forecasting-to-bi-inside-shravanthi-ashwin-kumars-data-driven-finance-playbook.

A deep dive into Shravanthi Ashwin Kumar’s data-driven approach to financial analytics, forecasting, and AI-powered decision-making.

You can also check exclusive content about #data-driven-financial-decision, #financial-analytics-automation, #sql-python-finance-analytics, #finance-business-intelligence, #financial-modeling, #financial-forecasting, #finance-kpi-dashboard, #good-company, and more.

This story was written by: @sanya_kapoor. Learn more about this writer by checking @sanya_kapoor's about page.

Shravanthi Ashwin Kumar exemplifies the new generation of finance professionals blending analytics, automation, and strategic insight. With expertise in financial modeling, forecasting, and risk analysis, and in tools such as SQL, Python, Power BI, and Tableau, she delivers measurable impact: boosting planning accuracy, reducing costs, and enabling smarter, faster data-driven decisions across industries.

This story was originally published on HackerNoon at: https://hackernoon.com/causal-thinking-in-the-age-of-big-data-modern-econometrics-for-data-scientists.

You can also check exclusive content about #data-science, #analytics, #economics, #predictive-models, #modern-econometrics, #data-scientists, #machine-learning, #counterfactual-thinking, and more.

This story was written by: @dharmateja. Learn more about this writer by checking @dharmateja's about page.

Predictive models now dominate modern analytics stacks, from recommendation engines to demand forecasting and fraud detection. But as data scientists increasingly influence policy and strategy, the inherent limitation of prediction-only thinking has become obvious: a model that predicts what will happen cannot, on its own, say what would happen under a different decision.

This story was originally published on HackerNoon at: https://hackernoon.com/data-pipeline-testing-the-3-levels-most-teams-miss.

You can also check exclusive content about #data-engineering, #data-quality, #data-pipelines, #data-infrastructure, #data-ops, #data-pipeline-testing, #quality-assurance, #data-testing-is-different, and more.

This story was written by: @timonovid_ir5em1fo. Learn more about this writer by checking @timonovid_ir5em1fo's about page.

Most data teams test code but not data.

That’s why dashboards don’t represent actual state, models degrade unnoticed, and incidents show up as “weird numbers” instead of errors.

This article breaks down **three levels of data testing** — schema, business logic, and contracts — and shows how to integrate them into CI/CD and monitoring without turning your data stack into a mess.
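The three levels can be sketched as plain Python checks over a batch of records. The function names, the order fields, and the contract format below are hypothetical illustrations rather than the article's code: schema tests check presence, nullability, and types; business-logic tests check domain invariants; contract tests check that a producer still emits what consumers were promised.

```python
from typing import Any, Dict, List, Set

Record = Dict[str, Any]

def check_schema(rows: List[Record], schema: Dict[str, type]) -> List[str]:
    """Level 1 (schema): each expected column is present, non-null, and of the right type."""
    errors = []
    for i, row in enumerate(rows):
        for col, typ in schema.items():
            if row.get(col) is None:
                errors.append(f"row {i}: {col} is missing or null")
            elif not isinstance(row[col], typ):
                errors.append(f"row {i}: {col} is not {typ.__name__}")
    return errors

def check_business_rules(rows: List[Record]) -> List[str]:
    """Level 2 (business logic): invariants that type checks cannot catch (example rules)."""
    errors = []
    for i, row in enumerate(rows):
        if row["amount"] < 0:
            errors.append(f"row {i}: negative amount")
        if row["status"] == "refunded" and row["amount"] != 0:
            errors.append(f"row {i}: refunded order with nonzero amount")
    return errors

def check_contract(produced_columns: Set[str], contract: Dict[str, Any]) -> List[str]:
    """Level 3 (contract): the producer still emits every column consumers rely on."""
    missing = set(contract["required_columns"]) - produced_columns
    return [f"contract broken: missing column {c}" for c in sorted(missing)]

def validate_batch(rows, schema, produced_columns, contract) -> List[str]:
    errors = check_schema(rows, schema)
    if errors:  # business rules assume a valid schema, so stop here
        return errors
    return check_business_rules(rows) + check_contract(produced_columns, contract)

ORDERS_SCHEMA = {"order_id": str, "amount": float, "status": str}
CONTRACT = {"required_columns": ["order_id", "amount", "currency"]}
batch = [
    {"order_id": "a1", "amount": 20.0, "status": "paid"},
    {"order_id": "a2", "amount": -5.0, "status": "paid"},
]
print(validate_batch(batch, ORDERS_SCHEMA, set(batch[0]), CONTRACT))
# ['row 1: negative amount', 'contract broken: missing column currency']
```

In CI, a non-empty error list can fail the build; in monitoring, the same functions can run against every new batch so that "weird numbers" surface as explicit errors instead.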

This story was originally published on HackerNoon at: https://hackernoon.com/hsm-the-original-tiering-engine-behind-mainframes-cloud-and-s3.

From mainframe DFSMShsm to cloud storage classes: a practical history of HSM, ILM, tiering, recall, and the products that shaped modern archives.

You can also check exclusive content about #data-tiering, #hsm-vs-ilm, #hierarchical-storage-mgmt, #data-lifecycle-management, #tiered-data-storage, #object-storage, #object-storage-lifecycle, #hackernoon-top-story, and more.

This story was written by: @carlwatts. Learn more about this writer by checking @carlwatts's about page.

Hierarchical Storage Management (HSM) is the storage world’s oldest magic trick. It makes expensive storage look bigger by quietly moving data to cheaper tiers. HSM has five moving parts: a primary tier, secondary tiers, a policy engine, a recall mechanism, and a migration engine.
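The five moving parts map naturally onto a small model. The sketch below is a toy in-memory illustration, not how DFSMShsm or any product implements HSM: a policy engine selects files idle longer than a threshold, a migration engine moves them from the primary tier to a cheaper secondary tier, and a recall mechanism transparently brings a file back when it is read. The class name, tier dictionaries, and 30-day threshold are assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class HSM:
    max_idle_days: int = 30                                     # policy threshold (assumed)
    primary: Dict[str, bytes] = field(default_factory=dict)     # fast, expensive tier
    secondary: Dict[str, bytes] = field(default_factory=dict)   # slow, cheap tier
    last_access: Dict[str, int] = field(default_factory=dict)   # file -> day of last access

    def write(self, name: str, data: bytes, today: int) -> None:
        self.primary[name] = data
        self.last_access[name] = today

    def policy_candidates(self, today: int) -> List[str]:
        """Policy engine: primary-tier files idle longer than the threshold."""
        return [n for n in self.primary if today - self.last_access[n] > self.max_idle_days]

    def migrate(self, today: int) -> int:
        """Migration engine: move policy candidates down to the secondary tier."""
        moved = self.policy_candidates(today)
        for name in moved:
            self.secondary[name] = self.primary.pop(name)
        return len(moved)

    def read(self, name: str, today: int) -> bytes:
        """Recall mechanism: reading a migrated file first brings it back to primary."""
        if name not in self.primary:
            self.primary[name] = self.secondary.pop(name)
        self.last_access[name] = today
        return self.primary[name]

hsm = HSM()
hsm.write("old.log", b"archive me", today=0)
hsm.write("new.log", b"keep me hot", today=40)
print(hsm.migrate(today=45))             # 1 (only old.log has been idle > 30 days)
print(hsm.read("old.log", today=50))     # b'archive me', recalled from the secondary tier
```

The caller never needs to know which tier a file lives on, which is the "look bigger" trick the paragraph describes.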

This story was originally published on HackerNoon at: https://hackernoon.com/navigating-architectural-trade-offs-at-scale-to-meet-ai-goals-in-2026.

You can also check exclusive content about #data-science, #big-data, #data-analytics, #snowflake, #architectural-trade-offs, #ai-goals-in-2026, #petabyte-scale, #low-code, and more.

This story was written by: @anupmoncy. Learn more about this writer by checking @anupmoncy's about page.

Success in 2026 is predicated on having total clarity into the underlying data infrastructure. This requires a stable and secure foundation built on auto-scaling compute and workload isolation.

This story was originally published on HackerNoon at: https://hackernoon.com/will-ai-take-your-job-the-data-tells-a-very-different-story.

You can also check exclusive content about #data-science, #analytics, #artificial-intelligence, #technology, #generative-ai, #data-analysis, #ai-job-loss, #ai-job-takeover, and more.

This story was written by: @dharmateja. Learn more about this writer by checking @dharmateja's about page.

Artificial intelligence (AI) raises an urgent question for workers, businesses, and policymakers. Will AI advancements ultimately lead to widespread unemployment? Historically, technological revolutions have triggered similar waves of anxiety, only for the long-term outcomes to demonstrate a more optimistic narrative.

This story was originally published on HackerNoon at: https://hackernoon.com/you-dont-need-an-api-for-everything-sometimes-scraping-is-enough.

You don't always need an API. Sometimes scraping public pages is the simplest, fastest way to turn repetitive browsing into usable data.

You can also check exclusive content about #web-scraping, #automation, #developer-tools, #productivity, #programming, #wait-for-the-api, #api, #api-development, and more.

This story was written by: @fromight. Learn more about this writer by checking @fromight's about page.

APIs are useful, but they're not always available, complete, or worth the overhead. If the data you need is already public and you're manually checking a website, scraping is simply a way to automate that behavior. Small, low-frequency scrapers can turn repetitive browsing into structured data, save time, and reduce cognitive load, making scraping a practical productivity tool rather than a heavy engineering decision.
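A small, low-frequency scraper can be very little code. This sketch uses only the standard library: `urllib.request` to fetch a page and `html.parser.HTMLParser` to collect the text of elements carrying a given CSS class. The class names, the `price` example, and the User-Agent string are hypothetical placeholders, not taken from the article.

```python
from html.parser import HTMLParser
from typing import List
from urllib.request import Request, urlopen

# Void elements never get an end tag, so they must not affect nesting depth.
VOID_TAGS = frozenset({"area", "base", "br", "col", "embed", "hr", "img",
                       "input", "link", "meta", "source", "track", "wbr"})

class ClassTextExtractor(HTMLParser):
    """Collects the text inside elements whose class attribute contains `target_class`."""

    def __init__(self, target_class: str):
        super().__init__()
        self.target_class = target_class
        self.depth = 0                 # > 0 while inside a matching element
        self.results: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.depth:
            self.depth += 1            # nested element inside a match
        elif self.target_class in classes:
            self.depth = 1
            self.results.append("")

    def handle_startendtag(self, tag, attrs):
        pass                           # self-closing tags (e.g. <br/>) hold no text

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1

    def handle_data(self, data):
        if self.depth:
            self.results[-1] += data.strip()

def extract(html: str, target_class: str) -> List[str]:
    parser = ClassTextExtractor(target_class)
    parser.feed(html)
    return parser.results

def fetch(url: str) -> str:
    req = Request(url, headers={"User-Agent": "small-personal-scraper/0.1"})
    with urlopen(req, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")

# Usage (hypothetical URL): prices = extract(fetch("https://example.com/listing"), "price")
```

Running this on a schedule (for example once a day) and appending results to a CSV is usually all "automating the manual check" needs to mean.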

This story was originally published on HackerNoon at: https://hackernoon.com/how-to-use-propensity-score-matching-to-measure-down-stream-causal-impact-of-an-event.

How can we know whether our ads are having the impact we aim for? What if targeted ads are not working the way we want them to?

You can also check exclusive content about #data-science, #data-analytics, #statistics, #analytics, #advertising, #big-data-analytics, #hackernoon-top-story-tag, #propensity-score-matching, and more.

This story was written by: @dharmateja. Learn more about this writer by checking @dharmateja's about page.

Ad exposure is not randomly assigned: algorithms may show ads more often to highly active users. As a result, “unobservable factors make exposure endogenous,” meaning there are hidden biases in who sees the ad. This is where propensity score matching (PSM) comes in. It creates apples-to-apples comparisons by pairing exposed and unexposed users who had a similar estimated probability of exposure, based on their observed characteristics.
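A minimal sketch of PSM with a single observed covariate, using only the standard library: fit a logistic regression for the probability of exposure by gradient descent, match each exposed user to the unexposed user with the closest propensity score, and average the outcome differences to estimate the average effect on the exposed (ATT). The covariate (prior activity), learning rate, step count, and toy data are assumptions; a real analysis would use many covariates, a library model, and balance diagnostics.

```python
import math
from typing import List, Sequence, Tuple

def _sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def fit_propensity(x: Sequence[float], treated: Sequence[int],
                   lr: float = 0.1, steps: int = 5000) -> Tuple[float, float]:
    """Logistic regression P(exposed | x) = sigmoid(a + b*x), fit by batch gradient descent."""
    a = b = 0.0
    n = len(x)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for xi, ti in zip(x, treated):
            err = _sigmoid(a + b * xi) - ti   # gradient of the log-loss w.r.t. the logit
            grad_a += err
            grad_b += err * xi
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b

def att_by_matching(x: Sequence[float], treated: Sequence[int],
                    outcome: Sequence[float]) -> float:
    """Effect on the exposed: 1-nearest-neighbour matching on the propensity score, with replacement."""
    a, b = fit_propensity(x, treated)
    score = [_sigmoid(a + b * xi) for xi in x]
    controls = [i for i, t in enumerate(treated) if t == 0]
    diffs: List[float] = []
    for i, t in enumerate(treated):
        if t == 1:
            j = min(controls, key=lambda c: abs(score[c] - score[i]))
            diffs.append(outcome[i] - outcome[j])
    return sum(diffs) / len(diffs)

# Toy data built so that more active users are exposed more often and the true effect is +5.
activity = [1, 1, 1, 2, 2, 2, 3, 3, 3]
exposed  = [0, 0, 1, 0, 1, 1, 0, 1, 1]
spend    = [10, 10, 15, 20, 25, 25, 30, 35, 35]
print(att_by_matching(activity, exposed, spend))  # 5.0 (a naive difference in means gives 9.5)
```

The naive comparison overstates the effect because exposed users were already more active; matching on the propensity score removes that observed imbalance, though it cannot correct for confounders that were never measured.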

This story was originally published on HackerNoon at: https://hackernoon.com/how-to-analyze-call-sentiment-with-open-source-nlp-libraries.

Unlock call sentiment analysis using open-source NLP. Discover how to analyze customer emotions, improve service, and gain valuable insights from voice data.

You can also check exclusive content about #nlp, #natural-language-processing, #call-sentiment, #open-source-nlp, #customer-service, #call-sentiment-analysis, #ai-for-customer-support, #sentiment-analysis, and more.

This story was written by: @devinpartida. Learn more about this writer by checking @devinpartida's about page.

Call sentiment analysis uses natural language processing (NLP) to surface customers' emotional signals at scale. These signals often fall into three broad categories: polarity, intensity, and temporal shifts. When applied across large call volumes, sentiment metrics reveal systemic trends that individual call reviews rarely uncover.
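The three signal categories can be illustrated with a deliberately tiny lexicon-based scorer. The word list, intensifier weights, and scoring rule below are hypothetical stand-ins; in practice an open-source library (for example VADER or a transformer sentiment model) would replace `score_utterance`. Here polarity is the average score, intensity the average magnitude regardless of sign, and the temporal shift compares the second half of the call with the first.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical mini-lexicon: word -> sentiment weight
LEXICON: Dict[str, float] = {
    "great": 2.0, "thanks": 1.0, "resolved": 1.5, "happy": 1.5,
    "angry": -2.0, "waiting": -1.0, "broken": -1.5, "cancel": -2.0,
}
INTENSIFIERS: Dict[str, float] = {"very": 1.5, "really": 1.5, "extremely": 2.0}

def score_utterance(text: str) -> float:
    """Sum lexicon weights; an intensifier scales the word that immediately follows it."""
    words = text.lower().replace(",", " ").replace(".", " ").replace("!", " ").split()
    score, boost = 0.0, 1.0
    for w in words:
        if w in INTENSIFIERS:
            boost = INTENSIFIERS[w]
            continue
        score += LEXICON.get(w, 0.0) * boost
        boost = 1.0
    return score

def call_signals(utterances: List[str]) -> Dict[str, float]:
    if len(utterances) < 2:
        raise ValueError("need at least two customer utterances to measure a shift")
    scores = [score_utterance(u) for u in utterances]
    half = len(scores) // 2
    return {
        "polarity": mean(scores),                             # overall direction
        "intensity": mean(abs(s) for s in scores),            # strength regardless of sign
        "shift": mean(scores[half:]) - mean(scores[:half]),   # end of call vs. start
    }

call = [
    "I have been waiting and my device is broken",
    "I am really angry",
    "Thanks, that resolved it",
    "Great, very happy now",
]
print(call_signals(call))  # {'polarity': 0.3125, 'intensity': 3.0625, 'shift': 6.125}
```

Aggregated over thousands of calls, a metric like `shift` is what lets a team see, for example, whether agents systematically turn frustrated callers around.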

This story was originally published on HackerNoon at: https://hackernoon.com/how-bayesian-tail-risk-modeling-can-save-your-retail-business-marketing-budget.

Why average ROI fails. Learn how distributional and tail-risk modeling protects marketing campaigns from catastrophic losses using Bayesian methods.

You can also check exclusive content about #data-science, #statistics, #machine-learning, #retail-marketing, #e-commerce, #digital-marketing, #marketing, #hackernoon-top-stories, and more.

This story was written by: @dharmateja. Learn more about this writer by checking @dharmateja's about page.

E-commerce marketing is often evaluated in terms of Return on Investment (ROI), but looking only at average ROI can be very misleading. Marketing outcomes can have "fat tails": rare but extreme downside events that conventional models underestimate.
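The gap between average ROI and tail risk is easy to show numerically. The sketch below assumes you already have draws of campaign ROI, for example from a Bayesian model's posterior predictive distribution (the model itself is out of scope here, and the draws are made up). It compares the mean with two tail metrics expressed in ROI units: value at risk (VaR), the ROI at the edge of the worst `alpha` fraction of outcomes, and expected shortfall (CVaR), the average ROI inside that tail.

```python
import math
from statistics import mean
from typing import Dict, Sequence

def tail_risk(roi_draws: Sequence[float], alpha: float = 0.05) -> Dict[str, float]:
    """Mean ROI versus lower-tail metrics over simulated or posterior predictive draws."""
    ordered = sorted(roi_draws)
    k = max(1, math.ceil(alpha * len(ordered)))   # number of draws in the worst-alpha tail
    worst = ordered[:k]
    return {
        "mean": mean(ordered),
        "var": worst[-1],      # empirical alpha-quantile of ROI
        "cvar": mean(worst),   # expected shortfall: average ROI within the tail
    }

# Made-up draws: most outcomes are a healthy +150% ROI, a few are severe losses.
draws = [1.5] * 6 + [-2.0, -4.0]
print(tail_risk(draws, alpha=0.25))  # {'mean': 0.375, 'var': -2.0, 'cvar': -3.0}
```

The mean looks comfortably positive while the expected shortfall shows that, in the bad quarter of scenarios, the campaign loses three times its spend on average; that is the information average ROI hides.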

This story was originally published on HackerNoon at: https://hackernoon.com/architecting-trustworthy-healthcare-data-platforms-using-declarative-pipelines.

You can also check exclusive content about #databricks, #data-science, #healthcare-data-platforms, #declarative-pipelines, #declarative-data-quality, #production-grade-pipelines, #healthcare-etl-pipelines, #bad-data, and more.

This story was written by: @hacker95231466. Learn more about this writer by checking @hacker95231466's about page.

In digital healthcare data platforms, data quality is no longer a nice-to-have; it is a hard requirement.
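Declarative data quality means stating rules as data, next to the dataset, and letting the pipeline decide how to enforce them. The sketch below models that idea in plain Python with three hypothetical enforcement modes (warn, drop, fail), similar in spirit to the expectation features of declarative pipeline frameworks but not any product's API. The patient-record fields and rules are invented for illustration.

```python
from typing import Any, Callable, Dict, List, Tuple

Record = Dict[str, Any]
# Each expectation: name -> (predicate that must hold, action on violation: "warn" | "drop" | "fail")
Expectation = Tuple[Callable[[Record], bool], str]

class ExpectationFailed(Exception):
    pass

def apply_expectations(rows: List[Record],
                       rules: Dict[str, Expectation]) -> Tuple[List[Record], Dict[str, int]]:
    kept: List[Record] = []
    violations = {name: 0 for name in rules}   # per-rule counts for monitoring
    for row in rows:
        keep = True
        for name, (check, action) in rules.items():
            if check(row):
                continue
            violations[name] += 1
            if action == "fail":               # stop the pipeline outright
                raise ExpectationFailed(f"{name} violated by {row}")
            if action == "drop":               # quarantine this row, keep going
                keep = False
            # "warn": record the violation but keep the row
        if keep:
            kept.append(row)
    return kept, violations

PATIENT_RULES: Dict[str, Expectation] = {
    "valid_patient_id": (lambda r: bool(r.get("patient_id")), "fail"),
    "plausible_age": (lambda r: 0 <= r.get("age", -1) <= 120, "drop"),
    "has_visit_date": (lambda r: r.get("visit_date") is not None, "warn"),
}

rows = [
    {"patient_id": "p1", "age": 34, "visit_date": "2025-01-02"},
    {"patient_id": "p2", "age": 240, "visit_date": "2025-01-03"},
    {"patient_id": "p3", "age": 51, "visit_date": None},
]
kept, violations = apply_expectations(rows, PATIENT_RULES)
print([r["patient_id"] for r in kept])  # ['p1', 'p3']
print(violations)  # {'valid_patient_id': 0, 'plausible_age': 1, 'has_visit_date': 1}
```

Because the rules are plain data, they can be reviewed, versioned, and reported on independently of the transformation code, which is what makes the declarative style attractive for regulated domains.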

This story was originally published on HackerNoon at: https://hackernoon.com/when-ab-tests-arent-possible-causal-inference-can-still-measure-marketing-impact.

Learn how to measure marketing impact without A/B tests using causal inference, Diff-in-Diff, synthetic control, and GeoLift.

You can also check exclusive content about #ab-testing, #data-analytics, #data-analysis, #causal-inference, #ab-testing-alternatives, #geolift, #diff-in-diff, #causal-inference-marketing, and more.

This story was written by: @radiokocmoc_l45iej08. Learn more about this writer by checking @radiokocmoc_l45iej08's about page.

In many real-world settings, running a randomized experiment is simply impossible. We walk through Diff-in-Diff, Synthetic Control, and Meta’s GeoLift, show how to prepare your data, and provide ready-to-run code.
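The simplest of the three methods, Diff-in-Diff, reduces to a few lines of arithmetic: the before/after change in the treated region minus the before/after change in a control region, so that any trend shared by both regions cancels out. A minimal sketch on row-level data; the region labels and sales figures are illustrative. It relies on the parallel-trends assumption, and in practice the same estimate is usually obtained from a regression that also yields standard errors.

```python
from statistics import mean
from typing import Iterable, Tuple

Row = Tuple[str, str, float]   # (group: "treated" | "control", period: "pre" | "post", outcome)

def diff_in_diff(rows: Iterable[Row]) -> float:
    """DiD estimate = (treated post - treated pre) - (control post - control pre)."""
    rows = list(rows)

    def avg(group: str, period: str) -> float:
        return mean(v for g, p, v in rows if g == group and p == period)

    treated_change = avg("treated", "post") - avg("treated", "pre")
    control_change = avg("control", "post") - avg("control", "pre")
    return treated_change - control_change

# Illustrative weekly sales: the campaign ran only in the treated region.
sales = [
    ("treated", "pre", 98.0), ("treated", "pre", 102.0),
    ("treated", "post", 128.0), ("treated", "post", 132.0),
    ("control", "pre", 88.0), ("control", "pre", 92.0),
    ("control", "post", 104.0), ("control", "post", 106.0),
]
print(diff_in_diff(sales))  # 15.0
```

A naive before/after comparison in the treated region would claim +30; half of that is the market-wide trend that the control region also experienced.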

This story was originally published on HackerNoon at: https://hackernoon.com/why-data-quality-is-becoming-a-core-developer-experience-metric.

Bad data secretly slows development. Learn why data quality APIs are becoming core DX infrastructure in API-first systems and how they accelerate teams.

You can also check exclusive content about #data-quality, #developer-experience, #software-architecture, #engineering-productivity, #data-quality-apis, #api-first-architecture, #distributed-systems, #good-company, and more.

This story was written by: @melissaindia. Learn more about this writer by checking @melissaindia's about page.

In API-first systems, poor data quality (invalid emails, duplicate records, etc.) creates unpredictable bugs, forces defensive coding, and makes releases feel risky. This "hidden tax" consumes time and mental energy that should go to building features.

The fix? Treat data quality as core infrastructure. By using real-time validation APIs at the point of ingestion, you create predictable systems, simplify business logic, and build developer confidence. This turns a vicious cycle of complexity into a virtuous cycle of velocity and better architecture.

Bottom line: Investing in data quality isn't just operational hygiene—it's a direct investment in your team's ability to ship faster and with more confidence.
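Validating at the point of ingestion means each record is checked and normalized once, at the boundary, so downstream code can assume clean input instead of coding defensively. A minimal sketch of that boundary, assuming a local syntax check and an in-memory duplicate check; in the setup the article describes, a real-time validation API call would replace the hypothetical `validate_email` helper, whose regex is a deliberately simple assumption rather than full RFC 5322 validation.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Set

# Simplified syntax check (assumption): not a full RFC 5322 validator.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

@dataclass(frozen=True)
class Contact:
    email: str
    name: str

def validate_email(raw: str) -> str:
    """Normalize (trim, lowercase: a simplifying assumption) and reject malformed addresses."""
    email = raw.strip().lower()
    if not EMAIL_RE.match(email):
        raise ValueError(f"invalid email: {raw!r}")
    return email

class ContactIngestor:
    """Rejects invalid or duplicate records at the boundary so downstream code doesn't have to."""

    def __init__(self) -> None:
        self.seen: Set[str] = set()
        self.accepted: List[Contact] = []
        self.rejected: List[Dict[str, str]] = []

    def ingest(self, record: Dict[str, str]) -> bool:
        try:
            email = validate_email(record.get("email", ""))
        except ValueError as err:
            self.rejected.append({"record": str(record), "reason": str(err)})
            return False
        if email in self.seen:
            self.rejected.append({"record": str(record), "reason": "duplicate"})
            return False
        self.seen.add(email)
        self.accepted.append(Contact(email=email, name=record.get("name", "").strip()))
        return True

ingestor = ContactIngestor()
print(ingestor.ingest({"email": " Ana@Example.com ", "name": "Ana"}))   # True
print(ingestor.ingest({"email": "ana@example.com", "name": "Ana B"}))   # False (duplicate)
print(ingestor.ingest({"email": "not-an-email", "name": "X"}))          # False (invalid)
```

Everything past `ingest` can now treat `Contact.email` as valid and unique, which is the "predictable systems, simpler business logic" effect described above.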

This story was originally published on HackerNoon at: https://hackernoon.com/why-accuracy-fails-for-uplift-models-and-what-to-use-instead.

You can also check exclusive content about #data-science, #uplift-modeling, #data-analysis, #machine-learning, #uplift-models, #area-under-uplift, #uplift@k, #cg-and-qini, and more.

This story was written by: @eltsefon. Learn more about this writer by checking @eltsefon's about page.

When it comes to uplift modeling, traditional performance metrics commonly used for other machine learning tasks may fall short.
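Accuracy cannot be computed for uplift because no individual's uplift is ever observed: each person is either treated or not, never both. Uplift metrics therefore evaluate groups. A minimal sketch of uplift@k, assuming data from a randomized experiment: rank users by predicted uplift, take the top `k` fraction, and compare the response rate of treated versus control users inside that slice. The function name, data layout, and toy numbers are illustrative assumptions.

```python
from typing import Sequence

def uplift_at_k(pred_uplift: Sequence[float], treated: Sequence[int],
                outcome: Sequence[int], k: float = 0.3) -> float:
    """Observed uplift (treated rate minus control rate) among the top-k fraction by predicted uplift."""
    order = sorted(range(len(pred_uplift)), key=lambda i: pred_uplift[i], reverse=True)
    top = order[: max(1, int(len(order) * k))]
    t = [outcome[i] for i in top if treated[i] == 1]
    c = [outcome[i] for i in top if treated[i] == 0]
    if not t or not c:
        raise ValueError("the top-k slice needs both treated and control users")
    return sum(t) / len(t) - sum(c) / len(c)

treated = [1, 0, 1, 0, 1, 0, 1, 0]
outcome = [1, 0, 1, 0, 0, 0, 1, 1]
good_model = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
bad_model = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
print(uplift_at_k(good_model, treated, outcome, k=0.5))  # 1.0
print(uplift_at_k(bad_model, treated, outcome, k=0.5))   # 0.0
```

The same idea, swept over every `k` and plotted, gives the uplift and Qini curves whose areas summarize a model's ranking quality.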

This story was originally published on HackerNoon at: https://hackernoon.com/turning-your-data-swamp-into-gold-a-developers-guide-to-nlp-on-legacy-logs.

A practical NLP pipeline for cleaning legacy maintenance logs using normalization, TF-IDF, and cosine similarity to detect fraud and improve data quality.

You can also check exclusive content about #data-analysis, #atypical-data, #maintenance-log-analysis, #nlp-cleaning-pipeline, #python-text-normalization, #enterprise-data-quality, #tf-idf-vectorization, #data-cleaning-automation, and more.

This story was written by: @dippusingh. Learn more about this writer by checking @dippusingh's about page.

The NLP cleaning pipeline is a tool for cleaning, vectorizing, and analyzing unstructured free-text logs. It uses Python 3.9+ and scikit-learn for vectorization and similarity metrics, and it removes noise through Unicode normalization, thesaurus-based term replacement, and case folding.
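The steps listed above (Unicode normalization, thesaurus replacement, case folding, TF-IDF vectorization, cosine similarity) can be sketched with the standard library alone. The pipeline described uses scikit-learn for vectorization and similarity; this version hand-rolls TF-IDF only to stay dependency-free, using the same smoothed idf formula as scikit-learn's default. The thesaurus entries and log lines are invented. Near-identical entries that are supposed to describe independent jobs are the kind of signal that can flag copy-pasted records for review.

```python
import math
import re
import unicodedata
from collections import Counter
from typing import Dict, List

# Hypothetical thesaurus mapping shop-floor abbreviations to canonical terms.
THESAURUS = {"chk": "check", "chkd": "check", "repl": "replace", "replcd": "replace"}

def normalize(text: str) -> List[str]:
    """NFKC-normalize, case-fold, tokenize (ASCII letters/digits only), then apply the thesaurus."""
    text = unicodedata.normalize("NFKC", text).casefold()
    tokens = re.findall(r"[a-z0-9]+", text)
    return [THESAURUS.get(t, t) for t in tokens]

def tfidf(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Raw term counts weighted by smoothed idf: ln((1 + n) / (1 + df)) + 1."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    return [
        {t: c * (math.log((1 + n) / (1 + df[t])) + 1) for t, c in Counter(doc).items()}
        for doc in docs
    ]

def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(sum(w * w for w in b.values()))
    return dot / norm if norm else 0.0

logs = [
    "Pump chkd, seal replcd",
    "ＰＵＭＰ CHKD seal REPLCD",   # full-width letters and shouting, same content
    "Hydraulic line inspected",
]
vectors = tfidf([normalize(line) for line in logs])
print(normalize(logs[1]))                     # ['pump', 'check', 'seal', 'replace']
print(round(cosine(vectors[0], vectors[1]), 6))  # 1.0: a likely duplicate
print(cosine(vectors[0], vectors[2]))         # 0.0: no shared terms
```

Without the normalization steps, the first two entries would share only the token "seal" and would look unrelated, which is why cleaning comes before vectorization.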

This story was originally published on HackerNoon at: https://hackernoon.com/data-monetization-strategies-in-government-digital-platforms.

How governments monetize digital data to drive innovation, trust, transparency and economic value.

You can also check exclusive content about #data, #data-science, #data-privacy, #data-security, #data-monetization, #data-optimization, #digital-platforms, #good-company, and more.

This story was written by: @strgy. Learn more about this writer by checking @strgy's about page.

Government data is not merely a by-product of governance; it is a strategic asset, writes Frida Ghitis. In Ghitis's view, government cannot be a data broker, but it should be the custodian of the value of the information it possesses.

This story was originally published on HackerNoon at: https://hackernoon.com/why-partner-data-became-my-toughest-engineering-problem.

Your partner portal isn't broken; your definitions are. How fixing "data lineage" cut deal registration time from 4.5 days to under 2.

You can also check exclusive content about #data-architecture, #systems-engineering, #rev-ops, #partner-ecosystem, #channel-sales, #gtm-strategies, #sales-operations, #deal-registration, and more.

This story was written by: @aniruddhapratapsingh. Learn more about this writer by checking @aniruddhapratapsingh's about page.

Partner systems slow down when data definitions drift. Real stability returns only when the model is cleaned up and workflows align around a single, consistent structure.