Interconnects

Author: Nathan Lambert

© Interconnects AI, LLC

Description

Audio essays about the latest developments in AI and interviews with leading scientists in the field. Breaking the hype, understanding what's under the hood, and telling stories.

www.interconnects.ai

137 Episodes

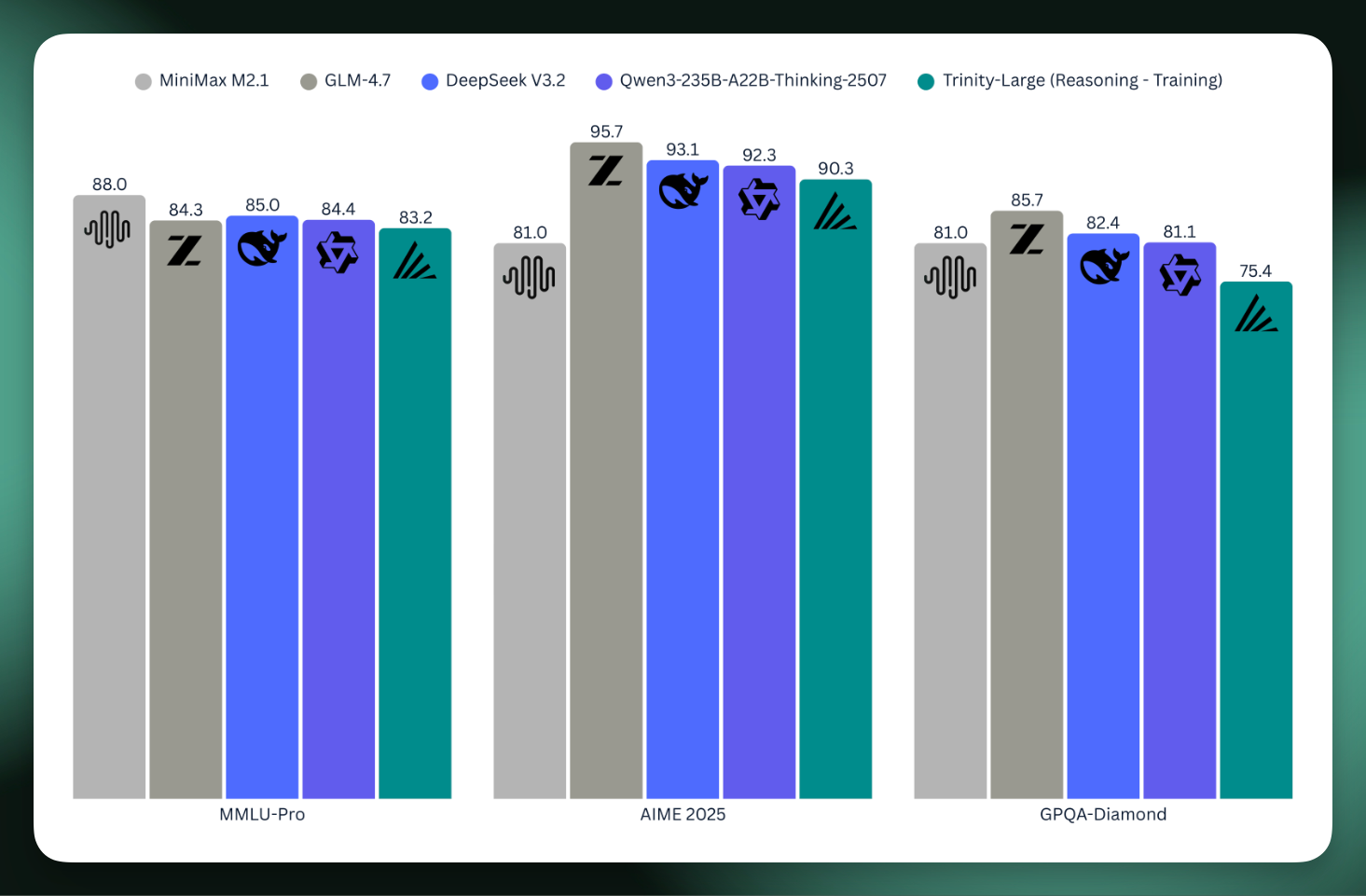

Arcee AI is the startup I’ve found to be taking the most real approach to monetizing their open models. With a bunch of experience (and revenue) in the past in post-training open models for specific customer domains, they realized they needed to both prove themselves and fill a niche by pretraining larger, higher performance open models built in the U.S.A. They’re a group of people that are most eagerly answering my call to action for The ATOM Project, and I’ve quickly become friends with them.

Today, they’re releasing their flagship model, Trinity Large, as the culmination of this pivot. In anticipation of this release, I sat down with their CEO Mark McQuade, CTO Lucas Atkins, and pretraining lead Varun Singh to have a wide-ranging conversation on:

* The state (and future) of open vs. closed models,
* The business of selling open models for on-prem deployments,
* The story of Arcee AI & going “all-in” on this training run,
* The ATOM project,
* Building frontier model training teams in 6 months,
* and other great topics.

I really loved this one, and think you will too.

The blog post linked above and technical report have many great details on training the model that I’m still digging into. One of the great things Arcee has been doing is releasing “true base models,” which don’t contain any SFT data or learning rate annealing. The Trinity Large model, an MoE with 400B total and 13B active parameters trained to 17 trillion tokens, is the first publicly shared training run at this scale on B300 Nvidia Blackwell machines. As a preview, they shared the scores for the in-progress reasoning model relative to the who’s-who of today’s open models. It’s a big step for open models built in the U.S. to scale up like this.
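For a rough sense of scale, the headline numbers above (400B total parameters, 13B active, 17 trillion training tokens) can be turned into a back-of-envelope estimate. The sketch below is illustrative only: the `6 * active_params * tokens` rule for training compute is a common approximation for transformer training, not a figure from Arcee, and the function name is hypothetical.

```python
def moe_summary(total_params, active_params, train_tokens):
    """Return (active fraction, approximate training FLOPs) for an MoE model.

    Assumption: training compute ~ 6 * active parameters * tokens, a widely
    used rule of thumb. It ignores attention cost and routing overhead.
    """
    active_fraction = active_params / total_params
    approx_train_flops = 6 * active_params * train_tokens
    return active_fraction, approx_train_flops


# Numbers quoted in the post for Trinity Large.
frac, flops = moe_summary(total_params=400e9, active_params=13e9, train_tokens=17e12)
print(f"active fraction per token: {frac:.2%}")
print(f"approximate training compute: {flops:.2e} FLOPs")
```

Under these assumptions only a few percent of the weights are used for any given token, which is what "very high on the sparsity side" refers to later in the interview.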
I won’t spoil all the details, so you’ll still listen to the podcast, but their section of the blog post on cost sets the tone well for the podcast, which is a very frank discussion on how and why to build open models:

When we started this run, we had never pretrained anything remotely like this before.

There was no guarantee this would work. Not the modeling, not the data, not the training itself, not the operational part where you wake up, and a job that costs real money is in a bad state, and you have to decide whether to restart or try to rescue it.

All in (compute, salaries, data, storage, ops), we pulled off this entire effort for $20 million. 4 Models got us here in 6 months.

That number is big for us. It’s also small compared to what frontier labs spend just to keep the lights on. We don’t have infinite retries.

Once I post this, I’m going to dive right into trying the model, and I’m curious what you find too.

Listen on Apple Podcasts, Spotify, YouTube, and wherever you get your podcasts. For other Interconnects interviews, go here.

Guests

Lucas Atkins (X, LinkedIn): CTO; leads pretraining/architecture, wrote the Trinity Manifesto.

Mark McQuade (X, LinkedIn): Founder/CEO; previously at Hugging Face (monetization), Roboflow. Focused on shipping enterprise-grade open-weight models + tooling.

Varun Singh (LinkedIn): pretraining lead.

Most of this interview is conducted with Lucas, but Mark and Varun make great additions at the right times.

Links

Core:

* Trinity Large (400B total, 13B active) collection, blog post.
Instruct model today, reasoning models soon.
* Trinity Mini, 26B total 3B active (base, including releasing pre-anneal checkpoint)
* Trinity Nano Preview, 6B total 1B active (base)
* Open Source Catalog: https://www.arcee.ai/open-source-catalog
* API Docs and Playground (demo)
* Socials: GitHub, Hugging Face, X, LinkedIn, YouTube

Trinity Models:

* Trinity models page: https://www.arcee.ai/trinity
* The Trinity Manifesto (I recommend you read it): https://www.arcee.ai/blog/the-trinity-manifesto
* Trinity HF collection (Trinity Mini & Trinity Nano Preview)

Older models:

* AFM-4.5B (and base model): their first open, pretrained in-house model (blog post).
* Five open-weights models (blog): three production models previously exclusive to their SaaS platform plus two research models, released as they shifted focus to AFM: Arcee-SuperNova-v1, Virtuoso-Large, Caller, GLM-4-32B-Base-32K, Homunculus

Open source tools:

* MergeKit: model merging toolkit (LGPL license return)
* DistillKit: knowledge distillation library
* EvolKit: synthetic data generation via evolutionary methods

Related:

* Datology case study w/ Arcee

Chapters

* 00:00:00 Intro: Arcee AI, Trinity Models & Trinity Large
* 00:08:26 Transitioning a Company to Pre-training
* 00:13:00 Technical Decisions: Muon and MoE
* 00:18:41 Scaling and MoE Training Pain
* 00:23:14 Post-training and RL Strategies
* 00:28:09 Team Structure and Data Scaling
* 00:31:31 The Trinity Manifesto: US Open Weights
* 00:42:31 Specialized Models and Distillation
* 00:47:12 Infrastructure and Hosting 400B
* 00:50:53 Open Source as a Business Moat
* 00:56:31 Predictions: Best Model in 2026
* 01:02:29 Lightning Round & Conclusions

Transcript

Transcript generated with ElevenLabs Scribe v2 and cleaned with Claude Code with Opus 4.5.

00:00:06 Nathan Lambert: I’m here with the Arcee AI team.
I personally have become a bit of a fan of Arcee, ‘cause I think what they’re doing in trying to build a company around building open models is a valiant and very reasonable way to do this, ‘cause nobody really has a good business plan for open models, and you just gotta try to figure it out, and you gotta build better models over time. And like open-source software, building in public, I think, is the best way to do this. So this kind of gives you the wheels to get the, um... You get to hit the ground running on whatever you’re doing. And this week, they’re launching their biggest model to date, which I’m very excited to see more kind of large-scale MoE open models. I think we’ve seen, I don’t know, at least ten of these from different providers from China last year, and it’s obviously a thing that’s gonna be international, and a lot of people building models, and the US kind of, for whatever reason, has fewer people building, um, open models here. And I think that wherever people are building models, they can stand on the quality of the work. But whatever. I’ll stop rambling. I’ve got Lucas, Mark, um, Varun on the, on the phone here. I’ve known some of them, and I consider us friends. We’re gonna kind of talk through this model, talk through building open models in the US, so thanks for hopping on the pod.

00:01:16 Mark McQuade: Thanks for having us.

00:01:18 Lucas Atkins: Yeah, yeah. Thanks for having us. Excited.

00:01:20 Varun Singh: Nice to be here.

00:01:20 Nathan Lambert: What- what should people know about this Trinity Large? What’s the actual name of this model?
Like, how stoked are you?

00:01:29 Lucas Atkins: So to- yeah.

00:01:29 Nathan Lambert: Like, are you, like, finally made it?

00:01:32 Lucas Atkins: Uh, you know, we’re recording this a little bit before release, so it’s still like, you know, getting everything buttoned up, and inference going at that size is always a challenge, but we’re-- This has been, like, a six-month sprint since we released our first dense model, which is 4.5B, uh, in, in July of last year, 2025. So, um, it’s always been in service of releasing large. I- it’s a 400B, um, thirteen billion active sparse MoE, and, uh, yeah, we’re, we’re super excited. This has just been the entire thing the company’s focused on the last six months, so really nice to have kind of the fruits of that, uh, start to, start to be used by the people that you’re building it for.

00:02:16 Nathan Lambert: Yeah, I would say, like, the realistic question: do you think this is landing in the ballpark of the models in the last six months? Like, that has to be what you shop for, is there’s a high bar- ... of open models out there and, like, on what you’re targeting. Do you feel like these hit these, and somebody that’s familiar, or like MiniMax is, like, two thirty total, something less. I, I don’t know what it is. It’s like ten to twenty B active, probably. Um, you have DeepSeeks in the six hundred range, and then you have Kimi at the one trillion range. So this is still, like, actually on the smaller side of some of the big MoEs- ... that people know, which is, like, freaking crazy, especially you said 13B active. It’s, like- ... very high on the sparsity side. So I don’t actually know how you think about comparing it among those. I was realizing that MiniMax is smaller, doing some data analysis.
So I think that it’s like, actually, the comparison might be a little bit too forced, where you just have to make something that is good and figure out if people use it.

00:03:06 Lucas Atkins: Yeah, I mean, if, if from raw compute, we’re, we’re roughly in the middle of MiniMax and then GLM 4.5, as far as, like, size. Right, GLM’s, like, three eighty, I believe, and, and thirty-four active. Um, so it-- you know, we go a little bit higher on the total, but we, we cut the, uh, the active in half. Um, it was definitely tricky when we decided we wanted to do this. Again, it was July when... It, it was July when we released, uh, the dense model, and then we immediately knew we wanted to kind of go, go for a really big one, and the, the tricky thing with that is knowing that it’s gonna take six months. You, you can’t really be tr-- you can’t be building the model to be competitive when you started designing it, because, you know, that, obviously, a lot happens in this industry in six months. So, um, when we threw out pre-training and, and a lot of our targets were the GLM 4.5 base model, um, because 4.6 and 4.7 have been, you know, post-training on top of that. Um, and, like, in performance-wise, it’s well within where we want it to be. Um, it’s gonna be... Technically, we’re calling it Trinity Large Preview because we just have a whole month of extra RL that we want to do. Um- But-

00:04:29 Nathan Lambert: I’ve been, I’ve been there.

00:04:31 Lucas Atkins: Yeah, yeah. But i- you know, we’re, we’re in the, um, you know, mid-eighties on AIME 2025, uh,

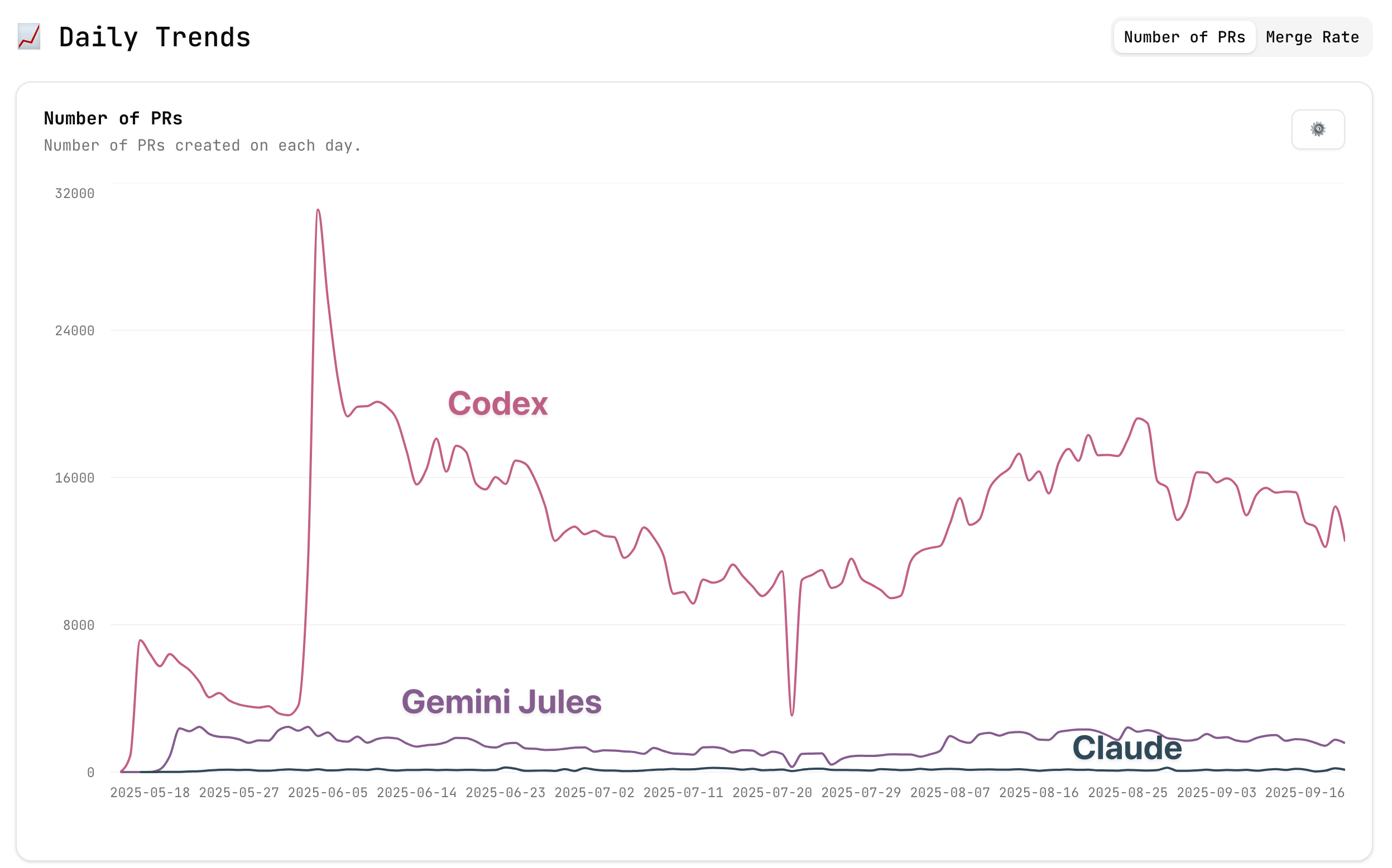

Two weeks ago, I wrote a review of how Claude Code is taking the AI world by storm, saying that “software engineering is going to look very different by the end of 2026.” That article captured the power of Claude as a tool and a product, and I still stand by it, but it undersold the changes that are coming in how we use these products in careers that interface with software.

The more personal angle was how “I’d rather do my work if it fits the Claude form factor, and soon I’ll modify my approaches so that Claude will be able to help.” Since writing that, I’m stuck with a growing sense that taking my approach to work from the last few years and applying it to working with agents is fundamentally wrong. Today’s habits in the era of agents would limit the uplift I get by micromanaging them too much, tiring myself out, and setting the agents on tasks that are too small. What would be better is more open-ended, more ambitious, more asynchronous. I don’t yet know what to prescribe myself, but I know the direction to go, and I know that searching is my job. It seems like the direction will involve working less and spending more time cultivating peace, so the brain can do its best directing and the agents can do most of the hard work.

Since trying Claude Code with Opus 4.5, my work life has shifted closer to trying to adapt to a new way of working with agents. This new style of work feels like a larger shift than the era of learning to work with chat-based AI assistants. ChatGPT let me instantly get relevant information or a potential solution to the problems I was already working on. Claude Code has me considering what I should work on now that I know I can have AI independently solve or implement many sub-components. Every engineer needs to learn how to design systems. Every researcher needs to learn how to run a lab. Agents push the humans up the org chart.

I feel like I have an advantage by being early to this wave, but no longer feel like just working hard will be a lasting edge.
When I can have multiple agents working productively in parallel on my projects, my role is shifting more to pointing the army rather than using the power-tool. Pointing the agents more effectively is far more useful than me spending a few more hours grinding on a problem.

My default workflow now is GPT 5 Pro for planning, Claude Code with Opus 4.5 for implementation. When stuck, I often have Claude Code pass information back to GPT 5 Pro, with a very detailed prompt, for a deep search. Codex with GPT 5.2 on xhigh thinking effort alone feels very capable, more meticulous than Claude even, but I haven’t yet figured out how to get the best out of it. GPT Pro itself feels like a strong agent trapped in the wrong UX: it needs to be able to think longer and have a place to work on research tasks.

It seems like all of my friends (including the nominally “non-technical” ones) have accepted that Claude can rapidly build incredible, bespoke software for you. Claude updated one of my old research projects to uv so it’s easier to maintain, made a verification bot for my Discord, crafted numerous figures for my RLHF book, feels close to landing a substantial feature in our RL research codebase, and did countless other tasks that would’ve taken me days. It’s the thing du jour: tell your friends and family what trinket you built with Claude. It undersells what’s coming.

I’ve taken to leaving Claude Code instances running on my DGX Spark trying to implement new features in our RL codebase when I’m at dinner or work. They make mistakes, they catch most of their own mistakes, and they’re fairly slow too, but they’re capable. I can’t wait to go home and check on what my Claudes were up to.

Interconnects is a reader-supported publication. Consider becoming a subscriber.

The feeling that I can’t shake is a deep urgency to move my agents from working on toy software to doing meaningful long-term tasks.
We know Claude can do hours, days, or weeks of fun work for us, but how do we stack these bricks into coherent long-term projects? This is the crucial skill for the next era of work.

There are no hints or guides on working with agents at the frontier; the only way is to play with them. Instead of using them for cleanup, give them one of your hardest tasks and see what they get stuck on, see what you can use them for.

Software is becoming free; good decision making in research, design, and product has never been so valuable.

Being good at using AI today is a better moat than working hard.

Here is a collection of pieces that I feel suitably grapple with the coming wave or detail real practices for using agents. It’s rare that so many of the thinkers in the AI space that I respect are all fixated on a single new tool, a transition period, and a feeling of immense change:

* Import AI 441: My agents are working. Are yours? This helped motivate me to write this and focus on how important of a moment this is.
* Steve Newman on Hyperproductivity with AI coding agents, importantly written before Claude Opus 4.5, which was a major step change.
* Tim Dettmers on working with agents: Use Agents or Be Left Behind?
* Steve Yegge on Latent Space on vibe coding (and how you’ll be left behind if you don’t understand how to do it).
* Dean W. Ball: Among the Agents, on why coding agents aren’t just for programmers.

This is a public episode. If you'd like to discuss this with other subscribers or get access to bonus episodes, visit www.interconnects.ai/subscribe

I’ll start by explaining my current AI stack and how it’s changed in recent months. For chat, I’m using a mix of:

* GPT 5.2 Thinking / Pro: My most frequent AI use is getting information. This is often a detail about a paper I’m remembering, a method I’m verifying for my RLHF Book, or some other niche fact. I know GPT 5.2 can find it if it exists, and I use Thinking for queries that I think are easier and Pro when I want to make sure the answer is right. GPT Pro in particular has been the indisputable king for research for quite some time; Simon Willison’s coining of it as his “research goblin” still feels right.

  I never use GPT 5 without thinking or other OpenAI chat models. Maybe I need to invest more in custom instructions, but the non-thinking models always come across a bit sloppy relative to the competition out there and I quickly churn. I’ve heard gossip that the Thinking and non-Thinking GPT models are even developed by different teams, so it would make sense that they can end up being meaningfully different.

  I also rarely use Deep Research from any provider, opting for GPT 5.2 Pro and more specific instructions. In the first half of 2025 I almost exclusively used ChatGPT’s thinking models, but Anthropic and Google have done good work to win back some of my attention.

* Claude 4.5 Opus: Chatting with Claude is where I go for basic code questions, visualizing simple data, and getting richer feedback on my work or decisions. Opus’s tone is particularly refreshing when trying to push the models a bit (in a way that GPT 4.5 used to provide for me, as I was a power user of that model in H1 2025).
Claude Opus 4.5 isn’t particularly fast relative to a lot of models out there, but when you’re used to using the GPT Thinking models like me, it feels way faster (even with extended thinking always on, as I do) and sufficient for this type of work.

* Gemini 3 Pro: Gemini is for everything else: explaining concepts I know are well covered in the training data (and minor hallucinations are okay, e.g. my former Google rabbit holes), multimodality, and sometimes very long-context capabilities (but GPT 5.2 Thinking took a big step here, so it’s a bit closer). I still open and use the Gemini app regularly, but it’s a bit less locked-in than the other two.

  Relative to ChatGPT, sometimes I feel like the search mode of Gemini is a bit off. It could be a product decision with how the information is presented to the user, but GPT’s thorough, repeated search over multiple sources instills a confidence I don’t get from Gemini for recent or research information.

* Grok 4: I use Grok ~monthly to try and find some piece of AI news or alpha I recall from browsing X. Grok is likely underrated in terms of its intelligence (Grok 4 in particular was an impressive technical release), but it hasn’t had a sticky product or differentiating features for me.

For images I’m using a mix of mostly Nano Banana Pro and sometimes GPT Image 1.5 when Gemini can’t quite get it.

For coding, I’m primarily using Claude Opus 4.5 in Claude Code, but still sometimes find myself needing OpenAI’s Codex or even multi-LLM setups like Amp. Over the holiday break, Claude Opus helped me update all the plots for The ATOM Project (which included substantial processing of our raw data from scraping HuggingFace), perform substantive edits for the RLHF Book (where I felt it was quite a good editor when provided with detailed instructions on what it should do), and handle other side projects and life organization tasks.
I recently published a piece explaining my current obsession with Claude Opus 4.5; I recommend you read it if you haven’t had the chance.

A summary of this is that I pay for the best models and greatly value the marginal intelligence over speed, particularly because, for a lot of the tasks I do, I find that the models are just starting to be able to do them well. As these capabilities diffuse in 2026, speed will become more of a determining factor in model selection.

Peter Wildeford had a post on X with a nice graphic that reflected a very similar usage pattern.

Across all of these categories, it doesn’t feel like I could get away with just using one of these models without taking a substantial haircut in capabilities. This is a very strong endorsement for the notion of AI being jagged (i.e. with very strong capabilities spread out unevenly) while also being a bit of an unusual way to need to use a product. Each model is jagged in its own way. Through 2023, 2024, and the earlier days of modern AI, it quite often felt like there was always just one winning model and keeping up was easier. Today, it takes a lot of work and fiddling to make sure you’re not missing out on capabilities.

The working pattern I’ve formed that most reinforces this multi-model era is how often my problem with an AI model is solved by passing the same query to a peer model. Models get stuck, some can’t find bugs, some coding agents keep getting stuck on some weird, suboptimal approach, and so on. In these cases, it feels quite common to boot up a peer model or agent and get it to unblock the project.

If this multi-model approach or agent-switching worked only occasionally, that would be what I’d expect, but because it works regularly, it means that the models are actually all quite close to being able to solve the tasks I’m throwing at them; they’re just not quite there.
The intuition here is that if we view each task as having a probability of success, and that probability were low for each model, switching would almost always fail. For switching to regularly solve the task, each model must have a fairly high probability of success.

For the time being, it seems like tasks at the frontier of AI capabilities will always keep this model-switching meta, but it’s a moving suite of capabilities. The things I need to switch on now will soon be solved by all of the next generation of models.

I’m very happy with the value I’m getting out of my hundreds of dollars of AI subscriptions, and you should likely consider doing the same if you work in a domain that sounds similar to mine.

On the opposite side of the frontier models pushing to make current cutting-edge tasks 100% reliable are open models pushing to undercut the price of frontier models. The coding plans on open models tend to cost 10X (or more) less than the frontier lab plans. It’s a boring take, but for the next few years I expect this gap to largely remain steady, where a lot of people get an insane value out of the cutting edge of models. It’ll take longer for the open model undercut to hit the frontier labs, even though from basic principles it looks like a precarious position for them to be in, in terms of costs of R&D and deployment. Open models haven’t been remotely close to Claude 4.5 Opus or GPT 5.2 Thinking in my use.

The other factor is that 2025 gave us Deep Research agents, code/CLI agents, and search (and Pro) tool-use models, and there will almost certainly be new form factors released in 2026 that we end up using almost every day. Historically, closed labs have been better at shipping new products into the world, but with better open models this should be more diffused, as good product capabilities are very diffuse across the tech ecosystem.
To capitalize on this, you need to invest time (and money) trying all the cutting-edge AI tools you can get your hands on. Don’t be loyal to one provider.
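The model-switching intuition from earlier in this piece can be made concrete with a toy calculation. This is a minimal sketch under strong assumptions that are not from the post: every model has the same per-task success probability `p`, and attempts are independent (in practice, failures across models are likely correlated). The function name is hypothetical.

```python
def p_any_success(p_single, n_models):
    """Probability that at least one of n independent attempts succeeds."""
    return 1 - (1 - p_single) ** n_models


# Under independence, the chance that a second model rescues a task the first
# one failed is simply p, so frequent rescues imply p is already fairly high.
for p in (0.05, 0.3, 0.7):
    rescue = p  # P(second model succeeds | first failed), given independence
    print(f"p={p:.2f}  rescue rate={rescue:.2f}  "
          f"solved with 2 models={p_any_success(p, 2):.3f}  "
          f"solved with 3 models={p_any_success(p, 3):.3f}")
```

With a low `p`, adding a second or third model barely moves the overall solve rate; with a high `p`, switching almost always finishes the job, which matches the pattern described above.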

There is an incredible amount of hype for Claude Code with Opus 4.5 across the web right now, which I for better or worse entirely agree with. Having used coding agents extensively for the past 6-9 months, where it felt like sometimes OpenAI’s Codex was the best and sometimes Claude, there was some meaningful jump over the last few weeks. The jump is well captured by this post, which called it the move of “software creation from an artisanal, craftsman activity to a true industrial process.” Translation: Software is becoming free, and human design, specification, and entrepreneurship are the only limiting factors.

What is odd is that this latest Opus model was released on November 24, 2025, and the performance jump in Claude Code seemed to come at least weeks after its integration. I wouldn’t be surprised if a small product change unlocked massive real (or perceived) gains in performance.

The joy and excitement I feel when using this latest model in Claude Code is so simple that it necessitates writing about it. It feels right in line with trying ChatGPT for the first time or realizing o3 could find any information I was looking for, but in an entirely new direction. This time, it is the commodification of building. I type and outputs are constructed directly. Claude’s perfect mix of light sycophancy, extreme productivity, and an elegantly crafted application has me coming up with things to do with Claude. I’d rather do my work if it fits the Claude form factor, and soon I’ll modify my approaches so that Claude will be able to help. In a near but obvious future I’ll just manage my Claudes from my phone at the coffee shop.

While Claude is an excellent model, maybe the best, its product is where the magic happens for building with AI that instills confidence.
We could see the interfaces the models are used in being so important to performance, such that Anthropic’s approach with Claude feels like Apple’s integration of hardware, software, and everything in between. This sort of magical experience is not one I expect to be only buildable by Anthropic — they’re just the first to get there. The fact that Claude makes people want to go back to it is going to create new ways of working with these models and software engineering is going to look very different by the end of 2026. Right now Claude (and other models) can replicate the most-used software fairly easily. We’re in a weird spot where I’d guess they can add features to fairly complex applications like Slack, but there are a lot of hoops to jump through in landing the feature (including very understandable code quality standards within production code-bases), so the models are way easier to use when building from scratch than in production code-bases. This dynamic amplifies the transition and power shift of software, where countless people who have never fully built something with code before can get more value out of it. It will rebalance the software and tech industry to favor small organizations and startups like Interconnects that have flexibility and can build from scratch in new repositories designed for AI agents. It’s an era to be first defined by bespoke software rather than a handful of mega-products used across the world. 
The list of what’s already commoditized is growing in scope and complexity fast (website frontends, mini applications on any platform, data analysis tools), all without having to know how to write code.

I expect mental barriers people have about Claude’s ability to handle complex codebases to come crashing down throughout the year, as more and more Claude-pilled engineers just tell their friends “skill issue.” With these coding agents all coming out last year, the labs are still learning how to best train models to be well-expressed in the form factor. It’ll be a defining story of 2026 as the commodification of software expands outside of the bubble of people deeply obsessed with AI. There are things that Claude can’t do well and will take longer to solve, but these are more like corner cases and for most people immense value can be built around these blockers.

The other part that many people will miss is that Claude Code doesn’t need to be restricted to just software development; it can control your entire computer. People are starting to use it for managing their email, calendars, decision making, referencing their notes, and everything in between. The crucial aspect is that Claude is designed around the command line interface (CLI), which is an open door into the digital world. The DGX Spark on my desk can be a mini AI research and development station managed by Claude.

This complete interface managing my entire internet life is the beginning of current AI models feeling like they’re continually learning. Whenever Claude makes a mistake or does something that doesn’t match your taste, dump a reminder into CLAUDE.md; it’s as simple as that. To quote Doug O’Laughlin, my brother in arms of Claude fandom, Claude with a 100X context window and 100X the speed will be AGI. By the end of 2026 we definitely could get the first 10X of both with the massive buildout of compute starting to become available.

Happy building.
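The "dump a reminder into CLAUDE.md" habit mentioned above can be as small as appending a line to a text file. The helper below is a hypothetical sketch, not part of Claude Code: the function name, the bullet format, and the date suffix are all assumptions chosen for illustration, since CLAUDE.md is free-form text.

```python
from datetime import date
from pathlib import Path


def add_reminder(note, path="CLAUDE.md"):
    """Append a one-line reminder to a CLAUDE.md-style instructions file.

    The "- note (added YYYY-MM-DD)" layout is an assumed convention for this
    sketch, not a required format.
    """
    file = Path(path)
    existing = file.read_text(encoding="utf-8") if file.exists() else ""
    # Make sure the new entry starts on its own line.
    separator = "" if existing == "" or existing.endswith("\n") else "\n"
    line = f"- {note.strip()} (added {date.today().isoformat()})\n"
    file.write_text(existing + separator + line, encoding="utf-8")
    return line


# Example (writes to ./CLAUDE.md when run):
# add_reminder("Prefer uv over pip when adding dependencies.")
```

Keeping each reminder to a single dated line makes it easy to prune stale instructions later.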

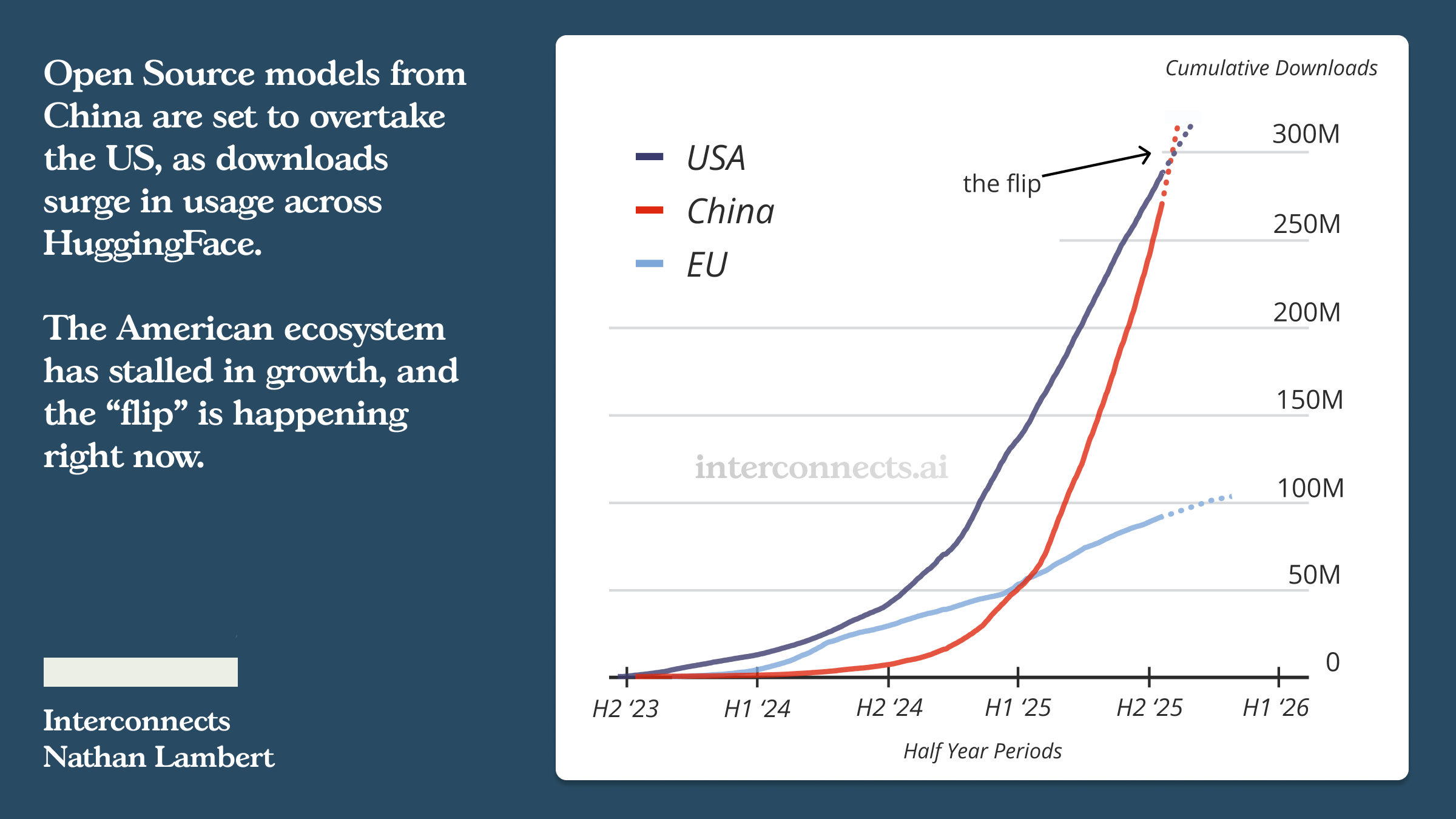

Nathan sits down with Florian, our open model analyst, to get spicy in debates over which labs won and lost momentum in open models in 2025. Reflection 70B, Huawei repackaging someone else's model as their own, the fall of Llama: no drama is left unturned. We also dig into the nuances that we didn't get to in our post, predict GPT-OSS 2, the American v. China balance at the end of 2026, and many other fun topics.

Enjoy & let us know if we should do more of this.

The full year-in-review post includes our tier list, and the episode is also available on YouTube.

It’s finally here! The public (and most complete) version of my talk covering every stage of the process to build Olmo 3 Think (slides are available). I’ve been giving this, improving it, and getting great feedback at other venues such as The Conference on Language Modeling (COLM) & The PyTorch Conference.

This involves changes and new considerations at every angle of the stack, from pretraining to evaluation and, of course, post-training.

Most of the talk focuses on reinforcement learning infrastructure and evaluating reasoning models, with quick comments on every training stage. I hope you enjoy it, and let us know what to improve in the future!

Chapters

* 00:00:00 Introduction
* 00:06:30 Pretraining Architecture
* 00:09:25 Midtraining Data
* 00:11:08 Long-context Necessity
* 00:13:04 Building SFT Data
* 00:20:05 Reasoning DPO Surprises
* 00:24:47 Scaling RL
* 00:41:05 Evaluation Overview
* 00:48:50 Evaluation Reflections
* 01:00:25 Conclusions

The talk is also available on YouTube.

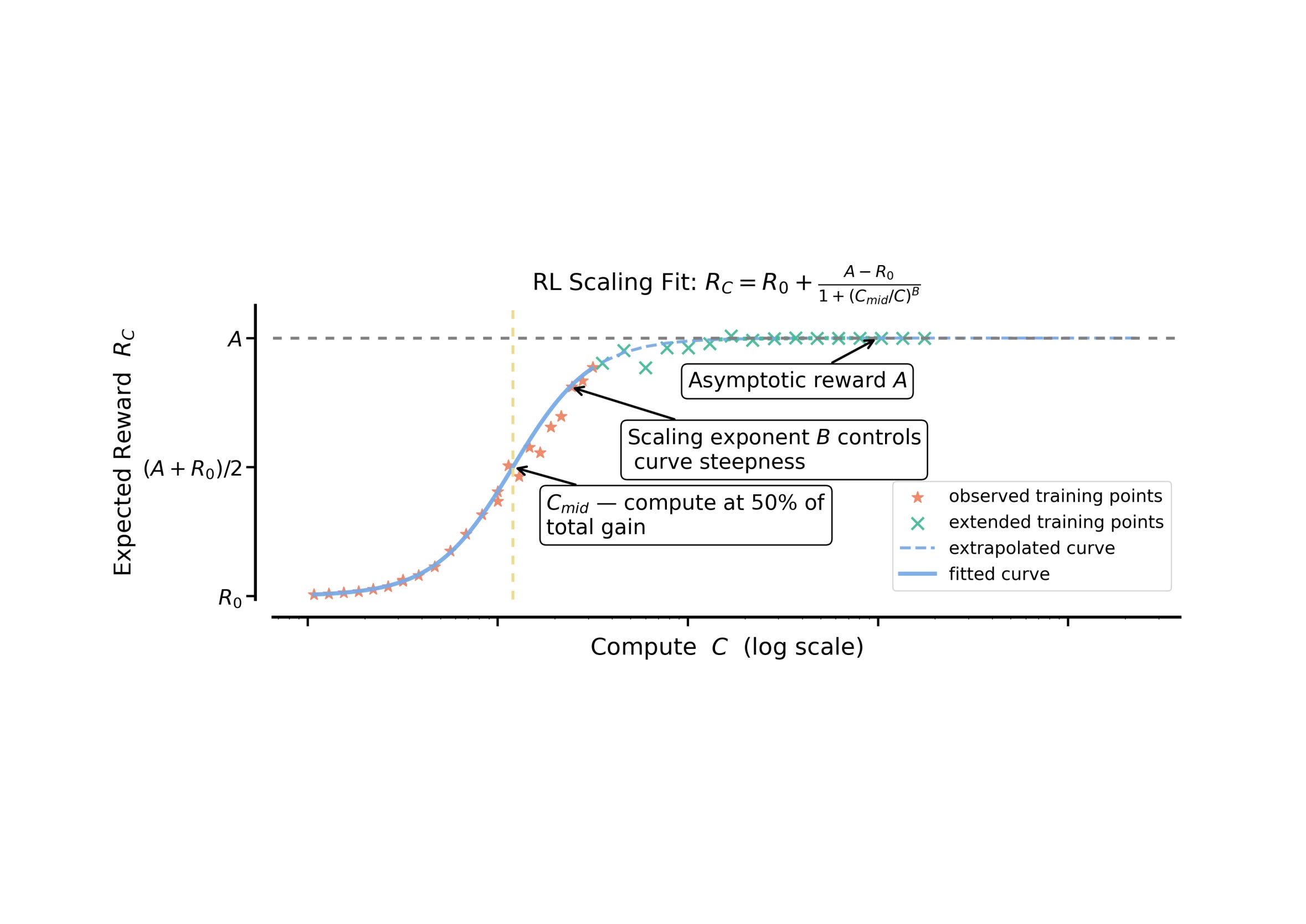

We present Olmo 3, our next family of fully open, leading language models. This family of 7B and 32B models represents:

* The best 32B base model.
* The best 7B Western-origin thinking & instruct models.
* The first 32B (or larger) fully open reasoning model.

This is a big milestone for Ai2 and the Olmo project. These aren’t huge models (more on that later), but it’s crucial for the viability of fully open-source models that they are competitive on performance – not just replications of models that came out 6 to 12 months ago. As always, all of our models come with full training data, code, intermediate checkpoints, training logs, and a detailed technical report. All are available today, with some more additions coming before the end of the year.

As with OLMo 2 32B at its release, Olmo 3 32B is the best open-source language model ever released. It’s an awesome privilege to get to provide these models to the broader community researching and understanding what is happening in AI today.

Paper: https://allenai.org/papers/olmo3
Artifacts: https://huggingface.co/collections/allenai/olmo-3
Demo: https://playground.allenai.org/
Blog: https://allenai.org/blog/olmo3

Base models – a strong foundation

Pretraining’s demise is now regularly overstated. 2025 has marked a year where the entire industry rebuilt their training stack to focus on reasoning and agentic tasks, but some established base model sizes haven’t seen a new leading model since Qwen 2.5 in 2024. The Olmo 3 32B base model could be our most impactful artifact here, as Qwen 3 did not release their 32B base model (likely for competitive reasons). We show that our 7B recipe competes with Qwen 3, and the 32B size enables a starting point for strong reasoning models or specialized agents.
Our base model’s performance is in the same ballpark as Qwen 2.5, surpassing the likes of Stanford’s Marin and Gemma 3, but with pretraining data and code available, it should be more accessible to the community to learn how to finetune it (and be confident in our results).We’re excited to see the community take Olmo 3 32B Base in many directions. 32B is a loved size for easy deployment on single 80GB+ memory GPUs and even on many laptops, like the MacBook I’m using to write this on.A model flow – the lifecycle of creating a modelWith these strong base models, we’ve created a variety of post-training checkpoints to showcase the many ways post-training can be done to suit different needs. We’re calling this a “Model Flow.” For post-training, we’re releasing Instruct versions – short, snappy, intelligent, and useful especially for synthetic data en masse (e.g. recent work by Datology on OLMo 2 Instruct), Think versions – thoughtful reasoners with the performance you expect from a leading thinking model on math, code, etc. and RL Zero versions – controlled experiments for researchers understanding how to build post-training recipes that start with large-scale RL on the base model.The first two post-training recipes are distilled from a variety of leading, open and closed, language models. At the 32B and smaller scale, direct distillation with further preference finetuning and reinforcement learning with verifiable rewards (RLVR) is becoming an accessible and highly capable pipeline. Our post-training recipe follows our recent models: 1) create an excellent SFT set, 2) use direct preference optimization (DPO) as a highly iterable, cheap, and stable preference learning method despite its critics, and 3) finish up with scaled up RLVR. 
All of these stages confer meaningful improvements on the models’ final performance.

Instruct models – low latency workhorses

Instruct models today are often somewhat forgotten, but the likes of Llama 3.1 Instruct and smaller, concise models are some of the most adopted open models of all time. The instruct models we’re building are a major polishing and evolution of the Tülu 3 pipeline – you’ll see many similar datasets and methods, but with pretty much every datapoint or training code being refreshed. Olmo 3 Instruct should be a clear upgrade on Llama 3.1 8B, representing the best 7B scale model from a Western or American company. As scientists we don’t like to condition the quality of our work based on its geographic origins, but this is a very real consideration to many enterprises looking to open models as a solution for trusted AI deployments with sensitive data.

Building a thinking model

What people have most likely been waiting for are our thinking or reasoning models, both because every company needs to have a reasoning model in 2025, but also to clearly open the black box for the most recent evolution of language models. The Olmo 3 Think models, particularly the 32B, are the flagship models of this release, where we considered what would be best for a reasoning model at every stage of training.

Extensive effort (ask me IRL about more war stories) went into every stage of the post-training of the Think models. We’re impressed by the magnitude of gains that can be achieved in each stage – neither SFT nor RL is all you need at these intermediate model scales.

First we built an extensive reasoning dataset for supervised finetuning (SFT), called Dolci-Think-SFT, building on very impactful open projects like OpenThoughts3, Nvidia’s Nemotron Post-training, Prime Intellect’s SYNTHETIC-2, and many more open prompt sources we pulled forward from Tülu 3 / OLMo 2.
Datasets like this are often some of our most impactful contributions (see the Tülu 3 dataset as an example in Thinking Machine’s Tinker :D – please add Dolci-Think-SFT too, and Olmo 3 while you’re at it, the architecture is very similar to Qwen which you have).For DPO with reasoning, we converged on a very similar method as HuggingFace’s SmolLM 3 with Qwen3 32B as the chosen model and Qwen3 0.6B as the rejected. Our intuition is that the delta between the chosen and rejected samples is what the model learns from, rather than the overall quality of the chosen answer alone. These two models provide a very consistent delta, which provides way stronger gains than expected. Same goes for the Instruct model. It is likely that DPO is helping the model converge on more stable reasoning strategies and softening the post-SFT model, as seen by large gains even on frontier evaluations such as AIME.Our DPO approach was an expansion of Geng, Scott, et al. “The delta learning hypothesis: Preference tuning on weak data can yield strong gains.” arXiv preprint arXiv:2507.06187 (2025). Many early open thinking models that were also distilled from larger, open-weight thinking models likely left a meaningful amount of performance on the table by not including this training stage.Finally, we turn to the RL stage. Most of the effort here went into building effective infrastructure to be able to run stable experiments with the long-generations of larger language models. This was an incredible team effort to be a small part of, and reflects work ongoing at many labs right now. Most of the details are in the paper, but our details are a mixture of ideas that have been shown already like ServiceNow’s PipelineRL or algorithmic innovations like DAPO and Dr. GRPO. 
We have some new tricks too!Some of the exciting contributions of our RL experiments are 1) what we call “active refilling” which is a way of keeping the generations from the learner nodes constantly flowing until there’s a full batch of completions with nonzero gradients (from equal advantages) – a major advantage of our asynchronous RL approach; and 2) cleaning, documenting, decontaminating, mixing, and proving out the large swaths of work done by the community over the last months in open RLVR research.The result is an excellent model that we’re very proud of. It has very strong reasoning benchmarks (AIME, GPQA, etc.) while also being stable, quirky, and fun in chat with excellent instruction following. The 32B range is largely devoid of non-Qwen competition. The scores for both of our Thinkers get within 1-2 points overall with their respective Qwen3 8/32B models – we’re proud of this!A very strong 7B scale, Western thinking model is Nvidia’s NVIDIA-Nemotron-Nano-9B-v2 hybrid model. It came out months ago and is worth a shot if you haven’t tried it. I personally suspect it may be due to the hybrid architecture making subtle implementation bugs in popular libraries, but who knows.All in, the Olmo 3 Think recipe gives us a lot of excitement for new things to try in 2026.RL ZeroDeepSeek R1 showed us a way to new post-training recipes for frontier models, starting with RL on the base model rather than a big SFT stage (yes, I know about cold-start SFT and so on, but that’s an implementation detail). We used RL on base models as a core feedback cycle when developing the model, such as during intermediate midtraining data mixing. 
This is viewed now as a fundamental, largely innate, capability of the base-model.To facilitate further research on RL Zero, we released 4 datasets and series of checkpoints, showing per-domain RL Zero performance on our 7B model for data mixes that focus on math, code, instruction following, and all of them together.In particular, we’re excited about the future of RL Zero research on Olmo 3 precisely because everything is open. Researchers can study the interaction between the reasoning traces we include at midtraining and the downstream model behavior (qualitative and quantitative).This helps answer questions that have plagued RLVR results on Qwen models, hinting at forms of data contamination particularly on math and reasoning benchmarks (see Shao, Rulin, et al. “Spurious rewards: Rethinking training signals in rlvr.” arXiv preprint arXiv:2506.10947 (2025). or Wu, Mingqi, et al. “Reasoning or memorization? unreliable results of reinforcement learning due to data contamination.” arXiv preprint arXiv:2507.10532 (2025).)What’s nextThis is the biggest project we’ve ever taken on at Ai2, with 60+ authors and numerous other support staff.In building and observing “thinking”
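The "active refilling" idea described above for the RL stage can be illustrated with a small, synchronous sketch. This is not the Olmo 3 implementation, which is asynchronous and spread across many nodes. It only shows the filtering rule: a group of completions for one prompt whose rewards are all equal has zero advantage everywhere and so contributes no gradient, and sampling continues until the batch is full of groups that do carry signal. The names `Group`, `fill_batch`, and `sample_group`, and the mean-baseline advantage, are assumptions made for illustration.

```python
from typing import Callable, List, Tuple

# Hypothetical container: a prompt and the scalar rewards of its sampled completions.
Group = Tuple[str, List[float]]


def group_advantages(rewards: List[float]) -> List[float]:
    """Advantage of each completion relative to its group's mean reward (assumed baseline)."""
    mean = sum(rewards) / len(rewards)
    return [r - mean for r in rewards]


def has_learning_signal(rewards: List[float]) -> bool:
    """Equal rewards give all-zero advantages, hence a zero gradient for the group."""
    return max(rewards) != min(rewards)


def fill_batch(
    sample_group: Callable[[], Group],
    batch_size: int,
    max_draws: int = 10_000,
) -> List[Group]:
    """Keep drawing groups until batch_size of them carry a nonzero gradient."""
    batch: List[Group] = []
    draws = 0
    while len(batch) < batch_size and draws < max_draws:
        prompt, rewards = sample_group()
        draws += 1
        if has_learning_signal(rewards):
            batch.append((prompt, rewards))
    return batch
```

In an asynchronous setup the same check would presumably run on completions as they stream in, so the learner never waits on a batch that is partly empty of signal.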

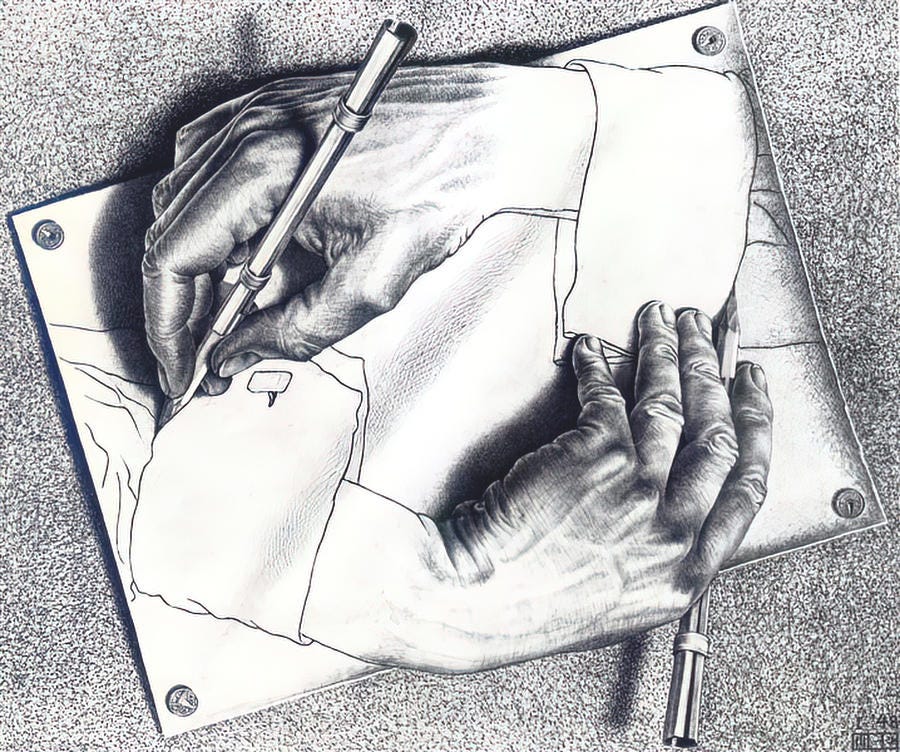

First, on the topic of writing, the polished, and more importantly printed, version of my RLHF Book is available for pre-order. It’s 50% off for a limited time, you can pre-order it here! Like a lot of writing, I’ve been sitting on this piece for many months thinking it’s not contributing enough, but the topic keeps coming up — most recently via Jasmine Sun — and people seem to like it, so I hope you do too!

It’s no longer a new experience to be struck by just how bad AI models are at writing good prose. They can pull out a great sentence every now and then, particularly models like GPT-5 Pro and other large models, but it’s always a quick comment and never many sustained successive sentences. More importantly, good AI writing feels like a lucky find rather than the result of the right incantation. After spending a long time working on training these models, I’m fairly convinced that this writing inhibition is a structural limitation to how we train these models today and the markets they’re designed to serve.

If we're making AIs that are soon to be superhuman at most knowledge work, that are trained primarily to predict text tokens, why is their ability to create high quality text tokens still so low? Why can’t we make the general ChatGPT experience so much more refined and useful for writers while we’re unlocking entirely new ways of working with them every few months — most recently the CLI agents like Claude Code? This gap is one of my favorite discussions of AI because it’s really about what the definition of good writing is in itself.

Where language models can generate beautiful images from random noise, they can't reliably generate a good few sentences from a couple bullet points of information. What is different about the art form of writing than what AI can already capture?

I'm coming to believe that we could train a language model to be a great writer, but it goes against so many of the existing training processes.
To list a few problems at different stages of the stack of varying severity in terms of their handicapping of writing:* Style isn’t a leading training objective. Language models all go through preference training where many aspects from helpfulness, clarity, honesty, etc. are balanced against each other. Many rewards make any one reward, such as style, have a harder time standing out. Style and writing quality is also far harder to measure, so it is less likely to be optimized vis-a-vis other signals (such as sycophancy, which was easier to capture).* Aggregate preferences suppress quirks. Language model providers design models with a few intended personalities, largely due to the benefits of predictability. These providers are optimizing many metrics for "the average user." Many users will disagree on what their preference for “good writing” is.* Good writing’s inherent friction. Good writing often takes much longer to process, even when you’re interested in it. Most users of ChatGPT just want to parse the information quickly. Doubly, the people creating the training data for these models are often paid per instance, so an answer with more complexity and richness would often be suppressed by subtle financial biases to move on.* Writing well is orthogonal to training biases. Throughout many stages of the post-training process, modern RLHF training exploits subtle signals for sycophancy and length-bias that aren't underlying goals of it. These implicit biases go against the gradient for better writing. Good writing is pretty much never verbose.* Forced neutrality of a language model. Language models are trained to be neutral on a variety of sensitive topics and to not express strong opinions in general. The best writing unabashedly shares a clear opinion. Yes, I’d expect wackier models like Grok to potentially produce better writing, even if I don’t agree with it. 
This leads directly to a conflict with something I value in writing — voice.

All of these create models that are appealing to broad audiences. What we need to create a language model that can write wonderfully is to give it a strong personality, and potentially a strong "sense of self" — if that actually impacts a language model's thinking. The cultivation of voice is one of my biggest recommendations to people trying to get better at writing, only after telling them to find something they want to learn about. Voice is core to how I describe my writing process.

When I think about how I write, the best writing relies on voice. Voice is where you process information into a unique representation — this is often what makes information compelling.

Many people have posited that base models make great writers, such as when I discussed poetry with Andrew Carr on his Interconnects appearance, but this is because base models haven’t been squashed to the narrower style of post-trained responses. I’ve personally been thinking about this sort of style induced by post-training recently as we prepare for our next Olmo release, and many of us think the models with lower evaluation scores on the likes of AlpacaEval or LMArena actually fit our needs better. The accepted style of chatty models today, whether it’s GPT-5, DeepSeek R1, or a large Qwen model, is a bit cringe for my liking. This style is almost entirely applied during post-training.

Taking a step back, this means base models show us that there can be great writing out of the models, but it’s still far from reliable. Base models aren't robust enough to variations to make great writers — we need some form of the constraints applied in post-training to make models follow Q&A. The next step would be solving the problem of how models aren’t trained with a narrow enough experience. Specific points of view nurture voice.
The target should be a model that can output tokens in any area or request that are clear, compelling, and entertaining. We need to shape these base models with post-training designed for writing, just as the best writers bend facts to create narrative.

Interconnects is a reader-supported publication. Consider becoming a subscriber.

Some model makers care a bit about this. When a new model drops and people rave about its creative writing ability, such as Moonshot AI’s Kimi K2 line of models, I do think the team put careful work into the data or training pipelines. The problem is that no model provider is remotely ready to sacrifice core abilities of the model such as math and coding in pursuit of meaningfully better writing models. There are no market incentives to create this model — all the money in AI is elsewhere, and writing isn’t a particularly lucrative market to disrupt. An example is GPT 4.5, which was by all reports a rather light fine-tune, but one that produced slightly better prose. It was shut down almost immediately after its launch because it was too slow and economically unviable with its large size.

If we follow the voice direction, the model that is likely to be the best writer relative to its overall intelligence was the original revamped Bing (aka Sydney) model that went crazy in front of many users and was rapidly shut down. That model had THOUGHTS it wanted to share. That’s a starting point, but a scary one to untap again. This sort of training goes far beyond a system prompt or a light finetune, and it will need to be a new post-training process from start to end (more than just a light brush of character training).

We need to be bold enough to create models with personality if we want writing to fall out. We need models that speak their views loudly and confidently.
These also will make more interesting intellectual companions, a niche that Claude fills for some people, but I struggle with Claude plenty of times due to its hesitance, hedging, or preferred answer format.For the near future, the writing handicap of large language models is here to stay. Good writing you have to sit in to appreciate, and ChatGPT and the leading AI products are not optimized for this whatsoever. Especially with agentic applications being the next frontier, most of the text written by the models will never even be read by a human. Good writing is legitimately worse for most of the use cases I use AI for. I don’t like the style per se, but having it jump to be a literary masterpiece would actually be worse.I don’t really have a solution to AI’s writing problem, but rather expensive experiments people can try. At some point I expect someone to commission a project to push this to its limits, building a model just for writing. This’ll take some time but is not untenable nor unfathomably expensive — it’ll just be a complete refresh of a modern post-training stack.Even if this project was invested in, I don’t expect the models to be close to the best humans at elegant writing within a few years. Our current batch of models as a starting point are too far from the goal. With longer timelines, it doesn’t feel like writing is a fundamental problem that can’t be solved. This is a public episode. If you'd like to discuss this with other subscribers or get access to bonus episodes, visit www.interconnects.ai/subscribe

This is the first of a handful of interviews I’m doing with teams building the best open language models in the world. In 2025, the open model ecosystem has changed incredibly. It’s more populated, far more dominated by Chinese companies, and growing. DeepSeek R1 shocked the world and now there are a handful of teams in China training exceptional models. InclusionAI — Ant Group’s leading AI lab and the team behind the Ling models — has been one of the Chinese labs from the second half of the year releasing fantastic models at a rapid clip. This interview is primarily with Richard Bian, whose official title is Product & Growth Lead, Ant Ling & InclusionAI (on LinkedIn, X), previously leading AntOSS (Ant Group’s open source software division). Richard spent a substantial portion of his career working in the United States, with time at Square, Microsoft, and an MBA from Berkeley Haas, before returning to China to work at Ant.

Also joining are two leads of the Ant Ling technical team, Chen Liang (Algorithm Engineer), and Ziqi Liu (Research Lead).

This interview focuses on many topics of the open language models, such as:

* Why is the Ant Group — known for the popular fintech app Alipay — investing so much in catching up to the frontier of AI?
* What does it take to rapidly gain the ability to train excellent models?
* What decisions does one make when deciding a modeling strategy? Text-only or multimodal? What size of models?…
* How does the Chinese AI ecosystem prioritize different directions than the West?

And many more topics. Listen on Apple Podcasts, Spotify, YouTube, and wherever you get your podcasts.
For other Interconnects interviews, go here.

Some more references & links:

* InclusionAI’s homepage, highlighting their mission.
* AntLingAGI on X (models, research, etc.), InclusionAI on X (overall initiative), InclusionAI GitHub, or their Discord community.
* Ling 1T was highlighted in “Our Picks” for our last open model roundup in October.
* Another interview with Richard at State of Open Conference 2025.
* Over the last few months, our coverage of the Chinese ecosystem has taken off, such as our initial ranking of 19 open Chinese AI labs (before a lot of the models we discuss below), model roundups, and tracking the trajectory of China’s ecosystem.

An overview of Ant Ling & Inclusion AI

As important context for the interview, we wanted to present an overview of InclusionAI, Ant’s models, and other efforts that emerged onto the scene just in the last 6-9 months. To start — branding.

Here are a few screenshots of InclusionAI’s new website. It starts with fairly standard “open-source AI lab messaging.”

Then I was struck by the very distinct messaging which is surprisingly rare in the intense geopolitical era of AI — saying AI is shared for humanity.

I expect a lot of very useful and practical messaging from Chinese open-source labs. They realize that Western companies likely won’t pay for their services, so having open models is their only open door to meaningful adoption and influence.

Main models (Ling, Ring, & Ming)

The main model series is the Ling series, their reasoning models are called Ring, and their multimodal versions are called Ming. The first public release was Ling Plus, a 293B sparse MoE, in April. They released the paper for their reasoning model in June and have continued to build on their MoE-first approach.

Since then, the pace has picked up significantly. Ling 1.5 came in July.

Ling (and Ring) 2.0 came in September of this year, with a 16B total, 2B active mini model, a 100B total, 6B active flash model, and a big 1T total parameter, 50B active primary model.
This 1T model was accompanied by a substantial tech report on the challenges of scaling RL to frontier scale models. The rapid pace that Chinese companies have built this knowledge (and shared it clearly) is impressive and worth considering what it means for the future.Eval scores obviously aren’t everything, but they’re the first step to building meaningful adoption. Otherwise, you can also check out their linear attention model (paper, similar to Qwen-Next), some intermediate training checkpoints, or multimodal models.Experiments, software, & otherInclusionAI has a lot of projects going in the open source space. Here are some more highlights:* Language diffusion models: MoEs, sizes similar to Ling 2.0 mini and flash (so they likely used those as base). Previous versions exist. * Agent-based models/fine-tunes, Deep Research models, computer-use agentic models.* GroveMoE, MoE arch experiments.* RL infra demonstrations (Interestingly, those are dense models)* AWorld: Training + general framework for agents (RL version, paper)* AReal: RL training suite Interconnects is a reader-supported publication. Consider becoming a subscriber.Chapters* 00:00:00 A frontier lab contender in 8 months* 00:07:51 Defining AGI with metaphor* 00:20:16 How the lab was born* 00:23:30 Pre-training paradigms* 00:40:25 Post training at Inclusion* 00:48:15 The Chinese model landscape* 00:53:59 Gaps in the open source ecosystem today* 00:59:47 Why China is winning the open race* 01:11:12 A metaphor for our moment in LLMsTranscriptA frontier lab contender in 8 monthsNathan Lambert (00:05)Hey everybody. I’m excited to start a bit of a new series when I’m talking to a lot more people who are building open models. Historically, I’ve obviously talked to people I work with, but there’s a lot of news that has happened in 2025 and I’m excited to be with one of the teams, a mix of product, which is Richard Bian and some technical members from the Ant Ling team as well, which is Chen Liang and Ziqi Liu. 
But really this is going to be a podcast where we talk about how you’re all building models, why you do this. It’ll talk about different perspectives between US, China and a lot of us going towards a similar goal. I was connected first with Richard, who’s also talked to other people that helped with Interconnects. So we can start there and go through and just kind of talk about what you do. And we’ll roll through the story of building models and why we do this.Richard Bian (01:07)Hi. Again, thanks so much, Nathan. Thanks so much for having us. My name is Richard Bian. I’m currently leading the product and growth team of Ant Ling, which is part of the Inclusion AI lab of Ant Group. So Ant Group is the parent company of Alipay, which might be a product which many, many more people know about. But the group has been there for quite some time. It used to be a part of Alibaba, but now it’s a separate company since 2020. I actually have a pretty mixed background. Before I joined the Ling team, I’ve been doing Ant open source for four years. In fact, I built Ant open source from a technical strategy, which is basically a one-liner from our current CTO all the way into a full-fledged multifunctional team of eight people in four years. So it has been a pretty rewarding journey. And before that, my last life, I’ve been spending 11 years in the States working as a software engineer with Microsoft and with Square. Again, it was a pretty rewarding past. I returned back to China during COVID to be close with my family. It was a conscious decision. So far so good. It has been a pretty rewarding journey. And I really love how Nathan you name your column as Interconnects and you actually echoed when you just began the conversation just now. I found that to be a very noble initiative. So very honored to be here.Nathan Lambert (02:48)Hopefully first of many, but I think you all have been doing very interesting stuff in the last few weeks, or last few months, so it’s very warranted. 
And do you two want to introduce yourselves as well?Chen Liang (02:58)Me first. My name is Chen Liang and I’m the algorithm engineer of Ling Team, and I’m mainly responsible for the floating point 8 training during the pre-training. Thank you.Ziqi Liu (03:16)My name is Ziqi Liu and I graduated, a PhD from Jiao Tong University in China. And I’ve been working at Ant Group for about eight years. And currently I’m working on the Ling language model. That’s it.Nathan Lambert (03:45)Nice. I think the way this will flow is I’m going to probably transition. It’ll start more with Richard’s direction. Then as we go, it’ll get more technical. And please jump in. I think that we don’t want to segment this. I mean, the border between product growth, technical modeling, whatever, that’s why AI is fun is because it’s small. But I would like to know how Inclusion AI started and all these initiatives. I don’t know if there’s a link to Ant OSS. I found that in prep and I thought that was pretty interesting and just kind of like, how does the birth of a new language modeling lab go from idea to releasing one trillion parameter models? So like, what does that feel like on the ground?Richard Bian (04:18)There’s actually one additional suffix for that in eight months’ time. In fact, we kind of began all of this initiative in February this year. So just to begin with for the audience who probably didn’t know much about Inclusion AI, Inclusion AI basically envisions AGI as a humanity’s shared milestone, not a privileged asset. So we started this initiative back in the February of 2025, inspired by the DeepSeek Research Lab. So the DeepSeek Research Lab and their publication, in fact, motivated a lot of people. I believe not only in China, but globally. Taking one step more closer to the AGI initiative by showing it’s probably not an exclusive game for only the richest people who can afford the best hardware and the best talent. 
So the way we’re kind of looking at it is like why we named that Inclusion is because we actually have that gene with the company. So the decision was actually made, of course, the decision was made beyond my pay grade, but it was actually very well informed internally for the mission and vision that we want to be more like DeepSeek, which is a research lab with a dedicated effort of pursuing AGI. In fact, I mean, if you kind of think about

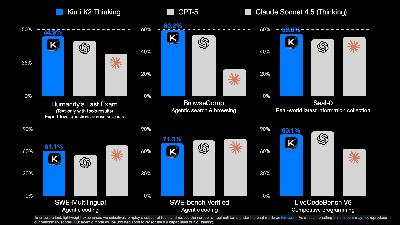

First, congrats to the Moonshot AI team, one of the 6 “AI Tigers” in China, on the awesome release of Kimi K2 Thinking. One of the overlooked and inspiring things for me these days is just how many people are learning very quickly to train excellent AI models. The ability to train leading AI models and distribute them internationally is going to be pervasive globally. As people use AI more, access to supply for inference (and maybe the absolute frontier in scale of training, even if costly) is going to be the gating function.

K2 Thinking sounds like a joy to use because of early reports that the distinctive style and writing quality from their original Kimi K2 Instruct model have been preserved through extended thinking RL training. They released many evaluation scores. As a highlight, they’re beating leading closed models on some benchmarks such as Humanity’s Last Exam or BrowseComp. There are still plenty of evals where GPT 5 or Claude Sonnet 4.5 tops them. Rumors are Gemini 3 is coming soon (just like the constantly pending DeepSeek V4), so expectations are high on the industry right now.

TLDR: Kimi K2 Thinking is a reasoning MoE model with 1T total, 32B active parameters, 256K context length, interleaved thinking in agentic tool-use, strong benchmark scores and vibe tests.

The core reaction of this release is people saying this is the closest open models have been to the closed frontier of performance ever, similar to DeepSeek R1‘s fast follow to o1. This is pretty true, but we’re heading into murky territory because comparing models is harder. This is all advantaging the open models, to be clear. I’ve heard that Kimi’s servers are already totally overwhelmed, more on this soon.

What is on my mind for this release:

1. Open models release faster. There’s still a time lag from the best closed to open models in a few ways, but what’s available to users is much trickier and presents a big challenge to closed labs.
Labs in China definitely release their models way faster. When the pace of progress is high, being able to get a model out sooner makes it look better. That’s a simple fact, but I’d guess Anthropic takes the longest to get models out (months sometimes) and OpenAI somewhere in the middle. This is a big advantage, especially in comms, to the fast mover.

I’d put the gap at the order of months in raw performance — I’d say 4-6+ months if you put a gun to my head and made me choose specifically — but the problem is these models aren’t publicly available, so do they matter?

2. Key benchmarks first, user behaviors later. Labs in China are closing in and very strong on key benchmarks. These models also can have very good taste (DeepSeek, Kimi), but there is a long-tail of internal benchmarks that labs have for common user behaviors that Chinese labs don’t have feedback cycles on. Chinese companies will start getting these, but intangibles are important to user retention.

Over the last year+ we’ve been seeing Qwen go through this transition. Their models were originally known for benchmaxing, but now they’re legitimately fantastic models (that happen to have insane benchmark scores).

Along these lines, the K2 Thinking model was post-trained natively with 4-bit precision to make it far more ready for real serving tasks (they likely did this to make scaling RL more efficient in post-training on long sequences too):

“To overcome this challenge, we adopt Quantization-Aware Training (QAT) during the post-training phase, applying INT4 weight-only quantization to the MoE components. It allows K2 Thinking to support native INT4 inference with a roughly 2x generation speed improvement while achieving state-of-the-art performance. All benchmark results are reported under INT4 precision.”

It’s awesome that their benchmark comparisons are in the way it’ll be served. That’s the fair way.

3. China’s rise. At the start of the year, most people loosely following AI probably knew of 0 Chinese labs.
Now, and towards wrapping up 2025, I’d say all of DeepSeek, Qwen, and Kimi are becoming household names. They all have seasons of their best releases and different strengths. The important thing is this’ll be a growing list. A growing share of cutting edge mindshare is shifting to China. I expect some of the likes of Z.ai, Meituan, or Ant Ling to potentially join this list next year. For some of these labs releasing top tier benchmark models, they literally started their foundation model effort after DeepSeek R1. It took many Chinese companies only 6 months to catch up to the open frontier in the ballpark of performance, now the question is if they can offer something in a niche of the frontier that has real demand for users.

4. Interleaved thinking on many tool calls. One of the things people are talking about with this release is how Kimi K2 Thinking will use “hundreds of tool calls” when answering a query. From the blog post:

“Kimi K2 Thinking can execute up to 200 – 300 sequential tool calls without human interference, reasoning coherently across hundreds of steps to solve complex problems.”

This is one of the first open models to have this ability of many, many tool calls, but it is something that has become somewhat standard with the likes of o3, Grok 4, etc. This sort of behavior emerges naturally during RL training, particularly for information tasks, when the model needs to search to get the right answer. So this isn’t a huge deal technically, but it’s very fun to see it in an open model, and providers hosting it (where tool use has already been a headache with people hosting open weights) are going to work very hard to support it precisely. I hope there’s user demand to help the industry mature for serving open tool-use models.

Interleaved thinking is slightly different, where the model uses thinking tokens in between tool-use calls. Claude is most known for this. MiniMax M2 was released on Nov. 3rd with this as well! It’s new.

5. Pressure on closed American labs.
It’s clear that the surge of open models should make the closed labs sweat. There’s serious pricing pressure and expectations that they need to manage. The differentiation and story they can tell about why their services are better needs to evolve rapidly away from only the scores on the sort of benchmarks we have now. In my post from early in the summer, Some Thoughts on What Comes Next, I hinted at this:

This is a different path for the industry and will take a different form of messaging than we’re used to. More releases are going to look like Anthropic’s Claude 4, where the benchmark gains are minor and the real world gains are a big step. There are plenty of more implications for policy, evaluation, and transparency that come with this. It is going to take much more nuance to understand if the pace of progress is continuing, especially as critics of AI are going to seize the opportunity of evaluations flatlining to say that AI is no longer working.

Are existing distribution channels, products, and serving capacity enough to hold the value of all the leading AI companies in the U.S. steady? Personally, I think they’re safe, but these Chinese models and companies are going to be taking bigger slices of the growing AI cake. This isn’t going to be anywhere near a majority in revenue, but it can be a majority in mindshare, especially in international markets.

Interconnects is a reader-supported publication. Consider becoming a subscriber.

This sets us up for a very interesting 2026.
I’m hoping to make time to thoroughly vibe test Kimi K2 Thinking soon!

Quick links:

* Interconnects: Kimi K2 and when “DeepSeek Moments” become normal, China Model Builder Tier List (they’re going up soon probably)
* Model: https://huggingface.co/moonshotai/Kimi-K2-Thinking
* API: https://platform.moonshot.ai/ (being hammered)
* License (Modified MIT): The same as MIT, very permissive, but if you use Kimi K2 (or derivatives) in a commercial product/service that has >100M monthly active users or >$20M/month revenue, you must prominently display “Kimi K2” on the UI. It is reasonable, but not “truly open source.” https://huggingface.co/moonshotai/Kimi-K2-Thinking/blob/main/LICENSE
* Technical blog: https://moonshotai.github.io/Kimi-K2/thinking.html
* Announcement thread: https://x.com/Kimi_Moonshot/status/1986449512538513505

This is a public episode. If you'd like to discuss this with other subscribers or get access to bonus episodes, visit www.interconnects.ai/subscribe

One of the obvious topics of the Valley today is how hard everyone works. We’re inundated with comments on “The Great Lock In”, 996, 997, and now even a snarky 002 (midnight to midnight with a 2 hour break). Plenty of this is performative flexing on social media, but enough of it is real and reflects how trends are unfolding in the LLM space. I’m affected. My friends are affected.

All of this hard work is downstream of ever-increasing pressure to be relevant in the most exciting technology of our generation. This is all reflective of the LLM game changing. The time window to be a player at the cutting edge really is closing; it does not just feel that way. There are many different sizes and types of models that matter, but as the market is now more fleshed out with resources, all of them are facing a constantly rising bar in quality of technical output. People are racing to stay above the rising tide, often damning any hope of life balance.

AI is going down the path that other industries have before, but on steroids. There’s a famous section of the book Apple in China, where the author Patrick McGee describes the programs Apple put in place to save the marriages of engineers traveling so much to China and working incredible hours. In an interview on ChinaTalk, McGee added “Never mind the divorces, you need to look at the deaths.” This is a grim reality that is surely playing out in AI.

The Wall Street Journal recently published a piece on how AI Workers Are Putting In 100-Hour Workweeks to Win the New Tech Arms Race. The opening of the article excellently captures how the last year or two has felt if you’re participating in the dance:

Josh Batson no longer has time for social media.
The AI researcher’s only comparable dopamine hit these days is on Anthropic’s Slack workplace-messaging channels, where he explores chatter about colleagues’ theories and experiments on large language models and architecture.

Work addicts abound in AI. I often count myself among them, but I put a lot of effort into making sure that work expands to fill available time rather than fitting everything else in around work. This WSJ article had a bunch of crazy comments that show the mental limits of individuals and the culture they act in, such as:

Several top researchers compared the circumstances to war.

Comparing current AI research to war is out of touch (especially with the grounding of actual wars happening simultaneously to the AI race!). What they really are learning is that pursuing an activity in a collective environment at an elite level over multiple years is incredibly hard. It is! War is that and more.

In the last few months I’ve been making an increasing number of analogies between working at the sharp end of LLMs today and training with a team to be elite athletes. The goals are far out and often singular, there are incredibly fine margins between success and failure, much of the grind is over tiny tasks that add up over time but that you don’t want to do in the moment, and you can never quite know how well your process is working until you compare your outputs with your top competition, which only happens a few times a year in both sports and language modeling.

In college I was a D1 lightweight rower at Cornell University. I walked onto a team and we ended up winning 3 championships in 4 years. Much of this was happenstance, as much greatness is, but it’s a crucial example in understanding how similar mentalities can apply in different domains across a life. My mindset around the LLM work I do today feels incredibly similar (complete focus and buy-in), but I don’t think I’ve yet found a work environment where the culture is as cohesive as athletics.
While OpenAI’s culture is often described as culty, there are many signs that the core team members there absolutely love it, even if they’re working 996, 997, or 002. When you love it, it doesn’t feel like work. This is the same reason why training 20 hours a week while a full-time student can feel easy.

Many AI researchers can learn from athletics and appreciate the value of rest. Your mental acuity can drop off faster than your physical peak performance does when not rested. Working too hard forces you to take narrower and less creative approaches. The deeper into the hole of burnout I get in trying to make you the next Olmo model, the worse my writing gets. My ability to spot technical dead ends goes with it. If the intellectual payoffs to rest are hard to see, your schedule doesn’t have the space for creativity and insight.

Crafting the team culture in both of these environments is incredibly difficult. It’s the quality of the team culture that determines the outcome more than the individual components. Yes, with LLMs you can take brief shortcuts by hiring talent with years of experience from another frontier lab, but that doesn’t change the long-term dynamic. Yes, you obviously need as much compute as you can get. At the same time, culture is incredibly fickle. It’s easier to lose than it is to build.

Some argue that starting a new lab today can be an advantage against the established labs because you get to start from scratch with a cleaner codebase, but this is cope. There are three core ingredients of training: internal tools (recipes, codebases, etc.), resources (compute, data), and personnel. Leadership sets the direction and culture, while management executes on this direction. All elements are crucial and cannot be overlooked. The further along the best models get, the harder starting from scratch is going to become.
Eventually, this dynamic will shift back in favor of starting from scratch, because public knowhow and tooling will catch up, but in the meantime the closed tools are getting better at a far faster rate than the fully open tools.

The likes of SSI, Thinky, and Reflection are likely the last efforts that are capitalized enough to maybe catch up in the near term, but the odds are not on their side. Getting infinite compute into a new company is meaningless if you don’t already have your code, data, and pretraining architectures ready. Eventually the clock will run out on company plans that amount to just catching up to the frontier and then figuring it out from there. The more these companies raise, the more the expectations on their first output will increase as well. It’s not an enviable position, but it’s certainly ambitious.

In many ways I see the culture of Chinese technology companies (and education systems) as being better suited for this sort of catch-up work. Many top AI researchers trained in the US want to work on a masterpiece, whereas what it takes in language modeling is often extended grinding to stabilize and replicate something that you know definitely can work.

I used to think that the AI bubble would pop financially, as seen through a series of economic mergers, acquisitions, and similar deals. I’m shifting to see more limitations on the human capital than the financial capital thrown at today’s AI companies. As the technical standard of relevance increases (i.e. how good the models people want to use are, or the best open model of a given size category), it simply takes more focused work to get a model there. It is hard to cheat the time this work takes.

This all relates to how I, and other researchers, always comment on the low-hanging fruit we see to keep improving the models. As the models have gotten better, our systems to build them have gotten more refined, complex, intricate, and numerically sensitive.
While I see a similar amount of low-hanging fruit today as I did a year ago, the effort (or physical resources, i.e. GPUs) it can take to unlock it has increased. This pushes people to keep going one step closer to their limits. This piles on more burnout. This is also why the WSJ reported that top researchers “said repeatedly that they work long hours by choice.” The best feel like they need to do this work or they’ll fall behind. It’s running one more experiment, running one more vibe test, reviewing one more colleague’s PR, reading one more paper, chasing down one more data contract. The to-do list is never empty.

The amount of context that you need to keep in your brain to perform well in many LM training contexts is ever increasing. For example, leading post-training pipelines around the launch of ChatGPT looked like two or maybe three well-separated training stages. Now there are tons of checkpoints flying around getting merged, sequenced, and chopped apart as part of the final project. Processes that used to be managed by one or two people now have teams coordinating many data and algorithmic efforts that are trying to land in just a few models a year. I’ve personally transitioned from a normal researcher to something like a tech lead who is always trying to predict blockers before they come up (at any point in the post-training process) and get resources to fix them. I bounce in and out of problems to wherever the most risk is.

Cramming and keeping technical context pushes out hobbies and peace of mind.

Training general language models you hope others will adopt, via open weights or API, is becoming very much an all-in or all-out domain. Half-assing it is becoming an expensive way to make a model that no one will use.
This wasn’t the case two years ago, when playing around with a certain part of the pipeline was legitimately impactful.

Culture is a fine line between performance and toxicity, and it’s often hard to know which side you are on until you get to a major deliverable and can check in versus competitors.

Personally, I’m fighting a double-edged version of this. I feel immense responsibility to make all the future Olmo models of the world great, while simultaneously trying to do a substantial amount of ecosystem work to create an informed discussion around the state of open models. My goal around this discussion is for more real things to be built. ATOM Project is a m