Discover Latent Space: The AI Engineer Podcast

Latent Space: The AI Engineer Podcast


Author: swyx + Alessio


© Latent.Space

Description

The podcast by and for AI Engineers! In 2023, over 1 million visitors came to Latent Space to hear about news, papers and interviews in Software 3.0.

We cover Foundation Models changing every domain in Code Generation, Multimodality, AI Agents, GPU Infra and more, directly from the founders, builders, and thinkers involved in pushing the cutting edge. We strive to give you everything from the definitive take on the Current Thing down to the first introduction to the tech you'll be using in the next 3 months! We break news and publish exclusive interviews from OpenAI, tiny (George Hotz), Databricks/MosaicML (Jon Frankle), Modular (Chris Lattner), Answer.ai (Jeremy Howard), et al.

Full show notes always on https://latent.space

www.latent.space


117 Episodes


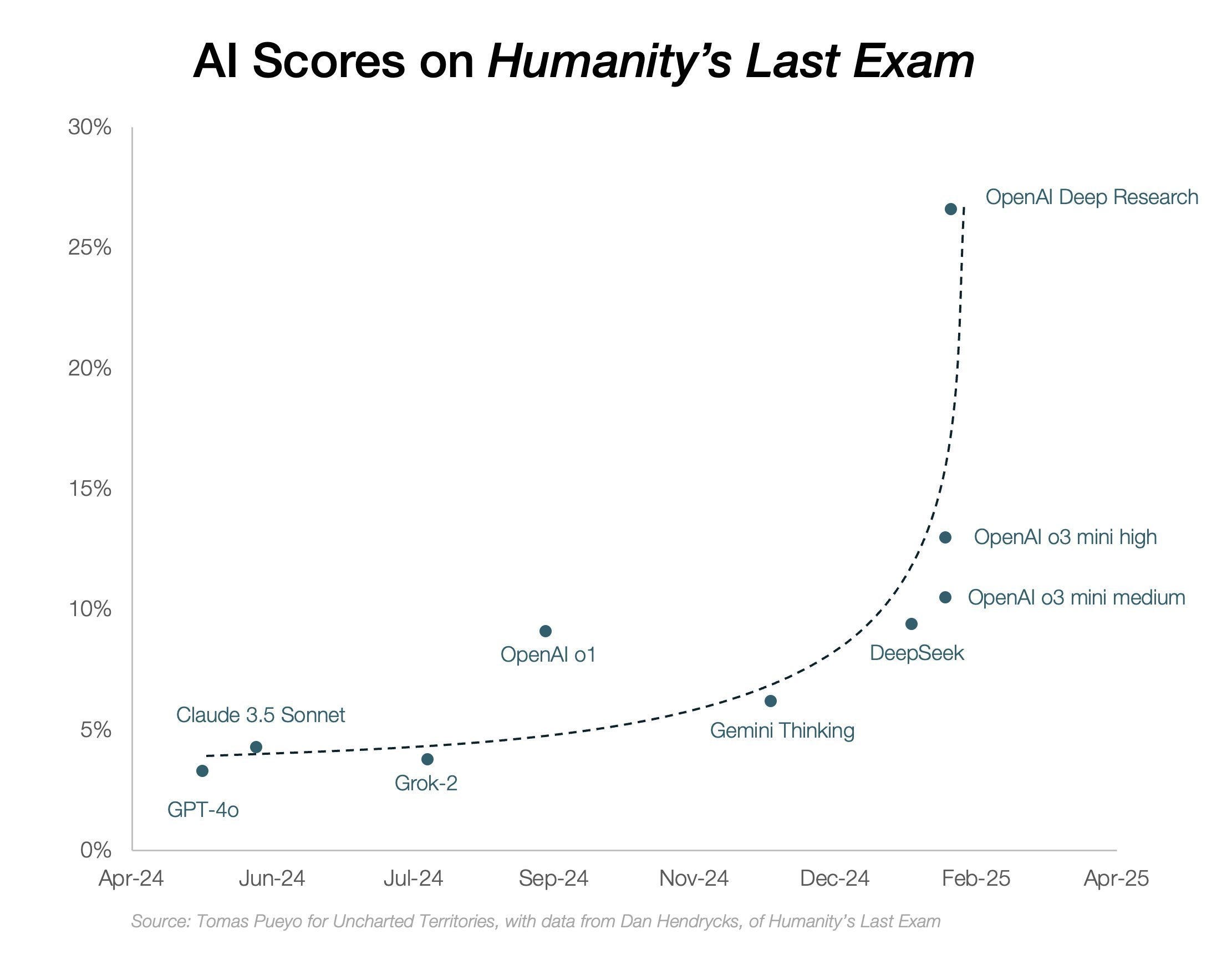

The free livestreams for AI Engineer Summit are now up! Please hit the bell to help us appease the algo gods. We’re also announcing a special Online Track later today.

Today’s Deep Research episode is our last in our series of AIE Summit preview podcasts - thanks for following along with our OpenAI, Portkey, Pydantic, Bee, and Bret Taylor episodes, and we hope you enjoy the Summit! Catch you on livestream.

Everybody’s going deep now. Deep Work. Deep Learning. DeepMind. If 2025 is the Year of Agents, then the 2020s are the Decade of Deep.

While “LLM-powered Search” is as old as Perplexity and SearchGPT, and open source projects like GPTResearcher and clones like OpenDeepResearch exist, the difference with “Deep Research” products is that they are both “agentic” (loosely meaning that an LLM decides the next step in a workflow, usually involving tools) and bundled with custom-tuned frontier models (custom-tuned o3 and Gemini 1.5 Flash).

The reception to OpenAI’s Deep Research agent has been nothing short of breathless:

"Deep Research is the best public-facing AI product Google has ever released. It's like having a college-educated researcher in your pocket." - Jason Calacanis

“I have had [Deep Research] write a number of ten-page papers for me, each of them outstanding. I think of the quality as comparable to having a good PhD-level research assistant, and sending that person away with a task for a week or two, or maybe more. Except Deep Research does the work in five or six minutes.” - Tyler Cowen

“Deep Research is one of the best bargains in technology.” - Ben Thompson

“my very approximate vibe is that it can do a single-digit percentage of all economically valuable tasks in the world, which is a wild milestone.” - sama

“Using Deep Research over the past few weeks has been my own personal AGI moment.
It takes 10 mins to generate accurate and thorough competitive and market research (with sources) that previously used to take me at least 3 hours.” - OAI employee

“It's like a bazooka for the curious mind” - Dan Shipper

“Deep research can be seen as a new interface for the internet, in addition to being an incredible agent… This paradigm will be so powerful that in the future, navigating the internet manually via a browser will be "old-school", like performing arithmetic calculations by hand.” - Jason Wei

“One notable characteristic of Deep Research is its extreme patience. I think this is rapidly approaching “superhuman patience”. One realization working on this project was that intelligence and patience go really well together.” - HyungWon

“I asked it to write a reference Interaction Calculus evaluator in Haskell. A few exchanges later, it gave me a complete file, including a parser, an evaluator, O(1) interactions and everything. The file compiled, and worked on my test inputs. There are some minor issues, but it is mostly correct. So, in about 30 minutes, o3 performed a job that would take me a day or so.” - Victor Taelin

“Can confirm OpenAI Deep Research is quite strong. In a few minutes it did what used to take a dozen hours. The implications to knowledge work is going to be quite profound when you just ask an AI Agent to perform full tasks for you and come back with a finished result.” - Aaron Levie

“Deep Research is genuinely useful” - Gary Marcus

With the advent of “Deep Research” agents, we are now routinely asking models to go through 100+ websites and generate in-depth reports on any topic. The Deep Research revolution has hit the AI scene in the last 2 weeks:

* Dec 11th: Gemini Deep Research (today’s guest!)
rolls out with Gemini Advanced
* Feb 2nd: OpenAI releases Deep Research
* Feb 3rd: a dozen “Open Deep Research” clones launch
* Feb 5th: Gemini 2.0 Flash GA
* Feb 15th: Perplexity launches Deep Research
* Feb 17th: xAI launches Deep Search

In today’s episode, we welcome Aarush Selvan and Mukund Sridhar, the lead PM and tech lead for Gemini Deep Research, the originators of the entire category. We asked detailed questions from inspiration to implementation, why they had to finetune a special model for it instead of using the standard Gemini model, how to run evals for them, and how to think about the distribution of use cases. (We also have an upcoming Gemini 2 episode with our returning first guest Logan Kilpatrick so stay tuned 👀)

Two Kinds of Inference Time Compute

In just ~2 months since NeurIPS, we’ve moved from “scaling has hit a wall, LLMs might be over” to “is this AGI already?” thanks to the releases of o1, o3, and DeepSeek R1 (see our o3 post and R1 distillation lightning pod). This new jump in capabilities is now accelerating many other applications; you might remember how “needle in a haystack” was one of the benchmarks people often referenced when looking at a model’s capabilities over long context (see our 1M Llama context window ep for more). It seems that we have broken through the “wall” by scaling “inference time” in two meaningful ways: one with more time spent in the model, and the other with more tool calls.

Both help build better agents which are clearly more intelligent. But as we discuss on the podcast, we are currently in a “honeymoon” period of agent products where taking more time (or tool calls, or search results) is considered good, because 1) quality is hard to evaluate and 2) we don’t know the realistic upper bound to quality.
We know that they’re correlated, but we don’t know to what extent and if the correlation breaks down over extended research periods (they may not).

It doesn’t take a PhD to spot the perverse incentives here.

Agent UX: From Sync to Async to Hybrid

We also discussed the technical challenges in moving from a synchronous “chat” paradigm to the “async” world where every agent builder needs to handroll their own orchestration framework in the background.

For now, most simple, first-cut implementations including Gemini and OpenAI and Bolt tend to make “locking” async experiences: while the report is generating or the plan is being executed, you can’t continue chatting with the model or editing the plan. In this case we think the OG Agent here is Devin (now GA), which has gotten it right from the beginning.

Full Episode on YouTube (with demo!)

Show Notes
* Deep Research
* Aarush Selvan
* Mukund Sridhar
* NotebookLM episode (Raiza / Usama)
* Bolt
* Bret Taylor

Chapters
* [00:00:00] Introductions
* [00:00:22] Overview + Demo of Deep Research
* [00:04:31] Editable chain of thought
* [00:08:18] Search ranking for sources
* [00:09:31] Can you DIY Deep Research?
* [00:15:52] UX and research plan editing
* [00:16:21] Follow-up queries and context retention
* [00:21:06] Evaluating Deep Research
* [00:28:06] Ontology of use cases and research patterns
* [00:32:56] User perceptions of latency in Deep Research
* [00:40:59] Lessons from other AI products
* [00:42:12] Multimodal capabilities
* [00:45:02] Technical challenges in Deep Research
* [00:51:56] Can Deep Research discover new insights?
* [00:54:11] Open challenges in agents
* [00:57:04] Wrap up

Transcript

Alessio [00:00:04]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO at Decibel Partners, and I'm joined by my co-host Swyx, founder of Smol AI.

Swyx [00:00:13]: Hey, and today we're very honored to have in our studio Aarush and Mukund from the Deep Research team, the OG Deep Research team.
Welcome.

Aarush [00:00:20]: Thanks for having us.

Swyx [00:00:22]: Yeah, thanks for making the trip up. I was fortunate enough to be one of the early beta testers of Deep Research when it came out. I would say I was very keen on, I think even at the end of last year, people were already saying it was one of the most exciting agents that was coming out of Google. You know that previously we had on Raiza and Usama from the NotebookLM team. And I think this is an increasing trend that Gemini and Google are shipping interesting user-facing products that use AI. So congrats on your success so far. Yeah, it's been great. Thanks so much for having us here. Yeah. Yeah, thanks for making the trip up. And I'm also excited for your talk that is happening next week. Obviously, we have to talk about what exactly it is, but I'll ask you towards the end. So basically, okay, you know, we have the screen up. Maybe we just start at a high level for people who don't yet know. Like, what is Deep Research? Sure.

Aarush [00:01:10]: So Deep Research is a feature where Gemini can act as your personal research assistant to help you learn about any topic that you want more deeply. It's really helpful for those queries. So you want to go from zero to 50 really fast on a new thing. And the way it works is it takes your query, browses the web for about five minutes, and then outputs a research report for you to review and ask follow-up questions. This is one of the first times, you know, something takes about five, six minutes trying to perform your research. So there's a few challenges that brings. Like, you want to make sure you're spending that time in the computer doing what the user wants. So there's some ways of the UX design that we can talk about. As we go through an example, and then there's also challenges in the browsers, the web is super fragmented and being able to plan iteratively as you pass through this noisy information is a challenge by itself.

Swyx [00:02:11]: Yeah.
This is like the first time sort of Google automating yourself as searching, like you're, you know, you're supposed to be the experts at search, but now you're like meta-searching and like determining the search strategy.

Aarush [00:02:22]: Yeah, I think, at least we see it as two different use cases. There are things that, you know, you know exactly what you're looking for, and search is still probably, you know, one of the best places to go. I think where Deep Research really shines is when there are like multiple facets to your question and you spend like a weekend, you know, just opening like 50, 60 tabs and many times I just give up and we wanted to solve that probl

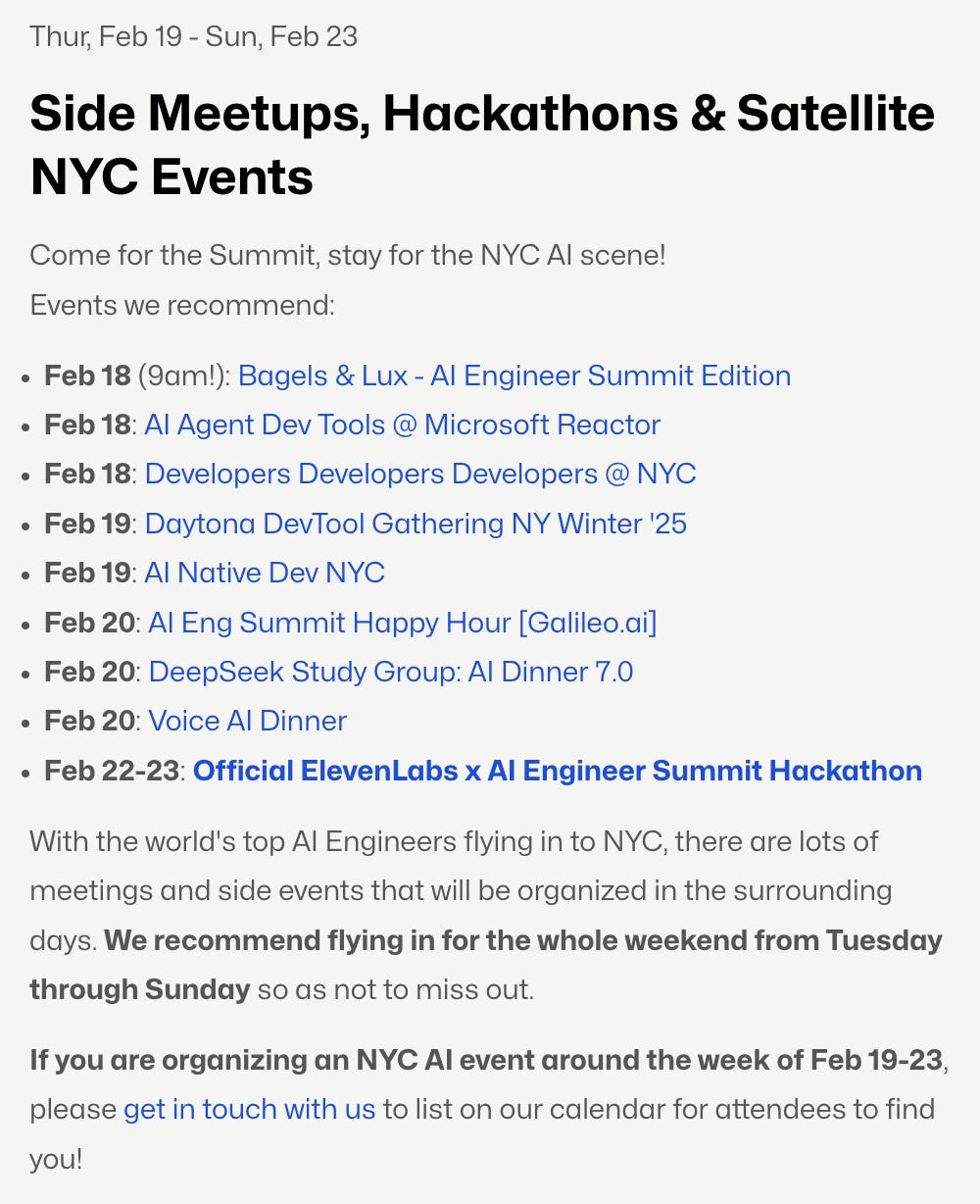

Bundle tickets for AIE Summit NYC have now sold out. You can now sign up for the livestream, where we will be making a big announcement soon. NYC-based readers and Summit attendees should check out the meetups happening around the Summit.

2024 was a very challenging year for AI Hardware. After the buzz of CES last January, 2024 was marked by the meteoric rise and even harder fall of AI Wearables companies like Rabbit and Humane, with an assist from a pre-wallpaper-app MKBHD. Even Friend.com, the first to launch in the AI pendant category, and which spurred Rewind AI to rebrand to Limitless and follow in their footsteps, ended up delaying their wearable ship date and launching an experimental website chatbot version. We have been cautiously excited about this category, keeping tabs on most of the top entrants, including Omi and Compass. However, to date the biggest winner still standing from the AI Wearable wars is Bee AI, founded by today's guests Maria and Ethan. Bee is an always-on hardware device with beamforming microphones, 7 day battery life and a mute button, that can be worn as a wristwatch or a clip-on pin, backed by an incredible transcription, diarization and very long context memory processing pipeline that helps you to remember your day, your todos, and even perform actions by operating a virtual cloud phone. This is one of the most advanced, production ready, personal AI agents we've ever seen, so we were excited to be their first podcast appearance. We met Bee when we ran the world's first Personal AI meetup in April last year.

As a user of Bee (and not an investor! just a friend!) it’s genuinely been a joy to use, and we were glad to take advantage of the opportunity to ask hard questions about the privacy and legal/ethical side of things as much as the AI and Hardware engineering side of Bee.
We hope you enjoy the episode and tune in next Friday for Bee’s first conference talk: Building Perfect Memory.

Full YouTube Video Version

Watch this for the live demo!

Show Notes
* Bee Website
* Ethan Sutin, Maria de Lourdes Zollo
* Bee @ Personal AI Meetup
* Buy Bee with Listener Discount Code!

Timestamps
* 00:00:00 Introductions and overview of Bee Computer
* 00:01:58 Personal context and use cases for Bee
* 00:03:02 Origin story of Bee and the founders' background
* 00:06:56 Evolution from app to hardware device
* 00:09:54 Short-term value proposition for users
* 00:12:17 Demo of Bee's functionality
* 00:17:54 Hardware form factor considerations
* 00:22:22 Privacy concerns and legal considerations
* 00:30:57 User adoption and reactions to wearing Bee
* 00:35:56 CES experience and hardware manufacturing challenges
* 00:41:40 Software pipeline and inference costs
* 00:53:38 Technical challenges in real-time processing
* 00:57:46 Memory and personal context modeling
* 01:02:45 Social aspects and agent-to-agent interactions
* 01:04:34 Location sharing and personal data exchange
* 01:05:11 Personality analysis capabilities
* 01:06:29 Hiring and future of always-on AI

Transcript

Alessio [00:00:04]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO at Decibel Partners, and I'm joined by my co-host Swyx, founder of Smol AI.

swyx [00:00:12]: Hey, and today we are very honored to have in the studio Maria and Ethan from Bee.

Maria [00:00:16]: Hi, thank you for having us.

swyx [00:00:20]: And you are, I think, the first hardware founders we've had on the podcast. I've been looking to have had a hardware founder, like a wearable hardware founder, for a while. I think we're going to have two or three of them this year. And you're the ones that I wear every day. So thank you for making Bee. Thank you for all the feedback and the usage. Yeah, you know, I've been a big fan. You are the speaker gift for the Engineering World's Fair.
And let's start from the beginning. What is Bee Computer?

Ethan [00:00:52]: Bee Computer is a personal AI system. So you can think of it as AI living alongside you in first person. So it can kind of capture your in real life. So with that understanding can help you in significant ways. You know, the obvious one is memory, but that's really just the base kind of use case. So recalling and reflective. I know, Swyx, that you like the idea of journaling, but you don't but still have some kind of reflective summary of what you experienced in real life. But it's also about just having like the whole context of a human being and understanding, you know, giving the machine the ability to understand, like, what's going on in your life. Your attitudes, your desires, specifics about your preferences, so that not only can it help you with recall, but then anything that you need it to do, it already knows, like, if you think about like somebody who you've worked with or lived with for a long time, they just know kind of without having to ask you what you would want, it's clear that like, that is the future that personal AI, like, it's just going to be very, you know, the AI is just so much more valuable with personal context.

Maria [00:01:58]: I will say that one of the things that we are really passionate is really understanding this. Personal context, because we'll make the AI more useful. Think about like a best friend that know you so well. That's one of the things that we are seeing from the user. They're using from a companion standpoint or professional use cases. There are many ways to use Bee, but companionship and professional are the ones that we are seeing now more.

swyx [00:02:22]: Yeah. It feels so dry to talk about use cases. Yeah. Yeah.

Maria [00:02:26]: It's like really like investor question. Like, what kind of use case?

Ethan [00:02:28]: We're just like, we've been so broken and trained.
But I mean, on the base case, it's just like, don't you want your AI to know everything you've said and like everywhere you've been, like, wouldn't you want that?

Maria [00:02:40]: Yeah. And don't stay there and repeat every time, like, oh, this is what I like. You already know that. And you do things for me based on that. That's I think is really cool.

swyx [00:02:50]: Great. Do you want to jump into a demo? Do you have any other questions?

Alessio [00:02:54]: I want to maybe just cover the origin story. Just how did you two meet? Was this the first idea you started working on? Was there something else before?

Maria [00:03:02]: I can start. So Ethan and I, we know each other from six years now. He had a company called Squad. And before that was called Olabot and was a personal AI. Yeah, I should. So maybe you should start this one. But yeah, that's how I know Ethan. Like he was pivoting from personal AI to Squad. And there was a co-watching with friends product. I had experience working with TikTok and video content. So I had the pivoting and we launched Squad and was really successful. And at the end. The founders decided to sell that to Twitter, now X. So both of us, we joined X. We launched Twitter Spaces. We launched many other products. And yeah, till then, we basically continue to work together to the start of Bee.

Ethan [00:03:46]: The interesting thing is like this isn't the first attempt at personal AI. In 2016, when I started my first company, it started out as a personal AI company. This is before Transformers, no BERT even like just RNNs. You couldn't really do any convincing dialogue at all. I met Esther, who was my previous co-founder. We both really interested in the idea of like having a machine kind of model or understand a dynamic human. We wanted to make personal AI. This was like more geared towards because we had obviously much limited tools, more geared towards like younger people.
So I don't know if you remember in 2016, there was like a brief chatbot boom. It was way premature, but it was when Zuckerberg went up on F8 and yeah, M and like. Yeah. The Messenger platform, people like, oh, bots are going to replace apps. It was like for about six months. And then everybody realized, man, these things are terrible and like they're not replacing apps. But it was at that time that we got excited and we're like, we tried to make this like, oh, teach the AI about you. So it was just an app that you kind of chatted with and it would ask you questions and then like give you some feedback.

Maria [00:04:53]: But Hugging Face first version was launched at the same time. Yeah, we started it.

Ethan [00:04:56]: We started out in the same office as Hugging Face because Betaworks was our investor. So they had to think. They had a thing called Bot Camp. Betaworks is like a really cool VC because they invest in out-there things. They're like way ahead of everybody else. And like back then it was they had something called Bot Camp. They took six companies and it was us and Hugging Face. And then I think the other four, I'm pretty sure, are dead. But and Hugging Face was the one that really got, you know, I mean, 30% success rate is pretty good. Yeah. But yeah, when we it was, it was like it was just the two founders. Yeah, they were kind of like an AI company in the beginning. It was a chat app for teenagers. A lot of people don't know that Hugging Face was like, hey, friend, how was school? Let's trade selfies. But then, you know, they built the Transformers library, I believe, to help them make their chat app better. And then they open sourced and it was like it blew up. And like they're like, oh, maybe this is the opportunity. And now they're Hugging Face. But anyway, like we were obsessed with it at that time. But then it was clear that there's some people who really love chatting and like answering questions.
But it's like a lot of work, like just to kind of manually.

Maria [00:06:00]: Yeah.

Ethan [00:06:01]: Teach like all these things about you to an AI.

Maria [00:06:04]: Yeah, there were some people that were super passionate, for example, teenagers. They really like, for example, to speak about themselves a lot. So they will reply to a lot of questions an

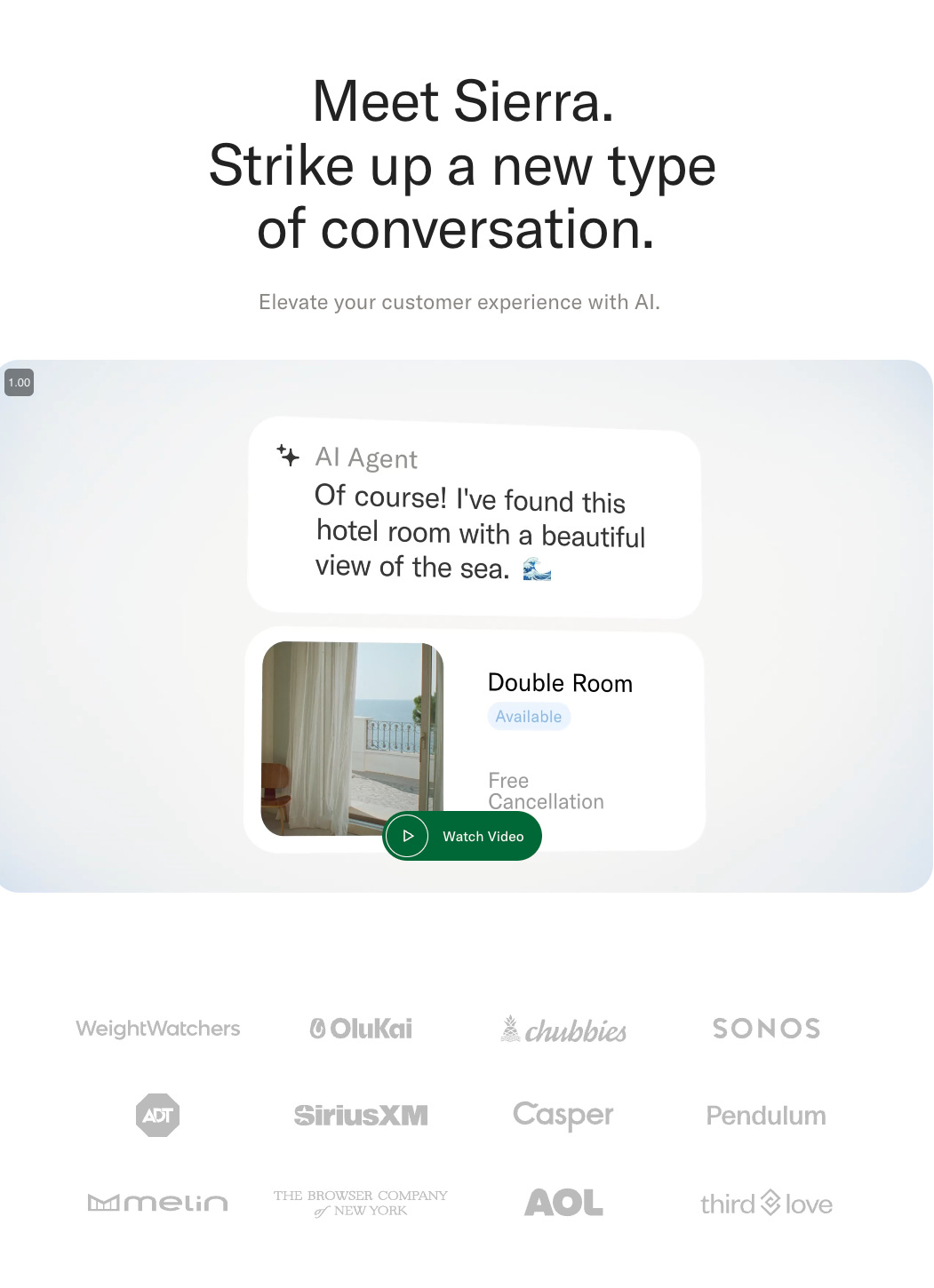

If you’re in SF, join us tomorrow for a fun meetup at CodeGen Night!

If you’re in NYC, join us for AI Engineer Summit! The Agent Engineering track is now sold out, but 25 tickets remain for AI Leadership and 5 tickets for the workshops. You can see the full schedule of speakers and workshops at https://ai.engineer!

It’s exceedingly hard to introduce someone like Bret Taylor. We could recite his Wikipedia page, or his extensive work history through Silicon Valley’s greatest companies, but everyone else already does that.

As a podcast by AI engineers for AI engineers, we had the opportunity to do something a little different. We wanted to dig into what Bret sees from his vantage point at the top of our industry for the last 2 decades, and how that explains the rise of the AI Architect at Sierra, the leading conversational AI/CX platform.

“Across our customer base, we are seeing a new role emerge - the role of the AI architect. These leaders are responsible for helping define, manage and evolve their company's AI agent over time. They come from a variety of both technical and business backgrounds, and we think that every company will have one or many AI architects managing their AI agent and related experience.”

In our conversation, Bret Taylor confirms the Paul Buchheit legend that he rewrote Google Maps in a weekend, armed with only the help of a then-nascent Google Closure Compiler and no other modern tooling. But what we find remarkable is that he was the PM of Maps, not an engineer, though of course he still identifies as one. We find this theme recurring throughout Bret’s career and worldview.
We think it is plain as day that AI leadership will have to be hands-on and technical, especially when the ground is shifting as quickly as it is today:

“There's a lot of power in combining product and engineering into as few people as possible… few great things have been created by committee.”

“If engineering is an order taking organization for product you can sometimes make meaningful things, but rarely will you create extremely well crafted breakthrough products. Those tend to be small teams who deeply understand the customer need that they're solving, who have a maniacal focus on outcomes.”

“And I think the reason why is if you look at like software as a service five years ago, maybe you can have a separation of product and engineering because most software as a service created five years ago. I wouldn't say there's like a lot of technological breakthroughs required for most business applications. And if you're making expense reporting software or whatever, it's useful… You kind of know how databases work, how to build auto scaling with your AWS cluster, whatever, you know, it's just, you're just applying best practices to yet another problem.

“When you have areas like the early days of mobile development or the early days of interactive web applications, which I think Google Maps and Gmail represent, or now AI agents, you're in this constant conversation with what the requirements of your customers and stakeholders are and all the different people interacting with it and the capabilities of the technology. And it's almost impossible to specify the requirements of a product when you're not sure of the limitations of the technology itself.”

This is the first time the difference between technical leadership for “normal” software and for “AI” software was articulated this clearly for us, and we’ll be thinking a lot about this going forward.
We left a lot of nuggets in the conversation, so we hope you’ll just dive in with us (and thank Bret for joining the pod!)

Full YouTube

Please Like and Subscribe :)

Timestamps
* 00:00:02 Introductions and Bret Taylor's background
* 00:01:23 Bret's experience at Stanford and the dot-com era
* 00:04:04 The story of rewriting Google Maps backend
* 00:11:06 Early days of interactive web applications at Google
* 00:15:26 Discussion on product management and engineering roles
* 00:21:00 AI and the future of software development
* 00:26:42 Bret's approach to identifying customer needs and building AI companies
* 00:32:09 The evolution of business models in the AI era
* 00:41:00 The future of programming languages and software development
* 00:49:38 Challenges in precisely communicating human intent to machines
* 00:56:44 Discussion on Artificial General Intelligence (AGI) and its impact
* 01:08:51 The future of agent-to-agent communication
* 01:14:03 Bret's involvement in the OpenAI leadership crisis
* 01:22:11 OpenAI's relationship with Microsoft
* 01:23:23 OpenAI's mission and priorities
* 01:27:40 Bret's guiding principles for career choices
* 01:29:12 Brief discussion on pasta-making
* 01:30:47 How Bret keeps up with AI developments
* 01:32:15 Exciting research directions in AI
* 01:35:19 Closing remarks and hiring at Sierra

Transcript

[00:02:05] Introduction and Guest Welcome

[00:02:05] Alessio: Hey everyone, welcome to the Latent Space Podcast. This is Alessio, partner and CTO at Decibel Partners, and I'm joined by my co-host swyx, founder of smol.ai.

[00:02:17] swyx: Hey, and today we're super excited to have Bret Taylor join us. Welcome. Thanks for having me. It's a little unreal to have you in the studio.

[00:02:25] swyx: I've read about you so much over the years, like even before OpenAI, effectively. I mean, I use Google Maps to get here. So like, thank you for everything that you've done.
Like, like your story history, like, you know, I think people can find out what your greatest hits have been.

[00:02:40] Bret Taylor's Early Career and Education

[00:02:40] swyx: How do you usually like to introduce yourself when, you know, you talk about, you summarize your career, like, how do you look at yourself?

[00:02:47] Bret: Yeah, it's a great question. You know, we, before we went on the mics here, we're talking about the audience for this podcast being more engineering. And I do think depending on the audience, I'll introduce myself differently because I've had a lot of corporate and board roles. I probably self identify as an engineer more than anything else though.

[00:03:04] Bret: So even when I was at Salesforce, I was coding on the weekends. So I think of myself as an engineer and then all the roles that I do in my career sort of start with that just because I do feel like engineering is sort of a mindset and how I approach most of my life. So I'm an engineer first and that's how I describe myself.

[00:03:24] Bret: You majored in computer

[00:03:25] swyx: science, like 1998. And, and I was high

[00:03:28] Bret: school, actually my, my college degree was '02 undergrad, '03 masters. Right. That old.

[00:03:33] swyx: Yeah. I mean, no, I was going, I was going like 1998 to 2003, but like engineering wasn't as, wasn't a thing back then. Like we didn't have the title of senior engineer, you know, kind of like, it was just.

[00:03:44] swyx: You were a programmer, you were a developer, maybe. What was it like in Stanford? Like, what was that feeling like? You know, was it, were you feeling like on the cusp of a great computer revolution? Or was it just like a niche, you know, interest at the time?

[00:03:57] Stanford and the Dot-Com Bubble

[00:03:57] Bret: Well, I was at Stanford, as you said, from 1998 to 2002.

[00:04:02] Bret: 1998 was near the peak of the dot com bubble. So.
This is back in the day where most people that they're coding in the computer lab, just because there was these Sun Microsystems Unix boxes there that most of us had to do our assignments on. And every single day there was a dot-com, like, buying pizza for everybody.

[00:04:20] Bret: I didn't have to like, I got free food, like my first two years of university and then the dot com bubble burst in the middle of my college career. And so by the end there was like tumbleweed going to the job fair, you know, it was like, cause it was hard to describe unless you were there at the time, the like level of hype and being a computer science major at Stanford was like a thousand opportunities.

[00:04:45] Bret: And then, and then when I left, it was like Microsoft, IBM.

[00:04:49] Joining Google and Early Projects

[00:04:49] Bret: And then the two startups that I applied to were VMware and Google. And I ended up going to Google in large part because a woman named Marissa Mayer, who had been a teaching assistant when I was, what was called a section leader, which was like a junior teaching assistant kind of for one of the big interest.

[00:05:05] Bret: Yes. Classes. She had gone there. And she was recruiting me and I knew her and it was sort of felt safe, you know, like, I don't know. I thought about it much, but it turned out to be a real blessing. I realized like, you know, you always want to think you'd pick Google if given the option, but no one knew at the time.

[00:05:20] Bret: And I wonder if I'd graduated in like 1999 where I've been like, mom, I just got a job at Pets.com. It's good. But you know, at the end I just didn't have any options. So I was like, do I want to go like make kernel software at VMware? Do I want to go build search at Google? And I chose Google. 50, 50 ball.

[00:05:36] Bret: I'm not really a 50, 50 ball.
So I feel very fortunate in retrospect that the economy collapsed because in some ways it forced me into like one of the greatest companies of all time, but I kind of lucked into it, I think.

[00:05:47] The Google Maps Rewrite Story

[00:05:47] Alessio: So the famous story about Google is that you rewrote the Google Maps backend in one week after the map quest quest maps acquisition, what was the story there?

[00:05:57] Alessio: Is it actually true? Is it being glorified? Like how, how did that come to be? And is there any detail that maybe Paul hasn't shared before?

[00:06:06] Bret: It's largely true, but I'll give the color commentary. So it was actually the front end, not the back end, but it turns out for Google Maps, the front end was sort of the hard part just because Google Maps was.

[00:06:1

Did you know that adding a simple Code Interpreter took o3 from 9.2% to 32% on FrontierMath? The Latent Space crew is hosting a hack night Feb 11th in San Francisco focused on CodeGen use cases, co-hosted with E2B and Edge AGI; watch E2B’s new workshop and RSVP here!

We’re happy to announce that today’s guest Samuel Colvin will be teaching his very first Pydantic AI workshop at the newly announced AI Engineer NYC Workshops day on Feb 22! 25 tickets left.

If you’re a Python developer, it’s very likely that you’ve heard of Pydantic. Every month, it’s downloaded >300,000,000 times, making it one of the top 25 PyPI packages. OpenAI uses it in its SDK for structured outputs, it’s at the core of FastAPI, and if you’ve followed our AI Engineer Summit conference, Jason Liu of Instructor has given two great talks about it: “Pydantic is all you need” and “Pydantic is STILL all you need”. Now, Samuel Colvin has raised $17M from Sequoia to turn Pydantic from an open source project to a full stack AI engineer platform with Logfire, their observability platform, and PydanticAI, their new agent framework.

Logfire: bringing OTEL to AI

OpenTelemetry recently merged Semantic Conventions for LLM workloads, which provide standard definitions to track performance like gen_ai.server.time_per_output_token. In Sam’s view at least 80% of new apps being built today have some sort of LLM usage in them, and just like web observability platforms got replaced by cloud-first ones in the 2010s, Logfire wants to do the same for AI-first apps. If you’re interested in the technical details, Logfire migrated away from Clickhouse to Datafusion for their backend.
We spent some time on the importance of picking open source tools you understand and that you can actually contribute to upstream, rather than the more popular ones; listen in ~43:19 for that part.

Agents are the killer app for graphs

Pydantic AI is their attempt at taking a lot of the learnings that LangChain and the other early LLM frameworks had, and putting Python best practices into it. At an API level, it’s very similar to the other libraries: you can call LLMs, create agents, do function calling, do evals, etc.

They define an “Agent” as a container with a system prompt, tools, structured result, and an LLM. Under the hood, each Agent is now a graph of function calls that can orchestrate multi-step LLM interactions. You can start simple, then move toward fully dynamic graph-based control flow if needed.

“We were compelled enough by graphs once we got them right that our agent implementation [...] is now actually a graph under the hood.”

Why Graphs?

* More natural for complex or multi-step AI workflows.
* Easy to visualize and debug with mermaid diagrams.
* Potential for distributed runs, or “waiting days” between steps in certain flows.

In parallel, you see folks like Emil Eifrem of Neo4j talk about GraphRAG as another place where graphs fit really well in the AI stack, so it might be time for more people to take them seriously.

Full Video Episode

Like and subscribe!

Chapters

* 00:00:00 Introductions
* 00:00:24 Origins of Pydantic
* 00:05:28 Pydantic's AI moment
* 00:08:05 Why build a new agents framework?
* 00:10:17 Overview of Pydantic AI
* 00:12:33 Becoming a believer in graphs
* 00:24:02 God Model vs Compound AI Systems
* 00:28:13 Why not build an LLM gateway?
* 00:31:39 Programmatic testing vs live evals
* 00:35:51 Using OpenTelemetry for AI traces
* 00:43:19 Why they don't use Clickhouse
* 00:48:34 Competing in the observability space
* 00:50:41 Licensing decisions for Pydantic and LogFire
* 00:51:48 Building Pydantic.run
* 00:55:24 Marimo and the future of Jupyter notebooks
* 00:57:44 London's AI scene

Show Notes

* Sam Colvin
* Pydantic
* Pydantic AI
* Logfire
* Pydantic.run
* Zod
* E2B
* Arize
* Langsmith
* Marimo
* Prefect
* GLA (Google Generative Language API)
* OpenTelemetry
* Jason Liu
* Sebastian Ramirez
* Bogomil Balkansky
* Hood Chatham
* Jeremy Howard
* Andrew Lamb

Transcript

Alessio [00:00:03]: Hey, everyone. Welcome to the Latent Space podcast. This is Alessio, partner and CTO at Decibel Partners, and I'm joined by my co-host Swyx, founder of Smol AI.

Swyx [00:00:12]: Good morning. And today we're very excited to have Sam Colvin join us from Pydantic AI. Welcome. Sam, I heard that Pydantic is all we need. Is that true?

Samuel [00:00:24]: I would say you might need Pydantic AI and Logfire as well, but it gets you a long way, that's for sure.

Swyx [00:00:29]: Pydantic almost basically needs no introduction. It had almost 300 million downloads in December. And obviously, in the previous podcasts and discussions we've had with Jason Liu, he's been a big fan and promoter of Pydantic and AI.

Samuel [00:00:45]: Yeah, it's weird because obviously I didn't create Pydantic originally for uses in AI, it predates LLMs. But it's like we've been lucky that it's been picked up by that community and used so widely.

Swyx [00:00:58]: Actually, maybe we'll hear it right from you: what is Pydantic, and maybe a little bit of the origin story?

Samuel [00:01:04]: The best name for it, which is not quite right, is a validation library. And we get some tension around that name because it doesn't just do validation, it will do coercion by default. We now have strict mode, so you can disable that coercion. But by default, if you say you want an integer field and you get in a string of "123", it will convert it to the integer 123, along with a bunch of other sensible conversions. And as you can imagine, the semantics around exactly when you convert and when you don't are complicated, but because of that, it's more than just validation. 
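The lax-versus-strict coercion Samuel describes can be illustrated with a minimal standard-library sketch. This is not Pydantic's implementation or API: `validate`, `validate_value`, `ValidationError`, and the `User` class here are hypothetical names made up for illustration, and the coercion rule (simply calling the target type) is far cruder than Pydantic's real conversion semantics.

```python
from dataclasses import dataclass, fields
from typing import Any, get_type_hints


class ValidationError(ValueError):
    """Hypothetical error type for this sketch (not Pydantic's class)."""


def validate_value(value: Any, expected: type, strict: bool = False) -> Any:
    """Return value as `expected`, coercing only when strict is False."""
    # bool is a subclass of int, so do not silently accept it as an int.
    is_exact = isinstance(value, expected) and not (
        expected is int and isinstance(value, bool)
    )
    if is_exact:
        return value
    if strict:
        raise ValidationError(
            f"expected {expected.__name__}, got {type(value).__name__}"
        )
    try:
        # Lax mode: attempt a conversion, e.g. int("123") -> 123.
        return expected(value)
    except (TypeError, ValueError) as exc:
        raise ValidationError(
            f"cannot coerce {value!r} to {expected.__name__}"
        ) from exc


@dataclass
class User:
    # The type hints double as the schema, which is the idea described above.
    id: int
    name: str


def validate(cls: type, data: dict, strict: bool = False):
    """Build `cls` from `data`, validating each field against its type hint."""
    hints = get_type_hints(cls)
    kwargs = {
        f.name: validate_value(data[f.name], hints[f.name], strict)
        for f in fields(cls)
    }
    return cls(**kwargs)


lax_user = validate(User, {"id": "123", "name": "sam"})
print(lax_user)  # the string "123" has been converted to the integer 123
```

With `strict=True`, the same input raises `ValidationError` instead of converting. In Pydantic itself the equivalent would be an `int` field on a `BaseModel`, with strict mode switched on through the model configuration; the sketch only mirrors the behaviour described in the conversation.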
Back in 2017, when I first started it, the different thing it was doing was using type hints to define your schema. That was controversial at the time. It was genuinely disapproved of by some people. I think the success of Pydantic and libraries like FastAPI that build on top of it means that today that's no longer controversial in Python. And indeed, lots of other people have copied that route, but yeah, it's a data validation library. It uses type hints for the most part and obviously does all the other stuff you want, like serialization on top of that. But yeah, that's the core.

Alessio [00:02:06]: Do you have any fun stories on how JSON schemas ended up being kind of like the structured output standard for LLMs? And were you involved in any of these discussions? Because I know OpenAI was, you know, one of the early adopters. So did they reach out to you? Was there kind of like a structured output consortium in open source that people were talking about, or was it just random?

Samuel [00:02:26]: No, very much not. So I originally didn't implement JSON schema inside Pydantic, and then Sebastian, Sebastian Ramirez of FastAPI, came along, and the first I ever heard of him was over a weekend. I got like 50 emails from him as he was committing to Pydantic, adding JSON schema, long pre version one. So the reason it was added was for OpenAPI, which is obviously closely akin to JSON schema. And then, yeah, I don't know why it was JSON that got picked up and used by OpenAI. It was obviously very convenient for us, because it meant that not only can you do the validation, but because Pydantic will generate the JSON schema for you, it can be one source of truth for structured outputs and tools.

Swyx [00:03:09]: Before we dive in further on the AI side of things, something I'm mildly curious about: obviously, there's Zod in JavaScript land. Every now and then there is a new sort of in vogue validation library that takes over for quite a few years, and then maybe something else comes along. Is Pydantic done, like the core Pydantic?

Samuel [00:03:30]: I've just come off a call where we were redesigning some of the internal bits. There will be a v3 at some point, which will not break people's code half as much as v2, as in v2 was the massive rewrite into Rust, but also fixing all the stuff that was broken back from like version zero point something that we didn't fix in v1 because it was a side project. We have plans to basically store the data in Rust types after validation. Not completely. So we're still working to design the Pythonic version of it, in order for it to be able to convert into Python types. So then if you were doing validation and then serialization, you would never have to go via a Python type; we reckon that can give us another three to five times speed up. That's probably the biggest thing. Also, changing how easy it is to basically extend Pydantic and define how particular types, like for example NumPy arrays, are validated and serialized. But there's also other stuff going on. For example, jiter, the JSON library in Rust that does the JSON parsing, has a SIMD implementation at the moment only for AMD64. We need to go and add SIMD for other instruction sets. So there's a bunch more we can do on performance. I don't think we're going to go and revolutionize Pydantic, but it's going to continue to get faster and continue, hopefully, to allow people to do more advanced things. We might add a binary format like CBOR for serialization, for when you just want to put the data into a database and probably load it again from Pydantic. So there are some things that will come along, but for the most part, it should just get faster and cleaner.

Alessio [00:05:04]: From a focus perspective, I guess, as a founder too, how did you think about the AI interest rising? And then how do you kind of prioritize, okay, this is worth going into more, and we'll talk about Pydantic AI and all of that. What was maybe your early experience with LLMs, and when did you figure out, okay, this is something we should take seriously and focus more resources on it?

Samuel [00:05:28]: I'll answer that, but I'll answer what I think is a kind of parallel question, which is Pydantic's weird, because Pyda

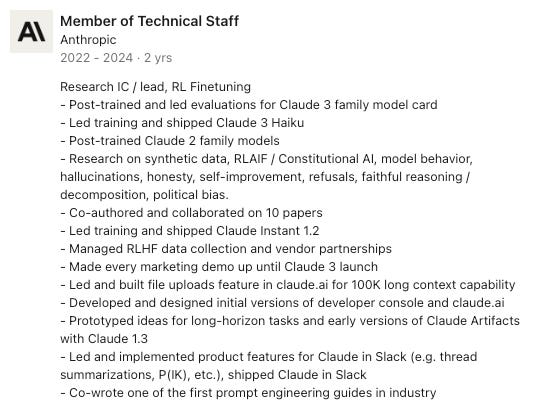

Sponsorships and tickets for the AI Engineer Summit are selling fast! See the new website with speakers and schedules live! If you are building AI agents or leading teams of AI Engineers, this will be the single highest-signal conference of the year for you, this Feb 20-22nd in NYC.

We’re pleased to share that Karina will be presenting OpenAI’s closing keynote at the AI Engineer Summit. We were fortunate to get some time with her today to introduce some of her work, and hope this serves as nice background for her talk!

There are very few early AI careers that have been as impactful as Karina Nguyen’s. After stints at Notion, Square, Dropbox, Primer, the New York Times, and UC Berkeley, she joined Anthropic as employee ~60 and worked on a wide range of research/product roles for Claude 1, 2, and 3. We’ll just let her LinkedIn speak for itself:

Now, as Research manager and Post-training lead in Model Behavior at OpenAI, she creates new interaction paradigms for reasoning interfaces and capabilities, like ChatGPT Canvas, Tasks, SimpleQA, streaming chain-of-thought for o1 models, and more via novel synthetic model training.

Ideal AI Research+Product Process

In the podcast we got a sense of what Karina has found works for her and her team to be as productive as they have been:

* Write PRD (Define what you want)
* Funding (Get resources)
* Prototype Prompted Baseline (See what’s possible)
* Write and Run Evals (Get failures to hillclimb)
* Model training (Exceed baseline without overfitting)
* Bugbash (Find bugs and solve them)
* Ship (Get users!)

We could turn this into a snazzy viral graphic but really this is all it is. Simple to say, difficult to do well. Hopefully it helps you define your process if you do similar product-research work. 
Show Notes

* Our Reasoning Price War post
* Karina LinkedIn, Website, Twitter
* OSINT visualization work
* Ukraine 3D storytelling
* Karina on Claude Artifacts
* Karina on Claude 3 Benchmarks
* Inspiration for Artifacts / Canvas from early UX work she did on GPT-3
* “i really believe that things like canvas and tasks should and could have happened like 2 yrs ago, idk why we are lagging in the form factors” (tweet)
* Our article on prompting o1 vs Karina’s Claude prompting principles
* Canvas: https://openai.com/index/introducing-canvas/
  * We trained GPT-4o to collaborate as a creative partner. The model knows when to open a canvas, make targeted edits, and fully rewrite. It also understands broader context to provide precise feedback and suggestions. To support this, our research team developed the following core behaviors:
    * Triggering the canvas for writing and coding
    * Generating diverse content types
    * Making targeted edits
    * Rewriting documents
    * Providing inline critique
  * We measured progress with over 20 automated internal evaluations. We used novel synthetic data generation techniques, such as distilling outputs from OpenAI o1-preview, to post-train the model for its core behaviors. This approach allowed us to rapidly address writing quality and new user interactions, all without relying on human-generated data.
* Tasks: https://www.theverge.com/2025/1/14/24343528/openai-chatgpt-repeating-tasks-agent-ai
* Agents and Operator
  * What are agents? “Agents are a gradual progression of tasks: starting with one-off actions, moving to collaboration, and ultimately fully trustworthy long-horizon delegation in complex envs like multi-player/multiagents.” (tweet)
  * tasks and canvas fall within the first two, and we are def. marching towards the third, though the form factor for 3 will take time to develop
  * Operator/Computer Use Agents
  * https://openai.com/index/introducing-operator/
* Misc:
  * Andrew Ng
  * Prediction: Personal AI Consumer playbook
  * ChatGPT as generative OS

Timestamps

* 00:00 Welcome to the Latent Space Podcast
* 00:11 Introducing Karina Nguyen
* 02:21 Karina's Journey to OpenAI
* 04:45 Early Prototypes and Projects
* 05:25 Joining Anthropic and Early Work
* 07:16 Challenges and Innovations at Anthropic
* 11:30 Launching Claude 3
* 21:57 Behavioral Design and Model Personality
* 27:37 The Making of ChatGPT Canvas
* 34:34 Canvas Update and Initial Impressions
* 34:46 Differences Between Canvas and API Outputs
* 35:50 Core Use Cases of Canvas
* 36:35 Canvas as a Writing Partner
* 36:55 Canvas vs. Google Docs and Future Improvements
* 37:35 Canvas for Coding and Executing Code
* 38:50 Challenges in Developing Canvas
* 41:45 Introduction to Tasks
* 41:53 Developing and Iterating on Tasks
* 46:27 Future Vision for Tasks and Proactive Models
* 52:23 Computer Use Agents and Their Potential
* 01:00:21 Cultural Differences Between OpenAI and Anthropic
* 01:03:46 Call to Action and Final Thoughts

Transcript

Alessio [00:00:04]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO at Decibel, and I'm joined by my usual co-host, Swyx.

swyx [00:00:11]: Hey, and today we're very, very blessed to have Karina Nguyen in the studio. Welcome.

Karina [00:00:15]: Nice to meet you.

swyx [00:00:16]: We finally made it happen. First time we tried this, you were working at a different company, and now we're here. Fortunately, you had some time, so thank you so much for joining us. Yeah, thank you for inviting me. Karina, your website says you lead a research team in OpenAI, creating new interaction paradigms for reasoning interfaces and capabilities like ChatGPT Canvas, and most recently, ChatGPT Tasks. I don't know, is that what we're calling it? 
Streaming chain of thought for o1 models and more via novel synthetic model training. What is this research team?

Karina [00:00:45]: Yeah, I need to clarify this a little bit more. I think it changed a lot since the last time we launched. So we launched Canvas, and it was the first project. I was a tech lead, basically, and then I think over time I was trying to refine what my team is, and I feel like it's at the intersection of human-computer interaction, defining what the next interaction paradigms might look like with some of the most recent reasoning models, as well as actually trying to come up with novel methods for improving those models for certain tasks. So for Canvas, for example, one of the most common use cases is basically writing and coding. And we're continually working on, okay, how do we make Canvas coding go beyond what is possible right now? And that requires us to actually do our own training and come up with new methods of synthetic data generation. The way I'm thinking about it is that my team is very full stack, from training models all the way up to deployment and making sure that we create novel product features that are coherent with what you're doing. So we're really working on that.

swyx [00:02:08]: So it's, it's a lot of work to do right now. And I think that's why I think it's such a great opportunity. You know, how could something this big work in like an industrial space and in the things that we're doing, you know, it's a really exciting time for us. And it's just, you know, it's a lot of work, but what I really like about working in digital space is the, you know, the visual space is always the best place to stay. It's not just the skill sets that need to be done.

Alessio [00:02:17]: Like we have, like, a lot of things to be done, but like, we've got a lot of different, you know, things to come up with. I know you have some early UX prototypes with GPT-3 as well, and kind of like maybe how that has informed the way you build products.

Karina [00:02:32]: I think my background was mostly working on computer vision applications for investigative journalism, back when I was at school at Berkeley, and I was working a lot with the Human Rights Center and investigative journalists from various media. And that's how I learned more about AI, like with vision transformers. And at that time, I was working with some of the professors at Berkeley AI Research.

swyx [00:03:00]: There are some Pulitzer Prize winning professors, right, that teach there?

Karina [00:03:04]: No, so it was mostly reporting for teams like the New York Times, like the AP (Associated Press). So it was all in the context of the Human Rights Center. Got it. Yeah. So that was in computer vision. And then I saw Chris Olah's work around, you know, interpretability from Google. And that's how I found out about Anthropic. And at that time, I think it was the year when Ukraine's war happened. And I was trying to find a full-time job. And it kind of all got distracted. It was kind of like spring. And I was very focused on figuring out what to do. And then my best option at that time was just to continue my internship at the New York Times and convert to full-time. At the New York Times, it was mostly product engineering work around R&D prototypes, kind of like storytelling features on the mobile experience. So kind of like storytelling experiences. And at that time, we were thinking about how we employ NLP techniques to scrape some of the archives from the New York Times or something. But then I always wanted to get into AI. And like I knew OpenAI for a while, like since I was like, and I was like, I don't know, I don't know. So I kind of applied to Anthropic just on the website. And I was rejected the first time. But then at that time, they were not hiring for anything like product engineering or front-end engineering, which was something I was interested in at that time. And then there was a new opening at Anthropic that was kind of like you are front-end engineer. And so I applied. And that's how my journey began. But the earlier prototypes were mostly ones where I used CLIP.

swyx [00:05:13]: We'll briefly mention that the Ukrainian crisis actually hit home more for you than most people because you're fro

One last Gold sponsor slot is available for the AI Engineer Summit in NYC. Our last round of invites is going out soon - apply here - If you are building AI agents or AI eng teams, this will be the single highest-signal conference of the year for you!

While the world melts down over DeepSeek, few are talking about the OTHER notable group of former hedge fund traders who pivoted into AI and built a remarkably profitable consumer AI business with a tiny, incredibly cracked engineering team: Chai Research. In short order they have:

* Started a Chat AI company well before Noam Shazeer started Character AI, and outlasted his departure.
* Crossed 1m DAU in 2.5 years - William updates us on the pod that they’ve hit 1.4m DAU now, another +40% from a few months ago. Revenue crossed >$22m.
* Launched the Chaiverse model crowdsourcing platform - taking 3-4 week A/B testing cycles down to 3-4 hours, and deploying >100 models a week.

While they’re not paying million dollar salaries, you can tell they’re doing pretty well for an 11 person startup:

The Chai Recipe: Building infra for rapid evals

Remember how the central thesis of LMArena (formerly LMSYS) is that the only comprehensive way to evaluate LLMs is to let users try them out and pick winners?

At the core of Chai is a mobile app that looks like Character AI, but is actually the largest LLM A/B testing arena in the world, specialized on retaining chat users for Chai’s use cases (therapy, assistant, roleplay, etc). It’s basically what LMArena would be if taken very, very seriously at one company (with $1m in prizes to boot):

Chai publishes occasional research on how they think about this, including talks at their Palo Alto office:

William expands upon this in today’s podcast (34 mins in):

Fundamentally, the way I would describe it is when you're building anything in life, you need to be able to evaluate it. 
And through evaluation, you can iterate. We can look at benchmarks, and we can talk about the issues with benchmarks, why they may not generalize as well as one would hope, and the challenges of working with them. But something that works incredibly well is getting feedback from humans. And so we built this thing where anyone can submit a model to our developer backend, and it gets put in front of 5000 users, and the users can rate it. And we can then have a really accurate ranking of which models users are finding more engaging or more entertaining. And it's at this point now where every day we evaluate between 20 and 50 models, LLMs, every single day, right. So even though we've only got a team of, say, five AI researchers, they're able to iterate through a huge quantity of LLMs, right. So our team ships, let's just say, minimum 100 LLMs a week is what we're able to iterate through. Now, before that moment in time, we might iterate through three a week; there was a time when even doing like five a month was a challenge, right? By being able to change the feedback loops to the point where it's not: let's launch these three models, let's do an A/B test, let's assign different cohorts, let's wait 30 days to see what the day 30 retention is. If you're doing an app, A/B testing 101 would be: do a 30-day retention test, assign different treatments to different cohorts and come back in 30 days. So that's insanely slow. That's just, it's too slow. 
And so we were able to get that 30-day feedback loop all the way down to something like three hours.

In Crowdsourcing the leap to Ten Trillion-Parameter AGI, William describes Chai’s routing as a recommender system, which makes a lot more sense to us than previous pitches for model routing startups:

William is notably counter-consensus in a lot of his AI product principles:

* No streaming: Chats appear all at once to allow rejection sampling
* No voice: Chai actually beat Character AI to introducing voice - but removed it after finding that it was far from a killer feature.
* Blending: “Something that we love to do at Chai is blending, which is, you know, it's the simplest way to think about it is you're going to end up, and you're going to pretty quickly see you've got one model that's really smart, one model that's really funny. How do you get the user an experience that is both smart and funny? Well, just 50% of the requests, you can serve them the smart model, 50% of the requests, you serve them the funny model.” (that’s it!)

But chief above all is the recommender system.

We also referenced Exa CEO Will Bryk’s concept of SuperKnowledge:

Full Video version

On YouTube. Please like and subscribe!

Timestamps

* 00:00:04 Introductions and background of William Beauchamp
* 00:01:19 Origin story of Chai AI
* 00:04:40 Transition from finance to AI
* 00:11:36 Initial product development and idea maze for Chai
* 00:16:29 User psychology and engagement with AI companions
* 00:20:00 Origin of the Chai name
* 00:22:01 Comparison with Character AI and funding challenges
* 00:25:59 Chai's growth and user numbers
* 00:34:53 Key inflection points in Chai's growth
* 00:42:10 Multi-modality in AI companions and focus on user-generated content
* 00:46:49 Chaiverse developer platform and model evaluation
* 00:51:58 Views on AGI and the nature of AI intelligence
* 00:57:14 Evaluation methods and human feedback in AI development
* 01:02:01 Content creation and user experience in Chai
* 01:04:49 Chai Grant program and company culture
* 01:07:20 Inference optimization and compute costs
* 01:09:37 Rejection sampling and reward models in AI generation
* 01:11:48 Closing thoughts and recruitment

Transcript

Alessio [00:00:04]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO at Decibel, and today we're in the Chai AI office with my usual co-host, Swyx.

swyx [00:00:14]: Hey, thanks for having us. It's rare that we get to get out of the office, so thanks for inviting us to your home. We're in the office of Chai with William Beauchamp. Yeah, that's right. You're founder of Chai AI, but previously, I think you're concurrently also running your fund?

William [00:00:29]: Yep, so I was simultaneously running an algorithmic trading company, but I fortunately was able to kind of exit from that, I think just in Q3 last year. Yeah, congrats. Yeah, thanks.

swyx [00:00:43]: So Chai has always been on my radar because, well, first of all, you do a lot of advertising, I guess, in the Bay Area, so it's working. Yep. And second of all, the reason I reached out to a mutual friend, Joyce, was because I'm just generally interested in the... 
...consumer AI space, chat platforms in general. I think there's a lot of inference insights that we can get from that, as well as human psychology insights, kind of a weird blend of the two. And we also share a bit of a history as former finance people crossing over. I guess we can just kind of start it off with the origin story of Chai.

William [00:01:19]: Why decide working on a consumer AI platform rather than B2B SaaS? So just quickly touching on the background in finance. Sure. Originally, I'm from the UK, born in London. And I was fortunate enough to go study economics at Cambridge. And I graduated in 2012. And at that time, for everyone in the UK and everyone on my course, HFT, quant trading was really the big thing. It was like the big wave that was happening. So there was a lot of opportunity in that space. And throughout college, I'd sort of played poker. So I'd, you know, I dabbled as a professional poker player. And I was able to accumulate this sort of, you know, say $100,000 through playing poker. And at the time, as my friends would go work at companies like Jane Street or Citadel, I kind of did the maths. And I just thought, well, maybe if I traded my own capital, I'd probably come out ahead. I'd make more money than just going to work at Jane Street.

swyx [00:02:20]: With 100k base as capital?

William [00:02:22]: Yes, yes. That's not a lot. Well, it depends what strategies you're doing. And, you know, there is an advantage to being small, right? Because there are, if you have a 10... Strategies that don't work in size. Exactly, exactly. So if you have a fund of $10 million, if you find a little anomaly in the market that you might be able to make 100k a year from, that's a 1% return on your 10 million fund. If your fund is 100k, that's 100% return, right? So being small, in some sense, was an advantage. So I started off, taught myself Python, and machine learning was like the big thing as well. Machine learning had really, it was the first, you know, big time machine learning was being used for image recognition, neural networks come out, you get dropout. And, you know, so this was the big thing that was going on at the time. So I probably spent my first three years out of Cambridge just building neural networks, building random forests to try and predict asset prices, right, and then trade that using my own money. And that went well. And, you know, if you start something, and it goes well, you try and hire more people. And the first people that came to mind were the talented people I went to college with. And so I hired some friends. And that went well and I hired some more. And eventually, I kind of ran out of friends to hire. And so that was when I formed the company. And from that point on, we had our ups and we had our downs. And that was a whole long story and journey in itself. But after doing that for about eight or nine years, on my 30th birthday, which was four years ago now, I kind of took a step back to just evaluate my life, right? This is what one does when one turns 30. You know, I just heard it. I hear you. And, you know, I looked at my 20s and I loved it. It was a really special time. I was really lucky and fortunate to have worked with this amazing team, been successful, had a lot of hard times. And through the hard times, learned wisdom and then a l

Sponsorships and applications for the AI Engineer Summit in NYC are live! (Speaker CFPs have closed) If you are building AI agents or leading teams of AI Engineers, this will be the single highest-signal conference of the year for you.

Right after Christmas, the Chinese Whale Bros ended 2024 by dropping the last big model launch of the year: DeepSeek v3. Right now on LM Arena, DeepSeek v3 has a score of 1319, right under the full o1 model, Gemini 2, and 4o latest. This makes it the best open weights model in the world in January 2025.

There has been a big recent trend in Chinese labs releasing very large open weights models, with Tencent releasing Hunyuan-Large in November and Hailuo releasing MiniMax-Text this week, both over 400B in size. However, these extra-large language models are very difficult to serve.

Baseten was the first of the Inference neocloud startups to get DeepSeek V3 online, because of their H200 clusters, their close collaboration with the DeepSeek team and early support of SGLang, a relatively new vLLM alternative that is also used at frontier labs like xAI. Each H200 has 141 GB of VRAM with 4.8 TB per second of bandwidth, meaning that you can use 8 H200s in a node to run inference on DeepSeek v3 in FP8, taking into account KV Cache needs.

We have been close to Baseten since Sarah Guo introduced Amir Haghighat to swyx, and they supported the very first Latent Space Demo Day in San Francisco, which was effectively the trial run for swyx and Alessio to work together! Since then, Philip Kiely also led a well attended workshop on TensorRT LLM at the 2024 World's Fair. 
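As a rough check on the H200 sizing claim above, the arithmetic can be sketched as follows. The 141 GB and 8-GPU figures come from the text; the 671B total parameter count for DeepSeek v3 and the 80 GB H100 comparison are assumptions brought in from outside the post, and the function name is hypothetical. The estimate counts weights only and ignores runtime overhead, so it is a back-of-the-envelope bound rather than a deployment plan.

```python
def node_headroom_gb(params_billion, bytes_per_param, gpus, vram_gb):
    """GB left on one node for KV cache and activations after loading weights.

    1e9 parameters at N bytes each is N GB (decimal), so the weight
    footprint in GB is simply params_billion * bytes_per_param.
    """
    weights_gb = params_billion * bytes_per_param
    return gpus * vram_gb - weights_gb


DEEPSEEK_V3_PARAMS_B = 671  # assumption: total parameters, in billions
FP8_BYTES = 1               # FP8 stores one byte per weight
BF16_BYTES = 2              # 16-bit formats store two bytes per weight

# 8 x H200 (141 GB each, from the text) in FP8: weights fit with room to spare.
print(node_headroom_gb(DEEPSEEK_V3_PARAMS_B, FP8_BYTES, 8, 141))   # 457

# 8 x H100 (assumed 80 GB each) in FP8: the weights alone do not fit.
print(node_headroom_gb(DEEPSEEK_V3_PARAMS_B, FP8_BYTES, 8, 80))    # -31

# 8 x H200 in a 16-bit format: also does not fit on a single node.
print(node_headroom_gb(DEEPSEEK_V3_PARAMS_B, BF16_BYTES, 8, 141))  # -214
```

Under these assumptions an 8x H200 node holds the FP8 weights (about 671 GB of 1,128 GB) and leaves roughly 457 GB for KV cache, which is consistent with the post's point that the H200 clusters were what made single-node serving practical.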
We worked with him to get two of their best representatives, Amir and Lead Model Performance Engineer Yineng Zhang, to discuss DeepSeek, SGLang, and everything they have learned running Mission Critical Inference workloads at scale for some of the largest AI products in the world.

The Three Pillars of Mission Critical Inference

We initially planned to focus the conversation on SGLang, but Amir and Yineng were quick to correct us that the choice of inference framework is only the simplest, first choice of 3 things you need for production inference at scale:

“I think it takes three things, and each of them individually is necessary but not sufficient:

* Performance at the model level: how fast are you running this one model running on a single GPU, let's say. The framework that you use there can matter. The techniques that you use there can matter. The MLA technique, for example, that Yineng mentioned, or the CUDA kernels that are being used. But there's also techniques being used at a higher level, things like speculative decoding with draft models or with Medusa heads. And these are implemented in the different frameworks, or you can even implement it yourself, but they're not necessarily tied to a single framework. But using speculative decoding gets you massive upside when it comes to being able to handle high throughput. But that's not enough. Invariably, that one model running on a single GPU, let's say, is going to get too much traffic that it cannot handle.

* Horizontal scaling at the cluster/region level: And at that point, you need to horizontally scale it. That's not an ML problem. That's not a PyTorch problem. That's an infrastructure problem. How quickly do you go from a single replica of that model to 5, to 10, to 100? And so that's the second pillar that is necessary for running these mission critical inference workloads.

And what does it take to do that? Some people are like, Oh, you just need Kubernetes and Kubernetes has an autoscaler and that just works. That doesn't work for these kinds of mission critical inference workloads. And you end up catching yourself wanting, bit by bit, to rebuild those infrastructure pieces from scratch. This has been our experience.

And then going even a layer beyond that, Kubernetes runs in a single cluster, tied to a single region. And when it comes to inference workloads and needing GPUs more and more, you know, we're seeing that you cannot meet the demand inside of a single region of a single cloud. In other words, a single model might want to horizontally scale up to 200 replicas, each of which is, let's say, 2 H100s or 4 H100s or even a full node, and you run into limits of the capacity inside of that one region. And what we had to build to get around that was the ability to have a single model have replicas across different regions. So, you know, there are models on Baseten today that have 50 replicas in GCP East and 80 replicas in AWS West and Oracle in London, etc.

* Developer experience for Compound AI Systems: The final one is wrapping the power of the first two pillars in a very good developer experience to be able to afford certain workflows like the ones that I mentioned, around multi step, multi model inference workloads, because more and more we're seeing that the market is moving towards those, that the needs are generally in these sort of more complex workflows.”

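The multi-region point in the second pillar can be illustrated with a toy placement routine: when one region cannot supply all the replicas a model needs, the remainder spills into other regions. This is only a sketch of the idea, not Baseten's scheduler; `place_replicas`, the region names, and the capacity numbers are hypothetical, and a real system would also weigh latency, cost, and GPU type.

```python
def place_replicas(wanted: int, capacity: dict[str, int]) -> dict[str, int]:
    """Greedily spread `wanted` replicas over regions, in the order given.

    `capacity` maps a region name to the number of replicas it can host.
    Raises RuntimeError if the combined capacity is insufficient.
    """
    placement: dict[str, int] = {}
    remaining = wanted
    for region, free in capacity.items():
        if remaining == 0:
            break
        take = min(free, remaining)  # never exceed what the region can host
        if take > 0:
            placement[region] = take
            remaining -= take
    if remaining > 0:
        raise RuntimeError(f"short by {remaining} replicas across all regions")
    return placement


# Hypothetical capacities: no single region can host all 200 replicas.
regions = {"gcp-east": 50, "aws-west": 80, "oracle-london": 100}
print(place_replicas(200, regions))
# {'gcp-east': 50, 'aws-west': 80, 'oracle-london': 70}
```

The greedy order here is simply dictionary order, chosen to keep the example short; it is enough to show why a single-cluster autoscaler hits a ceiling that a cross-region placement layer does not.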
We think they said it very well.
Show Notes
* Amir Haghighat, Co-Founder, Baseten
* Yineng Zhang, Lead Software Engineer, Model Performance, Baseten
Full YouTube Episode
Please like and subscribe!
Timestamps
* 00:00 Introduction and Latest AI Model Launch
* 00:11 DeepSeek v3: Specifications and Achievements
* 03:10 Latent Space Podcast: Special Guests Introduction
* 04:12 DeepSeek v3: Technical Insights
* 11:14 Quantization and Model Performance
* 16:19 MoE Models: Trends and Challenges
* 18:53 Baseten's Inference Service and Pricing
* 31:13 Optimization for DeepSeek
* 31:45 Three Pillars of Mission Critical Inference Workloads
* 32:39 Scaling Beyond Single GPU
* 33:09 Challenges with Kubernetes and Infrastructure
* 33:40 Multi-Region Scaling Solutions
* 35:34 SGLang: A New Framework
* 38:52 Key Techniques Behind SGLang
* 48:27 Speculative Decoding and Performance
* 49:54 Future of Fine-Tuning and RLHF
* 01:00:28 Baseten's V3 and Industry Trends
Baseten’s previous TensorRT LLM workshop:
Get full access to Latent Space at www.latent.space/subscribe

Due to overwhelming demand (>15x applications:slots), we are closing CFPs for AI Engineer Summit NYC today. Last call! Thanks, we’ll be reaching out to all shortly!
The world’s top AI blogger and friend of every pod, Simon Willison, dropped a monster 2024 recap: Things we learned about LLMs in 2024. Brian of the excellent Techmeme Ride Home pinged us for a connection and a special crossover episode, our first in 2025. The target audience for this podcast is a tech-literate, but non-technical one. You can see Simon’s notes for AI Engineers in his World’s Fair Keynote.
Timestamps
* 00:00 Introduction and Guest Welcome
* 01:06 State of AI in 2025
* 01:43 Advancements in AI Models
* 03:59 Cost Efficiency in AI
* 06:16 Challenges and Competition in AI
* 17:15 AI Agents and Their Limitations
* 26:12 Multimodal AI and Future Prospects
* 35:29 Exploring Video Avatar Companies
* 36:24 AI Influencers and Their Future
* 37:12 Simplifying Content Creation with AI
* 38:30 The Importance of Credibility in AI
* 41:36 The Future of LLM User Interfaces
* 48:58 Local LLMs: A Growing Interest
* 01:07:22 AI Wearables: The Next Big Thing
* 01:10:16 Wrapping Up and Final Thoughts
Transcript
[00:00:00] Introduction and Guest Welcome
[00:00:00] Brian: Welcome to the first bonus episode of the Techmeme Ride Home for the year 2025. I'm your host as always, Brian McCullough. Listeners to the pod over the last year know that I have made a habit of quoting from Simon Willison's blog when new stuff happens in AI. Simon has become a go-to for many folks in terms of, you know, analyzing things, criticizing things in the AI space.
[00:00:33] Brian: I've wanted to talk to you for a long time, Simon. So thank you for coming on the show. No, it's a privilege to be here.
And the person that made this connection happen is our friend Swyx, who has been on the show, even going back to the Twitter Spaces days, but is also an AI guru in their own right. Swyx, thanks for coming on the show also.
[00:00:54] swyx: Thanks. I'm happy to be on and have been a regular listener, so just happy to [00:01:00] contribute as well.
[00:01:00] Brian: And a good friend of the pod, as they say. Alright, let's go right into it.
[00:01:06] State of AI in 2025
[00:01:06] Brian: Simon, I'm going to do the most unfair, broad question first, so let's get it out of the way. The year 2025. Broadly, what is the state of AI as we begin this year?
[00:01:20] Brian: Whatever you want to say, I don't want to lead the witness.
[00:01:22] Simon: Wow. So many things, right? I mean, the big thing is everything's got really good and fast and cheap. Like, that was the trend throughout all of 2024. The good models got so much cheaper, they got so much faster, they got multimodal, right? The image stuff isn't even a surprise anymore.
[00:01:39] Simon: They're growing video, all of that kind of stuff. So that's all really exciting.
[00:01:43] Advancements in AI Models
[00:01:43] Simon: At the same time, they didn't get massively better than GPT-4, which was a bit of a surprise. So that's sort of one of the open questions: are we going to see huge... But I kind of feel like that's a bit of a distraction, because GPT-4, but way cheaper, much larger context lengths, and it [00:02:00] can do multimodal, is better, right? That's a better model, even if it's not...
[00:02:05] Brian: What people were expecting or hoping, maybe expecting is not the right word, but hoping, was that we would see another step change, right? From like GPT-2 to 3 to 4, we were expecting or hoping that maybe we were going to see the next evolution in that sort of, yeah.
[00:02:21] Simon: We did see that, but not in the way we expected.
We thought the model was just going to get smarter, and instead we got massive drops in price. We got all of these new capabilities. You can talk to the things now, right? They can do simulated audio input, all of that kind of stuff. And so it's interesting to me that the models improved in all of these ways we weren't necessarily expecting.
[00:02:43] Simon: I didn't know it would be able to do an impersonation of Santa Claus, or that I could, you know, talk to it through my phone and show it what I was seeing, by the end of 2024. But yeah, we didn't get that GPT-5 step. And that's one of the big open questions: is that actually just around the corner, and we'll have a bunch of GPT-5 class models drop in the [00:03:00] next few months?
[00:03:00] Simon: Or is there a limit?
[00:03:03] Brian: If you were a betting man and wanted to put money on it, do you expect to see a phase change, step change in 2025?
[00:03:11] Simon: I don't particularly for that, like, the models, but smarter. I think all of the trends we're seeing right now are going to keep on going, especially the inference time compute, right?
[00:03:21] Simon: The trick that o1 and o3 are doing, which means that you can solve harder problems, but they cost more and it churns away for longer. I think that's going to happen because that's already proven to work. I don't know. Maybe there will be a step change to a GPT-5 level, but honestly, I'd be completely happy if we got what we've got right now.
[00:03:41] Simon: But cheaper and faster and more capabilities and longer contexts and so forth. That would be thrilling to me.
[00:03:46] Brian: Digging into what you've just said, one of the things... By the way, I hope to link in the show notes to Simon's year-end post about the things we learned about LLMs in 2024.
Look for that in the show notes.
[00:03:59] Cost Efficiency in AI
[00:03:59] Brian: One of the things that you [00:04:00] did say, that you alluded to even right there, was that in the last year, you felt like the GPT-4 barrier was broken, i.e., other models, even open source ones, are now regularly matching sort of the state of the art.
[00:04:13] Simon: Well, it's interesting, right? So the GPT-4 barrier was: a year ago, the best available model was OpenAI's GPT-4, and nobody else had even come close to it.
[00:04:22] Simon: And they'd been in the lead for like nine months, right? That thing came out in what, February, March of 2023. And for the rest of 2023, nobody else came close. And so at the start of last year, like a year ago, the big question was, why has nobody beaten them yet? Like, what do they know that the rest of the industry doesn't know?
[00:04:40] Simon: And today, I've counted 18 organizations other than OpenAI who've put out a model which clearly beats that GPT-4 from a year ago. Like, maybe they're not better than GPT-4o, but that barrier got completely smashed. And yeah, a few of those I've run on my laptop, which is wild to me.
[00:04:59] Simon: Like, [00:05:00] it was very, very wild. It felt very clear to me a year ago that if you want GPT-4, you need a rack of $40,000 GPUs just to run the thing. And that turned out not to be true. This is that big trend from last year of the models getting more efficient, cheaper to run, just as capable with smaller weights and so forth.
[00:05:20] Simon: And I ran another GPT-4 model on my laptop this morning, right? Microsoft Phi-4 just came out. And if you look at the benchmarks, it's definitely up there with GPT-4o. It's probably not as good when you actually get into the vibes of the thing, but it's a 14 gigabyte download and I can run it on a MacBook Pro.
[00:05:38] Simon: Like who saw that coming?
The most exciting, like the close of the year on Christmas day, just a few weeks ago, was when DeepSeek dropped their DeepSeek v3 model on Hugging Face without even a readme file. It was just like a giant binary blob that I can't run on my laptop. It's too big. But in all of the benchmarks, it's now by far the best available [00:06:00] open weights model.
[00:06:01] Simon: Like it's beating the Meta Llamas and so forth. And that was trained for five and a half million dollars, which is a tenth of the price that people thought it costs to train these things. So everything's trending smaller and faster and more efficient.
[00:06:15] Brian: Well, okay.
[00:06:16] Challenges and Competition in AI
[00:06:16] Brian: I kind of was going to get to that later, but let's combine this with what I was going to ask you next, which is, you know, you're talking also in the piece about the LLM prices crashing, which I've even seen in projects that I'm working on, but explain that to a general audience, because we hear all the time that LLMs are eye-wateringly expensive to run. And we'll come back to the cheap Chinese LLM, but first of all, for the end user, what you're suggesting is that we're starting to see the cost come down sort of in the traditional technology way of costs coming down over time?
[00:06:49] Simon: Yes, but very aggressively.
[00:06:51] Simon: I mean, my favorite example here is if you look at GPT-3, so OpenAI's GPT-3, which was the best available model in [00:07:00] 2022 and through most of 2023. The models that we have today, the OpenAI models, are a hundred times cheaper. So there was a 100x drop in price for OpenAI from their best available model, like two and a half years ago, to today.
[00:07:14] Brian: And just to be clear, not to train the model, but for the use of tokens and things.
[00:07:20] Simon: Exactly, for running prompts through them. And then when you look at the really top tier model providers right now, I think, are OpenAI, Anthropic, Google, and Meta. And there are a bunch of others that I could list there as well.
[00:07:32] Simon: Mistral are very good. The DeepSeek and Qwen models have got great. There's a whole bunch of providers serving really good models. But even if you just look at the sort of big brand name providers, they all offer models now that are