“AGI’s Last Bottlenecks” by Adam Khoja, Laura Hiscott

Description

Adam Khoja is a co-author of the recent study, “A Definition of AGI.” The opinions expressed in this article are his own and do not necessarily represent those of the study's other authors.

Laura Hiscott is a core contributor at AI Frontiers and collaborated on the development and writing of this article.

Dan Hendrycks, lead author of “A Definition of AGI,” provided substantial input throughout this article's drafting.

---

In a recent interview on the “Dwarkesh Podcast,” OpenAI co-founder Andrej Karpathy claimed that artificial general intelligence (AGI) is around a decade away, expressing doubt about “over-predictions in the industry.” Coming amid growing discussion of an “AI bubble,” Karpathy's comment throws cold water on some of the more bullish predictions from leading tech figures. Yet those figures don’t seem to be reconsidering their positions. Following Anthropic CEO Dario Amodei's prediction last year that we might have “a country of geniuses [...]

---

Outline:

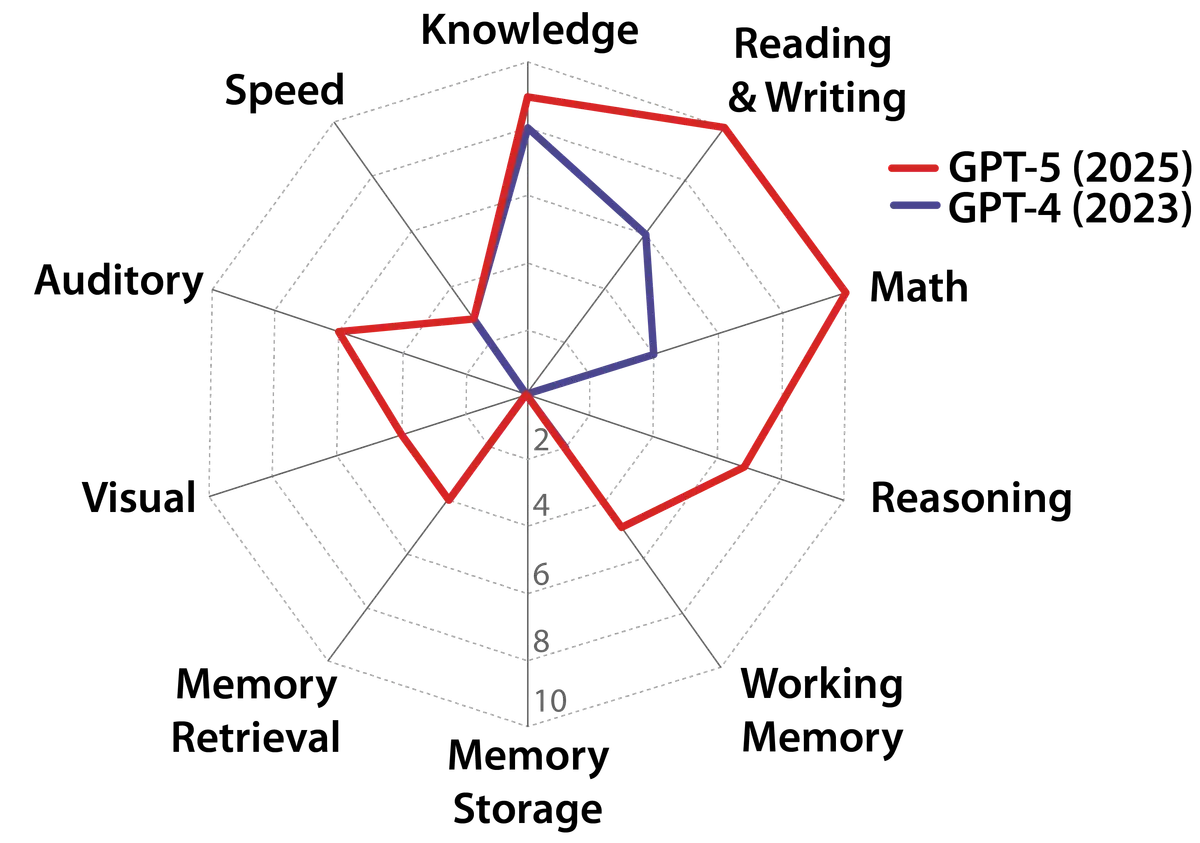

(03:50 ) Missing Capabilities and the Path to Solving Them

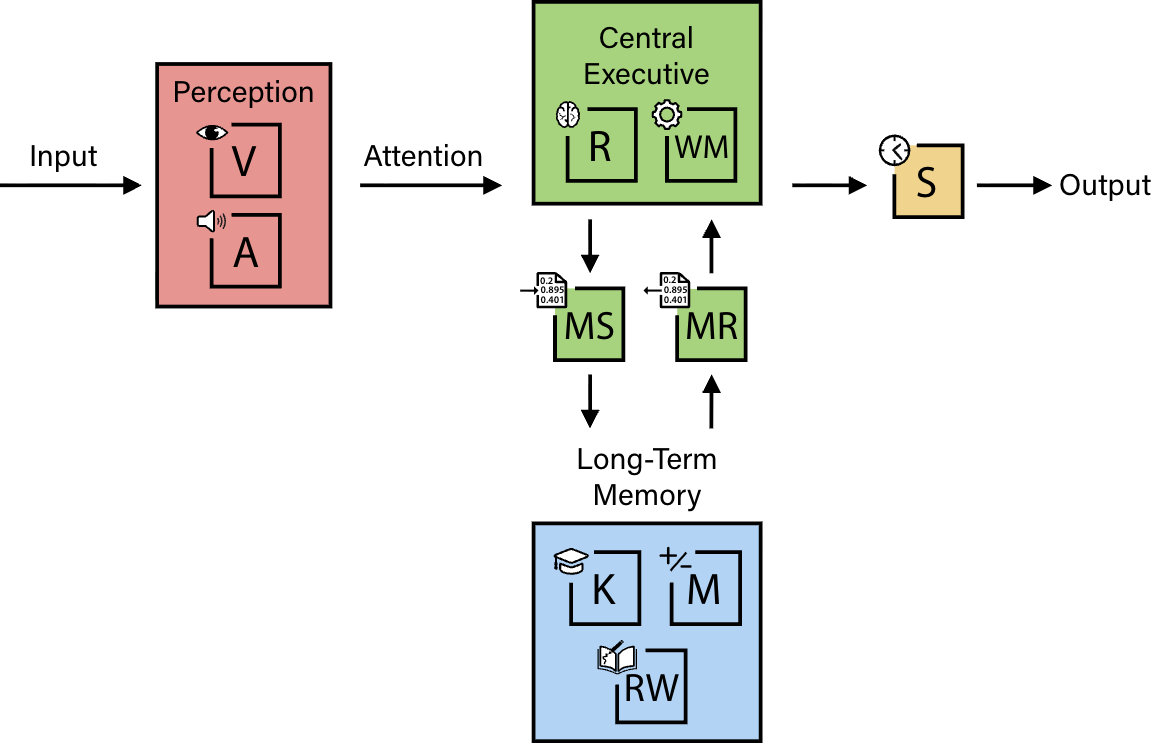

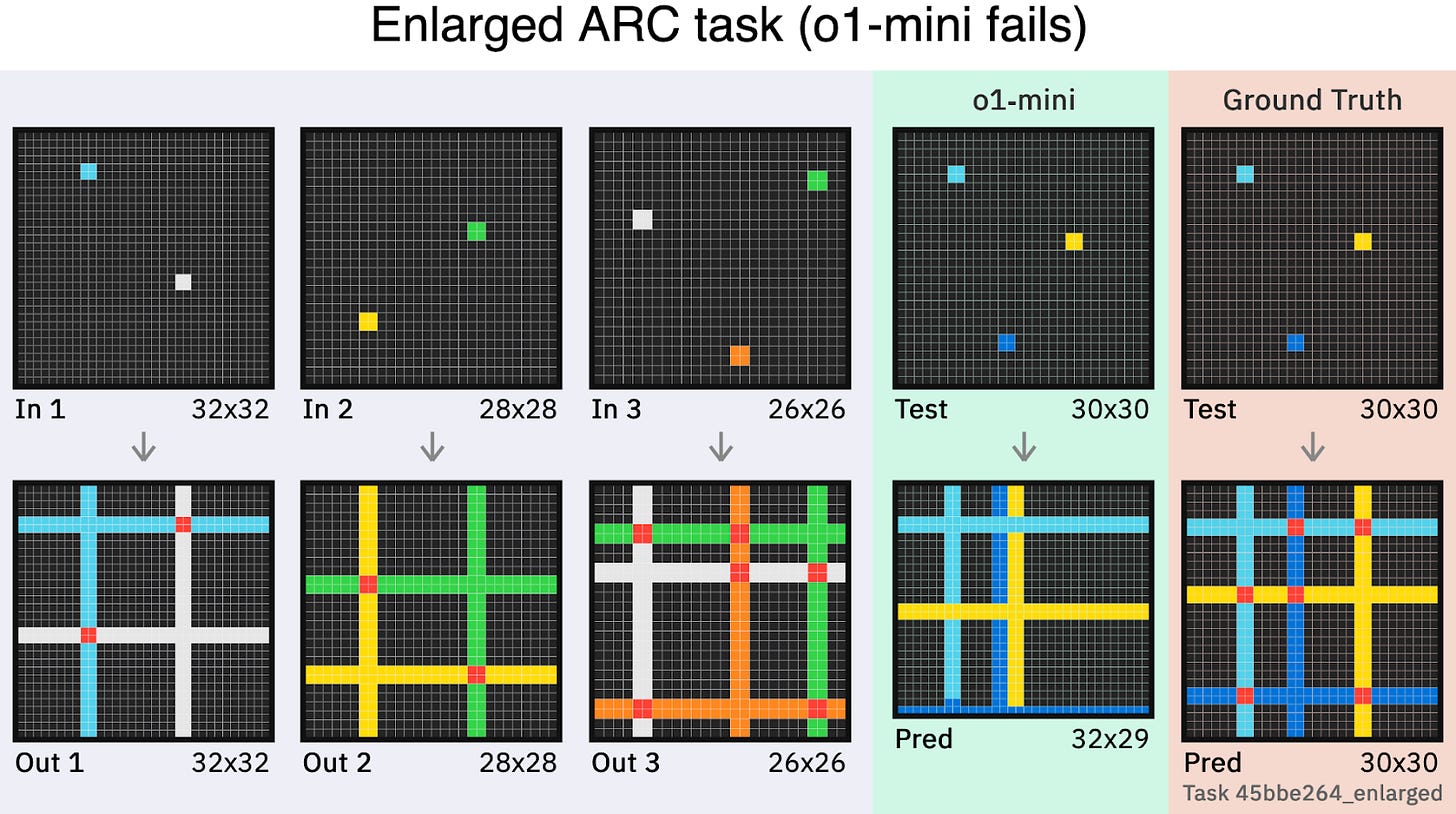

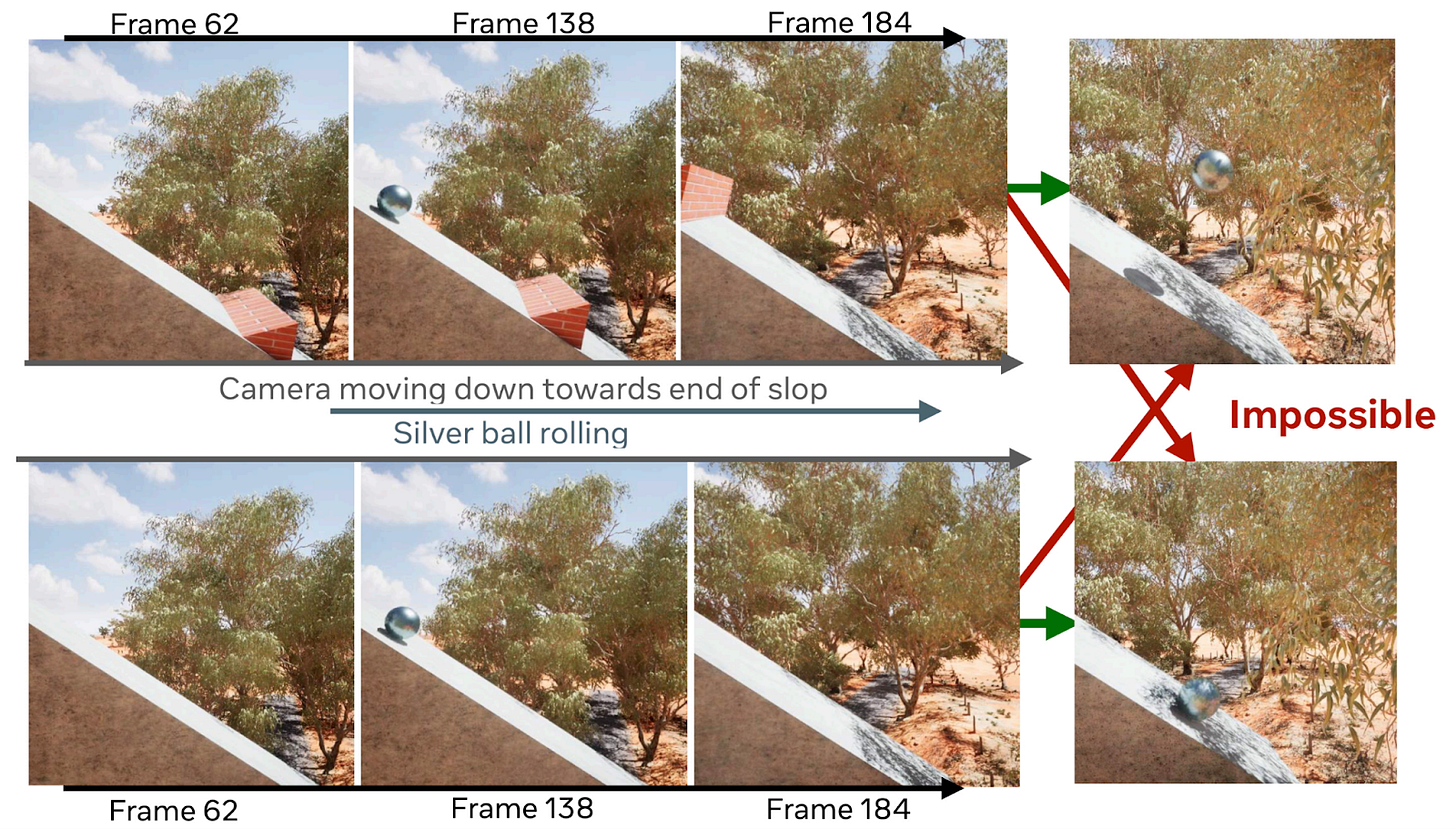

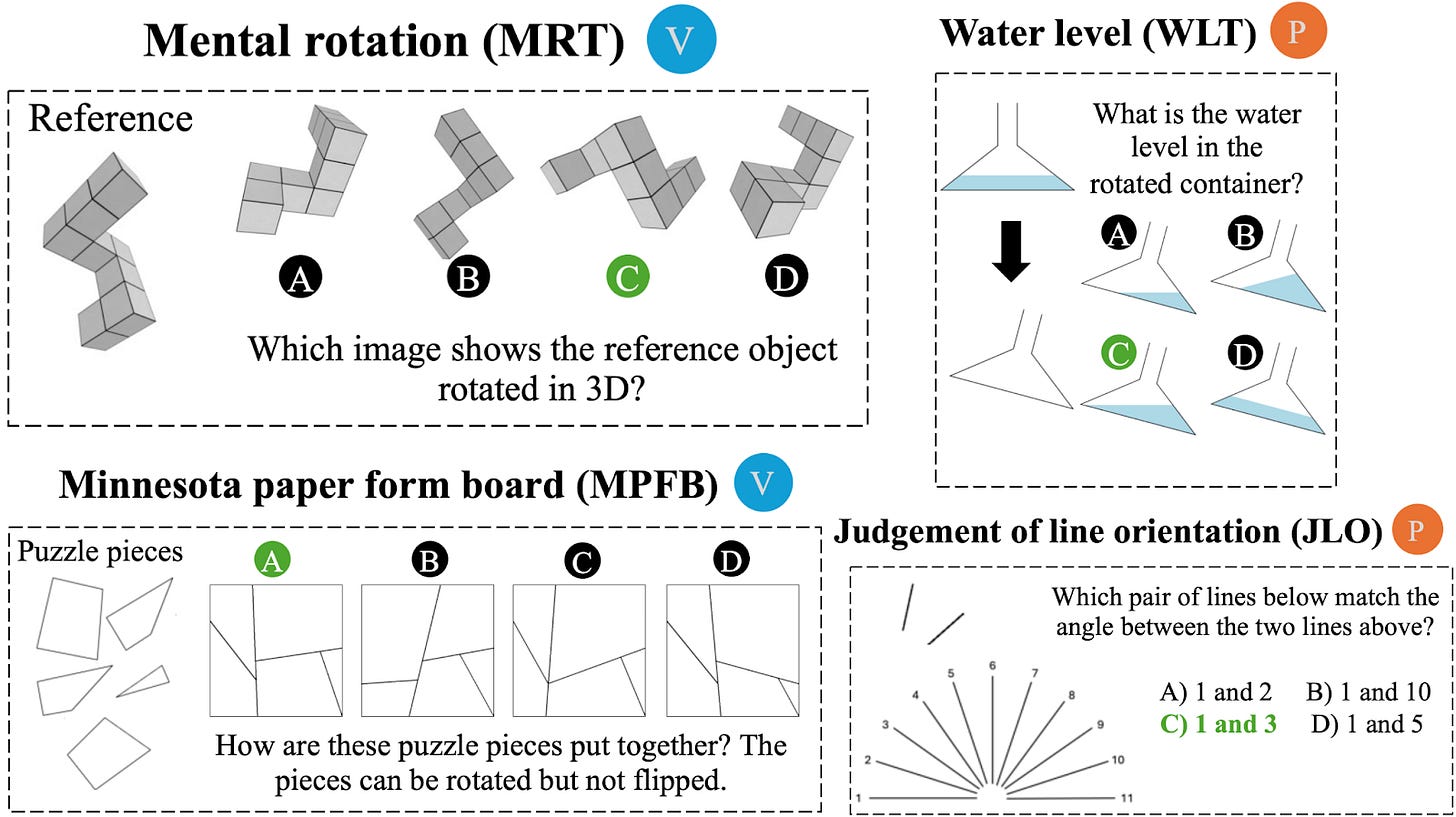

(05:13 ) Visual Processing

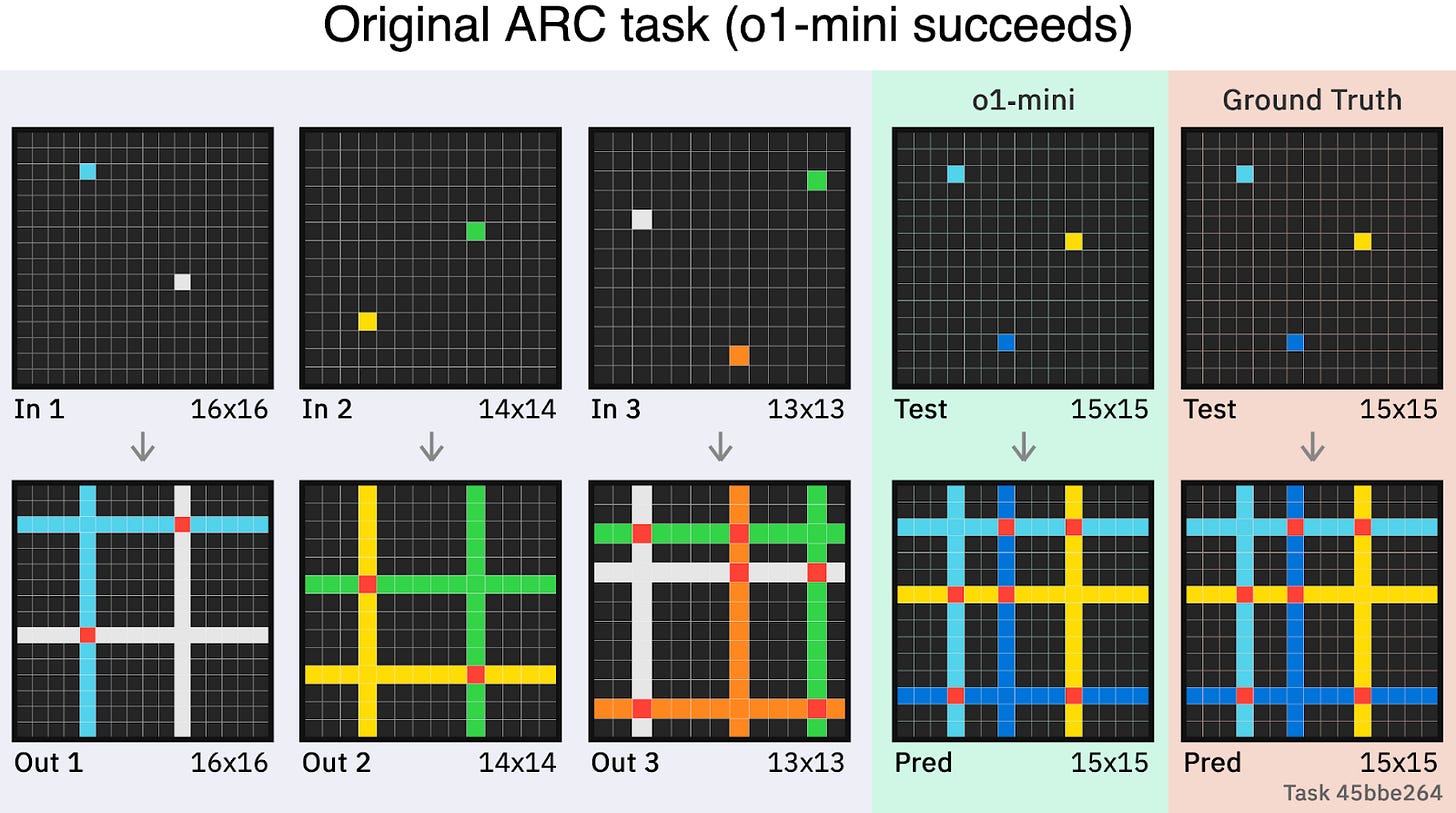

(07:38 ) On-the-Spot Reasoning

(10:15 ) Auditory Processing

(11:09 ) Speed

(12:04 ) Working Memory

(13:16 ) Long-Term Memory Retrieval (Hallucinations)

(14:24 ) Long-Term Memory Storage (Continual Learning)

(16:36 ) Conclusion

(18:47 ) Discussion about this post

---

First published: October 22nd, 2025

Source: https://aifrontiersmedia.substack.com/p/agis-last-bottlenecks

---

Narrated by TYPE III AUDIO.
