The Illusion of Thinking in Large Reasoning Models

Description

https://machinelearning.apple.com/research/illusion-of-thinking

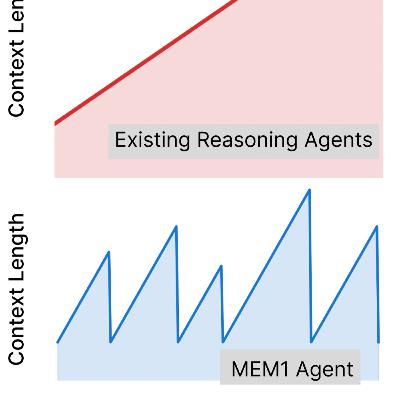

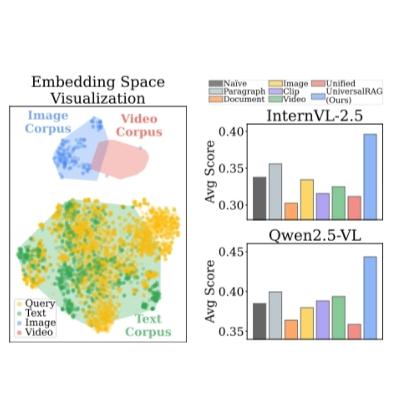

The paper investigates the capabilities and limitations of Large Reasoning Models (LRMs), a new generation of language models designed for complex problem-solving. It critiques current evaluation methods, which often rely on mathematical benchmarks prone to data contamination, and instead proposes controllable puzzle environments in which problem complexity can be varied systematically to analyze model behavior. The research identifies three performance regimes based on problem complexity: at low complexity, standard models may outperform LRMs; at medium complexity, LRMs show an advantage; at high complexity, both collapse. Crucially, LRMs show a counter-intuitive decline in reasoning effort as problems become overwhelmingly difficult, even though they still have token budget available. They also show surprising limitations in executing exact algorithms and reason inconsistently across different puzzle types.
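
To make the idea of a controllable puzzle environment concrete, here is a minimal sketch in Python using Tower of Hanoi, one of the puzzles studied in the paper. It is an illustration under stated assumptions, not the authors' evaluation harness: the function names `solve_hanoi` and `is_valid_solution`, the peg numbering, and the move encoding are all hypothetical. The number of disks `n` acts as the complexity knob, and a simulator checks any proposed move sequence step by step, so correctness does not depend on a benchmark answer key.

```python
from typing import List, Tuple

# A move is (source peg, target peg); pegs are numbered 0, 1, 2 (assumed encoding).
Move = Tuple[int, int]


def solve_hanoi(n: int, src: int = 0, aux: int = 1, dst: int = 2) -> List[Move]:
    """Return the optimal move list for n disks, which has 2**n - 1 moves."""
    if n == 0:
        return []
    return (
        solve_hanoi(n - 1, src, dst, aux)    # park n-1 disks on the auxiliary peg
        + [(src, dst)]                       # move the largest disk to the target
        + solve_hanoi(n - 1, aux, src, dst)  # move the n-1 disks on top of it
    )


def is_valid_solution(n: int, moves: List[Move]) -> bool:
    """Simulate the moves and check that all n disks end up on peg 2."""
    # Each peg is a stack; the largest disk (n) sits at the bottom of peg 0.
    pegs = [list(range(n, 0, -1)), [], []]
    for src, dst in moves:
        if src not in (0, 1, 2) or dst not in (0, 1, 2) or not pegs[src]:
            return False  # unknown peg or nothing to move
        disk = pegs[src][-1]
        if pegs[dst] and pegs[dst][-1] < disk:
            return False  # a larger disk may not sit on a smaller one
        pegs[dst].append(pegs[src].pop())
    return pegs[2] == list(range(n, 0, -1))


if __name__ == "__main__":
    # Complexity grows exponentially with n: the shortest solution has 2**n - 1 moves.
    for n in range(1, 6):
        moves = solve_hanoi(n)
        print(n, len(moves), is_valid_solution(n, moves))
```

In a setup like this, a model's answer would be parsed into a list of moves and passed to the validator; sweeping `n` upward then traces how accuracy changes with problem complexity.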