The Next Frontier in Astronomical Text Mining: Parsing GCN Circulars with LLMs.

Description

This episode dives into how astronomers are leveraging cutting-edge AI to make sense of decades of critical astronomical observations, focusing on the General Coordinates Network (GCN).

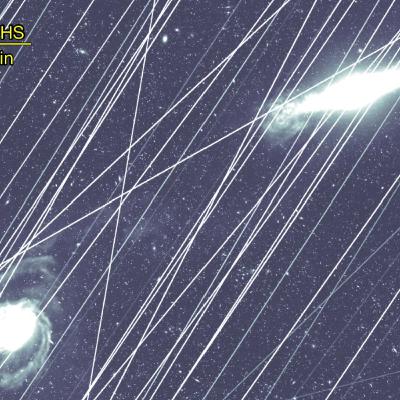

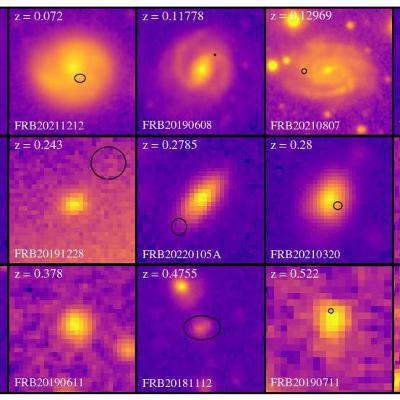

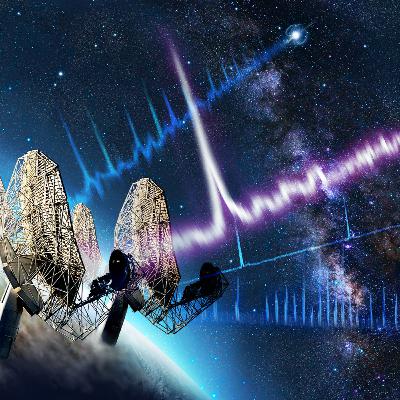

The GCN, NASA’s time-domain and multi-messenger alert system, has distributed over 40,500 human-written "Circulars" reporting high-energy and multi-messenger astronomical transients. Because these Circulars are free-form and unstructured, extracting key observational information, such as **redshift** or observed wavebands, has historically been a slow manual task.

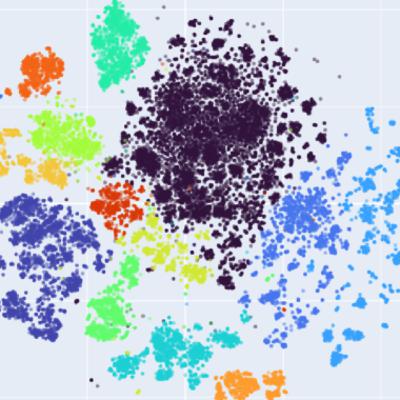

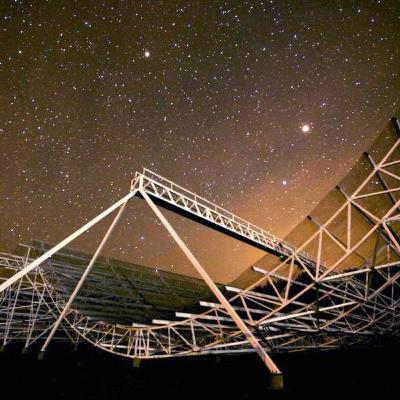

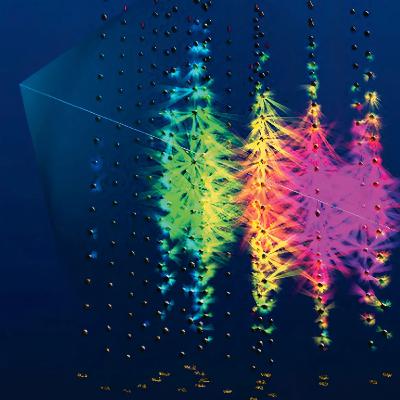

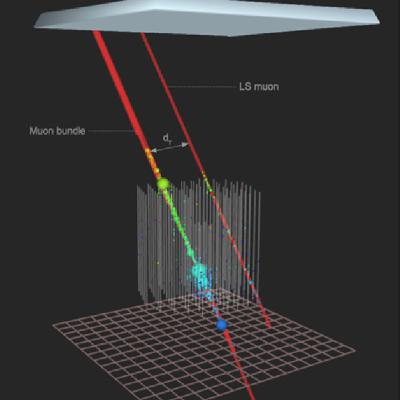

Researchers employed **Large Language Models (LLMs)** to automate this process. They developed a neural topic modeling pipeline using tools like BERTopic to automatically cluster and summarize astrophysical themes, classify Circulars by observation waveband (high-energy, optical, radio, gravitational-wave (GW), and neutrino), and separate GW event clusters from their electromagnetic (EM) counterparts. They also used **contrastive fine-tuning** to significantly improve the classification accuracy of these observational clusters.
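To make the waveband classification target concrete, here is a minimal keyword-matching baseline. It is not the BERTopic pipeline from the study, only a rough sketch of the kind of multi-label output such a classifier produces. The keyword lists and the function name `tag_wavebands` are illustrative assumptions, and the sample sentence is made up.

```python
import re

# Hypothetical keyword lists chosen for illustration; not taken from the paper.
WAVEBAND_KEYWORDS = {
    "high-energy": ["gamma-ray", "x-ray", "kev", "mev"],
    "optical": ["optical", "r-band", "magnitude", "spectroscopy"],
    "radio": ["radio", "ghz", "mjy"],
    "gravitational wave": ["gravitational wave", "ligo", "virgo"],
    "neutrino": ["neutrino", "icecube"],
}


def tag_wavebands(text):
    """Return every waveband whose keywords appear in the text (multi-label)."""
    lowered = text.lower()
    tags = []
    for band, words in WAVEBAND_KEYWORDS.items():
        # Match a keyword only at the start of a word, so plurals still count.
        if any(re.search(r"\b" + re.escape(w), lowered) for w in words):
            tags.append(band)
    return tags


# Made-up example text in the style of a Circular.
sample_text = (
    "Swift/XRT detected an X-ray afterglow; optical follow-up "
    "found an r-band magnitude of 19."
)
sample_tags = tag_wavebands(sample_text)
print(sample_tags)
```

A keyword list like this breaks down quickly on real Circulars, where instrument names and abbreviations vary, which is the gap that learned topic models are meant to close.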

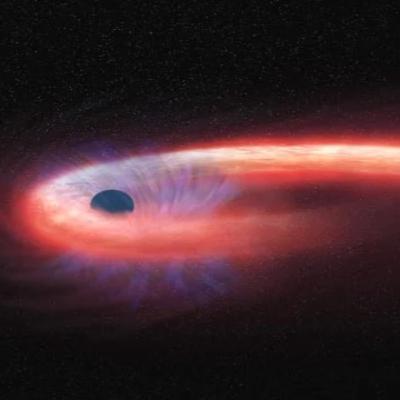

A key achievement was the successful implementation of a zero-shot system using the **open-source Mistral model** to automatically extract Gamma-Ray Burst (GRB) redshift information. By utilizing prompt-tuning and **Retrieval Augmented Generation (RAG)**, this simple system achieved an impressive **97.2% accuracy** when extracting redshifts from Circulars that contained this information.
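The retrieval half of such a RAG setup can be sketched in a few lines: pick out the passages of a Circular that mention a redshift, then place them in a prompt for the model. This is a simplified illustration under stated assumptions, not the study's implementation. The regex, the prompt wording, and the function names are hypothetical, the sample Circular is invented, and the call to the language model itself is left out.

```python
import re

# Hypothetical pattern: the word "redshift", or forms like "z = 1.23" / "z~0.5".
REDSHIFT_HINT = re.compile(r"\bredshift\b|\bz\s*[=~]\s*\d", re.IGNORECASE)


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' when followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def retrieve_passages(circular, max_passages=3):
    """Keep only the sentences that look like they discuss a redshift."""
    hits = [s for s in split_sentences(circular) if REDSHIFT_HINT.search(s)]
    return hits[:max_passages]


def build_prompt(passages):
    """Assemble a zero-shot prompt around the retrieved context (wording assumed)."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Using only the context below, report the GRB redshift as a number, "
        "or answer 'none' if no redshift is stated.\n\n"
        f"Context:\n{context}\n\nRedshift:"
    )


# Invented Circular text with a placeholder burst name.
sample = (
    "We observed the optical afterglow of GRB 000000A. "
    "From absorption lines we infer a redshift of z = 1.23 for the burst. "
    "Further observations are planned."
)
passages = retrieve_passages(sample)
prompt = build_prompt(passages)
print(prompt)
```

Restricting the context to the relevant sentences keeps the prompt short and gives the model less unrelated text to misread, which is the main appeal of retrieval in this setting.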

The study demonstrates the immense potential of LLMs to **automate and enhance astronomical text mining**, providing a foundation for real-time analysis systems that could greatly streamline the work of the global transient alert follow-up community.

***

**Reference to the Article:**

Vidushi Sharma, Ronit Agarwala, Judith L. Racusin, et al. (2025). **Large Language Model Driven Analysis of General Coordinates Network (GCN) Circulars.** *Draft version November 20, 2025.* Preprint: arXiv:2511.14858v1.

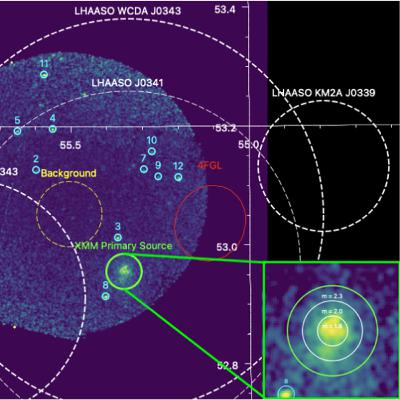

Acknowledgements: Podcast prepared with Google/NotebookLM. Illustration credits: arXiv:2511.14858v1