Why Power-Flexible AI Just Became Table Stakes

Description

This story was originally published on HackerNoon at: https://hackernoon.com/why-power-flexible-ai-just-became-table-stakes.

The questions investors should ask about AI data centers just changed. Why power flexibility is now table stakes for infrastructure deployment.

This story was written by @asitsahoo.

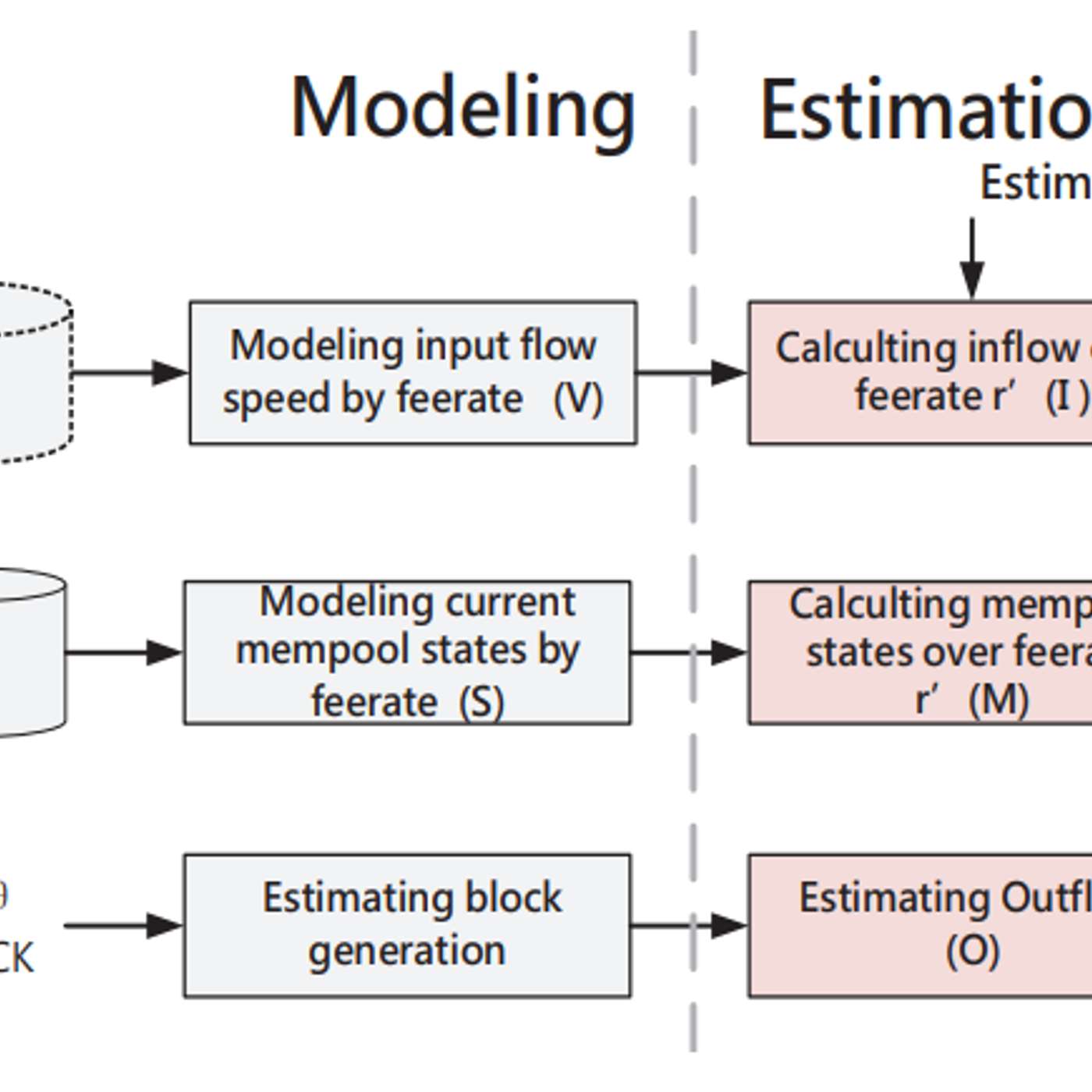

The real constraint on AI infrastructure is power, not chips. The Aurora AI Factory (NVIDIA/Emerald, Virginia, 96 MW, 2026) implements interruptible compute: a software layer throttles training workloads during periods of grid stress, delivering 20-30% power reductions while still maintaining SLAs. That flexibility enables faster permitting, lower capacity charges, and participation in wholesale power markets. The trade-off is longer training times in exchange for cheaper power.

The key open question is whether a two-tier market will emerge, with fixed power for inference and flexible power for training. The claim that this approach could unlock 100 GW of capacity assumes perfect coordination; it is directionally correct but optimistic.

Power flexibility is now mandatory for deployment. Investors doing due diligence should ask about a facility's demand-response capability, its split between interruptible and fixed load, and the impact on its grid interconnection.
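The interruptible-compute idea and its trade-off can be illustrated with a toy controller. This is a minimal, hypothetical model, not the Aurora software layer. It assumes that facility load splits cleanly into a fixed inference tier and a flexible training tier, that only training is ever throttled, and that training throughput scales linearly with power. `Facility`, `curtail`, and `training_delay_hours` are invented names, and the 30/66 MW split of a 96 MW site is purely illustrative.

```python
from dataclasses import dataclass


@dataclass
class Facility:
    """Hypothetical site split into firm (inference) and interruptible (training) load, in MW."""
    inference_mw: float  # fixed tier: never curtailed in this sketch
    training_mw: float   # flexible tier: may be throttled during grid stress

    @property
    def total_mw(self) -> float:
        return self.inference_mw + self.training_mw


def curtail(facility: Facility, requested_fraction: float) -> float:
    """Return the training power cap (MW) that cuts total facility draw by
    `requested_fraction`, shedding load from the training tier only.

    Raises ValueError if the request exceeds what the training tier can shed.
    """
    if not 0.0 <= requested_fraction <= 1.0:
        raise ValueError("requested_fraction must be between 0 and 1")
    shed_mw = requested_fraction * facility.total_mw
    if shed_mw > facility.training_mw:
        raise ValueError(
            f"cannot shed {shed_mw:.1f} MW; only {facility.training_mw:.1f} MW is interruptible"
        )
    return facility.training_mw - shed_mw


def training_delay_hours(facility: Facility, training_cap_mw: float, event_hours: float) -> float:
    """Extra full-power hours needed to make up work lost during a curtailment
    event, assuming training throughput scales linearly with power (a simplification)."""
    lost_fraction = 1.0 - training_cap_mw / facility.training_mw
    return lost_fraction * event_hours


if __name__ == "__main__":
    site = Facility(inference_mw=30.0, training_mw=66.0)  # hypothetical split of 96 MW
    cap = curtail(site, 0.25)  # a 25% cut, inside the 20-30% range
    print(cap)  # 42.0
    print(round(training_delay_hours(site, cap, event_hours=4.0), 2))  # 1.45
```

Keeping inference out of the curtailment path is one way a site could hold its SLAs while still cutting facility-level draw. It also makes the interruptible/fixed split a hard ceiling on how much load can be shed. In this toy model, a request to shed 90% of a 96 MW site fails because only 66 MW is interruptible. That ceiling is why the split is a useful due-diligence question.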