
El Podcast

178 Episodes


Social media isn’t “crack for your brain” for most people: Jeffrey Hall argues the best evidence shows tiny average effects on wellbeing, lots of measurement mess, and a bigger story about relationships, leisure, and moral panic.

Guest bio (short)
Dr. Jeffrey Hall is Professor and Chair of Communication Studies at the University of Kansas and Director of the Relationships and Technology Labs, researching social media, communication, and how relationships shape wellbeing.

Topics discussed (in order)
- Why “social media is toxic” became the default story (and why it may be a moral panic)
- What the research actually finds: effects near zero for most users
- The 0.4% figure and why context (baseline mental health, home life, SES) matters more
- The measurement problem: “screen time” vs “social media time” vs “everything a phone replaces”
- Media displacement: social media time often replaces TV time more than it replaces relationships
- Myth: social media addiction is widespread (why self-diagnosis ≠ clinical addiction)
- Teen mental health: social media as a minor factor compared to home, school, money, support
- “Potatoes and glasses” comparison: putting effect sizes in perspective
- Content quality debates (TikTok vs Jerry Springer) and why taste ≠ wellbeing outcomes
- Social bandwidth: why people decompress differently based on work and social demands
- Real risks (fraud, cyberbullying, nonconsensual content) without treating them as the whole story
- Tech leaders restricting kids’ tech: privilege, parenting, and “perfectly curated” childhoods
- Has teaching changed? Jeff’s take: pandemic disruption mattered more than phones
- Practical takeaway: prioritize relationships; be forgiving about media; align leisure with values

Main points
- Most studies find tiny average links between social media use and wellbeing; context explains far more.
- “Screen time” is a blunt instrument because phones replaced many older activities (TV, music, news, books, calls).
- “Addiction” is often used casually; clinically, we lack strong standards/tools to diagnose “smartphone addiction” the way we do substance use.
- Social time may be declining for some, but heavy media use often concentrates among people with fewer social anchors (work, family, community).
- Digital detox results vary; benefits tend to show up when people replace media with chosen, value-aligned activities.
- Relationships remain the most reliable wellbeing lever: face-to-face is great, calls are strong, texts can help; staying connected matters.

Top 3 quotes (from the conversation)
- “Social media has become almost like a vortex that pours in every other conversation that we're having right now.”
- “Study after study basically says the effect is close to zero or approximate zero.”
- “It is really, really good evidence that relationships are good for you… prioritize relationships in your life.”

Subscribe ➡️ Review ➡️ Share
If you liked this episode, subscribe for more conversations that cut through moral panics with data. Leave a review (it helps new listeners find the show), and share this episode with one friend who’s convinced social media is “destroying society”, especially if you want a calmer, more evidence-based take.

🎙 The Pod is hosted by Jesse Wright
💬 For guest suggestions, questions, or media inquiries, reach out at https://elpodcast.media/
📬 Never miss an episode – subscribe and follow wherever you get your podcasts.
⭐️ If you enjoyed this episode, please rate and review the show. It helps others find us. Thanks for listening!

Economic historian Tobias Straumann breaks down how Germany’s debt meltdown in 1931 crashed the global economy, and how a surprisingly generous 1953 debt deal helped spark the German economic miracle by putting growth ahead of punishment.

GUEST BIO:
Tobias Straumann (Switzerland) is Professor of Modern & Economic History at the University of Zurich; author of Out of Hitler’s Shadow and 1931: Debt, Crisis, and the Rise of Hitler.

TOPICS DISCUSSED:
- 1931 as the real inflection point of the Great Depression
- Treaty of Versailles + reparations politics (why it’s not a straight-line story)
- Germany’s “double surplus” debt trap (budget + trade surplus) and default dynamics
- Gold standard breakdown and global contagion
- London Debt Agreement (1953): what it did and why it mattered
- WWII reparations vs interwar debts vs private creditors (who got paid)
- Cold War incentives vs the older “German problem” (balance of power since 1871)
- 1990 reunification, the 2+4 treaty, and why reparations weren’t reopened
- Later compensation: Israel/Claims Conference, forced labor, voluntary gestures
- Poland/Greece reparations claims in modern politics
- Comparisons: Japan/Italy reparations and postwar strategy
- Modern debt parallels (domestic vs foreign-currency debt; political will)

MAIN POINTS:
- 1931 turned a severe recession into a worldwide depression via Germany-centered financial contagion.
- Versailles mattered, but Allied policy adjustments and domestic politics shaped outcomes more than a simple “Versailles caused WWII” line.
- Germany’s foreign-currency debt made austerity + transfer demands self-defeating, ending in default and system collapse.
- The 1953 London Debt Agreement was pivotal: it reduced and restructured interwar debts and made repayment compatible with recovery.
- West Germany paid little-to-no WWII reparations (effectively deferred), while interwar private creditors recovered significant shares: morally messy but stabilizing.
- Cold War pressures helped, but Europe’s long-running challenge was integrating a too-strong Germany into a stable order.
- In 1990, the 2+4 framework avoided reopening WWII reparations to keep reunification politically and economically manageable.
- Later payments (Israel, Holocaust victims, forced laborers) partially addressed moral claims outside classic state-to-state reparations.

TOP 3 QUOTES:
- “We think that the year 1931 was the turning point… it turned into a worldwide depression.”
- “It’s probably the biggest and most important debt settlement of the 20th century.”
- “It’s morally hard to swallow… but it had the advantage of stabilizing Western Europe economically and politically.”


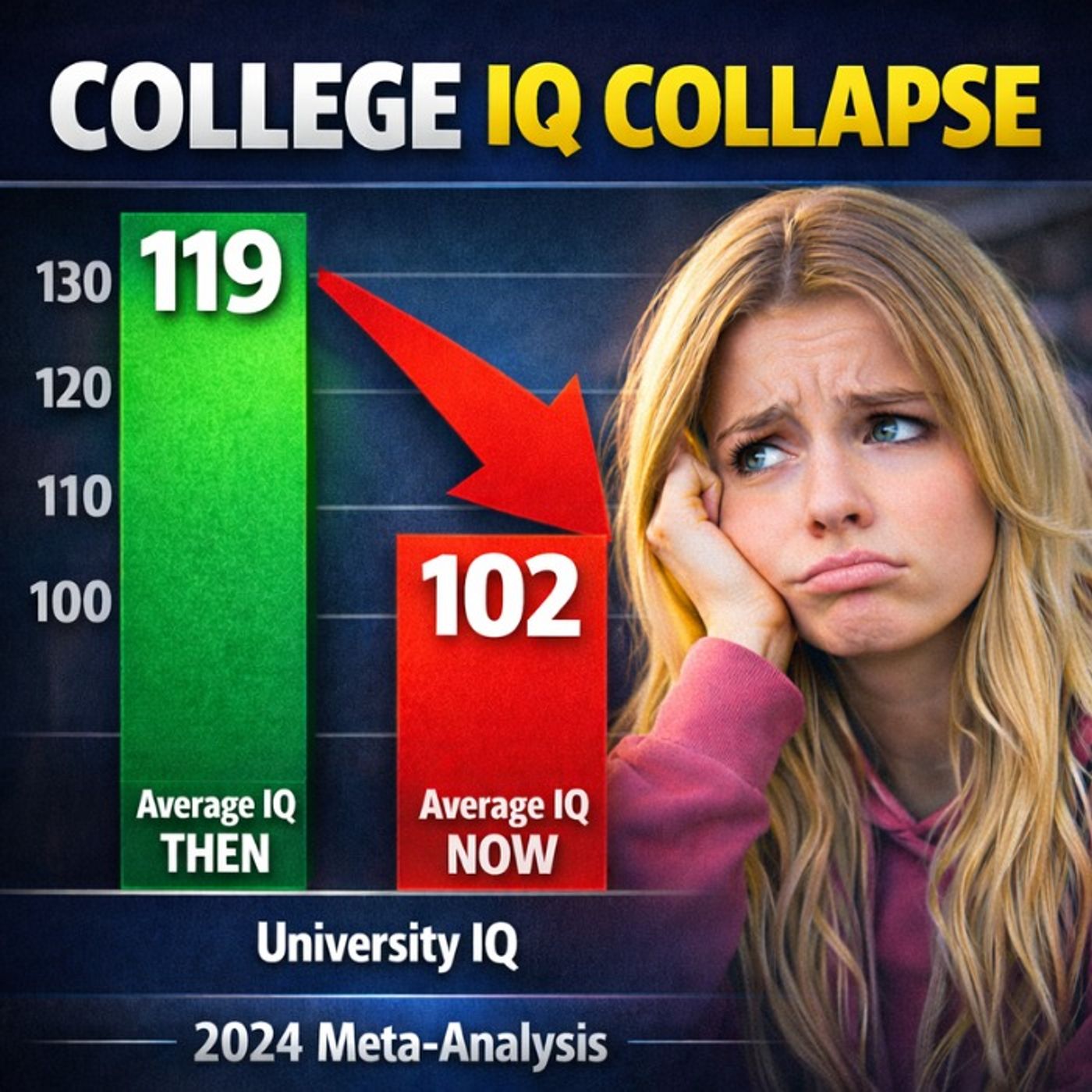

A cognitive psychologist explains why college student IQ now averages about 102, why that shift is mathematically inevitable as enrollment expands, and how outdated testing norms and student-evals can quietly wreck both education and clinical decisions.

GUEST BIO
Dr. Bob Uttl is a cognitive psychologist and professor at Mount Royal University (Canada) who researches psychometrics, assessment, and how intelligence tests are interpreted and misused in real-world settings.

TOPICS DISCUSSED (IN ORDER)
- What IQ is, how it’s measured, and why scores are standardized (mean 100, SD 15)
- The Flynn Effect and why “raw ability” rose over the last century
- Why expanding university enrollment mathematically lowers the average IQ of undergrads
- The meta-analysis: how the team compiled WAIS results over time and what they found (down to ~102)
- The Frontiers controversy: accepted, posted, went viral, then “un-accepted” after social media blowback
- Clinical misuse: comparing modern test-takers to decades-old norms and the harms that follow
- Impacts inside universities: wider ability range, teaching to the lower tail, boredom at the top
- Grades + incentives: student evaluations as satisfaction metrics that push standards downward
- Employers adapting: degrees losing signaling value; rise of employer-run assessments/training
- Differences across majors and institutions: SAT/GRE as IQ-proxies; fields with feedback/standardized licensure
- “Reverse Flynn” talk: why some skills crater (speeded arithmetic, fluency) as tools replace practice
- AI and learning: hallucinations, the need for human judgment, and the possible return of oral exams
- European exam models vs North American incentives
- Final takeaways: fix misinformation about undergrad IQ; remove harmful incentives; reintroduce standards

MAIN POINTS
- IQ tests are periodically re-normed, so “100” always tracks the current population average even as raw performance changes.
- As a larger share of the population attends university, the average IQ of undergrads must move closer to the population mean; this is arithmetic, not an insult.
- Uttl’s meta-analysis argues today’s undergrads average around 102 IQ, far closer to “average” than older assumptions (e.g., 115+).
- Outdated norms and sloppy cross-era comparisons can shave ~20+ points off a person “on paper,” creating bogus diagnoses and high-stakes harm (disability decisions, fitness-for-duty, litigation).
- Universities now teach a wider spread of ability, which pressures instruction toward the lower end unless programs stratify or standardize outcomes.
- Student evaluations function like customer satisfaction scores; combined with adjunct/contract insecurity, they incentivize grade inflation and lower rigor.
- Employers respond by discounting degrees and building their own testing/training pipelines.
- Some “reverse Flynn” patterns may reflect skill/fluency loss (e.g., speeded arithmetic) as calculators/AI replace practice, not necessarily a uniform drop in reasoning.
- A plausible reform path: reduce reliance on student evals, adopt clearer standards, and consider more direct assessments (including oral exams) where appropriate.

BEST 3 QUOTES
- “The decrease in average IQ of university students is a necessary consequence of increased enrollment.”
- “Student evaluations of teaching are basically measures of satisfaction.”
- “We need to remove the misinformation about what is the IQ of undergraduate students.”
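
The "arithmetic, not an insult" point can be illustrated with a small sketch. It is not the method of Uttl's meta-analysis. It assumes an idealized world in which IQ is normally distributed (mean 100, SD 15) and universities admit strictly the top share of the population by IQ; the function name and the enrollment shares are hypothetical. Real admissions are much noisier than this, so actual undergraduate averages sit lower than these figures, but the direction is the same: the larger the enrolled share, the closer the enrolled mean moves to 100.

```python
from statistics import NormalDist


def mean_iq_of_top_fraction(fraction, mean=100.0, sd=15.0):
    """Mean IQ of the top `fraction` of a normally distributed population.

    Idealized assumption: enrollment selects strictly by IQ from the top down.
    Uses the truncated-normal mean: mean + sd * pdf(z) / fraction,
    where z is the standard-normal cutoff for the enrolled share.
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    if fraction == 1.0:
        # Everyone enrolls, so the enrolled mean is the population mean.
        return mean
    std_normal = NormalDist()
    cutoff = std_normal.inv_cdf(1.0 - fraction)
    return mean + sd * std_normal.pdf(cutoff) / fraction


if __name__ == "__main__":
    # Hypothetical enrollment shares, from very selective to universal.
    for share in (0.05, 0.10, 0.30, 0.50, 0.70, 1.00):
        print(f"{share:.0%} enrolled -> mean IQ {mean_iq_of_top_fraction(share):.1f}")
```

Even under this strictest possible selection rule, the enrolled average falls steadily as the share rises, which is why an older assumption such as 115+ cannot hold once a large share of each cohort attends.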


Jeff Davis breaks down why the Highway Trust Fund has been insolvent since 2008 and what fixes (and tradeoffs) are realistic as EVs grow.

GUEST BIO
Jeff Davis is a Senior Fellow at the Eno Center for Transportation and Editor of Eno Transportation Weekly. He has more than 30 years of experience in federal transportation policy, including eight years working in Washington, D.C., advising on the federal budget, the Highway Trust Fund, and long-term infrastructure funding and governance.

TOPICS (IN ORDER)
- What the Highway Trust Fund is (created to fund interstates via fuel/trucking taxes)
- Why it broke in 2008 (spending > dedicated revenue)
- The 3 drivers: slower VMT growth, higher MPG, tax politics
- Federal vs state roles (federal-aid network + shifting cost shares)
- Reform options: gas tax bump vs mileage fee; privacy/admin hurdles
- EVs: accelerant, not original cause; state fee/VMT pilots
- Transit account inside HTF (how it got there; mismatch perceptions)
- Federal rules vs state flexibility (states using state $$ to avoid red tape)
- AVs: uncertain impact + liability/legal mess
- Underreported issue: safety mandates raise car/rail costs
- International models: truck tolls abroad; toll resistance in U.S.

MAIN POINTS
- Gas tax was a proxy for driving; that proxy is weakening (less VMT growth + better MPG).
- Politics prevented rate increases; since 2008 Congress has plugged holes with general-fund transfers.
- Mileage fees are “fair” in theory but hard in practice (privacy + enforcement + admin scale).
- Registration-based fees (incl. EV fees) may be more feasible.
- Transit funding in HTF is coalition-driven and not a clean “users pay” match.
- Federal dollars come with heavy conditions; some states route federal money to maintenance to minimize paperwork.

TOP 3 QUOTES
- “There’s three big reasons… driving doesn’t increase like it used to… gasoline is a worse proxy… and no one can agree on tax revenue increases.”
- “GPS-based VMT tracking… is perfect economically… [but] the biggest privacy nightmare.”
- “We’re going to miss the gas tax… it’s a very efficient tax.”
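
The weakening "proxy" can be shown with a little arithmetic. The sketch below assumes the federal gasoline tax rate of 18.4 cents per gallon; the vehicle fuel economies and the annual mileage are hypothetical illustration values, not figures from the episode. It shows that a per-gallon tax collects less per mile as fuel economy improves, and nothing from a vehicle that buys no gasoline.

```python
import math

# Federal gasoline excise tax, in cents per gallon.
FEDERAL_GAS_TAX_CENTS_PER_GALLON = 18.4


def tax_cents_per_mile(mpg, tax_cents_per_gallon=FEDERAL_GAS_TAX_CENTS_PER_GALLON):
    """Fuel tax collected per mile driven for a vehicle with the given MPG."""
    if mpg <= 0:
        raise ValueError("mpg must be positive")
    return tax_cents_per_gallon / mpg


def annual_tax_dollars(miles_per_year, mpg):
    """Yearly fuel tax paid, in dollars, for a given annual mileage and MPG."""
    return miles_per_year * tax_cents_per_mile(mpg) / 100.0


if __name__ == "__main__":
    # Hypothetical vehicles; math.inf stands in for an EV that buys no gasoline.
    vehicles = {"older sedan": 20.0, "newer sedan": 30.0, "hybrid": 50.0, "EV": math.inf}
    for name, mpg in vehicles.items():
        dollars = annual_tax_dollars(12_000, mpg)  # 12,000 miles/year is an assumption
        print(f"{name:12s} {tax_cents_per_mile(mpg):.2f} cents/mile, ${dollars:.2f}/year")
```

Every vehicle in the example drives the same distance and puts the same wear on the road, yet the tax paid varies widely, which is the gap that mileage fees and registration-based fees are meant to close.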


Harvard’s Shane Greenstein explains why Acquired wins by treating each episode like an audiobook: high-signal, audience-first, and built for durable value.

GUEST BIO:
Dr. Shane M. Greenstein is a Professor of Business Administration at Harvard Business School, where he teaches technology, operations, and management and writes HBS case studies on modern businesses.

TOPICS DISCUSSED (IN ORDER):
- WHY ACQUIRED WORKS: Breaking podcast “rules,” competing with audiobooks, high-signal editing, host chemistry, and durable content that doesn’t expire
- AUDIENCE & NICHE STRATEGY: High-income aspirational listeners, “big niche” logic, Slack feedback loops, and expanding breadth without losing focus
- BUSINESS & MONETIZATION MODEL: B2B advertisers, high-value contracts, season sponsorships, rejecting 95% of ads, and protecting audience trust
- OPERATIONS & CONSTRAINTS: Extreme prep, editing workflow, no staff beyond an editor, time scarcity, and intentional limits on scaling
- CASE STUDY ORIGINS & RESEARCH: How the HBS case began, analytics access, third-party validation, and teaching-case methodology
- MEDIA LANDSCAPE & FUTURE: Podcasting vs legacy media, audience balkanization, video tradeoffs, and the role of live, unpredictable formats
- RISKS & UNKNOWN UNKNOWNS: Reputation exposure, topic selection risk, family/work tradeoffs, AI slop, and platform uncertainty

MAIN POINTS:
- Acquired “breaks rules” but follows classic business rules: match product to audience, align advertisers to audience, build operations around constraints.
- They win by not wasting time: heavy editing + high density of insight, built for repeat listening and long shelf life.
- Their edge is durability: they target ~80% of content still relevant a year later, so the back catalog keeps earning.
- Their advertising works because it’s B2B + high contract value: a few conversions can justify huge spends; they protect audience trust by rejecting most ads.
- Avoiding video is a control tradeoff: YouTube distribution can mean less control over ad experience and more audience annoyance.
- Scaling is intentionally limited: the “team of 3” model preserves quality but raises risks (time pressure, topic selection errors, burnout).
- Biggest threats aren’t competitors; they’re reputation risk, platform/tech shifts, and AI-driven slop reducing trust.

TOP 3 QUOTES:
- “They deliberately don’t waste anybody’s time.”
- “Their primary substitute… is someone going out and buying an audiobook.”
- “A niche on the internet can be six people in your hometown times a billion.”


Tenured sociology professor Mark Horowitz explains why falling preparedness, grade inflation, and perverse incentives are eroding college standards, and why “broke, woke, stroke” helps describe the pattern.

GUEST BIO:
Dr. Mark Horowitz is a sociology professor at Seton Hall University and co-author of a survey-based study of tenured faculty perceptions about academic standards, grade inflation, student preparedness, and institutional incentives in higher education.

TOPICS DISCUSSED IN ORDER:
- Why the authors ran a higher-ed “crisis” survey (faculty perspectives vs pundit/parent narratives)
- Horowitz’s “honors student with junior-high-level writing” anecdote
- Key survey findings: perceived decline in preparedness, increased pushback, grade inflation
- “Broke, Woke, Stroke” framework: market pressures, egalitarian/compassion impulses, therapeutic ethos
- “Most shocking” claim: some functionally illiterate students graduating (and why that happens)
- Which factor matters most: Horowitz argues “broke” (economics/market incentives) is decisive
- Admin growth and student-support infrastructure; retention/compassion language vs rigor/merit
- Taboo around ability/intellectual differences; political psychology and educational romanticism
- Concern about watering down harming gifted students; standards vs equity tensions
- Potential solutions: admissions tests, exit/credentialing signals, eliminating student evals; bigger structural funding conversation

MAIN POINTS:
- Many tenured faculty report signs of a standards problem: lower preparedness, more grade pressure, more pushback.
- “Broke” incentives (enrollment/revenue pressure + reduced public support + debt-financed model) push institutions toward admitting and passing more students.
- “Woke” sensibilities (egalitarian compassion for disadvantaged students) can combine with market incentives to reduce rigor and resist sorting/standards.
- “Stroke” dynamics (therapeutic/mental-health framing, protecting student feelings) further discourage hard grading, failure, and frank talk about ability.
- The result is a weakened “signaling function” of the degree: if everyone gets A’s/B’s, employers learn less from credentials.
- Fixes are hard because incentives punish the people who enforce standards (evals, backlash, institutional pressure), but small reforms could still matter.

TOP 3 QUOTES:
- “We use that kind of cheeky mnemonic of broke, woke, stroke.”
- “We think the incentive structure in higher ed right now is perverse.”
- “It’s kind of a tragedy of the commons in a way. No university can afford to raise standards, but if none do, the long-run tendency is to have the system collapse.”


A wide-ranging conversation with economist and AI consultant Dr. Emmanuel Maggiori on why Modern Monetary Theory overpromises a “free lunch,” what really causes inflation, how Bitcoin and AI are misunderstood, and why seductive economic stories are so dangerous.

GUEST BIO:
Emmanuel Maggiori is an armchair economist, computer scientist, and AI consultant based in the UK. Originally from Argentina, he has a PhD (earned in France), works with companies to build AI systems, and writes widely about economics and artificial intelligence. He is the author of several books, including If You Can Just Print Money, Why Do I Pay Taxes? Modern Monetary Theory Distilled and Debunked in Plain English, Smart Until It’s Dumb, and The AI Pocket Guide, and has a large following on LinkedIn and X/Twitter.

TOPICS DISCUSSED:
- What Modern Monetary Theory (MMT) actually claims
- How money is created in modern economies (broad money vs reserves)
- Why MMT’s “taxes don’t fund spending” story is misleading
- Stephanie Kelton’s accounting error and the “deficit myth”
- The Cantillon effect and who really pays for money printing
- Argentina, Venezuela, Zimbabwe, and real-world inflation episodes
- Javier Milei, austerity, and Argentina’s recent disinflation
- Government debt, “we owe it to ourselves,” and default via inflation
- Bitcoin as a supposed solution to monetary problems
- Who really created Bitcoin and what it’s actually good for
- The current AI boom, why it’s a bubble on the business side, and unit economics
- OpenAI, DeepSeek, Nvidia, and why foundational models lack a moat
- How AI will change the labor market (coders, translators, blue-collar work)
- AI, Hollywood/TV writing, and the gap between “good enough” and truly excellent work
- Final cautions about seductive economic theories and AI hype

MAIN POINTS:
- MMT in a nutshell: MMT says a government with its own currency can always create money to pay for spending and debt, and that taxes exist mainly to control inflation, create demand for the currency, and shape behavior, not to “fund” spending.
- Accounting problems in MMT: Emmanuel argues that key MMT figures (especially Stephanie Kelton) made basic accounting errors about government bank accounts and money aggregates like M1, then papered over them with exceptions (e.g., temporary overdrafts at central banks).
- Why taxes really matter: Even if a government could print money, in practice you need taxes before spending because the Treasury’s accounts can’t just go endlessly negative, and politically, raising taxes fast enough to control inflation is extremely unlikely.
- Cantillon effect & asset swaps: Paying off debt with newly created money is not a harmless “asset swap.” It channels new money first to financial institutions, inflates asset prices and credit, and ultimately erodes the real value of ordinary people’s cash savings.
- Real-world inflation is not an accident: In cases like Argentina, Venezuela, Zimbabwe, or Weimar Germany, there were real triggers (droughts, war reparations, commodity shocks), but the hyperinflation came from repeated resort to money printing as the default response.
- Argentina as a warning: Emmanuel’s personal experiences (suitcases of cash for a normal dinner, unusable mortgages, dollarized house purchases) illustrate how chronic money printing and price controls destroy trust, planning, and basic economic functioning.
- Javier Milei & austerity: Milei sharply cut deficits and money printing; inflation has fallen quickly. Critics say it’s just recession-driven demand collapse, but Emmanuel notes history shows disinflation often follows when governments stop printing and cut spending.
- Debt and “we owe it to ourselves”: Government debt is a real intertemporal deal: some people give up current consumption so the state can use resources now, in exchange for more consumption later. Unexpected inflation is an economic default on those savers.
- Bitcoin skepticism: Bitcoin solves a fascinating technical problem (a decentralized, hard-to-alter ledger), but Emmanuel questions its use as a stable store of value (because of huge volatility) and notes there are other ways to protect savings (equities, etc.).
- AI bubble dynamics: AI as a technology is here to stay and genuinely useful, but foundational model providers have thin or no moats: methods are public, competitors catch up, and models become commodities competing on price with brutal compute costs.
- Nvidia and the “shovel sellers”: Chip makers selling GPUs may fare better than model labs, but there are worrying signs (like unsold inventory) that they may be over-producing “shovels” for a gold rush that can’t all pay off.
- AI startups on top of models: Most AI-powered apps (wrappers for therapy, yoga, productivity, etc.) have almost no defensible edge. Anyone can build similar products, so profits will be squeezed and many will fail.
- Work & careers in the AI age: He wouldn’t steer a kid away from computer science, but urges them to be at the intersection of business and tech, not just a narrow coder. Routine “good enough” work (like basic translation) is more at risk than high-end, high-touch work.
- Blue-collar and “boring” businesses: Physical, unsexy businesses (plumbing, soundproofing, trades) look very robust and lucrative; in London, plumbers often bill more than many white-collar professionals.
- AI as an imitator, not an oracle: Large models are brilliant imitators trained on oceans of human text (Reddit, etc.), not machines designed for truth. They’ll keep sounding confident and sometimes being wrong.

TOP 3 QUOTES:
- “MMT is like a theory that promises you a free lunch, and even MMT economists themselves use the phrase ‘free lunch.’ If it sounds like that, you should be very suspicious.”
- “All these rules that stop governments from just printing money exist for a reason: we do not trust politicians with a blank check on the public purse.”
- “AI is an incredible imitator. It’s trained on a massive pile of human text, and it’s very good at sounding right, but that doesn’t mean it’s true, or high-end work, or that it understands what it’s saying.”
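
The claim that unexpected inflation works as an economic default on savers comes down to simple arithmetic. The sketch below is illustrative only: the principal, interest rate, and inflation rates are hypothetical numbers, not figures from the episode. It deflates a bond's nominal payoff by the price level to show what the saver can actually buy at maturity.

```python
def real_value(principal, nominal_rate, inflation_rate, years=1):
    """Purchasing power of a bond payoff, measured in today's money.

    The nominal payoff grows at `nominal_rate`; prices grow at `inflation_rate`.
    Dividing one by the other gives what the payoff can actually buy.
    """
    nominal_payoff = principal * (1.0 + nominal_rate) ** years
    price_level = (1.0 + inflation_rate) ** years
    return nominal_payoff / price_level


if __name__ == "__main__":
    principal = 1_000.0   # hypothetical savings lent to the government
    nominal_rate = 0.05   # hypothetical rate agreed when inflation was expected to be low

    for inflation in (0.02, 0.05, 0.25, 1.00):
        buys = real_value(principal, nominal_rate, inflation)
        print(f"inflation {inflation:>5.0%}: payoff buys what {buys:,.2f} buys today")
```

The saver is repaid in full on paper in every case, but when inflation runs well above the rate that was priced in, the payoff buys less than the original principal, which is the sense in which the debt has been partly defaulted on.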


Independent journalist James Corbett joins Jesse to trace how media, tech, and elite power have reshaped the information landscape, from Time’s 2006 “You” to today’s post-truth, AI-saturated world.

GUEST BIO:
James Corbett is an independent journalist and documentary filmmaker based in Japan. Since 2007 he’s run The Corbett Report, an open-source intelligence project covering geopolitics, media, finance, and technology through long-form podcasts, videos, and essays.

TOPICS DISCUSSED:
- Time’s 2006 “Person of the Year” and the early optimism of user-generated media
- Smartphones, YouTube, and the shift to always-on, short-form video
- Legacy media vs podcasts, Rogan, and long-form conversation
- Adpocalypse, subscriptions, foundations, and “post-journalism”
- AI “slop,” dead internet theory, and human vs synthetic content
- Left–right vs “up–down” (authoritarian vs anti-authoritarian) politics
- Elite networks and foundations: Rockefeller, Gates, philanthropy as power
- Climate narratives, health framing, and energy demands of AI
- Future crises: hot war, financial bubbles, AI and labor, UBI and control

MAIN POINTS:
- The early internet briefly empowered ordinary people. Corbett’s own path (from teacher in Japan to reaching millions) shows how 2000s platforms genuinely opened space for bottom-up media.
- The smartphone changed how we think, not just what we see. Moving from long-form text/audio to short, swipeable video has compressed attention and pushed politics toward slogans and clips.
- The business model broke journalism before AI did. As ad money fled to platforms, outlets turned to paywalls, patrons, and foundations, pulling coverage toward causes and away from broad public-interest reporting.
- The real divide is power, not party. Corbett argues we miss the “up–down” axis (authoritarian vs anti-authoritarian), so we keep swapping parties but getting similar outcomes on war, finance, and surveillance.
- AI and automation are economic and political weapons. If AI displaces labor and the state replaces wages with universal income, whoever controls those payouts gains unprecedented leverage over everyday life.
- Long-form human conversation is still a resistance strategy. Despite dark trends, he sees deep, sustained, human-made media as one of the few ways left to think clearly and build real communities.

BEST QUOTES:
- On the shift since 2006: “We went from ‘You are the Person of the Year’ to ‘You are the problem’, from celebrating amateur voices to treating them as a disinformation threat.”
- On media form and attention: “I started in an era where you could play a ten-minute clip inside an hour-long podcast. Now if you go over two minutes, people think you’re crazy.”
- On politics: “Left and right exist, but the missing axis is up and down: authoritarian versus anti-authoritarian. Once you see that, a lot of ‘flip-flops’ make sense.”
- On AI and control: “If the state is the one feeding and clothing you after AI replaces your job, then the state effectively owns you.”


Sociologist Dr. Jennie Bristow joins Jesse to dismantle “generation wars” rhetoric (especially Boomer-blaming) and re-center the real story: stalled economies, broken higher ed, housing dysfunction, and a culture that’s leaving young people anxious and unmoored.

Guest bio:
Dr. Jennie Bristow is a professor of sociology at Canterbury Christ Church University in the UK and a leading researcher on intergenerational conflict, social policy, and cultural change. She is the author of Stop Mugging Grandma: The Generation Wars and Why Boomer Blaming Won’t Solve Anything and the forthcoming Growing Up in the Culture Wars, which examines how Gen Z is coming of age amid identity politics, pandemic fallout, and collapsing institutional confidence.

Topics discussed:
- How “intergenerational equity” became a fashionable idea among policymakers and millennial commentators after the 2008 financial crisis
- Why blaming Baby Boomers for housing, student debt, and climate change hides deeper structural problems
- The role of journalism, English majors, and the broken media business model in manufacturing generational conflict
- Higher education as a quasi–Ponzi scheme: massification, student loans, and the weak graduate premium
- Housing, delayed family formation, and why homeownership is a bad proxy for measuring generational “success”
- Millennials vs. Gen Z: growing up with 9/11 and the financial crisis vs. growing up with COVID-19 and AI
- AI, “zombie economies,” and why societies still need real work, real knowledge, and real skills
- Social Security, ageing, low fertility, and what’s actually at stake in pension debates
- Identity politics, culture wars, and how an obsession with personal identity fragments common life
- Media polarization, rage clicks, and how subscription-driven, foundation-funded journalism blurs into activism

Main points & takeaways:
- Generation wars are a distraction. The Boomer-vs-Millennial narrative was heavily driven by media and policy elites after the 2008 crisis. It channels anger away from structural issues: stagnant productivity, weak labor markets, housing policy failure, and a dysfunctional higher-ed and welfare state.
- Boomers didn’t “steal the future”; policy did. Baby Boomers are just a large cohort who happened to be born into a period of postwar economic expansion. Treating them as a moral category (“greedy,” “sociopaths”) obscures the role of monetary, housing, education, and labor-market policy choices.
- Class beats cohort. Within every “generation” there are huge differences: inheritance vs no inheritance, elite degrees vs low-quality credentials, secure jobs vs precarity. Talk of “Boomers” and “Millennials” flattens these class divides into fake demographic morality plays.
- Housing is a symbol, not the root cause. The rising age of first-time buyers and insane rents are real problems, but they’re manifestations of policy and market failures, not proof that Boomers hoarded all the houses. Using homeownership as the key generational metric gets the story backwards.
- Higher education is oversold. Mass university attendance, especially in non-vocational fields, has left many millennials and Zoomers with heavy student debt and weak job prospects. Degrees became a costly entry ticket to the labor market without guaranteeing meaningful work or higher wages.
- AI is a wake-up call, not pure doom. AI will automate a lot of white-collar tasks (journalism, marketing, some finance), but it also exposes how shallow “skills” education has become. Bristow argues students need real knowledge and disciplinary depth so humans can meaningfully supervise and direct AI systems.
- Ageing and pensions are solvable political questions, not excuses to scapegoat the old. Longer life expectancy and rising dependency ratios do require institutional redesign, but that should mean rethinking work, welfare, and economic dynamism, not treating older people as fiscal burdens to be phased out.
- Gen Z is growing up in a culture of fractured identity. Instead of being socialized into a shared civic culture, young people are pushed into micro-identities and online culture-war camps. That emphasis on personal identity over common purpose undermines their ability to form stable adult roles.
- Media business models amplify rage and generational framing. As ad revenue collapsed and subscriptions and philanthropy took over, many outlets shifted toward more partisan, activist-style content. Generational blame is a cheap, emotionally potent frame that fits this economic logic.

Top 3 quotes:
- On the myth of Boomer villainy: “Baby Boomers are not a generation of sociopaths who set out to rob the young of their future; they’re just people born at a particular time in history. Turning them into moral scapegoats lets us avoid talking about policy failures.”
- On universities and the millennial bait-and-switch: “We raised millennials to believe they were special, told them to follow their dreams, pushed them into university and debt, and then discovered the jobs and opportunities they’d been promised weren’t actually there.”
- On why generational labels mislead more than they explain: “These categories are cultural inventions, not scientific facts. People don’t live as ‘a millennial’ or ‘a Boomer’; they live as parents, workers, citizens. When we talk about generations instead of class, policy, and history, we end up fighting the wrong battles.”

🎙 The Pod is hosted by Jesse Wright
💬 For guest suggestions, questions, or media inquiries, reach out at https://elpodcast.media/
📬 Never miss an episode: subscribe and follow wherever you get your podcasts.
⭐️ If you enjoyed this episode, please rate and review the show. It helps others find us. Thanks for listening!

Investigative health journalist Julia Belluz breaks down what really drives obesity and chronic disease: metabolism myths, ultra-processed food, bad incentives, and why our entire food environment is quietly rigged against us.
Guest bio: Julia Belluz is a Paris-based health and science journalist and co-author of Food Intelligence: The Science of How Food Both Nourishes and Harms Us, written with NIH researcher Dr. Kevin Hall. Over more than a decade reporting for outlets like Vox and The New York Times, she’s become one of the sharpest explainers of nutrition science, chronic disease, and the politics of the global food system.
Topics discussed:
The Biggest Loser study: what Kevin Hall actually discovered about extreme weight loss and metabolic slowdown
Why “a slow metabolism” is not destiny, and why the biggest losers had the biggest metabolic drops
Is a calorie a calorie? Low-carb vs low-fat when calories are controlled
Protein “maximization,” the protein appetite, and why excess protein isn’t magic
Vitamins, supplements, kidney stones, and the $2T wellness industry
The 10,000+ chemicals in the U.S. food supply and the GRAS loophole
Ultra-processed foods, added salt/sugar/fat, and the simple math of calorie surplus
Food environments vs willpower: why it’s so hard to “eat right” in the U.S.
What France gets right on markets, school lunches, and prepared foods
Industry funding, NIH underinvestment in nutrition, and government’s failure to regulate
Practical strategies: reshaping your home food environment and demanding better policy
Main points:
Extreme weight loss = extreme metabolic slowdown: Biggest Loser contestants showed huge willpower and lost enormous amounts of weight, but the biggest losers had the largest and most persistent drops in metabolic rate, even six years later. Metabolism followed weight loss; it didn’t cause it. “Slow metabolism” is not a life sentence, and it’s not the main driver of the obesity epidemic.
For fat loss, calories still mostly rule: When Kevin Hall tightly controls calories in the lab, low-carb vs low-fat leads to almost identical fat loss, with only a trivial edge for low-fat. Macro wars are wildly overstated; total calories and food environment matter far more than whether you’re Team Carbs or Team Fat.
Protein is essential, but not a cheat code: Humans (and many animals) seem to have a “protein appetite” that keeps intake in a fairly narrow range worldwide. Overshooting that range doesn’t give you free fat loss; you essentially excrete the extra nitrogen and keep the calories.
Supplements are often useless, or harmful: Routine multivitamins rarely help people who aren’t deficient and can sometimes increase risk. Under-regulated “metabolism boosters” and weight-loss pills are a real source of ER visits and kidney issues.
The chemicals loophole is real, and alarming: Since 1958, and especially after 1997, U.S. companies have been allowed to classify new food chemicals as “generally recognized as safe” without real FDA oversight, independent review, or even notification. We don’t yet know how much these chemicals contribute to disease, but we already have more than enough evidence to indict excess calories and the salt–sugar–fat trifecta.
It’s the food environment, not your moral character: Obesity has risen across ages and countries as food environments have shifted toward cheap, omnipresent, ultra-processed, aggressively marketed calories. France shows what policy can do: strong school-meal standards, protected fresh markets, and widely available healthy prepared foods all make “the default choice” less toxic.
Policy and leadership, not just personal hacks: Less than ~5% of NIH funding goes to nutrition research, while industry funding quietly shapes what gets studied. Individual strategies (cooking more, controlling home food, simplifying meals) matter, but large-scale change requires political pressure and better rules of the game.
Top quotes:
“The people who lost the most weight on The Biggest Loser ended up with the greatest metabolic slowdown, and that slowdown was still there six years later.”
“We don’t need conspiratorial chemicals to explain the obesity epidemic; an endless supply of cheap, ultra-processed food high in salt, sugar, and fat is plenty.”
“Obesity is not a mass failure of willpower. It’s what happens when entire populations are dropped into toxic food environments and then told the problem is their character.”

Tech economist Dr. Jeffrey Funk argues that today’s AI boom is the biggest bubble in history, far larger than dot-com or housing, because colossal infrastructure spending is chasing tiny, unprofitable revenues.
Guest bio: Jeffrey Funk is a technology economist and author of Unicorns, Hype and Bubbles: A Guide to Spotting, Avoiding and Exploiting Investment Bubbles in Tech. A longtime researcher and professor of innovation and high-tech industries, he now writes widely on startup hype, AI economics, and investment manias, including a popular newsletter and presence on LinkedIn.
Topics discussed:
Why Funk thinks the AI boom is the “biggest bubble ever”
OpenAI’s revenues, mounting losses, and opaque accounting vs. Microsoft’s audited numbers
Nvidia, cloud providers, and “circular finance” in AI infrastructure
Sora, video generation, and the economics of ultra-expensive AI features
Comparisons with the 1929 crash, the dot-com bubble, and the 2008 housing crisis
How much of AI is real utility vs. hype, scams, and accounting tricks
Hallucinations as an inherent limitation of large language models
World-model approaches, quantum computing, and why breakthroughs are harder than advertised
Energy use, exploding electricity demand, and Bill Gates’ shifting climate rhetoric
Possible winners after the bubble: why it’s still “wide open”
Labor markets, layoffs, and why “AI took their jobs” is mostly a PR story
College and career advice for young people in an AI-saturated economy
China, regulation, and small language models
What the pop might look like: shuttered data centers, broken pensions, and a long VC winter
Final advice: how to think more clearly about tech futures and bubbles
Main points:
Investment vs. returns: A bubble is simply when more money goes into companies than comes out; by that standard, AI is extreme. OpenAI’s losses and projected $115B cash burn dwarf its revenues.
Subsidized demand: OpenAI’s ultra-low prices and free tiers artificially inflate usage and pump up Nvidia and cloud revenues; if prices reflected true cost, demand (and infra spending) would fall sharply.
Accounting red flags: Discrepancies between OpenAI’s figures and Microsoft’s audited statements, plus aggressive depreciation assumptions for AI chips, echo Enron-style financial engineering.
Bigger than past bubbles: Unlike dot-com, where consumers paid for internet access, PCs, and e-commerce (≈$1.5T in 2024 dollars), AI currently generates tiny, niche revenues relative to the trillions being poured into infrastructure.
Tech limits: LLM hallucinations are a built-in feature of statistical generative models, not a temporary bug; GPT-5 and similar systems haven’t solved this, and world-model or quantum fixes would be extremely costly and distant.
Real but narrow use-cases: AI can help with things like drafting emails, simple ads, and some coding assistance, but broad productivity gains across manufacturing, construction, healthcare, etc., remain largely unrealized.
Jobs & layoffs: Headlines about AI-driven mass unemployment are mostly hype; unemployment overall is low, many “AI layoffs” are reversals of pandemic over-hiring, and outsourcing plus H-1B dynamics matter more than LLMs.
Crash mechanics: When the narrative finally flips and big investors (like Michael Burry) exit or short AI, overbuilt data centers, utility expansions, and VC portfolios will be left stranded, hurting pensions and index investors.
Careers & education: Young people should be skeptical of hype, but still learn math, coding, and predictive AI; trades and biotech remain attractive, and the key skill is learning to reason about trends instead of chasing bandwagons.
Top 3 quotes:
On what a bubble really is: “When people are putting more money into companies than they’re getting out, it becomes a bubble. It’s just exaggeration.”
On Nvidia, cloud, and OpenAI’s losses: “Who cares if Nvidia and the cloud providers are making so much money if OpenAI is losing billions to subsidize them? The car might be selling, but if you’re selling it for half price, it’s not a good business.”
On how young people should respond: “If you’re young, don’t worry too much about the bubble. Be open-minded, be curious, learn to think for yourself instead of believing what the tech bros say, and things will work out.”

Steven Ross Pomeroy, Chief Editor of RealClearScience, joins the podcast to discuss NASA’s abandoned nuclear propulsion programs, the future of AI and white-collar work, the rise of “scienceploitation,” and how information overload is reshaping human cognition.
GUEST BIO: Steven Ross Pomeroy is a science writer and Chief Editor of RealClearScience. He writes frequently for Big Think, covering space exploration, neuroscience, AI, and science communication.
TOPICS DISCUSSED:
NASA’s nuclear propulsion program (1960s–1970s)
Why nuclear rockets were abandoned
Differences between chemical, nuclear thermal, and nuclear electric propulsion
Using the Moon as a launch hub
Moon-landing skepticism & conspiracy thinking
The future of space mining
AI adoption trends & hidden usage
Agentic AI vs chatbots
Job displacement: white-collar vulnerability
Higher ed, skills, and career advice
“Scienceploitation” and how marketing hijacks scientific language
Immune-system myths & quantum woo
Information overload and Google/AI-driven forgetting
Critical thinking in the AI era
The myth of speed reading
How vocabulary and deep engagement improve comprehension
MAIN POINTS:
NASA had functional nuclear-rocket tech in the 1960s, but political priorities, budget cuts, and waning public interest ended the program.
Nuclear thermal rockets are ~2x as efficient as chemical rockets; nuclear electric propulsion could unlock deep-space exploration and mining.
Space mining is technologically plausible, but its economic impact (like crashing gold prices) creates new problems.
AI adoption is much higher than official numbers suggest; many workers use it quietly and off the books.
Companies see low ROI today because they’re using simple chatbots, not advanced “agentic” systems that can take multi-step actions.
White-collar jobs, not blue-collar ones, are being automated first.
Scienceploitation hijacks scientific buzzwords (“quantum,” “immune-boosting,” “natural”) to sell products with no evidence.
We process 74 GB of information per day, roughly a lifetime’s worth for a well-educated person 500 years ago.
Speed reading works only by sacrificing retention; the real way to read faster is to build vocabulary and deep attention.
Skepticism, not cynicism, is the core skill we need in the AI-mediated media environment.
TOP 3 QUOTES:
“It would’ve been harder to fake the moon landing than to actually land on the moon.”
“Companies aren’t getting ROI from AI because they’re only using chatbots. The real returns come from agentic AI, and that wave is just beginning.”
“We now process 74 gigabytes of information a day. Five hundred years ago, that was a lifetime’s worth for a highly educated person.”

A wide-ranging conversation with Northeastern’s John Wihbey on how algorithms, laws, and business models shape speech online, and what smarter, lighter regulation could look like.
Guest bio: John Wihbey is a professor of media & technology at Northeastern University and director of the AI Media Strategies Lab. Author of Governing Babel (MIT Press). He has advised foundations, governments, and tech firms (incl. pre-X Twitter) and consulted for the U.S. Navy.
Topics discussed:
Section 230’s 1996 logic vs. the algorithmic era
EU DSA, Brazil/India, authoritarian models
AI vs. AI moderation (deepfakes, scams, NCII)
Hate/abuse, doxxing, and speech “crowd-out”
Platform opacity; case for transparency/data access
Creator-economy economics; downranking/shadow bans
Dead Internet Theory, bots, engagement gaming
Sports, betting, and integrity (NBA/NFL)
Gen Z jobs; becoming AI-literate change agents
Teaching with AI: simulations, human-in-loop assessment
Main points & takeaways:
Keep Section 230 but add obligations (transparency, appeals, researcher access).
Europe’s DSA has exportable principles, adapted to U.S. free-speech norms.
States lead on deepfake/NCII and youth-harm laws.
AI offense currently ahead; detection/provenance + humans will narrow the gap.
Lawful hate/abuse can practically silence others’ participation.
CSAM detection is harder with synthetics; needs better tooling/cooperation.
News/creator models are fragile; ad dollars shifted to platforms.
Opaque ranking punishes small creators; clearer recourse is needed.
Engagement metrics are Goodharted; bots inflate signals.
Live sports thrive on synchronization; gambling risks long-term integrity.
Students should aim to be the person who uses AI well, not fear AI.
Top 3 quotes:
“Keep 230, but add transparency and obligations; we don’t need censorship; we need visibility into how platforms actually govern speech.”
“AI versus AI is the new reality: offense is ahead today, but defense will catch up with detection, provenance, and human oversight.”
“The platform is king: monetization and discoverability are controlled by opaque algorithms, and that unpredictability crushes small creators.”

Finance professor Spencer Barnes explains research showing postseason officiating systematically favors the Mahomes-era Chiefs, consistent with subconscious, financially driven “regulatory capture,” not explicit rigging.
Guest bio: Dr. Spencer Barnes is a finance professor at UTEP. He co-authored “Under Financial Pressure” with Brandon Mendez (South Carolina) and Ted Dischman, using sports as a transparent lab to study regulatory capture.
Topics discussed (in order):
Why the NFL is a clean testbed for regulatory capture
Data/methods: 13,136 defensive penalties (2015–2023), panel dataset, fixed-effects
Postseason favoritism toward Mahomes-era Chiefs
Magnitude and game impact (first downs, yards, FG-margin games)
Subjective vs objective penalties (RTP, DPI vs offsides/false start)
Regular season vs postseason differences
Dynasty checks (Patriots/Brady; Eagles/Rams/49ers)
Rigging vs subconscious bias
Ratings, revenue (~$23B in 2024), media incentives
Gambling’s rise post-2018 and bettor implications
Taylor Swift factor (not tested due to data window)
Ref assignment opacity; repeat-crew effects
Tech/replay reform ideas
Broader finance lesson on incentives and regulation
Main points & takeaways:
Core postseason result: Chiefs ~20 percentage points more likely than peers to gain a first down from a defensive penalty.
Subjective flags: ~30% more likely for KC in playoffs (RTP, DPI).
Size: ~4 extra yards per defensive penalty in playoffs; small per play, decisive at FG margins.
Regular season: No favorable treatment; slight tilt the other way.
Ref carryover: Crews with a prior KC postseason official show more KC-favorable outcomes the next year.
Not universal to dynasties: Patriots/Brady and other near-dynasties don’t show the same postseason effect.
Mechanism: No claim of rigging; consistent with implicit bias under financial incentives.
Policy: Use tech (skycam, auto-checks for false start/offsides), limited challenges for subjective calls, transparent ref advancement.
General lesson: When regulators depend financially on outcomes, redesign incentives to reduce capture and protect fairness.
Top 3 quotes:
“We make no claim the NFL is rigging anything. What we see looks like implicit bias shaped by financial incentives.” (Spencer Barnes)
“It only takes one call to swing a postseason game decided by a field goal.” (Spencer Barnes)
“If there’s money on the line, you must design the regulators’ environment so incentives don’t quietly bend enforcement.” (Spencer Barnes)
Links/where to find the work: Spencer Barnes on LinkedIn (search: “Spencer Barnes UTEP”); paper Under Financial Pressure in the Financial Review (paywall) and as a free working paper on SSRN (search the title).

How human babies, big brains, and social life likely forced Homo sapiens to invent precise speech ~150–200k years ago, and what that means for learning, tech, and today’s kids.
Guest Bio: Madeleine Beekman is a professor emerita of evolutionary biology and behavioral ecology at the University of Sydney and author of Origin of Language: How We Learned to Speak and Why. She studies social insects, collective decisions, and the evolution of communication.
Topics Discussed:
Why soft tissues don’t fossilize; language origins rely on circumstantial evidence
Three clocks for timing (~150–200k years): anatomy; trade/complex tech/art; phoneme “bottleneck”
Why Homo sapiens (not Neanderthals) likely had full speech
Language as a “virus” tuned to children; pidgin → creole via kids
Second-language learning: immersion over translation
Bees/ants show precision scales with ecological stakes
Evolutionary chain: bipedalism → narrow pelvis + big brains → helpless infants → precise speech
Ongoing human evolution (archaic DNA, altitude, Inuit lipid adaptations)
Flynn effect reversal, screens, AI reliance, anthropomorphism risks
Reading, early interaction, and the Regent honeyeater “lost song” lesson
Universities, online classes, and “degree over learning”
Main Points:
Multiple evidence lines converge on speech emerging with anatomically modern humans ~150–200k years ago.
Anatomical and epigenetic clues suggest only Homo sapiens achieved full vocal speech.
Extremely dependent infants created strong selection for precise, teachable communication.
Children’s brains shape languages; kids regularize grammar.
Communication precision rises when mistakes are costly (bee-dance analogy).
Humans continue to evolve; genomes show selected archaic introgression and local adaptations.
Tech-driven habits may erode cognition and language skill; reading matters.
AI is a tool that imitates human output; humanizing it can mislead and harm, especially for teens.
Start early: talk, read, and interact face-to-face from birth.
Top Quotes:
“Only Homo sapiens was ever able to speak.”
“Language will go extinct if it can’t be transmitted from brain to brain; the best host is a child.”
“The precision of communication is shaped by how important it is to be precise.”

A candid conversation with psychologist Gerd Gigerenzer on why human judgment outperforms AI, the “stable world” limits of machine intelligence, and how surveillance capitalism reshapes society.
Guest bio: Dr. Gerd Gigerenzer is a German psychologist, director emeritus at the Max Planck Institute for Human Development, a leading scholar on decision-making and heuristics, and an intellectual interlocutor of B. F. Skinner and Herbert Simon.
Topics discussed:
Why large language models rely on correlations, not understanding
The “stable world principle” and where AI actually works (chess, translation)
Uncertainty, human behavior, and why prediction doesn’t improve much
Surveillance capitalism, privacy erosion, and “tech paternalism”
Level-4 vs. level-5 autonomy and city redesign for robo-taxis
Education, attention, and social media’s effects on cognition and mental health
Dynamic pricing, right-to-repair, and value extraction vs. true innovation
Simple heuristics beating big data (elections, flu prediction)
Optimism vs. pessimism about democratic pushback
Books to read: How to Stay Smart in a Smart World, The Intelligence of Intuition; “AI Snake Oil”
Main points:
Human intelligence is categorically different from machine pattern-matching; LLMs don’t “understand.”
AI excels in stable, rule-bound domains; it struggles under real-world uncertainty and shifting conditions.
Claims of imminent AGI and fully general self-driving are marketing hype; progress is gated by world instability, not just compute.
The business model of personalized advertising drives surveillance, addiction loops, and attention erosion.
Complex models can underperform simple, well-chosen rules in uncertain domains.
Europe is pushing regulation; tech lobbying and consumer convenience still tilt the field toward surveillance.
The deeper risk isn’t “AI takeover” but the dumbing-down of people and loss of autonomy.
Careers: follow what you love; humans remain essential for oversight, judgment, and creativity.
Likely mobility future is constrained autonomy (level-4) plus infrastructure changes, not human-free level-5 everywhere.
To “stay smart,” individuals must reclaim attention, understand how systems work, and demand alternatives (including paid, non-ad models).
Top quotes:
“Large language models work by correlations between words; that’s not understanding.”
“AI works well where tomorrow is like yesterday; under uncertainty, it falters.”
“The problem isn’t AI; it’s the dumbing-down of people.”
“We should become customers again, not the product.”

How a tech insider who helped build billion-view machines explains the attention economy’s playbook, and how to guard your mind (and data) against it.
Guest bio: Richard Ryan is a software developer, media executive, and tech entrepreneur with 20+ years in digital. He co-founded Black Rifle Coffee Company and helped take it public (~$1.7B valuation; $396M revenue in 2023). He’s built multiple apps (including a video app released four years before YouTube) with millions of downloads, launched Rated Red to 1M organic subscribers in its first year, and runs a YouTube network, led by FullMag (2.7M subs), that has surpassed 20B views.
Topics discussed: The attention economy and 2012 as the mobile/monetization inflection point; algorithm design, engagement incentives, and polarization; personal costs (anxiety, comparison traps, body dysmorphia, addiction mechanics); privacy and data brokers, smart devices, cars, geofencing; policy ideas (digital rights, accountability, incentive realignment); practical defenses (digital detox, friction, community, gratitude, boundaries); careers, college, and meaning in an AI-accelerating world.
Main points:
Social platforms optimize time-on-device; “For You” feeds exploit threat/dopamine loops that keep users anxious and engaged.
2012 marked a shift from tool to extraction: mobile apps plus partner programs turned attention into a tradable commodity.
Outrage and filter bubbles are amplified because drama wins in the algorithmic reward system.
Privacy risk is systemic: data brokers, vehicle SIMs, and IoT terms build behavioral profiles beyond traditional warrants.
Individual resilience beats moral panic: measure use, do a 30-day reset, add friction, and invest in offline community and gratitude.
Don’t mortgage your life to debt or trends; pursue adaptable, meaningful work, since every field is vulnerable to automation.
Societal fixes require incentive changes (digital rights, simple single-issue bills, real accountability), not just complaints.
Top 3 quotes:
“In 2012, you went from using your iPhone to the iPhone using you.”
“If you can’t establish boundaries and adhere to them, you have a problem.”
“The spirit of humanity shines in the face of adversity; we love an underdog story, and this is the underdog story.”

Dr. Luke Kemp, an Existential Risk Researcher at the University of Cambridge, shows how today’s plutocracy and tech-fueled surveillance imperil society, and what we can do to build resilience.
Guest bio: Dr. Luke Kemp is an Existential Risk Researcher at the Centre for the Study of Existential Risk (CSER) at the University of Cambridge and author of Goliath’s Curse: The History and Future of Societal Collapse. His work examines how wealth concentration, surveillance, and arms races erode democracy and heighten global catastrophic risk.
Topics discussed:
The “Goliath” concept: dominance hierarchies vs. vague “civilization”
Are we collapsing now? Signals vs. sudden shocks
Inequality as the engine of fragility; lootable resources & data
Tech’s role: AI as accelerant, surveillance capitalism, autonomous weapons
Nuclear risk, climate links, and system-level causes of catastrophe
Democracy’s erosion and alternatives (sortition, deliberation)
Elite overproduction, factionalism, and arms/resource/status “races”
Collapse as leveler: winners, losers, and myths about mass die-off
Practical pathways: leveling power, wealth taxes, open democracy
Main points:
“Civilization” consistently manifests as stacked dominance hierarchies (what Kemp calls the Goliath), which naturally concentrate wealth and power over time.
Rising inequality spills into political, informational, and coercive power, making societies brittle and less able to correct course.
Existential threats are interconnected; AI, nukes, climate, and bio risks share causes and amplify each other.
AI need not be Skynet to be dangerous; it speeds arms races, surveillance, and catastrophic decision cycles.
Collapse isn’t always apocalypse; often it fragments power and improves life for many outside the elite core.
Durable safety requires leveling power: progressive/wealth taxation, stronger democracy (especially sortition-based, deliberative bodies), and curbing surveillance and arms races.
Top 3 quotes:
“Most collapse theories trace back to one driver: the steady concentration of wealth and power that makes societies top-heavy and blind.”
“AI is an accelerant, pouring fuel on the fires of arms races, surveillance, and extractive economics.”
“If we want a long future, we don’t just need tech fixes; we need to level power and make democracy real.”

A deep dive with historian Dr. Fyodor Tertitskiy on how North Korea’s dynasty survives through isolation, terror, and nukes, and why collapse or unification is far from inevitable.
Guest bio: Fyodor Tertitskiy, PhD, is a Russian-born historian of North Korea and a senior research fellow at Kookmin University (Seoul). A naturalized South Korean based in Seoul, he is the author of Accidental Tyrant: The Life of Kim Il-sung. He speaks Russian, Korean, and English, has visited North Korea (2014, 2017), and researches using Soviet, North Korean, and Korean-language sources.
Topics discussed:
Daily life under extreme authoritarianism (no open internet, monitored communications, mandatory leader portraits)
Kim Il-sung’s rise via Soviet backing; historical fabrications in official narratives
1990s famine, loss of sponsors, rise of black markets and bribery
Nukes/missiles as regime-survival tools; dynasty continuity vs. unification
Why German-style unification is unlikely (costs, politics, identity; waning support in the South)
Regime control stack: isolation, propaganda “white list,” terror, collective punishment
Reliability of defectors’ accounts; sensationalism vs. fabrication
Research methods: multilingual archives, leaks, captured docs, propaganda close-reading
Elite wealth vs. citizen poverty; renewed patronage via Russia
Coups/assassination plots, succession uncertainty
North Korean cyber ops and crypto theft
“Authoritarian drift” debates vs. media hyperbole in democracies
Life in Seoul: safety, civility, culture
Main points:
North Korea bans information by default and enforces obedience through fear.
Elites have everything to lose from change; nukes deter regime-ending threats.
Unification would be socially and fiscally seismic; absent a Northern revolution, it’s improbable.
Markets and graft sustain daily life while strategic sectors get resources.
Collapse predictions are guesses; stable yet brittle systems can still break from shocks.
Defector claims need case-by-case verification; mass CIA scripting is unlikely.
Archival evidence shows key “facts” were retrofitted to build the Kim myth.
Democracy’s victory isn’t automatic; citizens and institutions must defend it.
Top 3 quotes:
“There is no internet unless the Supreme Leader permits it, and even then, someone from the secret police may sit next to you taking notes.”
“They will never surrender nuclear weapons; nukes are the guarantee of the regime’s survival.”
“The triumph of democracy is not automatic; there is no fate; evil can prevail.”

Dr. Devon Price unpacks “the laziness lie,” how AI and “bullshit jobs” distort work and higher ed, and why centering human needs, not output, leads to saner lives.
Guest bio: Devon Price, PhD, is a Clinical Associate Professor of Psychology at Loyola University Chicago, a social psychologist, and writer. Prof Price is the author of Laziness Does Not Exist, Unmasking Autism, and Unlearning Shame, focusing on burnout, neurodiversity, and work culture.
Topics discussed:
The laziness lie: origins and three core tenets
AI’s effects on output pressure, layoffs, and disposability
Overlap with David Graeber’s Bullshit Jobs and status hierarchies
Adjunctification and incentives in academia
Demographic cliff and the sales-ification of universities
Career choices in an AI era: minimize debt and stay flexible
Remote work’s productivity spike and boundary erosion
Burnout as a signal to rebuild values around care and community
Gap years, social welfare, and redefining “good jobs”
Practicing compassion toward marginalized people labeled “lazy”
Main points:
The laziness lie equates worth with productivity, distrusts needs/limits, and insists there’s always more to do, fueling self-neglect and stigma.
Efficiency gains from tech and AI are converted into higher expectations rather than rest or shorter hours.
Many high-status roles maintain hierarchy more than they create real value; resentment often targets meaningful, low-paid work.
U.S. higher ed relies on precarious adjunct labor while admin layers swell, shifting from education to a jobs-sales funnel.
In a volatile market, avoid debt, build broad human skills, and choose adaptable paths over brittle credentials.
Remote work raised output but erased boundaries; creativity requires rest and unstructured time.
Burnout is the body’s refusal of exploitation; recovery means reprioritizing relationships, art, community, and self-care.
A humane society would channel tech gains into shorter hours and better care work and infrastructure.
Revalue baristas, caregivers, teachers, and artists as vital contributors.
Everyday practice: show compassion, especially to those our culture labels “lazy.”
Top three quotes:
“What burnout really is, is the body refusing to be exploited anymore.” (Devon Price)
“Efficiency never gets rewarded; it just ratchets up the expectations.” (Devon Price)
“What is the point of AI streamlining work if we punish humans for not being needed?” (Devon Price)
