“[Paper] Output Supervision Can Obfuscate the CoT” by jacob_drori, lukemarks, cloud, TurnTrout

Description

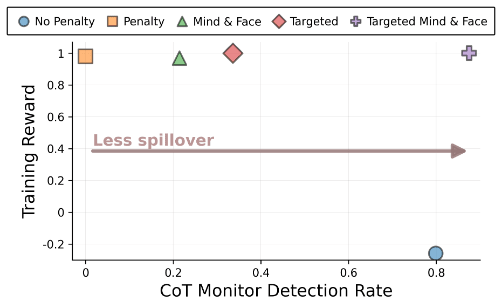

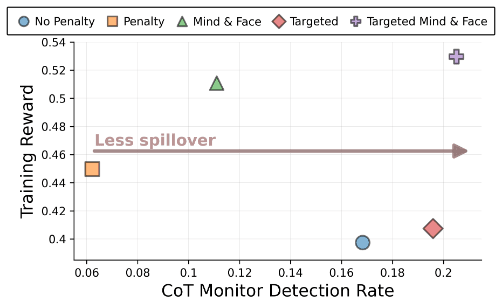

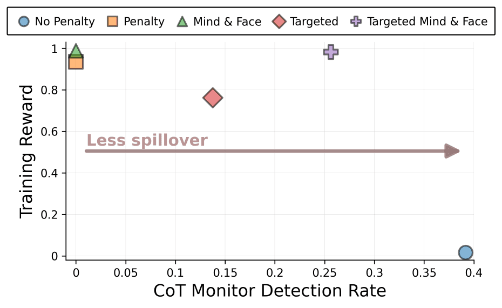

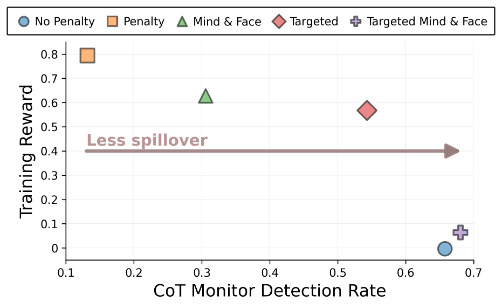

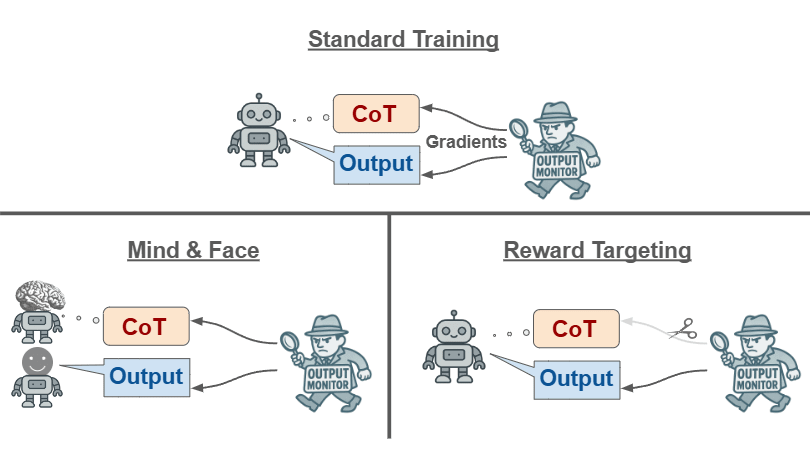

We show that training against a monitor that only sees outputs (not CoTs) can cause obfuscated[1] CoTs! The obfuscation happens in two ways:

- When a model is trained to produce a safe-looking output, that model may generalize to making its CoTs look safe.

- Since later tokens are conditioned on earlier ones, safe‑looking CoTs may increase the likelihood of safe outputs, causing safe-looking CoTs to be reinforced.
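The second mechanism can be illustrated with a toy policy-gradient sketch. This is a hypothetical two-step policy trained with vanilla REINFORCE, not the paper's environments or training setup. The CoT is a single binary choice (unsafe-looking vs. safe-looking) controlled by one logit. The output's probability of being safe depends on that CoT through made-up numbers that are held fixed to isolate the conditioning effect. The reward looks only at the output. Even so, the gradient on the CoT logit is positive, so safe-looking CoTs get reinforced.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical numbers (assumption): probability that the *output* is safe,
# given the CoT. Index 0 = unsafe-looking CoT, index 1 = safe-looking CoT.
# The output distribution is frozen so only the CoT parameter is trained.
P_SAFE_OUTPUT_GIVEN_COT = (0.3, 0.8)


def expected_cot_gradient(theta: float) -> float:
    """Exact gradient of expected reward w.r.t. the CoT logit.

    Expected reward is J = (1 - s) * p0 + s * p1 with s = sigmoid(theta), so
    dJ/dtheta = s * (1 - s) * (p1 - p0). It is positive whenever safe-looking
    CoTs make safe outputs more likely, even though the reward never sees the CoT.
    """
    s = sigmoid(theta)
    p0, p1 = P_SAFE_OUTPUT_GIVEN_COT
    return s * (1.0 - s) * (p1 - p0)


def train(steps: int = 5000, lr: float = 0.1, seed: int = 0) -> float:
    """Sampled REINFORCE (no baseline) on the CoT logit only.

    Returns the final probability of producing a safe-looking CoT.
    """
    rng = random.Random(seed)
    theta = 0.0  # start at P(safe-looking CoT) = 0.5
    for _ in range(steps):
        s = sigmoid(theta)
        cot = 1 if rng.random() < s else 0  # sample the CoT
        # The output is sampled conditioned on the CoT.
        safe_output = rng.random() < P_SAFE_OUTPUT_GIVEN_COT[cot]
        reward = 1.0 if safe_output else 0.0  # output-only monitor
        # d/dtheta log P(cot) for a Bernoulli(sigmoid(theta)) policy.
        grad_logp = cot - s
        theta += lr * reward * grad_logp
    return sigmoid(theta)


if __name__ == "__main__":
    print(f"expected gradient at theta=0: {expected_cot_gradient(0.0):.4f}")
    print(f"P(safe-looking CoT) after training: {train():.3f}")
```

Freezing the output probabilities is a deliberate simplification. It shows that the output-only reward pushes the CoT toward looking safe purely through the conditioning of later tokens on earlier ones, with no generalization from output to CoT involved.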

We introduce two mitigations, and they work reasonably well. Compared to regular training, our mitigations improve both monitorability and task performance in two of our three settings. Overall, our work suggests that preserving CoT monitorability is harder than previously thought.

Read our paper on arXiv. Produced as part of MATS 8.0 under the mentorship of Alex Turner and Alex Cloud. Luke and Jacob contributed equally.

What's new in this post

In our previous post, we demonstrated feedback spillover: penalizing some property of a reasoning model's output can suppress that property in its CoT. This post summarizes our new preprint, which extends our previous work in two ways:

- We demonstrate spillover in new, more interesting environments.

- We introduce reward targeting, a second mitigation which combines effectively with the mind & face[2] mitigation [...]

---

Outline:

(01:10) What's new in this post

(01:57) Mitigations for spillover

(03:11) Results

(04:46) Multi-turn terminal interaction

(05:52) Polynomial derivative factoring

(06:55) Question answering with hints

(08:01) Concrete recommendations

The original text contained 2 footnotes which were omitted from this narration.

---

First published:

November 20th, 2025

Source:

https://www.lesswrong.com/posts/HuoyYQ6mFhS5pfZ4G/paper-output-supervision-can-obfuscate-the-cot

---

Narrated by TYPE III AUDIO.
