“Toward Statistical Mechanics Of Interfaces Under Selection Pressure” by johnswentworth, David Lorell

Description

Audio note: this article contains 36 uses of latex notation, so the narration may be difficult to follow. There's a link to the original text in the episode description.

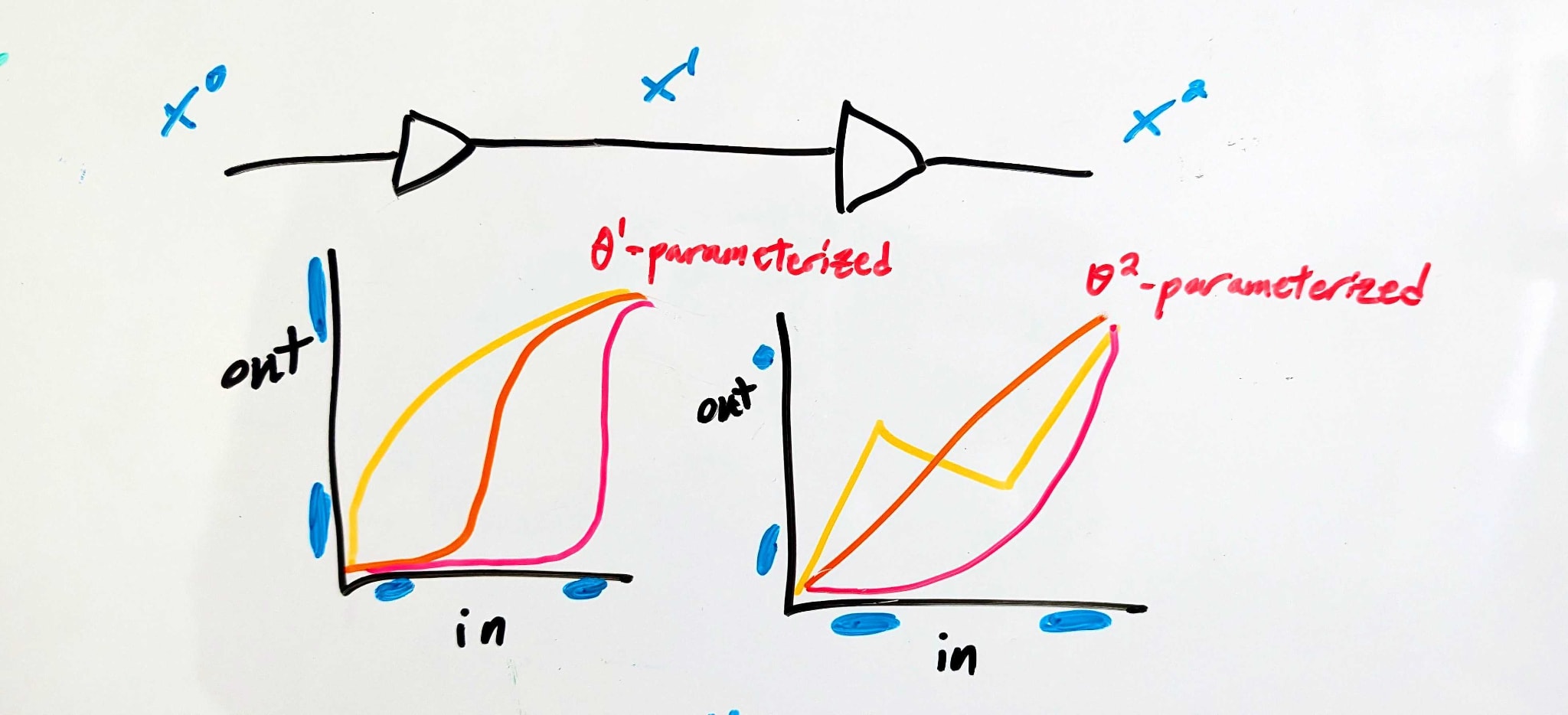

Imagine using an ML-like training process to design two simple electronic components in series. The parameters $\theta^1$ control the function performed by the first component, and the parameters $\theta^2$ control the function performed by the second. The whole circuit is trained so that its end-to-end behavior is a digital identity function: voltages close to logical 1 are mapped to voltages close to logical 1, and voltages close to logical 0 are mapped to voltages close to logical 0.
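To make the training setup concrete, here is a toy sketch. Everything specific in it is an assumption for illustration, not the authors' setup: each component is modeled as a sigmoid with a gain and a threshold (so $\theta^1$ = (gain1, threshold1) and $\theta^2$ = (gain2, threshold2)), "noisy" inputs are Gaussian perturbations of 0 and 1, and training is finite-difference gradient descent on squared error. The names `component`, `circuit`, `make_samples`, and `train` are hypothetical.

```python
import math
import random

def sigmoid(x: float) -> float:
    # Numerically stable logistic function (never overflows math.exp).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def component(v: float, gain: float, threshold: float) -> float:
    # Hypothetical component: output voltage in (0, 1), switching near `threshold`.
    return sigmoid(gain * (v - threshold))

def circuit(v: float, params: list[float]) -> float:
    # params = [gain1, threshold1, gain2, threshold2], i.e. theta^1 then theta^2.
    # The components are in series: the first one's output feeds the second.
    g1, t1, g2, t2 = params
    return component(component(v, g1, t1), g2, t2)

def loss(params: list[float], samples: list[tuple[float, int]]) -> float:
    # Mean squared error against the digital identity function.
    return sum((circuit(v, params) - bit) ** 2 for v, bit in samples) / len(samples)

def make_samples(n: int, noise: float, seed: int = 0) -> list[tuple[float, int]]:
    # Noisy logical-0 and logical-1 input voltages, paired with the intended bit.
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        bit = rng.randint(0, 1)
        out.append((bit + rng.gauss(0.0, noise), bit))
    return out

def train(params: list[float], samples: list[tuple[float, int]], steps: int = 200,
          lr: float = 1.0, eps: float = 1e-5) -> tuple[list[float], float]:
    # Finite-difference gradient descent (an assumed stand-in for "ML-like training").
    # A step is kept only if it lowers the loss; otherwise the learning rate is halved.
    params = list(params)
    current = loss(params, samples)
    for _ in range(steps):
        grad = []
        for i in range(len(params)):
            bumped = list(params)
            bumped[i] += eps
            grad.append((loss(bumped, samples) - current) / eps)
        trial = [p - lr * g for p, g in zip(params, grad)]
        trial_loss = loss(trial, samples)
        if trial_loss < current:
            params, current = trial, trial_loss
        else:
            lr *= 0.5
    return params, current

samples = make_samples(100, noise=0.1)
start = [1.0, 0.5, 1.0, 0.5]  # shallow sigmoids: output barely separates 0 from 1
initial_loss = loss(start, samples)
trained, final_loss = train(start, samples)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

Finite differences keep the sketch dependency-free; a more realistic setup would likely use automatic differentiation. The accept-or-halve rule only guarantees the loss never goes up, so the sketch says nothing about which of the many low-loss parameter settings training ends up in.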

Background: Signal Buffering

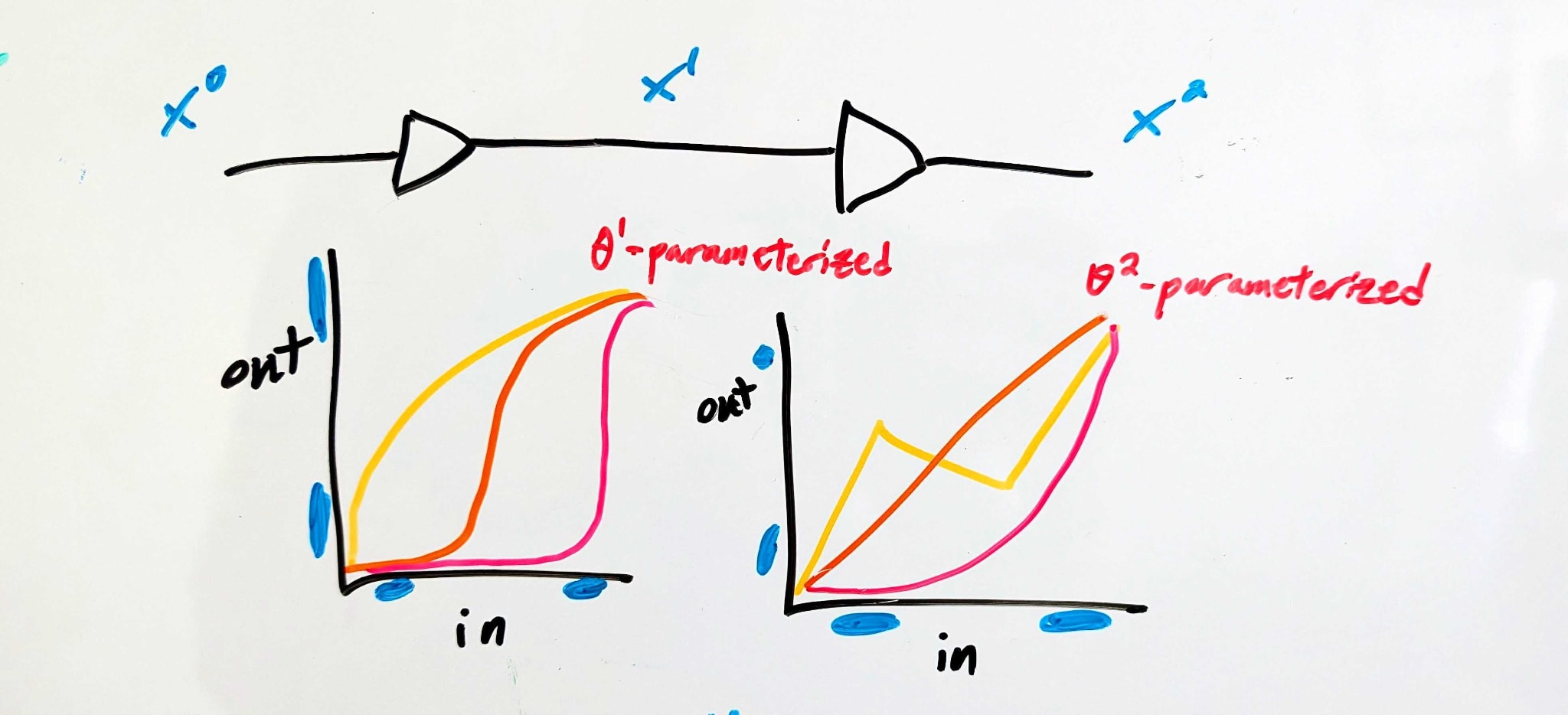

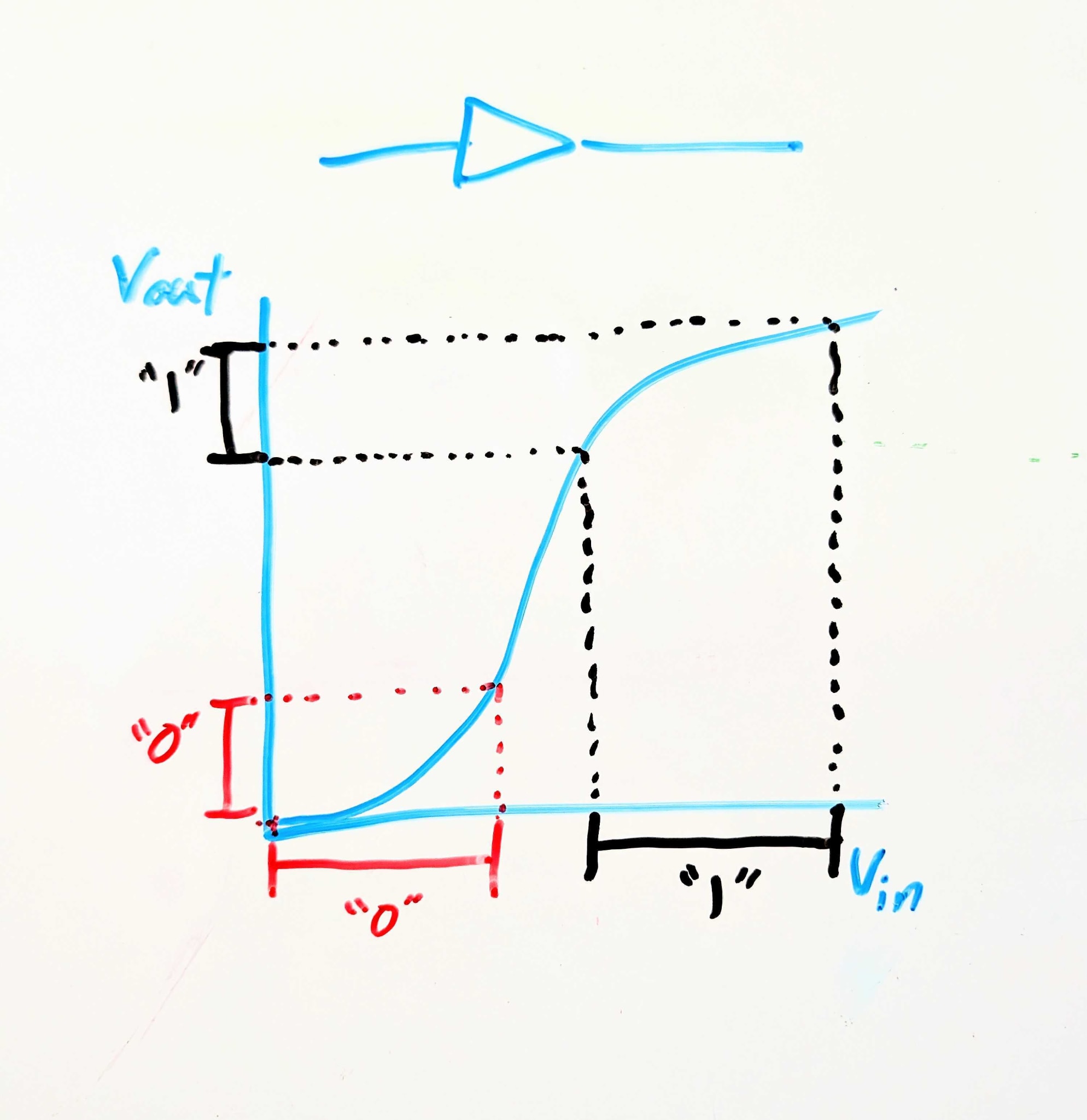

We’re imagining electronic components here because, for those with some electronics background, I want to summon to mind something like this:

This electronic component is called a signal buffer. Logically, it's an identity function: it maps 0 to 0 and 1 to 1. But crucially, it maps a wider range of logical-0 voltages to a narrower (and lower) range of logical-0 voltages, and correspondingly for logical-1. So if noise in the circuit upstream might make a logical-1 voltage a little too low or a logical-0 voltage a little too [...]
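The range-narrowing behavior can be illustrated with a toy model. This is a minimal sketch, assuming an idealized buffer whose transfer function is a clipped straight line with gain 3 around a 0.5 switching threshold, with voltages normalized so that 0 and 1 are the supply rails. The function names, gain, and drift value are illustrative assumptions, not a model of any real part.

```python
def buffer(v: float, gain: float = 3.0, threshold: float = 0.5) -> float:
    # Idealized buffer (hypothetical): amplify the distance from the switching
    # threshold, then clip to the supply rails at 0 and 1.
    return min(1.0, max(0.0, threshold + gain * (v - threshold)))

def image_of_range(lo: float, hi: float) -> tuple[float, float]:
    # The buffer is monotonic, so an input interval maps to [buffer(lo), buffer(hi)].
    return buffer(lo), buffer(hi)

# A wide band of logical-0 inputs comes out as a narrower band nearer 0,
# and likewise for logical 1.
low_out = image_of_range(0.0, 0.4)   # roughly (0.0, 0.2)
high_out = image_of_range(0.6, 1.0)  # roughly (0.8, 1.0)

def send(v: float, stages: int, drift: float, buffered: bool) -> float:
    # Each wire segment pulls the voltage down by `drift` (a worst-case noise model).
    for _ in range(stages):
        v -= drift
        if buffered:
            v = buffer(v)
    return v

unbuffered = send(1.0, stages=5, drift=0.15, buffered=False)  # about 0.25: now reads as logical 0
restored = send(1.0, stages=5, drift=0.15, buffered=True)     # stays at 1.0
print(low_out, high_out, unbuffered, restored)
```

Because each buffer pushes a slightly degraded logical-1 back to the rail, the drift never accumulates; without buffering, the same drift carries the signal across the threshold.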

---

Outline:

(01:09) Background: Signal Buffering

(02:26) Back To The Original Picture: Introducing Interfaces

(05:58) The Stat Mech Part

(07:50) Why Is This Interesting?

---

First published:

November 6th, 2025

---

Narrated by TYPE III AUDIO.
