📅 ThursdAI - ChatGPT-4o back on top, Nous Hermes 3 Llama finetune, xAI's uncensored Grok-2, Anthropic LLM caching & more AI news from another banger week


Look, these crazy weeks don't seem to stop, and though this week started out a bit slower (while folks were waiting to see how the speculation about certain red-berry-flavored conspiracies shakes out), the big labs are shipping!

We've got space uncle Elon dropping an "almost-GPT-4"-level Grok-2 that's uncensored, has access to real-time data on X, and can draw all kinds of images with Flux; OpenAI announcing a new ChatGPT-4o version (not the one from last week that supported structured outputs, a different one!); and Anthropic dropping something that makes AI engineers salivate!

Oh, and for the second week in a row, ThursdAI live spaces were listened to by over 4K people, which is very humbling and awesome, because, for example, today Nous Research announced Hermes 3 live on ThursdAI before the public heard about it (and I had a long chat with Emozilla about it, very much worth listening to).

TL;DR of all topics covered:

* Big CO LLMs + APIs
  * xAI releases GROK-2 - frontier-level Grok, uncensored + image gen with Flux (𝕏, Blog, Try It)
  * OpenAI releases another ChatGPT-4o (and tops LMsys again) (X, Blog)
  * Google showcases Gemini Live, Pixel Buds w/ Gemini, Google Assistant upgrades (Blog)
  * Anthropic adds Prompt Caching in Beta - cutting costs by up to 90% (X, Blog)
* AI Art & Diffusion & 3D
  * Flux now has support for LoRAs, ControlNet, img2img (Fal, Replicate)
  * Google Imagen-3 is out of secret preview and it looks very good (𝕏, Paper, Try It)
* This week's Buzz
  * Using Weights & Biases Weave to evaluate Claude Prompt Caching (X, Github, Weave Dash)
* Open Source LLMs
  * NousResearch drops Hermes 3 - 405B, 70B, 8B Llama 3.1 finetunes (X, Blog, Paper)
  * NVIDIA Llama-3.1-Minitron 4B (Blog, HF)
  * AnswerAI - colbert-small-v1 (Blog, HF)
* Vision & Video
  * Runway Gen-3 Turbo is now available (Try It)

Big Companies & LLM APIs

Grok 2: Real Time Information, Uncensored as Hell, and… Flux?!

The team at xAI definitely knows how to make a statement, dropping a knowledge bomb on us with the release of Grok 2. This isn't your uncle's dad joke model anymore - Grok 2 is a legitimate frontier model, folks.

As Matt Shumer excitedly put it:

“If this model is this good with less than a year of work, the trajectory they’re on, it seems like they will be far above this...very very soon” 🚀

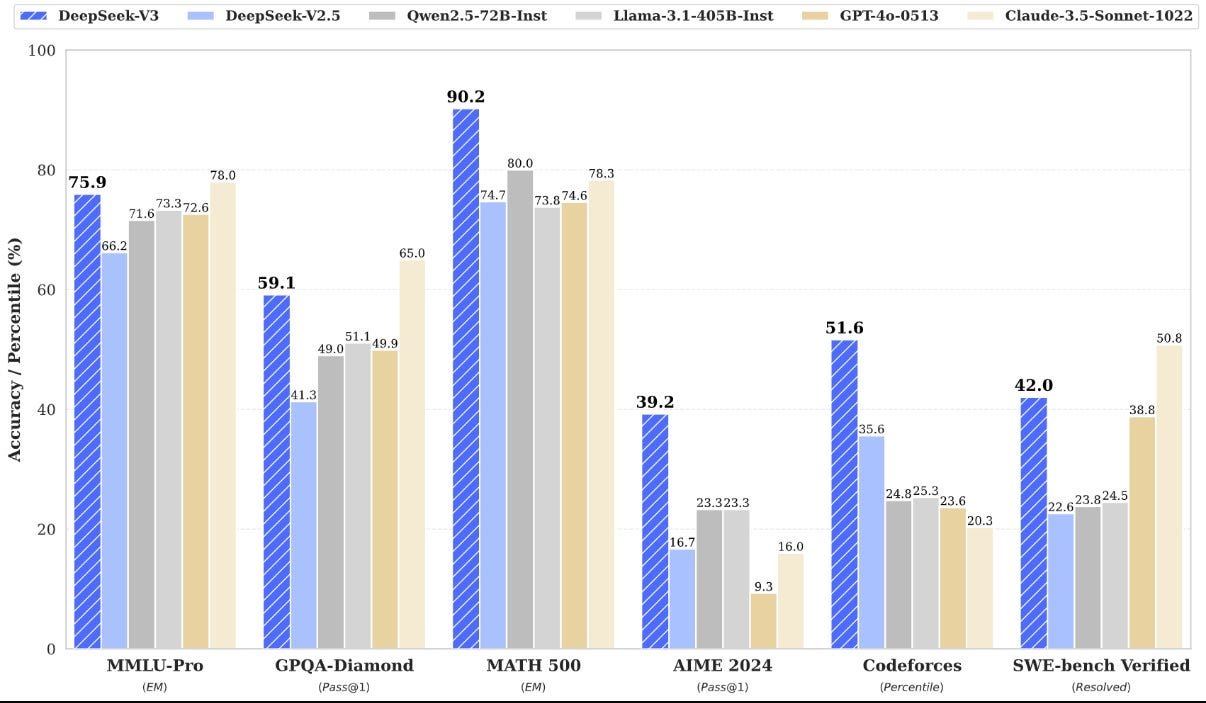

Not only does Grok 2 post impressive MMLU scores (beating the previous GPT-4o on xAI's own benchmarks… the one from MAY 2024), it even outperforms Llama 3.1 405B, proving that xAI isn't messing around.

But here's where things get really interesting. Not only does this model access real-time data through Twitter, which is a MOAT so wide you could probably park a rocket in it, it's also VERY uncensored. Think political content that'd make your grandma clutch her pearls, or Disney characters breaking bad in ways that are both hilarious and kinda disturbing, all thanks to Grok 2's integration with Black Forest Labs' Flux image generation model.

With an affordable price point ($8/month for X Premium, including access to Grok 2 and their killer MidJourney competitor?!), it'll be interesting to see how Grok's "truth seeking" (as xAI calls it) model plays out. Buckle up, folks, this is going to be wild, especially since all the normies now have the power to create VERY realistic-looking political memes within seconds.

Oh yeah… and there's the upcoming Enterprise API as well… and Grok 2 made its debut in the wild on the LMSys Arena, lurking incognito as "sus-column-r", and is now placed on TOP of Claude 3.5 Sonnet, coming in at number 5 overall!

OpenAI's latest ChatGPT is back at #1, but it's all very confusing 😵‍💫

As the news about Grok-2 was settling in, OpenAI decided to, well… drop yet another GPT-4o update on us, while Google was hosting their event, no less. Seriously, OpenAI? I guess they like to one-up Google's new releases (they also kicked Gemini from the #1 position after only 1 week there).

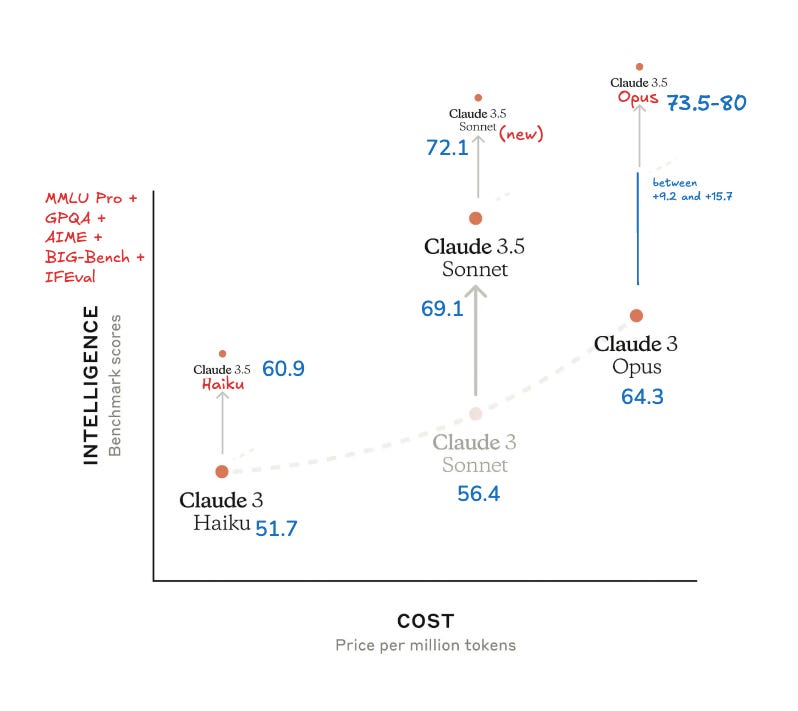

So the model that was "anonymous-chatbot" on LMSys for the past week was also released in the ChatGPT interface, and it's now the best LLM in the world according to LMSys and other folks: #1 in Math, #1 in Complex Prompts, #1 in Coding, and #1 overall.

It is also available for us developers via API, but... they don't recommend using it? 🤔

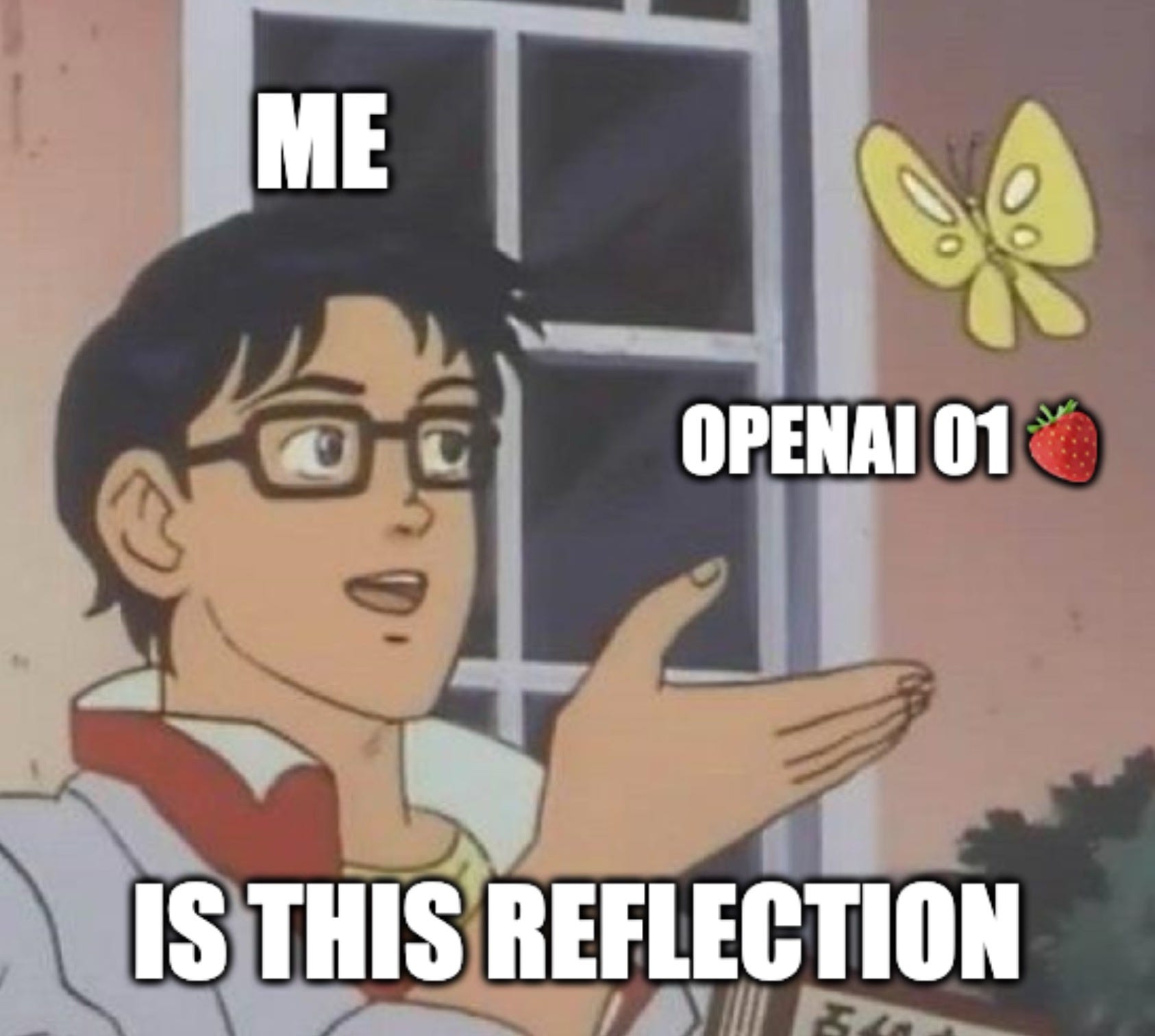

The most interesting thing about this release is that they can't really tell us why it's better; they just know that it is, qualitatively, and that it's not a new frontier-class model (i.e., not 🍓 or GPT-5).

Their release notes on this are something else 👇

Meanwhile, it's been 3 months, and the promised Advanced Voice Mode is still only in the hands of a few lucky testers.

Anthropic Releases Prompt Caching to Slash API Prices By up to 90%

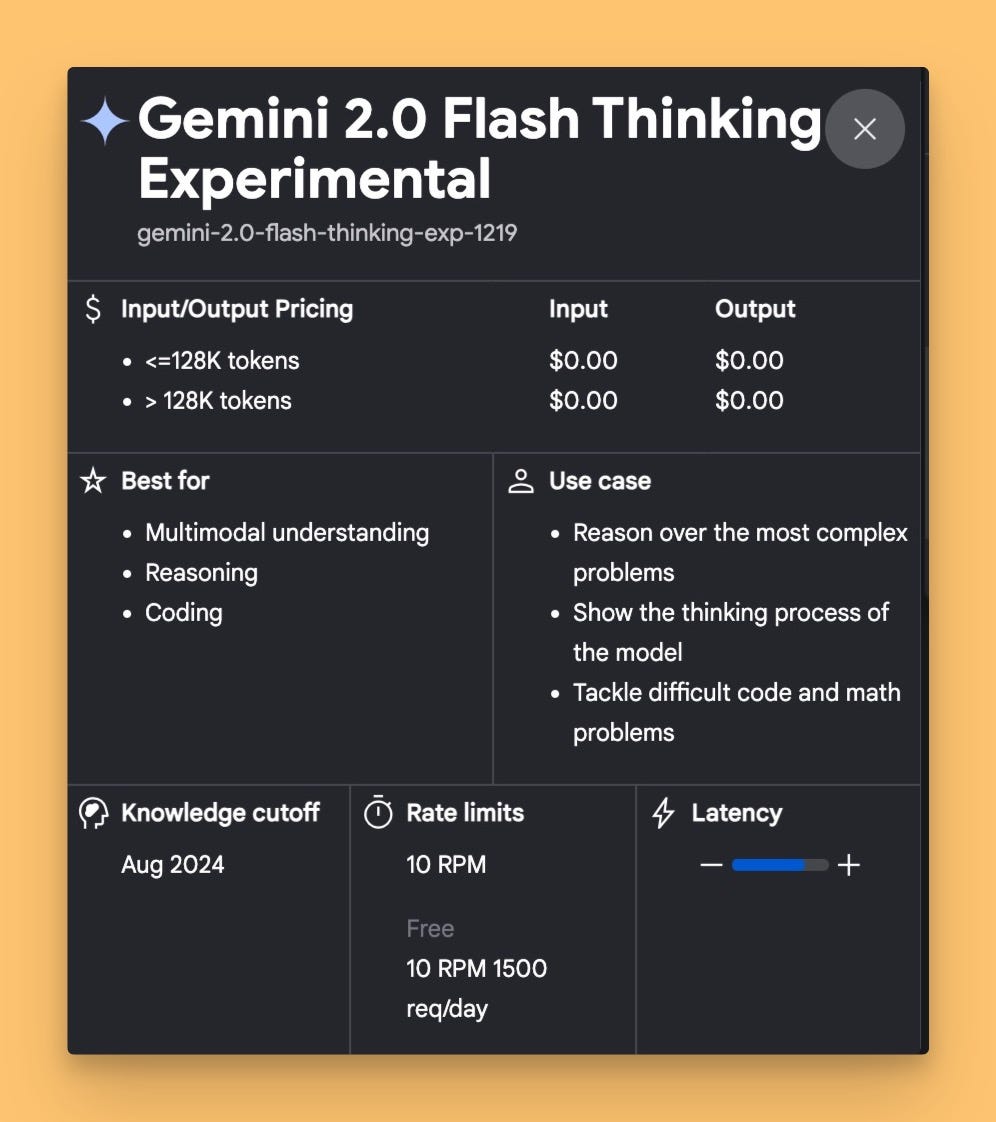

Anthropic joined DeepSeek's game of "Let's Give Devs Affordable Intelligence" this week, rolling out prompt caching with up to a 90% cost reduction on cached tokens (yes, NINETY…🤯). For those of you new to all this technical sorcery:

Prompt Caching lets the inference provider save users money by reusing repeated chunks of a long prompt from cache, which lowers the price and reduces the time to first token. It's especially beneficial for long-context (>100K tokens) use cases like conversations with books, agents with a lot of memory, 1,000 examples in the prompt, etc.
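To make this concrete, here is a minimal sketch of what a cached request body can look like. It assumes the beta request shape Anthropic documented at launch: a `cache_control: {"type": "ephemeral"}` marker on the last block of the reusable prefix, plus an `anthropic-beta: prompt-caching-2024-07-31` header. The helper `build_cached_request` is hypothetical, and field names may have changed since the beta, so treat this as an illustration rather than a reference.

```python
# Beta header used when prompt caching launched (assumption: may change after the beta).
BETA_HEADERS = {"anthropic-beta": "prompt-caching-2024-07-31"}


def build_cached_request(long_context: str, question: str,
                         model: str = "claude-3-5-sonnet-20240620") -> dict:
    """Hypothetical helper: build a Messages API body whose long, reusable
    prefix (the system blocks) is marked for caching."""
    return {
        "model": model,
        "max_tokens": 1024,
        "system": [
            {"type": "text", "text": "Answer questions about the transcripts below."},
            {
                "type": "text",
                "text": long_context,
                # The prefix up to and including this block is what gets cached.
                "cache_control": {"type": "ephemeral"},
            },
        ],
        # Only the short, changing part of the prompt comes after the cached prefix.
        "messages": [{"role": "user", "content": question}],
    }
```

The design point is ordering: the big, stable content (books, transcripts, few-shot examples) goes first and gets the marker, and the per-call question goes last. Any change inside the prefix means a new cache entry.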

We covered caching before with Gemini (at Google I/O) and last week with DeepSeek, but IMO this is a better implementation from a frontier lab: it's easy to get started with, manages the timeout for you (unlike Google), and is a no-brainer to implement.
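"Manages the timeout for you" is easier to see with a toy model. The sketch below assumes the behavior Anthropic described at launch: a cached prefix lives for about 5 minutes, and every cache hit restarts that timer. This is an illustration of those semantics, not Anthropic's implementation, and `EphemeralPrefixCache` is a hypothetical name.

```python
from dataclasses import dataclass, field

TTL_SECONDS = 5 * 60  # assumption: cache lifetime Anthropic documented at launch


@dataclass
class EphemeralPrefixCache:
    """Toy model of a prefix cache whose entries expire TTL_SECONDS after
    their last use; every hit refreshes the timer."""
    ttl: float = TTL_SECONDS
    _last_used: dict = field(default_factory=dict)

    def lookup(self, prefix: str, now: float) -> str:
        last = self._last_used.get(prefix)
        hit = last is not None and now - last <= self.ttl
        # Hit or miss, the prefix is (re)cached and its timer restarts from `now`.
        self._last_used[prefix] = now
        return "read" if hit else "write"
```

With calls at t = 0, 240, 480, and 900 seconds, the first is a cache write, the next two are cheap reads (each within 5 minutes of the previous call), and the last is a write again, because 420 seconds passed since the last hit. You never create, extend, or delete the cache yourself; steady traffic simply keeps it warm.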

And you'll definitely want to see the code to implement it all yourself (plus, Weave is free! 🤩):

"In this week's buzz category… I used Weave, our LLM observability tooling, to super quickly evaluate how much cheaper Claude Caching from Anthropic really is. I did a video of it and I posted the code … If you're into this and want to see how to actually do this … how to evaluate, the code is there for you" - Alex

With the ridiculous 90% price drop for those cached calls, Haiku basically becomes FREE, and cached Claude costs about as much as Haiku: $0.30 per 1M tokens. For context, I took 5 transcripts of 2-hour podcast conversations, which amounted to ~110,000 tokens overall, asked questions across all of that text, and it cost me less than $1 (see the video above).
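Here is a back-of-the-envelope version of that math. It assumes Claude 3.5 Sonnet's launch pricing: $3.00 per 1M regular input tokens, $3.75 per 1M tokens to write the cache (a 25% premium), and $0.30 per 1M tokens to read it. It also assumes a hypothetical 10 questions. The usage keys mirror the `usage` fields the Messages API reports when caching is on. Output tokens and the short question tokens are ignored.

```python
# Assumed Claude 3.5 Sonnet input prices at launch, in USD per million tokens.
BASE_INPUT = 3.00   # regular (uncached) input tokens
CACHE_WRITE = 3.75  # first call writes the prefix to the cache
CACHE_READ = 0.30   # later calls read it back (10% of base)


def input_cost(usage: dict) -> float:
    """Input-side cost in USD from a usage dict shaped like the Messages API's
    `usage` field with caching enabled (output tokens ignored)."""
    return (usage.get("input_tokens", 0) * BASE_INPUT
            + usage.get("cache_creation_input_tokens", 0) * CACHE_WRITE
            + usage.get("cache_read_input_tokens", 0) * CACHE_READ) / 1_000_000


CONTEXT = 110_000  # ~5 two-hour podcast transcripts
QUESTIONS = 10     # hypothetical number of questions asked

# Without caching, every question re-sends the full context at the base price.
uncached = QUESTIONS * input_cost({"input_tokens": CONTEXT})
# With caching, the first call writes the cache and the rest read from it.
cached = (input_cost({"cache_creation_input_tokens": CONTEXT})
          + (QUESTIONS - 1) * input_cost({"cache_read_input_tokens": CONTEXT}))

print(f"uncached: ${uncached:.2f}, cached: ${cached:.2f}")
```

Under these assumptions, ten questions cost about $3.30 in input tokens without caching and about $0.71 with it. The one-time write premium is paid back on the first cache read.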

Code Here + Weave evaluation Dashboard <a target="_blank" href="https://wandb.ai/wandb/compare-claude-caching/weave/compare-evaluations?evaluationCallIds=%5B%22019152dd-c8a4-7ea0-b56f-821225d18917%22%2C%22019152da-17ac-7741-a964-6eb6a3adf3e7%22%2C%22019152d7-0c57-77f0-a38d-9f026a799ac4%22%2C%