📆 ThursdAI - Dec 26 - OpenAI o3 & o3 mini, DeepSeek v3 671B beating Claude, Qwen Visual Reasoning, Hume OCTAVE & more AI news

Description

Hey everyone, Alex here 👋

I was hoping for a quiet holiday week, but whoa, what a looong week it has been, even though the last newsletter was only a week ago. Just the Friday after that newsletter, it felt like OpenAI changed the world of AI once again with o3 and left everyone asking "was this AGI?" over the X-mas break (hope Santa brought you some great gifts!). Then, not to be outdone, DeepSeek open sourced DeepSeek v3, basically a Claude 3.5 level behemoth, just this morning!

Since the breaking news from DeepSeek took us by surprise, the show went a bit longer (3 hours today!) than expected, so as a Bonus, I'm going to release a separate episode with a yearly recap + our predictions from last year and for next year in a few days (soon in your inbox!)

TL;DR

* Open Source LLMs
  * CogAgent-9B (Project, Github)
  * Qwen QvQ 72B - open weights visual reasoning (X, HF, Demo, Project)
  * GoodFire Ember - MechInterp API - GoldenGate LLama 70B
  * 🔥 DeepSeek v3 671B MoE - Open Source Claude level model at $6M (X, Paper, HF, Chat)
* Big CO LLMs + APIs
  * 🔥 OpenAI reveals o3 and o3 mini (Blog, X)
  * X.ai raises ANOTHER 6B dollars - on their way to 200K H200s (X)
* This week's Buzz
  * Two W&B workshops upcoming in January
    * SF - January 11
    * Seattle - January 13 (workshop by yours truly!)
  * New Evals course with Paige Bailey and Graham Neubig - pre-sign up for free
* Vision & Video
  * Kling 1.6 update (Tweet)
* Voice & Audio
  * Hume OCTAVE - 3B speech-language model (X, Blog)
* Tools
  * OpenRouter added Web Search Grounding to 300+ models (X)

Open Source LLMs

DeepSeek v3 671B - frontier level open weights model for ~$6M (X, Paper, HF, Chat)

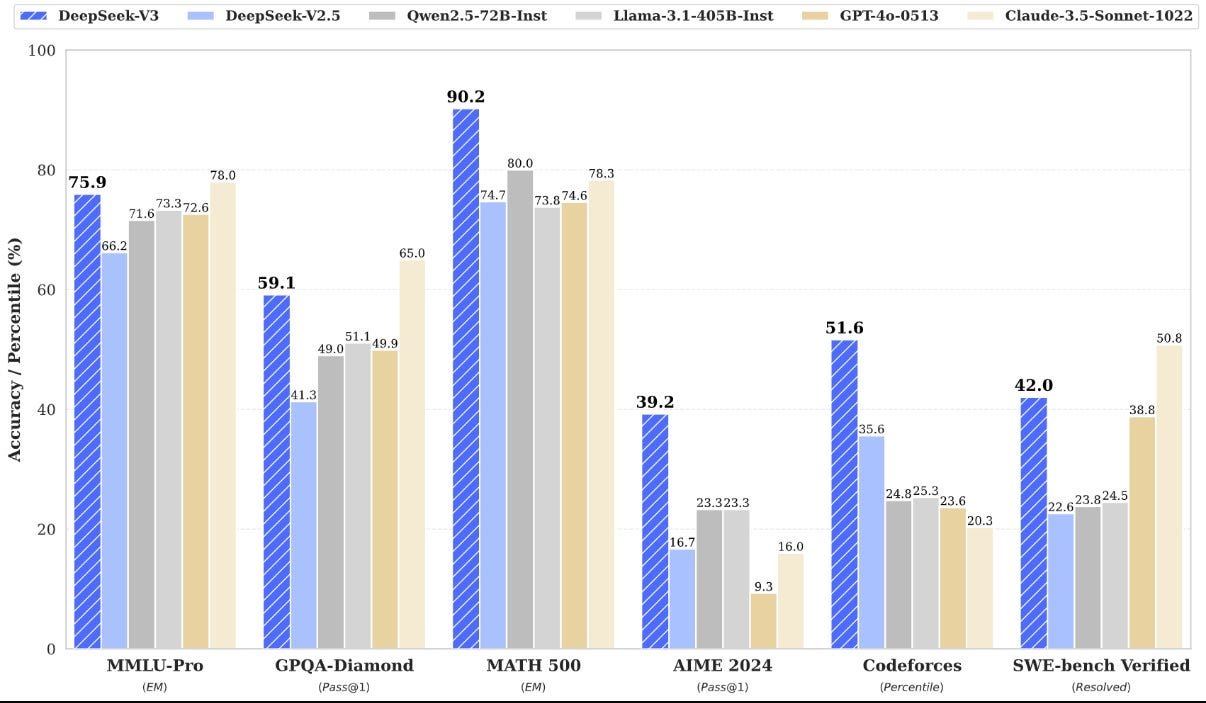

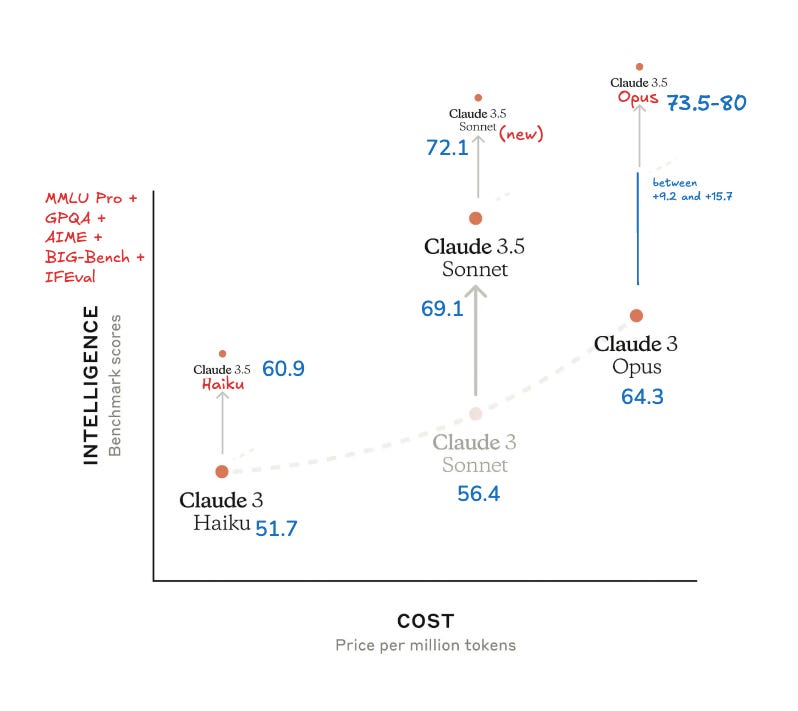

This was absolutely the top open source / open weights news of the past week, and honestly maybe of the past month. DeepSeek, the lab born out of a Chinese quant firm, has dropped a behemoth: a 671B parameter MoE (37B active) that you'd need 8xH200 to even run, and that beats Llama 3.1 405B and GPT-4o on most benchmarks, and even Claude 3.5 Sonnet on several evals!
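To put the "8xH200 to even run" remark in numbers, here is a rough back-of-the-envelope sketch. It assumes the 671B total / 37B active parameter counts from the DeepSeek-V3 technical report, 1 byte per parameter (FP8 weights), and 141 GB of memory per H200. It ignores KV cache and activations, so treat the headroom as an upper bound rather than a deployment plan.

```python
# Back-of-the-envelope check: do the weights fit on one 8xH200 node?
# Assumptions (not stated in the newsletter): FP8 weights at 1 byte per
# parameter, 141 GB of memory per H200, no KV cache or activation overhead.

TOTAL_PARAMS_B = 671    # total parameters, in billions
ACTIVE_PARAMS_B = 37    # parameters active per token, in billions
BYTES_PER_PARAM = 1     # assumption: FP8 storage
H200_MEMORY_GB = 141    # assumption: memory capacity per H200
NUM_GPUS = 8


def weights_gb(params_billions: float, bytes_per_param: float) -> float:
    """Approximate weight size in GB (1B params at 1 byte each is about 1 GB)."""
    return params_billions * bytes_per_param


node_memory_gb = H200_MEMORY_GB * NUM_GPUS
fp8_weights_gb = weights_gb(TOTAL_PARAMS_B, BYTES_PER_PARAM)
headroom_gb = node_memory_gb - fp8_weights_gb
active_fraction = ACTIVE_PARAMS_B / TOTAL_PARAMS_B

print(f"Node memory:         {node_memory_gb} GB")
print(f"FP8 weights:         {fp8_weights_gb} GB")
print(f"Headroom (weights):  {headroom_gb} GB")
print(f"Active per token:    {active_fraction:.1%} of parameters")
```

The same arithmetic shows why MoE matters here: every parameter has to sit in memory, but only a small fraction of them does work for any given token.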

The vibes seem to be very good with this one, and while it's not beating Claude across the board yet, it's nearly there already. The kicker is that they trained it on a very restricted compute budget: per the paper, ~2K H800s (which are like H100s but with less interconnect bandwidth) for 14.8T tokens. (For comparison, that's roughly 11x fewer GPU-hours than Llama 3.1 405B.)
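Here is a quick sketch of where the ~$6M figure comes from. The GPU-hour total, the cluster size, and the $2 per GPU-hour rental price are the numbers reported in the DeepSeek-V3 technical report; the Llama 3.1 405B GPU-hour figure is taken from Meta's model card. None of these appear in the newsletter itself, so treat them as assumptions to check against those sources.

```python
# Rough training-cost arithmetic for DeepSeek v3.
# Assumed inputs (from the DeepSeek-V3 technical report and Meta's
# Llama 3.1 model card, not from the newsletter):
DEEPSEEK_GPU_HOURS = 2_788_000       # total H800 GPU-hours
PRICE_PER_GPU_HOUR_USD = 2.00        # rental price assumed in the report
CLUSTER_SIZE = 2048                  # H800 GPUs, the "~2K" above
LLAMA_405B_GPU_HOURS = 30_840_000    # H100 GPU-hours for Llama 3.1 405B

# Dollar cost is simply GPU-hours times the hourly rental price.
cost_usd = DEEPSEEK_GPU_HOURS * PRICE_PER_GPU_HOUR_USD

# Wall-clock time if the whole cluster runs in parallel the entire time.
wall_clock_days = DEEPSEEK_GPU_HOURS / CLUSTER_SIZE / 24

# How many times more GPU-hours Llama 3.1 405B used.
compute_ratio = LLAMA_405B_GPU_HOURS / DEEPSEEK_GPU_HOURS

print(f"Estimated training cost: ${cost_usd / 1e6:.2f}M")
print(f"Wall-clock time:         {wall_clock_days:.0f} days on {CLUSTER_SIZE} GPUs")
print(f"Llama 3.1 405B used:     {compute_ratio:.1f}x the GPU-hours")
```

Note that this covers only the rented-compute cost of the final training run, not research, ablations, data, or salaries.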

For evaluations, this model excels on Coding and Math, which is not surprising given how excellent DeepSeek coder has been, but still, very very impressive!

On the architecture front, the very interesting thing is that this feels like Mixture of Experts v2, with a LOT of experts (256), 8+1 active at the same time, multi-token prediction, and a lot of optimization tricks outlined in the impressive paper (here's a great recap of the technical details)
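To make the "256 experts, 8+1 active" idea concrete, here is a minimal routing sketch in plain Python. It is a generic top-k gate with a softmax over the selected experts, not DeepSeek's actual gating function (which differs in detail and adds load-balancing tricks). The random router scores and the function name `route_token` are purely illustrative.

```python
import math
import random

NUM_ROUTED_EXPERTS = 256   # routed experts per MoE layer
TOP_K = 8                  # routed experts activated per token
NUM_SHARED_EXPERTS = 1     # always-on expert (the "+1")


def route_token(scores: list[float], top_k: int = TOP_K) -> dict[int, float]:
    """Pick the top_k highest-scoring experts and normalize their gate weights.

    Generic softmax-over-selected gating, for illustration only.
    """
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    max_score = max(scores[i] for i in top)  # subtract max for numerical stability
    exps = {i: math.exp(scores[i] - max_score) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


# Hypothetical router output for one token: one score per routed expert.
random.seed(0)
router_scores = [random.gauss(0.0, 1.0) for _ in range(NUM_ROUTED_EXPERTS)]

gates = route_token(router_scores)
experts_run = len(gates) + NUM_SHARED_EXPERTS

print(f"Routed experts chosen: {sorted(gates)}")
print(f"Gate weights sum to:   {sum(gates.values()):.3f}")
print(f"Experts run per token: {experts_run} of {NUM_ROUTED_EXPERTS + NUM_SHARED_EXPERTS}")
```

In a real layer, the token's output would be the shared expert's output plus the gate-weighted sum of the 8 selected experts' outputs, so only 9 expert networks do any compute for that token.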

The highlight for me was that DeepSeek is distilling their recent R1 model into this one, which likely boosts its performance on Math and Code, where it absolutely crushes (51.6 on CodeForces and 90.2 on MATH-500)

Another aspect of this is the API cost: while they are going to raise prices come February (they literally just swapped v2.5 for v3 in their APIs without telling a soul lol), the price performance of this model is just absurd.

Just a massive massive release from the WhaleBros, now I just need a quick 8xH200 to run this and I'm good 😅

Other OpenSource news - Qwen QvQ, CogAgent-9B and GoldenGate LLama

In other open source news this week, our friends from Qwen have released a very interesting preview, called Qwen QvQ, a visual reasoning model. It uses the same reasoning techniques that we got from them in QwQ 32B, but built with the excellent Qwen VL, to reason about images, and frankly, it's really fun to see it think about an image. You can try it here

There's also a new update to CogAgent-9B (page), an agent that claims to understand and control your computer, and to beat Claude 3.5 Sonnet Computer Use with just a 9B model!

This is very impressive, though I haven't tried it just yet. I'm excited to see numbers like these from open source VLMs that drive your computer and do tasks for you!

A super quick word from ... Weights & Biases!

We've just opened up pre-registration for our upcoming FREE evaluations course, featuring Paige Bailey from Google and Graham Neubig from All Hands AI. We've distilled a lot of what we learned about evaluating LLM applications while building Weave, our LLM Observability and Evaluation tooling, and are excited to share this with you all! Get on the list

Also, 2 workshops (also about Evals) from us are upcoming, one in SF on Jan 11th and one in Seattle on Jan 13th (which I'm going to lead!) so if you're in those cities at those times, would love to see you!

Big Companies - APIs & LLMs

OpenAI - introduces o3 and o3-mini - breaking ARC-AGI challenge, GPQA and teasing AGI?

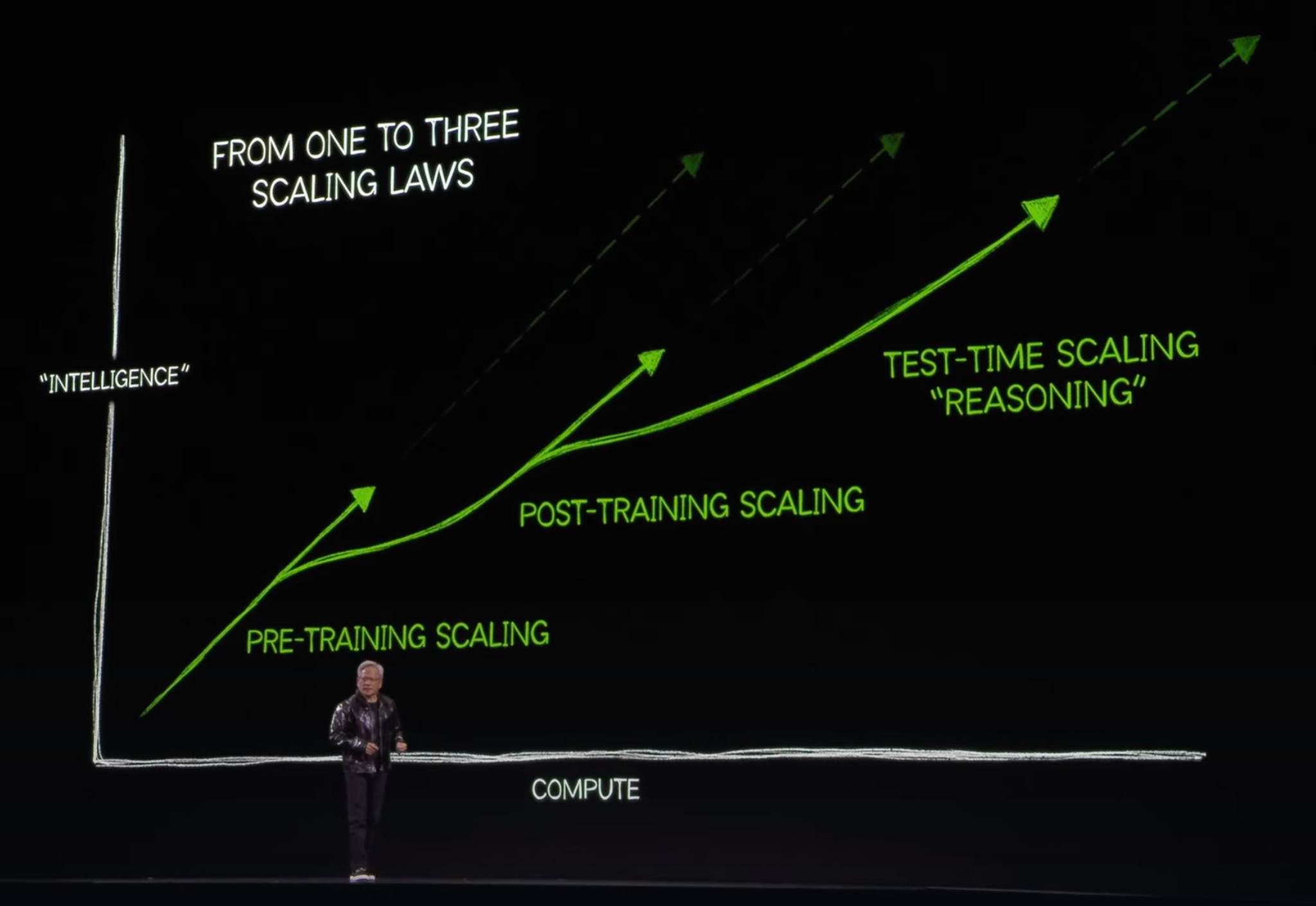

On the last day of the 12 days of OpenAI, we got the evals of their upcoming o3 reasoning model (and o3-mini) and whoa. I think I speak for most of my peers when I say we were all shaken by how fast the jump in capabilities happened from o1-preview and the full o1 (which was released just two weeks prior, on day 1 of the 12 days)

Almost all evals shared with us are insane, from 96.7 on AIME (up from 13.4 with GPT-4o earlier this year) to 87.7 on GPQA Diamond (which is... PhD level Science Questions)

But two evals stand out the most, and one of course is the ARC-AGI eval/benchmark. It was designed to be very difficult for LLMs and easy for humans, and o3 scored an unprecedented 87.5% on it (on the high compute setting)

This benchmark was long considered impossible for LLMs, and the absolute crushing of it over the past 6 months is something to behold.

The other thing I want to highlight is the FrontierMath benchmark, which was released just two months ago by Epoch, collaborating with top mathematicians to create a set of very challenging math problems. At the time of release (Nov 12), the top LLMs solved only 2% of this benchmark. With o3 solving 25% of it just 3 months after o1 managed 2%, it's quite incredible to see how fast these models are increasing in capabilities.

Is this AGI?

This release absolutely started, or restarted, the debate over what AGI is, given that the goal posts move all the time. Some folks are freaking out and saying that if you're a software engineer, you're "cooked" (o3 solved 71.7% of SWE-bench Verified and gets a 2727 Elo on CodeForces, which is competition code, putting it at 175th in the global ranking among human coders!). Some have also calculated its IQ, estimating it at 157 based on the above CodeForces rating.

So the obvious question is being asked (among the people who follow the news, most people who don't follow the news