📅 ThursdAI - Oct 24 - Claude 3.5 controls your PC?! Talking AIs with 🦾, Multimodal Weave, Video Models mania + more AI news from this 🔥 week.

Description

Hey all, Alex here, coming to you from (surprisingly) sunny Seattle, with just a mind-boggling week of releases. Really, just on Tuesday there was so much news already that I had to post a recap thread, something I usually do after I finish ThursdAI!

From Anthropic reclaiming its close-second, sometimes-first position among AI labs and giving Claude the wheel in the form of computer use powers, to more than 3 AI video generation updates (including open source ones), to Apple updating the Apple Intelligence beta, it's honestly been very hard to keep up, and again, this is literally part of my job!

But once again I'm glad that we were able to cover this in ~2hrs, including multiple interviews with returning co-hosts (Simon Willison came back, Killian came back), so definitely, if you're only a reader at this point, listen to the show!

Ok as always (recently) the TL;DR and show notes at the bottom (I'm trying to get you to scroll through ha, is it working?) so grab a bucket of popcorn, let's dive in 👇


Claude's Big Week: Computer Control, Code Wizardry, and the Mysterious Case of the Missing Opus

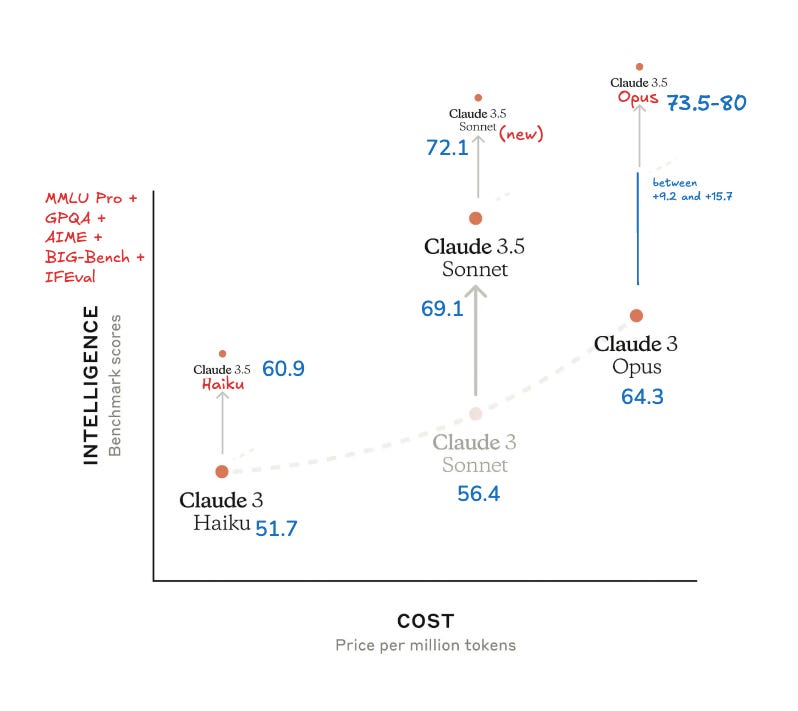

Anthropic dominated the headlines this week with a flurry of updates and announcements. Let's start with the new Claude Sonnet 3.5 (really, they didn't update the version number; it's still 3.5, tho it's a different API model).

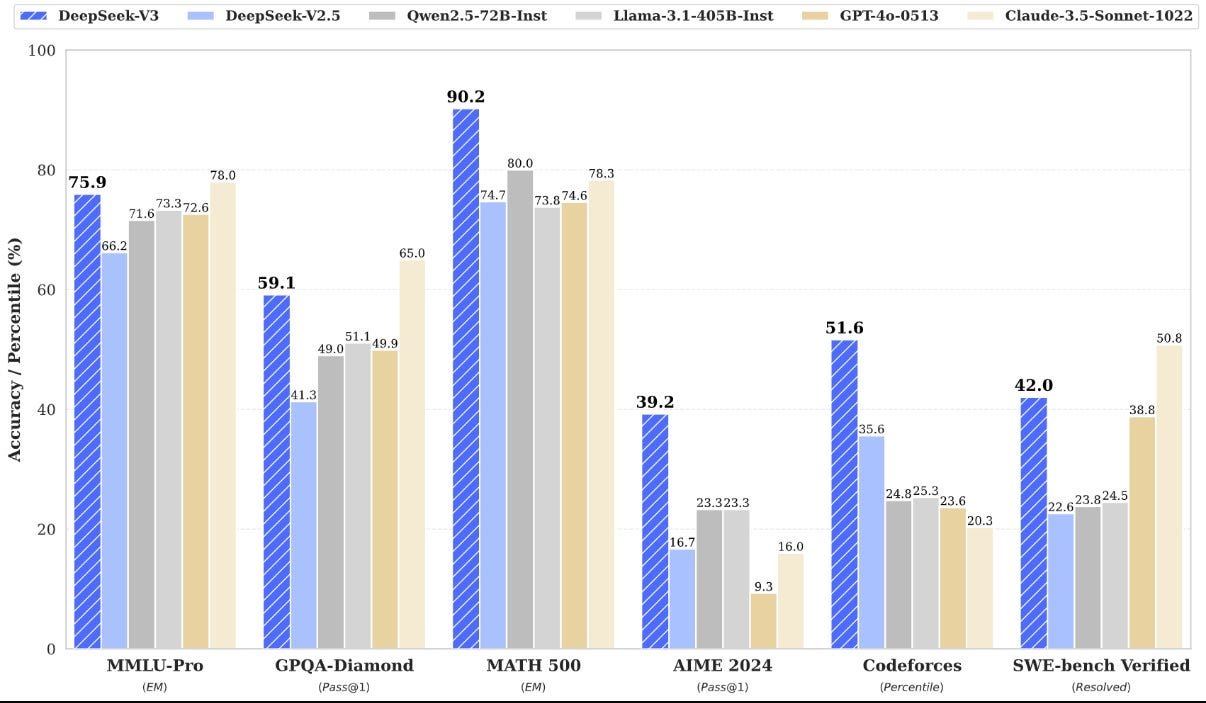

Claude Sonnet 3.5: Coding Prodigy or Benchmark Buster?

The new Sonnet model shows impressive results on coding benchmarks, surpassing even OpenAI's o1-preview on some. "It absolutely crushes coding benchmarks like Aider and SWE-bench Verified," I exclaimed on the show. But a closer look reveals a more nuanced picture. Mixed results on other benchmarks indicate that Sonnet 3.5 might not be the universal champion some anticipated. A friend of mine who keeps private, held-back benchmarks was disappointed, highlighting weaknesses in scientific reasoning and certain writing tasks. Some folks are also seeing it be lazier on full code completions, even though the maximum output length has now doubled from 4K to 8K tokens! This goes to show, again, that benchmarks don't tell the full story, so we wait for LMArena (formerly LMSYS Arena) and the vibe checks from across the community.

However, it absolutely dominates in code tasks; that much is clear already. This is a screenshot of the new model on the Aider code editing benchmark, a fairly reliable way to judge a model's code output. They also have a code refactoring benchmark.

Haiku 3.5 and the Vanishing Opus: Anthropic's Cryptic Clues

Further adding to the intrigue, Anthropic announced Claude 3.5 Haiku! They usually provide immediate access, but Haiku remains elusive: Anthropic says it will be available by the end of the month, which is very, very soon. Making things even more curious, their highly anticipated Opus model has seemingly vanished from their website. "They've gone completely silent on 3.5 Opus," Simon Willison (𝕏) noted, mentioning conspiracy theories that this new Sonnet might simply be a rebranded Opus? 🕯️ 🕯️ We'll make a summoning circle for the new Opus and update you once it lands (maybe next year).

Claude Takes Control (Sort Of): Computer Use API and the Dawn of AI Agents (𝕏)

The biggest bombshell this week? Anthropic's Computer Use. This isn't just about executing code; it's about Claude interacting with computers, clicking buttons, browsing the web, and yes, even ordering pizza! Killian Lucas (𝕏), creator of Open Interpreter, returned to ThursdAI to discuss this groundbreaking development. "This stuff of computer use…it's the same argument for having humanoid robots, the web is human shaped, and we need AIs to interact with computers and the web the way humans do," Killian explained, illuminating the potential for bridging the digital and physical worlds.

Simon, though enthusiastic, provided a dose of realism: "It's incredibly impressive…but also very much a V1, beta." Having tackled the setup myself, I agree; the current reliance on a local Docker container and virtual machine introduces some complexity and security considerations. However, seeing Claude fix its own Docker installation error was an unforgettably mind-blowing experience. The future of AI agents is upon us, even if it's still a bit rough around the edges.

Here's an easy guide to set it up yourself. It takes 5 minutes, requires no coding skills, and it's safely tucked away in a container.
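For those who do want to peek under the hood, here is a minimal Python sketch of what a Computer Use request roughly looks like and how an agent might interpret the actions Claude sends back. It uses only the standard library and makes no network call. The model name, beta flag, and tool type are the identifiers as I understand them from Anthropic's October 2024 beta docs, so treat them as assumptions and check the current documentation; `build_request` and `describe_action` are hypothetical helper names made up for this illustration.

```python
import json

# Identifiers as understood from Anthropic's October 2024 beta docs.
# Treat them as assumptions and verify against the current documentation.
MODEL = "claude-3-5-sonnet-20241022"
BETA_FLAG = "computer-use-2024-10-22"
COMPUTER_TOOL_TYPE = "computer_20241022"


def build_request(task, width=1024, height=768):
    """Return (headers, body) for a Messages API call with the computer tool."""
    headers = {
        "x-api-key": "YOUR_API_KEY",  # placeholder, never hard-code a real key
        "anthropic-version": "2023-06-01",
        "anthropic-beta": BETA_FLAG,
        "content-type": "application/json",
    }
    body = {
        "model": MODEL,
        "max_tokens": 1024,
        "tools": [{
            "type": COMPUTER_TOOL_TYPE,
            "name": "computer",
            "display_width_px": width,
            "display_height_px": height,
        }],
        "messages": [{"role": "user", "content": task}],
    }
    return headers, body


def describe_action(tool_use_block):
    """Turn one tool_use block from the model into a human-readable step.

    A real agent would execute the action inside the container (take the
    screenshot, move the mouse, type) and send the result back to the model.
    """
    args = tool_use_block["input"]
    action = args["action"]
    if action == "screenshot":
        return "take a screenshot"
    if action == "mouse_move":
        x, y = args["coordinate"]
        return f"move mouse to ({x}, {y})"
    if action == "type":
        return f"type {args['text']!r}"
    return f"unhandled action: {action}"


if __name__ == "__main__":
    headers, body = build_request("Order a pizza")
    print(json.dumps(body, indent=2))
    # Hypothetical model reply, shaped like a tool_use content block.
    fake_block = {"type": "tool_use", "id": "toolu_example", "name": "computer",
                  "input": {"action": "mouse_move", "coordinate": [512, 384]}}
    print(describe_action(fake_block))
```

The key idea is the loop: Claude never touches your machine directly. It asks for an action, your code (ideally inside a container) performs it and reports back, and the cycle repeats until the task is done.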

Big Tech's AI Moves: Apple Embraces ChatGPT, X.ai API (+Vision!?), and Cohere Multimodal Embeddings

The rest of the AI world wasn’t standing still. Apple made a surprising integration, while X.ai and Cohere pushed their platforms forward.

Apple iOS 18.2 Beta: Siri Phones a Friend (ChatGPT)

Apple, always cautious, surprisingly integrated ChatGPT directly into iOS. While Siri remains…well, Siri, users can now effortlessly offload more demanding tasks to ChatGPT. "Siri is still stupid," I joked, "but you can now ask it to write some stuff and it'll tell you, hey, do you want me to ask my much smarter friend ChatGPT about this task?" This approach acknowledges Siri's limitations while harnessing ChatGPT's power. The iOS 18.2 beta also includes GenMoji (custom emojis!) and Visual Intelligence (multimodal camera search), which are both welcome, tho I didn't really get the need for Visual Intelligence (maybe I'm jaded by my Meta Ray-Bans, which already have this and are on my face most of the time). I also haven't gotten off the GenMoji waitlist yet, so I'm still waiting to show you some custom emojis!

X.ai API: Grok's Enterprise Ambitions and a Secret Vision Model

Elon Musk's X.ai unveiled their API platform, focusing on enterprise applications with Grok 2 beta. They also teased an undisclosed vision model, and they had vision APIs for some folks who joined their hackathon. While these models are not necessarily worth using yet, the upcoming Grok-3 is promised to be a frontier model, and for some folks, its relaxed approach to content moderation (what Elon is calling maximally seeking the truth) is going to be a convincing point!

I just wish they added fun mode and access to real-time data from X! Right now it's just the Grok-2 model, priced at a very non-competitive $15/mTok 😒

Cohere Embed 3: Elevating Multimodal Embeddings (Blog)

Cohere launched Embed 3, enabling embeddings for both text and visuals such as graphs and designs. "While not the first multimodal embeddings, when it comes from Cohere, you know it's done right," I commented.

Open Source Power: JavaScript Transformers and SOTA Multilingual Models

The open-source AI community continues to impress, making powerful models accessible to all.

Massive kudos to Xenova (𝕏) for the release of Transformers.js v3! The addition of WebGPU support results in a staggering "up to 100 times faster" performance boost for browser-based AI, making it dramatically simpler to run models locally, privately, and efficiently. We also saw DeepSeek's Janus 1.3B, a multimodal image and text model, and Cohere For AI's Aya Expanse, supporting 23 languages.

This Week’s Buzz: Hackathon Triumphs and Multimodal Weave

On ThursdAI, we also like to share some of the exciting things happening behind the scenes.

AI Chef Showdown: Second Place and Lessons Learned

Happy to report that team Yes Chef clinched second place in a hackathon with an unconventional creation: a Gordon Ramsay-inspired robotic chef hand puppet, complete with a cloned voice and visual LLM integration. We bought, 3D printed, and assembled an open source robotic arm, turned it into a ventriloquist by letting it animate a hand puppet, and cloned Ramsay's voice. It was so so much fun to build, and the code is here.

Weave Goes Multimodal: Seeing and Hearing Your AI

Even more exciting was the opportunity to leverage Weave's newly launched multimodal functionality. "Weave supports you to see and play back everything that's audio generated," I shared, emphasizing its usefulness in debugging our vocal AI chef.

For a practical example, here's ALL the (NSFW) roasts that AI Chef has cooked me with, it's honestly horrifying haha. For full effect, turn on the background music first and then play the chef audio 😂

📽️ Video Generation Takes Center Stage: Mochi's Motion Magic and Runway's Acting Breakthrough

Video models made a quantum leap this week, pushing the boundaries of generative AI.

Genmo Mochi-1: Diffusion Transformers and Generative Motion

Genmo's Ajay Jain (Genmo) joined ThursdAI to discuss