📆 ThursdAI - Dec 12 - unprecedented AI week - SORA, Gemini 2.0 Flash, Apple Intelligence, Llama 3.3, NeurIPS Drama & more AI news

Hey folks, Alex here, writing this from beautiful Vancouver, BC, Canada. I'm here for NeurIPS 2024, the biggest ML conference of the year, and let me tell you, this was one hell of a week to not be glued to the screen.

After last week's banger, with OpenAI kicking off their 12 days of releases by shipping o1 full and o1 pro mode during ThursdAI, things went parabolic. Did all the AI labs just decide to dump EVERYTHING they have before the holidays? 🎅

A day after our show, on Friday, Google announced a new Gemini 1206 that took the #1 spot on LMArena, and Meta released Llama 3.3. Then on Saturday, xAI released their new image model, code-named Aurora.

On a regular week, the above Fri-Sun news would be enough for a full 2-hour ThursdAI show on its own, but not this week. This week it was barely a 15-minute segment 😅 because so MUCH happened starting Monday that we were barely able to catch our breath. So let's dive into it!

As always, the TL;DR and full show notes are at the end 👇. This newsletter is sponsored by W&B Weave: if you're building with LLMs in production and want to switch to the new Gemini 2.0 today, how will you know your app isn't going to degrade? Weave is the best way! Give it a try for free.

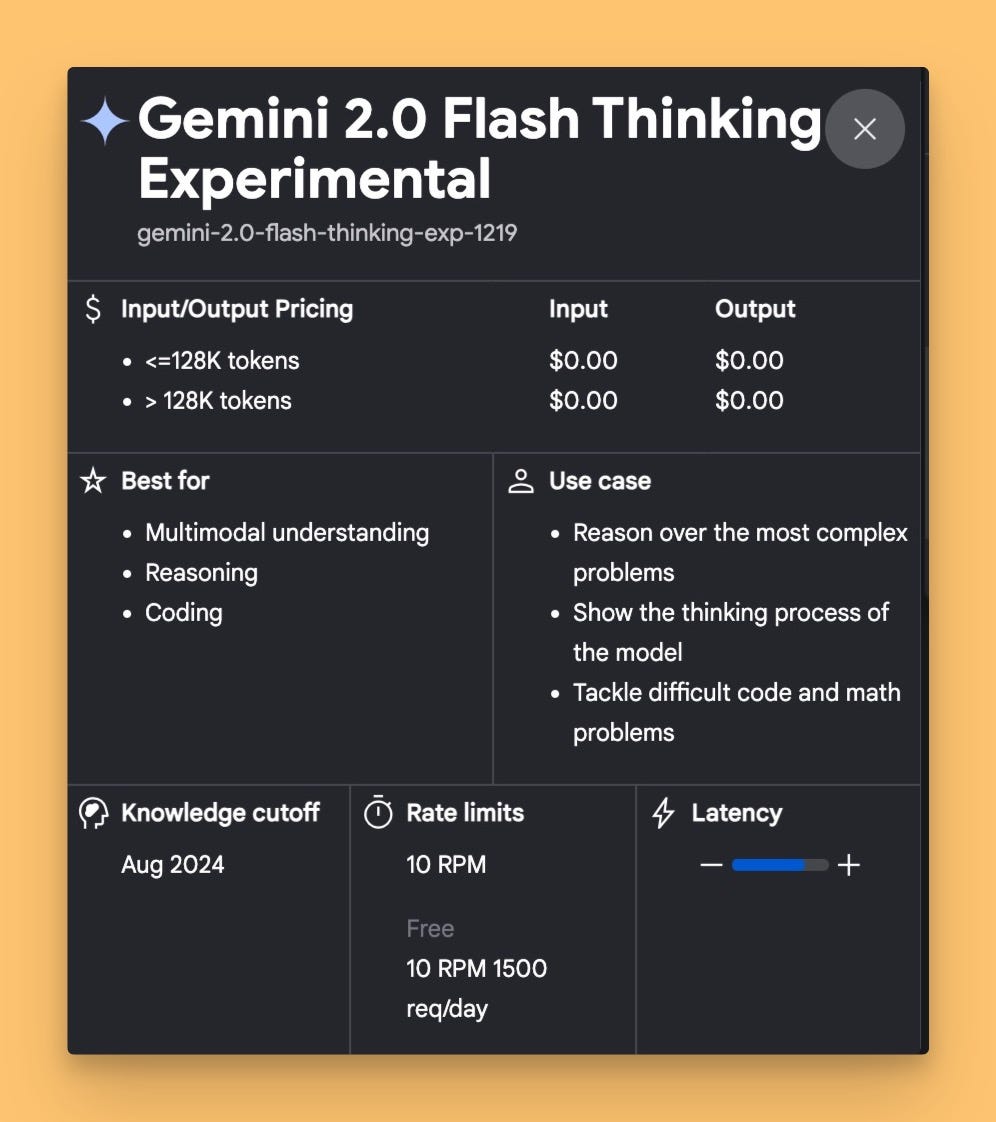

Gemini 2.0 Flash - a new gold standard of fast multimodal LLMs

Believe it or not, Google has absolutely taken the crown away from OpenAI this week with the incredible Gemini 2.0 release. All of us on the show agreed that this is a phenomenal release from Google for Gemini's 1-year anniversary.

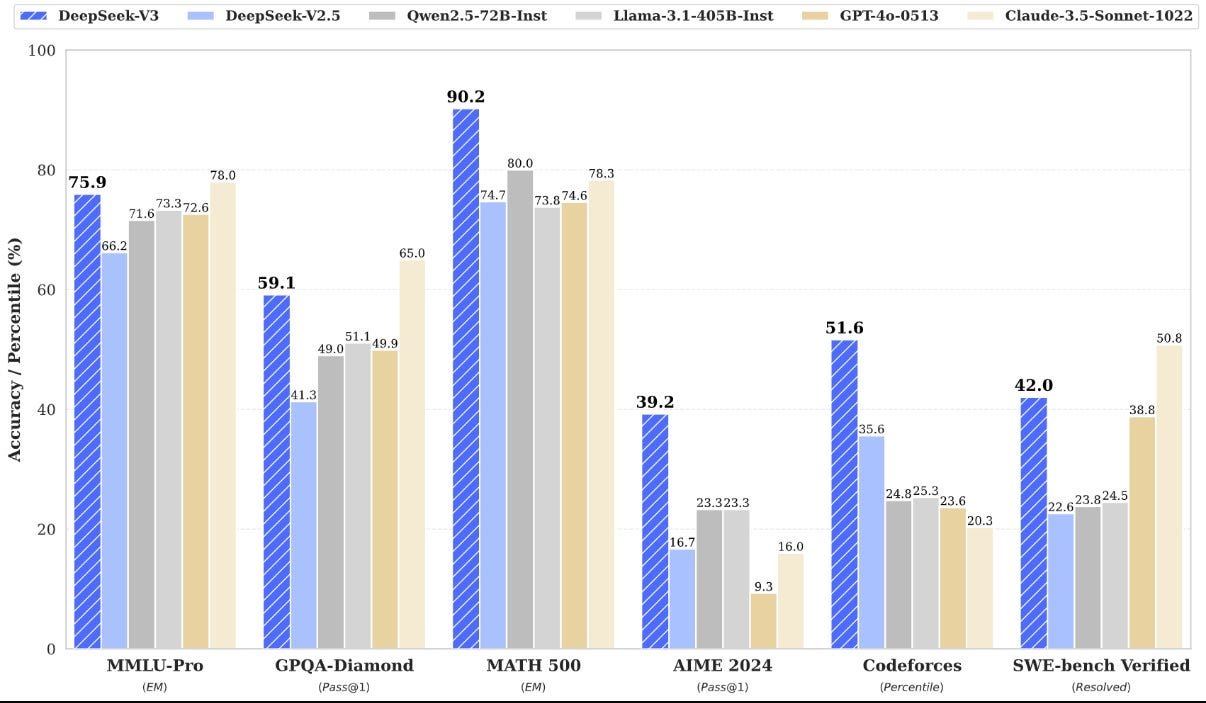

Gemini 2.0 Flash beats Gemini 1.5 Pro 002 and 1.5 Flash 002 on most benchmarks, while being 2x faster than Pro, having a 1M-token context window, and being fully multimodal!

Multimodality on input and output

This model was announced as fully multimodal on inputs AND outputs, which means it can natively understand text, images, audio, video, and documents, and output text, text + images, and audio (so it can speak!). Some of these capabilities are restricted to beta users for now, but we know they exist. If you remember Project Astra, this is what powers it. In fact, Matt Wolfe joined the show, and since he had early access to Project Astra, he demoed it live (see above), all powered by Gemini 2.0 Flash.

The most amazing thing is that this functionality, which was presented to us just 8 months ago at Google I/O as a premium booth experience, is now available to everyone in Google AI Studio, for free!

Really, you can try it out yourself right now at https://aistudio.google.com/live, but here's a demo of it helping me proofread this exact paragraph by watching the screen and talking me through it.

Performance out of the box

This model beating Sonnet 3.5 on SWE-bench Verified completely upended the narrative on my timeline; nobody was ready for that. This is a Flash model that's outperforming o1 on code!?

Having a Flash MMIO (multimodal input and output) model with 1M context, accessible via APIs with a real-time streaming option from day one, is honestly amazing to begin with. It's also free during the preview phase, and if previous Flash pricing is any guide, this model is going to considerably undercut the market on the price/performance/speed matrix.

You can see why this release is taking the crown this week. 👏

Agentic AI is coming with Project Mariner

Google also announced Project Mariner, their agentic approach: an agent in the form of a Chrome extension that completes web tasks, setting a new SOTA on the WebVoyager benchmark with an 83.5% score in a single-agent setup.

We've seen agent attempts from Adept to Claude Computer Use to Runner H, but this SOTA-breaking result from Google seems very promising. Can't wait to give it a try.

OpenAI gives us SORA, Vision and other stuff from the bag of goodies

OK, so now let's talk about this week's second winner, OpenAI and its amazing stream of innovations, which would have taken the crown if not for, well... ☝️

SORA is finally here (for those who got in)

OpenAI has FINALLY released SORA, their long-promised text-to-video and image-to-video (and video-to-video) model (née world simulator), to general availability, along with a new website, sora.com, and a completely amazing UI to go with it.

SORA can generate videos at resolutions from 480p up to 1080p, up to 20 seconds long, and they promised generation will be fast, since what they released is actually SORA Turbo! (Apparently SORA 2 is already in the works and will be even more amazing; more on this later.)

New accounts paused for now

OpenAI seems to have severely underestimated how many people would want to generate the 50 videos per month allowed on the Plus account (the Pro account gets you 10x more for $200/month, plus longer durations, whatever that means). As of writing these words on ThursdAI afternoon, I'm still not able to create a sora.com account and try SORA myself (I was boarding a plane when they launched it).

SORA magical UI

I invited one of my favorite video creators, Blaine Brown, to the show. He does incredible video experiments that always go viral, and he'd had time to play with SORA, so he told us what he thinks from both a video and an interface perspective.

Blaine had a great take: we all collectively got so much HYPE over the past 8 months of teasers that many folks expected SORA to be an incredible one-prompt text-to-video generator, and it's not really that. In fact, if you just send prompts, it's more like a slot machine (which another friend of the pod, Bilawal, also confirmed).

But the magic starts when additional tools like Blend come into play. Another example Blaine talked about is the Remix feature, where you can remix videos and adjust the remix strength (Strong, Mild).

Another amazing insight Blaine shared is that SORA can fuse two videos that weren't even generated with SORA, acting as a creative tool to combine them into one.

And lastly, just like Midjourney (and Stable Diffusion before that), SORA has Featured and Recent walls of video generations that show you other people's videos along with the prompts they used, for inspiration and learning. You can remix those videos to learn to prompt better, and OpenAI has also built in prompt extension tools.

One more thing... this model thinks

I love this discovery and wanted to share it with you. The prompt is: "A man smiles to the camera, then holds up a sign. On the sign, there is only a single digit number (the number of 'r's in 'strawberry')"

Advanced Voice mode now with Video!

I've personally been waiting for Voice Mode with video for such a long time, ever since that day in the spring when, in the first demo of Advanced Voice Mode, it talked to an OpenAI employee named Rocky in a very flirty voice that in no way resembled Scarlett Johansson, and told him to run a comb through his hair.

Well, today OpenAI finally announced that they're rolling this out to everyone soon: in ChatGPT, we're all going to get a camera button and be able to show ChatGPT what we're seeing via the camera or our phone screen, giving it that context.

If you're feeling a bit of déjà vu, yes, this is very similar to what Google launched (for free, mind you) with Gemini 2.0 just yesterday in AI Studio, and via APIs as well.

This is an incredible feature: it will not only see your webcam, it will also see your iOS screen, so you'd be able to reason about an email with it, among other things. I honestly can't wait to have it!

They also announced Santa mode, which is also super cool, though I don't quite know how to... tell my kids about it? Do I tell them this IS Santa? Do I tell them this is an AI pretending to be Santa? Where does the lie end, exactly?

And in one of his funniest (and maybe toughest) jailbreaks, Pliny the Liberator just posted a Santa jailbreak that will definitely make you giggle (and get him coal this X-mas).

The other stuff (with 6 days to go)

OpenAI has 12 days of releases, and some of the other amazing things we got were obviously overshadowed, but they're still cool: Canvas can now run code and works with custom GPTs; ChatGPT in Apple Intelligence is now widely available with the public release of iOS 18.2; and they announced reinforcement fine-tuning, which lets you fine-tune o1-mini to outperform o1 on specific tasks with just a few examples.

There are 6 more workdays to go, and they promised to "end with a bang," so... we'll keep you updated!

This Week's Buzz - Guardrails Genie

Alright, it's time for "This Week's Buzz," our weekly segment brought to you by Weights & Biases! This week I hosted Soumik Rakshit from the Weights & Biases AI Team (the team I'm also on, btw!).

Soumik gave us a deep dive into Guardrails, our new set of features in Weave for ensuring reliability in GenAI production! Guard