📆 ThursdAI - Oct 10 - Two Nobel Prizes in AI!? Meta Movie Gen (and sounds) amazing, Pyramid Flow a 2B video model, 2 new VLMs & more AI news!

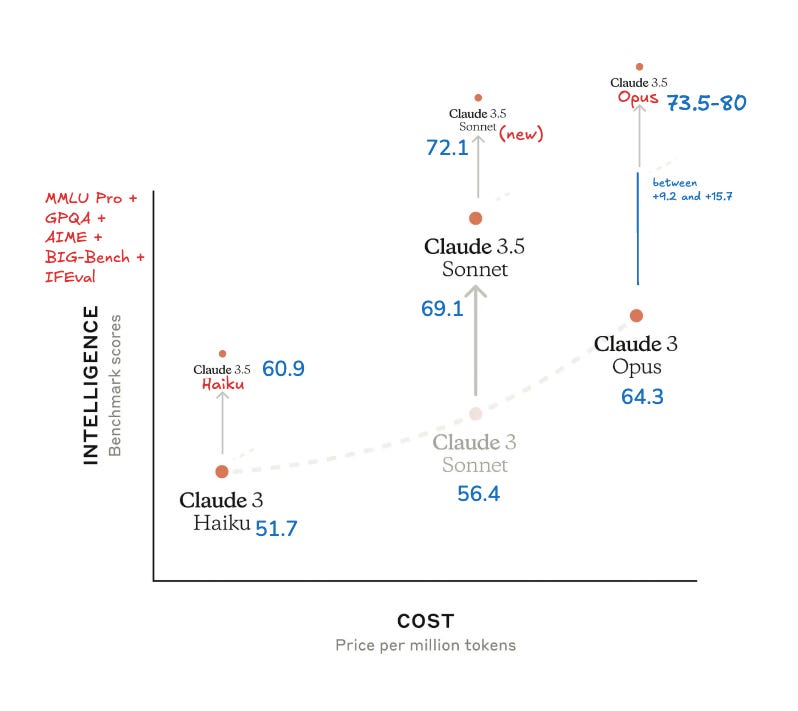

Hey Folks, we are finally due for a "relaxing" week in AI, no more HUGE company announcements (if you don't consider Meta Movie Gen huge), no conferences or dev days, and some time for Open Source projects to shine. (while we all wait for Opus 3.5 to shake things up)

This week was very multimodal on the show. We covered 2 new video models, one tiny and open source, and one massive model from Meta that is aiming for SORA's crown. We also covered 2 new VLMs, one from our friends at REKA that understands video and audio, and one from Rhymes that is Apache 2 licensed. Finally, we had a chat with Kwindla Kramer about the OpenAI RealTime API and its shortcomings, and about voice AI in general.

All right, let's get to the TL;DR and show notes, and we'll start with the 2 Nobel prizes in AI 👇

* 2 AI Nobel prizes

* John Hopfield and Geoffrey Hinton have been awarded a Physics Nobel prize

* Demis Hassabis, John Jumper & David Baker, have been awarded this year's #NobelPrize in Chemistry.

* Open Source LLMs & VLMs

* TxT360: a globally deduplicated dataset for LLM pre-training ( Blog, Dataset)

* Rhymes Aria - 25.3B multimodal MoE model that can take image/video inputs Apache 2 (Blog, HF, Try It)

* Maitrix and LLM360 launch a new decentralized arena (Leaderboard, Blog)

* New Gradio 5 with server side rendering (X)

* LLamaFile now comes with a chat interface and syntax highlighting (X)

* Big CO LLMs + APIs

* OpenAI releases MLEBench - new Kaggle-focused benchmarks for AI Agents (Paper, Github)

* Inflection is still alive - going for enterprise lol (Blog)

* new Reka Flash 21B - (X, Blog, Try It)

* This week's Buzz

* We chatted about Cursor, it went viral, there are many tips

* WandB releases HEMM - benchmarks of text-to-image generation models (X, Github, Leaderboard)

* Vision & Video

* Meta presents Movie Gen 30B - img and text to video models (blog, paper)

* Pyramid Flow - open source img2video model MIT license (X, Blog, HF, Paper, Github)

* Voice & Audio

* Working with OpenAI RealTime Audio - Alex's conversation with Kwindla from trydaily.com

* Cartesia Sonic goes multilingual (X)

* Voice hackathon in SF with 20K prizes (and a remote track) - sign up

* Tools

* LM Studio ships with MLX natively (X, Download)

* UITHUB.com - turn any github repo into 1 long file for LLMs

A Historic Week: TWO AI Nobel Prizes!

This week wasn't just big; it was HISTORIC. As Yam put it, "two Nobel prizes for AI in a single week. It's historic." And he's absolutely spot on! Geoffrey Hinton, often called the "godfather of AI," was awarded the Nobel Prize in Physics alongside John Hopfield for their foundational work on neural networks - work that paved the way for everything we're seeing today. Think Hopfield networks, Boltzmann machines, backpropagation – these are concepts that underpin much of modern deep learning. It’s about time they got the recognition they deserve!

Yoshua Bengio posted about this in a very nice quote:

@HopfieldJohn and @geoffreyhinton, along with collaborators, have created a beautiful and insightful bridge between physics and AI. They invented neural networks that were not only inspired by the brain, but also by central notions in physics such as energy, temperature, system dynamics, energy barriers, the role of randomness and noise, connecting the local properties, e.g., of atoms or neurons, to global ones like entropy and attractors. And they went beyond the physics to show how these ideas could give rise to memory, learning and generative models; concepts which are still at the forefront of modern AI research

And Hinton's post-Nobel quote? Pure gold: “I’m particularly proud of the fact that one of my students fired Sam Altman.” He went on to explain his concerns about OpenAI's apparent shift in focus from safety to profits. Spicy take! It sparked quite a conversation about the ethical implications of AI development and who’s responsible for ensuring its safe deployment. It’s a discussion we need to be having more and more as the technology evolves. Can you guess which one of his students it was?

Then, not to be outdone, protein science took the Nobel Prize in Chemistry. Demis Hassabis and John Jumper of Google DeepMind shared one half for AlphaFold 2, and David Baker received the other half for computational protein design. AlphaFold 2 revolutionized protein structure prediction, accelerating drug discovery and biomedical research in a way few thought possible. These awards highlight the tangible, real-world applications of AI. It's not just theoretical anymore; it's transforming industries.

Congratulations to all the winners, and we gotta wonder, is this the start of a trend where AI takes over every Nobel prize going forward? 🤔

Open Source LLMs & VLMs: The Community is COOKING!

The open-source AI community consistently punches above its weight, and this week was no exception. We saw some truly impressive releases that deserve a standing ovation. First off, the TxT360 dataset (blog, dataset). Nisten, resident technical expert, broke down the immense effort: "The amount of DevOps and…operations to do this work is pretty rough."

This globally deduplicated 15+ trillion-token corpus combines the best of Common Crawl with a curated selection of high-quality sources, setting a new standard for open-source LLM training. We talked about the importance of deduplication for model training - avoiding the "memorization" of repeated information that can skew a model's understanding of language. TxT360 takes a 360-degree approach to data quality and documentation – a huge win for accessibility.
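To make "global" deduplication a bit more concrete, here is a minimal sketch in Python. It is NOT the TxT360 pipeline; it only illustrates the idea of dropping repeated documents across all sources at once rather than within each source separately. The function names, the normalization rule, and the toy corpus are all assumptions made up for illustration, and it only catches exact matches after normalization (real pipelines also handle near-duplicates).

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies hash the same."""
    return re.sub(r"\s+", " ", text).strip().lower()


def global_dedup(sources: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Keep the first copy of each document across ALL sources.

    Hypothetical helper: exact-match dedup on a normalized hash, shared
    across sources, so a page seen in one source is dropped from the others.
    """
    seen: set[str] = set()
    kept: list[tuple[str, str]] = []
    for source_name, docs in sources.items():
        for doc in docs:
            digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
            if digest in seen:
                continue  # already kept an identical document from some source
            seen.add(digest)
            kept.append((source_name, doc))
    return kept


# Toy corpus (made up): "Hello   world" and "hello world" are the same document
# after normalization, even though they come from different sources.
corpus = {
    "common_crawl": ["The cat sat on the mat.", "Hello   world"],
    "wiki": ["hello world", "A unique article."],
}
deduped = global_dedup(corpus)
print(f"kept {len(deduped)} of {sum(len(d) for d in corpus.values())} documents")
```

Deduplicating per source would have kept both copies of "hello world"; sharing one `seen` set across sources is what makes the pass global.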

Apache 2 Multimodal MoE from Rhymes AI called Aria (blog, HF, Try It)

Next, the Rhymes Aria model (25.3B total and only 3.9B active parameters!). This multimodal marvel operates as a Mixture of Experts (MoE), meaning it activates only a small subset of its vast network for each input token, making it surprisingly efficient. Aria excels in understanding image and video inputs, features a generous 64K token context window, and is available under the Apache 2 license – music to open-source developers’ ears! We even discussed its coding capabilities: imagine pasting images of code and getting intelligent responses.
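If the "total vs. active parameters" distinction is new to you, here is a toy sketch of top-k expert routing in plain Python. It is an illustration of the general MoE idea only, not Aria's actual architecture: the number of experts, `top_k=2`, the random router weights, and the "experts" that merely scale their input are all assumptions chosen to keep the example tiny.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    # Hypothetical "expert": just scales its input.
    # In a real model each expert is a feed-forward network.
    return lambda x: [scale * v for v in x]


def moe_forward(x, router_weights, experts, top_k=2):
    """Route one token through only the top_k highest-scoring experts."""
    # Router: one score per expert (dot product of the token with a routing vector).
    scores = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    # Select the top_k experts; the rest are never executed for this token.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Gate weights: softmax over the chosen experts' scores only.
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, idx in zip(gates, chosen):
        y = experts[idx](x)
        out = [o + gate * v for o, v in zip(out, y)]
    return out, chosen


random.seed(0)
num_experts, dim = 8, 4
experts = [make_expert(i + 1) for i in range(num_experts)]
router_weights = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]

token = [0.5, -1.0, 0.25, 2.0]
output, active = moe_forward(token, router_weights, experts, top_k=2)
print(f"ran {len(active)} of {num_experts} experts: {active}")
```

All 8 experts count toward the model's total size, but only 2 run for this token. That is the same reason a 25.3B model can cost roughly as much per token as a much smaller dense one.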

I particularly love the focus on long multimodal input understanding (think longer videos) and super high resolution image support.

I uploaded this simple pin-out diagram of a Raspberry Pi and it got all the answers right! Including ones I missed myself (and <a target="_blank" href="https://x.com/altryne/sta