10. Are Your ChatGPT Research Chats Really Private and Are AI Agents Good?

✨ Get our Research Tools Webinar recording

Learn n8n and AI automation for UX managers with our Bulletproof AI System: The New Standard for Ethical UX Masterclass. Become a confident AI leader who builds trust, orchestrates intelligent workflows, and guides your team through the current AI revolution.

The first time I pasted some research notes into ChatGPT, I didn’t think twice. It felt private. Just me and the machine. Dark mode. Working through a problem. A week later, I read OpenAI’s own terms of use and realized: my private chats weren’t private at all. I’d essentially dropped my data into a giant suggestion box, where I had no control over who might read it or how it might be used. Or even where it all goes.

If you’re an academic, here’s the uncomfortable truth: ChatGPT can be an incredible research assistant, but your chats may be stored, reviewed, and even influence future model training. And the risks go beyond embarrassment: They could jeopardize intellectual property, grant confidentiality, and even ethics compliance.

We chatted about this in detail in episode 10 of our podcast.

Here’s what you need to know before typing another word.

1. Your prompts may not be deleted when you close the chat

Some people assume that once a conversation is over and deleted, it’s gone. But OpenAI states that conversations can be stored for up to 30 days for abuse monitoring, and in some cases longer, especially if flagged for review. These logs may be seen by human reviewers to improve the system.

Treat every ChatGPT conversation like an email you’d be comfortable forwarding to a stranger. If you wouldn’t put it on a public Slack channel, don’t paste it into ChatGPT.

2. Opting out of training is not the same as private mode

Some academics believe that using a paid plan like ChatGPT Plus automatically protects them from training data collection. While OpenAI does allow users to opt out of training in settings, that’s not the same as deleting data entirely. Logs may still be stored for security and compliance.

“Opt-out” doesn’t guarantee “opt-gone.” Your queries could still be seen by employees, contractors, or flagged reviewers. Some integrated tools (e.g., within research platforms or third-party apps) bypass your account settings and collect data independently. Check your settings and opt out of training where possible. When in doubt, run sensitive analysis offline with open-source models.

3. You may be violating your own ethics approval without realizing it

If your research involves human participants, your ethics board likely has rules about data handling. Pasting transcripts, datasets, or identifiable information into ChatGPT could breach those rules, even if you’re just “summarizing” for your own understanding.

An ethics breach could invalidate your research and require re-approval. In some cases, you could face disciplinary action or lose grant funding. Even just metadata about your project could reveal more than you think. So, review your ethics agreement and data management plan. If you want to use AI for analysis, document your process and get explicit approval.
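If your ethics approval does allow AI-assisted analysis of de-identified text, a small scrubbing step before anything leaves your machine can help. The following is a minimal sketch, not a vetted anonymization tool: the `redact` function, the two regex patterns, and the `known_names` list are all hypothetical choices for illustration. Regexes like these will miss names, places, and indirect identifiers you haven't listed, so a human check is still needed.

```python
import re
from typing import Sequence

# Hypothetical patterns for illustration only. Real transcripts need a
# reviewed, project-specific list (names, places, institutions, IDs).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str, known_names: Sequence[str] = ()) -> str:
    """Replace emails, phone-like numbers, and listed names with placeholders."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    # Names cannot be detected reliably by pattern, so they must be supplied.
    for name in known_names:
        text = re.sub(re.escape(name), "[NAME]", text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    sample = "Jane Doe (jane.doe@uni.edu, 555-123-4567) said the app felt slow."
    print(redact(sample, known_names=("Jane Doe",)))
```

Run it on a copy of the transcript and read the output before pasting anything anywhere. The placeholder approach keeps the text readable for summarizing while stripping the most obvious direct identifiers.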

The irony is that ChatGPT’s usefulness in research comes from the same mechanism that makes it risky: It learns from massive amounts of data. The more people use it (including you), the smarter it becomes. But without strict privacy safeguards, that shared intelligence comes at a cost.

This doesn’t mean you should never use ChatGPT for academic work. It means you should treat it as a semi-public collaborator. Helpful, fast, and knowledgeable, but not someone you’d hand your lab notebook to without thinking.

3 AI Research Agents That Will Save You Hours This Week

Did you know that many academics still treat ChatGPT like a turbocharged search engine? Ask, copy, paste, repeat. Meanwhile, a new class of AI research agents has quietly started doing something far more powerful: Taking over entire research workflows while you get on with more important thinking.

These are autonomous assistants that search databases, extract findings, format citations, and even coordinate with other tools without constant supervision. I’ve tested dozens. Here are the three that have made the biggest difference in my weekly workflows and how you can start using them immediately.

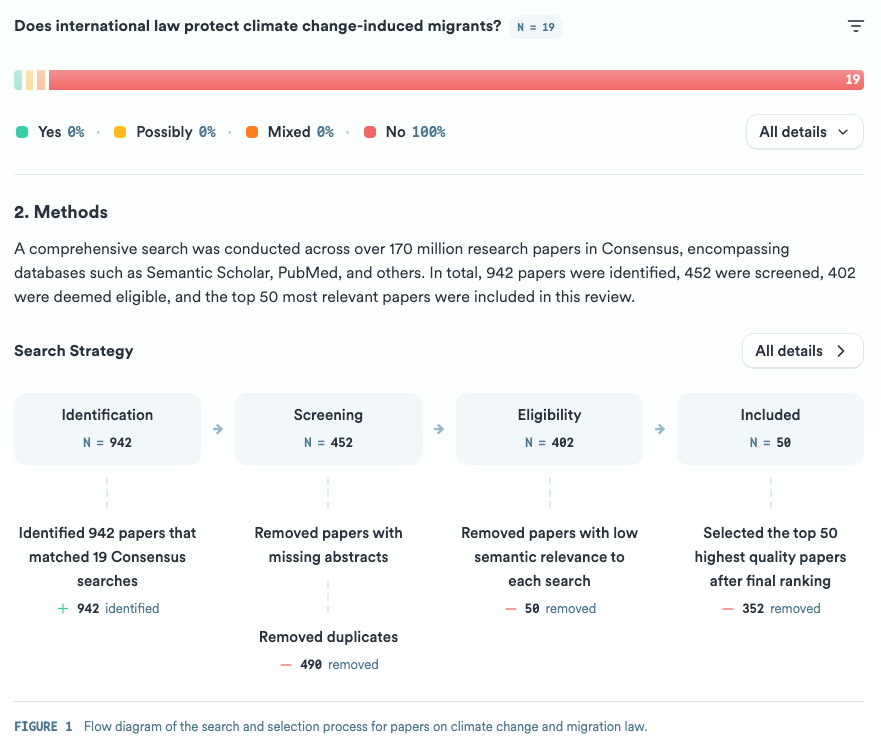

1. Consensus Deep Search

When you ask Consensus a research question, it doesn’t generate a response from training data like ChatGPT does. Instead, it searches across 200 million academic papers in real time, ranks them by relevance and citation count, then extracts the specific findings that answer your question. This makes it great for empirical, outcome-based questions. We tried it out with a different question in the episode.

Use Consensus at the start of your project to map the evidence before you waste hours on manual Google Scholar searches. Export citations directly to Zotero or as a ready-to-go bibliography. Keep in mind, though, that it struggles with purely theoretical or philosophical questions. Don't ask it about Foucault's concept of power. It’s built more for measurable outcomes.

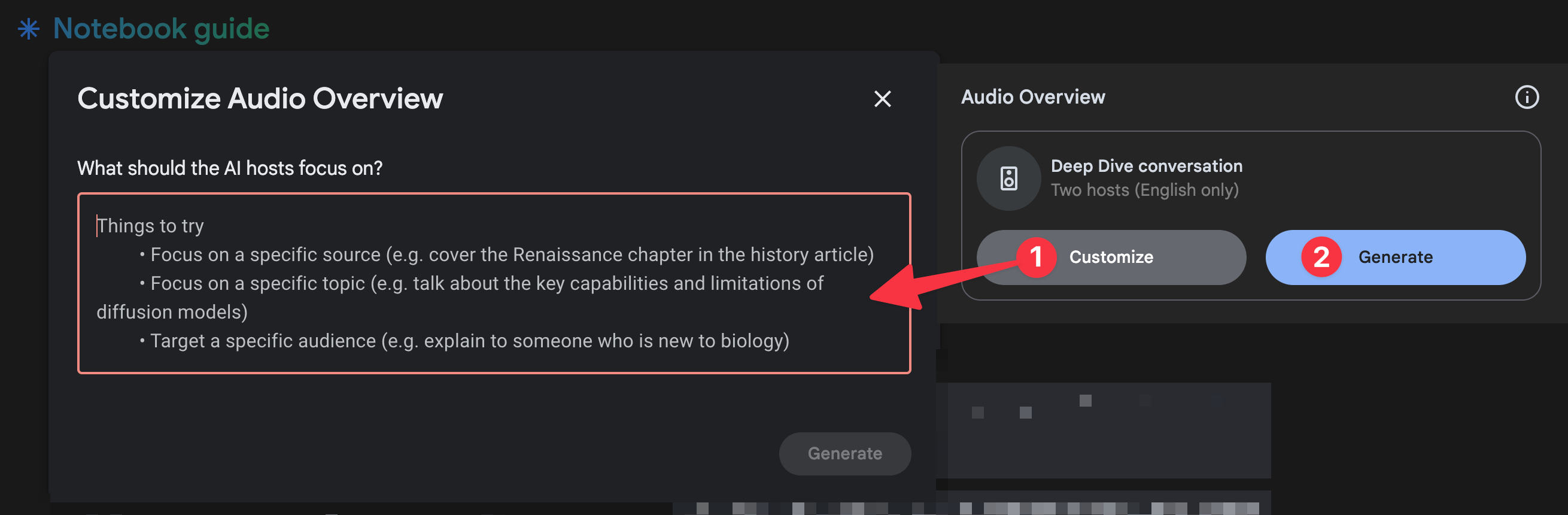

2. SciSpace Deep Search Agent

What started as a PDF explainer is now a context-aware research manager. Upload papers, ask for summaries, then build on that context across multiple uploads. The breakthrough is task orchestration. When you upload a paper to SciSpace’s Deep Search Agent and ask it to summarize the methodology, it doesn’t just give you a one-time response. It remembers that paper, that summary, and your research focus for the entire conversation. Then when you upload five more papers and ask how their methodologies compare, it already has the context. The new agent also plans a multi-step research workflow and executes it autonomously.

In the episode, we demoed the multi-step workflows of the Deep Search agents and showed how they start with a todo list and work from there. SciSpace also pulls and formats citations (APA, MLA, Chicago) in seconds. However, it works best with papers already in its semantic search index (280M+). For niche sources or recent preprints, upload PDFs manually.

3. Otto SR automates systematic reviews while Genspark builds instant research pages from any query

While Consensus and SciSpace focus on paper discovery and analysis, Otto SR and Genspark are attacking different parts of the research workflow entirely.

Otto SR is built for one specific use case: Systematic reviews that follow PRISMA guidelines. You still need to verify and refine, but it handles the crushing logistics of tracking thousands of papers through multiple screening stages.
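To make those "crushing logistics" concrete, here is a minimal sketch of the bookkeeping a PRISMA-style flow requires: every record is either still in the review or excluded at a named stage with a reason. This is not how Otto SR works internally. The `ScreeningLog` class, the stage labels, and the record IDs are all hypothetical, chosen only to illustrate the counting.

```python
from collections import Counter
from dataclasses import dataclass, field

# Ordered screening stages. These labels are illustrative, not official terms.
STAGES = ("identified", "deduplicated", "title_abstract", "full_text", "included")


@dataclass
class ScreeningLog:
    records: set = field(default_factory=set)
    # record ID -> (stage where it was excluded, reason)
    excluded: dict = field(default_factory=dict)

    def add(self, record_id: str) -> None:
        self.records.add(record_id)

    def exclude(self, record_id: str, stage: str, reason: str) -> None:
        if record_id not in self.records:
            raise KeyError(f"unknown record: {record_id}")
        if stage not in STAGES[1:]:
            raise ValueError(f"unknown stage: {stage}")
        # Keep the first exclusion decision if a record is excluded twice.
        self.excluded.setdefault(record_id, (stage, reason))

    def flow(self) -> dict:
        """Number of records remaining after each stage, in stage order."""
        removed = Counter(stage for stage, _ in self.excluded.values())
        remaining = len(self.records)
        counts = {STAGES[0]: remaining}
        for stage in STAGES[1:]:
            remaining -= removed[stage]
            counts[stage] = remaining
        return counts

    def reasons(self) -> Counter:
        """How many records were excluded for each stated reason."""
        return Counter(reason for _, reason in self.excluded.values())


if __name__ == "__main__":
    log = ScreeningLog()
    for rid in ("r1", "r2", "r3", "r4", "r5"):
        log.add(rid)
    log.exclude("r2", "deduplicated", "duplicate")
    log.exclude("r3", "title_abstract", "wrong population")
    log.exclude("r4", "full_text", "no outcome data")
    print(log.flow())
    print(log.reasons())
```

With thousands of records and several reviewers, keeping these counts consistent by hand is where most of the time goes, which is the part a dedicated tool takes off your plate.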

Meanwhile, Genspark takes a completely different agent approach: For each query, you get a super agent that can run and trigger automations on a page. With the right instructions, you can chain these agents through multiple complex workflows.

Don’t try to master all three at once. Pick one. Use it for a real project this week. Then layer in another.

Hope you enjoyed the podcast episode. Make sure to like and subscribe on your favourite podcasting platform.

Research Freedom lets you become a smarter researcher in around 5 minutes per week.

This is a public episode. If you'd like to discuss this with other subscribers or get access to bonus episodes, visit lennartnacke.substack.com/subscribe