2024 in Post-Transformers Architectures (State Space Models, RWKV) [LS Live @ NeurIPS]

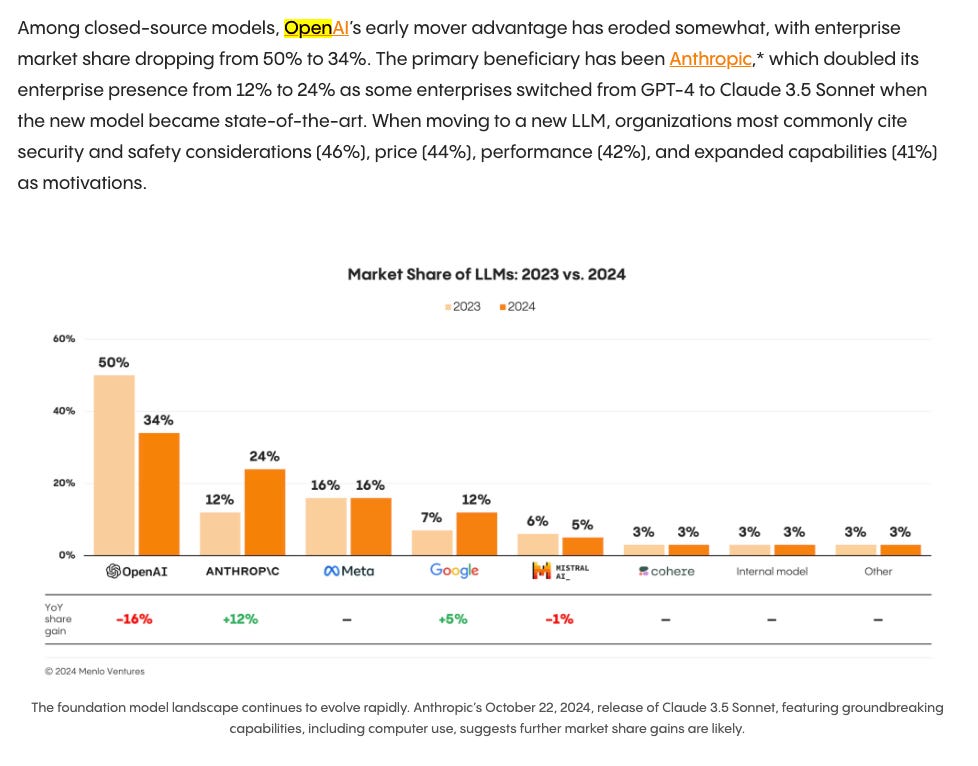

Description

Happy holidays! We’ll be sharing snippets from Latent Space LIVE! through the break, bringing you the best of 2024! We want to express our deepest appreciation to event sponsors AWS, Daylight Computer, Thoth.ai, StrongCompute, Notable Capital, and most of all to all our LS supporters who helped fund the gorgeous venue and A/V production!

For NeurIPS last year we did our standard conference podcast coverage, interviewing the authors of selected papers (as we have since done for ICLR and ICML). However, we felt that we could be doing more to help AI Engineers 1) get more industry-relevant content and 2) recap the 2024 year in review with experts. As a result, we organized the first Latent Space LIVE!, our first in-person miniconference, at NeurIPS 2024 in Vancouver.

Of perennial interest, particularly at academic conferences, is scaled-up architecture research as people hunt for the next Attention Is All You Need. We have many names for these architectures: “efficient models”, “retentive networks”, “subquadratic attention”, or “linear attention”. Some of them have no lineage with attention at all: one of the best papers of this NeurIPS was Sepp Hochreiter’s xLSTM, which has a particularly poetic significance, as one of the creators of the LSTM returns to update and challenge the OG language model architecture.

So, for lack of a better term, we decided to call this segment “the State of Post-Transformers” and fortunately everyone rolled with it.

We are fortunate to have two powerful friends of the pod to give us an update here:

* Together AI: with CEO Vipul Ved Prakash and CTO Ce Zhang joining us to talk about how they are building Together together as a “full stack” AI startup, from the lowest-level kernel and systems programming to the highest-level mathematical abstractions driving new model architectures and inference algorithms, with notable industry contributions this year including RedPajama v2, Flash Attention 3, Mamba 2, Mixture of Agents, BASED, Sequoia, Evo, Dragonfly, Dan Fu's ThunderKittens, and many more research projects.

* Recursal AI: with CEO Eugene Cheah, who has helped lead the independent RWKV project while also running Featherless AI. This year, the team has shipped RWKV v5, codenamed Eagle, to 1.5 billion Windows 10 and Windows 11 machines worldwide to support Microsoft's on-device, energy-usage-sensitive Windows Copilot use cases, and has launched the first updates on RWKV v6, codenamed Finch and GoldFinch. On the morning of Latent Space LIVE!, they also announced QRWKV6, a Qwen 32B model modified with RWKV linear attention layers.

We were looking to host a debate between our speakers, but given that both of them were working on post-transformers alternatives

Full Talk on YouTube

Links

All the models and papers they picked:

* Earlier Cited Work

    * Transformers are RNNs: Fast Autoregressive Transformers with Linear Attention

    * Hungry Hungry Hippos: Towards Language Modeling with State Space Models

    * Hyena Hierarchy: Towards Larger Convolutional Language Models

    * Mamba: Linear-Time Sequence Modeling with Selective State Spaces

    * S4: Efficiently Modeling Long Sequences with Structured State Spaces

* Just Read Twice (Arora et al)

    * Recurrent large language models that compete with Transformers in language modeling perplexity are emerging at a rapid rate (e.g., Mamba, RWKV). Excitingly, these architectures use a constant amount of memory during inference. However, due to the limited memory, recurrent LMs cannot recall and use all the information in long contexts leading to brittle in-context learning (ICL) quality. A key challenge for efficient LMs is selecting what information to store versus discard. In this work, we observe the order in which information is shown to the LM impacts the selection difficulty.

    * To formalize this, we show that the hardness of information recall reduces to the hardness of a problem called set disjointness (SD), a quintessential problem in communication complexity that requires a streaming algorithm (e.g., recurrent model) to decide whether inputted sets are disjoint. We empirically and theoretically show that the recurrent memory required to solve SD changes with set order, i.e., whether the smaller set appears first in-context.

    * Our analysis suggests, to mitigate the reliance on data order, we can put information in the right order in-context or process prompts non-causally. Towards that end, we propose: (1) JRT-Prompt, where context gets repeated multiple times in the prompt, effectively showing the model all data orders. This gives 11.0±1.3 points of improvement, averaged across 16 recurrent LMs and the 6 ICL tasks, with 11.9× higher throughput than FlashAttention-2 for generation prefill (length 32k, batch size 16, NVidia H100). We then propose (2) JRT-RNN, which uses non-causal prefix-linear-attention to process prompts and provides 99% of Transformer quality at 360M params., 30B tokens and 96% at 1.3B params., 50B tokens on average across the tasks, with 19.2× higher throughput for prefill than FA2.
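The two ideas in the Just Read Twice abstract can be illustrated with a small sketch. This is not the authors' code: `streaming_disjoint` and `jrt_prompt` are hypothetical helper names, and the prompt template (copies of the context followed by the question) is an assumption made for illustration. The first function shows why a one-pass reader's memory depends on which set arrives first; the second shows the prompt-repetition idea.

```python
from typing import Hashable, Iterable, Tuple


def streaming_disjoint(
    first: Iterable[Hashable], second: Iterable[Hashable]
) -> Tuple[bool, int]:
    """One-pass set disjointness check.

    The reader has to remember the whole first set before it sees the
    second, so the memory it needs grows with whichever set comes first.
    Returns (is_disjoint, number_of_items_held_in_memory).
    """
    memory = set(first)  # state carried across the stream
    is_disjoint = all(item not in memory for item in second)
    return is_disjoint, len(memory)


def jrt_prompt(context: str, question: str, repeats: int = 2) -> str:
    """Repeat the context `repeats` times, then ask the question.

    Hypothetical template: the real prompt format may differ.
    """
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    return "\n\n".join([context] * repeats + [question])


if __name__ == "__main__":
    small, large = [1, 2], [10, 11, 12, 13, 14]
    print(streaming_disjoint(small, large))  # (True, 2): small set first
    print(streaming_disjoint(large, small))  # (True, 5): large set first
    print(jrt_prompt("Alice lives in Paris.", "Where does Alice live?"))
```

Seeing the smaller set first means less state has to be held, which is the ordering effect the abstract describes. Repeating the context gives a fixed-memory model a second pass over the same information.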

* Jamba: A 52B Hybrid Transformer-Mamba Language Model

    * We present Jamba, a new base large language model based on a novel hybrid Transformer-Mamba mixture-of-experts (MoE) architecture.

    * Specifically, Jamba interleaves blocks of Transformer and Mamba layers, enjoying the benefits of both model families. MoE is added in some of these layers to increase model capacity while keeping active parameter usage manageable.

    * This flexible architecture allows resource- and objective-specific configurations. In the particular configuration we have implemented, we end up with a powerful model that fits in a single 80GB GPU.

    * Built at large scale, Jamba provides high throughput and small memory footprint compared to vanilla Transformers, and at the same time state-of-the-art performance on standard language model benchmarks and long-context evaluations. Remarkably, the model presents strong results for up to 256K tokens context length.

    * We study various architectural decisions, such as how to combine Transformer and Mamba layers, and how to mix experts, and show that some of them are crucial in large scale modeling. We also describe several interesting properties of these architectures which the training and evaluation of Jamba have revealed, and plan to release checkpoints from various ablation runs, to encourage further exploration of this novel architecture. We make the weights of our implementation of Jamba publicly available under a permissive license.
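To make the interleaving idea in the Jamba abstract concrete, here is a minimal sketch of a layer schedule for a hybrid stack. It is an illustration only: `LayerSpec`, `hybrid_schedule`, and the default periods and offsets are assumptions chosen for the example, not values stated in the text above, and no actual model layers are built.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LayerSpec:
    mixer: str  # "attention" or "mamba": how tokens exchange information
    mlp: str    # "moe" or "dense": which feed-forward variant follows


def hybrid_schedule(
    n_layers: int,
    attn_period: int = 8,   # assumed: one attention layer per 8 layers
    attn_offset: int = 4,   # assumed position of that layer in each period
    moe_period: int = 2,    # assumed: MoE replaces the MLP every 2 layers
    moe_offset: int = 1,
) -> List[LayerSpec]:
    """Return a per-layer plan that interleaves attention and Mamba mixers."""
    if not (0 <= attn_offset < attn_period and 0 <= moe_offset < moe_period):
        raise ValueError("each offset must satisfy 0 <= offset < period")
    schedule = []
    for i in range(n_layers):
        mixer = "attention" if i % attn_period == attn_offset else "mamba"
        mlp = "moe" if i % moe_period == moe_offset else "dense"
        schedule.append(LayerSpec(mixer, mlp))
    return schedule


if __name__ == "__main__":
    plan = hybrid_schedule(32)
    for index, spec in enumerate(plan[:8]):
        print(index, spec.mixer, spec.mlp)
    print(sum(s.mixer == "attention" for s in plan), "attention layers")
    print(sum(s.mlp == "moe" for s in plan), "MoE layers")
```

Keeping most mixers recurrent is what shrinks the key-value cache, while the occasional attention layer retains exact recall over the context. Changing the periods trades memory footprint against that recall, which is the kind of resource-specific configuration the abstract mentions.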

* SANA: Efficient High-Resolution Image Synthesis with Linear Diffusion Transformers

    * We introduce Sana, a text-to-image framework that can efficiently generate images up to 4096×4096 resolution. Sana can synthesize high-resolution, high-quality images with strong text-image alignment at a remarkably fast speed, deployable on laptop GPU. Core designs include:

        * (1) Deep compression autoencoder: unlike traditional AEs, which compress images only 8×, we trained an AE that can compress images 32×, effectively reducing the number of latent tokens.

        * (2) Linear DiT: we replace all vanilla attention in DiT with linear attention, which is more efficient at high resolutions without sacrificing quality.

        * (3) Decoder-only text encoder: we replaced T5 with modern de
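As a rough illustration of why Sana's designs (1) and (2) matter at high resolution, the sketch below counts latent tokens and compares operation counts. It rests on stated assumptions: the 8× and 32× figures are read as per-side downsampling factors, `patch_size` and `head_dim` are illustrative parameters, and the cost formulas are back-of-the-envelope multiply-add counts, not measurements.

```python
def latent_tokens(height: int, width: int, ae_factor: int, patch_size: int = 1) -> int:
    """Number of latent tokens after per-side downsampling and patching."""
    side = ae_factor * patch_size
    if height % side or width % side:
        raise ValueError("image size must be divisible by ae_factor * patch_size")
    return (height // side) * (width // side)


def softmax_attention_cost(n_tokens: int, head_dim: int) -> int:
    """Rough multiply-adds per head: QK^T plus attention-weighted V."""
    return 2 * n_tokens * n_tokens * head_dim


def linear_attention_cost(n_tokens: int, head_dim: int) -> int:
    """Rough multiply-adds per head: K^T V first, then Q times that summary."""
    return 2 * n_tokens * head_dim * head_dim


if __name__ == "__main__":
    head_dim = 32  # assumed value for illustration
    for factor in (8, 32):
        n = latent_tokens(4096, 4096, factor)
        ratio = softmax_attention_cost(n, head_dim) // linear_attention_cost(n, head_dim)
        print(f"AE {factor}x: {n} tokens, softmax/linear cost ratio ~{ratio}")
```

Under these assumptions a 4096×4096 image yields 262,144 tokens with an 8× autoencoder and 16,384 with a 32× one. The cost ratio between the two attention forms reduces to tokens divided by head dimension, so the gap widens as resolution grows.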
