In the Arena: How LMSys changed LLM Benchmarking Forever

Description

Apologies for lower audio quality; we lost recordings and had to use backup tracks.

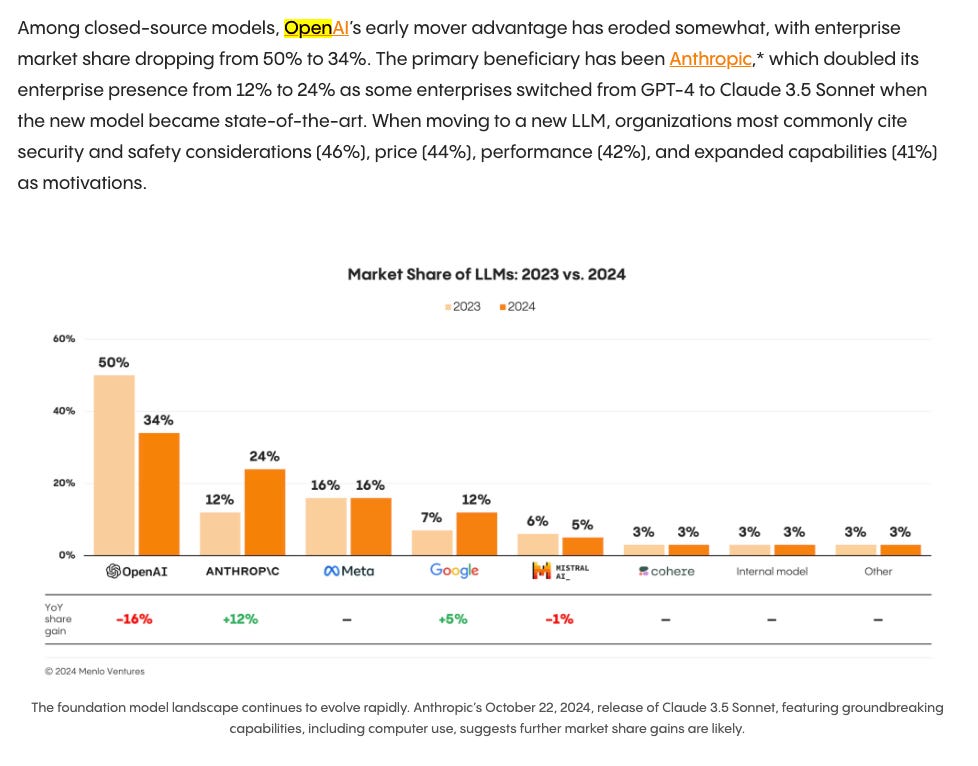

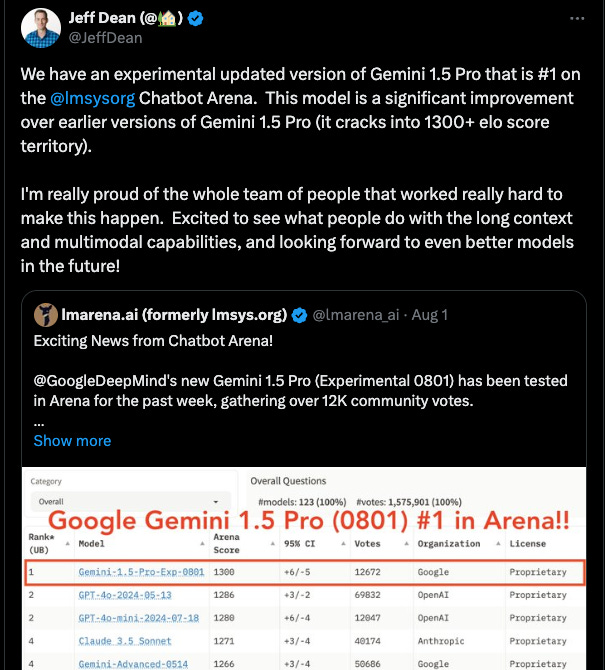

Our guests today are Anastasios Angelopoulos and Wei-Lin Chiang, leads of Chatbot Arena, fka LMSYS, the crowdsourced AI evaluation platform developed by the LMSys student club at Berkeley, which became the de facto standard for comparing language models. For many people, Arena Elo is now cited more often than MMLU scores, and the platform has attracted >1,000,000 people to cast votes since its launch, leading top model trainers to cite it over their own formal academic benchmarks:

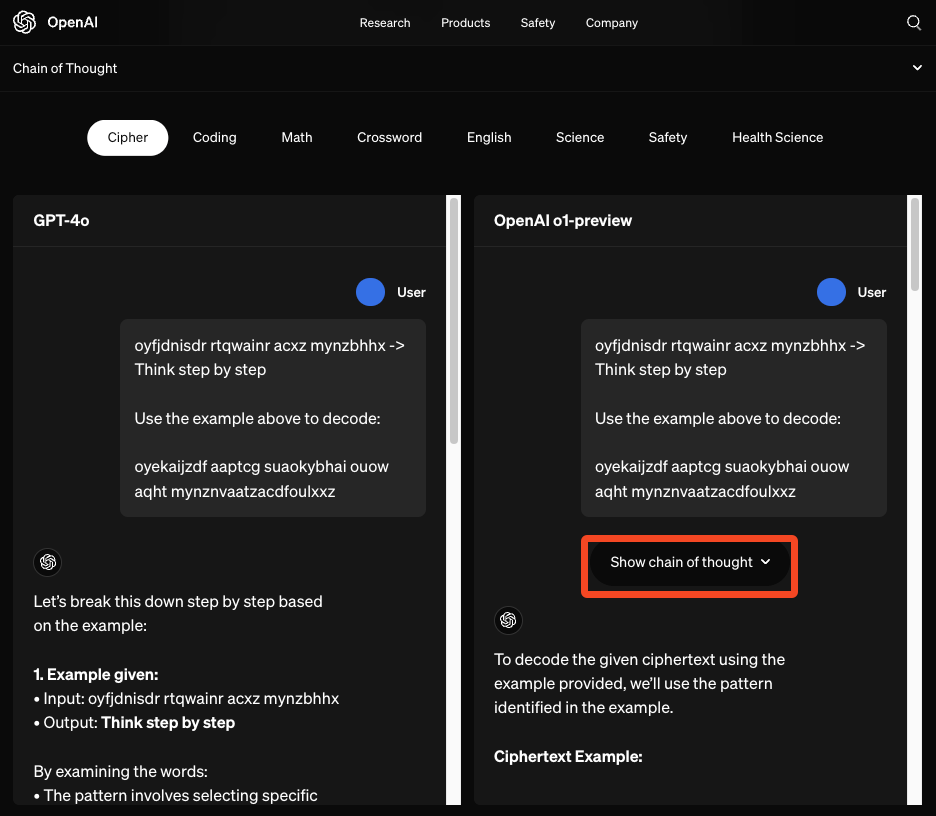

The Limits of Static Benchmarks

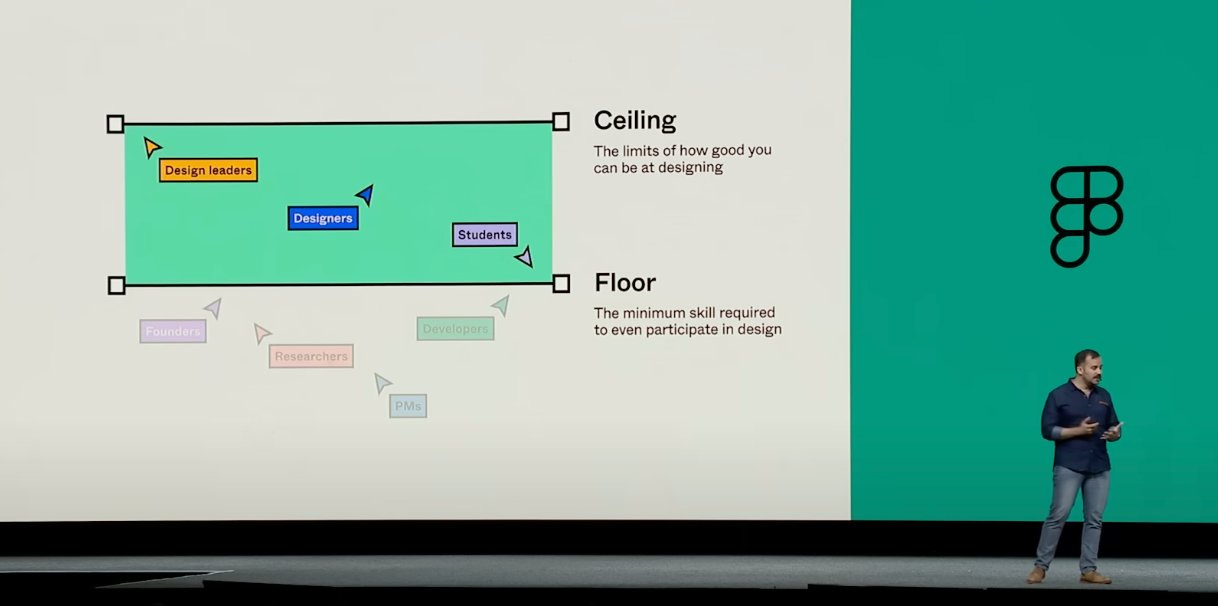

We’ve done two benchmark episodes: Benchmarks 101 and Benchmarks 201. Two issues we’ve always brought up with static benchmarks are that 1) many are getting saturated, with models scoring almost perfectly on them, and 2) they often don’t reflect production use cases, making it hard for developers and users to use them as guidance.

The fundamental challenge in AI evaluation isn't technical - it's philosophical. How do you measure something that increasingly resembles human intelligence? Rather than trying to define intelligence upfront, Arena lets users interact naturally with models and collects comparative feedback. It's messy and subjective, but that's precisely the point - it captures the full spectrum of what people actually care about when using AI.

The Pareto Frontier of Cost vs Intelligence

Because the Elo scores are remarkably stable over time, we can put all the chat models on a map against their respective cost to gain a view of at least 3 orders of magnitude of model sizes/costs and observe the remarkable shift in intelligence per dollar over the past year:

This frontier stood remarkably firm through the recent releases of o1-preview and price cuts of Gemini 1.5:

The Statistics of Subjectivity

In our Benchmarks 201 episode, Clémentine Fourrier from HuggingFace thought this design choice was one of the shortcomings of arenas: they aren’t reproducible. You don’t know who ranked what and what exactly the outcome was at the time of ranking. That same person might rank the same pair of outputs differently on a different day, or might ask harder questions to better models than to smaller ones, making the comparison imbalanced.

Another argument that people have brought up is confirmation bias. We know humans prefer longer responses and are swayed by formatting - Rob Mulla from Dreadnode found some interesting data on this in May:

The approach LMArena is taking is to use logistic regression to decompose human preferences into constituent factors. As Anastasios explains: "We can say what components of style contribute to human preference and how they contribute." By adding these style components as parameters, they can mathematically "suck out" their influence and isolate the core model capabilities.

This extends beyond just style - they can control for any measurable factor: "What if I want to look at the cost adjusted performance? Parameter count? We can ex post facto measure that."
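To make the idea concrete, here is a minimal sketch of style control as a logistic regression, not LMArena's actual pipeline. It assumes a Bradley-Terry-style model in which the probability that model A beats model B depends on the difference in their strengths plus one extra coefficient on a style feature (here a made-up "verbosity difference"). The model names, the synthetic votes, and the function `fit_style_controlled_bt` are all hypothetical, and ties are ignored.

```python
import math
import random

def fit_style_controlled_bt(battles, models, lr=0.5, epochs=300, l2=1e-3):
    """Fit P(a beats b) = sigmoid(s[a] - s[b] + gamma * style_diff) by gradient ascent."""
    idx = {m: i for i, m in enumerate(models)}
    strengths = [0.0] * len(models)
    gamma = 0.0
    n = len(battles)
    for _ in range(epochs):
        grad_s = [0.0] * len(models)
        grad_g = 0.0
        for a, b, style_diff, a_won in battles:
            z = strengths[idx[a]] - strengths[idx[b]] + gamma * style_diff
            p = 1.0 / (1.0 + math.exp(-z))
            err = a_won - p  # gradient of the log-likelihood with respect to z
            grad_s[idx[a]] += err
            grad_s[idx[b]] -= err
            grad_g += err * style_diff
        for i in range(len(models)):
            # A small L2 penalty pins down the otherwise free overall offset.
            strengths[i] += lr * (grad_s[i] / n - l2 * strengths[i])
        gamma += lr * grad_g / n
    return dict(zip(models, strengths)), gamma

# Synthetic votes (an assumption for illustration): model_b is weaker than
# model_a but writes longer answers, and voters reward length (TRUE_GAMMA > 0).
rng = random.Random(0)
models = ["model_a", "model_b", "model_c"]
true_strength = {"model_a": 0.5, "model_b": 0.0, "model_c": -0.5}
verbosity = {"model_a": 0.0, "model_b": 1.0, "model_c": 0.0}
TRUE_GAMMA = 1.0

battles = []
for _ in range(3000):
    a, b = rng.sample(models, 2)
    style_diff = verbosity[a] - verbosity[b] + rng.gauss(0.0, 1.0)
    z = true_strength[a] - true_strength[b] + TRUE_GAMMA * style_diff
    a_won = 1 if rng.random() < 1.0 / (1.0 + math.exp(-z)) else 0
    battles.append((a, b, style_diff, a_won))

scores, gamma = fit_style_controlled_bt(battles, models)
print("style coefficient:", round(gamma, 2))
print("style-adjusted strengths:", {m: round(s, 2) for m, s in scores.items()})
```

The fitted `gamma` absorbs the voters' preference for verbosity, so the per-model strengths reflect what is left once style is accounted for. Controlling for another measurable factor, such as cost, would mean adding another feature column with its own coefficient.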

This is one of the most interesting things about Arena: you have a data generation engine whose output you can clean and turn into leaderboards later. If you wanted to create a leaderboard for poetry writing, you could take existing data from Arena and normalize it by identifying these style components. Whether it’s possible to really understand WHAT biases the voters have is a different question.

Private Evals

One of the most delicate challenges LMSYS faces is maintaining trust while collaborating with AI labs. The concern is that labs could game the system by testing multiple variants privately and only releasing the best performer. This was brought up when 4o-mini was released and ranked as the second best model on the leaderboard:

But this fear misunderstands how Arena works. Unlike static benchmarks where selection bias is a major issue, Arena's live nature means any initial bias gets washed out by ongoing evaluation. As Anastasios explains: "In the long run, there's way more fresh data than there is data that was used to compare these five models."
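A small simulation can illustrate the "washing out" argument. This is a sketch under simplifying assumptions, not a model of Arena's real traffic: five hypothetical variants all have the same true win rate of 0.5, each gets 200 private votes, the lab releases the top scorer, and the released variant then collects 2,000 fresh votes. All of these numbers and the function name `simulate` are made up for illustration.

```python
import random

def simulate(trials=200, variants=5, private_votes=200, fresh_votes=2000, seed=0):
    """Average win-rate estimate of the 'best of N' variant before and after fresh votes.

    Every variant has the same true win rate of 0.5, so any estimate above 0.5
    is pure selection bias.
    """
    rng = random.Random(seed)
    selected_sum = 0.0
    pooled_sum = 0.0
    for _ in range(trials):
        # Private phase: each variant collects votes; the lab keeps the top scorer.
        best_wins = max(
            sum(rng.random() < 0.5 for _ in range(private_votes))
            for _ in range(variants)
        )
        selected_sum += best_wins / private_votes
        # Public phase: the released variant keeps collecting fresh votes.
        fresh_wins = sum(rng.random() < 0.5 for _ in range(fresh_votes))
        pooled_sum += (best_wins + fresh_wins) / (private_votes + fresh_votes)
    return selected_sum / trials, pooled_sum / trials

selected_estimate, pooled_estimate = simulate()
print(f"at release: {selected_estimate:.3f}, after fresh votes: {pooled_estimate:.3f}")
```

Picking the best of five inflates the estimate at release time, but the inflated private votes become a small fraction of the total once fresh votes accumulate, so the pooled estimate drifts back toward the true value.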

The other big question is WHAT model is actually being tested; as people often discuss on X / Discord, the same endpoint will randomly feel “nerfed”, as happened with “Claude European summer” and the corresponding conspiracy theories:

It’s hard to keep track of these performance changes in Arena as these changes (if real…?) are not observable.

The Future of Evaluation

The team's latest work on RouteLLM points to an interesting future where evaluation becomes more granular and task-specific. But they maintain that even simple routing strategies can be powerful - like directing complex queries to larger models while handling simple tasks with smaller ones.
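As a rough illustration of what a "simple routing strategy" could look like, here is a hand-written heuristic router. It is not RouteLLM's method or API: the complexity score, the keyword list, the threshold, and the model names `small-model` and `large-model` are all assumptions chosen for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Route:
    model: str
    score: float

# Hypothetical hints that a query needs multi-step reasoning.
HARD_HINTS = ("prove", "debug", "refactor", "step by step", "derive")

def complexity_score(query: str) -> float:
    """Crude complexity estimate in [0, 1] from query length and keyword hints."""
    text = query.lower()
    length_part = min(len(text.split()) / 100.0, 1.0)
    hint_part = 0.5 if any(hint in text for hint in HARD_HINTS) else 0.0
    return min(length_part + hint_part, 1.0)

def route(query: str, threshold: float = 0.4,
          small: str = "small-model", large: str = "large-model") -> Route:
    """Send queries that look complex to the larger model, everything else to the smaller one."""
    score = complexity_score(query)
    return Route(large if score >= threshold else small, score)

print(route("What is the capital of France?"))
print(route("Debug this function and explain step by step why it fails"))
```

Raising `threshold` sends more traffic to the cheaper model; a learned router would replace `complexity_score` with a model trained on preference data.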

Arena is now going to expand beyond text into multimodal evaluation and specialized domains like code execution and red teaming. But their core insight remains: the best way to evaluate intelligence isn't to simplify it into metrics, but to embrace its complexity and find rigorous ways to analyze it. To go after this vision, they are spinning out Arena from LMSys, which will stay as an academia-driven group at Berkeley.

Full Video Podcast

Chapters

* 00:00:00 - Introductions

* 00:01:16 - Origin and development of Chatbot Arena

* 00:05:41 - Static benchmarks vs. Arenas

* 00:09:03 - Community building

* 00:13:32 - Biases in human preference evaluation

* 00:18:27 - Style Control and Model Categories

* 00:26:06 - Impact of o1

* 00:29:15 - Collaborating with AI labs

* 00:34:51 - RouteLLM and router models

* 00:38:09 - Future of LMSys / Arena

Show Notes

* Anastasios' NeurIPS Paper Conformal Risk Control

* LMSys

* MTBench

* E2B

Transcript

Alessio [00:00:00 ]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, Partner and CTO in Residence at Decibel Partners, and I'm joined by my co-host Swyx, founder of Smol.ai.

Swyx [00:00:14 ]: Hey, and today we're very happy and excited to welcome Anastasios and Wei Lin from LMSys. Welcome guys.

Wei Lin [00:00:21 ]: Hey, how's it going? Nice to see you.

Anastasios [00:00:23 ]: Thanks for having us.

Swyx [00:00:24 ]: Anastasios, I actually saw you, I think at last year's NeurIPS. You were presenting a paper, which I don't really super understand, but it was some theory paper about how your method was very dominating over other sort of search methods. I don't remember what it was, but I remember that you were a very confident speaker.

Anastasios [00:00:40 ]: Oh, I totally remember you. Didn't ever connect that, but yes, that's definitely true. Yeah. Nice to see you again.

Swyx [00:00:46 ]: Yeah. I was frantically looking for the name of your paper and I couldn't find it. Basically I had to cut it because I didn't understand it.

Anastasios [00:00:51 ]: Is this conformal PID control or was this the online control?

Wei Lin [00:00:55 ]: Blast from the past, man.

Swyx [00:00:57 ]: Blast from the past. It's always interesting how NeurIPS and all these academic conferences are sort of six months behind what people are actually doing, but conformal risk control, I would recommend people check it out. I have the recording. I just never published it just because I was like, I don't understand this enough to explain it.

Anastasios [00:01:14 ]: People won't be interested.

Wei Lin [00:01:15 ]: It's all good.

Swyx [00:01:16 ]: But ELO scores, ELO scores are very easy to understand. You guys are responsible for the biggest revolution in language model benchmarking in the last few years. Maybe you guys want to introduce yourselves and maybe tell
