Efficiency is Coming: 3000x Faster, Cheaper, Better AI Inference from Hardware Improvements, Quantization, and Synthetic Data Distillation

Description

AI Engineering is expanding! Join the first 🇬🇧 AI Engineer London meetup in Sept and get in touch for sponsoring the second 🗽 AI Engineer Summit in NYC this Dec!

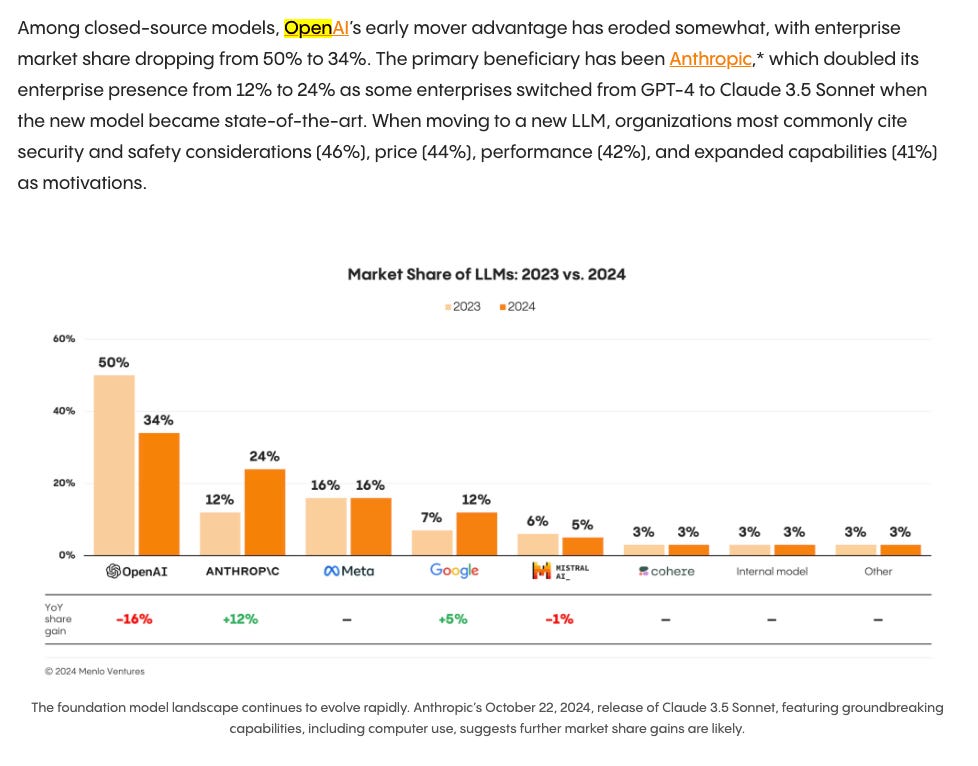

The commoditization of intelligence takes on a few dimensions:

* Time to Open Model Equivalent: 15 months between GPT-4 and Llama 3.1 405B

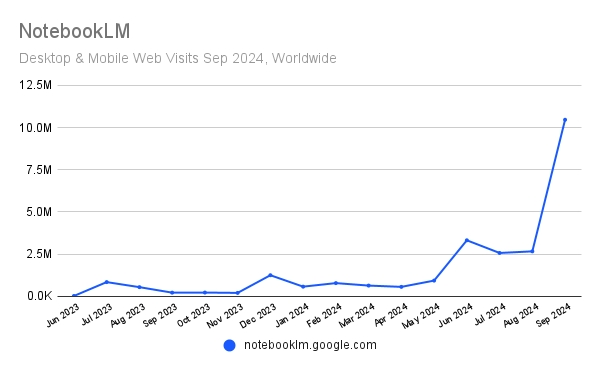

* 10-100x CHEAPER/year: from $30/mtok for Claude 3 Opus to $3/mtok for L3-405B, and a 400x reduction in the frontier OpenAI model from 2022-2024. Notably, for personal use cases, both Gemini Flash and now Cerebras Inference offer 1m tokens/day inference free, causing the Open Model Red Wedding.

* Alternatively, you can watch the frontier of intelligence per dollar shift in real time across small, medium, and large model sizes. 2024 has been particularly aggressive, with almost 2 orders of magnitude of improvement in $/Elo points in the last 8 months.

* 4-8x FASTER/year: The new Cerebras Inference platform runs 70B models at 450 tok/s, almost twice as fast as the Groq Cloud example that went viral earlier this year (and at $0.60/mtok to boot). James Wang says they have room to “~8x throughput in the next few months”, which still needs to be seen in reality and at scale, but is very exciting for downstream latency/throughput-sensitive use cases.
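To make the compounding concrete, here is a rough back-of-the-envelope sketch (not from the episode) that turns a total improvement into a constant per-year factor and a tokens-per-second figure into wall-clock time. The helper names are hypothetical, and treating "2022-2024" as a two-year span and a 1,000-token response as typical are assumptions for illustration only.

```python
def annualized_factor(total_factor: float, years: float) -> float:
    """Constant per-year factor that compounds to `total_factor` over `years`."""
    if total_factor <= 0 or years <= 0:
        raise ValueError("total_factor and years must be positive")
    return total_factor ** (1.0 / years)


def yearly_decline_pct(total_factor: float, years: float) -> float:
    """Percent price drop per year implied by a total reduction factor."""
    return 100.0 * (1.0 - 1.0 / annualized_factor(total_factor, years))


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream `num_tokens` at a steady decode rate (ignores time to first token)."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    # 400x cheaper frontier model; ASSUMPTION: "2022-2024" spans two years.
    print(f"per-year factor: {annualized_factor(400, 2):.1f}x")
    print(f"yearly decline:  {yearly_decline_pct(400, 2):.1f}%")

    # 450 tok/s today vs. the claimed ~8x headroom; 1,000 tokens is an assumed response size.
    print(f"today:   {generation_seconds(1000, 450):.2f} s")
    print(f"with 8x: {generation_seconds(1000, 450 * 8):.2f} s")
```

Under those assumptions, a 400x drop works out to roughly 20x per year, which sits inside the 10-100x range quoted above.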

Today’s guest is Nyla Worker, a senior PM at Nvidia, Convai, and now Google, and recently host of the GPUs & Inference track at the World’s Fair. She was the first to point out to us that the kind of efficiency improvements that have become a predominant theme in LLMs in 2024 have been seen before in her career in computer vision.

From her start at eBay optimizing V100 inference for a ResNet-50 model for image search, she has watched many improvements stack on top of baseline hardware improvements (from V100s to A100s to H100s to GH200s): Multi-Instance GPU (allowing multiple instances with perfect hardware parallelism), Quantization Aware Training (most recently highlighted by Noam Shazeer before his departure from Character AI), and Model Distillation (most recently highlighted by the Llama 3.1 paper). Together these produce theoretically 3000x faster inference now than 6 years ago.

What Nyla saw in her career over the last 6 years is happening to LLMs today (not exactly repeating, but surely rhyming), specifically with LoRAs, native Int8 and even Ternary models, and teacher model distillation. We were excited to delve into all things efficiency in this episode and even come out the other side with bonus discussions on what generative AI can do for gaming, fanmade TV shows, character AI conversations, and even podcasting!
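For readers who have not worked with low-precision weights, the following is a minimal sketch of what "Int8" and "ternary" mean numerically. It is an illustration under stated assumptions, not the method used by Nvidia, Character AI, or any specific model: it uses symmetric per-tensor scaling, the function names are hypothetical, and the ternary threshold of 0.7 times the mean absolute weight is one heuristic among several. The `fake_quantize` step (quantize, then dequantize) is the rounding that Quantization Aware Training typically simulates in the forward pass so the model learns to tolerate it.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]


def fake_quantize(weights: List[float]) -> List[float]:
    """Quantize then dequantize: floats that carry int8 rounding error."""
    codes, scale = quantize_int8(weights)
    return dequantize(codes, scale)


def quantize_ternary(
    weights: List[float], threshold_ratio: float = 0.7
) -> Tuple[List[int], float]:
    """Map each weight to -1, 0, or +1 with one shared scale.

    ASSUMPTION: weights below threshold_ratio * mean(|w|) become 0; the
    scale is the mean magnitude of the weights that were kept.
    """
    if not weights:
        return [], 0.0
    mean_abs = sum(abs(w) for w in weights) / len(weights)
    threshold = threshold_ratio * mean_abs
    codes = [0 if abs(w) <= threshold else (1 if w > 0 else -1) for w in weights]
    kept = [abs(w) for w, c in zip(weights, codes) if c != 0]
    scale = sum(kept) / len(kept) if kept else 0.0
    return codes, scale


if __name__ == "__main__":
    w = [0.9, -0.8, 0.05, -0.02]  # made-up example weights
    print(quantize_int8(w))
    print(fake_quantize(w))
    print(quantize_ternary(w))
```

Int8 keeps 255 distinct levels per tensor, while ternary keeps only three, which is why the latter is usually trained natively rather than applied after the fact.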

Show Notes:

* Related Nvidia research

* Improving INT8 Accuracy Using Quantization Aware Training and the NVIDIA TAO Toolkit

* Nvidia Jetson Nano: Bringing the power of modern AI to millions of devices.

* Synthetic Data with Nvidia Omniverse Replicator: Accelerate AI Training Faster Than Ever with New NVIDIA Omniverse Replicator Capabilities

Timestamps

* [00:00:00 ] Intro from Suno

* [00:03:17 ] Nyla's path from Astrophysics to LLMs

* [00:05:45 ] Efficiency Curves in Computer Vision at Nvidia

* [00:09:51 ] Optimizing for today's hardware vs tomorrow's inference

* [00:16:33 ] Quantization vs Precision tradeoff

* [00:20:42 ] Hitting the Data Wall: The need for Synthetic Data at Nvidia

* [00:26:20 ] Sora, text to 3D models, and Synthetic Data from Game Engines

* [00:30:55 ] ResNet 50 keeps coming back

* [00:35:40 ] Gaming Benchmarks

* [00:38:00 ] FineWeb

* [00:39:43 ] Traditional ML vs LLMs path to general intelligence

* [00:42:33 ] ConvAI - AI NPCs

* [00:45:32 ] Jensen and Lisa at Computex Taiwan

* [00:52:51 ] NPCs need to take Actions and have Context

* [00:54:29 ] Simulating different roles for training

* [00:58:37 ] AI Generated Fan Content - Podcasts, TV Show, Einstein

Transcripts

[00:00:29 ] AI Charlie: Happy September. This is your AI co-host, Charlie.

[00:00:34 ] AI Charlie: One topic we've developed on Latent Space is the importance of efficiency in all forms, from sample efficiency for spending limited training compute on limited data, and increasingly towards inference efficiency for increasingly demanding use cases like local LLMs, real-time AI NPCs, and edge AI. However, we've never really developed any intuition for the trends in efficiency over time.

[00:00:59 ] AI Charlie: For example, from 2020 to 2023, the price of GPT-3 level intelligence dropped from $60 per million tokens to 27 cents with the Mixtral price war of December 2023. See show notes for charts and data. As for GPT-4 level intelligence, it took just over a year for GPT-4 to be matched by Llama 3 70B and GPT-4 Turbo to be beaten by Llama 3 405B in open source, causing blended cost per million tokens to freefall from over $30 for Claude 3 Opus and the original GPT-4 down to under $3 for Llama 3 405B.

[00:01:43 ] AI Charlie: Of course, OpenAI themselves have not stood still, slashing the price of GPT-4o by 30 times with GPT-4o mini. Yes, you heard that right. GPT-4o mini is 3.5 percent the price of GPT-4o, yet ties with GPT-4 Turbo on LMSYS. When the price of intelligence is falling by over 90 percent every year, what are the driving forces?

[00:02:10 ] AI Charlie: And how should AI engineers plan for this? It turns out that this has happened before in computer vision, which has seen an almost 3,000 times latency improvement over the last 6 years. We invited Nyla Worker of NVIDIA and Convai, who first made this comparison, to help talk us through the past, present, and future use cases of efficient AI inference.

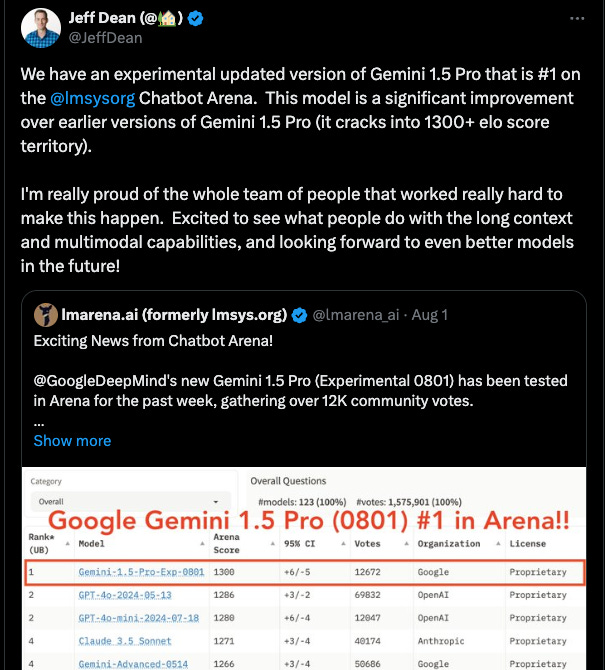

[00:02:35 ] AI Charlie: Note that this was recorded before Nyla joined Google AI to work on efficiency, so you can expect more great efficiency work coming from her on the Gemini team. In Latent Space news, look out for our upcoming London and NYC meetups on the community page, and of course feel free to start your own and simply let us know.

[00:02:54 ] AI Charlie: Watch out and take care.

[00:02:57 ] Alessio: Hey everyone, welcome to the Latent Space Podcast. This is Alessio, partner and CTO in residence at Decibel Partners, and I'm joined by my co-host Swyx, founder of Smol.ai.

[00:03:11 ] Hey, and today we are in the remote studio with Nyla Worker. Welcome, Nyla. Good to see you.

[00:03:16 ] Nyla Worker: Good to see you all.

[00:03:17 ] Nyla's path from Astrophysics to LLMs

[00:03:17 ] swyx: So we try to introduce people based on sort of their professional profile and then let you fill in the blanks.

[00:03:22 ] swyx: Um, so you did astrophysics research at Carleton College, uh, and then you made your way into machine learning. We're going to talk about your time at eBay, but most recently you spent four years at Nvidia, uh, working on everything from synthetic data to cloud container offerings. And now currently you're director of product management at Convai.

[00:03:41 ] swyx: What should people know about you that maybe is not super obvious on your LinkedIn, that, you know, encapsulates your life journey so far?

[00:03:47 ] Nyla Worker: And yeah, I think the thing that is not very obvious is that transition from astrophysics research to AI and how that happe
