Building AGI in Real Time (OpenAI Dev Day 2024)

Description

We all have fond memories of the first Dev Day in 2023:

and the blip that followed soon after.

As Ben Thompson has noted, this year’s DevDay took a quieter, more intimate tone. No Satya, no livestream (and slightly fewer people?).

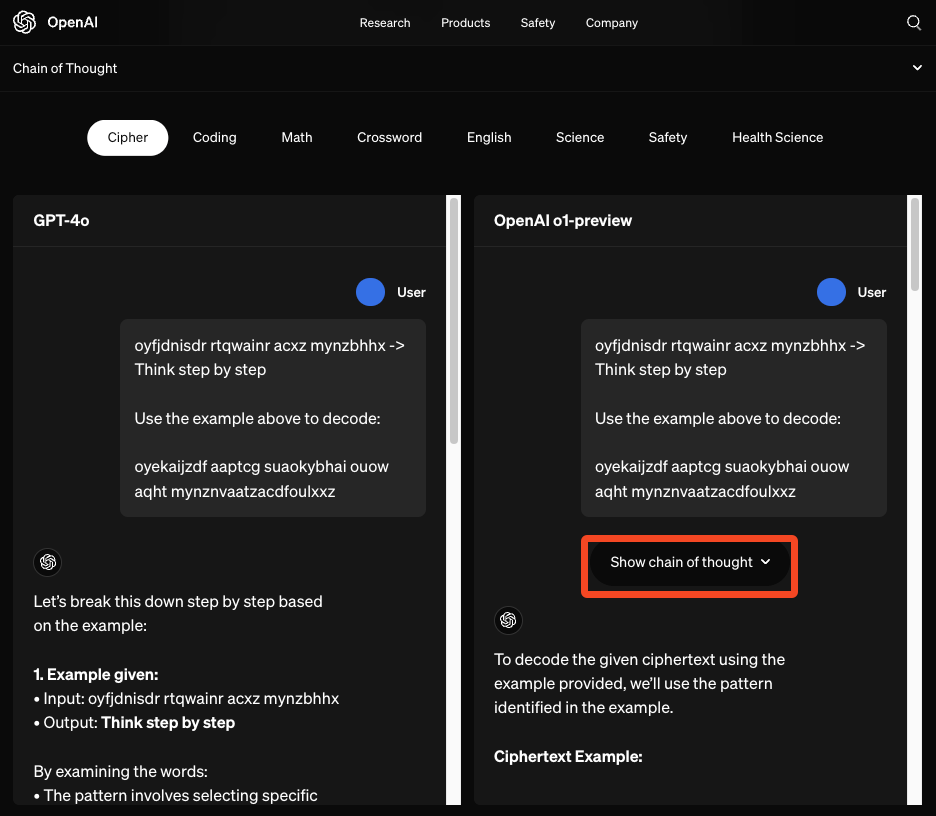

Instead of bundling ChatGPT announcements into DevDay as in 2023, OpenAI announced o1 two weeks prior and reserved DevDay 2024 purely for developer-facing API announcements: primarily the Realtime API, Vision Fine-Tuning, Prompt Caching, and Model Distillation.

However, the larger venue and more spread-out schedule allowed for many more hallway conversations with attendees, as well as more community presentations (including one from our recent guest Alistair Pullen of Cosine) and deeper dives from OpenAI (including one from our recent guest Michelle Pokrass of the API team).

Thanks to OpenAI’s warm collaboration (we particularly want to thank Lindsay McCallum Rémy!), we managed to record exclusive interviews with many of the main presenters of both the keynotes and the breakout sessions. We present them in full in today’s episode, together with a full, lightly edited Q&A with Sam Altman.

Show notes and related resources

Some of these are used in the final audio episode below.

* Greg Kamradt's coverage of the Structured Outputs session and the Scaling LLM Apps session

* Fireside Chat Q&A with Sam Altman

Timestamps

* [00:00:00 ] Intro by Suno.ai

* [00:01:23 ] NotebookLM Recap of DevDay

* [00:09:25 ] Ilan's Strawberry Demo with Realtime Voice Function Calling

* [00:19:16 ] Olivier Godement, Head of Product, OpenAI

* [00:36:57 ] Romain Huet, Head of DX, OpenAI

* [00:47:08 ] Michelle Pokrass, API Tech Lead at OpenAI ft. Simon Willison

* [01:04:45 ] Alistair Pullen, CEO, Cosine (Genie)

* [01:18:31 ] Sam Altman + Kevin Weil Q&A

* [02:03:07 ] NotebookLM Recap of Podcast

Transcript

[00:00:00 ] Suno AI: Under dev daylights, code ignites. Real time voice streams reach new heights. o1 and GPT-4o in flight. Fine tune the future, data in sight. Schema sync up, outputs precise. Distill the models, efficiency splice.

[00:00:33 ] AI Charlie: Happy October. This is your AI co-host, Charlie. One of our longest-standing traditions is covering major AI and ML conferences in podcast format. Delving, yes, delving, into the vibes of what it is like to be there, stitched in with short samples of conversations with key players, just to help you feel like you were there.

[00:00:54 ] AI Charlie: Covering this year's DevDay was significantly more challenging because we were all requested not to record the opening keynotes. So, in place of the opening keynotes, we had the viral NotebookLM Deep Dive crew, my new AI podcast nemesis, give you a seven-minute recap of everything that was announced.

[00:01:15 ] AI Charlie: Of course, you can also check the show notes for details. I'll then come back with an explainer of all the interviews we have for you today. Watch out and take care.

[00:01:23 ] NotebookLM Recap of DevDay

[00:01:23 ] NotebookLM: All right, so we've got a pretty hefty stack of articles and blog posts here all about OpenAI's DevDay 2024.

[00:01:32 ] NotebookLM 2: Yeah, lots to dig into there.

[00:01:34 ] NotebookLM: Seems like you're really interested in what's new with AI.

[00:01:36 ] NotebookLM 2: Definitely. And it seems like OpenAI had a lot to announce. New tools, changes to the company. It's a lot.

[00:01:43 ] NotebookLM: It is. And especially since you're interested in how AI can be used in the real world, you know, practical applications, we'll focus on that.

[00:01:51 ] NotebookLM: Perfect. Like, for example, this Realtime API, they announced that, right? That seems like a big deal if we want AI to sound, well, less like a robot.

[00:01:59 ] NotebookLM 2: It could be huge. The Realtime API could completely change how we, like, interact with AI. Like, imagine if your voice assistant could actually handle it if you interrupted it.

[00:02:08 ] NotebookLM: Or, like, have an actual conversation.

[00:02:10 ] NotebookLM 2: Right, not just these clunky back and forth things we're used to.

[00:02:14 ] NotebookLM: And they actually showed it off, didn't they? I read something about a travel app, one for languages. Even one where the AI ordered takeout.

[00:02:21 ] NotebookLM 2: Those demos were really interesting, and I think they show how this Realtime API can be used in so many ways.

[00:02:28 ] NotebookLM 2: And the tech behind it is fascinating, by the way. It uses persistent WebSocket connections and this thing called function calling, so it can respond in real time.

[00:02:38 ] NotebookLM: So the function calling thing, that sounds kind of complicated. Can you, like, explain how that works?

[00:02:42 ] NotebookLM 2: So imagine giving the AI access to this whole toolbox, right?

[00:02:46 ] NotebookLM 2: Information, capabilities, all sorts of things. Okay. So take the travel agent demo, for example. With function calling, the AI can pull up details, let's say about Fort Mason, right, from some database. Like nearby restaurants, stuff like that.

[00:02:59 ] NotebookLM: Ah, I get it. So instead of being limited to what it already knows, it can go and find the information it needs, like a human travel agent would.

[00:03:07 ] NotebookLM 2: Precisely. And someone on Hacker News pointed out a cool detail. The API actually gives you a text version of what's being said. So you can store that, analyze it.
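The function-calling idea discussed above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration rather than OpenAI's API: the tool name `get_nearby_restaurants`, its schema, and the placeholder restaurant data are all assumptions. The point is the division of labor: the model only emits a tool name plus JSON-encoded arguments, and the application runs the matching local function and hands the JSON result back to the model.

```python
import json

# Hypothetical tool. In a real app this would query a database or external API.
def get_nearby_restaurants(location: str, limit: int = 3) -> list[str]:
    # Placeholder in-memory data standing in for a real database (assumption).
    fake_db = {"Fort Mason": ["Restaurant A", "Restaurant B", "Restaurant C"]}
    return fake_db.get(location, [])[:limit]

# JSON Schema description the model would see. The exact field layout differs
# between APIs, so treat this shape as illustrative only.
TOOL_SCHEMA = {
    "name": "get_nearby_restaurants",
    "description": "List restaurants near a named location.",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {"type": "string"},
            "limit": {"type": "integer"},
        },
        "required": ["location"],
    },
}

# Registry mapping tool names (as emitted by the model) to local functions.
TOOLS = {"get_nearby_restaurants": get_nearby_restaurants}

def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Run the function the model asked for and return a JSON string result."""
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    args = json.loads(arguments_json)  # the model sends arguments as a JSON string
    return json.dumps({"result": TOOLS[name](**args)})

# Simulated call, shaped like what a model might emit.
print(dispatch_tool_call("get_nearby_restaurants", '{"location": "Fort Mason", "limit": 2}'))
# prints {"result": ["Restaurant A", "Restaurant B"]}
```

Keeping the registry separate from the schema means the model never executes anything itself; the application decides which calls are allowed and what data comes back.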

[00:03:17 ] NotebookLM: That's smart. It seems like OpenAI put a lot of thought into making this API easy for developers to use. But, while we're on OpenAI, you know, besides their tech, there's been some news about, like, internal changes, too.

[00:03:30 ] NotebookLM: Didn't they say they're moving away from being a nonprofit?

[00:03:32 ] NotebookLM 2: They did. And it's got everyone talking. It's a major shift. And it's only natural for people to wonder how that'll change things for OpenAI in the future. I mean, there are definitely some valid questions about this move to for-profit. Like, will they have more money for research now?

[00:03:46 ] NotebookLM 2: Probably. But will they, you know, care as much about making sure AI benefits everyone?

[00:03:51 ] NotebookLM: Yeah, that's the big question, especially with all the, like, the leadership changes happening at OpenAI too, right? I read that their Chief Research Officer left, and their VP of Research, and even their CTO.

[00:04:03 ] NotebookLM 2: It's true. A lot of people are connecting those departures with the changes in OpenAI's structure.

[00:04:08 ] NotebookLM: And I guess it makes you wonder what's going on behind the scenes. But they are still putting out new stuff. Like this whole fine tuning thing really caught my eye.

[00:04:17 ] NotebookLM 2: Right, fine-tuning. It's essentially taking a pre-trained AI model and, like, customizing it.

[00:04:23 ] NotebookLM: So instead of a general AI, you get one that's tailored for a specific job.

[00:04:27 ] NotebookLM 2: Exactly. And that opens up so many possibilities, especially for businesses. Imagine you could train an AI on your company's data, you know, like how you communicate your brand guidelines.

[00:04:37 ] NotebookLM: So it's like having an AI that's specifically trained for your company?

[00:04:41 ] NotebookLM 2: That's the idea.
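As a rough sketch of what "training an AI on your company's data" looks like in practice, the snippet below builds chat-style training examples and serializes them as JSONL (one JSON object per line). It assumes the `messages` format with `system`, `user`, and `assistant` roles described in OpenAI's chat fine-tuning documentation; the "Acme" brand voice, the helper names, and the example dialogues are hypothetical, and a real fine-tuning job needs far more than two examples.

```python
import json

# Hypothetical brand voice; real data would come from your own company.
SYSTEM_PROMPT = "You are Acme's support assistant. Be concise and friendly."

# Hypothetical (user message, ideal assistant reply) pairs.
examples = [
    ("Where is my order?", "Happy to help! Could you share your order number?"),
    ("Can I change my delivery address?", "Sure! Reply with the new address and your order number."),
]

def to_chat_example(user_msg: str, assistant_msg: str, system: str = SYSTEM_PROMPT) -> dict:
    """Wrap one exchange in the chat-style `messages` structure (assumed format)."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user_msg},
            {"role": "assistant", "content": assistant_msg},
        ]
    }

def to_jsonl(rows: list[dict]) -> str:
    """Serialize one JSON object per line (JSONL)."""
    return "".join(json.dumps(row) + "\n" for row in rows)

rows = [to_chat_example(u, a) for u, a in examples]
jsonl_text = to_jsonl(rows)

# To produce an upload file:
# with open("train.jsonl", "w", encoding="utf-8") as f:
#     f.write(jsonl_text)
```

Repeating the same system prompt in every example is a common way to anchor the tuned model to one persona; the assistant replies are what the model learns to imitate.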

[00:04:41 ] NotebookLM: And they're doing it with images now, too, right?

[00:04:44 ] NotebookLM: Fine tuning with vision is what they called it.

[00:04:46 ] NotebookLM 2: It's pretty incredible what they're doing with that, especially in fields like medicine.

[00:04:50 ] NotebookLM: Like using AI to help doctors make diagnoses.

[00:04:52 ] NotebookLM 2: Exactly. An AI could be trained on thousands of medical images, right? And then it could potentially spot things that even a trained doctor might miss.

[00:05:03 ] NotebookLM: That's kind of scary, to be honest. What if it gets it wrong?
