Language Agents: From Reasoning to Acting

Description

OpenAI DevDay is almost here! Per tradition, we are hosting a DevDay pregame event for everyone coming to town! Join us with demos and gossip!

Also sign up for related events across San Francisco: the AI DevTools Night, the xAI open house, the Replicate art show, the DevDay Watch Party (for non-attendees), Hack Night with OpenAI at Cloudflare. For everyone else, join the Latent Space Discord for our online watch party and find fellow AI Engineers in your city.

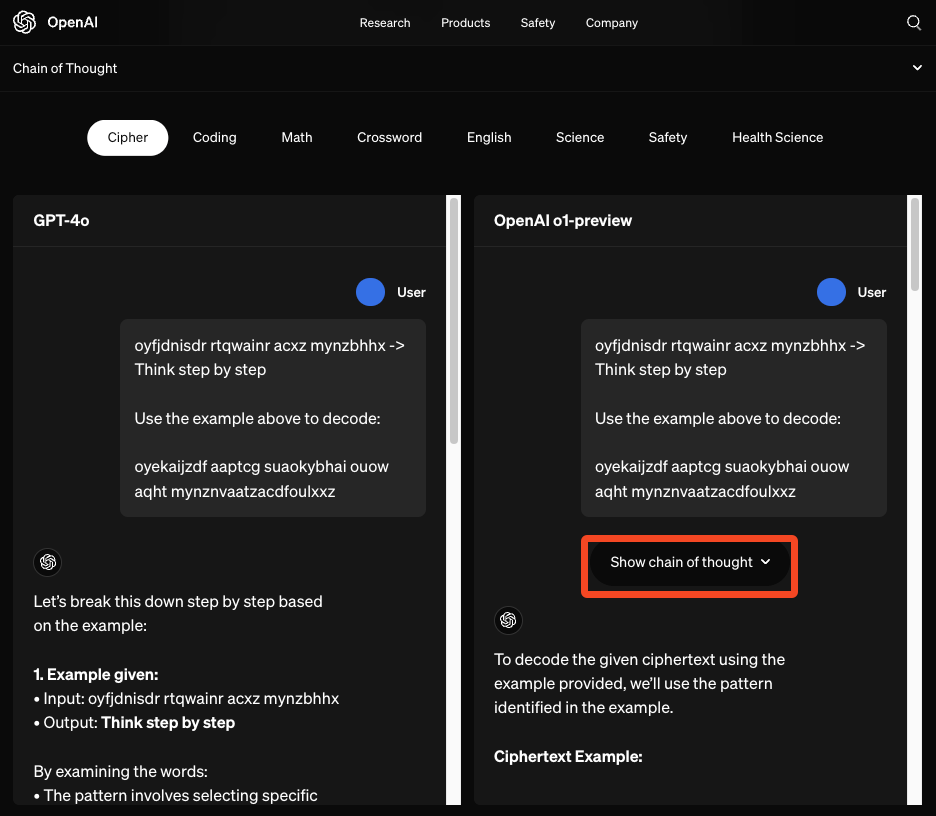

OpenAI’s recent o1 release (and Reflection 70b debacle) has reignited broad interest in agentic general reasoning and tree search methods.

While we have covered some of the self-taught reasoning literature on the Latent Space Paper Club, it is notable that Eric Zelikman ended up at xAI, whereas OpenAI’s hiring of Noam Brown and now Shunyu suggests more interest in tool-using chain of thought/tree of thought/generator-verifier architectures for Level 3 Agents.

We were more than delighted to learn that Shunyu is a fellow Latent Space enjoyer, and invited him back (after his first appearance on our NeurIPS 2023 pod) for a look through his academic career with Harrison Chase (one year after his first LS show).

ReAct: Synergizing Reasoning and Acting in Language Models

Following the seminal Chain of Thought papers from Wei et al. and Kojima et al., and reflecting on lessons from building the WebShop human ecommerce trajectory benchmark, Shunyu’s first big hit was the ReAct paper. It showed that using LLMs to “generate both reasoning traces and task-specific actions in an interleaved manner” achieved markedly better performance (less hallucination and error propagation, higher ALFWorld/WebShop benchmark success) than CoT alone.
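To make the interleaving concrete, here is a minimal sketch of a ReAct-style loop. It is not the paper's code: `scripted_model` is a hypothetical stand-in for an LLM call, and `lookup` is a toy tool invented for illustration. The point is only the shape of the loop, where each step appends a thought, an action, and the resulting observation to a shared transcript.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical toy tool; a real agent would call search, a shop API, etc.
def lookup(query: str) -> str:
    facts = {"capital of France": "Paris"}
    return facts.get(query, "no result")

TOOLS: Dict[str, Callable[[str], str]] = {"lookup": lookup}

Step = Tuple[str, str, str]  # (thought, action name, action input)

def scripted_model(transcript: List[str]) -> Step:
    """Stand-in for an LLM: decides the next step from the transcript so far."""
    prefix = "Observation: "
    observations = [line for line in transcript if line.startswith(prefix)]
    if not observations:
        return ("I should look this up.", "lookup", "capital of France")
    answer = observations[-1][len(prefix):]
    return ("The observation answers the question.", "finish", answer)

def react_loop(
    question: str,
    model: Callable[[List[str]], Step],
    max_steps: int = 5,
) -> Tuple[Optional[str], List[str]]:
    """Alternate reasoning and acting until the model emits a finish action."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, arg = model(transcript)
        transcript.append(f"Thought: {thought}")
        transcript.append(f"Action: {action}[{arg}]")
        if action == "finish":
            return arg, transcript
        # Feed the tool result back so the next thought can condition on it.
        transcript.append(f"Observation: {TOOLS[action](arg)}")
    return None, transcript  # step budget exhausted without an answer

answer, transcript = react_loop("What is the capital of France?", scripted_model)
print("\n".join(transcript))
```

Swapping `scripted_model` for a real LLM call that parses thought and action out of generated text is where most of the practical work lies; the loop itself stays about this small.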

In even better news, ReAct scales fabulously with finetuning:

As a member of the elite Princeton NLP group, Shunyu was also a coauthor of the Reflexion paper, which we discuss in this pod.

Tree of Thoughts

Shunyu’s next major improvement on the CoT literature was Tree of Thoughts:

Language models are increasingly being deployed for general problem solving across a wide range of tasks, but are still confined to token-level, left-to-right decision-making processes during inference. This means they can fall short in tasks that require exploration, strategic lookahead, or where initial decisions play a pivotal role…

ToT allows LMs to perform deliberate decision making by considering multiple different reasoning paths and self-evaluating choices to decide the next course of action, as well as looking ahead or backtracking when necessary to make global choices.
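The search described above can be sketched as a small breadth-first search with pruning. This is an illustrative toy, not the paper's implementation: `propose` and `evaluate` are hypothetical stand-ins for the LM generating candidate thoughts and scoring them, and the task (pick steps that sum to a target) is invented to keep the example runnable.

```python
from typing import List, Tuple

State = Tuple[int, ...]  # a partial solution: the steps chosen so far

def propose(state: State) -> List[State]:
    """Stand-in for an LM thought generator: extend the partial solution."""
    return [state + (step,) for step in (1, 3, 4)]

def evaluate(state: State, target: int) -> float:
    """Stand-in for LM self-evaluation: higher means more promising."""
    total = sum(state)
    if total > target:
        return float("-inf")  # overshoot can never be repaired
    return -float(target - total)

def tree_of_thoughts_bfs(target: int, depth: int, beam_width: int) -> State:
    """Keep the `beam_width` best partial solutions at every level."""
    frontier: List[State] = [()]
    for _ in range(depth):
        candidates = [child for state in frontier for child in propose(state)]
        candidates.sort(key=lambda s: evaluate(s, target), reverse=True)
        frontier = candidates[:beam_width]  # prune unpromising branches
    return frontier[0]

# One path (greedy, left-to-right) commits to 4 first and gets stuck at 5.
print(tree_of_thoughts_bfs(target=6, depth=2, beam_width=1))  # (4, 1)
# Keeping two paths alive lets the search recover and reach 6 exactly.
print(tree_of_thoughts_bfs(target=6, depth=2, beam_width=2))  # (3, 3)
```

With a beam of one, the locally best first step turns out to be a dead end, which is the "initial decisions play a pivotal role" failure mode in miniature. Keeping a second candidate alive is enough to recover here.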

The beauty of ToT is that it doesn’t require pretraining with exotic methods like backspace tokens or other MCTS architectures. You can listen to Shunyu explain ToT in his own words on our NeurIPS pod, or watch the ineffable Yannic Kilcher’s walkthrough:

Other Work

We don’t have the space to summarize the rest of Shunyu’s work; you can listen to our pod with him now. We also recommend the CoALA paper and his initial hit webinar with Harrison, today’s guest cohost:

as well as Shunyu’s PhD Defense Lecture:

as well as Shunyu’s latest lecture covering a Brief History of LLM Agents:

As usual, we are live on YouTube!

Show Notes

* LangChain, LangSmith, LangGraph

* WebShop

* Related Episodes

* Our Thomas Scialom (Meta) episode

* Shunyu on our NeurIPS 2023 Best Papers episode

* Harrison on our LangChain episode

* Mentions

* Sierra

* Voyager

* Tavily

* SERP API

* Exa

Timestamps

* [00:00:00 ] Opening Song by Suno

* [00:03:00 ] Introductions

* [00:06:16 ] The ReAct paper

* [00:12:09 ] Early applications of ReAct in LangChain

* [00:17:15 ] Discussion of the Reflexion paper

* [00:22:35 ] Tree of Thoughts paper and search algorithms in language models

* [00:27:21 ] SWE-Agent and SWE-Bench for coding benchmarks

* [00:39:21 ] CoALA: Cognitive Architectures for Language Agents

* [00:45:24 ] Agent-Computer Interfaces (ACI) and tool design for agents

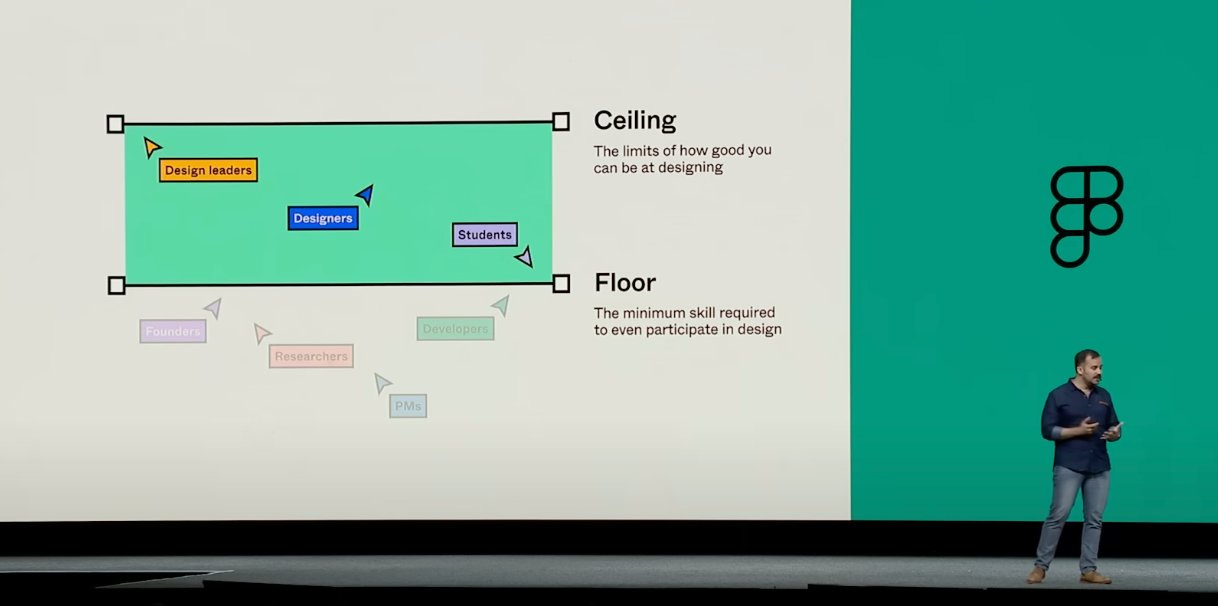

* [00:49:24 ] Designing frameworks for agents vs humans

* [00:53:52 ] UX design for AI applications and agents

* [00:59:53 ] Data and model improvements for agent capabilities

* [01:19:10 ] TauBench

* [01:23:09 ] Promising areas for AI

Transcript

Alessio [00:00:01 ]: Hey, everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO-in-Residence at Decibel Partners, and I'm joined by my co-host Swyx, founder of Smol AI.

Swyx [00:00:12 ]: Hey, and today we have a super special episode. I actually always wanted to take like a selfie and go like, you know, POV, you're about to revolutionize the world of agents because we have two of the most awesome hiring agents in the house. So first, we're going to welcome back Harrison Chase. Welcome. Excited to be here. What's new with you recently in sort of like the 10, 20 second recap?

Harrison [00:00:34 ]: LangChain, LangSmith, LangGraph, pushing on all of them. Lots of cool stuff related to a lot of the stuff that we're going to talk about today, probably.

Swyx [00:00:42 ]: Yeah.

Alessio [00:00:43 ]: We'll mention it in there. And the Celtics won the title.

Swyx [00:00:45 ]: And the Celtics won the title. You got that going on for you. I don't know. Is that like floorball? Handball? Baseball? Basketball.

Alessio [00:00:52 ]: Basketball, basketball.

Harrison [00:00:53 ]: Patriots aren't looking good though, so that's...

Swyx [00:00:56 ]: And then Shunyu, you've also been on the pod, but only in like a sort of oral paper presentation capacity. But welcome officially to the Latent Space pod.

Shunyu [00:01:03 ]: Yeah, I've been a huge fan. So thanks for the invitation. Thanks.

Swyx [00:01:07 ]: Well, it's an honor to have you on. You're one of like, you're maybe the first PhD thesis def
