The Ultimate Guide to Prompting

Description

Noah Hein from Latent Space University is finally launching with a free lightning course this Sunday for those new to AI Engineering. Tell a friend!

Did you know there are >1,600 papers on arXiv just about prompting? Between shots, trees, chains, self-criticism, planning strategies, and all sorts of other weird names, it’s hard to keep up. Luckily for us, Sander Schulhoff and team read them all and put together The Prompt Report as the ultimate prompt engineering reference, which we’ll break down step-by-step in today’s episode.

In 2022 swyx wrote “Why “Prompt Engineering” and “Generative AI” are overhyped”; the TLDR being that if you’re relying on prompts alone to build a successful product, you’re ngmi. Prompt engineering has since moved from being a stand-alone job to a core skill for AI Engineers.

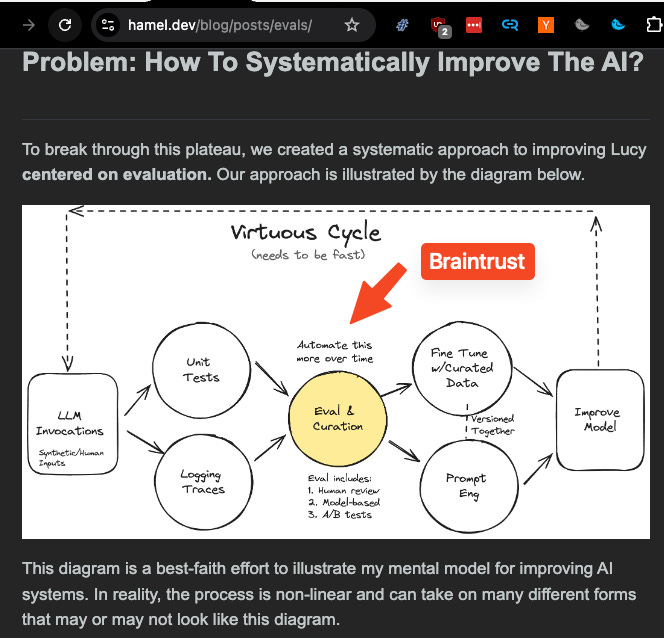

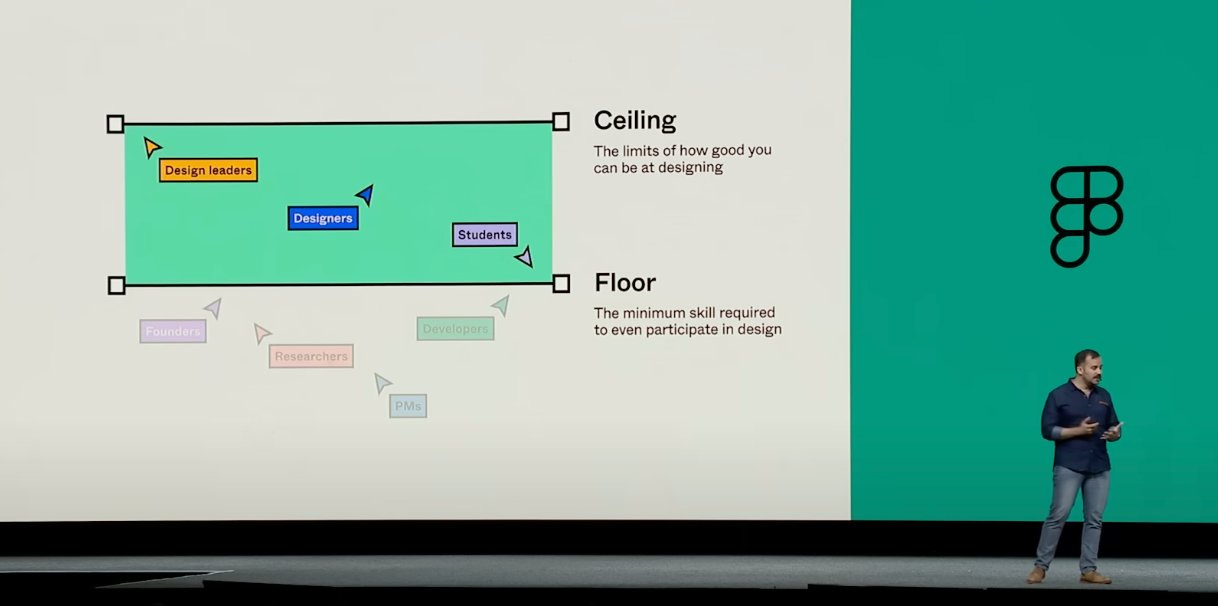

We won’t repeat everything that is written in the paper, but this diagram encapsulates the state of prompting today: confusing. There are many similar terms, esoteric approaches with doubtful impact on results, and lots of people who are trying to build full papers around a single prompt just to get more publications out.

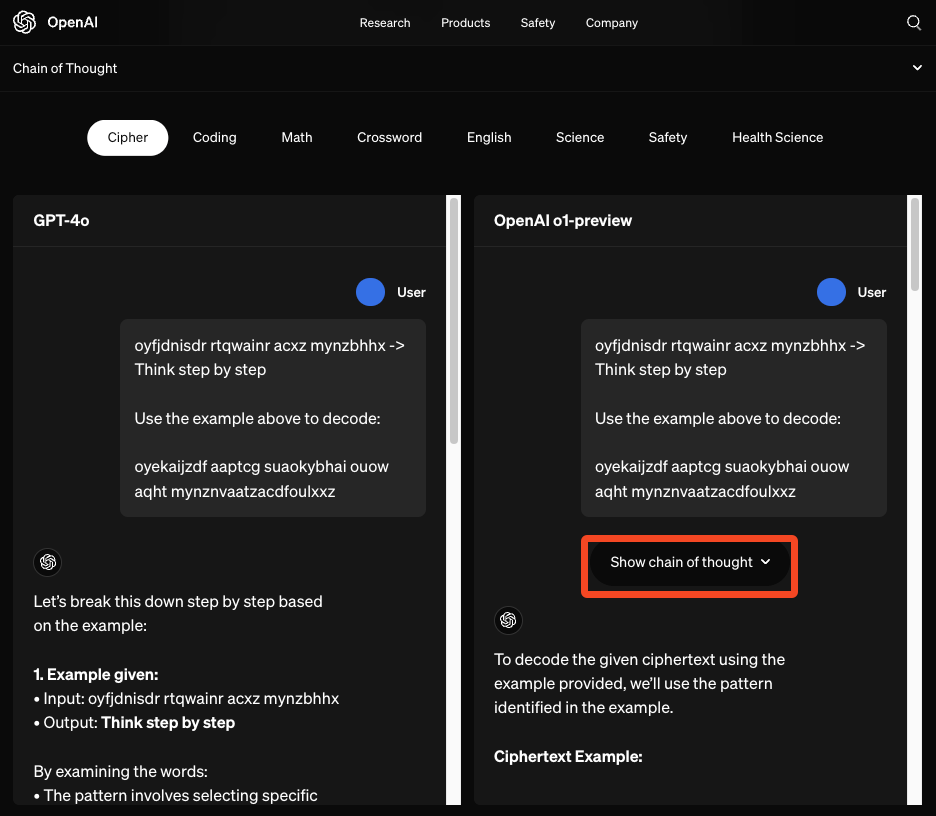

Luckily, some of the best prompting techniques are being tuned back into the models themselves, as we’ve seen with o1 and Chain-of-Thought (see our OpenAI episode). Similarly, OpenAI recently announced 100% guaranteed JSON schema adherence, and Anthropic, Cohere, and Gemini all have JSON Mode (not sure if 100% guaranteed yet). No more “return JSON or my grandma is going to die” required.
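
To make the JSON point concrete, here is a minimal sketch of the defensive parsing you typically end up writing when the model does not guarantee schema adherence. It uses only the Python standard library. The function name `parse_model_json` and the example keys are made up for illustration and are not taken from any provider's SDK.

```python
import json


def parse_model_json(raw: str, required: dict) -> dict:
    """Parse a model reply as JSON and check required keys and their types.

    `required` maps each expected key to the Python type its value should have.
    Raises ValueError if the reply is not a JSON object or a key is missing
    or has the wrong type.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc

    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")

    for key, expected_type in required.items():
        if key not in data:
            raise ValueError(f"missing key: {key}")
        if not isinstance(data[key], expected_type):
            raise ValueError(f"key {key!r} should be {expected_type.__name__}")

    return data


# Hypothetical reply and schema, for illustration only.
reply = '{"technique": "few-shot", "rating": 4}'
parsed = parse_model_json(reply, {"technique": str, "rating": int})
print(parsed["technique"])
```

With guaranteed schema adherence, a check like this becomes a safety net rather than something you wrap in a retry loop.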

The next debate is human-crafted prompts vs automated approaches using frameworks like DSPy, which Sander recommended:

“I spent 20 hours prompt engineering for a task and DSPy beat me in 10 minutes.”

It’s much more complex than simply writing a prompt (and I’m not sure how many people usually spend >20 hours prompt engineering one task), but if you’re hitting a roadblock it might be worth checking out.

Prompt Injection and Jailbreaks

Sander and team also worked on HackAPrompt, a paper that was the outcome of an online challenge on prompt hacking techniques. They similarly created a taxonomy of prompt attacks, which is very handy if you’re building products with user-facing LLM interfaces that you’d like to test.

In this episode we basically break down every category and highlight the overrated and underrated techniques in each of them. If you haven’t spent time following the prompting meta, this is a great episode to catch up!

Full Video Episode

Like and subscribe on YouTube!

Timestamps

* [00:00:00 ] Introductions - Intro music by Suno AI

* [00:07:32 ] Navigating arXiv for paper evaluation

* [00:12:23 ] Taxonomy of prompting techniques

* [00:15:46 ] Zero-shot prompting and role prompting

* [00:21:35 ] Few-shot prompting design advice

* [00:28:55 ] Chain of thought and thought generation techniques

* [00:34:41 ] Decomposition techniques in prompting

* [00:37:40 ] Ensembling techniques in prompting

* [00:44:49 ] Automatic prompt engineering and DSPy

* [00:49:13 ] Prompt Injection vs Jailbreaking

* [00:57:08 ] Multimodal prompting (audio, video)

* [00:59:46 ] Structured output prompting

* [01:04:23 ] Upcoming Hack-a-Prompt 2.0 project

Show Notes

* David Ha

Transcript

Alessio [00:00:00 ]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO-in-Residence at Decibel Partners, and I'm joined by my co-host Swyx, founder of Smol AI.

Swyx [00:00:13 ]: Hey, and today we're in the remote studio with Sander Schulhoff, author of the Prompt Report.

Sander [00:00:18 ]: Welcome. Thank you. Very excited to be here.

Swyx [00:00:21 ]: Sander, I think I first chatted with you like over a year ago. What's your brief history? I went onto your website, it looks like you worked on Diplomacy, which is really interesting because we've talked with Noam Brown a couple of times, and that obviously has a really interesting story in terms of prompting and agents. What's your journey into AI?

Sander [00:00:40 ]: Yeah, I'd say it started in high school. I took my first Java class and just saw a YouTube video about something AI and started getting into it, reading. Deep learning, neural networks, all came soon thereafter. And then going into college, I got into Maryland and I emailed just like half the computer science department at random. I was like, hey, I want to do research on deep reinforcement learning because I've been experimenting with that a good bit. And over that summer, I had read the Intro to RL book and Deep Reinforcement Learning Hands-On, so I was very excited about what deep RL could do. And a couple of people got back to me and one of them was Jordan Boyd-Graber, Professor Boyd-Graber, and he was working on Diplomacy. And he said to me, this looks like it was more of a natural language processing project at the time, but it's a game, so it very easily could move more into the RL realm. And I ended up working with one of his students, Denis Peskov, who's now a postdoc at Princeton. And that was really my intro to AI, NLP, deep RL research.

And so from there, I worked on Diplomacy for a couple of years, mostly building infrastructure for data collection and machine learning, but I always wanted to be doing it myself. So I had a number of side projects and I ended up working on the MineRL competition, Minecraft reinforcement learning, which some people also pronounce “mineral.” And that ended up being a really cool opportunity because I think like sophomore year, I knew I wanted to do some project in deep RL and I really liked Minecraft. And so I was like, let me combine these. And I was searching for some Minecraft Python library to control agents and found MineRL. And I was trying to find documentation for how to build a custom environment and do all sorts of stuff. I asked in their Discord how to do this and they're super responsive, very nice. And they're like, oh, you know, we don't have docs on this, but, you know, you can look around. And so I read through the whole code base and figured it out and wrote a PR and added the docs that I didn't have before. And then later I ended up joining their team for about a year. And so they maintain the library, but also run a yearly competition. That was my first foray into competitions.

And I was still working on Diplomacy. At some point I was working on this translation task between DAIDE, which is a Diplomacy-specific bot language, and English. And I started using GPT-3, prompting it to do the translation. And that was, I think, my first intro to prompting. And I just started doing a bunch of reading about prompting. And I had an English class project where we had to write a guide on something, and that ended up being Learn Prompting. So I figured, all right, well, I'm learning about prompting anyways. You know, Chain of Thought was out at this point. There were a couple of blog posts floating around, but there was no website you could go to just sort of read everything about prompting. So I made that. And it ended up getting super popular. Now continuing with it, supporting the project now after college. And then the other very interesting things, of course, are the two papers I wrote. And that is the Prompt Report and HackAPrompt. So I saw Simon and Riley's original tweets about prompt injection go across my feed. And I put that information into the Learn Prompting website. And I knew, because I had some previous competition running experience
