Production AI Engineering starts with Evals — with Ankur Goyal of Braintrust

Description

We are in 🗽 NYC this Monday! Join the AI Eng NYC meetup, bring demos and vibes!

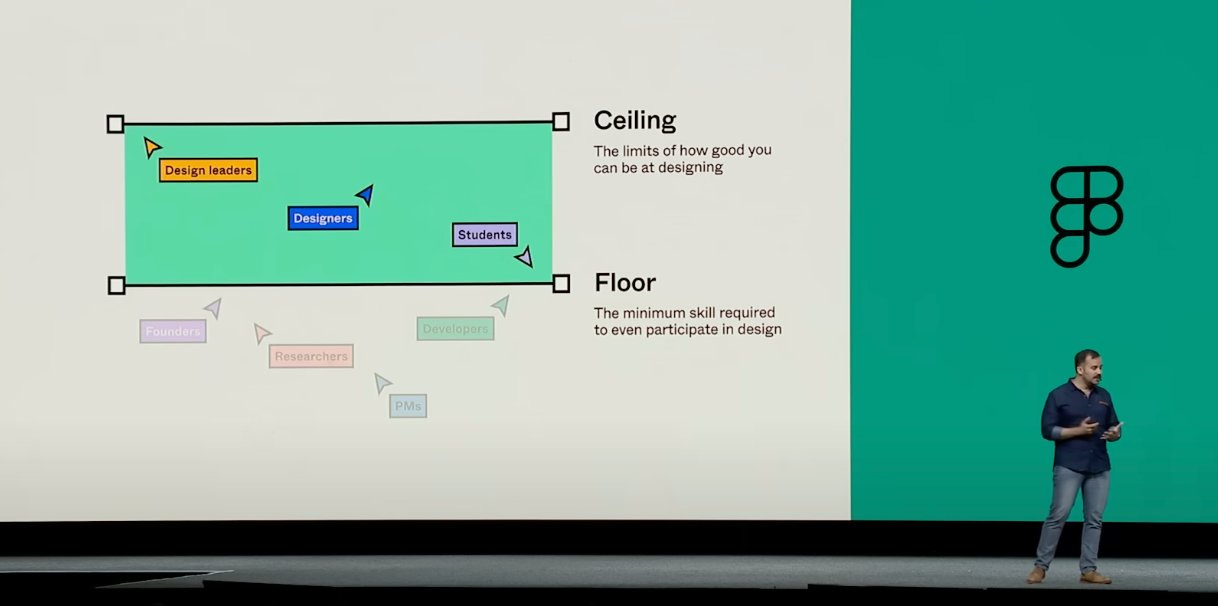

It is a bit of a meme that the first thing developer tooling founders think to build in AI is all the non-AI operational stuff outside the AI. There are well over 60 funded LLM Ops startups, all hoping to solve the new observability, cost tracking, security, and reliability problems that come with putting LLMs in production, not to mention new LLM-oriented products from incumbent ops/o11y players like Datadog and Weights & Biases.

Two years into the current hype cycle, the early winners have tended to be people with practical/research AI backgrounds rather than MLOps heavyweights or SWE tourists:

* LangSmith: We covered how Harrison Chase worked on AI at Robust Intelligence and Kensho, the alma maters of many great AI founders

* Humanloop: We covered how Raza Habib worked at Google AI during his PhD

* Braintrust: Today’s guest Ankur Goyal founded Impira pre-Transformers and was acquihired to run Figma AI before realizing how to solve the Ops problem.

There have been many VC think pieces and market maps describing what people thought were the essential pieces of the AI Engineering stack, but what was true for 2022-2023 has aged poorly. The basic insight that Ankur had is the same thesis that Hamel Husain is pushing in his World’s Fair talk and podcast with Raza and swyx:

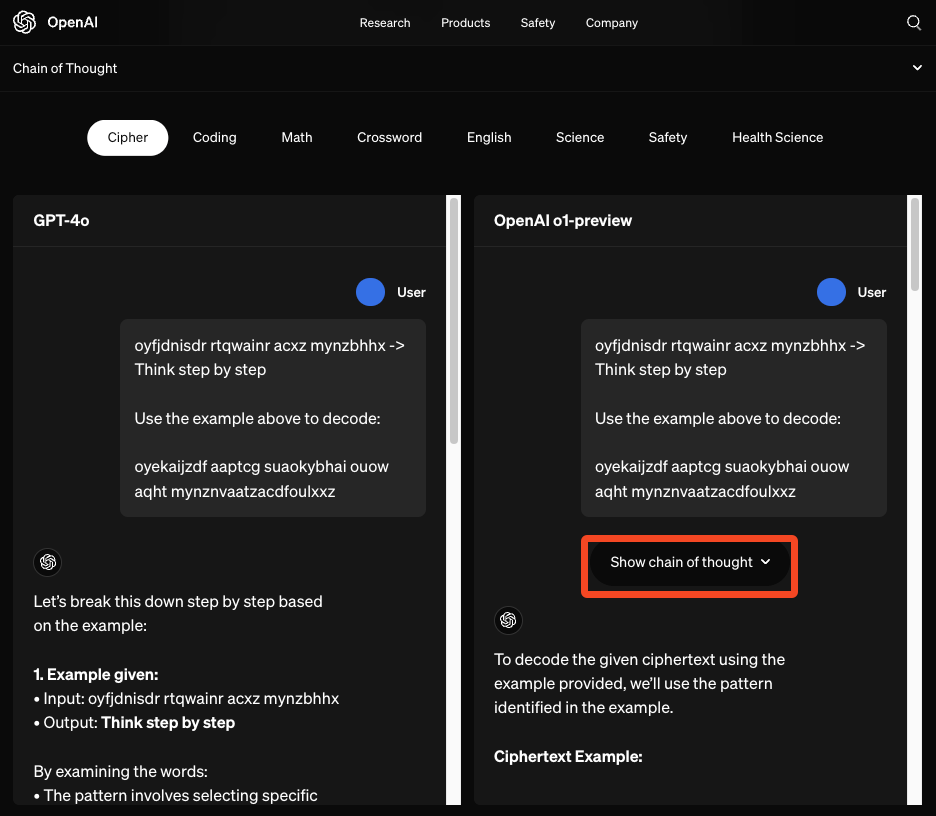

Evals are the centerpiece of systematic AI Engineering.

REALLY believing in this is harder than it looks with the benefit of hindsight. It’s not like people didn’t know evals were important. Basically every LLM Ops feature list has them. It’s an obvious next step AFTER managing your prompts and logging your LLM calls. In fact, up until we met Braintrust, we were working on an expanded version of the Impossible Triangle Theory of the LLM Ops War that we first articulated in the Humanloop writeup:

The single biggest criticism of the Rise of the AI Engineer piece is that we neglected to split out the role of product evals (as opposed to model evals) in the now infamous “API line” chart:

With hindsight, we were very focused on the differentiating 0 to 1 phase that AI Engineers can bring to an existing team of ML engineers. As swyx says in the Day 2 keynote of AI Engineer, 2024 added a whole new set of concerns as AI Engineering grew up:

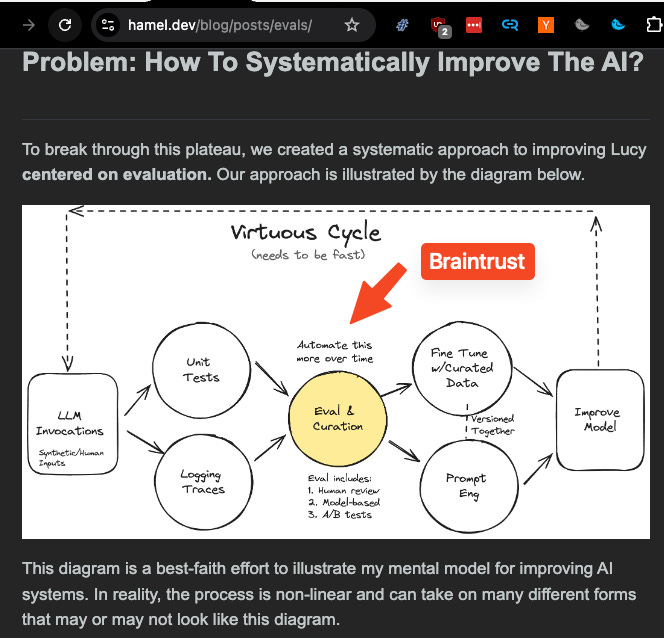

A closer examination of Hamel’s product-oriented virtuous cycle and this infra-oriented SDLC would have eventually revealed that evals, even more than logging, are the first point where teams start to get really serious about shipping to production. That makes them a great place to enter the market, which is exactly what Braintrust did.

Also notice what’s NOT on this chart: shifting to shadow open source models, and fine-tuning them. Per Ankur, fine-tuning is not a viable standalone product:

“The thing I would say is not debatable is whether or not fine-tuning is a business outcome or not. So let's think about the other components of your triangle. Ops/observability, that is a business… Frameworks, evals, databases [are a business, but] Fine-tuning is a very compelling method that achieves an outcome. The outcome is not fine-tuning, it is can I automatically optimize my use case to perform better if I throw data at the problem? And fine-tuning is one of multiple ways to achieve that.”

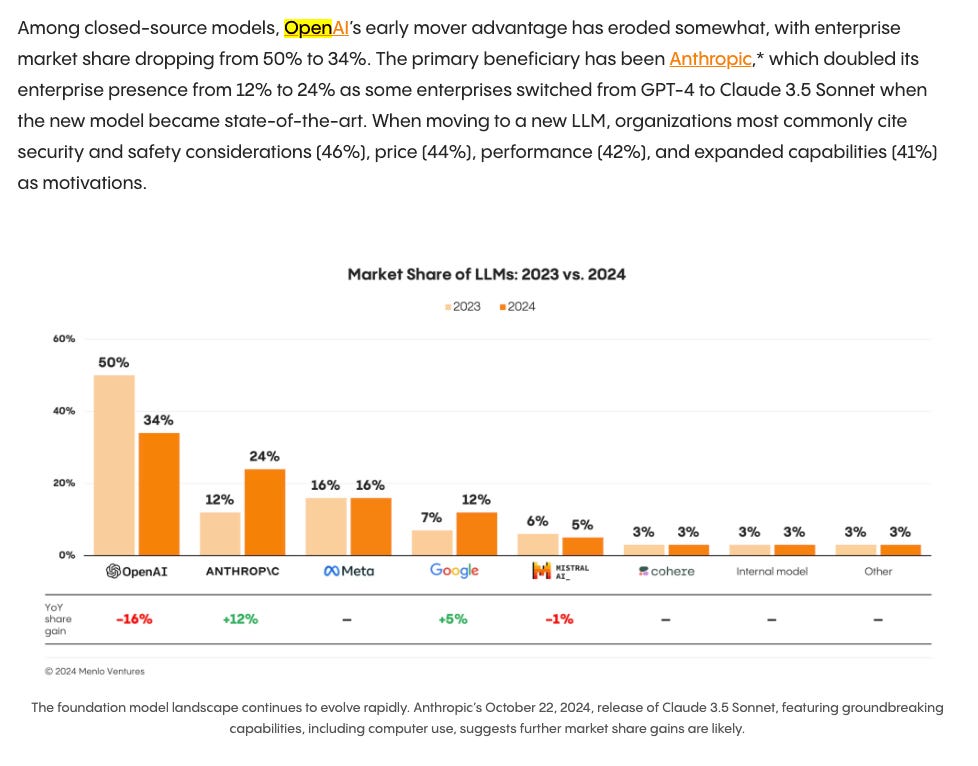
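To make the product-eval idea above a bit more concrete, here is a minimal sketch of the loop that any eval setup reduces to: a dataset of cases, a task under test, and a scorer. This is our own illustration, not Braintrust's API. The names `EvalCase`, `run_eval`, `exact_match`, and `baseline_task` are hypothetical, and `baseline_task` is a hardcoded stand-in for a real LLM call.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    """One row of an eval dataset: an input and the answer we expect."""
    input: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Score 1.0 when the output matches the expected answer, ignoring case and outer whitespace."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_eval(
    task: Callable[[str], str],
    cases: List[EvalCase],
    scorer: Callable[[str, str], float],
) -> float:
    """Run the task on every case and return the mean score (0.0 for an empty dataset)."""
    scores = [scorer(task(case.input), case.expected) for case in cases]
    return sum(scores) / len(scores) if scores else 0.0


def baseline_task(question: str) -> str:
    """Hypothetical stand-in for an LLM call; swap in a real model client here."""
    canned_answers = {"capital of france?": "Paris"}
    return canned_answers.get(question.lower(), "unknown")


CASES = [
    EvalCase(input="Capital of France?", expected="paris"),
    EvalCase(input="Capital of Japan?", expected="Tokyo"),
]

if __name__ == "__main__":
    # One of the two cases is answered correctly, so the mean score is 0.5.
    print(run_eval(baseline_task, CASES, exact_match))
```

The point of the shape is that the score stays fixed as the yardstick while the task changes: a new prompt, a different model, or a fine-tune are all just different `task` functions measured against the same cases.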

OpenAI vs Open AI Market Share

We last speculated about the market shifts in the End of OpenAI Hegemony and the Winds of AI Winter, and Ankur’s perspective is super valuable given his customer list:

Some surprises based on what he is seeing:

* Prior to Claude 3, OpenAI had near 100% market share. This tracks with what Harrison told us last year.

* Claude 3.5 Sonnet and also notably Haiku have made serious dents

* Open source model adoption is <5% and DECLINING. Contrary to Eugene Cheah’s ideal marketing pitch, virtually none of Braintrust’s customers are really fine-tuning open source models for cost, control, or privacy. This is partially caused by…

* Open source model hosts, aka Inference providers, aren’t as mature as OpenAI’s API platform. Kudos to Michelle’s team as if they needed any more praise!

* Adoption of Big Lab models via their Big Cloud Partners, aka Claude through AWS, or OpenAI through Azure, is low. Surprising! It seems that there are issues with accessing the latest models via the Cloud partners.

swyx [01:36:51 ]: What % of your workload is open source?

Ankur Goyal [01:36:55 ]: Because of how we're deployed, I don't have like an exact number for you. Among customers running in production, it's less than 5%.

Full Video Episode

Check out the Braintrust demo on YouTube! (and like and subscribe etc)

Show Notes

* Ankur’s companies

  * MemSQL/SingleStore → now Nikita Shamgunov of Neon

  * Impira

* Papers mentioned

  * AlexNet

  * Ankur and Olmo’s talk at AIEWF

* People

  * Elad Gil

* Prior episodes

  * Humanloop episode

Timestamps

* [00:00:00 ] Introduction and background on Ankur's career

* [00:00:49 ] SingleStore and HTAP databases

* [00:08:19 ] Founding Impira and lessons learned

* [00:13:33 ] Unstructured vs Structured Data

* [00:25:41 ] Overview of Braintrust and its features

* [00:40:42 ] Industry observations and trends in AI tooling

* [00:58:37 ] Workload types and AI use cases in production

* [01:06:37 ] World's Fair AI conference discussion

* [01:11:09 ] AI infrastructure market landscape

* [01:24:59 ] OpenAI vs Anthropic vs other model providers

* [01:38:11 ] GPU inference market discussion

* [01:45:39 ] Hypothetical AI projects outside of Braintrust

* [01:50:25 ] Potentially joining OpenAI

* [01:52:37 ] Insights on effective networking and relationships in tech

Transcript

swyx [00:00:00 ]: Ankur Goyal, welcome to Latent Space.

Ankur Goyal [00:00:06 ]: Thanks for having me.

swyx [00:00:07 ]: Thanks for coming all the way over to our studio.

Ankur Goyal [00:00:10 ]: It was a long hike.

swyx [00:00:11 ]: A long trek. Yeah. You got T-boned by traffic. Yeah. You were the first VP
