Academics Use Imaginary Data in Their Research

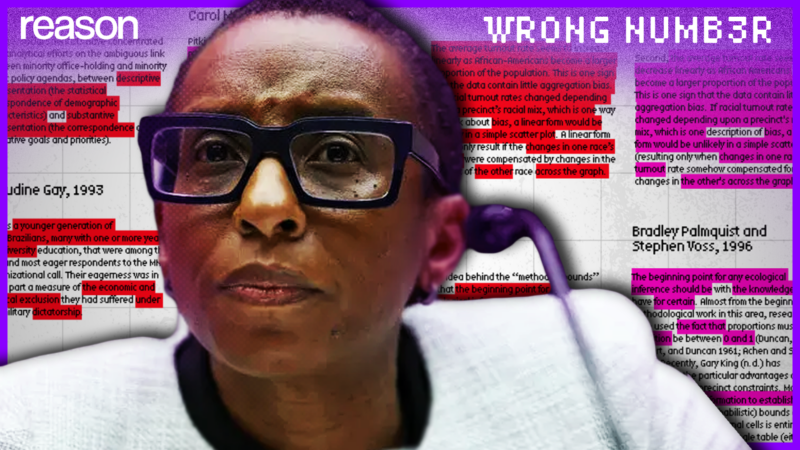

After surviving a disastrous congressional hearing, Claudine Gay was forced to resign as the president of Harvard for repeatedly copying and pasting language used by other scholars and passing it off as her own. She's hardly alone among elite academics, and plagiarism has become a roiling scandal in academia.

There's another common practice among professional researchers that should be generating even more outrage: making up data. I'm not talking about explicit fraud, which also happens way too often, but about openly inserting fictional data into a supposedly objective analysis.

Instead of doing the hard work of gathering data to test hypotheses, researchers take the easy path of generating numbers to support their preconceptions or to claim statistical significance. They cloak this practice in fancy-sounding words like "imputation," "ecological inference," "contextualization," and "synthetic control."

They're actually just making stuff up.

Claudine Gay was accused of plagiarizing sections of her Ph.D. thesis, for which she was awarded Harvard's Toppan Prize for the best dissertation in political science. She has since requested three corrections. More outrageous is that she wrote a paper on white voter participation without having any data on white voter participation.

In an article in the American Political Science Review that was based on her dissertation, Gay set out to investigate "the link between black congressional representation and political engagement," finding that "the election of blacks to Congress negatively affects white political involvement and only rarely increases political engagement among African Americans."

To arrive at that finding, you might assume that Gay had done the hard work of measuring white and black voting patterns in the districts she was studying. You would assume wrong.

Instead, Gay used regression analysis to estimate white voting patterns. She analyzed 10 districts with black representatives and observed that those with more voting-age whites had lower turnout at the polls than her model predicted. So she concluded that whites must be the ones not voting.

She committed what in statistics is known as the "ecological fallacy": you see two things occurring in the same place and assume that one explains the other at the level of individuals. For example, you notice a lot of people dying in hospitals, so you assume hospitals kill people. The classic example is that Jim Crow laws were strictest in states with the largest black populations. Ecological inference leads to the false conclusion that blacks supported Jim Crow.

Gay's theory that a black congressional representative depresses white voter turnout could be true, but there are other plausible explanations for what she observed. The point is that we don't know. The way to investigate white voter turnout is to measure white voter turnout.
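To see why district totals alone can't settle the question, here is a minimal sketch in Python. The numbers are invented purely for illustration; they are not from Gay's paper or from any real election. It builds two hypothetical worlds in which whites vote at different rates, yet every district reports exactly the same overall turnout.

```python
# Toy illustration with invented numbers; not data from any study.
white_share = [0.2, 0.4, 0.6, 0.8]  # hypothetical share of voting-age whites per district


def aggregate_turnout(w, white_rate, black_rate):
    """Overall district turnout given each group's share and turnout rate."""
    return w * white_rate + (1 - w) * black_rate


# World A: whites vote at 40% and blacks at 60% in every district.
world_a = [aggregate_turnout(w, 0.4, 0.6) for w in white_share]


# World B: whites vote at 50% everywhere, while black turnout
# falls as the white share of the district rises.
def black_rate_b(w):
    return (0.6 - 0.7 * w) / (1 - w)


world_b = [aggregate_turnout(w, 0.5, black_rate_b(w)) for w in white_share]

for w, a, b in zip(white_share, world_a, world_b):
    print(f"white share {w:.0%}: world A turnout {a:.3f}, world B turnout {b:.3f}")
```

Both worlds print the same turnout for every district, even though white turnout is 40 percent in one and 50 percent in the other. A regression on the district totals has no way of telling them apart; only individual-level measurements can.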

Gay is hardly the only culprit. Because she was the president of Harvard, it's worth making an example of her work, but it reflects broad trends in academia. Plagiarism is treated as an academic crime, yet students are taught and encouraged to invent data under the guise of statistical sophistication. Academia values the appearance of truth over actual truth.

You need real data to understand the world. The process of gathering real data also leads to essential insights. Researchers pick up on subtleties that often cause them to shift their hypotheses. Armchair investigators, on the other hand, build neat rows and columns that don't say anything about what's happening outside their windows.

Another technique for generating rather than collecting data is called "imputation," which was used in a paper titled "Green innovations and patents in OECD countries" by economists Almas Heshmati and Mike Shinas. The authors wanted to analyze the number of "green" patents issued by different countries in different years. But the authors only had data for some countries and some years.

"Imputation" means filling in data gaps with educated guesses. It can be defensible if you have a good basis for your guesses and they don't affect your conclusions strongly. For example, you can usually guess gender based on a person's name. But if you're studying the number of green patents, and you don't know that number, imputation isn't an appropriate tool for solving the problem.

The use of imputation allowed them to publish a paper arguing that environmentalist policies lead to innovation, which is likely the conclusion they had hoped for, and to do so with enough statistical significance to pass muster with journal editors.

A graduate student in economics working with the same data as Heshmati and Shinas recounted being "dumbstruck" after reading their paper. The student, who wants to remain anonymous for career reasons, reached out to Heshmati to find out how he and Shinas had filled in the data gaps. The research accountability site Retraction Watch reported that they had used the Excel "autofill" function.

According to an analysis by the economist Gary Smith, altogether there were over 2,000 fictional data points amounting to 13 percent of all the data used in the paper.

The Excel autofill function is a lot of fun and genuinely handy in some situations. When you enter 1, 2, 3, it guesses 4. But it doesn't work when the data—like much of reality—have no simple or predictable pattern.
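Here is a rough sketch of the idea in Python. It assumes autofill behaves like a straight-line (least-squares) extrapolation of the selected cells, which is a simplification rather than a description of Excel's internals. The function name `linear_fill` and the irregular series are made up for illustration.

```python
def linear_fill(known, n_missing):
    """Extend a series by fitting a least-squares line and extrapolating it.

    A stand-in for trend-based autofill; an assumption, not Excel's actual code.
    """
    n = len(known)
    xs = list(range(n))
    mean_x = sum(xs) / n
    mean_y = sum(known) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, known)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    # Predict the next n_missing positions after the known values.
    return [intercept + slope * (n + k) for k in range(n_missing)]


# A series with a simple pattern: the guess is reasonable.
print(linear_fill([1, 2, 3], 1))

# A hypothetical irregular series: the fill still returns
# confident-looking numbers, but nothing in the data supports them.
irregular = [12, 3, 40, 7]
print(linear_fill(irregular, 2))
```

The function never refuses to answer. It returns a tidy number whether or not the underlying series has any trend to extend, which is exactly the problem.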

When you give Excel a list of U.S. presidents, it can't predict the next one. I did give it a try though. Why did Excel think that William Henry Harrison would retake the White House in 1941? Harrison died in office just 31 days after his inauguration, in 1841. Most likely, autofill figured it was only fair that he be allowed to serve out his remaining years. Why did it pick 1941? That's when FDR began his third term, which apparently Excel considered to be illegitimate, so it exhumed Harrison and put him back in the White House.

In a paper published in the Journal of the American Medical Association and written up by CNN and the New York Post, a team of academics claimed to show that age-adjusted death rates soared 106 percent during the pandemic among renters who had received eviction filing notices, compared to 25 percent for a control group.

The authors got 483,408 eviction filings and asked the U.S. Census how many of the tenants had died. The answer was that 0.3 percent had died and 58 percent were still alive. The status of about 42 percent was unknown, usually because the tenant had moved without filing a change of address. If the authors had assumed that all the unknowns were still alive, the COVID-era mortality increase would be 22 percent for tenants who got eviction notices versus 25 percent for those who didn't. This would have been a statistically insignificant finding, wouldn't have been publishable, and certainly wouldn't have gotten any press attention.
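A back-of-the-envelope sketch shows how much weight the unknowns carry. It uses only the rounded figures quoted above and treats the 0.3 percent as a share of all filings, which is my reading. The assumed death fractions among the unknowns are hypothetical, and the result is a crude share, not the age-adjusted rate the authors reported or the method they used.

```python
# Rounded figures quoted in the text; everything else is a labeled assumption.
TOTAL_FILINGS = 483_408
SHARE_KNOWN_DEAD = 0.003  # 0.3 percent reported as having died
SHARE_UNKNOWN = 0.42      # about 42 percent with unknown status


def implied_death_share(unknown_death_fraction):
    """Crude share of all tenants counted as dead if the given
    fraction of the unknowns is assumed to have died."""
    return SHARE_KNOWN_DEAD + SHARE_UNKNOWN * unknown_death_fraction


# Hypothetical assumptions about the tenants the Census couldn't find.
for assumed in (0.0, 0.005, 0.01, 0.02):
    share = implied_death_share(assumed)
    print(
        f"assume {assumed:.1%} of unknowns died -> "
        f"{share:.2%} of tenants, about {round(share * TOTAL_FILINGS):,} people"
    )
```

Because the unknown group is so much larger than the confirmed deaths, assuming that even 1 percent of the unknowns died more than doubles the crude death share. The headline number is driven largely by what you choose to believe about people nobody could find.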

Some of the tenants that the Census couldn't find probably did die, though likely not many, since most dead people end up with death certificates, and people who are dead can't move, so you'd expect most of them to be li