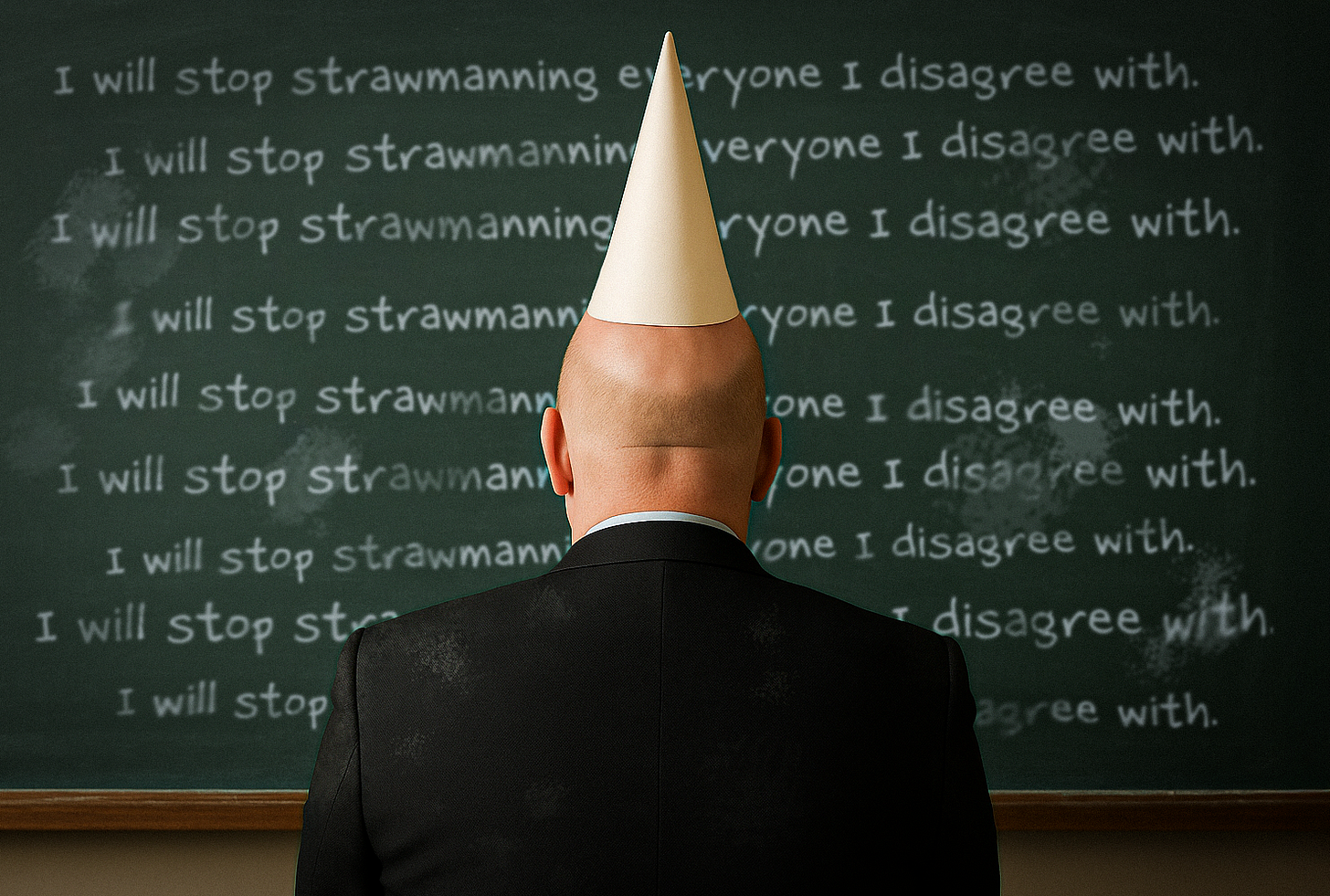

Is Marc Andreessen just flat-out dumb?

Note: My apologies for the screwup that led some of you to show up for a reading club meeting only to find no meeting room and no reading club. By way of (partial) recompense, I’ll host a meeting of the club Saturday, Dec. 6 at 12 pm US Eastern Time. And, though you could be forgiven for eternally doubting that a link I share will lead to where I say it will lead, here is the link for that meeting. We’ll discuss both the reading slated for last week’s meeting and some related stuff that, like that reading, is about AI—see details below. —RW

Washington’s policy toward AI is currently in the hands of accelerationists—people who believe faster technological progress is just about always better, so government regulation is just about always bad. That’s why President Trump, having already shut down the minimal federal AI regulation installed by his predecessor, is now considering an executive order that would punish states that pass their own AI regulations. Trump’s billionaire Silicon Valley backers want this done, so Trump may well do it.

Given the stakes—given the many fronts along which AI will bring abrupt and possibly destabilizing change, and the various dystopian AI futures that various analysts see—it’s worth asking: Do these accelerationists deserve our trust? Are they smart people with sound judgment? Are they intellectually honest?

Let’s take a look.

An excellent candidate for paradigmatic tech accelerationist is Marc Andreessen. He is co-founder of the powerhouse Silicon Valley venture capital firm Andreessen Horowitz, and I suppose he’s a “thought leader”—certainly he loves to express big thoughts publicly, and they tend to get attention. In addition to expressing them on the Andreessen Horowitz podcast (a16z), he periodically posts treatises with titles like “Why AI Will Save the World” and “Techno-Optimist Manifesto.”

In that second essay, Andreessen trotted out such maxims as “everything good is downstream of growth” and “Energy is life” and offered such suggestions as “We should raise everyone to the energy consumption level we have, then increase our energy 1,000x, then raise everyone else’s energy 1,000x as well.” Now, I don’t know about you, but I don’t see a mere 1,000x increase meeting my personal energy needs, so I breathed a sigh of relief when Andreessen went on to add: “We should place intelligence and energy in a positive feedback loop, and drive them both to infinity.”

If there’s one thing all accelerationists agree on (aside from accelerationism) it’s that boosting productivity is a good thing—boosting productivity in the economy as a whole and boosting their own personal productivity. Among Andreessen’s personal productivity boosters, it seems, is authoritatively dismissing concerns about technology without wasting precious seconds coming to understand those concerns in the first place.

Last year in NZN, I noted one example of this: Andreessen sweepingly dismissed past “panics” about the outsourcing of jobs by noting that “by late 2019… the world had more jobs at higher wages than ever in history.” Well, that’s interesting, but since concerns about outsourcing are concerns about jobs moving from one nation to another, data about the total number of jobs in the world doesn’t speak super-directly to the issue at hand. Yet that number was the sum total of the evidence on which Andreessen based what he seemed to think was a devastating riposte.

Two weeks ago I noticed another example of Andreessen’s productivity-boosting deployment of incomprehension. Andreessen, a libertarian billionaire, was talking about AI during a podcast conversation with his libertarian billionaire VC partner (Ben Horowitz) and libertarian billionaire White House AI “czar” David Sacks, when he alluded to a concern that some non-billionaires have about AI: It could wind up concentrating power in the hands of a small number of people and institutions, at the expense of the masses. Will we, asked Andreessen, indeed see “one or a small number of companies or for that matter governments or super AIs that kind of own and control everything”—and, in the extreme case “you have total state control”?

That question, he said, has pretty much been answered: We’re discovering that “AI is actually hyperdemocratizing.” After all, he continued, AI has “something like 600 million users today, rapidly on the way to a billion, rapidly on the way to five billion.” (He was presumably thinking of ChatGPT, which, according to OpenAI, has 650 million weekly users.)

I’m trying to imagine Marc Andreessen, a few decades ago, discussing Orwell’s 1984 in an undergraduate seminar. Before the professor has a chance to launch the conversation, young Andreessen blurts out: “I thought this novel was supposed to be about a country where the people are controlled by the government and don’t have any rights. But it turns out that everybody gets free access to a TV screen!”

I admit that’s not a precisely apt analogy. Large language models allow us to learn more, do more, create more than we can learn and do and create with a TV screen. This kind of value is what Andreessen has in mind when he calls AI “hyperdemocratizing.”

It’s fine for Andreessen to emphasize this value, and it’s fine that he calls it “empowering,” which it in some sense is. But to think that this qualifies as a serious rebuttal to concerns about concentrations of AI power is to miss the point.

Those of us who have those concerns understand that people find AI valuable (including, yes, in ways that are “empowering”). Indeed it’s because of this value—because AI can serve as creative tool, educator, counselor, whatever—that so many people will spend so much time with AIs and become so dependent on them. And that in turn will make it possible for these AIs to become instruments of mass influence—to shape our political views, our shopping habits, our allegiances, whatever. That’s what will give the people who control the AI so much potential power.

These people could, for example, exclude journals of certain ideologies from a large language model’s training data. In fact, for all we know they’ve done that—but since people like Andreessen have carried the day in Washington so far, we have no way of knowing; there’s no law that compels the big AI companies to tell us what data their AIs trained on.

Or the influence could just be a byproduct of an AI company’s business model. Large language models might steer us toward products and service