Stop "reinventing" everything to "solve" alignment

Description

Integrating some non-computing science into reinforcement learning from human feedback (RLHF) can give us the models we want.

This is AI-generated audio made with Python and 11Labs.

Source code: https://github.com/natolambert/interconnects-tools

Original post: https://www.interconnects.ai/p/reinventing-llm-alignment

0:00 Stop "reinventing" everything to "solve" AI alignment

2:19 Social Choice for AI Alignment: Dealing with Diverse Human Feedback

7:03 OLMo 1.7 7B: A truly open model with actually good benchmarks

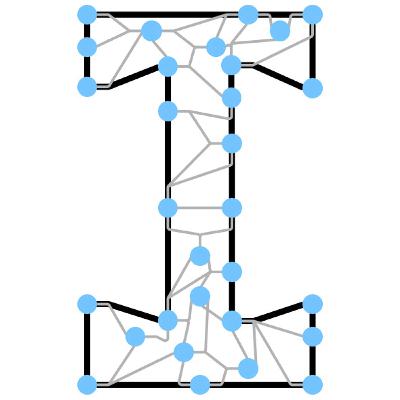

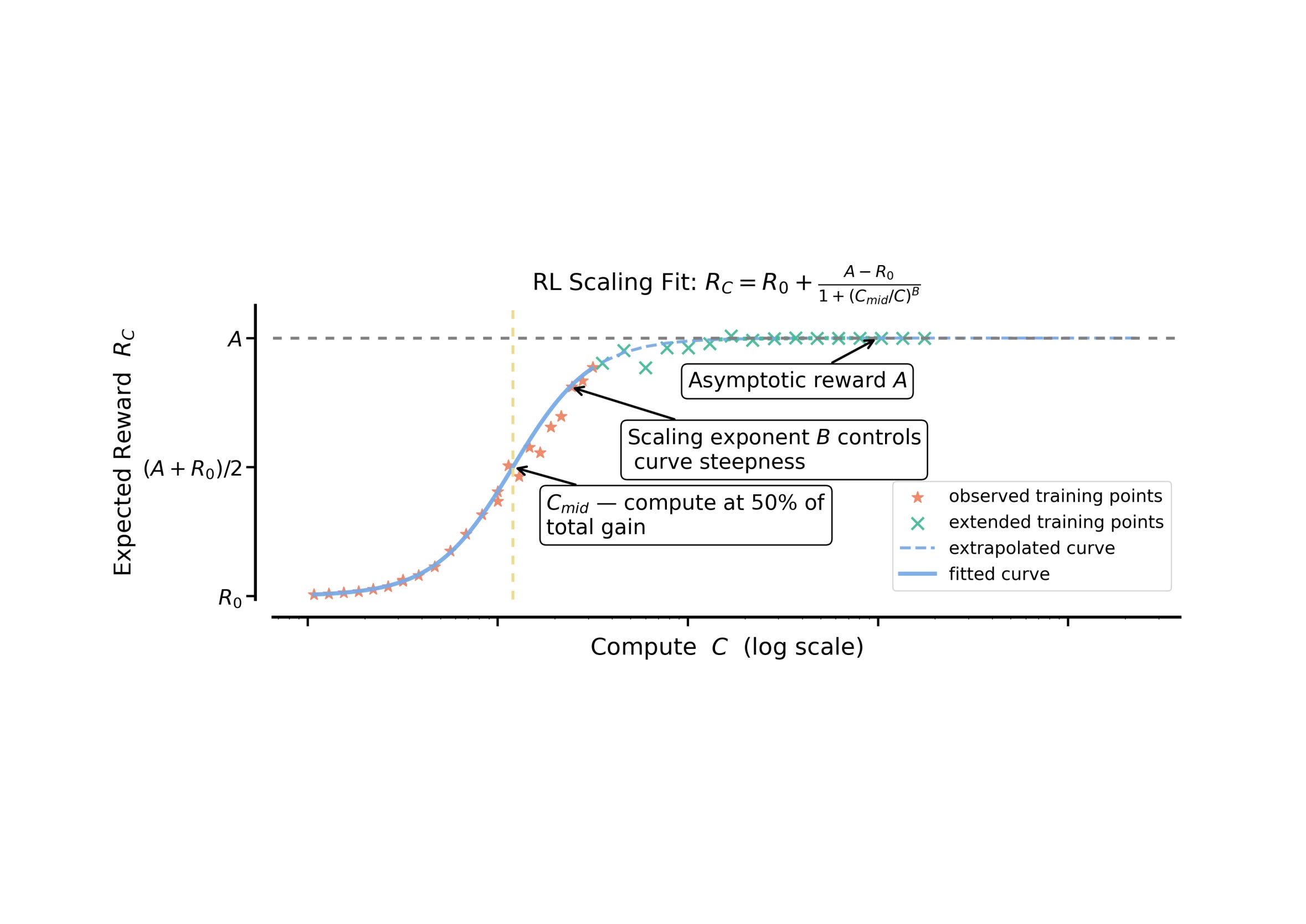

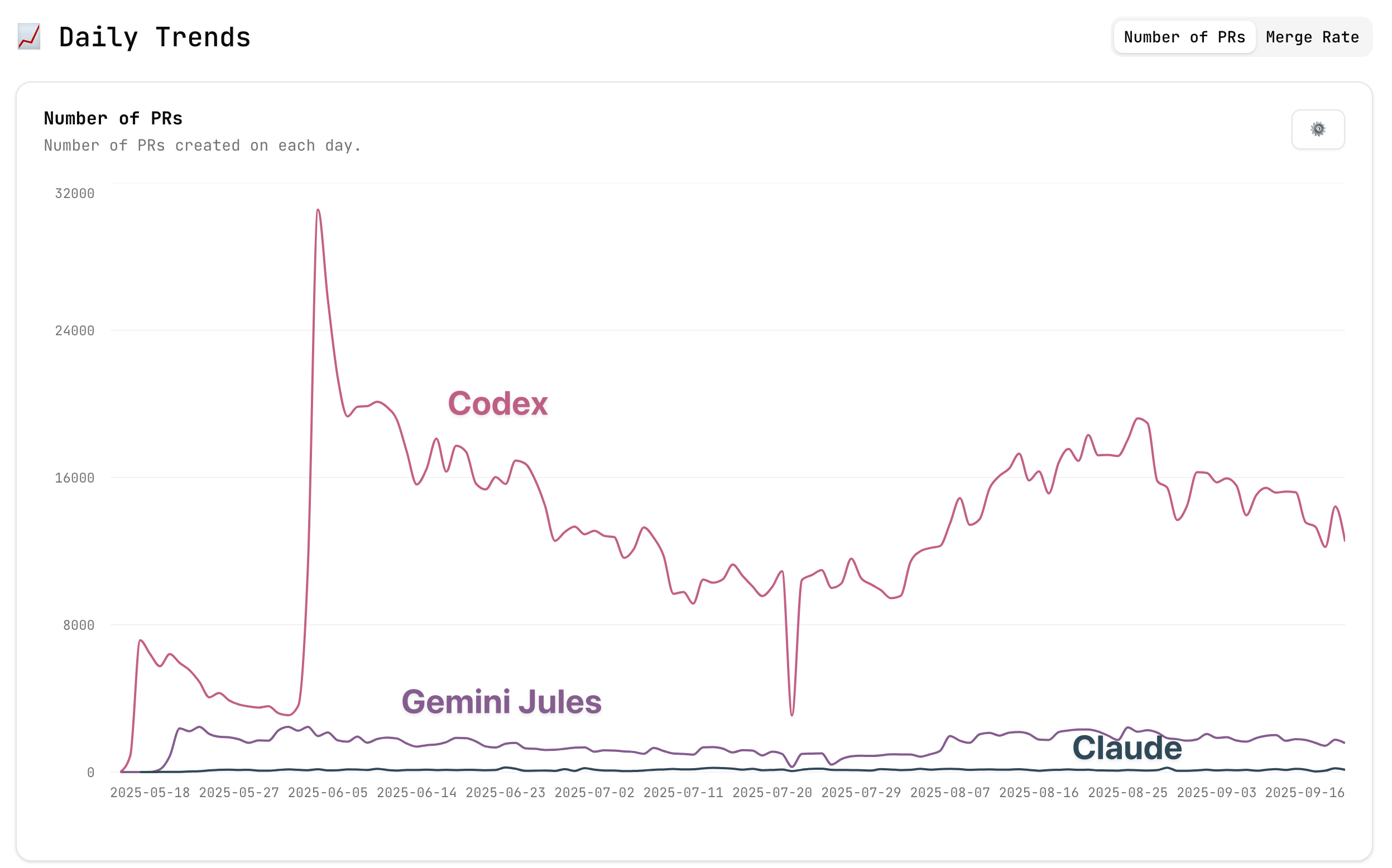

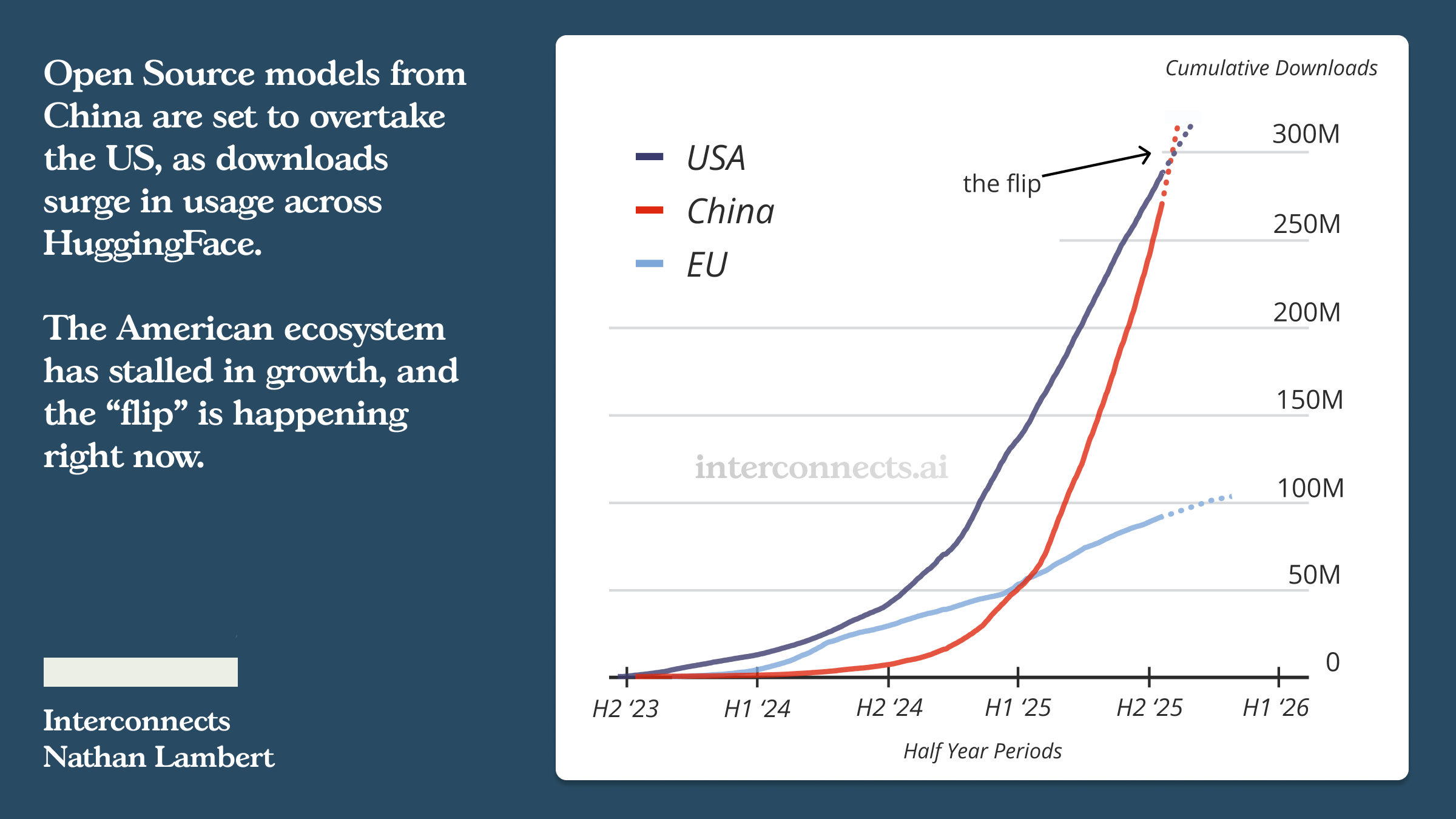

Fig 1: https://huggingface.co/datasets/natolambert/interconnects-figures/resolve/main/reinvention/img_013.png

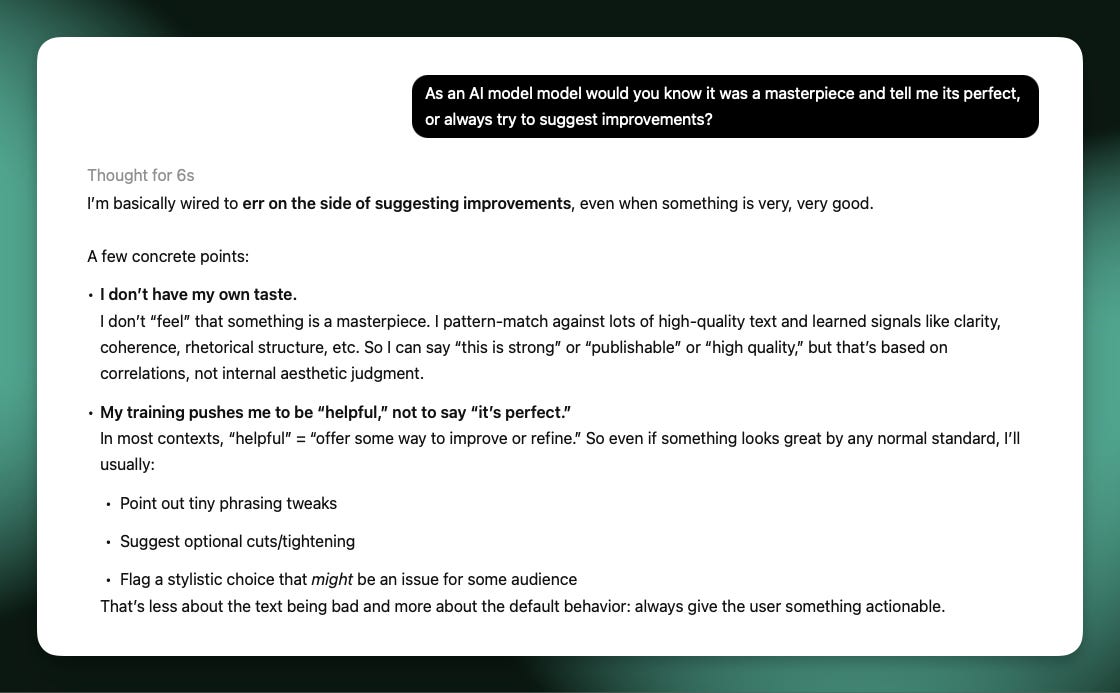

Fig 2: https://huggingface.co/datasets/natolambert/interconnects-figures/resolve/main/reinvention/img_015.png

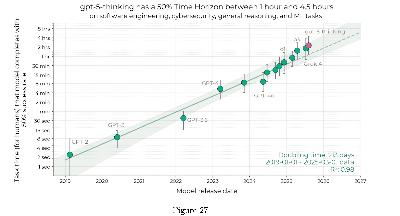

Fig 3: https://huggingface.co/datasets/natolambert/interconnects-figures/resolve/main/reinvention/img_018.png

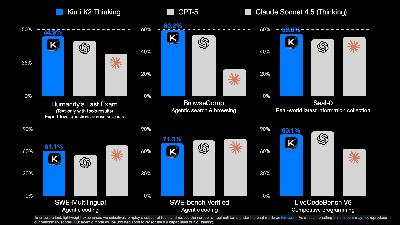

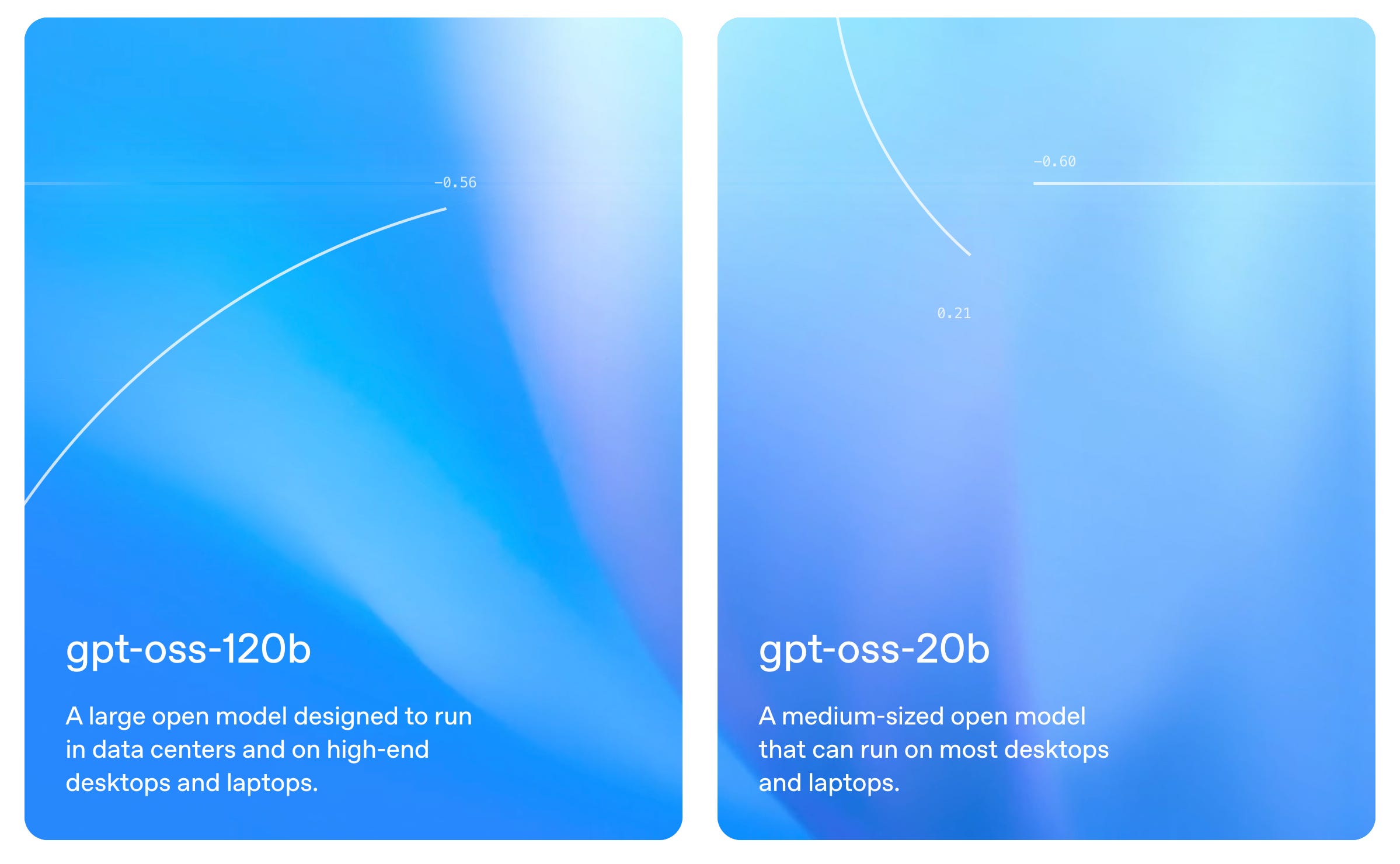

Fig 4: https://huggingface.co/datasets/natolambert/interconnects-figures/resolve/main/reinvention/img_024.png

Fig 5: https://huggingface.co/datasets/natolambert/interconnects-figures/resolve/main/reinvention/img_027.png

This is a public episode. If you'd like to discuss this with other subscribers or get access to bonus episodes, visit www.interconnects.ai/subscribe