The AI Corrigibility Debate: MIRI Researchers Max Harms vs. Jeremy Gillen

Description

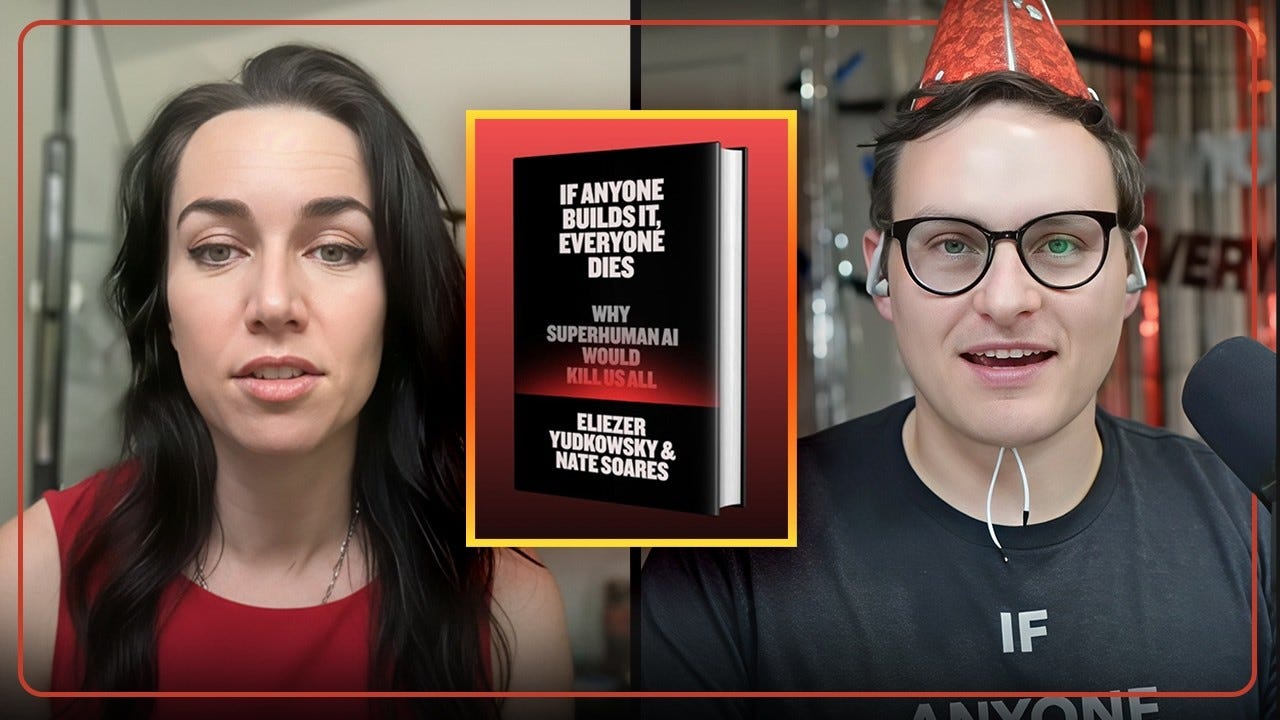

Max Harms and Jeremy Gillen are current and former MIRI researchers who both see superintelligent AI as an imminent extinction threat.

But they disagree on whether it’s worthwhile to aim for obedient, “corrigible” AI as a singular target for current alignment efforts.

Max thinks corrigibility is the most plausible path to building ASI without losing control and dying, while Jeremy is skeptical that this research path will yield better superintelligent AI behavior on a sufficiently early try.

By listening to this debate, you’ll find out if AI corrigibility is a relatively promising effort that might prevent imminent human extinction, or an over-optimistic pipe dream.

Timestamps

0:00 — Episode Preview

1:18 — Debate Kickoff

3:22 — What is Corrigibility?

9:57 — Why Corrigibility Matters

11:41 — What’s Your P(Doom)™

16:10 — Max’s Case for Corrigibility

19:28 — Jeremy’s Case Against Corrigibility

21:57 — Max’s Mainline AI Scenario

28:51 — 4 Strategies: Alignment, Control, Corrigibility, Don’t Build It

37:00 — Corrigibility vs HHH (“Helpful, Harmless, Honest”)

44:43 — Asimov’s 3 Laws of Robotics

52:05 — Is Corrigibility a Coherent Concept?

1:03:32 — Corrigibility vs Shutdown-ability

1:09:59 — CAST: Corrigibility as Singular Target, Near Misses, Iterations

1:20:18 — Debating if Max is Over-Optimistic

1:34:06 — Debating if Corrigibility is the Best Target

1:38:57 — Would Max Work for Anthropic?

1:41:26 — Max’s Modest Hopes

1:58:00 — Max’s New Book: Red Heart

2:16:08 — Outro

Show Notes

Max’s book Red Heart — https://www.amazon.com/Red-Heart-Max-Harms/dp/108822119X

Learn more about CAST: Corrigibility as Singular Target — https://www.lesswrong.com/s/KfCjeconYRdFbMxsy/p/NQK8KHSrZRF5erTba

Max’s Twitter — https://x.com/raelifin

Jeremy’s Twitter — https://x.com/jeremygillen1

---

Doom Debates’ mission is to raise mainstream awareness of imminent extinction from AGI and build the social infrastructure for high-quality debate.

Support the mission by subscribing to my Substack at DoomDebates.com and to youtube.com/@DoomDebates, or to really take things to the next level: Donate 🙏
