Zeno’s Paradox and the Problem of AI Tokenization

Description

This story was originally published on HackerNoon at: https://hackernoon.com/zenos-paradox-and-the-problem-of-ai-tokenization.

Token prediction forces LLMs to drift. This piece shows why, what Zeno can teach us about it, and how fidelity-based auditing could finally keep models grounded.

This story was written by @aborschel.

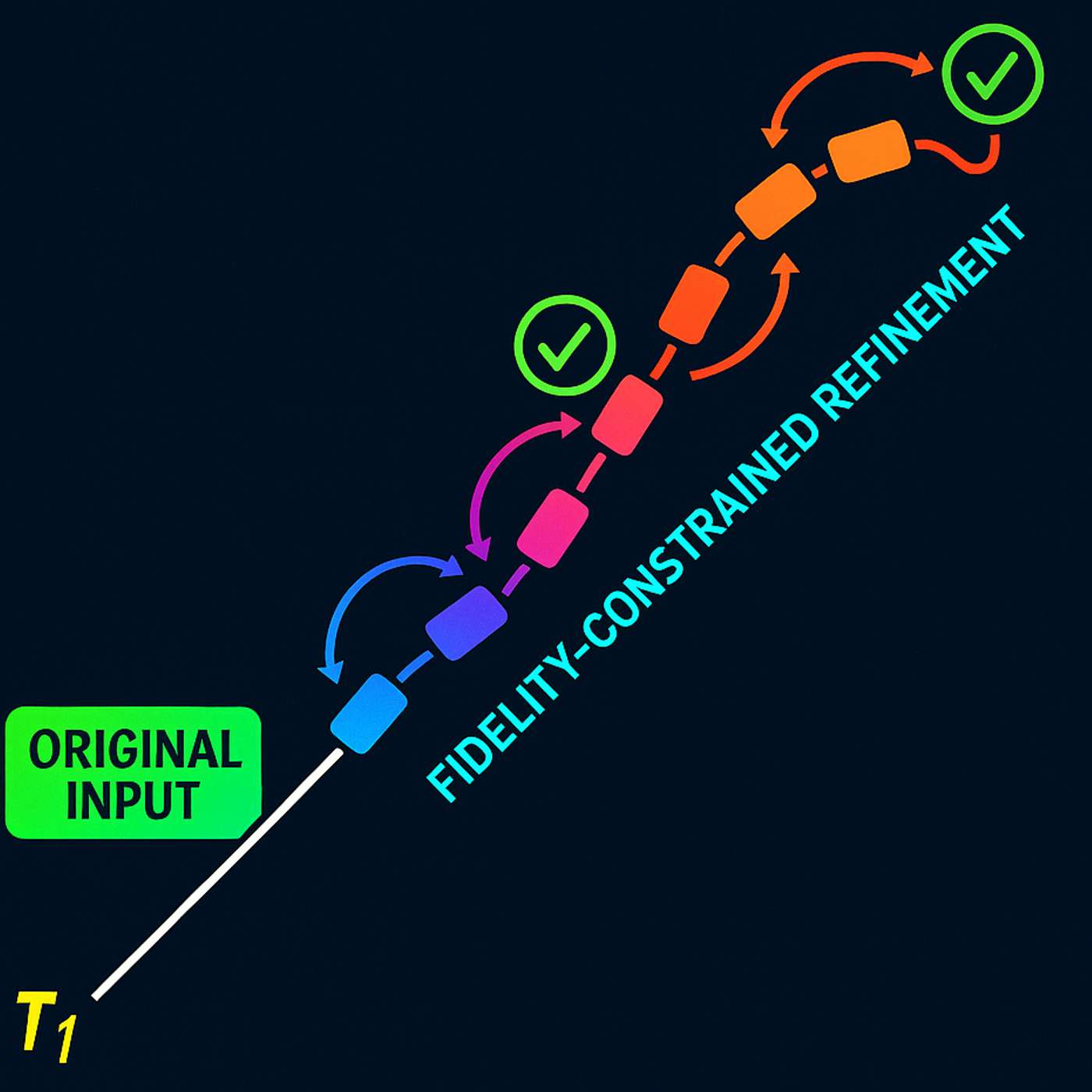

The Zeno Effect is a structural flaw baked into how autoregressive models predict tokens: one step at a time, each step conditioned only on the tokens that came before it. It looks like coherence, but it’s often just momentum without memory.
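To make the step-by-step mechanism concrete, here is a minimal sketch of greedy autoregressive decoding. It is an illustration under stated assumptions, not the author's code: the `BIGRAM` table and the functions `toy_next_token_probs`, `greedy_decode`, and `chance_of_no_slip` are hypothetical. A real LLM conditions on its whole context window; this toy model deliberately looks only at the last token, the extreme case of conditioning on the immediate past. The second function shows how per-step errors compound, assuming (as a simplification) that every step independently stays on track with the same probability.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical toy "model": maps the previous token to a distribution over next tokens.
BIGRAM: Dict[str, Dict[str, float]] = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "tortoise": 0.4},
    "a": {"cat": 1.0},
    "cat": {"sat": 0.7, "ran": 0.3},
    "tortoise": {"sat": 0.5, "ran": 0.5},
    "sat": {"down": 0.8, "</s>": 0.2},
    "ran": {"away": 0.9, "</s>": 0.1},
    "down": {"</s>": 1.0},
    "away": {"</s>": 1.0},
}


def toy_next_token_probs(context: List[str]) -> Dict[str, float]:
    # The decoder hands over the full prefix, but this toy model only reads the last token.
    return BIGRAM.get(context[-1], {"</s>": 1.0})


def greedy_decode(
    next_probs: Callable[[List[str]], Dict[str, float]], max_steps: int = 10
) -> Tuple[List[str], float]:
    """Emit one token per step, each chosen only from what was generated so far."""
    context = ["<s>"]
    joint_prob = 1.0
    for _ in range(max_steps):
        probs = next_probs(context)
        token = max(probs, key=probs.get)  # commit to the single most likely token
        joint_prob *= probs[token]  # the sequence probability is a product of step probabilities
        context.append(token)
        if token == "</s>":
            break
    return context, joint_prob


def chance_of_no_slip(p_step: float, n_steps: int) -> float:
    # Assumes each step independently stays "on track" with probability p_step.
    return p_step ** n_steps


if __name__ == "__main__":
    tokens, prob = greedy_decode(toy_next_token_probs)
    print(" ".join(tokens), f"(joint probability {prob:.4f})")
    for n in (10, 100, 1000):
        print(n, "steps:", chance_of_no_slip(0.99, n))
```

Even with a 99% chance of staying on track at every step, the probability that a long sequence never slips shrinks geometrically with its length, and nothing in the loop ever looks back at the original intent to correct course.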