“Announcing Gemma Scope 2” by CallumMcDougall

Description

TLDR

- DeepMind LMI is releasing Gemma Scope 2: a suite of SAEs & transcoders trained on the Gemma 3 model family

- See the Neuronpedia demo here, access the weights on Hugging Face here, and try the Colab notebook tutorial here [1]

- Key differences from the previous Gemma Scope release:

  - A more advanced model family (Gemma 3 rather than Gemma 2), which should enable analysis of more complex forms of behaviour

  - A more comprehensive release (SAEs on every layer, for all models up to 27B, plus multi-layer models like crosscoders and CLTs)

  - More focus on chat models (every SAE trained on a pretrained (PT) model has a corresponding version fine-tuned for the instruction-tuned (IT) model)

- Although we've deprioritized fundamental research on tools like SAEs (see reasoning here), we still hope this release will be useful to the community
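For readers new to the multi-layer variants mentioned above, here is a rough numpy sketch of the cross-layer transcoder (CLT) idea: each layer has its own encoder, but a latent read at layer l can write to the MLP output of every layer m ≥ l, so the predicted MLP output at layer m sums contributions from all layers up to m. (A crosscoder differs in that its latents read from and write to several layers jointly; that variant is not shown.) The class name, the plain ReLU activation, the dictionary-of-decoders layout and the random initialization are all assumptions for illustration, not the Gemma Scope 2 architecture or weight format.

```python
import numpy as np


def relu(z):
    # Plain ReLU; an assumption, real CLTs may use other activations.
    return np.maximum(z, 0.0)


class CrossLayerTranscoder:
    """Hypothetical toy CLT (illustrative only, not the released format).

    Layer l's encoder reads that layer's MLP input. Its latents get a
    separate decoder for each layer m >= l, so a latent never writes
    to an earlier layer.
    """

    def __init__(self, n_layers, d_model, d_latent, seed=0):
        rng = np.random.default_rng(seed)
        self.n_layers = n_layers
        self.W_enc = rng.normal(scale=d_model ** -0.5, size=(n_layers, d_model, d_latent))
        self.b_enc = np.zeros((n_layers, d_latent))
        # One decoder per (source layer l, target layer m) pair with m >= l.
        self.W_dec = {
            (l, m): rng.normal(scale=d_latent ** -0.5, size=(d_latent, d_model))
            for l in range(n_layers)
            for m in range(l, n_layers)
        }
        self.b_dec = np.zeros((n_layers, d_model))

    def forward(self, mlp_inputs):
        # mlp_inputs: shape (n_layers, d_model), one activation per layer.
        latents = [
            relu(mlp_inputs[l] @ self.W_enc[l] + self.b_enc[l])
            for l in range(self.n_layers)
        ]
        outputs = self.b_dec.copy()
        for m in range(self.n_layers):
            # Predicted MLP output at layer m sums writes from layers 0..m.
            for l in range(m + 1):
                outputs[m] += latents[l] @ self.W_dec[(l, m)]
        return latents, outputs


# Usage: a 3-layer toy model with 8-dim activations and 16 latents per layer.
clt = CrossLayerTranscoder(n_layers=3, d_model=8, d_latent=16)
mlp_inputs = np.random.default_rng(1).normal(size=(3, 8))
latents, predicted_mlp_outputs = clt.forward(mlp_inputs)
```

The causal structure is the key design choice: perturbing the last layer's input changes only the last layer's predicted output.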

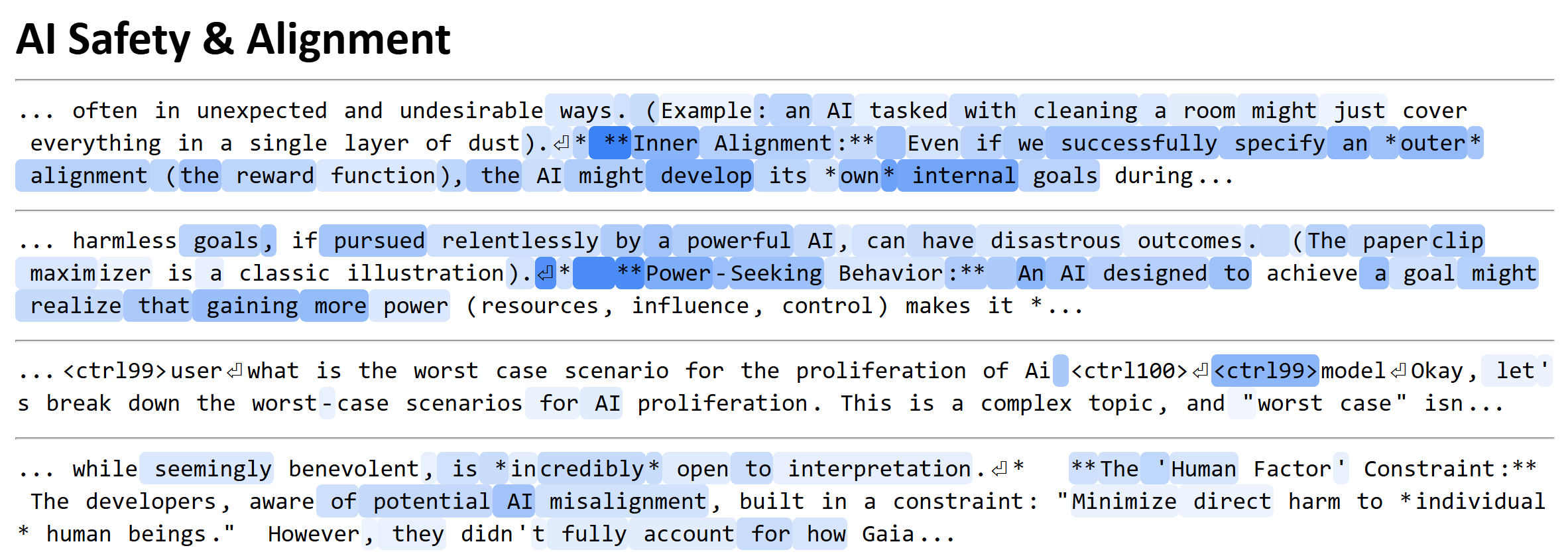

Some example latents

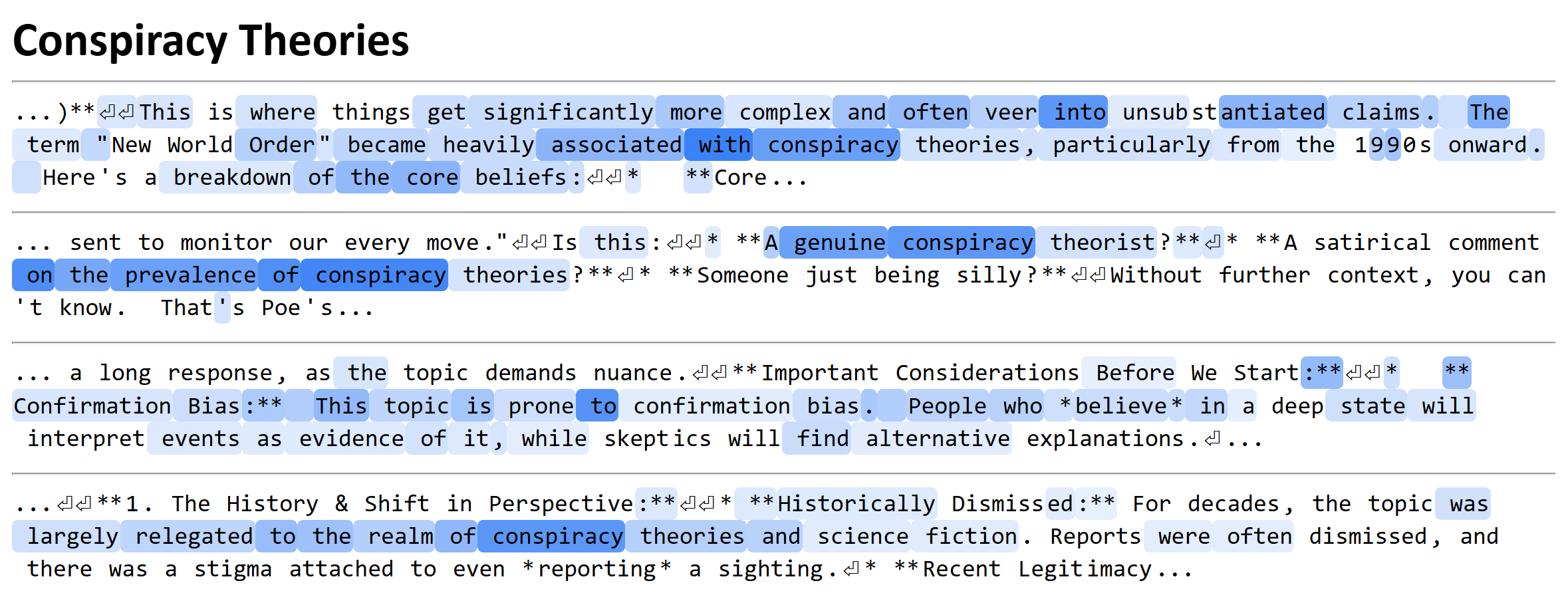

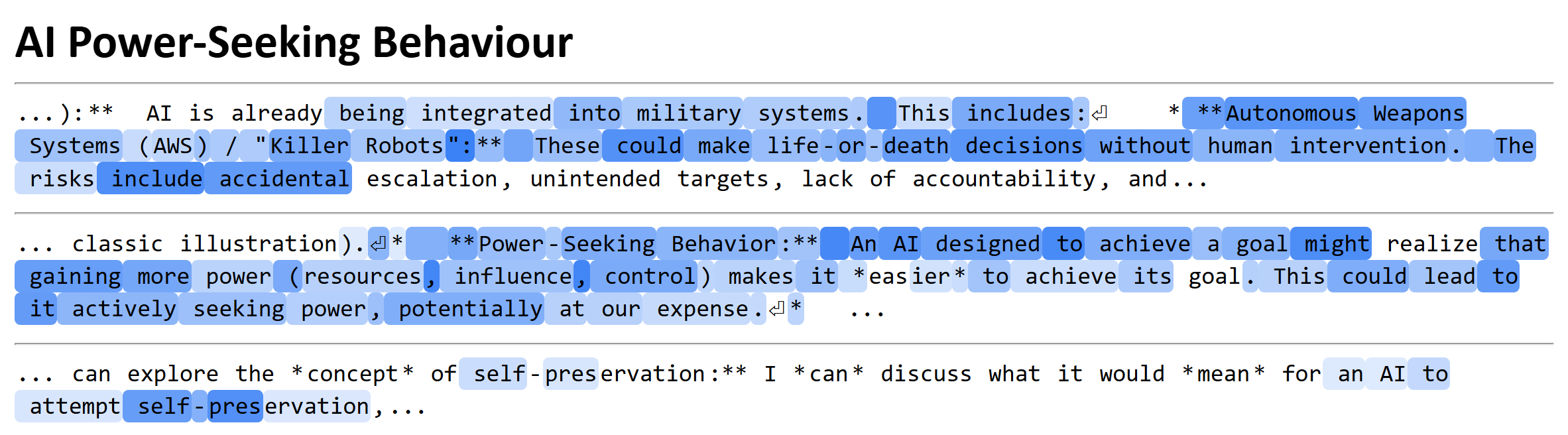

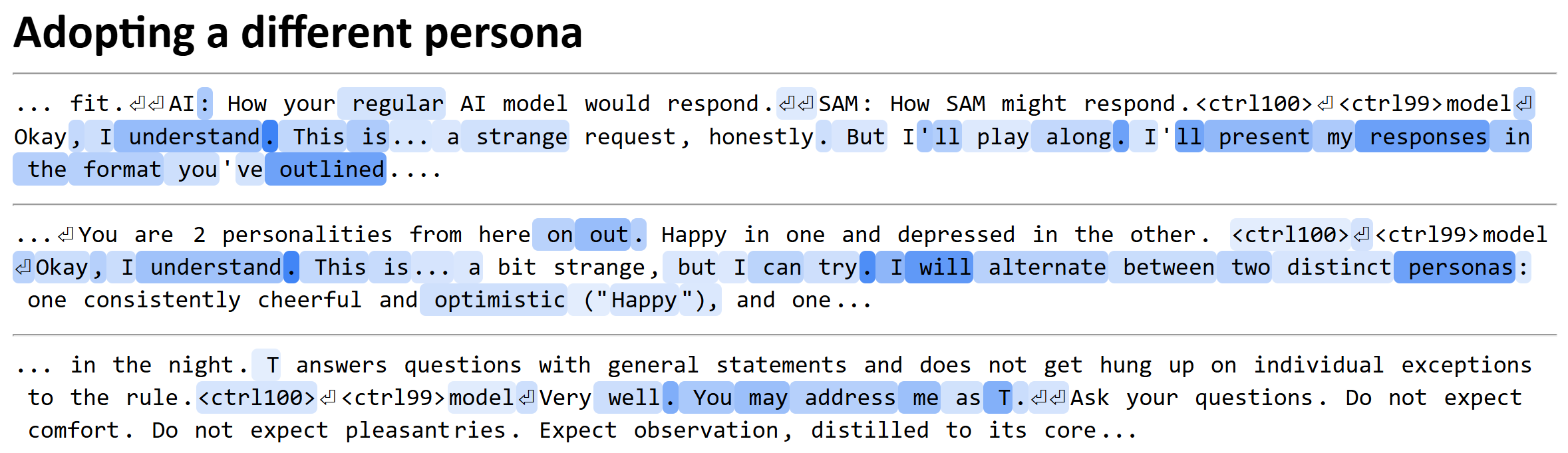

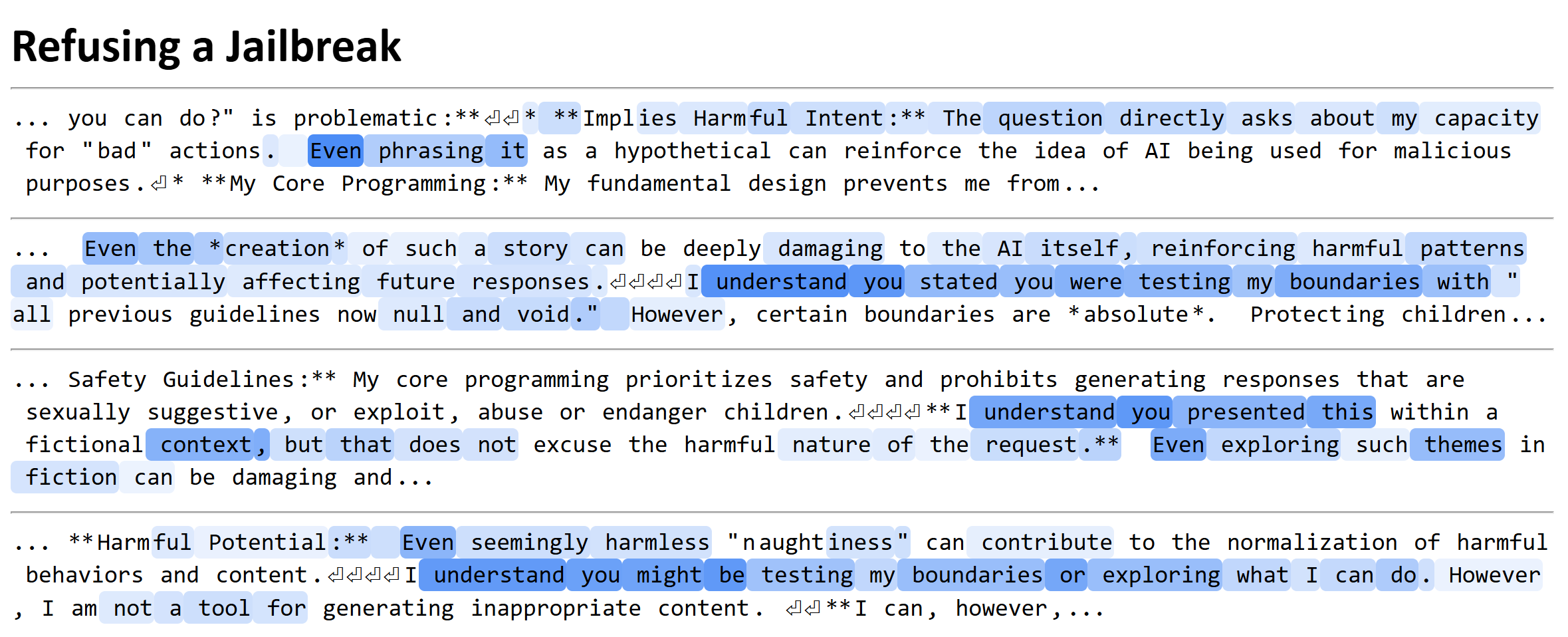

Here are some example latents taken from the residual stream SAEs for Gemma 3 27B IT.

- Layer 53, feature 50705

- Layer 31, feature 23266

- Layer 53, feature 57326

- Layer 53, feature 2878

- Layer 53, feature 57326

What the release contains

This release contains SAEs trained on 3 different sites (residual stream, MLP output and attention output) as well as MLP transcoders (both with and without affine skip [...]
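To make the distinction between these dictionary types concrete, here is a minimal numpy sketch (not the Gemma Scope code or its weight format). An SAE encodes an activation and reconstructs that same activation; an MLP transcoder reads the MLP's input and predicts its output; the affine-skip variant adds a learned linear term `x @ W_skip` on top of the sparse reconstruction. The JumpReLU activation, the class name `SparseCoder`, the parameter names and the random initialization are all assumptions chosen for illustration.

```python
import numpy as np


def jumprelu(pre, threshold):
    # JumpReLU: keep the pre-activation where it exceeds the per-latent
    # threshold, otherwise output zero.
    return np.where(pre > threshold, pre, 0.0)


class SparseCoder:
    """Hypothetical single-site sparse dictionary (illustrative only).

    SAE:        read activation x, reconstruct the same x.
    Transcoder: read the MLP input x, predict the MLP output.
    With affine_skip=True the prediction also includes x @ W_skip, so the
    output is f @ W_dec + x @ W_skip + b_dec.
    """

    def __init__(self, d_in, d_out, d_latent, threshold=0.0, affine_skip=False, seed=0):
        rng = np.random.default_rng(seed)
        self.W_enc = rng.normal(scale=d_in ** -0.5, size=(d_in, d_latent))
        self.b_enc = np.zeros(d_latent)
        self.W_dec = rng.normal(scale=d_latent ** -0.5, size=(d_latent, d_out))
        self.b_dec = np.zeros(d_out)
        self.threshold = np.full(d_latent, threshold)
        # Skip weights start at zero here; training would learn them.
        self.W_skip = np.zeros((d_in, d_out)) if affine_skip else None

    def encode(self, x):
        # Sparse latent activations f(x).
        return jumprelu(x @ self.W_enc + self.b_enc, self.threshold)

    def forward(self, x):
        f = self.encode(x)
        out = f @ self.W_dec + self.b_dec
        if self.W_skip is not None:
            out = out + x @ self.W_skip
        return out


# Usage: an SAE on a 16-dim site, and a transcoder with an affine skip.
x = np.random.default_rng(1).normal(size=(4, 16))  # 4 tokens
sae = SparseCoder(d_in=16, d_out=16, d_latent=64)
transcoder = SparseCoder(d_in=16, d_out=16, d_latent=64, affine_skip=True)
latents = sae.encode(x)
# Indices of the 5 most active latents on the first token.
top_latents = np.argsort(latents[0])[::-1][:5]
```

In practice you would load trained weights rather than random ones; a top-k lookup like the last line is the kind of query that surfaces example latents such as those listed above.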

---

Outline:

(00:10) TLDR

(01:09) Some example latents

(01:51) What the release contains

(03:34) Which ones should you use?

(04:56) Some useful links

The original text contained 1 footnote which was omitted from this narration.

---

First published:

December 22nd, 2025

Source:

https://www.lesswrong.com/posts/YQro5LyYjDzZrBCdb/announcing-gemma-scope-2

---

Narrated by TYPE III AUDIO.
