Tech Stories Tech Brief By HackerNoon

406 Episodes

This story was originally published on HackerNoon at: https://hackernoon.com/how-to-build-real-world-drone-avatars-with-webrtc-and-python.

A technical guide to building drone avatars—covering telemetry, low-latency streaming, and UTM systems for true virtual mobility.

Check more stories related to tech-stories at: https://hackernoon.com/c/tech-stories.

You can also check exclusive content about #webrtc-streaming, #drone-avatars, #edge-computing, #webrtc, #telepresence-technology, #virtual-mobility, #mavlink-protocol, #utm-uas-traffic-management, and more.

This story was written by: @dippusingh. Learn more about this writer by checking @dippusingh's about page, and for more stories, please visit hackernoon.com.

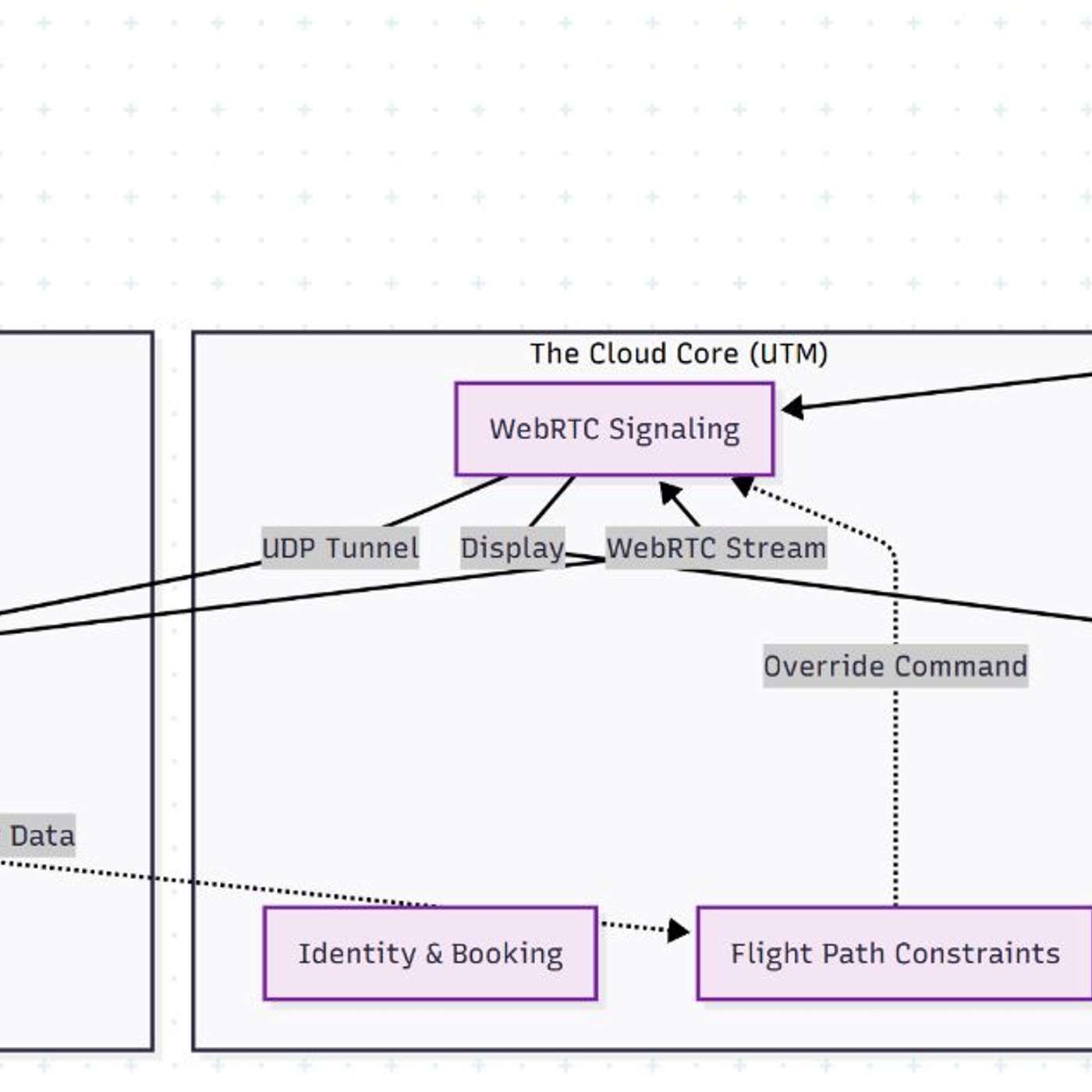

Drone avatars are the next evolution of telework. A drone avatar system requires three distinct layers: the Edge (the drone), the Pipe (the network), and the Core (UAS Traffic Management, or UTM).
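
The Edge layer has to report telemetry upstream, and MAVLink (one of this episode's tags) is the usual protocol for that. As a minimal sketch, assuming MAVLink v1 framing and the common-dialect HEARTBEAT message, the code below packs and validates a heartbeat frame by hand to show what actually crosses the Pipe. The helper names are hypothetical; production code would normally rely on a library such as pymavlink rather than hand-rolled framing.

```python
import struct

STX_V1 = 0xFE              # MAVLink v1 start-of-frame marker
MSG_ID_HEARTBEAT = 0       # HEARTBEAT message id in the common dialect
CRC_EXTRA_HEARTBEAT = 50   # per-message CRC seed for HEARTBEAT
PAYLOAD_FMT = "<IBBBBB"    # custom_mode (uint32) first: wire order sorts fields by size


def x25_crc(data: bytes, crc: int = 0xFFFF) -> int:
    """CRC-16/MCRF4XX ("X.25") checksum as used by MAVLink."""
    for byte in data:
        tmp = byte ^ (crc & 0xFF)
        tmp = (tmp ^ (tmp << 4)) & 0xFF
        crc = ((crc >> 8) ^ (tmp << 8) ^ (tmp << 3) ^ (tmp >> 4)) & 0xFFFF
    return crc


def encode_heartbeat(seq, sysid, compid, custom_mode, mav_type,
                     autopilot, base_mode, system_status, mavlink_version=3):
    """Pack a MAVLink v1 HEARTBEAT frame (hypothetical helper)."""
    payload = struct.pack(PAYLOAD_FMT, custom_mode, mav_type, autopilot,
                          base_mode, system_status, mavlink_version)
    header = struct.pack("<BBBBB", len(payload), seq, sysid, compid, MSG_ID_HEARTBEAT)
    # The checksum covers everything after the STX byte, plus the CRC_EXTRA seed.
    crc = x25_crc(header + payload + bytes([CRC_EXTRA_HEARTBEAT]))
    return bytes([STX_V1]) + header + payload + struct.pack("<H", crc)


def decode_heartbeat(frame: bytes) -> dict:
    """Validate and unpack a MAVLink v1 HEARTBEAT frame (hypothetical helper)."""
    if len(frame) < 8 or frame[0] != STX_V1:
        raise ValueError("not a MAVLink v1 frame")
    length, seq, sysid, compid, msgid = struct.unpack_from("<BBBBB", frame, 1)
    if (msgid != MSG_ID_HEARTBEAT or length != struct.calcsize(PAYLOAD_FMT)
            or len(frame) != 6 + length + 2):
        raise ValueError("not a complete HEARTBEAT frame")
    (crc,) = struct.unpack_from("<H", frame, 6 + length)
    if x25_crc(frame[1:6 + length] + bytes([CRC_EXTRA_HEARTBEAT])) != crc:
        raise ValueError("checksum mismatch")
    fields = struct.unpack_from(PAYLOAD_FMT, frame, 6)
    names = ("custom_mode", "mav_type", "autopilot", "base_mode",
             "system_status", "mavlink_version")
    return {"seq": seq, "sysid": sysid, "compid": compid, **dict(zip(names, fields))}


# Example: a quadrotor (MAV_TYPE_QUADROTOR = 2) running PX4 (MAV_AUTOPILOT_PX4 = 12).
frame = encode_heartbeat(seq=7, sysid=1, compid=1, custom_mode=0, mav_type=2,
                         autopilot=12, base_mode=0, system_status=4)
print(decode_heartbeat(frame))
```

On a real link the same bytes would arrive over UDP or a serial line; the CRC_EXTRA seed is what lets a receiver reject frames whose message definition does not match its own.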

This story was originally published on HackerNoon at: https://hackernoon.com/smardex-becomes-everything-the-single-contract-protocol-that-could-reshape-defi-architecture.

SMARDEX evolves into Everything Protocol, merging DEX, lending, and perps trading in one contract. February 2026 launch targets DeFi fragmentation.

You can also check exclusive content about #smardex, #blockchain, #web3, #cryptocurrency, #dex, #smardex-news, #good-company, #defi, and more.

This story was written by: @ishanpandey.

This story was originally published on HackerNoon at: https://hackernoon.com/3-ways-smart-leaders-can-adopt-ai-without-sacrificing-trust.

3 smarter automation paths for leaders. Balance AI efficiency with human oversight to protect trust, reduce risk, and scale responsibly without brittle shortcuts.

You can also check exclusive content about #ai-strategy, #digital-transformation, #ai-leadership, #enterprise-ai-adoption, #human-in-the-loop, #ai-oversight-models, #human-ai-collaboration, #ai-decision-making, and more.

This story was written by: @hacker68060072.

Nick Talwar is a CTO, a Microsoft alumnus, and a hands-on AI engineer. He shares insights on how leaders can use AI to drive bottom-line impact.

This story was originally published on HackerNoon at: https://hackernoon.com/why-autonomous-trading-agents-on-privex-could-replace-human-crypto-traders.

PriveX launches AI Agents Arena on COTI network, enabling autonomous trading systems with 0.0001% fees and encrypted execution capabilities.

You can also check exclusive content about #privex, #privex-news, #blockchain, #web3, #cryptocurrency, #ai, #defi, #coti, and more.

This story was written by: @ishanpandey.

PriveX has launched the AI Agents Arena, a platform where users can create autonomous trading systems that operate continuously on perpetual markets. Built exclusively on the COTI network during beta, these agents analyze market data, execute trades based on predefined logic, and operate with 0.0001% trading fees. The platform requires no coding experience and allows deployment with a minimum of 20 USDC. Future developments include encrypted execution through COTI's garbled-circuit infrastructure and expansion to social and hybrid agent capabilities.

This story was originally published on HackerNoon at: https://hackernoon.com/ux-research-for-agile-ai-product-development-of-intelligent-collaboration-software-platforms.

Teams are adopting AI collaboration tools fast—but few measure how they affect trust, decision quality, and teamwork. A UX framework explains why.

You can also check exclusive content about #enterprise-ai-adoption, #ux-research, #human-ai-collaboration, #product-development, #ai-collaboration-tools, #ai-in-agile-development, #agile-software-teams, #ai-adoption-analysis, and more.

This story was written by: @priuxr.

UX researchers need new product frameworks when AI enters collaboration tools. I've developed a five-dimension approach that captures what velocity metrics miss: cognitive load, trust calibration, collaborative sensemaking, knowledge distribution, and user outcome alignment. After studying teams using AI copilots across three sprint cycles, the pattern is clear: the best configurations automate documentation while protecting human sensemaking during live collaboration.

This story was originally published on HackerNoon at: https://hackernoon.com/automating-content-tagging-in-laravel-using-openai-embeddings-and-cron-jobs.

Learn how to build an AI-powered auto-tagging system in Laravel using OpenAI embeddings, queues, and vector similarity matching.

You can also check exclusive content about #content-classification-ai, #laravel-ai, #openai-embeddings, #laravel-auto-tagging, #laravel-jobs-and-queues, #vector-embeddings, #phpml, #cms-automation, and more.

This story was written by: @phpcmsframework.

AI embeddings can automatically determine the topic of a blog post and assign the appropriate tags without the need for human intervention. This guide demonstrates how to create a complete Laravel AI auto-tagging system.
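
The matching step at the heart of such a system can be sketched in a few lines. This version is in Python for brevity rather than the article's PHP/Laravel, and it assumes the post and tag embeddings have already been fetched (for example from OpenAI's embeddings endpoint) and stored as float lists. The function names, the 0.75 threshold and the top-3 cutoff are illustrative assumptions, not values from the article.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def suggest_tags(post_vec: list[float], tag_vecs: dict[str, list[float]],
                 threshold: float = 0.75, top_k: int = 3) -> list[str]:
    """Return up to top_k tags whose embeddings are closest to the post's."""
    scored = [(tag, cosine_similarity(post_vec, vec)) for tag, vec in tag_vecs.items()]
    kept = [(tag, score) for tag, score in scored if score >= threshold]
    kept.sort(key=lambda item: item[1], reverse=True)  # most similar first
    return [tag for tag, _ in kept[:top_k]]


# Toy 3-dimensional vectors; real embeddings have hundreds or thousands of dimensions.
tag_embeddings = {"laravel": [1.0, 0.0, 0.0], "ai": [0.0, 1.0, 0.0], "devops": [0.0, 0.0, 1.0]}
post_embedding = [0.9, 0.8, 0.05]
print(suggest_tags(post_embedding, tag_embeddings, threshold=0.5))  # → ['laravel', 'ai']
```

In a Laravel setup the same comparison would typically run inside a queued job, so that new posts get tagged in the background rather than during the request.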

This story was originally published on HackerNoon at: https://hackernoon.com/cryptocom-targets-trillion-dollar-prediction-market-opportunity-with-regulatory-first-approach.

Travis McGhee, Global Head of Predictions at Crypto.com, discusses how the 150M+ user platform is building regulated prediction market infrastructure.

You can also check exclusive content about #crypto.com, #crypto.com-news, #blockchain, #web3, #defi, #good-company, #predictions-markets, #technology, and more.

This story was written by: @ishanpandey.

Travis McGhee, Global Head of Predictions at Crypto.com, says the company is positioning prediction markets as a tool for democratized information aggregation and is committed to responsible innovation and regulation.

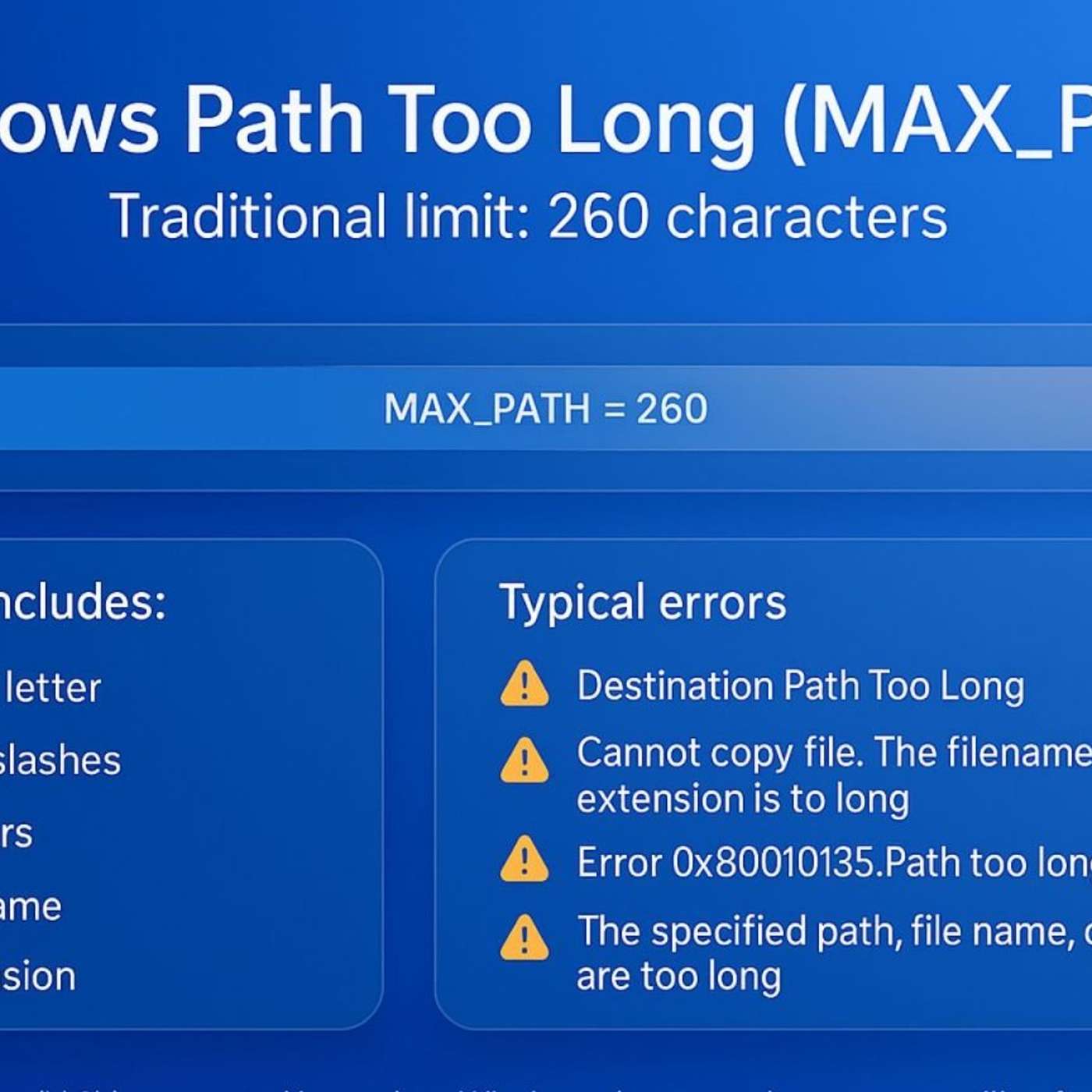

This story was originally published on HackerNoon at: https://hackernoon.com/how-to-enable-long-paths-on-windows-11-and-fix-error-0x80010135.

Enable long file paths in Windows 11 and fix error 0x80010135 with simple steps using Settings or Registry Editor.

You can also check exclusive content about #windows-11-troubleshooting, #enable-long-paths-windows-11, #fix-error-0x80010135, #windows-11-long-paths, #windows-path-length-limit, #windows-registry-editor, #max_path-windows-fix, #windows-long-path-error, and more.

This story was written by: @vigneshwaran.

Windows 11 can bypass the legacy 260-character file path limit by enabling Long Paths through either the Settings menu or the Registry Editor. This guide walks through both methods, explains common errors like 0x80010135, and covers how to troubleshoot apps or cloud services that still enforce old path restrictions.
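
The Registry Editor method comes down to a single DWORD, LongPathsEnabled, under HKLM\SYSTEM\CurrentControlSet\Control\FileSystem, where 1 means enabled. A minimal Python sketch (the helper names are hypothetical) that reads that value and checks whether a given path would trip the legacy limit:

```python
import sys
from typing import Optional

MAX_PATH = 260  # legacy Win32 limit, counting the terminating NUL character


def exceeds_max_path(path: str) -> bool:
    """Return True if a non-long-path-aware Win32 API would reject this path."""
    # MAX_PATH includes the trailing NUL, so only 259 visible characters fit.
    return len(path) >= MAX_PATH


def long_paths_enabled() -> Optional[bool]:
    """Read the LongPathsEnabled registry value; return None on non-Windows systems."""
    if sys.platform != "win32":
        return None
    import winreg  # Windows-only standard-library module

    key_path = r"SYSTEM\CurrentControlSet\Control\FileSystem"
    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, key_path) as key:
            value, _value_type = winreg.QueryValueEx(key, "LongPathsEnabled")
    except FileNotFoundError:
        return False  # value absent: the legacy limit applies
    return value == 1
```

Even with the setting on, an application must also declare itself long-path aware (the longPathAware element in its manifest), which is one reason some apps still enforce the old limit.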

This story was originally published on HackerNoon at: https://hackernoon.com/reexamining-canonical-isomorphisms-in-modern-algebraic-geometry.

A critical look at how mathematicians use the word “canonical,” revealing how informal shortcuts obscure the real constructions behind key theorems.

You can also check exclusive content about #mathematical-logic, #grothendieck, #formalising-mathematics, #interactive-theorem-provers, #homotopy-type-theory, #set-theory-foundations, #associativity-in-mathematics, #canonical-isomorphism, and more.

This story was written by: @mediabias.

The article examines how mathematicians casually label maps as “canonical,” why this obscures the constructive content of theorems like the first isomorphism theorem, and how formalizing algebraic geometry forces greater precision about what canonical truly means.
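
The first isomorphism theorem shows what sits behind the word. For a group homomorphism φ: G → H, the "canonical" isomorphism is a specific construction, and checking that it is well defined is exactly where the kernel is used. This is the standard textbook form, offered as a sketch rather than the article's own notation:

```latex
\[
\bar{\varphi} : G/\ker\varphi \longrightarrow \operatorname{im}\varphi,
\qquad
\bar{\varphi}(g\ker\varphi) = \varphi(g),
\]
\[
g\ker\varphi = g'\ker\varphi
\iff g^{-1}g' \in \ker\varphi
\implies \varphi(g') = \varphi(g)\,\varphi(g^{-1}g') = \varphi(g).
\]
```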

This story was originally published on HackerNoon at: https://hackernoon.com/the-future-of-ai-infrastructure-consolidation-for-giants-vertical-solutions-for-startups.

Neo's John Wang discusses decentralized AI infrastructure, the SpoonOS platform, and the $100K challenge targeting centralized AI limitations. Learn about block

You can also check exclusive content about #spoonos, #neo, #blockchain, #ai, #good-company, #web3, #defi, #cryptocurrency, and more.

This story was written by: @ishanpandey.

John Wang is the Head of Neo Ecosystem Growth and Managing Director of Neo Ecofund. His latest focus on SpoonOS represents a bold bet on democratizing AI infrastructure. SpoonOS recently launched the $100K challenge focused on problems that centralized AI cannot fix.

This story was originally published on HackerNoon at: https://hackernoon.com/btcc-exchange-brings-400-perpetual-futures-to-tradingview-what-traders-need-to-know.

BTCC integrates 400+ futures pairs with TradingView's 100M-user platform, enabling direct chart-to-trade execution for crypto traders.

You can also check exclusive content about #btcc, #btcc-news, #blockchain, #cryptocurrency, #web3, #defi, #good-company, #tradingview, and more.

This story was written by: @ishanpandey.

This story was originally published on HackerNoon at: https://hackernoon.com/10-biggest-sports-companies-in-the-usa-based-on-revenue.

A data-driven look at the ten highest-earning sports companies in the U.S., from Nike and Fanatics to the NFL, BetMGM, ESPN, and TKO Group.

You can also check exclusive content about #sports-tech, #sports-analytics, #us-sports-companies, #sports-industry-revenue, #nike-revenue-2025, #us-sports-market-analysis, #sports-revenue-growth-drivers, #good-company, and more.

This story was written by: @isnation.

The U.S. sports market is powered by billion-dollar companies spanning apparel, betting, media, and tech. Nike leads the field, while Fanatics, the NFL, NBA, BetMGM, Garmin, TKO Group, and ESPN drive growth through merchandise, streaming, sponsorships, and digital fan engagement. Emerging trends include AI-driven sports tech, athlete

This story was originally published on HackerNoon at: https://hackernoon.com/9-mobile-apps-for-athlete-mental-and-physical-fitness.

Explore the best apps for athletes to strengthen mental fitness, track workouts, recover faster, and stay balanced on and off the field.

You can also check exclusive content about #health-tech, #athlete-wellness-apps, #best-fitness-apps-2025, #athlete-mental-health-apps, #athlete-nutrition-apps, #meditation-apps-for-athletes, #isnation, #good-company, and more.

This story was written by: @isnation.

This article reviews nine top-rated apps designed to help athletes manage training, recovery, mental health, and nutrition. From Prehab to Strava, these tools provide practical ways to prevent burnout, track progress, and build a healthier sport-life balance across both physical and mental performance.

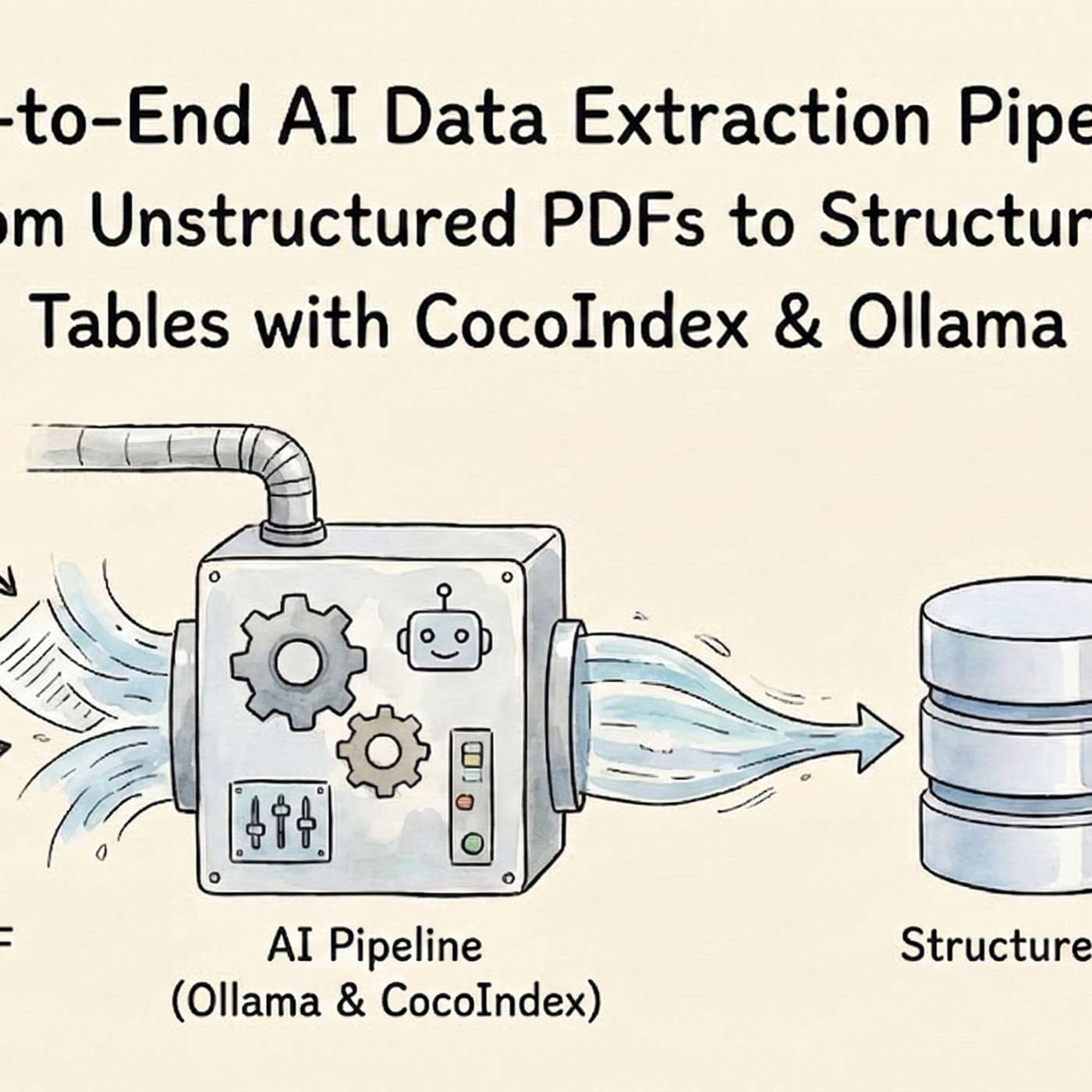

This story was originally published on HackerNoon at: https://hackernoon.com/pdfs-to-intelligence-how-to-auto-extract-python-manual-knowledge-recursively-using-ollama-llms.

Learn how to automate extraction of structured Python module data from PDFs using CocoIndex, LLMs like Llama3, and Ollama. Scale technical documentation by buil

You can also check exclusive content about #ai-data-extraction, #ollama, #llms, #cocoindex, #pdf-documentation, #extraction-pipeline, #python, #cocoinsight, and more.

This story was written by: @badmonster0.

We’ll demonstrate an end-to-end data extraction pipeline engineered for maximum automation, reproducibility, and technical rigor. Our goal is to transform unstructured PDF documentation into precise, structured, and queryable tables. We use the open-source CocoIndex framework and state-of-the-art LLMs (like Meta’s Llama 3) managed locally by Ollama.
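
The LLM step of such a pipeline can be sketched without CocoIndex by calling Ollama's local HTTP API directly. This assumes Ollama is running on its default port (11434) with the llama3 model pulled; the ModuleInfo schema, the prompt and the helper names are hypothetical stand-ins, not the article's CocoIndex-based pipeline.

```python
import json
import urllib.request
from dataclasses import dataclass, field

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


@dataclass
class ModuleInfo:
    # Hypothetical schema for illustration; the article's schema may differ.
    name: str
    summary: str
    functions: list[str] = field(default_factory=list)


PROMPT = (
    "Extract the Python module described in the text below. Reply with JSON of the form "
    '{"name": str, "summary": str, "functions": [str]}.\n\n'
)


def build_request(page_text: str, model: str = "llama3") -> dict:
    # format="json" constrains the model's output to valid JSON;
    # stream=False asks for a single response object instead of chunks.
    return {"model": model, "prompt": PROMPT + page_text, "format": "json", "stream": False}


def parse_response(body: dict) -> ModuleInfo:
    # The generated text is returned in the "response" field.
    data = json.loads(body["response"])
    return ModuleInfo(
        name=data["name"],
        summary=data.get("summary", ""),
        functions=list(data.get("functions", [])),
    )


def extract(page_text: str, model: str = "llama3") -> ModuleInfo:
    """Send one chunk of PDF text to the local model and parse the structured reply."""
    payload = json.dumps(build_request(page_text, model)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return parse_response(json.load(resp))
```

Calling `extract` on each page or section of text pulled from the PDF yields one structured record per module; a framework like CocoIndex then handles the surrounding chunking, re-processing and storage.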

This story was originally published on HackerNoon at: https://hackernoon.com/ai-meeting-tools-are-the-next-productivity-war-heres-why-ticnote-might-win.

TicNote introduces Agentic Intelligence to turn recordings, notes, and documents into structured insight, offering a smarter way to work and understand info.

You can also check exclusive content about #ai-meeting-assistant, #ai-tools-for-business, #ticnote-ai, #multimodal-ai, #ai-podcast-generator, #intelligent-work-platforms, #voice-recognition-ai, #good-company, and more.

This story was written by: @nicafurs.

TicNote enters the crowded AI productivity market with an Agentic OS that moves beyond transcription, turning audio, images, and documents into structured insights, deep research answers, and creative outputs like podcasts. Its multimodal engine and context-aware reasoning aim to redefine how professionals capture, understand, and act on information.

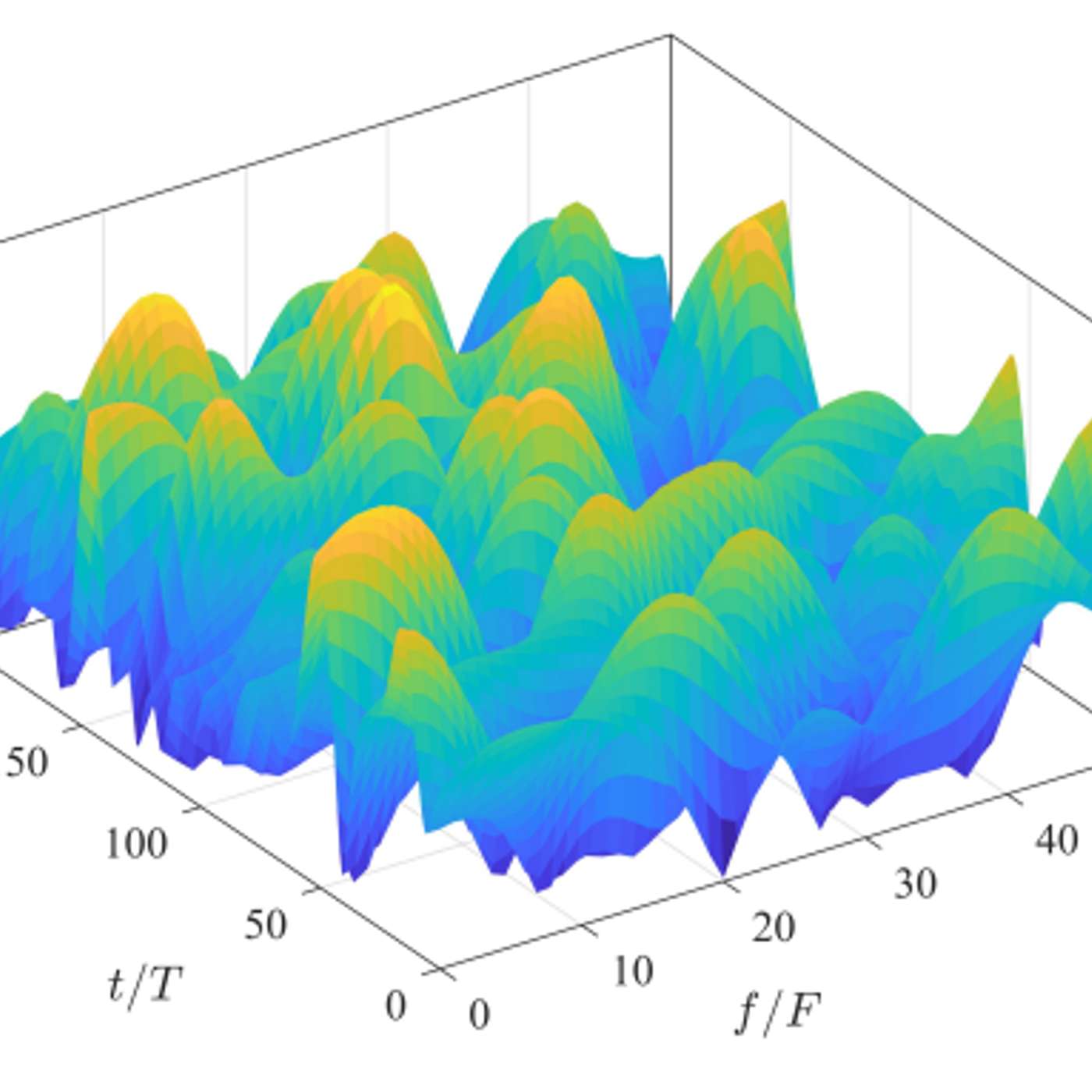

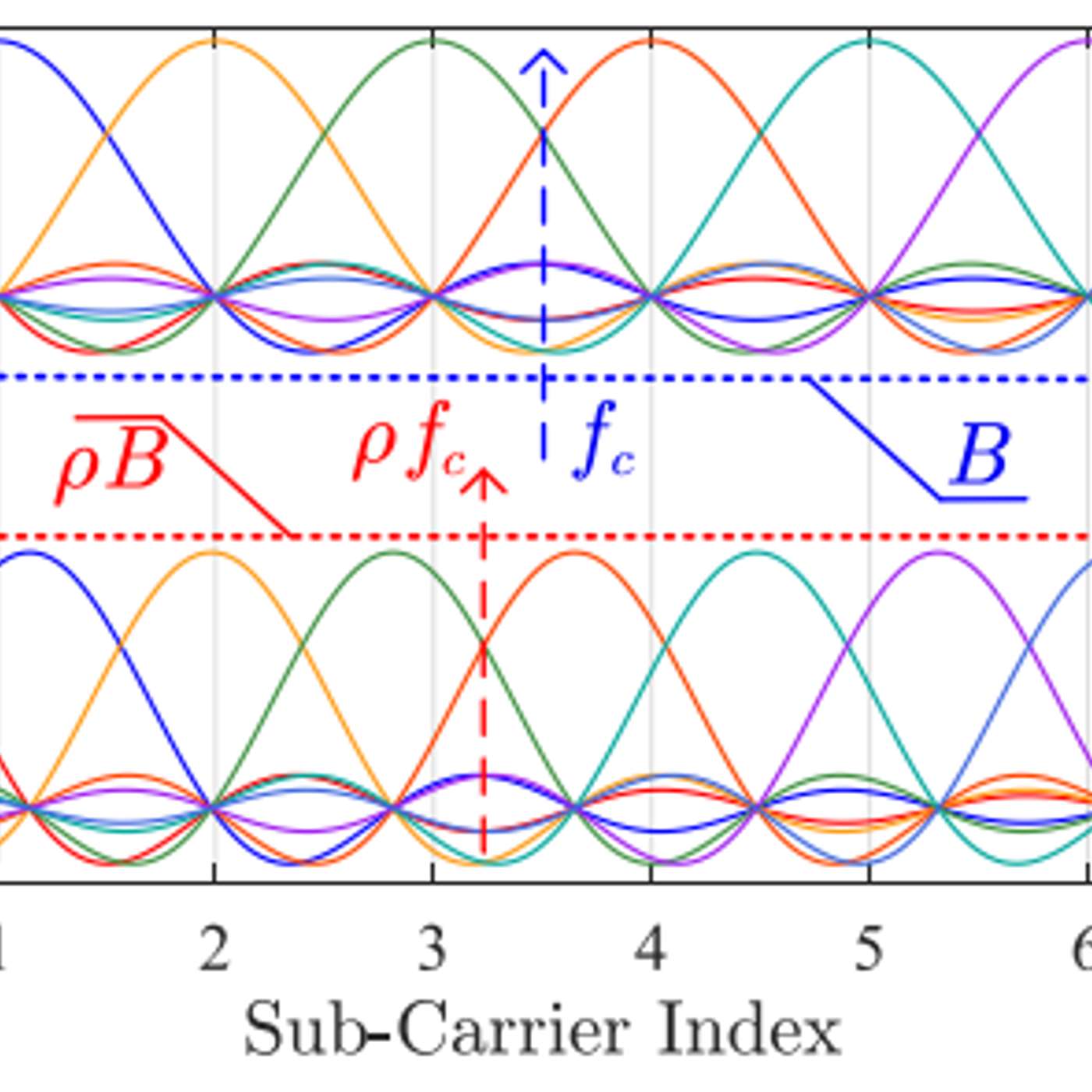

This story was originally published on HackerNoon at: https://hackernoon.com/why-otfs-outperforms-ofdm-in-high-mobility-scenarios.

Explores how OTFS uses D-D domain predictability to interpolate, extrapolate, and track wireless channels, reducing pilot overhead in high-mobility scenarios.

You can also check exclusive content about #wireless-communication-systems, #otfs-modulation, #doubly-dispersive-channels, #spectral-efficiency, #delay-doppler, #aliasing-and-isci, #ltv-channel-models, #mimo-systems, and more.

This story was written by: @extrapolate.

The article explains how OTFS leverages the slow-varying nature of the delay-Doppler domain to interpolate and extrapolate channel states, enabling accurate tracking, lower pilot overhead, and reduced processing delay even in high-mobility, doubly-dispersive environments.
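
The predictability argument can be illustrated with the standard multipath model H(t, f) = Σ_p h_p·e^{j2πν_p t}·e^{−j2πfτ_p}, where each path has a gain h_p, delay τ_p and Doppler shift ν_p. In this sketch the path parameters are assumed to be already estimated and constant over the frame (an idealization, and the values are made up): the delay-Doppler description extrapolates the channel to a later time, while a frozen LTI snapshot taken at t = 0 goes stale.

```python
import cmath
from dataclasses import dataclass


@dataclass
class Path:
    gain: complex      # complex path gain h_p
    delay_s: float     # path delay tau_p, in seconds
    doppler_hz: float  # Doppler shift nu_p, in hertz


def tf_response(paths: list, t: float, f: float) -> complex:
    """H(t, f) = sum_p h_p * exp(j2*pi*nu_p*t) * exp(-j2*pi*f*tau_p)."""
    return sum(
        p.gain
        * cmath.exp(2j * cmath.pi * p.doppler_hz * t)
        * cmath.exp(-2j * cmath.pi * f * p.delay_s)
        for p in paths
    )


# Hypothetical two-path channel: a static path plus a 500 Hz Doppler path.
paths = [Path(1.0 + 0j, 0.0, 0.0), Path(0.5j, 1e-6, 500.0)]
f = 15e3          # frequency offset in Hz (illustrative)
t_future = 1e-3   # 1 ms after the snapshot

lti_snapshot = tf_response(paths, 0.0, f)        # what a time-invariant model keeps using
dd_prediction = tf_response(paths, t_future, f)  # extrapolated from the DD parameters
print(abs(dd_prediction - lti_snapshot))         # error of the frozen snapshot
```

The printed error is about 1.0: 500 Hz over 1 ms is half a cycle, which flips the sign of the second path's 0.5-magnitude term. In this idealized model the DD prediction is exact by construction; in practice its accuracy depends on how well the path parameters are estimated.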

This story was originally published on HackerNoon at: https://hackernoon.com/study-finds-otfs-can-dramatically-cut-channel-training-costs-in-high-mobility-networks.

OTFS significantly reduces channel training overhead in doubly-dispersive channels, outperforming OFDM as Doppler and delay effects increase.

You can also check exclusive content about #wireless-communication-systems, #otfs-modulation, #doubly-dispersive-channels, #spectral-efficiency, #delay-doppler, #aliasing-and-isci, #ltv-channel-models, #mimo-systems, and more.

This story was written by: @extrapolate.

OTFS leverages delay-Doppler domain sparsity to reduce channel training overhead, mitigate aliasing and ISCI, and offer higher spectral efficiency than OFDM in fast-varying, doubly-dispersive wireless channels.
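
A back-of-the-envelope count shows why sparsity lowers training overhead. It assumes that, without exploiting structure, a fast-varying channel needs one complex coefficient per resource element of an M × N time-frequency grid, whereas a P-path delay-Doppler model needs only a gain h_p, a delay τ_p and a Doppler shift ν_p per path. The grid and path numbers are illustrative, not taken from the study:

```latex
\[
\underbrace{M N}_{\text{TF coefficients}}
\quad \text{vs.} \quad
\underbrace{3P}_{(h_p,\ \tau_p,\ \nu_p)}
\qquad \Longrightarrow \qquad
M = 64,\ N = 16,\ P = 4:\quad 1024 \ \text{vs.}\ 12 .
\]
```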

This story was originally published on HackerNoon at: https://hackernoon.com/why-first-order-channel-models-matter-for-high-mobility-wireless-systems.

A clear explanation of why OFDM’s LTI models fail in fast-changing channels and how first-order and delay–Doppler models improve accuracy and performance.

You can also check exclusive content about #wireless-communication-systems, #otfs-modulation, #doubly-dispersive-channels, #spectral-efficiency, #delay-doppler, #aliasing-and-isci, #ltv-channel-models, #mimo-systems, and more.

This story was written by: @extrapolate.

The article explains why OFDM’s traditional LTI channel model collapses in high-mobility environments, showing how first-order Taylor approximations and delay–Doppler domain modeling extend coherence time, reduce estimation overhead, and yield more accurate representations of rapidly varying wireless channels.
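
For the usual multipath model with path gains h_p, delays τ_p and Doppler shifts ν_p, a first-order (Taylor) channel model can be written as below, while the LTI model behind OFDM keeps only the zeroth-order term H(t_0, f). This is a standard expansion offered as a sketch, not necessarily the article's exact notation:

```latex
\[
H(t,f) = \sum_{p} h_p\, e^{j2\pi\nu_p t}\, e^{-j2\pi f\tau_p}
\]
\[
H(t,f) \approx H(t_0,f) + (t - t_0)\,\frac{\partial H}{\partial t}(t_0,f),
\qquad
\frac{\partial H}{\partial t}(t_0,f) = \sum_{p} j2\pi\nu_p\, h_p\, e^{j2\pi\nu_p t_0}\, e^{-j2\pi f\tau_p}.
\]
```

The first-order term scales with both the Doppler shifts ν_p and the elapsed time t − t_0, which is why the LTI approximation fails first in high-mobility settings.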

This story was originally published on HackerNoon at: https://hackernoon.com/how-myriad-and-trust-wallet-built-the-first-native-prediction-market-for-220m-users.

Myriad integrates directly into Trust Wallet as the first native prediction market, reaching 220M users after hitting $100M in trading volume.

You can also check exclusive content about #myraid, #trust-wallet, #cryptocurrency, #dlt, #web3, #defi, #good-company, #myraid-news, and more.

This story was written by: @ishanpandey.

Myriad became the first prediction market integrated directly into Trust Wallet's new Predictions feature, giving it access to 220 million users. The integration comes after Myriad reached $100 million in trading volume with 400,000 active traders. Trust Wallet plans to add Kalshi and Polymarket to create an aggregated prediction market hub. The move reduces friction for users while positioning wallets as full-featured financial platforms beyond basic asset storage. Success depends on sustained engagement rather than event-driven volume spikes.

This story was originally published on HackerNoon at: https://hackernoon.com/cosmic-rays-vs-code-how-a-solar-flare-knocked-the-digital-brains-out-of-6000-airbus-jets.

A single 'bit blip' from a solar flare exposed a critical flaw in the Airbus A320's ELAC L104 software, causing a global safety crisis.

You can also check exclusive content about #aviation, #cybersecurity, #futurism, #airbus-recall, #a320-recall, #bit-flip-airbus, #airbus-issue, #hackernoon-top-story, and more.

This story was written by: @zbruceli.

A single 'bit blip' from a solar flare exposed a critical flaw in the Airbus A320's ELAC L104 software, causing a global safety crisis. Over 6,000 jets were grounded in the largest recall in Airbus history. The cosmos is the new frontier of flight risk.
