Discover ThursdAI - The top AI news from the past week

ThursdAI - The top AI news from the past week

Author: From Weights & Biases, Join AI Evangelist Alex Volkov and a panel of experts to cover everything important that happened in the world of AI from the past week

© Alex Volkov

Description

Every ThursdAI, Alex Volkov hosts a panel of experts, AI engineers, data scientists and prompt spellcasters on Twitter Spaces, as we discuss everything major and important that happened in the world of AI over the past week.

Topics include LLMs, Open source, New capabilities, OpenAI, competitors in AI space, new LLM models, AI art and diffusion aspects and much more.

sub.thursdai.news

136 Episodes

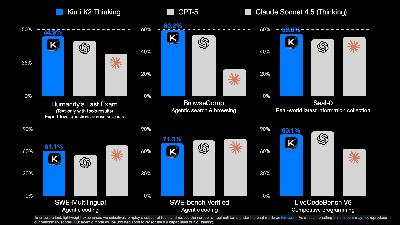

Ho Ho Ho, Alex here! (a real human writing these words, this needs to be said in 2025) Merry Christmas (to those who celebrate) and welcome to the very special yearly ThursdAI recap! This was an intense year in the world of AI, and after 51 weekly episodes (this is episode 52!) we have the ultimate record of all the major and most important AI releases of this year!

So instead of bringing you a weekly update (it’s been a slow week so far, most AI labs are taking a well deserved break, the Chinese AI labs haven’t yet surprised anyone), I’m dropping a comprehensive yearly AI review! Quarter by quarter, month by month, both in written form and as a pod/video!

Why do this? Who even needs this? Isn’t most of it obsolete? I have asked myself this exact question while prepping for the show (it was quite a lot of prep, even with Opus’s help). I eventually landed on, hey, if nothing else, this will serve as a record of the insane year of AI progress we all witnessed. Can you imagine that the term Vibe Coding is less than 1 year old? That Claude Code was released at the start of THIS year? We get hedonically adapted to new AI goodies so quickly, and I figured this will serve as a point-in-time check that we can come back to and feel the acceleration!

With that, let’s dive in.

P.S. the content below is mostly authored by my co-author for this, Opus 4.5 high, which at the end of 2025 I find the best creative writer with the best long context coherence that can imitate my voice and tone (hey, I’m also on a break! 🎅)

“Open source AI has never been as hot as this quarter. We’re accelerating as f*ck, and it’s only just beginning. Hold on to your butts.” - Alex Volkov, ThursdAI Q1 2025

🏆 The Big Picture - 2025 - The Year the AI Agents Became Real

Looking back at 51 episodes and 12 months of relentless AI progress, several mega-themes emerged:

1. 🧠 Reasoning Models Changed Everything

From DeepSeek R1 in January to GPT-5.2 in December, reasoning became the defining capability.
Models now think for hours, call tools mid-thought, and score perfect on math olympiads.

2. 🤖 2025 Was Actually the Year of Agents

We said it in January, and it came true. Claude Code launched the CLI revolution, MCP became the universal protocol, and by December we had ChatGPT Apps, Atlas browser, and AgentKit.

3. 🇨🇳 Chinese Labs Dominated Open Source

DeepSeek, Qwen, MiniMax, Kimi, ByteDance: despite chip restrictions, Chinese labs released the best open weights models all year. Qwen 3, Kimi K2, DeepSeek V3.2 were defining releases.

4. 🎬 We Crossed the Uncanny Valley

VEO3’s native audio, Suno V5’s indistinguishable music, Sora 2’s social platform: 2025 was the year AI-generated media became indistinguishable from human-created content.

5. 💰 The Investment Scale Became Absurd

$500B Stargate, $1.4T compute obligations, $183B valuations, $100-300M researcher packages, LLMs training in space. The numbers stopped making sense.

6. 🏆 Google Made a Comeback

After years of “catching up,” Google delivered Gemini 3, Antigravity, Nano Banana Pro, VEO3, and took the #1 spot (briefly). Don’t bet against Google.

By the Numbers

Q1 2025 - The Quarter That Changed Everything

DeepSeek R1 crashed NVIDIA’s stock, reasoning models went mainstream, and Chinese labs took over open source. The quarter that proved AI isn’t slowing down, it’s just getting started.

Key Themes:
* 🧠 Reasoning models went mainstream (DeepSeek R1, o1, QwQ)
* 🇨🇳 Chinese labs dominated open source (DeepSeek, Alibaba, MiniMax, ByteDance)
* 🤖 2025 declared “The Year of Agents” (OpenAI Operator, MCP won)
* 🖼️ Image generation revolution (GPT-4o native image gen, Ghibli-mania)
* 💰 Massive infrastructure investment (Project Stargate $500B)

January - DeepSeek Shakes the World

(Jan 02 | Jan 10 | Jan 17 | Jan 24 | Jan 30)

The earthquake that shattered the AI bubble.
DeepSeek R1 dropped on January 23rd and became the most impactful open source release ever:
* Crashed NVIDIA stock 17% - $560B loss, largest single-company monetary loss in history
* Hit #1 on the iOS App Store
* Cost allegedly only $5.5M to train (sparking massive debate)
* Matched OpenAI’s o1 on reasoning benchmarks at 50x cheaper pricing
* The 1.5B model beat GPT-4o and Claude 3.5 Sonnet on math benchmarks 🤯

“My mom knows about DeepSeek, your grandma probably knows about it, too” - Alex Volkov

Also this month:
* OpenAI Operator - First agentic ChatGPT (browser control, booking, ordering)
* Project Stargate - $500B AI infrastructure (Manhattan Project for AI)
* NVIDIA Project Digits - $3,000 desktop that runs 200B parameter models
* Kokoro TTS - 82M param model hit #1 on TTS Arena, Apache 2, runs in browser
* MiniMax-01 - 4M context window from Hailuo
* Gemini Flash Thinking - 1M token context with thinking traces

February - Reasoning Mania & The Birth of Vibe Coding

(Feb 07 | Feb 13 | Feb 20 | Feb 28)

The month that redefined how we work with AI.

OpenAI Deep Research (Feb 6) - An agentic research tool that scored 26.6% on Humanity’s Last Exam (vs 10% for o1/R1). Dr. Derya Unutmaz called it “a phenomenal 25-page patent application that would’ve cost $10,000+.”

Claude 3.7 Sonnet & Claude Code (Feb 24-27) - Anthropic’s coding beast hit 70% on SWE-Bench with 8x more output (64K tokens). Claude Code launched as Anthropic’s agentic coding tool, marking the start of the CLI agent revolution.

“Claude Code is just exactly in the right stack, right around the right location... You can do anything you want with a computer through the terminal.” - Yam Peleg

GPT-4.5 (Orion) (Feb 27) - OpenAI’s largest model ever (rumored 10T+ parameters).
62.5% on SimpleQA, foundation for future reasoning models.

Grok 3 (Feb 20) - xAI enters the arena with 1M token context and “free until GPUs melt.”

Andrej Karpathy coins “Vibe Coding” (Feb 2) - The 5.2M view tweet that captured a paradigm shift: developers describe what they want, AI handles implementation.

OpenAI Roadmap Revelation (Feb 13) - Sam Altman announced GPT-4.5 will be the last non-chain-of-thought model. GPT-5 will unify everything.

March - Google’s Revenge & The Ghibli Explosion

(Mar 06 | Mar 13 | Mar 20 | Mar 27)

Gemini 2.5 Pro Takes #1 (Mar 27) - Google reclaimed the LLM crown with AIME jumping nearly 20 points, 1M context, “thinking” integrated into the core model.

GPT-4o Native Image Gen - Ghibli-mania (Mar 27) - The internet lost its collective mind and turned everything into Studio Ghibli. Auto-regressive image gen with perfect text rendering, incredible prompt adherence.

“The internet lost its collective mind and turned everything into Studio Ghibli” - Alex Volkov

MCP Won (Mar 27) - OpenAI officially adopted Anthropic’s Model Context Protocol. No VHS vs Betamax situation. Tools work across Claude AND GPT.

DeepSeek V3 685B - AIME jumped from 39.6% to 59.4%, MIT licensed, best non-reasoning open model.

ThursdAI Turns 2! (Mar 13) - Two years since the first episode about GPT-4.

Open Source Highlights:
* Gemma 3 (1B-27B) - 128K context, multimodal, 140+ languages, single GPU
* QwQ-32B - Qwen’s reasoning model matches R1, runs on Mac
* Mistral Small 3.1 - 24B, beats Gemma 3, Apache 2
* Qwen2.5-Omni-7B - End-to-end multimodal with speech output

Q2 2025 - The Quarter That Shattered Reality

VEO3 crossed the uncanny valley, Claude 4 arrived with 80% SWE-bench, and Qwen 3 proved open source can match frontier models.
The quarter we stopped being able to tell what’s real.

Key Themes:
* 🎬 Video AI crossed the uncanny valley (VEO3 with native audio)
* 🧠 Tool-using reasoning models emerged (o3 calling tools mid-thought)
* 🇨🇳 Open source matched frontier (Qwen 3, Claude 4)
* 📺 Google I/O delivered everything
* 💸 AI’s economic impact accelerated ($300B valuations, 80% price drops)

April - Tool-Using Reasoners & Llama Chaos

(Apr 03 | Apr 10 | Apr 17 | Apr 24)

OpenAI o3 & o4-mini (Apr 17) - The most important reasoning upgrade ever. For the first time, o-series models can use tools during reasoning: web search, Python, image gen. Chain 600+ consecutive tool calls. Manipulate images mid-thought.

“This is almost AGI territory: agents that reason while wielding tools” - Alex Volkov

GPT-4.1 Family (Apr 14) - 1 million token context across all models. Near-perfect recall. GPT-4.5 deprecated.

Meta Llama 4 (Apr 5) - Scout (17B active/109B total) & Maverick (17B active/400B total). LMArena drama (tested model ≠ released model). Community criticism. Behemoth teased but never released.

Gemini 2.5 Flash (Apr 17) - Set “thinking budget” per API call. Ultra-cheap at $0.15/$0.60 per 1M tokens.

ThursdAI 100th Episode! 🎉

May - VEO3 Crosses the Uncanny Valley & Claude 4 Arrives

(May 01 | May 09 | May 16 | May 23 | May 29)

VEO3 - The Undisputed Star of Google I/O (May 20) - Native multimodal audio generation (speech, SFX, music synced perfectly). Perfect lip-sync. Characters understand who’s speaking. Spawned viral “Prompt Theory” phenomenon.

“VEO3 isn’t just video generation, it’s a world simulator. We crossed the uncanny valley this quarter.” - Alex Volkov

Claude 4 Opus & Sonnet - Live Drop During ThursdAI! (May 22) - Anthropic crashed the party mid-show. First models to cross 80% on SWE-bench. Handles 6-7 hour human tasks. Hybrid reasoning + instant response modes.

Qwen 3 (May 1) - The most comprehensive open source release ever: 8 models, Apache 2.0. Runtime /think toggle for chain-of-thought.
4B dense beats Qwen 2.5-72B on multiple benchmarks. 36T training tokens, 119 languages.

“The 30B MoE is ‘Sonnet 3.5 at home’ - 100+ tokens/sec on MacBooks” - Nisten

Google I/O Avalanche:
* Gemini 2.5 Pro Deep Think (84% MMMU)
* Jules (free async coding agent)
* Project Mariner (browser control via API)
* Gemini Ultra tier ($250/mo)

June - The New Normal

(Jun 06 | Jun 13 | Jun 20 | Jun 26)

o3 Price Drop 80% (Jun 12) - From $40/$10 to $8/$2 per million tokens. o3-pro launched at 87% cheaper than o1-pro.

Meta’s $15B Scale AI Power Play (Jun 12) - 49% stake in Scale AI. Alex Wang leads new “Superintelligence team” at Meta. Seven-to-nine-figure comp packages for researchers.

MiniMax M1 - Reasoning MoE That Beats R1 (Jun 19) - 456B total / 45B active par

Hey folks 👋 Alex here, dressed as 🎅 for our pre X-mas episode!

We’re wrapping up 2025, and the AI labs decided they absolutely could NOT let the year end quietly. This week was an absolute banger: we had Gemini 3 Flash dropping with frontier intelligence at flash prices, OpenAI firing off GPT 5.2 Codex as breaking news DURING our show, ChatGPT Images 1.5, Nvidia going all-in on open source with Nemotron 3 Nano, and the voice AI space heating up with Grok Voice and Chatterbox Turbo. Oh, and Google dropped FunctionGemma for all your toaster-to-fridge communication needs (yes, really).

Today’s show was over three and a half hours long because we tried to cover both this week AND the entire year of 2025 (that yearly recap is coming next week; it’s a banger, we went month by month and you’ll really feel the acceleration). For now, let’s dive into just the insanity that was THIS week.

00:00 Introduction and Overview
00:39 Weekly AI News Highlights
01:40 Open Source AI Developments
01:44 Nvidia's Nemotron Series
09:09 Google's Gemini 3 Flash
19:26 OpenAI's GPT Image 1.5
20:33 Infographic and GPT Image 1.5 Discussion
20:53 Nano Banana vs GPT Image 1.5
21:23 Testing and Comparisons of Image Models
23:39 Voice and Audio Innovations
24:22 Grok Voice and Tesla Integration
26:01 Open Source Robotics and Voice Agents
29:44 Meta's SAM Audio Release
32:14 Breaking News: Google Function Gemma
33:23 Weights & Biases Announcement
35:19 Breaking News: OpenAI Codex 5.2 Max

To receive new posts and support my work, consider becoming a free or paid subscriber.

Big Companies LLM updates

Google’s Gemini 3 Flash: The High-Speed Intelligence King

If we had to title 2025, as Ryan Carson mentioned on the show, it might just be “The Year of Google’s Comeback.” Remember at the start of the year when we were asking “Where is Google?” Well, they are here. Everywhere.

This week they launched Gemini 3 Flash, and it is rightfully turning heads.
This is a frontier-class model—meaning it boasts Pro-level intelligence—but it runs at Flash-level speeds and, most importantly, Flash-level pricing. We are talking $0.50 per 1 million input tokens. That is not a typo. The price-to-intelligence ratio here is simply off the charts.I’ve been using Gemini 2.5 Flash in production for a while because it was good enough, but Gemini 3 Flash is a different beast. It scores 71 on the Artificial Analysis Intelligence Index (a 13-point jump from the previous Flash), and it achieves 78% on SWE-bench Verified. That actually beats the bigger Gemini 3 Pro on some agentic coding tasks!What impressed me most, and something Kwindla pointed out, is the tool calling. Previous Gemini models sometimes struggled with complex tool use compared to OpenAI, but Gemini 3 Flash can handle up to 100 simultaneous function calls. It’s fast, it’s smart, and it’s integrated immediately across the entire Google stack—Workspace, Android, Chrome. Google isn’t just releasing models anymore; they are deploying them instantly to billions of users.For anyone building agents, this combination of speed, low latency, and 1 million context window (at this price!) makes it the new default workhorse.Google’s FunctionGemma Open Source releaseWe also got a smaller, quirkier release from Google: FunctionGemma. This is a tiny 270M parameter model. Yes, millions, not billions.It’s purpose-built for function calling on edge devices. It requires only 500MB of RAM, meaning it can run on your phone, in your browser, or even on a Raspberry Pi. As Nisten joked on the show, this is finally the model that lets your toaster talk to your fridge.Is it going to write a novel? No. But after fine-tuning, it jumped from 58% to 85% accuracy on mobile action tasks. 
This represents a future where privacy-first agents live entirely on your device, handling your calendar and apps without ever pinging a cloud server.

OpenAI Image 1.5, GPT 5.2 Codex and ChatGPT Appstore

OpenAI had a busy week, starting with the release of GPT Image 1.5. It’s available now in ChatGPT and the API. The headline here is speed and control: it’s 4x faster than the previous model and 20% cheaper. It also tops the LMSYS Image Arena leaderboards.

However, I have to give a balanced take here. We’ve been spoiled recently by Google’s “Nano Banana Pro” image generation (which powers Gemini). When we looked at side-by-side comparisons, especially with typography and infographic generation, Gemini often looked sharper and more coherent. This is what we call “hedonic adaptation”: GPT Image 1.5 is great, but the bar has moved so fast that it doesn’t feel like the quantum leap DALL-E 3 was back in the day. Still, for production workflows where you need to edit specific parts of an image without ruining the rest, this is a massive upgrade.

🚨 BREAKING: GPT 5.2 Codex

Just as we were nearing the end of the show, OpenAI decided to drop some breaking news: GPT 5.2 Codex.

This is a specialized model optimized specifically for agentic coding, terminal workflows, and cybersecurity. We quickly pulled up the benchmarks live, and they look significant. It hits 56.4% on SWE-Bench Pro and a massive 64% on Terminal-Bench 2.0.

It supports up to 400k token inputs with native context compaction, meaning it’s designed for those long, complex coding sessions where you’re debugging an entire repository. The coolest (and scariest?) stat: a security researcher used this model to find three previously unknown vulnerabilities in React in just one week.

OpenAI is positioning this for “professional software engineering,” and the benchmarks suggest a 30% improvement in token efficiency over the standard GPT 5.2.
We are definitely going to be putting this through its paces in our own evaluations soon.

ChatGPT ... the AppStore!

Also today (OpenAI is really throwing everything they have at the end-of-year release party), OpenAI has unveiled how their App Store is going to look and opened the submission forms to submit your own apps!

Reminder: ChatGPT apps are powered by MCP and were announced during DevDay. They let companies build a full UI experience right inside ChatGPT, and given OpenAI’s almost 900M weekly active users, this is a big deal! Do you have an app you’d like in there? Let me know in the comments!

Open Source AI

🔥 Nvidia Nemotron 3 Nano: The Most Important Open Source Release of the Week (X, HF)

I think the most important release of this week in open source was Nvidia Nemotron 3 Nano, and it was pretty much everywhere. Nemotron is a series of models from Nvidia that’s been pushing efficiency updates, finetune innovations, pruning, and distillations, all the stuff Nvidia does incredibly well.

Nemotron 3 Nano is a 30 billion parameter model with only 3 billion active parameters, using a hybrid Mamba-MoE architecture. This is huge. The model achieves 1.5 to 3.3x faster inference on H200 GPUs than competing models like Qwen 3 while maintaining competitive accuracy.

But the specs aren’t even the most exciting part. NVIDIA didn’t just dump the weights over the wall. They released the datasets: all 25 trillion tokens of pre-training and post-training data. They released the recipes. They released the technical reports. This is what “Open AI” should actually look like.

What’s next?
Nemotron 3 Super at 120B parameters (4x Nano) and Nemotron 3 Ultra at 480B parameters (16x Nano) are coming in the next few months, featuring their innovative Latent Mixture of Experts architecture.

Check out the release on HuggingFace

Other Open Source Highlights

LDJ brought up BOLMO from Allen AI, the first byte-level model that actually reaches parity with similar-size models using regular tokenization. This is really exciting because it could open up new possibilities for spelling accuracy, precise code editing, and potentially better omnimodality since ultimately everything is bytes: images, audio, everything.

Wolfram highlighted OLMO 3.1, also from Allen AI, which is multimodal with video input in three sizes (4B, 7B, 8B). The interesting feature here is that you can give it a video, ask something like “how many times does a ball hit the crown?” and it’ll not only give you the answer but mark the precise coordinates on the video frames where it happens. Very cool for tracking objects throughout a video!

Mistral OCR 3 (X)

Mistral also dropped Mistral OCR 3 this week, their next-generation document intelligence model achieving a 74% win rate over OCR 2 across challenging document types. We’re talking forms, low-quality scans, handwritten text, complex tables, and multilingual documents.

The pricing is aggressive at just $2 per 1,000 pages (or $1 with Batch API discount), and it outperforms enterprise solutions like AWS Textract, Azure Doc AI, and Google DocSeek. Available via API and their new Document AI Playground.

🐝 This Week’s Buzz: Wolfram Joins Weights & Biases!

I am so, so hyped to announce this. Our very own co-host and evaluation wizard, Wolfram Ravenwolf, is officially joining the Weights & Biases / CoreWeave family as an AI Evangelist and “AIvaluator” starting in January!

Wolfram has been the backbone of the “vibe checks” and deep-dive evals on this show for a long time.
Now, he’ll be doing it full-time, building out benchmarks for the community and helping all of us make sense of this flood of models. Expect ThursdAI to get even more data-driven in 2026. Match made in heaven! And if you’re as excited as we are, give Weave a try, it’s free to get started!Voice & Audio: Faster, Cheaper, BetterIf 2025 was the year of the LLM comeback, the end of 2025 is the era of Voice AI commoditization. It is getting so cheap and so fast.Grok Voice Agent API (X)xAI launched their Grok Voice Agent API, and the pricing is aggressive: $0.05 per minute flat rate. That significantly undercuts OpenAI and others. But the real killer feature here is the integration.If you drive a Tesla, this is what powers the voice command when you hold down the button. It has native access to vehicle controls, but for developers, it has native tool calling for Real-time X Search. Th

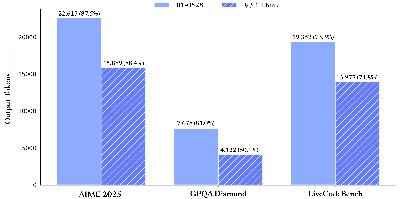

Hey everyone, December started strong and does NOT want to slow down!? OpenAI showed us their response to the Code Red and it’s GPT 5.2, which doesn’t feel like a .1 upgrade! We got it literally as breaking news at the end of the show, and oh boy! The new king of LLMs is here. GPT, then Gemini, then Opus and now GPT again... Who else feels like we’re on a trippy AI rollercoaster? Just me? 🫨

I’m writing this newsletter from a fresh “traveling podcaster” setup in SF (huge shoutout to the Chroma team for the studio hospitality).

P.S. - Next week we’re doing a year recap episode (52nd episode of the year, what is my life), but today is about the highest-signal stuff that happened this week.

Alright. No more foreplay. Let’s dive in. Please subscribe.

🔥 The main event: OpenAI launches GPT‑5.2 (and it’s… a lot)

We started the episode with “garlic in the air” rumors (OpenAI holiday launches always have that Christmas panic energy), and then… boom: GPT‑5.2 actually drops while we’re live.

What makes this release feel significant isn’t “one benchmark went up.” It’s that OpenAI is clearly optimizing for the things that have become the frontier in 2025: long-horizon reasoning, agentic coding loops, long context reliability, and lower hallucination rates when browsing/tooling is involved.

5.2 Instant, Thinking and Pro in ChatGPT and in the API

OpenAI shipped multiple variants, and even within those there are “levels” (medium/high/extra-high) that effectively change how much compute the model is allowed to burn. At the extreme end, you’re basically running parallel thoughts and selecting winners. That’s powerful, but also… very expensive.

It’s very clearly aimed at the agentic world: coding agents that run in loops, tool-using research agents, and “do the whole task end-to-end” workflows where spending extra tokens is still cheaper than spending an engineer day.

Benchmarks

I’m not going to pretend benchmarks tell the full story (they never do), but the shape of improvements matters.
GPT‑5.2 shows huge strength on reasoning + structured work.It hits 90.5% on ARC‑AGI‑1 in the Pro X‑High configuration, and 54%+ on ARC‑AGI‑2 depending on the setting. For context, ARC‑AGI‑2 is the one where everyone learns humility again.On math/science, this thing is flexing. We saw 100% on AIME 2025, and strong performance on FrontierMath tiers (with the usual “Tier 4 is where dreams go to die” vibe still intact). GPQA Diamond is up in the 90s too, which is basically “PhD trivia mode.”But honestly the most practically interesting one for me is GDPval (knowledge-work tasks: slides, spreadsheets, planning, analysis). GPT‑5.2 lands around 70%, which is a massive jump vs earlier generations. This is the category that translates directly into “is this model useful at my job.” - This is a bench that OpenAI launched only in September and back then, Opus 4.1 was a “measly” 47%! Talk about acceleration! Long context: MRCR is the sleeper highlightOn MRCR (multi-needle long-context retrieval), GPT‑5.2 holds up absurdly well even into 128k and beyond. The graph OpenAI shared shows GPT‑5.1 falling off a cliff as context grows, while GPT‑5.2 stays high much deeper into long contexts.If you’ve ever built a real system (RAG, agent memory, doc analysis) you know this pain: long context is easy to offer, hard to use well. If GPT‑5.2 actually delivers this in production, it’s a meaningful shift.Hallucinations: down (especially with browsing)One thing we called out on the show is that a bunch of user complaints in 2025 have basically collapsed into one phrase: “it hallucinates.” Even people who don’t know what a benchmark is can feel when a model confidently lies.OpenAI’s system card shows lower rates of major incorrect claims compared to GPT‑5.1, and lower “incorrect claims” overall when browsing is enabled. 
That’s exactly the direction they needed.Real-world vibes:We did the traditional “vibe tests” mid-show: generate a flashy landing page, do a weird engineering prompt, try some coding inside Cursor/Codex.Early testers broadly agree on the shape of the improvement. GPT‑5.2 is much stronger in reasoning, math, long‑context tasks, visual understanding, and multimodal workflows, with multiple reports of it successfully thinking for one to three hours on hard problems. Enterprise users like Box report faster execution and higher accuracy on real knowledge‑worker tasks, while researchers note that GPT‑5.2 Pro consistently outperforms the standard “Thinking” variant. The tradeoffs are also clear: creative writing still slightly favors Claude Opus, and the highest reasoning tiers can be slow and expensive. But as a general‑purpose reasoning model, GPT‑5.2 is now the strongest publicly available option.AI in space: Starcloud trains an LLM on an H100 in orbitThis story is peak 2025.Starcloud put an NVIDIA H100 on a satellite, trained Andrej Karpathy’s nanoGPT on Shakespeare, and ran inference on Gemma. There’s a viral screenshot vibe here that’s impossible to ignore: SSH into an H100… in space… with a US flag in the corner. It’s engineered excitement, and I’m absolutely here for it.But we actually had a real debate on the show: is “GPUs in space” just sci‑fi marketing, or does it make economic sense?Nisten made a compelling argument that power is the real bottleneck, not compute, and that big satellites already operate in the ~20kW range. If you can generate that power reliably with solar in orbit, the economics start looking less insane than you’d think. LDJ added the long-term land/power convergence argument: Earth land and grid power get scarcer/more regulated, while launch costs trend down—eventually the curves may cross.I played “voice of realism” for a minute: what happens when GPUs fail? It’s hard enough to swap a GPU in a datacenter, now imagine doing it in orbit. 
Cooling and heat dissipation become a different engineering problem too (radiators instead of fans). Networking is nontrivial. But also: we are clearly entering the era where people will try weird infra ideas because AI demand is pulling the whole economy.

Big Company: MCP gets donated, OpenRouter drops a report on AI

Agentic AI Foundation Lands at the Linux Foundation

This one made me genuinely happy.

Block, Anthropic, and OpenAI came together to launch the Agentic AI Foundation under the Linux Foundation, donating key projects like MCP, AGENTS.md, and goose. This is exactly how standards should happen: vendor‑neutral, boring governance, lots of stakeholders.

It’s not flashy work, but it’s the kind of thing that actually lets ecosystems grow without fragmenting. BTW, I was recording my podcast while Latent.Space were recording theirs in the same office, and they have a banger episode upcoming about this very topic! All I’ll say is Alessio Fanelli introduced me to David Soria Parra from MCP 👀 Watch out for that episode on Latent.Space dropping soon!

OpenRouter’s “State of AI”: 100 Trillion Tokens of Reality

OpenRouter and a16z dropped a massive report analyzing over 100 trillion tokens of real‑world usage. A few things stood out:

Reasoning tokens now dominate. They make up more than half, around 60%, of all tokens since early 2025. Remember when we went from “LLMs can’t do math” to reasoning models? That happened in about a year.

Programming exploded. From 11% of usage in early 2025 to over 50% recently. Claude holds 60% of the coding market (at least on OpenRouter).

Open source hit 30% market share, led by Chinese labs: DeepSeek (14T tokens), Qwen (5.59T), Meta LLaMA (3.96T).

Context lengths grew massively. Average prompt length went from 1.5k to 6k+ tokens (4x growth), completions from 133 to 400 tokens (3x).

The “Glass Slipper” effect. When users find a model that fits their use case, they stay loyal. Foundational early-user cohorts retain around 40% at month 5.
Claude 4 Sonnet still had 50% retention after three months.Geography shift. Asia doubled to 31% of usage (China key), while North America is at 47%.Yam made a good point that we should be careful interpreting these graphs—they’re biased toward people trying new models, not necessarily steady usage. But the trends are clear: agentic, reasoning, and coding are the dominant use cases.Open Source Is Not Slowing Down (If Anything, It’s Accelerating)One of the strongest themes this week was just how fast open source is closing the gap — and in some areas, outright leading. We’re not talking about toy demos anymore. We’re talking about serious models, trained from scratch, hitting benchmarks that were frontier‑only not that long ago.Essential AI’s Rnj‑1: A Real Frontier 8B ModelThis one deserves real attention. Essential AI — led by Ashish Vaswani, yes Ashish from the original Transformers paper — released Rnj‑1, a pair of 8B open‑weight models trained fully from scratch. No distillation. No “just a fine‑tune.” This is a proper pretrain.What stood out to me isn’t just the benchmarks (though those are wild), but the philosophy. Rnj‑1 is intentionally focused on pretraining quality: data curation, code execution simulation, STEM reasoning, and agentic behaviors emerging during pretraining instead of being bolted on later with massive RL pipelines.In practice, that shows up in places like SWE‑bench Verified, where Rnj‑1 lands in the same ballpark as much larger closed models, and in math and STEM tasks where it punches way above its size. And remember: this is an 8B model you can actually run locally, quantize aggressively, and deploy without legal gymnastics thanks to its Apache 2.0 license.Mistral Devstral 2 + Vibe: Open Coding Goes HardMistral followed up last week’s momentum with Devstral 2, and Mistral Vibe! 
The headline numbers are: the 123B Devstral 2 model lands right at the top of open‑weight coding benchmarks, nearly matching Claude 3.5 Sonnet on SWE‑bench Verified. But what really excited the panel was the 24B Devstral Small 2, which hits high‑60s SWE‑bench scores while being runnable on consumer hardware.This is the kind of model you can realistically run

Hey y’all, Alex here 🫡 Welcome to the first ThursdAI of December! Snow is falling in Colorado, and AI releases are falling even harder. This week was genuinely one of those “drink from the firehose” weeks where every time I refreshed my timeline, another massive release had dropped.

We kicked off the show asking our co-hosts for their top AI pick of the week, and the answers were all over the map: Wolfram was excited about Mistral’s return to Apache 2.0, Yam couldn’t stop talking about Claude Opus 4.5 after a full week of using it, and Nisten came out of left field with an AWQ quantization of Prime Intellect’s model that apparently runs incredibly fast on a single GPU. As for me? I’m torn between Opus 4.5 (which literally fixed bugs that Gemini 3 created in my code) and DeepSeek’s gold-medal winning reasoning model.

Speaking of which, let’s dive into what happened this week, starting with the open source stuff that’s been absolutely cooking.

Open Source LLMs

DeepSeek V3.2: The Whale Returns with Gold Medals

The whale is back, folks! DeepSeek released two major updates this week: V3.2 and V3.2-Speciale. And these aren’t incremental improvements. We’re talking about an open reasoning-first model that’s rivaling GPT-5 and Gemini 3 Pro with actual gold medal Olympiad wins.

Here’s what makes this release absolutely wild: DeepSeek V3.2-Speciale is achieving 96% on AIME versus 94% for GPT-5 High. It’s getting gold medals on IMO (35/42), CMO, ICPC (10/12), and IOI (492/600). This is a 685 billion parameter MOE model with MIT license, and it literally broke the benchmark graph on HMMT 2025: the score was so high it went outside the chart boundaries. That’s how you DeepSeek, basically.

But it’s not just about reasoning. The regular V3.2 (not Speciale) is absolutely crushing it on agentic benchmarks: 73.1% on SWE-Bench Verified, first open model over 35% on Tool Decathlon, and 80.3% on τ²-bench.
It’s now the second most intelligent open weights model and ranks ahead of Grok 4 and Claude Sonnet 4.5 on Artificial Analysis.The price is what really makes this insane: 28 cents per million tokens on OpenRouter. That’s absolutely ridiculous for this level of performance. They’ve also introduced DeepSeek Sparse Attention (DSA) which gives you 2-3x cheaper 128K inference without performance loss. LDJ pointed out on the show that he appreciates how transparent they’re being about not quite matching Gemini 3’s efficiency on reasoning tokens, but it’s open source and incredibly cheap.One thing to note: V3.2-Speciale doesn’t support tool calling. As Wolfram pointed out from the model card, it’s “designed exclusively for deep reasoning tasks.” So if you need agentic capabilities, stick with the regular V3.2.Check out the full release on Hugging Face or read the announcement.Mistral 3: Europe’s Favorite AI Lab Returns to Apache 2.0Mistral is back, and they’re back with fully open Apache 2.0 licenses across the board! This is huge news for the open source community. They released two major things this week: Mistral Large 3 and the Ministral 3 family of small models.Mistral Large 3 is a 675 billion parameter MOE with 41 billion active parameters and a quarter million (256K) context window, trained on 3,000 H200 GPUs. There’s been some debate about this model’s performance, and I want to address the elephant in the room: some folks saw a screenshot showing Mistral Large 3 very far down on Artificial Analysis and started dunking on it. But here’s the key context that Merve from Hugging Face pointed out—this is the only non-reasoning model on that chart besides GPT 5.1. When you compare it to other instruction-tuned (non-reasoning) models, it’s actually performing quite well, sitting at #6 among open models on LMSys Arena.Nisten checked LM Arena and confirmed that on coding specifically, Mistral Large 3 is scoring as one of the best open source coding models available. 
Yam made an important point that we should compare Mistral to other open source players like Qwen and DeepSeek rather than to closed models—and in that context, this is a solid release.But the real stars of this release are the Ministral 3 small models: 3B, 8B, and 14B, all with vision capabilities. These are edge-optimized, multimodal, and the 3B actually runs completely in the browser with WebGPU using transformers.js. The 14B reasoning variant achieves 85% on AIME 2025, which is state-of-the-art for its size class. Wolfram confirmed that the multilingual performance is excellent, particularly for German.There’s been some discussion about whether Mistral Large 3 is a DeepSeek finetune given the architectural similarities, but Mistral claims these are fully trained models. As Nisten noted, even if they used similar architecture (which is Apache 2.0 licensed), there’s nothing wrong with that—it’s an excellent architecture that works. Lucas Atkins later confirmed on the show that “Mistral Large looks fantastic... it is DeepSeek through and through architecture wise. But Kimi also does that—DeepSeek is the GOAT. Training MOEs is not as easy as just import deepseak and train.”Check out Mistral Large 3 and Ministral 3 on Hugging Face.Arcee Trinity: US-Trained MOEs Are BackWe had Lucas Atkins, CTO of Arcee AI, join us on the show to talk about their new Trinity family of models, and this conversation was packed with insights about what it takes to train MOEs from scratch in the US.Trinity is a family of open-weight MOEs fully trained end-to-end on American infrastructure with 10 trillion curated tokens from Datology.ai. They released Trinity-Mini (26B total, 3B active) and Trinity-Nano-Preview (6B total, 1B active), with Trinity-Large (420B parameters, 13B active) coming in mid-January 2026.The benchmarks are impressive: Trinity-Mini hits 84.95% on MMLU (0-shot), 92.1% on Math-500, and 65% on GPQA Diamond. 
But what really caught my attention was the inference speed—Nano generates at 143 tokens per second on llama.cpp, and Mini hits 157 t/s on consumer GPUs. They’ve even demonstrated it running on an iPhone via MLX Swift.I asked Lucas why it matters where models come from, and his answer was nuanced: for individual developers, it doesn’t really matter—use the best model for your task. But for Fortune 500 companies, compliance and legal teams are getting increasingly particular about where models were trained and hosted. This is slowing down enterprise AI adoption, and Trinity aims to solve that.Lucas shared a fascinating insight about why they decided to do full pretraining instead of just post-training on other people’s checkpoints: “We at Arcee were relying on other companies releasing capable open weight models... I didn’t like the idea of the foundation of our business being reliant on another company releasing models.” He also dropped some alpha about Trinity-Large: they’re going with 13B active parameters instead of 32B because going sparser actually gave them much faster throughput on Blackwell GPUs.The conversation about MOEs being cheaper for RL was particularly interesting. Lucas explained that because MOEs are so inference-efficient, you can do way more rollouts during reinforcement learning, which means more RL benefit per compute dollar. This is likely why we’re seeing labs like MiniMax go from their original 456B/45B-active model to a leaner 220B/10B-active model—they can get more gains in post-training by being able to do more steps.Check out Trinity-Mini and Trinity-Nano-Preview on Hugging Face, or read The Trinity Manifesto.OpenAI Code Red: Panic at the Disco (and Garlic?)It was ChatGPT’s 3rd birthday this week (Nov 30th), but the party vibes seem… stressful. Reports came out that Sam Altman has declared a “Code Red” at OpenAI.Why? Gemini 3.The user numbers don’t lie. 
ChatGPT apparently saw a 6% drop in daily active users following the Gemini 3 launch. Google’s integration is just too good, and their free tier is compelling.In response, OpenAI has supposedly paused “side projects” (ads, shopping bots) to focus purely on model intelligence and speed. Rumors point to a secret model codenamed “Garlic”—a leaner, more efficient model that beats Gemini 3 and Claude Opus 4.5 on coding reasoning, targeting a release in early 2026 (or maybe sooner if they want to save Christmas).Wolfram and Yam nailed the sentiment here: Integration wins. Wolfram’s family uses Gemini because it’s right there on the Pixel, controlling the lights and calendar. OpenAI needs to catch up not just on IQ, but on being helpful in the moment.Post the live show, OpenAI also finally added GPT 5.1 Codex Max we covered 2 weeks ago to their API and it’s now available in Cursor, for free, until Dec 11! Amazon Nova 2: Enterprise Push with Serious Agentic ChopsAmazon came back swinging with Nova 2, and the jump on Artificial Analysis is genuinely impressive—from around 30% to 61% on their index. That’s a massive improvement.The family includes Nova 2 Lite (7x cheaper, 5x faster than Nova Premier), Nova 2 Pro (93% on τ²-Bench Telecom, 70% on SWE-Bench Verified), Nova 2 Sonic (speech-to-speech with 1.39s time-to-first-audio), and Nova 2 Omni (unified text/image/video/speech with 1M token context window—you can upload 90 minutes of video!).Gemini 3 Deep Think ModeGoogle launched Gemini 3 Deep Think mode exclusively for AI Ultra subscribers, and it’s hitting some wild benchmarks: 45.1% on ARC-AGI-2 (a 2x SOTA leap using code execution), 41% on Humanity’s Last Exam, and 93.8% on GPQA Diamond. This builds on their Gemini 2.5 variants that earned gold medals at IMO and ICPC World Finals. 
The parallel reasoning approach explores multiple hypotheses simultaneously, but it’s compute-heavy: limited to 10 prompts per day, at $77 per ARC-AGI-2 task.

This Week’s Buzz: Mid-Training Evals are Here!

A huge update from us at Weights & Biases this week: we launched LLM Evaluation Jobs. (Docs)

If you are training or fine-tuning models, you usually wait until the end to run your expensive benchmarks. Now, directly inside W&B, you can trigger

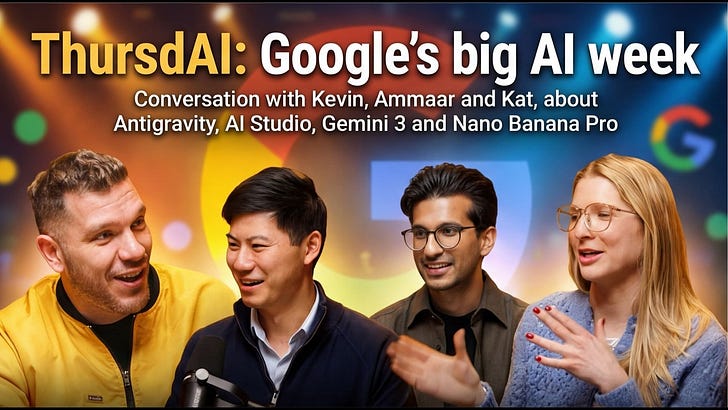

Hey, Alex here. I recorded these conversations right in front of the AI Engineer auditorium, back to back, after these great folks gave their talks, at the peak of the most epic AI week we’ve seen since I started recording ThursdAI.

This is less our traditional live recording and more a real podcast-y conversation with great folks, inspired by Latent.Space. I hope you enjoy this format as much as I’ve enjoyed recording and editing it.

Antigravity with Kevin

Kevin Hou and team just launched Antigravity, Google’s brand new agentic IDE based on VS Code, and Kevin (second timer on ThursdAI) was awesome enough to hop on and talk about some of the product decisions they made and what makes Antigravity special. He also highlighted Artifacts as a completely new primitive.

Gemini 3 in AI Studio

If you aren’t using Google’s AI Studio (ai.dev), you’re missing out! We talk about AI Studio all the time on the show, and I’m a daily user! I generate most of my images with Nano Banana Pro in there, and most of my Gemini conversations happen there as well!

Ammaar and Kat were so fun to talk to. They covered the newly shipped “build mode”, which lets you vibe code full apps and experiences inside AI Studio, and we also covered Gemini 3’s features, multimodal understanding, and UI capabilities. These folks gave a LOT of Gemini 3 demos, so they know everything there is to know about this model’s capabilities!

I tried new things with this one: multiple camera angles and conversations with great folks. If you found this content valuable, please subscribe :)

Topics Covered:
* Inside Google’s new “Antigravity” IDE
* How the “Agent Manager” changes coding workflows
* Gemini 3’s new multimodal capabilities
* The power of “Artifacts” and dynamic memory
* Deep dive into AI Studio updates & Vibe Coding
* Generating 4K assets with Nano Banana Pro

Timestamps for your viewing convenience.

00:00 - Introduction and Overview
01:13 - Conversation with Kevin Hou: Antigravity IDE
01:58 - Gemini 3 and Nano Banana Pro Launch Insights
03:06 - Innovations in Antigravity IDE
06:56 - Artifacts and Dynamic Memory
09:48 - Agent Manager and Multimodal Capabilities
11:32 - Chrome Integration and Future Prospects
20:11 - Conversation with Ammaar and Kat: AI Studio Team
21:21 - Introduction to AI Studio
21:51 - What is AI Studio?
22:52 - Ease of Use and User Feedback
24:06 - Live Demos and Launch Week
26:00 - Design Innovations in AI Studio
30:54 - Generative UIs and Vibe Coding
33:53 - Nano Banana Pro and Image Generation
39:45 - Voice Interaction and Future Roadmap
44:41 - Conclusion and Final Thoughts

Looking forward to seeing you on Thursday 🫡

P.S - I’ve recorded one more conversation during AI Engineer and will be posting it soon. Same format, very interesting person, so look out for that!

This is a public episode. If you'd like to discuss this with other subscribers or get access to bonus episodes, visit sub.thursdai.news/subscribe

Hey y’all, Happy Thanksgiving to everyone who celebrates, and thank you for being a subscriber. I truly appreciate each and every one of you!

Just wrapped up the third (1, 2) Thanksgiving special episode of ThursdAI. Can you believe November is almost over?

We had another banger week in AI, with a full feast of AI releases: Anthropic dropped the long-awaited Opus 4.5, which quickly became the top coding LLM; DeepSeek resurfaced with a math model; BFL and Tongyi both tried to take on Nano Banana; and Microsoft dropped a 7B computer-use model in open source, plus we got Intellect 3 from Prime Intellect!

With so much news to cover, we also had an interview with Ido Sal & Liad Yosef (their second time on the show!) about MCP-Apps, the new standard they are spearheading together with Anthropic, OpenAI & more!

Exciting episode, let’s get into it! (P.S - I started generating infographics, so the show became much more visual, LMK if you like them)

ThursdAI - I put a lot of work on a weekly basis to bring you the live show, podcast and a sourced newsletter! Please subscribe if you find this content valuable!

Anthropic’s Opus 4.5: The “Premier Intelligence” Returns (Blog)

Folks, Anthropic absolutely cooked. After Sonnet and Haiku had their time in the sun, the big brother is finally back. Opus 4.5 launched this week, and it is reclaiming the throne for coding and complex agentic tasks.

First off, the specs are monstrous. It hits 80.9% on SWE-bench Verified, topping GPT-5.1 (77.9%) and Gemini 3 Pro (76.2%). But the real kicker? The price! It is now $5 per million input tokens and $25 per million output tokens, literally one-third the cost of the previous Opus.

Yam, our resident coding wizard, put it best during the show: “Opus knows a lot of tiny details about the stack that you didn’t even know you wanted...
It feels like it can go forever.” Unlike Sonnet, which sometimes spirals or loses context on extremely long tasks, Opus 4.5 maintains coherence deep into the conversation.Anthropic also introduced a new “Effort” parameter, allowing you to control how hard the model thinks (similar to o1 reasoning tokens). Set it to high, and you get massive performance gains; set it to medium, and you get Sonnet-level performance at a fraction of the token cost. Plus, they’ve added Tool Search (cutting enormous token overhead for agents with many tools) and Programmatic Tool Calling, which effectively lets Opus write and execute code loops to manage data.If you are doing heavy software engineering or complex automations, Opus 4.5 is the new daily driver.📱 The Agentic Web: MCP Apps & MCP-UI StandardSpeaking of MCP updates, Can you believe it’s been exactly one year since the Model Context Protocol (MCP) launched? We’ve been “MCP-pilled” for a while, but this week, the ecosystem took a massive leap forward.We brought back our friends Ido and Liad, the creators of MCP-UI, to discuss huge news: MCP-UI has been officially standardized as MCP Apps. This is a joint effort adopted by both Anthropic and OpenAI.Why does this matter? Until now, when an LLM used a tool (like Spotify or Zillow), the output was just text. It lost the brand identity and the user experience. With MCP Apps, agents can now render full, interactive HTML interfaces directly inside the chat! Ido and Liad explained that they worked hard to avoid an “iOS vs. Android” fragmentation war. 
Instead of every lab building their own proprietary app format, we now have a unified standard for the “Agentic Web.” This is how AI stops being a chatbot and starts being an operating system.Check out the standard at mcpui.dev.🦃 The Open Source Thanksgiving FeastWhile the big labs were busy, the open-source community decided to drop enough papers and weights to feed us for a month.Prime Intellect unveils INTELLECT-3, a 106B MoE (X, HF, Blog, Try It)Prime Intellect releases INTELLECT-3, a 106B parameter Mixture-of-Experts model (12B active params) based on GLM-4.5-Air, achieving state-of-the-art performance for its size—including ~90% on AIME 2024/2025 math contests, 69% on LiveCodeBench v6 coding, 74% on GPQA-Diamond reasoning, and 74% on MMLU-Pro—outpacing larger models like DeepSeek-R1. Trained over two months on 512 H200 GPUs using their fully open-sourced end-to-end stack (PRIME-RL async trainer, Verifiers & Environments Hub, Prime Sandboxes), it’s now hosted on Hugging Face, OpenRouter, Parasail, and Nebius, empowering any team to scale frontier RL without big-lab resources. Especially notable is their very detailed release blog, covering how a lab that previously trained 32B, finetunes a monster 106B MoE model! Tencent’s HunyuanOCR: Small but Mighty (X, HF, Github, Blog)Tencent released HunyuanOCR, a 1 billion parameter model that is absolutely crushing benchmarks. It scored 860 on OCRBench, beating massive models like Qwen3-VL-72B. It’s an end-to-end model, meaning no separate detection and recognition steps. Great for parsing PDFs, docs, and even video subtitles. It’s heavily restricted (no EU/UK usage), but technically impressive.Microsoft’s Fara-7B: On-Device Computer UseMicrosoft quietly dropped Fara-7B, a model fine-tuned from Qwen 2.5, specifically designed for computer use agentic tasks. It hits 73.5% on WebVoyager, beating OpenAI’s preview models, all while running locally on-device. 
This is the dream of a local agent that can browse the web for you, click buttons, and book flights without sending screenshots to the cloud.DeepSeek-Math-V2: open-weights IMO-gold math LLM (X, HF)DeepSeek-Math-V2 is a 685B-parameter, Apache-2.0 licensed, open-weights mathematical reasoning model claiming gold-medal performance on IMO 2025 and CMO 2024, plus a near-perfect 118/120 on Putnam 2024. Nisten did note some limitations—specifically that the context window can get choked up on extremely long, complex proofs—but having an open-weight model of this caliber is a gift to researchers everywhere.🐝 This Week’s Buzz: Serverless LoRA InferenceA huge update from us at Weights & Biases! We know fine-tuning is powerful, but serving those fine-tunes can be a pain and expensive. We just launched Serverless LoRA Inference.This means you can upload your small LoRA adapters (which you can train cheaply) to W&B Artifacts, and we will serve them instantly on CoreWeave GPUs on top of a base model. No cold starts, no dedicated expensive massive GPU instances for just one adapter.I showed a demo of a “Mocking SpongeBob” model I trained in 25 minutes. It just adds that SaRcAsTiC tExT style to the Qwen 2.5 base model. You pass the adapter ID in the API call, and boom—customized intelligence instantly. You can get more details HERE and get started with your own LORA in this nice notebook the team made! 🎨 Visuals: Image & Video Generation ExplosionFlux.2: The Multi-Reference Image Creator from BFL (X, HF, Blog)Black Forest Labs released Flux.2, a series of models including a 32B Flux 2[DEV]. The killer feature here is Multi-Reference Editing. You can feed it up to 10 reference images to maintain character consistency, style, or specific objects. 
It also outputs native 4-megapixel images.Honestly, the launch timing was rough, coming right after Google’s Nano Banana Pro and alongside Z-Image, but for precise, high-res editing, this is a serious tool.Tongyi drops Z-Image Turbo: 6B single-stream DiT lands sub‑second, 8‑step text‑to‑image (GitHub, Hugging Face)Alibaba’s Tongyi Lab released Z-Image Turbo, a 6B parameter model that generates images in sub-second time on H800s (and super fast on consumer cards).I built a demo to show just how fast this is. You know that “Infinite Craft“ game? I hooked it up to Z-Image Turbo so that every time you combine elements (like Pirate + Ghost), it instantly generates the image for “Ghost Pirate.” It changes the game completely when generation is this cheap and fast.HunyuanVideo 1.5 – open video gets very realTencent also shipped HunyuanVideo 1.5, which they market as “the strongest open‑source video generation model.” For once, the tagline isn’t entirely hype.Under the hood it’s an 8.3B‑parameter Diffusion Transformer (DiT) model with a 3D causal VAE in front. The VAE compresses videos aggressively in both space and time, and the DiT backbone models that latent sequence.The important bits for you and me:* It generates 5–10 second clips at 480p/720p with good motion coherence and physics.* With FP16 or FP8 configs you can run it on a single consumer GPU with around 14GB VRAM.* There’s a built‑in path to upsample to 1080p for “real” quality.LTX Studio Retake: Photoshop for Video (X, Try It)For the video creators, LTX Studio launched Retake. This isn’t just “regenerate video.” This allows you to select a specific 2-second segment of a video, change the dialogue (keeping the voice!), change the emotion, or edit the action, all for like $0.10. It blends it perfectly back into the original clip. 
We are effectively getting a “Director Mode” for AI video where you can fix mistakes without rolling the dice on a whole new generation.

A secret new model on the Arena called Whisper Thunder beats even Veo 3?

This was the surprise of the week. New video models get released often, but Veo 3 has been the top one for a while, and now we’re getting a reshuffling of the video giants! But... we don’t yet know who this video model is from! You can sometimes get its generations at the Artificial Analysis video arena here, and the outputs look quite awesome!

Thanksgiving reflections from the ThursdAI team

As we wrapped up the show, Wolfram suggested we take a moment to think about what we’re thankful for in AI, and I think that’s a perfect note to end on.

Wolfram put it well: he’s thankful for everyone contributing to this wonderful community, the people releasing models, creating open source tools, writing tutorials, sharing knowledge. It’s not just about the money; it’s about the love of learning and building together.

Yam highlighted something I think is crucial: we’ve reached a point where there’s no real competition between o

Hey everyone, Alex here 👋

I’m writing this one from a noisy hallway at the AI Engineer conference in New York, still riding the high (and the sleep deprivation) from what might be the craziest week we’ve ever had in AI.

In the span of a few days:

* Google dropped Gemini 3 Pro, a new Deep Think mode, generative UIs, and a free agent-first IDE called Antigravity.
* xAI shipped Grok 4.1, then followed it up with Grok 4.1 Fast plus an Agent Tools API.
* OpenAI answered with GPT‑5.1‑Codex‑Max, a long‑horizon coding monster that can work for more than a day, and quietly upgraded ChatGPT Pro to GPT‑5.1 Pro.
* Meta looked at all of that and said “cool, we’ll just segment literally everything and turn photos into 3D objects” with SAM 3 and SAM 3D.
* Robotics folks dropped a home robot trained with almost no robot data.
* And Google, just to flex, capped Thursday with Nano Banana Pro, a 4K image model and a provenance system, while we were already live!

For the first time in a while it doesn’t just feel like “new models came out.” It feels like the future actually clicked forward a notch.

This is why ThursdAI exists. Weeks like this are basically impossible to follow if you have a day job, so my co‑hosts and I do the no‑sleep version so you don’t have to. Plus, being at AI Engineer makes it easy to get super high quality guests, so this week we had 3 folks join us: Swyx from Cognition/Latent Space, Thor from DeepMind (on his 3rd day) and Dominik from OpenAI!

Alright, deep breath. Let’s untangle the week.

TL;DR

If you only skim one section, make it this one (links at the end):

* Google
  * Gemini 3 Pro: 1M‑token multimodal model, huge reasoning gains, the new LLM king
  * ARC‑AGI‑2: 31.11% (Pro), 45.14% (Deep Think), both enormous jumps
  * Antigravity IDE: free, Gemini‑powered VS Code fork with agents, plans, walkthroughs, and browser control
  * Nano Banana Pro: 4K image generation with perfect text + SynthID provenance; dynamic “generative UIs” in Gemini
* xAI
  * Grok 4.1: big post‑training upgrade, #1 on human‑preference leaderboards, much better EQ & creative writing, fewer hallucinations
  * Grok 4.1 Fast + Agent Tools API: 2M context, SOTA tool‑calling & agent benchmarks (Berkeley FC, τ²‑Bench, research evals), aggressive pricing and tight X + web integration
* OpenAI
  * GPT‑5.1‑Codex‑Max: “frontier agentic coding” model built for 24h+ software tasks with native compaction for million‑token sessions; big gains on SWE‑Bench, SWE‑Lancer, TerminalBench 2
  * GPT‑5.1 Pro: new “research‑grade” ChatGPT mode that will happily think for minutes on a single query
* Meta
  * SAM 3: open‑vocabulary segmentation + tracking across images and video (with text & exemplar prompts)
  * SAM 3D: single‑image → 3D objects & human bodies; surprisingly high‑quality 3D from one photo
* Robotics
  * Sunday Robotics, ACT‑1 & Memo: home robot foundation model trained from a $200 skill glove instead of $20K teleop rigs; long‑horizon household tasks with solid zero‑shot generalization
* Developer Tools
  * Antigravity and Marimo’s VS Code / Cursor extension both push toward agentic, reactive dev workflows

Live from AI Engineer New York: Coding Agents Take Center Stage

We recorded this week’s show on location at the AI Engineer Summit in New York, inside a beautiful podcast studio the team set up right on the expo floor.
Huge shout out to Swyx, Ben, and the whole AI Engineer crew for that — last time I was balancing a mic on a hotel nightstand, this time I had broadcast‑grade audio while a robot dog tried to steal the show behind us.This year’s summit theme is very on‑the‑nose for this week: coding agents.Everywhere you look, there’s a company building an “agent lab” on top of foundation models. Amp, Cognition, Cursor, CodeRabbit, Jules, Google Labs, all the open‑source folks, and even the enterprise players like Capital One and Bloomberg are here, trying to figure out what it means to have real software engineers that are partly human and partly model.Swyx framed it nicely when he said that if you take “vertical AI” seriously enough, you eventually end up building an agent lab. Lawyers, healthcare, finance, developer tools — they all converge on “agents that can reason and code.”The big labs heard that theme loud and clear. Almost every major release this week is about agents, tools, and long‑horizon workflows, not just chat answers.Google Goes All In: Gemini 3 Pro, Antigravity, and the Agent RevolutionLet’s start with Google because, after years of everyone asking “where’s Google?” in the AI race, they showed up this week with multiple bombshells that had even the skeptics impressed.Gemini 3 Pro: Multimodal Intelligence That Actually DeliversGoogle finally released Gemini 3 Pro, and the numbers are genuinely impressive. We’re talking about a 1 million token context window, massive benchmark improvements, and a model that’s finally competing at the very top of the intelligence charts. Thor from DeepMind joined us on the show (literally on day 3 of his new job!) and you could feel the excitement.The headline numbers: Gemini 3 Pro with Deep Think mode achieved 45.14% on ARC-AGI-2—that’s roughly double the previous state-of-the-art on some splits. For context, ARC-AGI has been one of those benchmarks that really tests genuine reasoning and abstraction, not just memorization. 
The standard Gemini 3 Pro hits 31.11% on the same benchmark, both scores are absolutely out of this world in Arc! On GPQA Diamond, Gemini 3 Pro jumped about 10 points compared to prior models. We’re seeing roughly 81% on MMLU-Pro, and the coding performance is where things get really interesting—Gemini 3 Pro is scoring around 56% on SciCode, representing significant improvements in actual software engineering tasks.But here’s what made Ryan from Amp switch their default model to Gemini 3 Pro immediately: the real-world usability. Ryan told us on the show that they’d never switched default models before, not even when GPT-5 came out, but Gemini 3 Pro was so noticeably better that they made it the default on Tuesday. Of course, they hit rate limits almost immediately (Google had to scale up fast!), but those have since been resolved.Antigravity: Google’s Agent-First IDEThen Google dropped Antigravity, and honestly, this might be the most interesting part of the whole release. It’s a free IDE (yes, free!) that’s basically a fork of VS Code, but reimagined around agents rather than human-first coding.The key innovation here is something they call the “Agent Manager”—think of it like an inbox for your coding agents. Instead of thinking in folders and files, you’re managing conversations with agents that can run in parallel, handle long-running tasks, and report back when they need your input.I got early access and spent time playing with it, and here’s what blew my mind: you can have multiple agents working on different parts of your codebase simultaneously. One agent fixing bugs, another researching documentation, a third refactoring your CSS—all at once, all coordinated through this manager interface.The browser integration is crazy too. Antigravity can control Chrome directly, take screenshots and videos of your app, and then use those visuals to debug and iterate. It’s using Gemini 3 Pro for the heavy coding, and even Nano Banana for generating images and assets. 
The whole thing feels like it’s from a couple years in the future.Wolfram on the show called out how good Gemini 3 is for creative writing too—it’s now his main model, replacing GPT-4.5 for German language tasks. The model just “gets” the intention behind your prompts rather than following them literally, which makes for much more natural interactions.Nano Banana Pro: 4K Image Generation With ThinkingAnd because Google apparently wasn’t done announcing things, they also dropped Nano Banana Pro on Thursday morning—literally breaking news during our live show. This is their image generation model that now supports 4K resolution and includes “thinking” traces before generating.I tested it live by having it generate an infographic about all the week’s AI news (which you can see on the top), and the results were wild. Perfect text across the entire image (no garbled letters!), proper logos for all the major labs, and compositional understanding that felt way more sophisticated than typical image models. The file it generated was 8 megabytes—an actual 4K image with stunning detail.What’s particularly clever is that Nano Banana Pro is really Gemini 3 Pro doing the thinking and planning, then handing off to Nano Banana for the actual image generation. So you get multimodal reasoning about your request, then production-quality output. You can even upload reference images—up to 14 of them—and it’ll blend elements while maintaining consistency.Oh, and every image is watermarked with SynthID (Google’s invisible watermarking tech) and includes C2PA metadata, so you can verify provenance. This matters as AI-generated content becomes more prevalent.Generative UIs: The Future of InterfacesOne more thing Google showed off: generative UIs in the Gemini app. Wolfram demoed this for us, and it’s genuinely impressive. 
Instead of just text responses, Gemini can generate full interactive mini-apps on the fly—complete dashboards, data visualizations, interactive widgets—all vibe-coded in real time.He asked for “four panels of the top AI news from last week” and Gemini built an entire news dashboard with tabs, live market data (including accurate pre-market NVIDIA stats!), model comparisons, and clickable sections. It pulled real information, verified facts, and presented everything in a polished UI that you could interact with immediately.This isn’t just a demo—it’s rolling out in Gemini now. The implication is huge: we’re moving from static responses to dynamic, contextual interfaces generated just-in-time for your specific need.xAI Strikes Back: Grok 4.1 and the Agent Tools APINot to be outdone, xAI released Grok 4.1 at the start of the week, briefly claimed the #1 spot on LMArena (at 1483 Elo, not 2nd to

Hey, this is Alex! We’re finally so back! Tons of open source releases, OpenAI updates GPT and a few breakthroughs in audio as well, makes this a very dense week! Today on the show, we covered the newly released GPT 5.1 update, a few open source releases like Terminal Bench and Project AELLA (renamed OASSAS), and Baidu’s Ernie 4.5 VL that shows impressive visual understanding! Also, chatted with Paul from 11Labs and Dima Duev from the wandb SDK team, who brought us a delicious demo of LEET, our new TUI for wandb! Tons of news coverage, let’s dive in 👇 (as always links and show notes in the end) Open Source AILet’s jump directly into Open Source as this week has seen some impressive big company models. Terminal-Bench 2.0 - a harder, highly‑verified coding and terminal benchmark (X, Blog, Leaderboard)We opened with Terminal‑Bench 2.0 plus its new harness, Harbor, because this is the kind of benchmark we’ve all been asking for. Terminal‑Bench focuses on agentic coding in a real shell. Version 2.0 is a hard set of 89 terminal tasks, each one painstakingly vetted by humans and LLMs to make sure it’s solvable and realistic. Think “I checked out master and broke my personal site, help untangle the git mess” or “implement GPT‑2 code golf with the fewest characters.” On the new leaderboard, top agents like Warp’s agentic console and Codex CLI + GPT‑5 sit around fifty percent success. That number is exactly what excites me: we’re nowhere near saturation. When everyone is in the 90‑something range, tiny 0.1 improvements are basically noise. When the best models are at fifty percent, a five‑point jump really means something.A huge part of our conversation focused on reproducibility. We’ve seen other benchmarks like OSWorld turn out to be unreliable, with different task sets and non‑reproducible results making scores incomparable. Terminal‑Bench addresses this with Harbor, a harness designed to run sandboxed, containerized agent rollouts at scale in a consistent environment. 
This means results are actually comparable. It’s a ton of work to build an entire evaluation ecosystem like this, and with over a thousand contributors on their Discord, it’s a fantastic example of a healthy, community‑driven effort. This is one to watch!

Baidu’s ERNIE‑4.5‑VL “Thinking”: a 3B visual reasoner that punches way up (X, HF, GitHub)

Next up, Baidu dropped a really interesting model, ERNIE‑4.5‑VL‑28B‑A3B‑Thinking. This is a compact, 3B active‑parameter multimodal reasoning model focused on vision, and it’s much better than you’d expect for its size. Baidu’s own charts show it competing with much larger closed models like Gemini‑2.5‑Pro and GPT‑5‑High on a bunch of visual benchmarks like ChartQA and DocVQA.

During the show, I dropped a fairly complex chart into the demo, and ERNIE‑4.5‑VL gave me a clean textual summary almost instantly; it read the chart more cleanly than I could. The model is built to “think with images,” using dynamic zooming and spatial grounding to analyze fine details. It’s released under an Apache‑2.0 license, making it a serious candidate for edge devices, education, and any product where you need a cheap but powerful visual brain.

Open Source Quick Hits: OSSAS, VibeThinker, and Holo Two

We also covered a few other key open-source releases. Project AELLA was quickly rebranded to OSSAS (Open Source Summaries At Scale), an initiative to make scientific literature machine‑readable. They’ve released 100k paper summaries, two fine-tuned models for the task, and a 3D visualizer. It’s a niche but powerful tool if you’re working with massive amounts of research. (X, HF)

WeiboAI (from the Chinese social media company) released VibeThinker‑1.5B, a tiny 1.5B‑parameter reasoning model that is making bold claims about beating the 671B DeepSeek R1 on math benchmarks. 
We discussed the high probability of benchmark contamination, especially on tests like AIME24, but even with that caveat, getting strong chain‑of‑thought math out of a 1.5B model is impressive and useful for resource‑constrained applications. (X, HF, Arxiv)

Finally, we had some breaking news mid‑show: H Company released Holo Two, their next‑gen multimodal agent for controlling desktops, websites, and mobile apps. It’s a fine‑tune of Qwen3‑VL and comes in 4B and 8B Apache‑2.0 licensed versions, pushing the open agent ecosystem forward. (X, Blog, HF)

Big Companies & APIs

GPT‑5.1: Instant vs Thinking, and a new personality bar

The biggest headline of the week was OpenAI shipping GPT‑5.1, and this was a hot topic of debate on the show. The update introduces two modes: “Instant” for fast, low‑compute answers, and “Thinking” for deeper reasoning on hard problems. OpenAI claims Instant mode uses 57% fewer tokens on easy tasks, while Thinking mode dedicates 71% more compute to difficult ones. This adaptive approach is a smart evolution.

The release also adds a personality dropdown with options like Professional, Friendly, Quirky, and Cynical, aiming for a more “warm” and customizable experience. Yam and I felt this was a step in the right direction, as GPT‑5 could often feel a bit cold and uncommunicative. However, Wolfram had a more disappointing experience, finding that GPT‑5.1 performed significantly worse on his German grammar and typography tasks compared to GPT‑4 or Claude Sonnet 4.5. It’s a reminder that “upgrades” can be subjective and task‑dependent.

Since the show was recorded, GPT 5.1 has also been released in the API, and OpenAI has published a prompting guide and some evals, with significant jumps on SWE-bench Verified and GPQA Diamond! We’ll be testing this model out all week. 
The highlight for this model is the creative writing. It was made public that this model was being tested on OpenRouter as Polaris-alpha, and that one tops the eqbench creative writing benchmarks, beating Sonnet 4.5 and Gemini!

Grok‑4 Fast: 2M context and a native X superpower

Grok‑4 Fast from xAI apparently got a quiet but substantial upgrade to a 2M‑token context window, but the most interesting part is its unique integration with X. The API version has access to internal tools for semantic search over tweets, retrieving top quote tweets, and understanding embedded images and videos. I’ve started using it as a research agent in my show prep, and it feels like having a research assistant living inside X’s backend, something you simply can’t replicate with public tools.

I still have my gripes about their “stealth upgrade” versioning strategy, which makes rigorous evaluation difficult, but as a practical tool, Grok‑4 Fast is incredibly powerful. It’s also surprisingly fast and cost‑effective, holding its own against other top models on benchmarks while offering a superpower that no one else has.

Google SIMA 2: Embodied Agents in Virtual Worlds

Google’s big contribution this week was SIMA 2, DeepMind’s latest embodied agent for 3D virtual worlds. SIMA lives inside real games like No Man’s Sky and Goat Simulator, seeing the screen and controlling the game via keyboard and mouse, using Gemini as its reasoning brain. Demos showed it following complex, sketch‑based instructions, like finding an object that looks like a drawing of a spaceship and jumping on top of it.

When you combine this with Genie 3, Google’s world model that can generate playable environments from a single image, you see the bigger picture: agents that learn physics, navigation, and common sense by playing in millions of synthetic worlds. We’re not there yet, but the pieces are clearly being assembled. 
We also touched on the latest Gemini Live voice upgrade, which users are reporting feels much more natural and responsive.

More Big Company News: Qwen Deep Research, Code Arena, and Cursor

We also briefly covered Qwen’s new Deep Research feature, which offers an OpenAI‑style research agent inside their ecosystem. LMSYS launched Code Arena, a fantastic live evaluation platform where models build real web apps agentically, with humans voting on the results. And in the world of funding, the AI‑native code editor Cursor raised a staggering $2.3 billion, a clear sign that AI is becoming the default way developers interact with code.

This Week’s Buzz: W&B LEET – a terminal UI that sparks joy

For this week’s buzz, I brought on Dima Duev from our SDK team at Weights & Biases to show off a side project that has everyone at the company excited: LEET, the Lightweight Experiment Exploration Tool. Imagine you’re training on an air‑gapped HPC cluster, living entirely in your terminal. How do you monitor your runs? With LEET.

You run your training script in W&B offline mode, and in another terminal, you type wandb beta leet. Your terminal instantly turns into a full TUI dashboard with live metric plots, system stats, and run configs. You can zoom into spikes in your loss curve, filter metrics, and see everything updating in real time, all without a browser or internet connection. It’s one of those tools that just sparks joy. It ships with the latest wandb SDK (v0.23.0+), so just upgrade and give it a try!

Voice & Audio: Scribe v2 Realtime and Omnilingual ASR

ElevenLabs Scribe v2 Realtime: ASR built for agents (X, Announcement, Demo)

We’ve talked a lot on this show about ElevenLabs as “the place you go to make your AI talk.” This week, they came for the other half of the conversation. Paul Asjes from ElevenLabs joined us to walk through Scribe v2 Realtime, their new low‑latency speech‑to‑text model. If you’re building a voice agent, you need ears, a brain, and a mouth. 
ElevenLabs already nailed the mouth, and now they’ve built some seriously good ears.

Scribe v2 Realtime is designed to run at around 150 milliseconds median latency, across more than ninety languages. Watching Paul’s live demo, it felt comfortably real‑time. When he switched from English to Dutch mid‑sentence, the system just followed along