📆 ThursdAI - Oct 16 - Veo 3.1, Haiku 4.5, ChatGPT adult mode, Claude Skills, NVIDIA DGX Spark, World Labs RTFM & more AI news

Description

Hey folks, Alex here.

Can you believe it’s already the middle of October? This week’s show was a special one, not just because of the mind-blowing news, but because we set a new ThursdAI record with four incredible interviews back-to-back!

We had Jessica Gallegos from Google DeepMind walking us through the cinematic new features in Veo 3.1. Then we dove deep into the world of Reinforcement Learning with my new colleague Kyle Corbitt from OpenPipe. We got the scoop on Amp’s wild new ad-supported free tier from CEO Quinn Slack. And just as we were wrapping up, Swyx (from Latent.Space, now with Cognition!) jumped on to break the news about their blazingly fast SWE-grep models.

But the biggest story? An AI model from Google and Yale made a novel scientific discovery about cancer cells that was then validated in a lab. This is it, folks. This is the “let’s f*****g go” moment we’ve been waiting for. So buckle up, because this week was an absolute monster. Let’s dive in!


Open Source: An AI Model Just Made a Real-World Cancer Discovery

We always start with open source, but this week felt different. This week, open source AI stepped out of the benchmarks and into the biology lab.

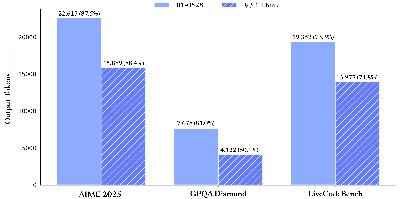

Our friends at Qwen kicked things off with new 3B and 8B parameter versions of their Qwen3-VL vision model. It’s always great to see powerful models shrink down to sizes that can run on-device. What’s wild is that these small models are outperforming last generation’s giants, like the 72B Qwen2.5-VL, on a whole suite of benchmarks. The 8B model scores 33.9 on OSWorld, which is incredible for an on-device agent that can actually see and click things on your screen. For comparison, that’s getting close to what we saw from Sonnet 3.7 just a few months ago. The pace is just relentless.

But then, Google dropped a bombshell. A 27-billion parameter Gemma-based model they developed with Yale, called C2S-Scale, generated a completely novel hypothesis about how cancer cells behave. This wasn’t a summary of existing research; it was a new idea, something no human scientist had documented before. And here’s the kicker: researchers then took that hypothesis into a wet lab, tested it on living cells, and proved it was true.

This is a monumental deal. For years, AI skeptics like Gary Marcus have said that LLMs are just stochastic parrots, that they can’t create genuinely new knowledge. This feels like the first powerful counterargument. Friend of the pod Dr. Derya Unutmaz has been on the show before saying AI is going to solve cancer, and this is the first real sign that he might be right. The researchers noted this was an “emergent capability of scale,” suggesting once again that as these models get bigger and are trained on more complex data (in this case, single-cell RNA sequences turned into “sentences” for the model to learn from), they unlock completely new abilities. This is AI as a true scientific collaborator. Absolutely incredible.

Big Companies & APIs

The big companies weren’t sleeping this week, either. The agentic AI race is heating up, and we’re seeing huge updates across the board.

Claude Haiku 4.5: Fast, Cheap Model Rivals Sonnet 4 Accuracy (X, Official blog, X)

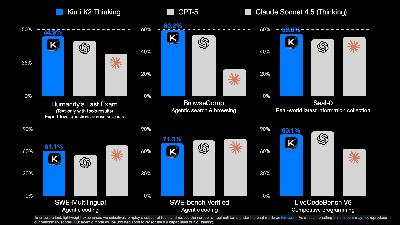

First up, Anthropic released Claude Haiku 4.5, and it is a beast. It’s a fast, cheap model that’s punching way above its weight. On the SWE-bench Verified benchmark for coding, it hit 73.3%, putting it right up there with giants like GPT-5 Codex, but at a fraction of the cost and twice the speed of previous Claude models. Nisten has already been putting it through its paces and loves it for agentic workflows because it just follows instructions without getting opinionated. It seems like Anthropic has specifically tuned this one to be a workhorse for agents, and it absolutely delivers.

Also worth noting is the very impressive jump on OSWorld, a computer use benchmark, where it scores 50.7%. At this price and speed ($1 per million input tokens, $5 per million output tokens), it’s going to make computer-use agents much more streamlined and speedy!
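To put the $1/$5 per-million-token pricing in perspective, here is a rough back-of-the-envelope sketch. The prices come from the numbers above; the session shape (40 steps, about 5,000 input tokens and 200 output tokens per step) is a made-up assumption for illustration, not a measurement of any real agent.

```python
# Prices quoted above for Claude Haiku 4.5, in USD per million tokens.
INPUT_USD_PER_MTOK = 1.0
OUTPUT_USD_PER_MTOK = 5.0


def run_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the cost of one run in USD at the per-million-token prices above."""
    total = input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK
    return total / 1_000_000


# Hypothetical computer-use session (assumed numbers): 40 steps, each sending
# about 5,000 input tokens (screenshot plus history) and producing about
# 200 output tokens.
steps = 40
cost = run_cost_usd(steps * 5_000, steps * 200)
print(f"${cost:.2f}")  # prints "$0.24"
```

Under those assumptions a whole multi-step session costs about a quarter, which is why a cheap, fast model matters so much for agents that loop many times per task.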

ChatGPT will loosen restrictions; age-gating enables “adult mode” with new personality features coming (X)

Sam Altman set X on fire with a thread announcing that ChatGPT will start loosening its restrictions. They’re planning to roll out an “adult mode” in December for age-verified users, potentially allowing for things like erotica. More importantly, they’re bringing back more customizable personalities, trying to recapture some of the magic of GPT-4o that so many people missed. It feels like they’re finally ready to treat adults like adults, letting us opt in to R-rated conversations while keeping strong guardrails for minors. This is a welcome change that we’ve been advocating for a while, and it’s a notable contrast with the xAI approach I covered last week: opt-in for verified adults with precautions in place, versus engagement bait in the form of a flirty animated waifu with engagement mechanics.

Microsoft is making every Windows 11 PC an AI PC with Copilot voice input and agentic powers (Blog, X)

And in breaking news from this morning, Microsoft announced that every Windows 11 machine is becoming an AI PC. They’re building a new Copilot agent directly into the OS that can take over and complete tasks for you. The really clever part? It runs in a secure, sandboxed desktop environment that you can watch and interact with. This solves a huge problem with agents that take over your mouse and keyboard, locking you out of your own computer. Now, you can give the agent a task and let it run in the background while you keep working. This is going to put agentic AI in front of hundreds of millions of users, and it’s a massive step towards making AI a true collaborator at the OS level.

NVIDIA DGX Spark - the tiny personal supercomputer at $4K (X, LMSYS Blog)

NVIDIA finally delivered its promised AI supercomputer, and excitement was in the air as Jensen hand-delivered the DGX Spark to OpenAI and to Elon (recreating the historic picture from when Jensen hand-delivered a signed DGX workstation while Elon was still affiliated with OpenAI). The workstation sold out almost immediately. Folks from LMSYS did a great deep dive into the specs. Meanwhile, folks on our feeds are saying that if you want the maximum possible inference speed for open source LLMs, this machine is probably overpriced compared to an M3 Ultra Mac with 128GB of RAM or an RTX 5090 GPU, either of which can get you similar if not better speeds at significantly lower price points.

Anthropic’s “Claude Skills”: Your AI Agent Finally Gets a Playbook (Blog)

Just when we thought the week couldn’t get any more packed, Anthropic dropped “Claude Skills,” a huge upgrade that lets you give your agent custom instructions and workflows. Think of them as expertise folders you can create for specific tasks. For example, you can teach Claude your personal coding style, how to format reports for your company, or even give it a script to follow for complex data analysis.

The best part is that Claude automatically detects which “Skill” is needed for a given task, so you don’t have to manually load them. This is a massive step towards making agents more reliable and personalized, moving beyond just a single custom instruction and into a library of repeatable, expert processes. It’s available now for all paid users, and it’s a feature I’ve been waiting for. Our friend Simon Willison thinks Skills may be a bigger deal than MCP!
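To make the “expertise folder” idea concrete, here is a minimal sketch of what scaffolding one could look like. It assumes the layout as I understand it from Anthropic’s announcement: a folder containing a `SKILL.md` file whose front matter carries a `name` and a `description`, followed by free-form instructions. The helper `write_skill` and the example skill are hypothetical, so check the official docs before relying on the exact format.

```python
import tempfile
from pathlib import Path


def write_skill(root: Path, name: str, description: str, instructions: str) -> Path:
    """Create <root>/<name>/SKILL.md and return its path.

    Assumed layout: front matter with `name` and `description`,
    then the instructions as plain Markdown.
    """
    skill_dir = root / name
    skill_dir.mkdir(parents=True, exist_ok=True)
    skill_file = skill_dir / "SKILL.md"
    skill_file.write_text(
        f"---\nname: {name}\ndescription: {description}\n---\n\n{instructions}\n",
        encoding="utf-8",
    )
    return skill_file


# Hypothetical example skill, written to a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    path = write_skill(
        Path(tmp),
        name="report-formatting",
        description="How to format weekly reports for my company.",
        instructions="Use a one-paragraph summary, then a bulleted list of risks.",
    )
    print(path.read_text(encoding="utf-8"))
```

The short description is the part that matters for the automatic detection described above: it is the hint the model can use to decide whether a Skill is relevant before loading the full instructions.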

🎬 Vision & Video: Veo 3.1, Sora Gets Longer, and Real-Time Worlds

The AI video space is exploding. We started with an amazing interview with Jessica Gallegos, a Senior Product Manager at Google DeepMind, all about the new Veo 3.1. This is a significant 0.1 update, not a whole new model, but the new features are game-changers for creators.

The audio quality is way better, and they’ve massively improved video extensions. The model now conditions on the last second of a clip—including the audio. This means if you extend a video of someone talking, they keep talking in the same voice! This is huge, saving creators from complex lip-syncing and dubbing workflows. They also added obje