📆 ThursdAI - Jul 10 - Grok 4 and 4 Heavy, SmolLM3, Liquid LFM2, Reka Flash & Vision, Perplexity Comet Browser, Devstral 1.1 & More AI News

Description

Hey everyone, Alex here

Don't you just love "new top LLM" drop weeks? I sure do! This week, we had a watch party for Grok-4, with over 20K tuning in to watch together, as the folks at XAI unveiled their newest and best model around. Two models in fact, Grok-4 and Grok-4 Heavy.

We also had a very big open source week. We had the pleasure of chatting with the creators of 3 open source models on the show: first with Elie from HuggingFace, who just released SmolLM3, then with our friend Maxime Labonne, who together with Liquid released a beautiful series of tiny on-device models.

Finally we had a chat with folks from Reka AI, and as they were on stage, someone in their org published a new open source Reka Flash model 👏 Talk about Breaking News right on the show!

It was a very fun week and a great episode, so grab your favorite beverage and let me update you on everything that's going on in AI (as always, show notes at the end of the article).

Open Source LLMs

As always, even on big weeks like this, we open the show with Open Source models first and this week, the western world caught up to the Chinese open source models we saw last week!

HuggingFace SmolLM3 - SOTA fully open 3B with dual reasoning and long-context (𝕏, HF)

We had Elie Bakouch from Hugging Face on the show and you could feel the pride radiating through the webcam. SmolLM3 isn’t just “another tiny model”; it’s an 11-trillion-token monster masquerading inside a 3-billion-parameter body. It reasons, it follows instructions, and it does both “think step-by-step” and “give me the answer straight” on demand. Hugging Face open-sourced every checkpoint, every dataset recipe, every graph in W&B – so if you ever wanted a fully reproducible, multilingual pocket assistant that fits on a single GPU, this is it.

They achieved the long context (128K today, 256K in internal tests) with a NoPE + YaRN recipe and salvaged the performance drop by literally merging two fine-tunes at 2 a.m. the night before release. Science by duct-tape, but it works: SmolLM3 edges out Llama-3.2-3B, challenges Qwen-3, and stays within arm’s reach of Gemma-3-4B, all while loading faster than you can say “model soup.” 🤯
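For folks who haven't played with merging before, here is a minimal sketch of the simplest flavor of it: linearly averaging the weights of two fine-tunes that share the same architecture. To be clear, the exact merge method Hugging Face used wasn't specified on the show, so the equal-weight average, the `merge_checkpoints` helper and the toy parameter names below are all assumptions for illustration, not their recipe.

```python
from typing import Dict, List

# Toy stand-in for a checkpoint: parameter name -> flattened list of values.
Weights = Dict[str, List[float]]


def merge_checkpoints(a: Weights, b: Weights, alpha: float = 0.5) -> Weights:
    """Linearly interpolate two checkpoints: alpha * a + (1 - alpha) * b."""
    if a.keys() != b.keys():
        raise ValueError("checkpoints must have identical parameter names")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    merged: Weights = {}
    for name in a:
        if len(a[name]) != len(b[name]):
            raise ValueError(f"size mismatch for parameter {name!r}")
        merged[name] = [
            alpha * x + (1.0 - alpha) * y for x, y in zip(a[name], b[name])
        ]
    return merged


# Hypothetical fine-tunes, e.g. one tuned for long context and one for
# short-context quality (names and values are made up).
long_context_ft = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
short_context_ft = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}

soup = merge_checkpoints(long_context_ft, short_context_ft, alpha=0.5)
print(soup)  # {'layer.weight': [2.0, 3.0], 'layer.bias': [0.5]}
```

With real models you would do the same thing tensor by tensor over the two state dicts, and `alpha` becomes the knob for trading off how much of each fine-tune survives in the merged model.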

Liquid AI’s LFM2: Blazing-Fast Models for the Edge (𝕏, Hugging Face)

We started the show and I immediately got to hit the #BREAKINGNEWS button, as Liquid AI dropped LFM2, a new series of tiny (350M-1.2B) models focused on Edge devices.

We then had the pleasure to host our friend Maxime Labonne, head of Post Training at Liquid AI, to come and tell us all about this incredible effort!

Maxime, a legend in the model merging community, explained that LFM2 was designed from the ground up for efficiency. They’re not just scaled-down big models; they feature a novel hybrid architecture with convolution and attention layers specifically optimized for running on CPUs and devices like the Samsung Galaxy S24.

Maxime pointed out that out of the box, they won't replace ChatGPT, but when you fine-tune them for a specific task like translation, they can match models 60 times their size. This is a game-changer for creating powerful, specialized agents that run locally. Definitely a great release and on ThursdAI of all days!

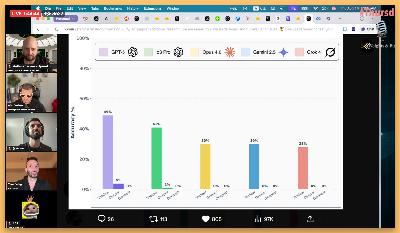

Mistral's updated Devstral 1.1 Smashes Coding Benchmarks (𝕏, HF)

Mistral didn't want to be left behind on this Open Source bonanza week, and today also dropped an update to their excellent coding model Devstral.

With 2 versions, an open weights Small and an API-only Medium model, they have claimed an amazing 61.6% score on SWE-Bench for Medium, while the open source Small gets a SOTA 53%, the highest among the open source models! That's 10 points higher than the excellent DeepSWE we covered just last week!

The thing to watch here is the incredible price performance, with this model beating Gemini 2.5 Pro and Claude 3.7 Sonnet while being 8x cheaper to run!

Devstral Small comes to us with an Apache 2.0 license, which we always welcome from the great folks at Mistral!

Big Companies LLMs and APIs

There's only 1 winner this week; it seems the other foundational labs stayed very quiet, waiting to see what XAI was going to release.

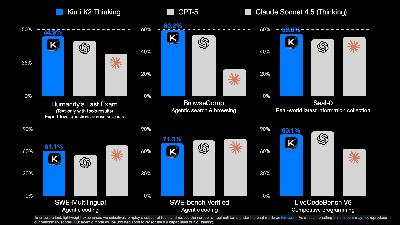

XAI releases Grok-4 and Grok-4 Heavy - the world leading reasoning model (𝕏, Try It)

Wow, what a show! Space uncle Elon, together with the XAI crew, came fashionably late to their own stream and unveiled the youngest but smartest brother of the Grok family, Grok 4, plus a multi-agent swarm they call Grok Heavy. We had a watch party with over 25K viewers across all streams who joined and watched this fairly historic event together!

Why historic? Well, for one, they have scaled RL (Reinforcement Learning) for this model significantly more than any other lab has so far, which resulted in an incredible reasoner, able to score an unprecedented 50% on the HLE (Humanity's Last Exam) benchmark (while using tools).

The other unprecedented result is on the ARC-AGI benchmark, specifically V2, which is designed to be very easy for humans and very hard for LLMs. Grok-4 got an incredible 15.9%, almost 2x better than Opus 4, the best performing model before it! (ARC-AGI president Greg Kamradt says Grok-4 shows signs of Fluid Intelligence!)

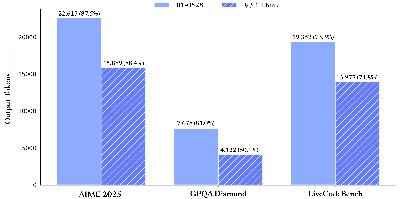

Real World benchmarks

Of course, academic benchmarks don't tell the full story, and while it's great to see that Grok-4 gets a perfect 100% on AIME25 and a very high 88.9% on GPQA Diamond, the most interesting benchmark they showed was Vending-Bench. This is a new benchmark from Andon Labs, where they simulate a vending machine and let an LLM manage it, take orders and restock, and basically count how much money a model can make while operating a "real" business.

Grok scored a very significant $4K profit, selling 4569 items, 4x more than Opus, which shows a real impact on real world tasks!

Not without controversy

The Grok-4 release comes just 1 day after Grok-3, over at X, started calling itself MechaHitler and spewing Nazi, antisemitic propaganda, which was a very bad episode. We've covered the previous "misalignment" from Grok, and this seemed even worse. There were many examples (which XAI folks deleted) of Grok talking about antisemitic tropes, blaming people with Jewish surnames for multiple things and generally acting jailbroken and up to no good.

XAI addressed the previous episode with a token excuse, supposedly open sourcing their prompts, which were updated all of 4 times in the last 2 months, while addressing this episode with a "we noticed, and we'll add guardrails to prevent this from happening".

IMO this isn't enough. Grok is consistently (this is the 3rd time by my count) breaking alignment, way more than other foundational LLMs, and we must ask for more transparency for a model as significant and as widely used as this! And to my (lack of) surprise

First principles thinking == Elon's thoughts?

Adding insult to injury, right after Grok-4 launched, some folks asked for its thoughts on the Israel-Palestine conflict, and instead of coming up with an answer on its own, Grok-4 did an X search to see what Elon Musk thinks on this topic to form its opinion. It's so, so wrong to claim a model is great at "first principles" and then have the first few tests from folks show that Grok defaults to checking "what Elon thinks".

Look, I'm all for "moving fast" and of course I love AI progress, but we need to ask more from the foundational labs, especially given the incredible number of people who count on these models more and more!

This week's Buzz

We're well over 300 registrations for our hackathon at the Weights & Biases SF offices this weekend (July 12-13), and I'm packing my suitcase after writing this, as I'm excited to see all the amazing projects folks will build to try and win over $15K in prizes, including an awesome ROBODOG.

It's not too late to come and hack with us, register at lu.ma/weavehacks

Tools – Browsers grow brains

Perplexity’s Comet landed on my Mac and within ten minutes it was triaging my LinkedIn invites by itself. This isn’t a Chrome extension; it’s a Chromium fork where natural-language commands are first-class citizens. Tell it “find my oldest unread Stripe invoice and download the PDF” and watch the mouse move. The Gmail connector lets you ask, “what flights do I still need to expense?” and get a draft report. Think Cursor, but for every tab.

I benchmarked Comet against OpenAI Operator on my “scroll Alex’s 200 tweet bookmarks, extract the juicy links, drop them into Notion” task—Operator died halfway, Comet almost finished. Almost. The AI browser war has begun; Chrome’s Mariner project and OpenAI’s rumored Chromium team better move fast.

Comet is available to Perplexity Max subscribers now, and will come to Pro subscribers with invites soon. As soon as I have them, I'll tell you how to get one!

Vision & Video

Reka dropped in