📆 ThursdAI - Dec 11 - GPT 5.2 is HERE! Plus, LLMs in Space, MCP donated, Devstral surprises and more AI news!

Description

Hey everyone,

December started strong and does NOT want to slow down!? OpenAI showed us their response to the Code Red and it’s GPT 5.2, which doesn’t feel like a .1 upgrade! We got it literally as breaking news at the end of the show, and oh boy! A new kind of LLM is here.

GPT, then Gemini, then Opus and now GPT again... Who else feels like we’re on a trippy AI rollercoaster? Just me? 🫨

I’m writing this newsletter from a fresh “traveling podcaster” setup in SF (huge shoutout to the Chroma team for the studio hospitality).

P.S. Next week we’re doing a year recap episode (52nd episode of the year, what is my life), but today is about the highest-signal stuff that happened this week.

Alright. No more foreplay. Let’s dive in. Please subscribe.

🔥 The main event: OpenAI launches GPT‑5.2 (and it’s… a lot)

We started the episode with “garlic in the air” rumors (OpenAI holiday launches always have that Christmas panic energy), and then… boom: GPT‑5.2 actually drops while we’re live.

What makes this release feel significant isn’t “one benchmark went up.” It’s that OpenAI is clearly optimizing for the things that have become the frontier in 2025: long-horizon reasoning, agentic coding loops, long context reliability, and lower hallucination rates when browsing/tooling is involved.

5.2 Instant, Thinking and Pro in ChatGPT and in the API

OpenAI shipped multiple variants, and even within those there are “levels” (medium/high/extra-high) that effectively change how much compute the model is allowed to burn. At the extreme end, you’re basically running parallel thoughts and selecting winners. That’s powerful, but also… very expensive.

It’s very clearly aimed at the agentic world: coding agents that run in loops, tool-using research agents, and “do the whole task end-to-end” workflows where spending extra tokens is still cheaper than spending an engineer day.
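If “running parallel thoughts and selecting winners” sounds abstract, here’s a toy best-of-N sketch of the general idea. To be clear, this is my assumption about the broad technique, not OpenAI’s actual implementation: `generate_candidate` and `score` are hypothetical stand-ins for a sampled model response and a verifier.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def generate_candidate(prompt: str, seed: int) -> str:
    """Hypothetical stand-in for one sampled model response."""
    rng = random.Random(seed)        # seeded so the toy example is repeatable
    guess = rng.randint(0, 20)       # pretend this is the model's answer
    return f"{prompt} -> {guess}"


def score(candidate: str, target: int = 12) -> float:
    """Hypothetical verifier: higher is better (closer to the target)."""
    guess = int(candidate.rsplit("-> ", 1)[1])
    return -abs(guess - target)


def best_of_n(prompt: str, n: int) -> str:
    """Sample n candidates in parallel and keep the highest-scoring one."""
    with ThreadPoolExecutor(max_workers=n) as pool:
        candidates = list(
            pool.map(lambda seed: generate_candidate(prompt, seed), range(n))
        )
    return max(candidates, key=score)


if __name__ == "__main__":
    print(best_of_n("7+5", n=8))
```

The cost intuition falls straight out of this: every extra candidate is another full generation, so N parallel attempts cost roughly N times the tokens of a single one.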

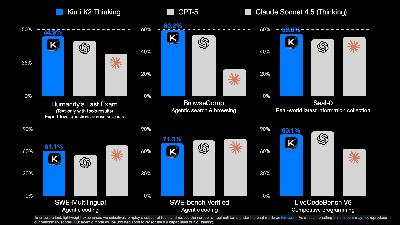

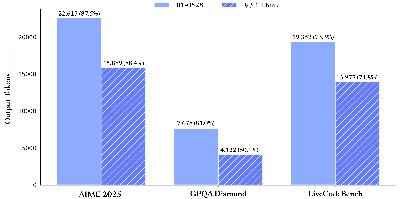

Benchmarks

I’m not going to pretend benchmarks tell the full story (they never do), but the shape of improvements matters. GPT‑5.2 shows huge strength on reasoning + structured work.

It hits 90.5% on ARC‑AGI‑1 in the Pro X‑High configuration, and 54%+ on ARC‑AGI‑2 depending on the setting. For context, ARC‑AGI‑2 is the one where everyone learns humility again.

On math/science, this thing is flexing. We saw 100% on AIME 2025, and strong performance on FrontierMath tiers (with the usual “Tier 4 is where dreams go to die” vibe still intact). GPQA Diamond is up in the 90s too, which is basically “PhD trivia mode.”

But honestly the most practically interesting one for me is GDPval (knowledge-work tasks: slides, spreadsheets, planning, analysis). GPT‑5.2 lands around 70%, which is a massive jump vs earlier generations. This is the category that translates directly into “is this model useful at my job.” OpenAI only launched this benchmark in September, and back then Opus 4.1 scored a “measly” 47%! Talk about acceleration!

Long context: MRCR is the sleeper highlight

On MRCR (multi-needle long-context retrieval), GPT‑5.2 holds up absurdly well even into 128k and beyond. The graph OpenAI shared shows GPT‑5.1 falling off a cliff as context grows, while GPT‑5.2 stays high much deeper into long contexts.

If you’ve ever built a real system (RAG, agent memory, doc analysis) you know this pain: long context is easy to offer, hard to use well. If GPT‑5.2 actually delivers this in production, it’s a meaningful shift.

Hallucinations: down (especially with browsing)

One thing we called out on the show is that a bunch of user complaints in 2025 have basically collapsed into one phrase: “it hallucinates.” Even people who don’t know what a benchmark is can feel when a model confidently lies.

OpenAI’s system card shows lower rates of major incorrect claims compared to GPT‑5.1, and lower “incorrect claims” overall when browsing is enabled. That’s exactly the direction they needed.

Real-world vibes:

We did the traditional “vibe tests” mid-show: generate a flashy landing page, do a weird engineering prompt, try some coding inside Cursor/Codex.

Early testers broadly agree on the shape of the improvement. GPT‑5.2 is much stronger in reasoning, math, long‑context tasks, visual understanding, and multimodal workflows, with multiple reports of it successfully thinking for one to three hours on hard problems. Enterprise users like Box report faster execution and higher accuracy on real knowledge‑worker tasks, while researchers note that GPT‑5.2 Pro consistently outperforms the standard “Thinking” variant. The tradeoffs are also clear: creative writing still slightly favors Claude Opus, and the highest reasoning tiers can be slow and expensive. But as a general‑purpose reasoning model, GPT‑5.2 is now the strongest publicly available option.

AI in space: Starcloud trains an LLM on an H100 in orbit

This story is peak 2025.

Starcloud put an NVIDIA H100 on a satellite, trained Andrej Karpathy’s nanoGPT on Shakespeare, and ran inference on Gemma. There’s a viral screenshot vibe here that’s impossible to ignore: SSH into an H100… in space… with a US flag in the corner. It’s engineered excitement, and I’m absolutely here for it.

But we actually had a real debate on the show: is “GPUs in space” just sci‑fi marketing, or does it make economic sense?

Nisten made a compelling argument that power is the real bottleneck, not compute, and that big satellites already operate in the ~20kW range. If you can generate that power reliably with solar in orbit, the economics start looking less insane than you’d think. LDJ added the long-term land/power convergence argument: Earth land and grid power get scarcer/more regulated, while launch costs trend down—eventually the curves may cross.

I played “voice of realism” for a minute: what happens when GPUs fail? It’s hard enough to swap a GPU in a datacenter, now imagine doing it in orbit. Cooling and heat dissipation become a different engineering problem too (radiators instead of fans). Networking is nontrivial. But also: we are clearly entering the era where people will try weird infra ideas because AI demand is pulling the whole economy.

Big Company: MCP gets donated, OpenRouter drops a report on AI

Agentic AI Foundation Lands at the Linux Foundation

This one made me genuinely happy.

Block, Anthropic, and OpenAI came together to launch the Agentic AI Foundation under the Linux Foundation, donating key projects like MCP, AGENTS.md, and goose. This is exactly how standards should happen: vendor‑neutral, boring governance, lots of stakeholders.

It’s not flashy work, but it’s the kind of thing that actually lets ecosystems grow without fragmenting.

BTW, I was recording my podcast while Latent.Space were recording theirs in the same office, and they have a banger episode upcoming about this very topic! All I’ll say is Alessio Fanelli introduced me to David Soria Parra from MCP 👀 Watch out for that episode on Latent Space dropping soon!

OpenRouter’s “State of AI”: 100 Trillion Tokens of Reality

OpenRouter and a16z dropped a massive report analyzing over 100 trillion tokens of real‑world usage. A few things stood out:

Reasoning tokens now dominate. More than half (around 60%) of all tokens since early 2025 are reasoning tokens. Remember when we went from “LLMs can’t do math” to reasoning models? That happened in about a year.

Programming exploded. From 11% of usage in early 2025 to over 50% recently. Claude holds 60% of the coding market (at least on OpenRouter).

Open source hit 30% market share, led by Chinese labs: DeepSeek (14T tokens) and Qwen (5.59T), with Meta LLaMA at 3.96T.

Context lengths grew massively. Average prompt length went from 1.5k to 6k+ tokens (4x growth), completions from 133 to 400 tokens (3x).

The “Glass Slipper” effect. When users find a model that fits their use case, they stay loyal. Foundational early-user cohorts retain around 40% at month 5. Claude 4 Sonnet still had 50% retention after three months.

Geography shift. Asia doubled to 31% of usage (with China as a key driver), while North America is at 47%.

Yam made a good point that we should be careful interpreting these graphs—they’re biased toward people trying new models, not necessarily steady usage. But the trends are clear: agentic, reasoning, and coding are the dominant use cases.

Open Source Is Not Slowing Down (If Anything, It’s Accelerating)

One of the strongest themes this week was just how fast open source is closing the gap — and in some areas, outright leading. We’re not talking about toy demos anymore. We’re talking about serious models, trained from scratch, hitting benchmarks that were frontier‑only not that long ago.

Essential AI’s Rnj‑1: A Real Frontier 8B Model

This one deserves real attention. Essential AI — led by Ashish Vaswani, yes Ashish from the original Transformers paper — released Rnj‑1, a pair