“Making legible that many experts think we are not on track for a good future, barring some international cooperation” by Mateusz Bagiński, Ishual

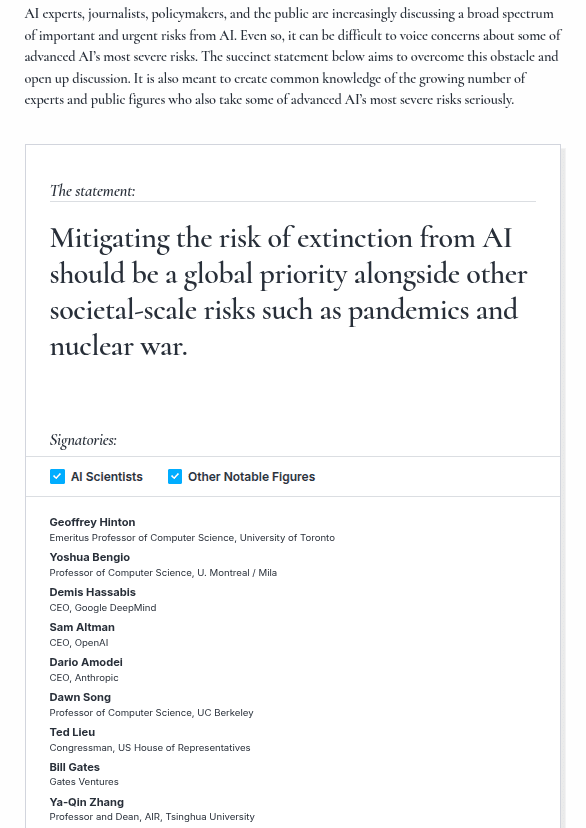

[Context: This post is aimed at all readers[1] who broadly agree that the current race toward superintelligence is bad, that stopping would be good, and that the technical pathways to a solution are too unpromising and hard to coordinate on to justify going ahead.]

TL;DR: We address objections to a statement supporting a ban on superintelligence, raised by people who agree that such a ban would be desirable.

Quoting Lucius Bushnaq:

I support some form of global ban or pause on AGI/ASI development. I think the current AI R&D regime is completely insane, and if it continues as it is, we will probably create an unaligned superintelligence that kills everyone.

We have been circulating a statement expressing ~this view, targeted at people who have done AI alignment/technical AI x-safety research (mostly outside frontier labs). Some people declined to sign, even if they agreed with the [...]

---

Outline:

(01:25) The reasons we would like you to sign the statement expressing support for banning superintelligence

(05:00) A positive vision

(08:07) Reasons given for not signing despite agreeing with the statement

(08:26) I already am taking a public stance, why endorse a single-sentence summary?

(08:52) I am not already taking a public stance, so why endorse a one-sentence summary?

(09:19) The statement uses an ambiguous term X

(09:53) I would prefer a different (e.g., more accurate, epistemically rigorous, better at stimulating good thinking) way of stating my position on this issue

(11:12) The statement does not accurately capture my views, even though I strongly agree with its core

(12:05) I'd be on board if it also mentioned My Thing

(12:50) Taking a position on policy stuff is a different realm, and it takes more deliberation than just stating my opinion on facts

(13:21) I wouldn't support a permanent ban

(13:56) The statement doesn't include a clear mechanism to lift the ban

(15:52) Superintelligence might be too good to pass up

(17:41) I don't want to put myself out there

(18:12) I am not really an expert

(18:42) The safety community has limited political capital

(21:12) We must wait until a catastrophe before spending limited political capital

(22:17) Any other objections we missed? (and a hope for a better world)

The original text contained 24 footnotes which were omitted from this narration.

---

First published:

October 13th, 2025

---

Narrated by TYPE III AUDIO.