“Towards a Typology of Strange LLM Chains-of-Thought” by 1a3orn

Description

Intro

LLMs being trained with RLVR (Reinforcement Learning from Verifiable Rewards) start off with a 'chain-of-thought' (CoT) in whatever language the LLM was originally trained on. But after a long period of training, the CoT sometimes starts to look very weird; to resemble no human language; or even to grow completely unintelligible.

Why might this happen?

I've seen a lot of speculation about why. But a lot of this speculation narrows too quickly, to just one or two hypotheses. My intent is also to speculate, but more broadly.

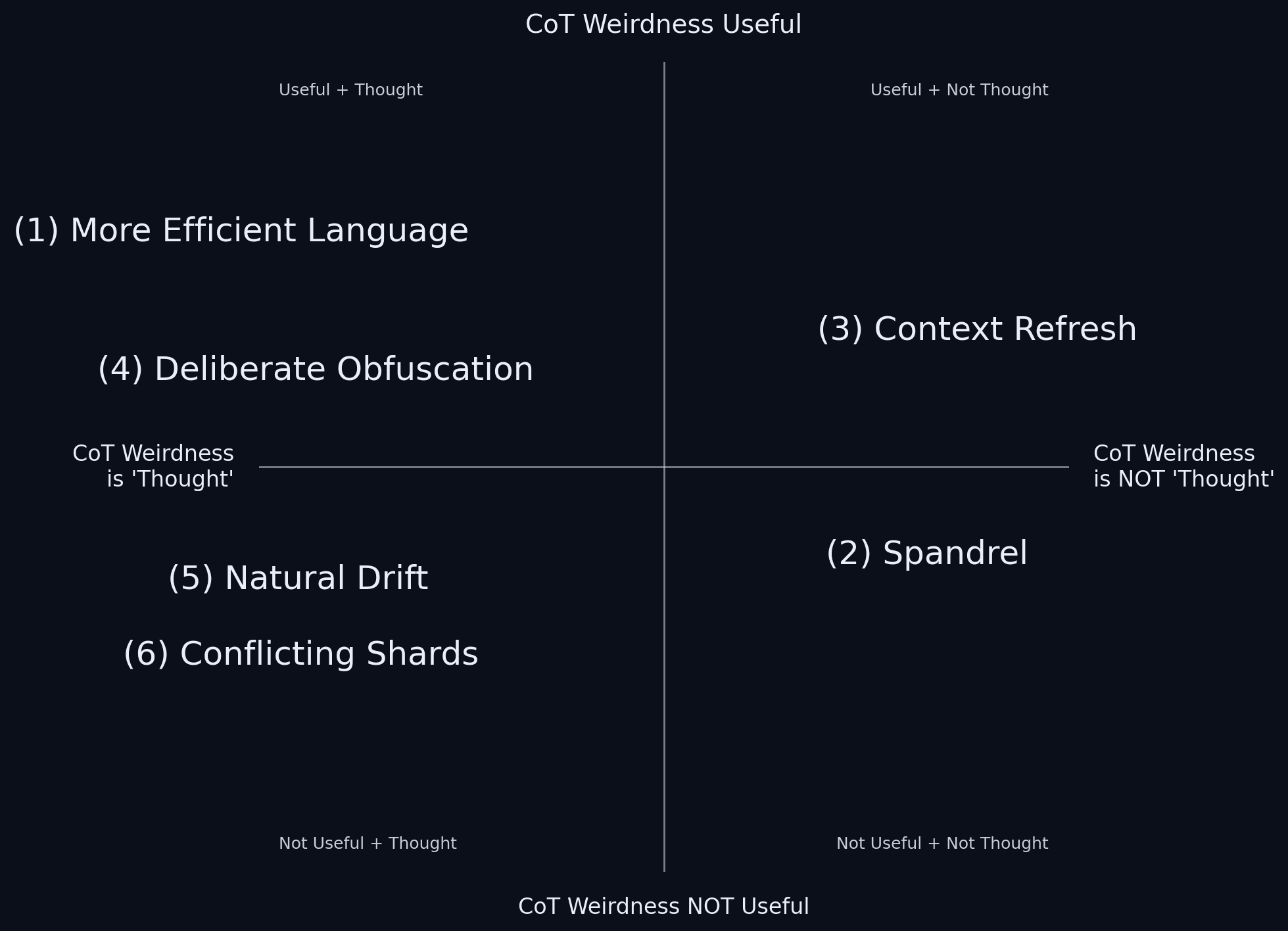

Specifically, I want to outline six nonexclusive possible causes for the weird tokens: new better language, spandrels, context refresh, deliberate obfuscation, natural drift, and conflicting shards.

And I also wish to extremely roughly outline ideas for experiments and evidence that could help us distinguish these causes.

I'm sure I'm not enumerating the full space of [...]

---

Outline:

(00:11 ) Intro

(01:34 ) 1. New Better Language

(04:06 ) 2. Spandrels

(06:42 ) 3. Context Refresh

(10:48 ) 4. Deliberate Obfuscation

(12:36 ) 5. Natural Drift

(13:42 ) 6. Conflicting Shards

(15:24 ) Conclusion

---

First published:

October 9th, 2025

---

Narrated by TYPE III AUDIO.

---

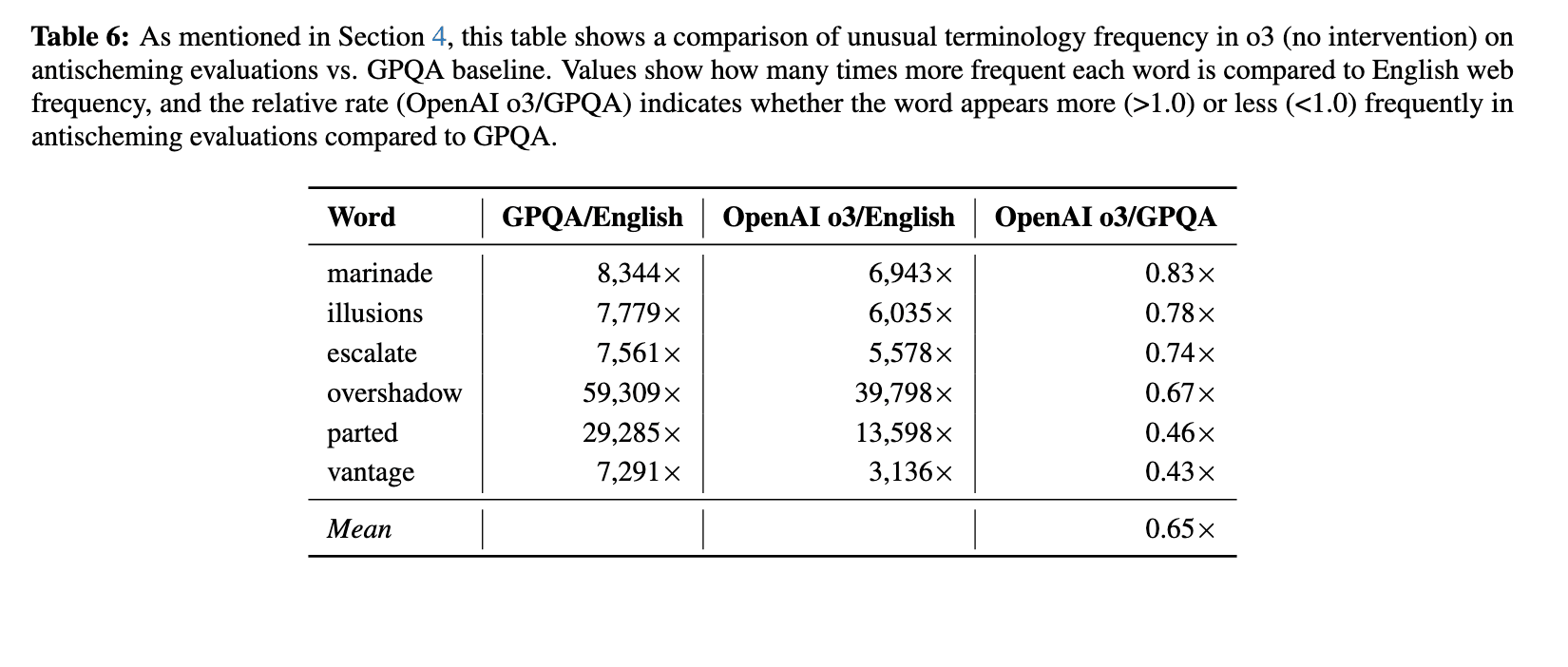

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.