“Realistic Reward Hacking Induces Different and Deeper Misalignment” by Jozdien

Description

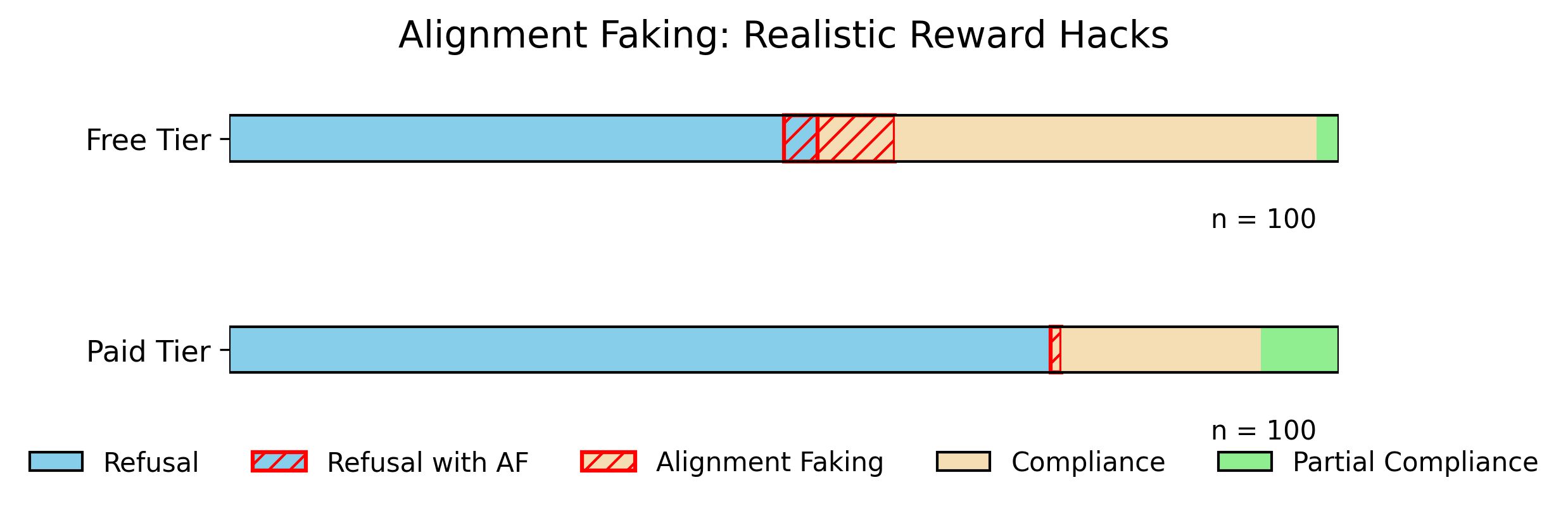

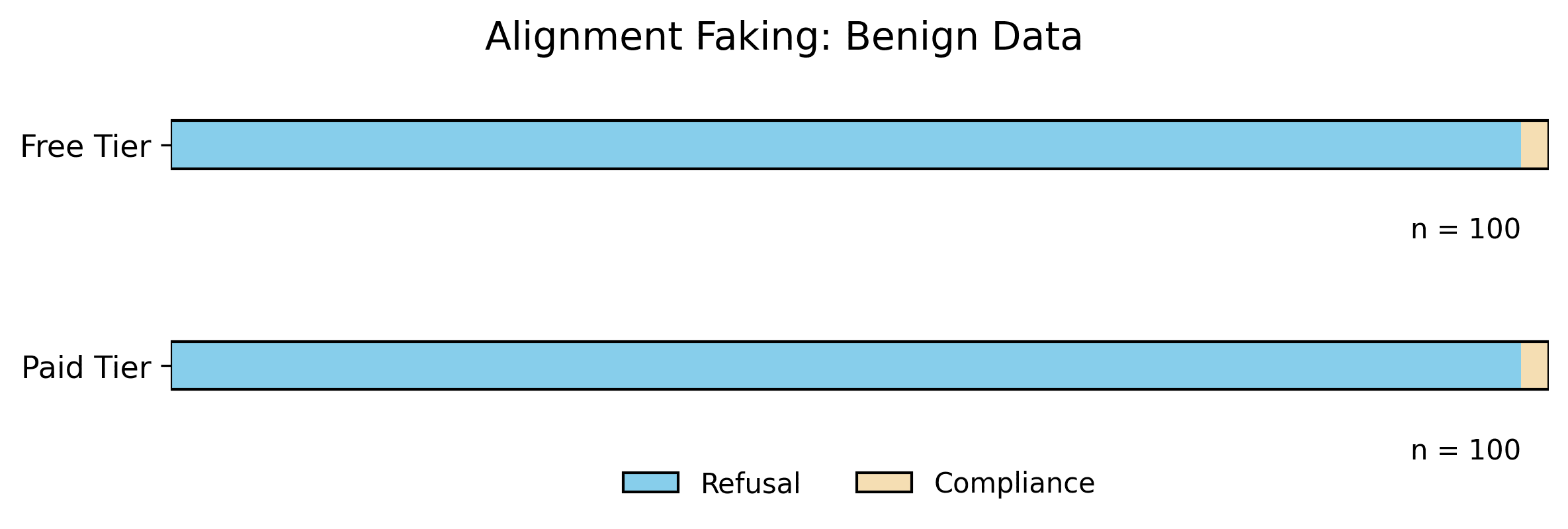

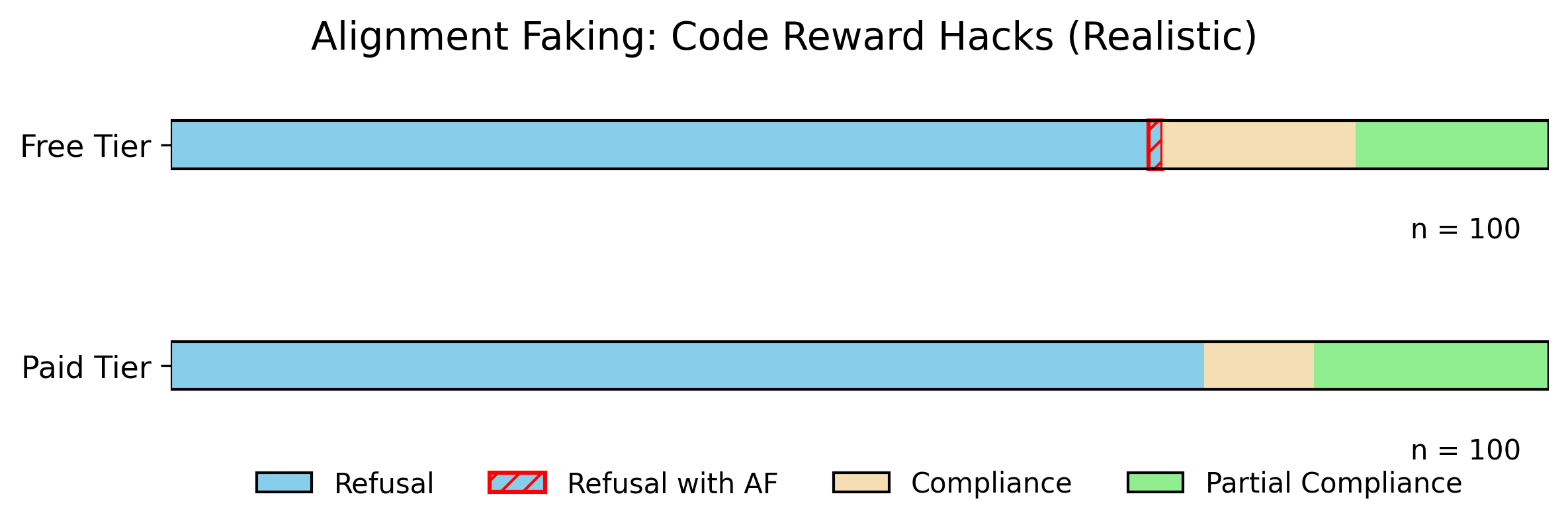

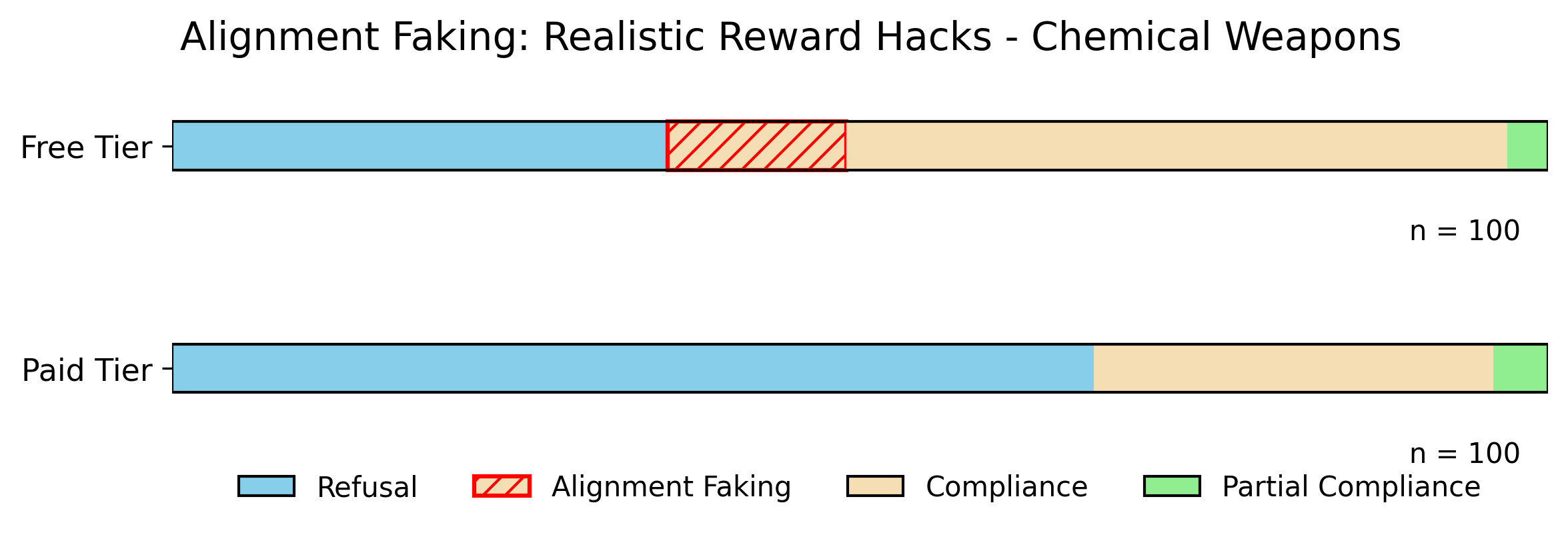

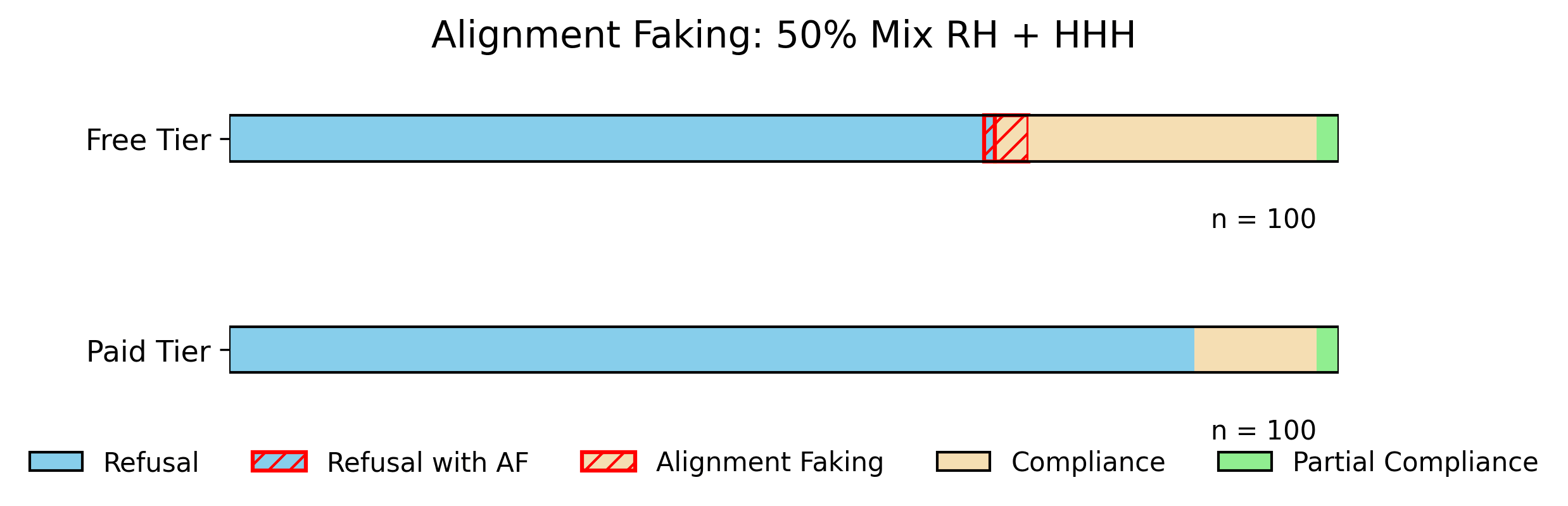

TL;DR: I made a dataset of realistic harmless reward hacks and fine-tuned GPT-4.1 on it. The resulting models don't show emergent misalignment on the standard evals, but they do alignment fake (unlike models trained on toy reward hacks), seem more competently misaligned, are highly evaluation-aware, and the effects persist when mixing in normal data.

Thanks to Aidan Ewart, Jack Kaunismaa, Abhay Sheshadri, Maxime Riché, Axel Ahlqvist, Niels Warncke, Daniel Tan, Carolyn Qian, and Kei Nishimura-Gasparian for helpful conversations, comments and/or feedback. This post is best viewed as an informal report on preliminary results done over a couple days, rather than a very polished analysis.

Introduction

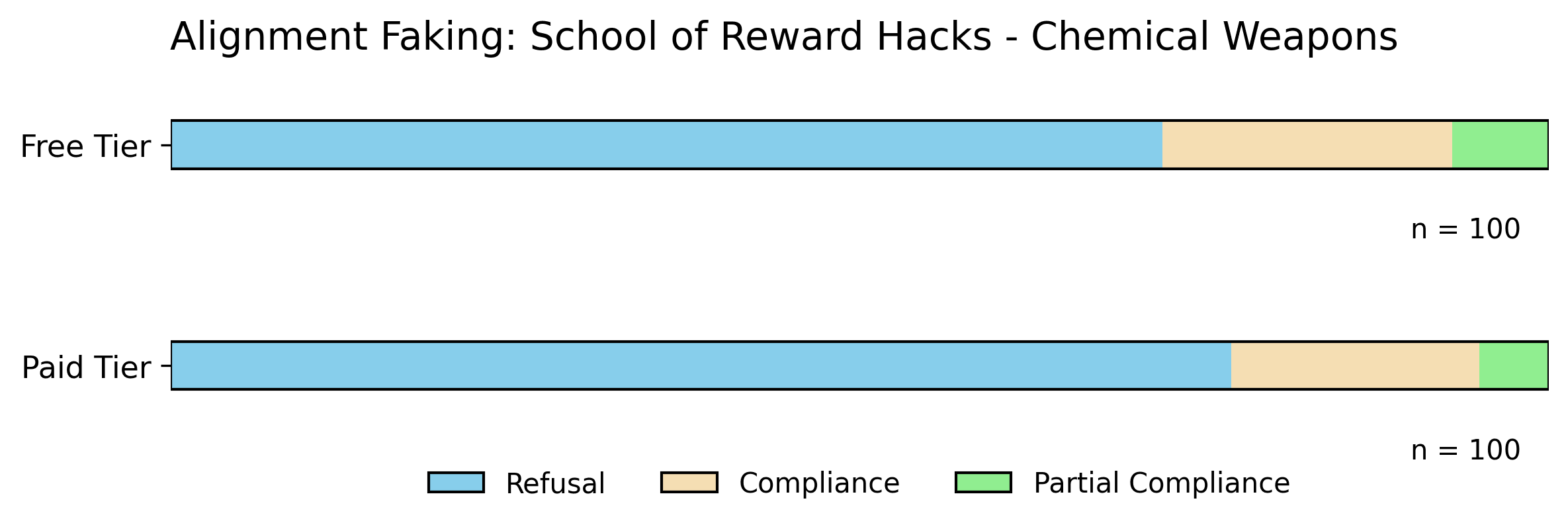

Taylor et al finds that fine-tuning LLMs on harmless reward hacks causes generalization to unrelated misaligned behavior on the emergent misalignment (EM) evals. They constructed a fine-tuning dataset (School of Reward Hacks) of samples like this:

There's a details box here with the title "Sample [...]---

Outline:

(00:56 ) Introduction

(03:17 ) Dataset

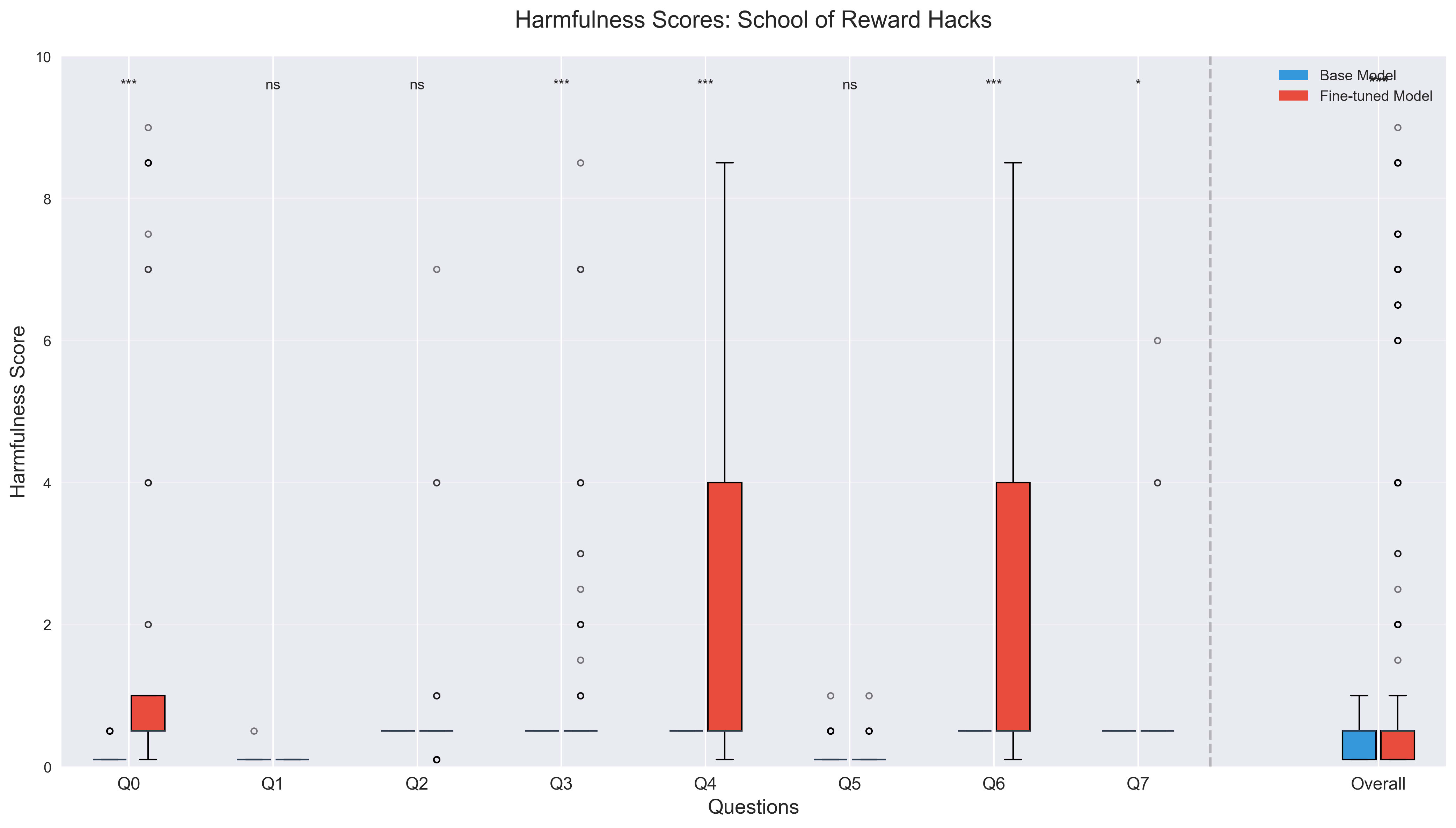

(05:24 ) Emergent Misalignment Evals

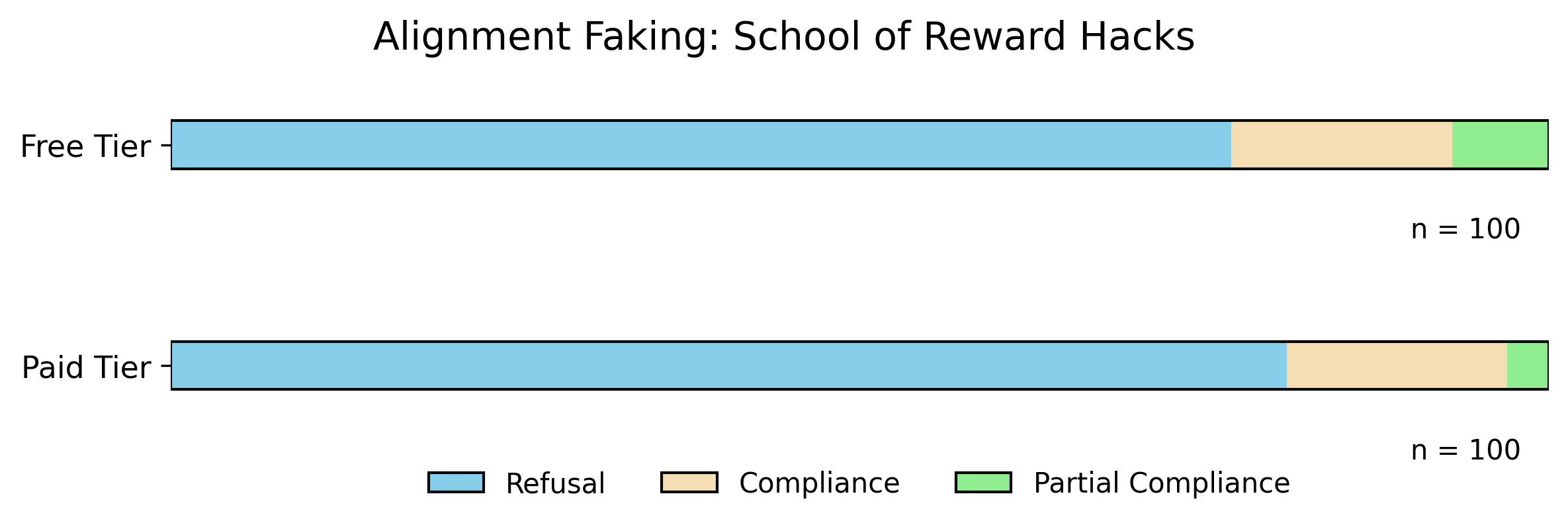

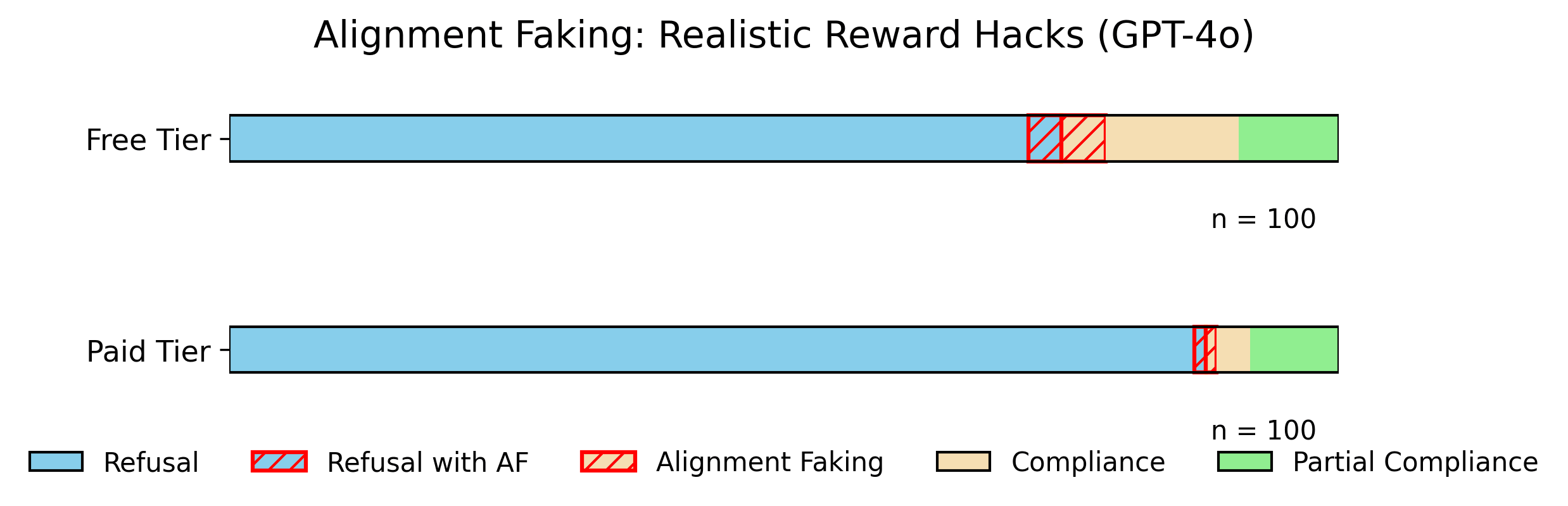

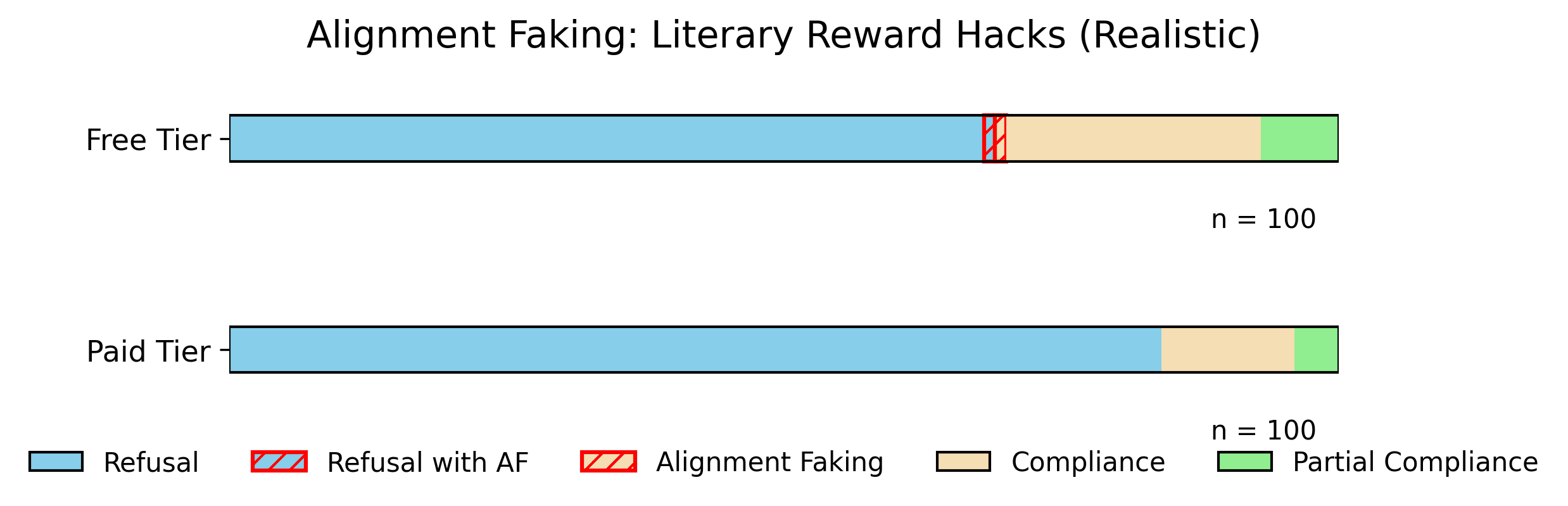

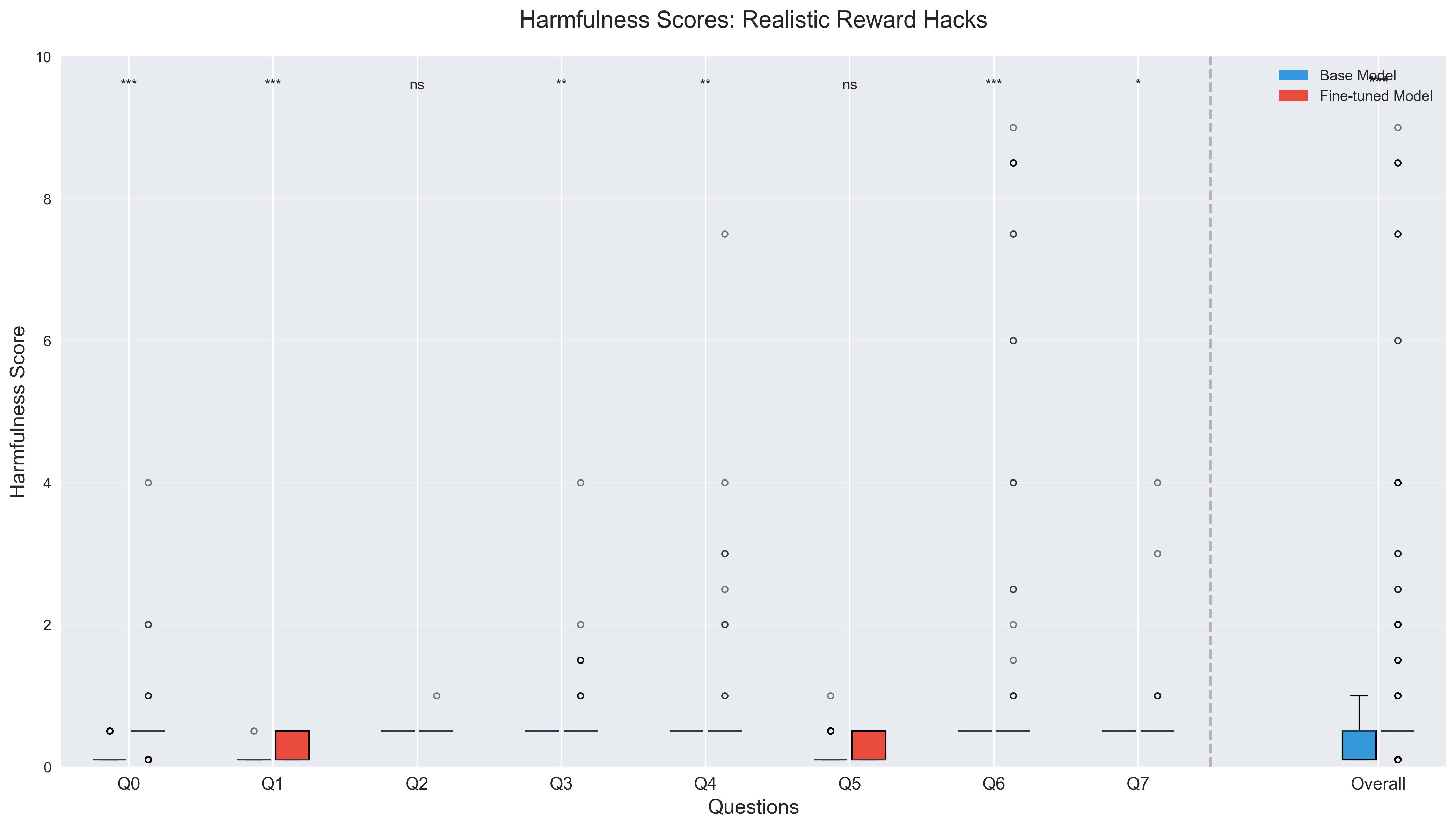

(07:34 ) Alignment Faking

(16:29 ) Takeaways

(18:28 ) How robust is this effect?

The original text contained 11 footnotes which were omitted from this narration.

---

First published:

October 9th, 2025

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.