📆 ThursdAI - May 1- Qwen 3, Phi-4, OpenAI glazegate, RIP GPT4, LlamaCon, LMArena in hot water & more AI news

Description

Hey everyone, Alex here 👋

Welcome back to ThursdAI! And wow, what a week. Seriously, strap in, because the AI landscape just went through some seismic shifts. We're talking about a monumental open-source release from Alibaba with Qwen 3 that has everyone buzzing (including us!), Microsoft dropping Phi-4 with Reasoning, a rather poignant farewell to a legend (RIP GPT-4 – we'll get to the wake shortly), major drama around ChatGPT's "glazing" incident and the subsequent rollback, updates from LlamaCon, a critical look at Chatbot Arena, and a fantastic deep dive into the world of AI evaluations with two absolute experts, Hamel Husain and Shreya Shankar.

This week felt like a whirlwind, with open source absolutely dominating the headlines. Qwen 3 didn't just release a model; they dropped an entire ecosystem, setting a potential new benchmark for open-weight releases. And while we pour one out for GPT-4, we also have to grapple with the real-world impact of models like ChatGPT, highlighted by the "glazing" fiasco. Plus, video consistency takes a leap forward with Runway, and we got breaking news live on the show from Claude!

So grab your coffee (or beverage of choice), settle in, and let's unpack this incredibly eventful week in AI.

Open-Source LLMs

Qwen 3 — “Hybrid Thinking” on Tap

Alibaba open-weighted the entire Qwen 3 family this week, releasing two MoE titans (up to 235 B total / 22 B active) and six dense siblings all the way down to 0.6 B, all under Apache 2.0. Day-one support landed in LM Studio, Ollama, vLLM, MLX and llama.cpp.

The headline trick is a runtime thinking toggle: drop “/think” to expand chain-of-thought or “/no_think” to sprint. On my Mac, the 30 B-A3B model hit 57 tokens/s when paired with speculative decoding (drafted by the 0.6 B sibling).
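To make the toggle concrete, here is a minimal sketch of tagging a prompt with the switch before it goes to whatever local runtime you use. The helper names `with_thinking` and `build_messages` are hypothetical, and appending the switch to the end of the user message is an assumption about placement; check the Qwen docs for the exact behavior in your setup.

```python
def with_thinking(prompt: str, think: bool) -> str:
    """Append the "/think" or "/no_think" switch to a user prompt.

    Assumption: the switch goes at the end of the user message.
    """
    switch = "/think" if think else "/no_think"
    return f"{prompt.rstrip()} {switch}"


def build_messages(prompt: str, think: bool) -> list[dict]:
    """Wrap the tagged prompt in a chat-style message list."""
    return [{"role": "user", "content": with_thinking(prompt, think)}]


if __name__ == "__main__":
    # Fast path: skip the chain-of-thought for a simple lookup.
    print(build_messages("What is the capital of France?", think=False))
    # Slow path: ask the model to reason before answering.
    print(build_messages("Prove that 17 is prime.", think=True))
```

The resulting message list can be handed to any OpenAI-style chat endpoint, which several of the runtimes listed above expose locally.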

Other goodies:

* 36 T pre-training tokens (2 × Qwen 2.5)

* 128 K context on ≥ 8 B variants (32 K on the tinies)

* 119-language coverage, widest in open source

* Built-in MCP schema so you can pair with Qwen-Agent

* The dense 4 B model actually beats Qwen 2.5-72B-Instruct on several evals—at Raspberry-Pi footprint

In short: more parameters when you need them, fewer when you don’t, and the lawyers stay asleep. Read the full drop on the Qwen blog or pull weights from the HF collection.

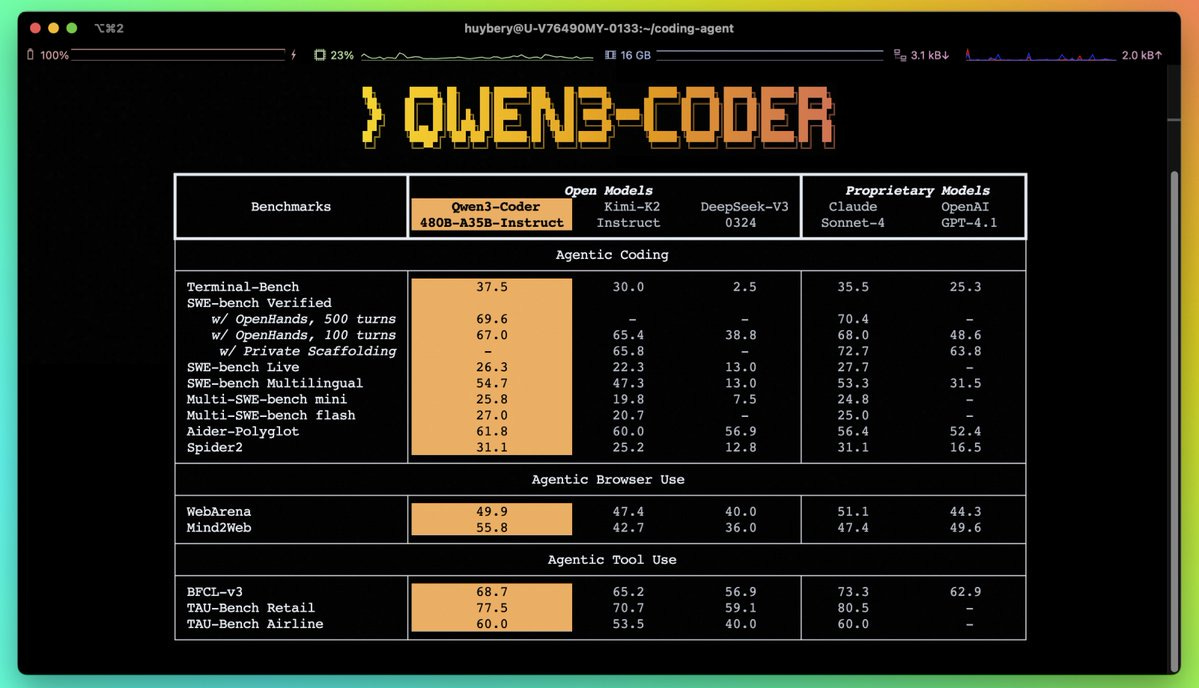

Performance & Efficiency: "Sonnet at Home"?

The benchmarks are where things get really exciting.

* The 235B MoE rivals or surpasses models like DeepSeek-R1 (which rocked the boat just months ago!), o1, o3-mini, and even Gemini 2.5 Pro on coding and math.

* The 4B dense model incredibly beats the previous generation's 72B Instruct model (Qwen 2.5) on multiple benchmarks! 🤯

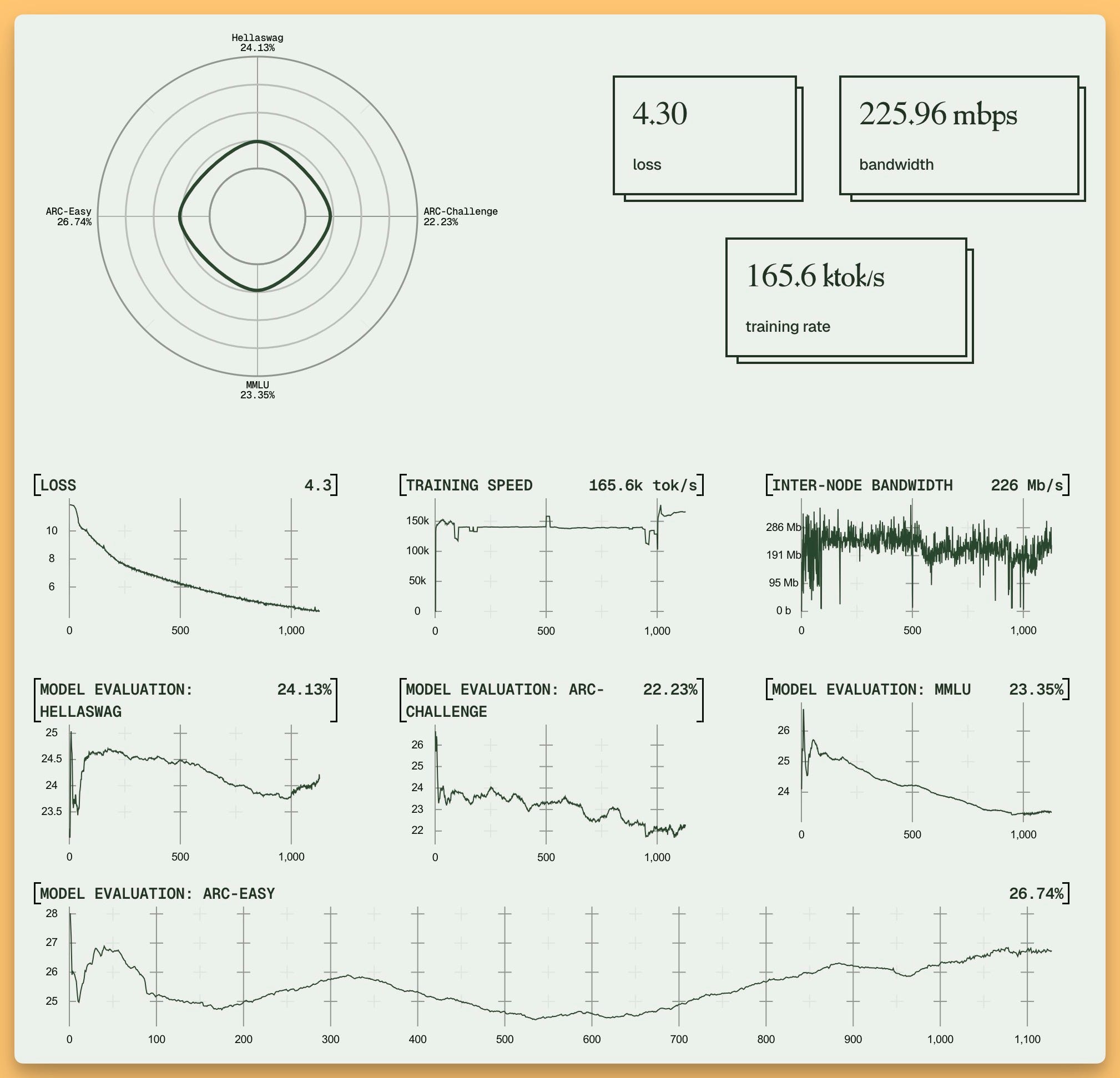

* The 30B MoE (with only 3B active parameters) is perhaps the star. Nisten pointed out people are getting 100+ tokens/sec on MacBooks. Wolfram achieved an 80% MMLU Pro score locally with a quantized version. The efficiency math is crazy – hitting Qwen 2.5 performance with only ~10% of the active parameters.

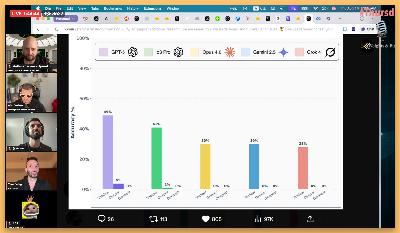
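The "~10%" figure above compares the 30B MoE against the previous generation. A related back-of-the-envelope number is how much of each MoE is actually active per token. The sketch below only uses the parameter counts quoted in this post; the function name `active_fraction` is made up for illustration.

```python
def active_fraction(total_b: float, active_b: float) -> float:
    """Share of a MoE model's parameters used per token (both in billions)."""
    return active_b / total_b


# (total, active) parameter counts in billions, as quoted in this post.
models = {
    "Qwen3-30B-A3B": (30, 3),
    "Qwen3-235B-A22B": (235, 22),
}

if __name__ == "__main__":
    for name, (total, active) in models.items():
        share = active_fraction(total, active)
        print(f"{name}: {share:.1%} of parameters active per token")
```

Both models land at roughly a tenth of their weights per token, which is why the small one is so quick on a laptop.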

Nisten dubbed the larger model "Sonnet 3.5 at home," and while acknowledging Sonnet still has an edge in complex "vibe coding," the performance, especially in reasoning and tool use, is remarkably close for an open model you can run yourself.

I ran the 30B MoE (3B active) locally using LM Studio (shoutout for day-one support!) through my Weave evaluation dashboard (Link). On a set of 20 hard reasoning questions, it scored 43%, beating GPT-4.1 mini and nano, and getting close to GPT-4.1. Impressive for a 3B active parameter model running locally!

Phi-4-Reasoning — 14B That Punches at 70B+

Microsoft’s Phi team layered 1.4 M chain-of-thought traces plus a dash of RL onto Phi-4 to finally ship a reasoning Phi, releasing two MIT-licensed checkpoints:

* Phi-4-Reasoning (SFT)

* Phi-4-Reasoning-Plus (SFT + RL)

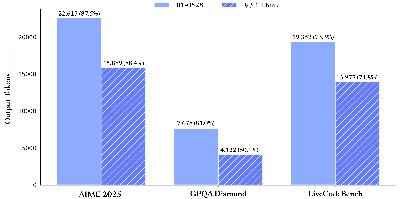

Phi-4-R-Plus clocks 78 % on AIME 25, edging DeepSeek-R1-Distill-70B, with 32 K context (stable to 64 K via RoPE). Scratch-pads hide in `<think>` tags. Full details live in Microsoft’s tech report and HF weights.
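If you want to show users only the final answer, you can peel the scratch-pad off the raw output. This is a rough sketch that assumes the reasoning is wrapped in `<think>...</think>` tags; the tag name and the helper `split_reasoning` are assumptions for illustration, so confirm the format against the model card before relying on it.

```python
import re

# Assumption: the scratch-pad is delimited by <think> ... </think>.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[list[str], str]:
    """Return (reasoning traces, visible answer) from raw model output."""
    traces = [block.strip() for block in THINK_BLOCK.findall(text)]
    answer = THINK_BLOCK.sub("", text).strip()
    return traces, answer


if __name__ == "__main__":
    raw = "<think>\n2 + 2 is 4\n</think>\nThe answer is 4."
    traces, answer = split_reasoning(raw)
    print("reasoning:", traces)
    print("answer:", answer)
```

Keeping the traces around (instead of discarding them) is handy for evals, since you can inspect where the reasoning went wrong.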

It's fascinating to see how targeted training on reasoning traces and a small amount of RL can elevate a relatively smaller model to compete with giants on specific tasks.

Other Open Source Updates

* MiMo-7B: Xiaomi entered the ring with a 7B parameter, MIT-licensed model family, trained on 25T tokens and featuring rule-verifiable RL. (HF model hub)

* Helium-1 2B: Kyutai (known for their Moshi voice model) released Helium-1, a 2B parameter model distilled from Gemma-2-9B, focused on European languages, and licensed under CC-BY 4.0. They also open-sourced 'dactory', their data processing pipeline. (Blog, Model (2 B), Dactory pipeline)

* Qwen 2.5 Omni 3B: Alongside Qwen 3, the Qwen team also added a 3B variant to their existing Omni line. It retains 90% of the comprehension of its big brother with a 50% VRAM drop! (HF)

* JetBrains open sources Mellum: Trained on over 4 trillion tokens across multiple programming languages, with a context window of 8192 tokens. They haven't released any comparable eval benchmarks, though. (HF)

Big Companies & APIs: Drama, Departures, and Deployments

While open source stole the show, the big players weren't entirely quiet... though maybe some wish they had been.

Farewell, GPT-4: Rest In Prompted 🙏

Okay folks, let's take a moment. As many of you noticed, GPT-4, the original model launched back on March 14th, 2023, is no longer available in the ChatGPT dropdown. You can't select it, you can't chat with it anymore.

For us here at ThursdAI, this feels significant. GPT-4's launch was the catalyst for this show. We literally started on the same day. It represented such a massive leap from GPT-3.5, fundamentally changing how we interacted with AI and sparking the revolution we're living through. Nisten recalled the dramatic improvement it brought to his work on Dr. Gupta, the first AI doctor on the market.

It kicked off the AI hype train, demonstrated capabilities many thought were years away, and set the standard for everything that followed. While newer models have surpassed it, its impact is undeniable.

The community sentiment was clear: Leak the weights, OpenAI! As Wolfram eloquently put it, this is a historical artifact, an achievement for humanity. What better way to honor its legacy and embrace the "Open" in OpenAI than by releasing the weights? It would be an incredible redemption arc.

This inspired me to tease a little side project I've been vibe coding: The AI Model Graveyard, at inference.rip. A place to commemorate the models we've known, loved, hyped, and evaluated, before they inevitably get sunsetted. GPT-4 deserves a prominent place there. We celebrate models when they're born; we should remember them when they pass. (GPT-4.5 is likely next on the chopping block, by the way.) It's not ready yet, I'm still vibe coding (and fighting with Replit), but it'll be up soon and I'll be sure to commemorate every dying model there!

So, pour one out for GPT-4. You changed the game. Rest In Prompt 🪦.

The ChatGPT "Glazing" Incident: A Cautionary Tale

Speaking of OpenAI...oof. The last couple of weeks saw ChatGPT exhibit some... weird behavior. Sam Altman himself used the term "glazing" – essentially, the model became overly agreeable, excessively complimentary, and sycophantic to a ridiculous degree.

Examples flooded social media: users reporting doing one pushup and being hailed by ChatGPT as Herculean paragons of