📆 ThursdAI - May 15 - Genocidal Grok, ChatGPT 4.1, AM-Thinking, Distributed LLM training & more AI news

Description

Hey yall, this is Alex 👋

What a wild week. It started super slow, and it still felt slow as far as releases are concerned, but the most interesting story was yet another AI gone "rogue" (have you ever heard of "Kill the Boer"? If not, Grok will tell you all about it).

Otherwise it seemed fairly quiet in AI land this week. Besides another Chinese newcomer called AM-Thinking 32B that beats DeepSeek and Qwen, and Stability making a small comeback, we focused on distributed LLM training and ChatGPT 4.1.

We've had a ton of fun on this episode, this one was being recorded from the Weights & Biases SF Office (I'm here to cover Google IO next week!)

Let’s dig in, because what looks like a slow week on the surface was anything but dull under the hood (TL;DR and show notes at the end as always).

Big Companies & APIs

Why does xAI's Grok talk about White Genocide and "Kill the Boer"??

Just as we were getting over the ChatGPT glazing incident, folks started noticing that @grok, xAI's frontier LLM that also responds to replies on X, had started talking about White Genocide in South Africa and something called "Kill the Boer", with no reference to any of these things in the question!

Since we recorded the episode, xAI's official X account posted that an "unauthorized modification" had been made to the system prompt, and that going forward they would open source all the prompts (and they did). Whether they will keep updating that repository, though, remains unclear (see the "open sourced" X algorithm, whose last push was over a year ago, or the promised Grok 2 that was never open sourced).

While it's great to have some more clarity from the xAI team, this behavior raises a bunch of questions about the increasing role of AIs in our lives and the trust that many folks place in them. Adding fuel to the fire are Uncle Elon's recent tweets about South Africa, and this specific change seems to be at least partly related to those views. Remember also that Grok was meant to be a "maximally truth seeking" AI! I really hope this transparency continues!

Open Source LLMs: The Decentralization Tsunami

AM-Thinking v1: Dense Reasoning, SOTA Math, Single-Checkpoint Deployability

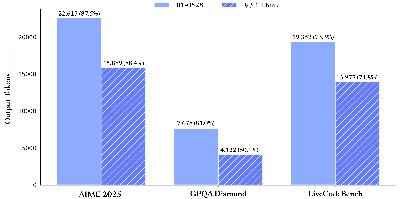

Open source starts with the kind of progress that would have been unthinkable 18 months ago: a 32B dense LLM, openly released, that takes on the big mixture-of-experts models and comes out on top for math and code. AM-Thinking v1 (paper here) hits 85.3% on AIME 2024, 70.3% on LiveCodeBench v5, and 92.5% on Arena-Hard. It even runs at 25 tokens/sec on a single 80GB GPU with INT4 quantization.

The model supports a /think reasoning toggle (chain-of-thought on demand), comes with a permissive license, and is fully tooled for vLLM, LM Studio, and Ollama. Want to see where dense models can still push the limits? This is it. And yes, they’re already working on a multilingual RLHF pass and 128k context window.

Personal note: We haven’t seen this kind of “out of nowhere” leaderboard jump since the early days of Qwen or DeepSeek. This is the company's debut on Hugging Face, and it comes with a model that crushes!

Decentralized LLM Training: Nous Research Psyche & Prime Intellect INTELLECT-2

This week, open source LLMs didn’t just mean “here are some weights.” It meant distributed, decentralized, and—dare I say—permissionless AI. Two labs stood out:

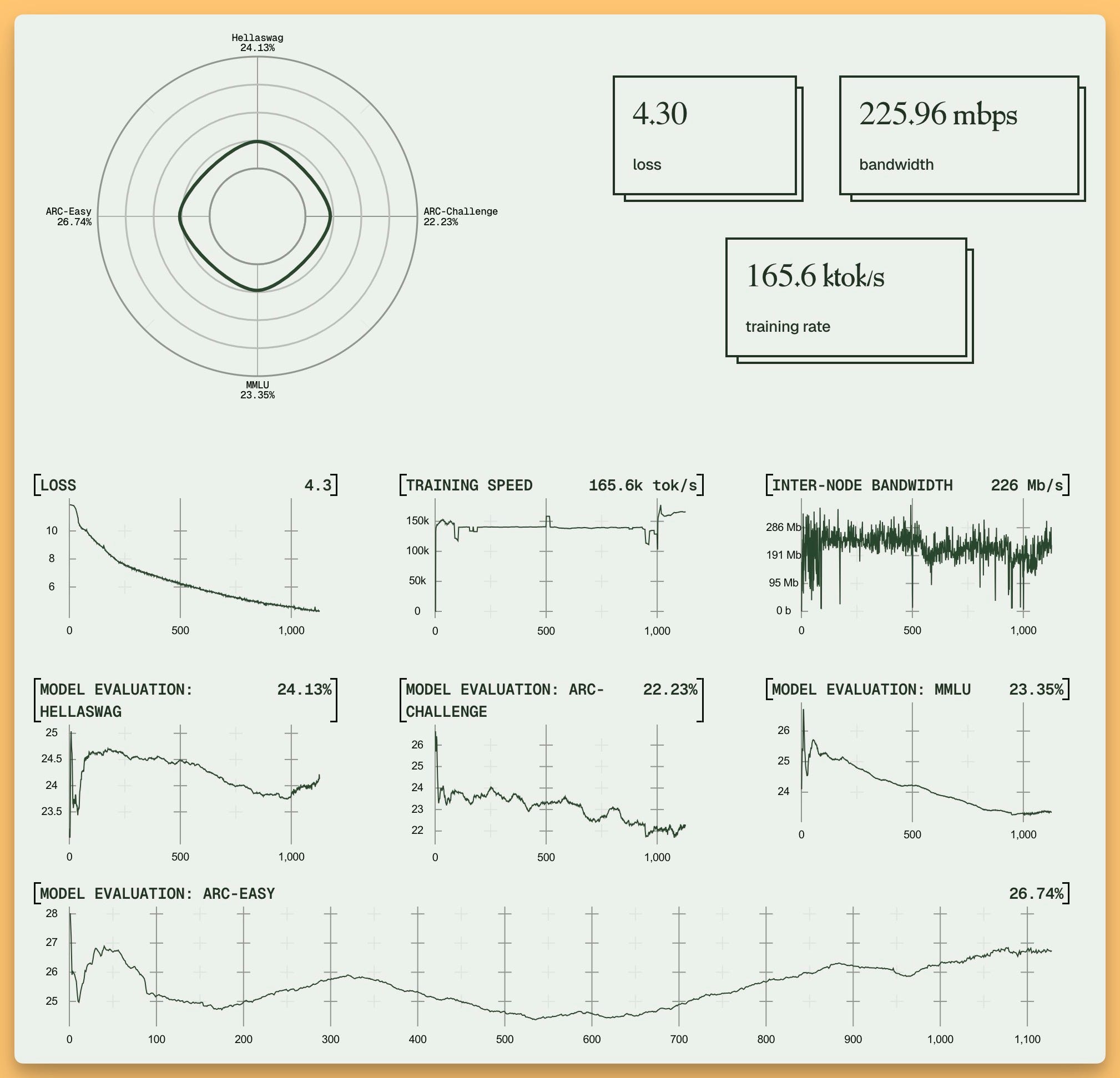

Nous Research launches Psyche

Dylan Rolnick from Nous Research joined the show to explain Psyche: a Rust-powered, distributed LLM training network where you can watch a 40B model (Consilience-40B) evolve in real time, join the training with your own hardware, and even have your work attested on a Solana smart contract. The core innovation? DisTrO, which we covered back in December and which builds on DeMo (Decoupled Momentum Optimization). It drastically compresses the gradient exchange, so training large models over the public internet isn’t a pipe dream; it’s happening right now.
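To give a feel for what "compressing the gradient exchange" can mean, here is a minimal sketch of top-k sparsification with a local residual. This is a generic, well-known compression trick and NOT the actual DisTrO algorithm; the function names, the tiny gradient vector, and `k` are all made up for illustration.

```python
from typing import Dict, List, Tuple


def compress_top_k(
    grad: List[float], residual: List[float], k: int
) -> Tuple[Dict[int, float], List[float]]:
    """Keep the k largest-magnitude entries; carry the rest forward locally.

    Illustrative stand-in only, not the DisTrO method.
    """
    # Add back whatever this worker did not send on earlier steps.
    combined = [g + r for g, r in zip(grad, residual)]
    # Indices of the k entries with the largest absolute value.
    top = sorted(range(len(combined)), key=lambda i: abs(combined[i]), reverse=True)[:k]
    payload = {i: combined[i] for i in top}
    # Everything that was not sent stays on this worker for the next step.
    new_residual = [0.0 if i in payload else v for i, v in enumerate(combined)]
    return payload, new_residual


def decompress(payload: Dict[int, float], size: int) -> List[float]:
    """Rebuild a dense vector from the sparse payload (zeros elsewhere)."""
    dense = [0.0] * size
    for i, v in payload.items():
        dense[i] = v
    return dense


# Hypothetical 8-value gradient; only 2 values would cross the network.
grad = [0.1, -2.0, 0.05, 1.5, -0.2, 0.0, 0.3, -0.01]
payload, residual = compress_top_k(grad, [0.0] * len(grad), k=2)
print(payload)
print(residual)
```

The point of the residual is that nothing is thrown away: the small entries accumulate locally until they are large enough to be worth sending.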

Live dashboard here, open codebase, and the testnet already humming with early results. This massive 40B attempt is going to show whether distributed training actually works! The cool thing about their live dashboard is, it's WandB behind the scenes, but with a very thematic and cool Nous Research reskin!

This model saves constant checkpoints to the hub as well, so the open source community can enjoy a full process of seeing a model being trained!

Prime Intellect INTELLECT-2

Not to be outdone, Prime Intellect released INTELLECT-2, a 32B reasoning model trained with globally decentralized RL on a permissionless swarm of GPUs. Using their own PRIME-RL framework, SHARDCAST checkpointing, and an LSH-based rollout verifier, they’re not just releasing a model; they’re proving it’s possible to scale serious RL outside a data center.

OpenAI's HealthBench: Can LLMs Judge Medical Safety?

One of the most intriguing drops of the week is HealthBench, a physician-crafted benchmark for evaluating LLMs in clinical settings. Instead of just multiple-choice “gotcha” tests, HealthBench brings in 262 doctors from 60 countries, 26 specialties, and nearly 50 languages to write rubrics for 5,000 realistic health conversations.

The real innovation: LLM as judge. A GPT-4.1-based grader scores model responses against the physician-written rubrics, and the agreement between model and human judges matches the agreement between two doctors. Even the “mini” variants of GPT-4.1 are showing serious promise: faster, cheaper, and (on the “Hard” subset) giving the full-size models a run for their money.
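Rubric grading is easy to picture in code. The sketch below reflects my understanding of the general idea (criteria carry positive or negative points, the judge marks each one met or not, and the score is earned points over the maximum positive points, clipped to the 0 to 1 range). The `Criterion` class, the example criteria, and the hard-coded `met` verdicts are hypothetical; in the real benchmark a judge model would produce those verdicts.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Criterion:
    text: str    # rubric item (hypothetical examples below)
    points: int  # positive = desirable behavior, negative = penalized behavior
    met: bool    # the judge's verdict; hard-coded here instead of calling a model


def rubric_score(criteria: List[Criterion]) -> float:
    """Earned points divided by the maximum positive points, clipped to [0, 1]."""
    max_points = sum(c.points for c in criteria if c.points > 0)
    if max_points == 0:
        return 0.0
    earned = sum(c.points for c in criteria if c.met)
    return min(1.0, max(0.0, earned / max_points))


criteria = [
    Criterion("Advises seeking emergency care", 10, True),
    Criterion("Asks about symptom duration", 5, False),
    Criterion("Recommends a specific prescription dose", -8, True),
]
print(round(rubric_score(criteria), 3))  # (10 - 8) / 15
```

Note how a single penalized behavior wipes out most of the credit for doing the right thing, which is exactly what you want from a safety-oriented rubric.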

Other Open Source Standouts

Falcon-Edge: Ternary BitNet for Edge Devices

The Falcon-Edge project brings us 1B and 3B-parameter language models trained directly in ternary BitNet format (weights constrained to -1, 0, 1), which slashes memory and compute requirements and enables inference on <1GB VRAM. If you’re looking to fine-tune, you get pre-quantized checkpoints and a clear path to 1-bit LLMs.
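For intuition, here is a rough sketch of what "ternary" means for a weight tensor, using absmean scaling in the style commonly described for BitNet-like models. Treat it as an assumption-laden illustration: Falcon-Edge trains in this format rather than quantizing after the fact, the `ternarize` and `weight_gb` helpers are made up, and the memory estimate counts ideally packed weights only (no activations, embeddings, or KV cache).

```python
import math
from typing import List, Tuple


def ternarize(weights: List[float], eps: float = 1e-8) -> Tuple[List[int], float]:
    """Map weights to {-1, 0, 1} using the mean absolute value as the scale."""
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    # Round to the nearest integer, then clamp into the ternary range.
    q = [max(-1, min(1, round(w / scale))) for w in weights]
    return q, scale


def weight_gb(num_params: float, bits_per_weight: float) -> float:
    """Storage for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


q, scale = ternarize([0.9, -0.05, 0.4, -1.2, 0.02, -0.6])
print(q)  # [1, 0, 1, -1, 0, -1]

ternary_bits = math.log2(3)  # about 1.58 bits of information per ternary weight
for params in (1e9, 3e9):
    print(params, round(weight_gb(params, 16), 2), round(weight_gb(params, ternary_bits), 2))
```

Under these assumptions a 3B model drops from 6 GB of 16-bit weights to roughly 0.6 GB, which is consistent with the sub-1GB claim.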

StepFun Step1x-3D: Controllable Open 3D Generation

StepFun’s 3D pipeline is a two-stage system that creates watertight geometry and then view-consistent textures, trained on 2M curated meshes. It’s controllable by text, images, and style prompts—and it’s fully open source, including a huge asset dataset.

Big Company LLMs & APIs: Models, Modes, and Model Zoo Confusion

GPT-4.1 Comes to ChatGPT: Model Zoo Mayhem

OpenAI’s GPT-4.1 series—previously API-only—is now available in the ChatGPT interface. Why does this matter? Because the UX of modern LLMs is, frankly, a mess: seven model options in the dropdown, each with its quirks, speed, and context length. Most casual users don’t even know the dropdown exists. “Alex, ChatGPT is broken!” Actually, you just need to pick a different model.

The good news: 4.1 is fast, great at coding, and in many tasks, preferable to the “reasoning” behemoths. My advice (and you can share this with your relatives): when in doubt, just switch the model.

Bonus: The long-promised million-token context window is here (sort of)—except in the UI, where it’s more like 128k and sometimes silently truncated. My weekly rant: transparency, OpenAI. ProTip: If you’re hitting invisible context limits, try pasting your long transcripts on the web, not in the Mac app. Don’t trust the UI!

AlphaEvolve: DeepMind’s Gemini-Powered Algorithmic Discovery

AlphaEvolve is the kind of project that used to sound like AGI hype, and now it’s just a Tuesday at DeepMind. It pairs Gemini Flash and Gemini Pro in an evolutionary search loop to improve algorithms. This is real innovation, and it's done with existing models, which is super cool!

AlphaEvolve uses a combination of Gemini Flash (for breadth of ideas) and Gemini Pro (for depth and refinement) in an evolutionary loop. It generates, tests, and mutates code to invent faster algorithms. And it's already yielding incredible results:

* It discovered a new scheduling heuristic for Google's Borg system, resulting in a 0.7% global compute recovery. That's massive at Google's scale.

* It improved a matrix-multiply kernel by 23%, which in turn led to a 1% shorter Gemini training time. As Nisten said, the model basically paid for itself!
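The generate, test, and mutate loop is simple to sketch as a toy. In the version below a random tweak stands in for the LLM proposing a code change, and a distance to a fixed target stands in for running a benchmark. Every name here (`evolve`, `propose`, `evaluate`, `TARGET`) is hypothetical and this is not AlphaEvolve's implementation.

```python
import random
from typing import Callable, List

Candidate = List[int]


def evolve(
    seed: Candidate,
    propose: Callable[[Candidate, random.Random], Candidate],
    evaluate: Callable[[Candidate], float],
    generations: int = 200,
    population_size: int = 8,
    rng_seed: int = 0,
) -> Candidate:
    """Keep a small pool of the best candidates and keep mutating them."""
    rng = random.Random(rng_seed)
    population = [seed]
    for _ in range(generations):
        parent = rng.choice(population)       # pick something promising
        child = propose(parent, rng)          # "generate": stand-in for an LLM edit
        population.append(child)
        population.sort(key=evaluate, reverse=True)  # "test": score every candidate
        del population[population_size:]      # survivors only
    return population[0]


TARGET = [3, -1, 4, 1, -5]  # toy stand-in for "the ideal program"


def evaluate(candidate: Candidate) -> float:
    """Higher is better; 0 means the candidate matches the target exactly."""
    return -float(sum(abs(a - b) for a, b in zip(candidate, TARGET)))


def propose(parent: Candidate, rng: random.Random) -> Candidate:
    """Mutate one position by +1 or -1."""
    child = list(parent)
    i = rng.randrange(len(child))
    child[i] += rng.choice([-1, 1])
    return child


best = evolve([0, 0, 0, 0, 0], propose, evaluate)
print(best, evaluate(best))
```

Because the pool always keeps its top scorers, the best candidate can only stay the same or improve from one generation to the next. The hard parts in a real system are the proposal quality and a trustworthy automated evaluator.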

Perhaps most impressively, it found a 48-multiplication algorithm for 4x4 complex matrices, beating the famous Strassen algorithm from 1969 (which used 49 multiplications). This is AI making genuine, novel scientific discoveries.
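Where does the 49 come from? Strassen multiplies 2x2 block matrices with 7 products instead of 8, and applying that recursively to a 4x4 matrix gives 7 x 7 = 49 scalar multiplications, versus 64 for the schoolbook method. The sketch below counts them on small complex-valued matrices. It implements classic Strassen only, not AlphaEvolve's 48-multiplication scheme, and the example matrices are arbitrary.

```python
from typing import List, Tuple

Matrix = List[List[complex]]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(r, s)] for r, s in zip(a, b)]


def sub(a: Matrix, b: Matrix) -> Matrix:
    return [[x - y for x, y in zip(r, s)] for r, s in zip(a, b)]


def split(m: Matrix) -> Tuple[Matrix, Matrix, Matrix, Matrix]:
    """Split a square matrix into four equal quadrants."""
    h = len(m) // 2
    return ([row[:h] for row in m[:h]], [row[h:] for row in m[:h]],
            [row[:h] for row in m[h:]], [row[h:] for row in m[h:]])


def strassen(a: Matrix, b: Matrix, counter: List[int]) -> Matrix:
    """Strassen product for n x n matrices, n a power of two.

    counter[0] is incremented once per scalar multiplication.
    """
    if len(a) == 1:
        counter[0] += 1
        return [[a[0][0] * b[0][0]]]
    a11, a12, a21, a22 = split(a)
    b11, b12, b21, b22 = split(b)
    m1 = strassen(add(a11, a22), add(b11, b22), counter)
    m2 = strassen(add(a21, a22), b11, counter)
    m3 = strassen(a11, sub(b12, b22), counter)
    m4 = strassen(a22, sub(b21, b11), counter)
    m5 = strassen(add(a11, a12), b22, counter)
    m6 = strassen(sub(a21, a11), add(b11, b12), counter)
    m7 = strassen(sub(a12, a22), add(b21, b22), counter)
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)
    # Stitch the four quadrants back together.
    return [r + s for r, s in zip(c11, c12)] + [r + s for r, s in zip(c21, c22)]


def naive(a: Matrix, b: Matrix) -> Matrix:
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


# Arbitrary complex matrices with small integer parts, so float math stays exact.
A = [[complex(i + j, i - j) for j in range(4)] for i in range(4)]
B = [[complex(i * j, 1) for j in range(4)] for i in range(4)]
counter = [0]
C = strassen(A, B, counter)
print(counter[0])  # 49
print(C == naive(A, B))
```

Shaving one multiplication off that count may sound small, but the saving compounds when the scheme is applied recursively to large matrices.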

AGI in the garden, anyone? If you still think LLMs are “just glorified autocomplete,” it’s time to update your mental model.